Arista Networks

Arista’s WAN Routing System targets routing use cases such as SD-WANs

Arista Networks, noted for its high-performance Ethernet data center switches, has taken its first direct step into WAN routing with the Arista WAN Routing System: new software, hardware, and services that together form an enterprise-class system designed to link critical resources with core data-center and campus networks. The Arista WAN Routing System combines three new networking offerings: enterprise-class routing platforms, carrier/cloud-neutral internet transit capabilities, and the CloudVision® Pathfinder Service to simplify and improve customer wide area networks.

Based on Arista’s EOS® routing capabilities and CloudVision management, the Arista WAN Routing System delivers the architecture, features, and platforms to modernize federated and software-defined wide area networks (SD-WANs). The WAN Routing System is significant because it is Arista’s first official routing platform.

The introduction of the WAN Routing System enables Arista’s customers to deploy a consistent networking architecture across all enterprise network domains from the client to campus to the data center to multi-cloud with a single instance of EOS, a consistent management platform, and a modern operating model.

Brad Casemore, research vice president of IDC’s Datacenter and Multicloud Networks group, said:

“In the past, their L2/3 data-center switches were capable of and deployed for routing use cases, but they were principally data-center switches. Now Arista is expressly targeting an expansive range of routing use cases with an unambiguous routing platform.” By addressing SD-WAN use cases, the WAN Routing System puts Arista into competition in the SD-WAN space, Casemore noted.

“Arista positions the platform’s features and functionalities beyond the parameters of SD-WAN and coverage of traditional enterprise routing use cases, but it does SD-WAN, too, and many customers will be inclined to use it for that purpose. SD-WAN functionality was a gap in the Arista portfolio, and they address it with the release of this platform.”

Doug Gourlay, vice president and general manager of Arista’s Cloud Networking Software group, wrote in a blog post about the new package:

“Routed WAN networks, based on traditional federated routing protocols and usually manually configured via the CLI, are still the most predominant type of system in enterprise and carrier wide-area networks.”

“Traditional WAN and SD-WAN architectures are often monolithic solutions that do not extend visibility or operational consistency into the campus, data-center, and cloud environment,” Gourlay stated. “Many SD-WAN vendors developed highly proprietary technologies that locked clients into their systems and made troubleshooting difficult.”

“We took this feedback and client need to heart and developed an IP-based path-computation capability into CloudVision Pathfinder that enables automated provisioning, self-healing, dynamic pathing, and traffic engineering not only for critical sites back to aggregation systems but also between the core, aggregation, cloud, and transit hub environments.”

Modern WAN Management, Provisioning, and Optimization:

A new Arista WAN Routing System component is the CloudVision Pathfinder Service, which modernizes WAN management and provisioning, aligning the operating model with visualization and orchestration across all network transport domains. This enables a profound shift from legacy CLI configuration to a model where configuration and traffic engineering are automatically generated, tested, and deployed, resulting in a self-healing network. Arista customers can therefore visualize the entire network, from the client to the campus, the cloud, and the data center.
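The post does not describe how Pathfinder actually computes paths. As a generic illustration of the idea only (not Arista’s algorithm), the sketch below runs Dijkstra’s shortest-path algorithm over a hypothetical WAN graph and then recomputes the path after a simulated link failure, which is the basic mechanism behind "self-healing" rerouting. The site names, link costs, and the `shortest_path` and `fail_link` helpers are all hypothetical.

```python
import heapq

# Hypothetical WAN topology: site -> {neighbor: link cost}.
# Names and costs are illustrative only, not taken from Arista.
WAN = {
    "branch": {"hub-east": 10, "hub-west": 25},
    "hub-east": {"branch": 10, "dc": 10, "hub-west": 5},
    "hub-west": {"branch": 25, "dc": 10, "hub-east": 5},
    "dc": {"hub-east": 10, "hub-west": 10},
}


def shortest_path(graph, src, dst):
    """Return (cost, path) for the lowest-cost path using Dijkstra's algorithm,
    or (inf, []) if dst is unreachable."""
    queue = [(0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nbr, weight in graph.get(node, {}).items():
            if nbr not in visited:
                heapq.heappush(queue, (cost + weight, nbr, path + [nbr]))
    return float("inf"), []


def fail_link(graph, a, b):
    """Return a copy of the graph with the a<->b link removed (simulated outage)."""
    return {
        node: {n: w for n, w in nbrs.items() if {node, n} != {a, b}}
        for node, nbrs in graph.items()
    }


if __name__ == "__main__":
    print(shortest_path(WAN, "branch", "dc"))  # (20, ['branch', 'hub-east', 'dc'])
    # Recompute after the branch<->hub-east link fails.
    print(shortest_path(fail_link(WAN, "branch", "hub-east"), "branch", "dc"))
    # (35, ['branch', 'hub-west', 'dc'])
```

A production system would also weigh live measurements such as latency, loss, and jitter, and would push the new paths to the routers automatically; the sketch only shows the recompute-on-failure step.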

“As an Elite Partner and Arista Certified Services Provider (ACSP), we have been using Arista EOS and CloudVision for years and testing the Arista WAN Routing System in production environments for several quarters. The software quality and features within the system are ideal for enterprise network architectures embracing modern distributed application architectures across a blend of edge, campus, data center, cloud and SaaS environments,” stated Jason Gintert, chief technology officer at WAN Dynamics.

Arista WAN Platform Portfolio:

An enterprise WAN also requires modern platforms to interconnect campus, data center, and edge networks, as well as a variety of upstream carriers and Internet-based services. In addition to CloudEOS for cloud connectivity, the new Arista 5000 Series of WAN Platforms, powered by Arista EOS, offers high-performance control- and data-plane scaling fit-to-purpose for enterprise-class WAN edge and aggregation requirements. Supporting 1/10/100GbE interfaces and flexible network modules, and delivering from 5 Gbps to over 50 Gbps of bidirectional AES-256 encrypted traffic with high VRF and tunnel scale, the Arista 5000 Series sets the standard for aggregation and critical site interconnect with multiple use cases such as:

- Aggregation and High-Performance Edge Routing – The Arista 5500 WAN System, supporting up to 50Gbps of encrypted traffic, is ideal for data center, campus, high-performance edge, and physical transit hub architectures.

- Flexible Edge Routing – The Arista 5300 WAN System is suited for high-volume edge connectivity and transitioning WAN locations to multi-carrier, 5G, and high-speed Internet connectivity with performance rates of up to 5Gbps of encrypted traffic.

- Scalable Virtual Routing – Arista CloudEOS is a binary-consistent virtual machine that implements the same features and capabilities as the other Arista WAN systems. It is often deployed in carrier-neutral transit hub facilities or to provide scale-out encryption termination capabilities.

- Public Cloud Edge Routing – Arista CloudEOS is also deployable through public cloud marketplaces and enables Cloud Transit routing and Cloud Edge routing capabilities as part of the end-to-end WAN Routing System. CloudEOS is available on AWS, Azure, Google Cloud, and through Platform Equinix.

- CPE Micro Edge – In addition to the fully integrated, dynamically configured, and adaptive Arista WAN Routing System platforms, the Arista Micro Edge is capable of small-site interoperability with the WAN Routing System to provide simple downstream connectivity options.

Cloud and Carrier Neutral Transit Hubs:

The Arista WAN Routing System also embraces a new implementation of the traditional WAN core – the Transit Hub. These are physical or virtual routed WAN systems deployed in carrier-neutral and cloud-neutral facilities with dense telecommunications interconnections.

Arista has partnered with Equinix so that Transit Hubs can be deployed on Equinix Network Edge and Bare Metal Cloud platforms via Arista CloudEOS software. CloudEOS is available on AWS, Azure, Google Cloud, and Equinix. As a result, Arista’s WAN Routing System will be globally deployable in Equinix International Business Exchange™ (IBX®) data centers. This enables customers to access a distributed WAN core leveraging multi-carrier and multi-cloud transit options, all provisioned through the CloudVision Pathfinder Service.

“Arista Pathfinder leverages Equinix’s Network Edge, Equinix Metal, and Equinix Fabric services to deliver scalable routing architectures that accelerate customers with cloud and carrier-neutral networking,” stated Zachary Smith, Global Head of Edge Infrastructure Services at Equinix. “Pathfinder’s ability to scale, in software, from a single virtual deployment to a multi-terabit globally distributed core that reallocates paths as network conditions change is a radical evolution in network capability and self-repair.”

“The Arista Transit Hub Architecture and the partnership with Equinix positions Arista for growth in hybrid and multicloud routing,” Casemore added.

Pricing and Availability:

The Arista WAN Routing System is in active customer trials and deployments, with general availability in summer 2023. The following components are part of the Arista WAN Routing System:

- The Arista 5510 WAN Routing System for high-performance aggregation, transit hub deployment, and critical site routing which starts at $77,495.

- The Arista 5310 WAN Routing System for high-performance edge routing which starts at $21,495.

- Arista CloudEOS software for transit hub and scale-out routing in a virtual machine form factor.

- Arista CloudEOS, delivered through the public cloud for Cloud Edge and Cloud Transit routing, is available on AWS, Azure, Google Cloud Platform, and Platform Equinix.

- The CloudVision Pathfinder Service and CloudVision support for the Arista WAN Routing System are in field trials now and will be generally available in the second half of 2023.

About Arista Networks:

Arista Networks is an industry leader in data-driven client to cloud networking for large data center, campus, and routing environments. Arista’s award-winning platforms deliver availability, agility, automation, analytics, and security through an advanced network operating stack. For more information, visit www.arista.com.

ARISTA, EOS, CloudVision, NetDL and AVA are among the registered and unregistered trademarks of Arista Networks, Inc. in jurisdictions worldwide. Other company names or product names may be trademarks of their respective owners. Additional information and resources can be found at www.arista.com.

References:

Doug Gourlay (Arista) blog post: https://blogs.arista.com/blog/modernizing-the-wan-from-client-to-cloud

A video deep dive into many of the technologies and innovations, from Arista’s product and engineering leaders.

Arista enterprise WAN solutions: https://www.arista.com/en/solutions/enterprise-wan

Network World: https://www.networkworld.com/article/3691113/arista-embraces-routing.html

Facebook’s F16 achieves 400G effective intra-DC speeds using 100GE fabric switches and 100G optics; other hyperscalers?

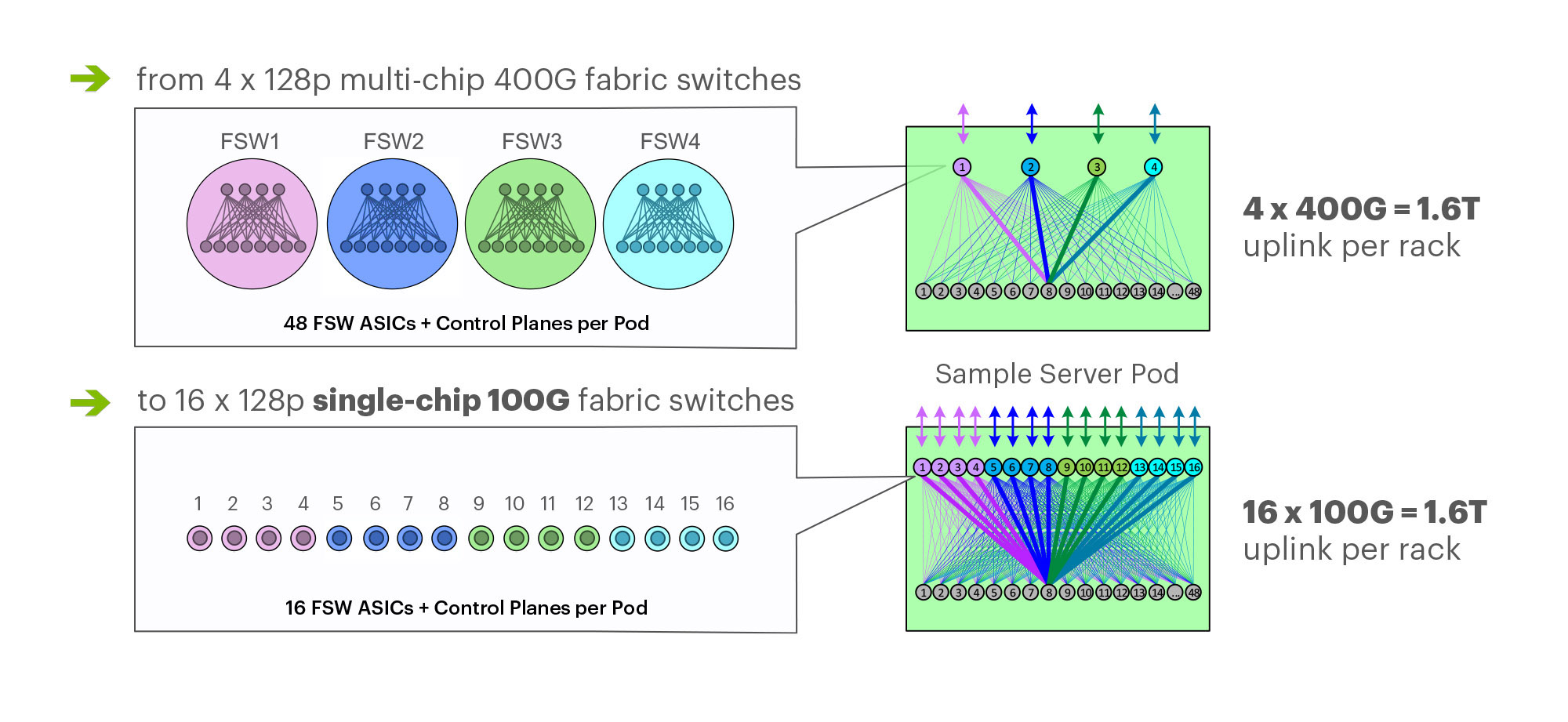

On March 14th at the 2019 OCP Summit, Omar Baldonado of Facebook (FB) announced a next-generation intra-data center (DC) fabric/topology called the F16. It has 4x the capacity of FB’s previous DC fabric design using the same Ethernet switch ASIC and 100GE optics. FB engineers developed the F16 using mature, readily available 100G CWDM4-OCP optics (contributed by FB to OCP in early 2017), which in essence gives their data centers the same desired 4x aggregate capacity increase as 400G optical link speeds, but using 100G optics and 100GE switching.

F16 is based on the same Broadcom ASIC that was the candidate for a 4x-faster 400G fabric design – Tomahawk 3 (TH3). But FB uses it differently: Instead of four multichip-based planes with 400G link speeds (radix-32 building blocks), FB uses the Broadcom TH3 ASIC to create 16 single-chip-based planes with 100G link speeds (optimal radix-128 blocks). Note that 400G optical components are not easy to buy inexpensively at Facebook’s large volumes. 400G ASICs and optics would also consume a lot more power, and power is a precious resource within any data center building. Therefore, FB built the F16 fabric out of 16 128-port 100G switches, achieving the same bandwidth as four 128-port 400G switches would.
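The bandwidth equivalence in the paragraph above can be checked with simple arithmetic. The sketch assumes a Tomahawk 3 switching capacity of 12.8 Tb/s per chip (Broadcom’s published figure, not stated in this post), so one chip can drive 128 ports at 100G but only 32 ports at 400G. "Capacity" here means the sum of fabric-switch port speeds, not a measured throughput; the helper names are hypothetical.

```python
# Assumption: Broadcom Tomahawk 3 switching capacity of 12.8 Tb/s per chip.
TH3_CAPACITY_GBPS = 12_800


def radix(link_speed_gbps: int, chip_capacity_gbps: int = TH3_CAPACITY_GBPS) -> int:
    """Number of ports a single chip can drive at the given link speed."""
    return chip_capacity_gbps // link_speed_gbps


def fabric_capacity_tbps(planes: int, ports_per_switch: int, link_speed_gbps: int) -> float:
    """Sum of port speeds across all fabric switches in all planes, in Tb/s."""
    return planes * ports_per_switch * link_speed_gbps / 1000


print(radix(100))  # 128: one chip builds a 128-port 100G switch
print(radix(400))  # 32: a 128-port 400G switch needs multiple chips

f16 = fabric_capacity_tbps(planes=16, ports_per_switch=128, link_speed_gbps=100)
alt = fabric_capacity_tbps(planes=4, ports_per_switch=128, link_speed_gbps=400)
print(f16, alt)  # 204.8 204.8
```

Both designs reach the same total, but the F16 choice keeps every fabric switch on a single chip, which is the property the post credits for lower power and simpler operations.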

Below are some of the primary features of the F16 (also see two illustrations below):

- Each rack is connected to 16 separate planes. With the FB Wedge 100S as the top-of-rack (TOR) switch, each rack has 1.6 Tb/s of uplink capacity and 1.6 Tb/s down to the servers.

- The planes above the rack comprise sixteen 128-port 100G fabric switches (as opposed to four 128-port 400G fabric switches).

- As a new uniform building block for all infrastructure tiers of the fabric, FB created a 128-port 100G fabric switch called Minipack: a flexible, single-ASIC design that uses half the power and half the space of Backpack.

- Furthermore, a single-chip system allows for easier management and operations.
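The per-rack figures in the list follow from one 100G uplink per plane. The sketch below assumes 16 server-facing 100G ports on the TOR (consistent with the 1.6T-down figure above) and computes uplink capacity and the resulting oversubscription ratio, where 1.0 means the TOR is non-blocking toward the fabric. The helper names are hypothetical.

```python
def rack_uplink_gbps(planes: int, uplink_speed_gbps: int) -> int:
    """Each rack has one uplink to every fabric plane."""
    return planes * uplink_speed_gbps


def oversubscription(downlink_gbps: int, uplink_gbps: int) -> float:
    """Ratio of server-facing to fabric-facing bandwidth (1.0 = non-blocking)."""
    return downlink_gbps / uplink_gbps


up = rack_uplink_gbps(planes=16, uplink_speed_gbps=100)  # 1.6 Tb/s
down = 16 * 100  # assumption: 16 x 100G server-facing ports
print(up, down, oversubscription(down, up))  # 1600 1600 1.0

# The four-plane 400G alternative gives each rack the same uplink capacity.
print(rack_uplink_gbps(planes=4, uplink_speed_gbps=400))  # 1600
```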

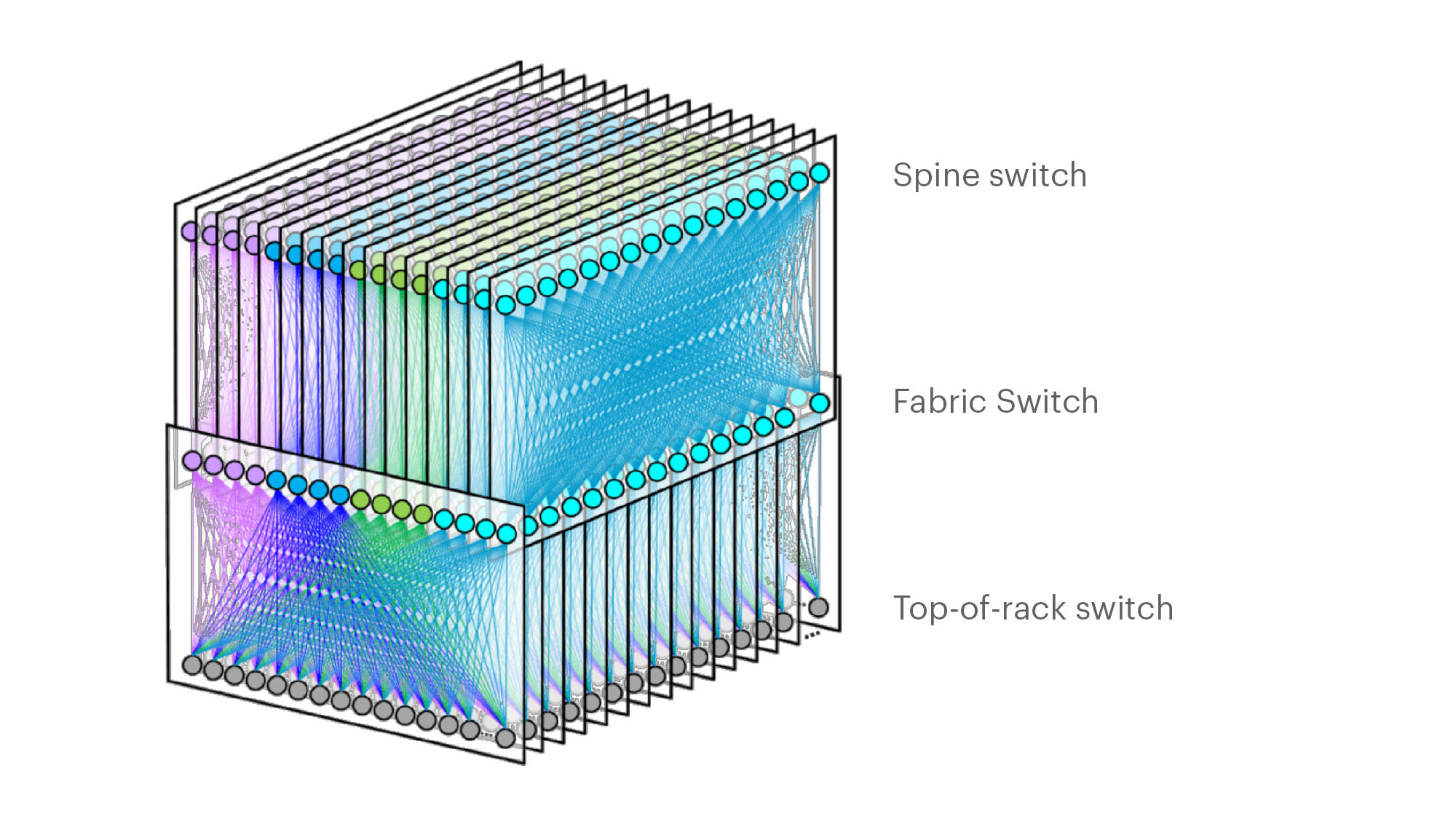

Facebook F16 data center network topology

………………………………………………………………………………………………………………………………………………………………………………………………..

Multichip 400 Gb/s pod fabric switch topology vs. FB’s single-chip (Broadcom ASIC) F16 at 100 Gb/s

…………………………………………………………………………………………………………………………………………………………………………………………………..

In addition to Minipack (built by Edgecore Networks), FB jointly developed the 7368X4 switch with Arista Networks. FB is contributing both Minipack and the Arista 7368X4 to OCP. Both switches run FBOSS, the software that binds together all FB data centers. The Arista 7368X4 can also run Arista’s own EOS network operating system.

According to a Facebook blog post, F16 is more scalable and simpler to operate and evolve, so FB’s DCs are better equipped to handle increased intra-DC throughput for the next few years. “We deploy early and often,” Baldonado said during his OCP 2019 session. “The FB teams came together to rethink the DC network, hardware and software. The components of the new DC are F16 and HGRID as the network topology, Minipack as the new modular switch, and FBOSS software which unifies them.”

This author was very impressed with Baldonado’s presentation: excellent content and flawless delivery, with insights into the motivation behind FB’s DC design methodology and testing!

References:

https://code.fb.com/data-center-engineering/f16-minipack/

………………………………………………………………………………………………………………………………….

Other Hyperscale Cloud Providers move to 400GE in their DCs?

Large hyperscale cloud providers initially championed 400 Gigabit Ethernet because of their endless thirst for networking bandwidth. Like so many other technologies that start at the highest end with the most demanding customers, 400GbE will eventually find its way into regular enterprise data centers. However, we’ve not seen any public announcement that it has been deployed inside hyperscale data center networks yet, despite its potential and promise!

Some large changes in IT and OT are driving the need to consider 400 GbE infrastructure:

- Servers are packed more densely than ever. Whether they are hyper-converged, blade, modular, or even just dense rack servers, density is increasing, and servers commonly feature dual 10 Gb or even 25 Gb network interface cards.

- Network storage is moving away from Fibre Channel and toward Ethernet, increasing the demand for high-bandwidth Ethernet capabilities.

- The increase in private cloud applications and virtual desktop infrastructure puts additional demands on networks as more compute is happening at the server level instead of at the distributed endpoints.

- IoT and massive data accumulation at the edge are increasing bandwidth requirements for the network.

A 400 GbE port can be split (broken out) into smaller increments, with the most popular options being 2 x 200 Gb, 4 x 100 Gb, or 8 x 50 Gb. At the aggregation layer, as bandwidth per port increases with these higher-speed connections, fewer ports will be needed and cabling requirements will become simpler.
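The breakout arithmetic and the port-count reduction can be illustrated with a small sketch. The 1.6 Tb/s aggregation figure is a hypothetical example, the helper names are invented for illustration, and which breakout modes a real port supports depends on the switch and optics.

```python
import math

PORT_SPEED_GBPS = 400


def breakout_modes(port_speed_gbps: int, child_speeds=(200, 100, 50)):
    """List (count, speed) splits of one port into equal-speed child links."""
    return [(port_speed_gbps // s, s) for s in child_speeds if port_speed_gbps % s == 0]


def ports_needed(aggregate_gbps: int, port_speed_gbps: int) -> int:
    """Ports required to carry an aggregate bandwidth at a given port speed."""
    return math.ceil(aggregate_gbps / port_speed_gbps)


print(breakout_modes(PORT_SPEED_GBPS))  # [(2, 200), (4, 100), (8, 50)]
# Hypothetical 1.6 Tb/s of aggregation capacity: 100G ports vs 400G ports.
print(ports_needed(1600, 100), ports_needed(1600, 400))  # 16 4
```

Carrying the same aggregate bandwidth on a quarter as many ports is what drives the lower port count and simpler cabling described above.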

Yet despite these advantages, none of the U.S.-based hyperscalers has announced deploying 400GE within their DC networks, either as a backbone or to connect leaf-spine fabrics. We suspect they are all using 400G for Data Center Interconnect (DCI), but we don’t know which optics are used or whether it uses Ethernet or OTN framing and OAM.

…………………………………………………………………………………………………………………………………………………………………….

In February, Google said it plans to spend $13 billion in 2019 to expand its data center and office footprint in the U.S. The investments include expanding the company’s presence in 14 states. The dollar figure surpasses the $9 billion the company spent on such facilities in the U.S. last year.

In a blog post, CEO Sundar Pichai wrote that Google will build new data centers or expand existing facilities in Nebraska, Nevada, Ohio, Oklahoma, South Carolina, Tennessee, Texas, and Virginia. The company will establish or expand offices in California (the Westside Pavilion and the Spruce Goose Hangar), Chicago, Massachusetts, New York (the Google Hudson Square campus), Texas, Virginia, Washington, and Wisconsin. Pichai predicts the activity will create more than 10,000 new construction jobs in Nebraska, Nevada, Ohio, Texas, Oklahoma, South Carolina, and Virginia. The expansion plans will put Google facilities in 24 states, including data centers in 13 communities. Yet there is no mention of what data networking technology or speed the company will use in its expanded DCs.

I believe Google still designs all its own IT hardware (compute servers, storage equipment, switch/routers, and Data Center Interconnect gear other than the PHY-layer transponders). It has also announced several AI processor chips of its own design, which presumably go into IT equipment that Google uses internally but doesn’t sell to anyone else. So Google doesn’t appear to be using any OCP-specified open source hardware. That’s in harmony with Amazon AWS, but in contrast to Microsoft Azure, which actively participates in OCP with its open-sourced SONiC, now running on over 68 different hardware platforms.

It’s no secret that Google has built its own Internet infrastructure from commodity components since 2004, resulting in nimble, software-defined data centers. The resulting hierarchical mesh design is standard across all its data centers. The hardware is dominated by Google-designed custom servers and Jupiter, the data center switching fabric Google introduced in 2012. With its economies of scale, Google contracts directly with manufacturers to get the best deals. Google’s servers and networking software run a hardened version of the Linux open source operating system. Individual programs have been written in-house.