Month: April 2016

AT&T Bets on Internet of Things + WSJ on the 600 MHz Band for Managed IoT Services

Preface: AT&T’s IoT initiative was formerly called “Emerging Devices” and was headed by AT&T executive Glenn Lurie.

Overview:

AT&T is looking to diversify its revenue stream by betting on the Internet of Things (IoT), which research company IDC predicts will be a $1.7 trillion market by 2020. The wireless industry sees growth opportunities in connected devices as well as in connected cars. “We’re just at the beginning,” said Chris Penrose, senior vice president of AT&T’s Internet of Things operations. “It is a top priority of our company to continue to be a leader in the IoT space,” he added.

The Internet of Things is reportedly a fast-growing area (we disagree, as it’s ultra-hyped) in which industrial and consumer firms and software providers are teaming up to offer smarter ways of doing things, such as predicting mechanical problems before they arise, controlling machines at home remotely, or integrating municipal services.

AT&T already has a strong position in the automotive industry, where it has 10 major carmakers using a platform it has developed to deliver services such as roadside assistance, weather reports or Internet radio to cars on the road.

“We have the ability not only to connect things … we also have the ability to enable the collection and the analytics of the data behind those as well as do it in a secure manner and do it globally,” Penrose said.

But he said AT&T does not insist that customers use its own platforms; instead, it allows them to use their preferred technology. “We want to be the very best collaboration partner,” he said. “Sometimes we will provide connectivity and that’s OK.”

At the Hannover Messe, AT&T announced new deals including one with Otis, in which it will connect data from Otis elevators to Microsoft’s Azure cloud, enabling Otis to access real-time equipment performance data. Penrose said in this case AT&T would add value through the security of its connection. In other cases, it might exploit its more mundane but essential expertise in areas like billing – a core competence of wireless carriers.

AT&T does not disclose how much revenue it makes from the Internet of Things. Rival Verizon said it made about $690 million in IoT revenue in 2015, up 18 percent year on year, out of total revenue of $132 billion.
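To put the Verizon figures in proportion, a minimal Python sketch can express IoT revenue as a share of total revenue and back out the implied 2014 IoT figure. It treats “about $690 million” as exact, and the 2014 number is derived from the quoted 18% growth rate; it is not a figure reported by Verizon.

```python
# Verizon 2015 figures as quoted above, in USD millions.
IOT_REVENUE_2015 = 690.0
TOTAL_REVENUE_2015 = 132_000.0
IOT_GROWTH_YOY = 0.18  # "up 18 percent year on year"


def share_pct(part: float, total: float) -> float:
    """Return part as a percentage of total."""
    return 100.0 * part / total


def implied_prior_year(current: float, growth: float) -> float:
    """Back out last year's value from this year's value and its YOY growth rate."""
    return current / (1.0 + growth)


iot_share_pct = share_pct(IOT_REVENUE_2015, TOTAL_REVENUE_2015)
iot_2014 = implied_prior_year(IOT_REVENUE_2015, IOT_GROWTH_YOY)

print(f"IoT share of total revenue: {iot_share_pct:.2f}%")
print(f"Implied 2014 IoT revenue: ${iot_2014:.0f}M")
```

On these numbers, IoT was roughly half a percent of Verizon's 2015 revenue, up from an implied base of about $585M.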

AT&T has about 26 million connected objects and is connecting more than a million more every quarter, Penrose said.

References:

http://www.reuters.com/article/us-at-t-interet-idUSKCN0XO2DF

https://www.business.att.com/enterprise/Portfolio/internet-of-things/

In an April 28th WSJ op-ed, “How to Bring the ‘Internet of Things’ to Life,” Jerome Rota wrote:

The Federal Communications Commission (FCC) is considering bids for its latest spectrum auction, of licenses to transmit in the 600 megahertz band, now that the March 29 application deadline has passed. Though it might seem arcane, the resale of this enormous swath of airwaves, freed from TV broadcasters by Congress in 2012 and now pitched toward wireless carriers, is hugely important. That’s because the 600 megahertz band could finally bring a techie buzzword, the Internet of Things, to fruition.

This low-frequency bandwidth, in layman’s terms, isn’t great for carrying big loads of data, so it won’t be much help in streaming high-definition Netflix on your iPhone. But it is fantastic at traveling long distances and penetrating buildings—past metal doors and through concrete basements, where your cell coverage now wanes. That’s important for keeping online those sensors and gadgets that we keep hearing will soon pepper our homes and apartments.

From a consumer perspective, the Internet of Things has not yet delivered on its promise. Plenty of cool, connected and smart stuff is available for purchase, but the technology still requires a prohibitive amount of effort to get going. And even then, it often doesn’t work the way it should. The communications protocols, gateways, routers and hubs leave consistent device compatibility somewhere between a technical chore and an impossible dream.

Perhaps worse, security remains a major issue, in part because it’s difficult to update software as device manufacturers release patches and bug fixes. Demonstrations uploaded to YouTube show the simplicity of hacking everything from baby monitors to coffee pots. With all the apps, boxes and devices needing updates, you would need a full-time IT specialist to make your home truly smart.

Instead of having to set up and maintain all of these networked devices, a wireless carrier could do it for you. The 600 megahertz band (FCC auction forthcoming) would ensure that a strong and secure signal is always available in any part of your home. Consider how this could change passive home-safety devices such as smoke alarms, air-quality monitors or flood sensors in dishwashers. The consumer could plug them in and forget them for years. They could be programmed to send text notifications if they sense something or need a battery change.

The Internet of Things could be listed as a service on wireless or cable bills. Consumers could purchase whatever smart stuff they want—a smart lightbulb or thermostat, say—and simply activate the devices with their mobile carriers upon installation. Device security or compatibility would be less of an issue, since they would be handled by a major telecom. The infrastructure already exists for this model to function. Making it work is merely a matter of setting up and marketing the service.

The telecom-empowered Internet of Things is already up and running at some modern hospitals, large corporations and municipalities. For example, AT&T has implemented the Internet of Things at Texas Medical Center in Houston, working with wheelchair-maker Permobil to produce a connected wheelchair. General Electric uses Verizon to provide connectivity for software-enabled industrial machines and devices. Scaling this technology for everyday consumers is a natural next step.

Although wireless carriers aren’t the most beloved firms in the eyes of most Americans, they could make life a lot easier once brought into home automation. If the pricing is reasonable, the Internet of Things will finally provide the promised service without all of the maddening management headaches. None of this is available to the buying public today. For such a model to emerge, carriers would have to both snap up the spectrum and push their partners to explore these commercial possibilities. But the broadband-spectrum auction hints at the possibility of metamorphosis.

Mr. Rota is the chief scientist of usability at Greenwave Systems. From the company’s website:

“Greenwave Systems is a global Internet of Things (IoT) software and services company whose disruptive Greenwave360™ model enables category-leading brands to quickly and profitably deploy managed services. Greenwave Systems empowers market leading brands to profitably deploy their own Internet of Things managed services and products to foster deeper customer relationships and grow their business.”
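The op-ed's claim that the 600 MHz band travels farther than higher cellular bands can be illustrated with the standard free-space path loss (FSPL) formula. This Python sketch is a simplification under stated assumptions: isotropic antennas, line of sight, and no building or foliage losses (those are also frequency dependent and are not modeled here). The 1,900 MHz comparison band and the 5 km distance are chosen only for illustration.

```python
import math


def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB, with distance in km and frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


DISTANCE_KM = 5.0  # illustrative path length (assumption)

loss_600 = fspl_db(DISTANCE_KM, 600.0)
loss_1900 = fspl_db(DISTANCE_KM, 1900.0)  # comparison band (assumption)

# The difference reduces to 20*log10(1900/600), so it does not depend on distance.
advantage_db = loss_1900 - loss_600

print(f"600 MHz: {loss_600:.1f} dB, 1,900 MHz: {loss_1900:.1f} dB, "
      f"advantage: {advantage_db:.1f} dB")
```

A roughly 10 dB advantage corresponds to about a tenfold difference in received power over the same free-space path.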

Akamai – Shift in Network Topology to Distributed Computing Clouds Long-Term Outlook, David Dixon FBR & Alan J Weissberger

Summary by David Dixon (Overview by Alan J Weissberger is below):

Akamai (AKAM) delivered another quarter of robust financial results that largely exceeded Wall Street expectations. Its security products continue to sell well, while the earlier departure of two of its largest customers weighed on media delivery solutions revenue, though this was mostly expected.

Although it is easy to gloss over the significance of the decline in media delivery solutions, as few customers have the scale to go DIY today, we believe the industry is in the early innings of a fundamental shift in network topology toward distributed computing over the next 12 months, coupled with the proliferation of datacenters and fiber that will greatly increase performance and reduce incremental costs associated with delivering media content directly via end-user cable and telecom networks. This should pave the way for further DIY adoption. Management has purposefully lowered the rate of head-count additions and pulled back on spending, which, we believe, is an attempt to preserve margins as revenue growth remains under pressure. We continue to believe that Akamai is on a downward dominance curve and anticipate greater revenue pressure going forward.

1Q16 Recap:

Consolidated revenues of $567.7M (+7.8% YOY) were ahead of consensus of $564.0M and our estimate of $558.1M. Media delivery solutions revenue declined 4.2% YOY, to $205.9M, below consensus of $208.7M. Performance and security solutions revenue grew 16.1% YOY, to $315.9M, versus consensus of $311.6M. Service and support solutions revenue increased 15.6% YOY, to $45.9M, versus consensus of $46.0M.
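The segment figures above can be cross-checked against the consolidated total with a short Python sketch. It uses only the rounded numbers quoted in the recap; the 1Q15 segment values are backed out from the stated growth rates and are implied, not reported.

```python
# Akamai 1Q16 segment revenue (USD millions) and YOY growth, as quoted above.
segments = {
    "media_delivery": (205.9, -0.042),
    "performance_and_security": (315.9, 0.161),
    "service_and_support": (45.9, 0.156),
}
REPORTED_TOTAL_M = 567.7
REPORTED_GROWTH = 0.078

segment_sum = sum(revenue for revenue, _ in segments.values())

# Back out each segment's 1Q15 revenue: current / (1 + growth).
prior_year = {name: rev / (1 + g) for name, (rev, g) in segments.items()}
implied_growth = segment_sum / sum(prior_year.values()) - 1

print(f"Segment sum: ${segment_sum:.1f}M (reported ${REPORTED_TOTAL_M}M)")
print(f"Implied consolidated YOY growth: {implied_growth:.1%}")
```

The three segments sum to the reported $567.7M, and their growth rates imply consolidated growth of about 7.8%, consistent with the headline figure.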

Adjusted EBITDA of $234.1M topped the consensus expectation of $228.4M, driven by growth in the top line. Adjusted EPS was $0.66, versus consensus of $0.63.

Will sales force investments and international expansion pay off?

Akamai continues to accelerate investment in its sales force. Most of the company’s hiring will focus on international markets, where the company believes the revenue opportunity could one day equal that of North America. We think the growth seen in international revenue supports the company’s decision to aggressively expand sales capacity and that the move could ultimately pay off.

Can newer products contribute enough to offset maturing core markets and drive sustained midteens, or better, growth?

Akamai’s focus over the past few years has been to increasingly diversify its business beyond media delivery and Web performance. Through acquisitions and investments, the company entered new end markets and doubled its addressable market. Akamai’s newer product groups (Web security, carrier products, and hybrid cloud optimization) are growing well, but overall growth is still determined by performance in Akamai’s slowing core markets. These businesses are achieving scale, but the core CDN business is slowing faster than expected, and the magnitude and timing of OTT opportunities are unclear.

Will Akamai’s business model be pressured over time by the irreversible mix shift of Internet traffic toward two-way content increasingly distributed on cloud-based architectures that provide compute and storage?

While the amount of Internet traffic is growing, there is an increase in DIY CDN business, and the amount of static, Akamai-cacheable data on the Web is falling as a percentage of the total amount of data with which customers interact. In 1999, the Web was a read-only medium with very little user-generated content, customization, etc. Today, the flow is much more bidirectional (and therefore uncacheable). We do not see that Akamai has a play here; it may resist this architecture shift, as moving into these growth areas would likely cannibalize the CDN revenue base. More acquisitions to enhance the enterprise security portfolio in the interim are likely as the company continues to diversify away from the commodity CDN business segment. Yet the market has responded to the unification of software accessing three types of storage by moving toward distributed, layered IaaS/PaaS systems (e.g., AWS) using HTTPS APIs (versus FTP), providing compute and storage (versus caching of object storage). Improved performance, reliability, and scale are occurring fast, and we expect many cloud customers that are not scaled up will still require a CDN for performance enhancement.

Conclusions:

We believe Akamai Technologies is in transition as its core media delivery business matures. The company has stepped up its diversification efforts, including (1) broadening the product set, (2) ramping sales hiring, and (3) expanding internationally. The long-term impact of these efforts could be positive, but we see increased pressure on Akamai’s CDN-based business model over time, driven by the irreversible mix shift of Internet traffic toward “two-way” content increasingly distributed on cloud-based architectures that provide compute and storage. We view the risk/reward at current levels as negative: Near-term positive momentum is more than offset by fundamental challenges in the CDN segment.

Remainder of this post is by Alan J Weissberger, IEEE ComSoc Community site content manager.

Overview:

Akamai is the largest provider of content delivery network (CDN) services. A content delivery network or content distribution network (CDN) is a globally distributed network of proxy servers deployed in multiple data centers. The goal of a CDN is to serve content to end-users with high availability and high performance.
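To make the definition concrete, here is a toy model of a single CDN edge node in Python. It is a hypothetical sketch, not how Akamai or any other provider implements caching: the `EdgeCache` class, the `cacheable` flag, the request paths and the LRU eviction policy are all assumptions chosen for illustration. It also mirrors the point made in the FBR summary: personalized, two-way traffic bypasses the cache, so a rising share of such traffic pulls the edge hit ratio down.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Toy CDN edge node (hypothetical): serves cacheable objects from a
    bounded LRU cache and forwards everything else to the origin server."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self.store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, path: str, cacheable: bool = True) -> bytes:
        if not cacheable:
            # Personalized or two-way content always goes back to the origin.
            self.misses += 1
            return self.fetch_from_origin(path)
        if path in self.store:
            self.hits += 1
            self.store.move_to_end(path)  # mark as most recently used
            return self.store[path]
        self.misses += 1
        body = self.fetch_from_origin(path)
        self.store[path] = body
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used entry
        return body

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


origin_calls = []


def origin(path: str) -> bytes:
    """Stand-in origin server that records every request it receives."""
    origin_calls.append(path)
    return f"content of {path}".encode()


edge = EdgeCache(capacity=2, fetch_from_origin=origin)
for _ in range(3):
    edge.get("/video/segment1.ts")               # static object, cacheable
    edge.get("/api/news-feed", cacheable=False)  # per-user response, uncacheable

print(f"hit ratio: {edge.hit_ratio():.2f}, origin requests: {len(origin_calls)}")
```

With one static and one uncacheable request per round, only the repeat requests for the static object are served from the edge, so two of six requests hit the cache.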

Akamai competes with Level 3 Communications (LVLT) and Limelight Networks (LLNW), as well as startups Fastly and CloudFlare. Verizon Communications (VZ), Amazon.com’s (AMZN) Amazon Web Services, IBM (IBM) and Comcast (CMCSA) are also emerging as new rivals in some parts of the CDN market.

Worries that customers such as Apple (AAPL) and Facebook (FB) are shifting some of their data traffic to their own CDNs have pressured Akamai, and analysts have lowered Q1 estimates.

Other Voices:

“Revenue guidance is slightly under consensus, due to year-over-year decline in revenue from two major media delivery customers (Apple and Facebook) that are taking more of their volume in-house,” Michael Olson, a Piper Jaffray analyst, said in a research report. “Importantly, the impact from these customers is becoming less material as they go from 11% of revenue in 2015 to around 6% in 2016.”

Colby Synesael, an analyst at Cowen & Co., says Akamai’s guidance might be too conservative.

“While we appreciate management’s decision to err on the side of being overly cautious after its surprising revelation regarding these two customers on its Q3 (2015) call, it highlights management’s lack of visibility with its own top customers,” he said in a report.

“Akamai has been very clear that the first half of 2016 would be marked by slower growth in the media segment, but then it expects (Internet TV) video to begin to accelerate growth. Similar to other large, secular growth opportunities, it is often difficult to project the exact timing of the opportunity, but we believe growth from (Internet TV) will begin to manifest in second half 2016,” said Michael Bowen, an analyst at Pacific Crest Securities, in a report.

References:

10 Best CDN Service Providers: Content Delivery Networks that Work

AT&T Facing Stiff Competition from T-Mobile: Analysis by David Dixon of FBR & Co.

Summary & Overview:

AT&T delivered in-line 1Q16 financial results, but domestic subscriber metrics were disappointing. While total wireless net adds of 2.3 million were impressive, most of this was driven by growth in Mexico, which has much lower wireless penetration and profitability than the U.S. market. Excluding Mexican postpaid net adds of 529,000, U.S. domestic postpaid subscriber growth was 129,000 (133,000 in the business segment, offset by a net loss of 4,000 in consumer mobility), a YOY decline of 70.8%.
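The domestic postpaid arithmetic in this paragraph can be reproduced in a few lines of Python. The 1Q15 comparison figure is implied by the stated 70.8% decline; it is not a number from the source.

```python
# AT&T 1Q16 U.S. postpaid net adds as quoted above (subscribers).
BUSINESS_NET_ADDS = 133_000
CONSUMER_MOBILITY_NET_ADDS = -4_000  # shown as "(4,000)", i.e. a net loss
YOY_DECLINE = 0.708                  # "a YOY decline of 70.8%"

us_postpaid_net_adds = BUSINESS_NET_ADDS + CONSUMER_MOBILITY_NET_ADDS

# A 70.8% decline leaves 29.2% of the prior-year figure, so divide by (1 - 0.708).
implied_1q15_net_adds = us_postpaid_net_adds / (1 - YOY_DECLINE)

print(f"U.S. postpaid net adds: {us_postpaid_net_adds:,}")
print(f"Implied 1Q15 comparison: {implied_1q15_net_adds:,.0f}")
```

In other words, a 70.8% decline implies roughly 442,000 domestic postpaid net adds in the year-ago quarter.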

We believe this is corroborated by T-Mobile’s very strong 1.75 porting ratio against AT&T during the quarter, the highest among T-Mobile’s competitors. With the DIRECTV acquisition now closed, AT&T is shifting toward bundling two-year contracts (wireless, video, broadband), which has not yet resonated with consumers, in our view. Pressure from T-Mobile should continue: Its rollout of extended LTE on 700 MHz A Block spectrum (covering 194 million PoPs) is running ahead of schedule, with plans to acquire additional A Block spectrum that will cover 48 million PoPs. We expect AT&T to continue its ramped-up fiber network investment strategy (despite our view that this generates negative NPV), due to increased market share erosion risk from cable, which enjoys a lower incremental cost curve.

Meanwhile, T-Mobile USA reported (April 26) materially better 1Q16 financial and operating results, versus consensus and our estimates. T-Mobile likely captured the bulk of the industry’s net subscriber adds this quarter, in our view. It is seeing increased success in capturing better quality market share at the expense of Sprint and AT&T, due to a similar network and device portfolio and customers balking at AT&T’s (pricier) bundles. Its porting ratio was highest against AT&T, at 1.75 this quarter as noted above, followed by Sprint at 1.35 and Verizon at 1.34. T-Mobile is riding high on momentum, yet our vendor checks suggest it has about two years of network capacity left, which bodes well for another kick at the M&A can in 2017, in our view.

Key Points:

■ 1Q16 results recap. Consolidated revenues increased 3.8% YOY to $40.5B, in line with the consensus estimate but modestly below our estimate of $40.8B. By segment: Business solutions delivered revenues of $17.6B, entertainment and Internet services had revenues of $12.7B, consumer mobility had revenues of $8.3B, and International had revenues of $1.9B. Adjusted EBITDA of $13.3B was in line with Street expectations and a hair below our estimate of $13.4B. EBITDA margins improved by 16 bps YOY, to 32.7%, driven by increased cost reductions. Mobility postpaid net adds were 28,294, and prepaid net adds were 12,171. Postpaid churn was 1.24%.

■ Accelerating wireline business deterioration. Legacy voice and data service revenues continue to worsen as customers switch to wireless or VoIP. We believe there is greater management urgency to shed the consumer wireline business to help fund low-band spectrum purchases. We think management is exploring additional consumer access line sales, given the looming wireless access substitution risk, higher costs, and the negative NPV profile of upgrading copper plant to fiber.

■ While we believe AT&T will continue to reap the benefits of an under-penetrated Mexican market and cost synergies and cross-selling opportunities with DTV, competition from T-Mobile USA will likely continue to weigh on wireless subscriber acquisitions in the medium term.
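The 1Q16 recap in the first bullet can be sanity-checked with a short Python sketch. Because the inputs are rounded to $0.1B, the margin computed from them (about 32.8%) differs slightly from the stated 32.7%; the sketch therefore also computes the range of margins consistent with that rounding, assuming each figure was rounded to the nearest $0.1B.

```python
# AT&T 1Q16 figures from the recap, in USD billions (rounded to $0.1B).
segment_revenue_b = {
    "business_solutions": 17.6,
    "entertainment_and_internet": 12.7,
    "consumer_mobility": 8.3,
    "international": 1.9,
}
REPORTED_REVENUE_B = 40.5
ADJ_EBITDA_B = 13.3
ROUNDING_B = 0.05  # half of the $0.1B reporting precision (assumption)

att_segment_sum = sum(segment_revenue_b.values())
margin_pct = 100 * ADJ_EBITDA_B / REPORTED_REVENUE_B

# Lowest margin: smallest possible EBITDA over largest possible revenue, and vice versa.
margin_low = 100 * (ADJ_EBITDA_B - ROUNDING_B) / (REPORTED_REVENUE_B + ROUNDING_B)
margin_high = 100 * (ADJ_EBITDA_B + ROUNDING_B) / (REPORTED_REVENUE_B - ROUNDING_B)

print(f"Segment sum: ${att_segment_sum:.1f}B")
print(f"Margin from rounded inputs: {margin_pct:.2f}%")
print(f"Range consistent with rounding: {margin_low:.2f}% to {margin_high:.2f}%")
```

The four segments add up to the reported $40.5B, and the stated 32.7% margin falls inside the range allowed by rounding.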

Outlook for discounted handset eligibility:

Declining iPhone churn confirmed that the loss of exclusivity and resurgence of T-Mobile US were manageable. Extended upgrade eligibility, upgrade fees, and accelerated upgrade opportunities through AT&T Next have material margin implications, and we expect continued discipline on upgrade eligibility, even at the risk of losing flow share to Verizon and T-Mobile. With the LTE network buildout complete and AT&T diversifying into Mexico to alleviate churn pressures, further changes to upgrade eligibility are likely.

Can AT&T drive earnings growth?

Smartphone activations remain significant. Strategic initiatives with Samsung and Google, coupled with support of the Windows Phone ecosystem by MSFT, NOK, and other OEMs, are key to lowering wireless subsidy pressure, but it is early days.

We think AT&T will continue to consider pricing action to augment growth once the LTE network build is complete, but competitive intensity is likely to increase in FY16, so this will prove difficult absent consolidation or until T-Mobile US becomes spectrum challenged, which we think is still one year away and a function of T-Mobile US’s commitment to continue network investment.

How will AT&T fare in the changing wireless landscape in 2016 and beyond? Our strategic concerns for AT&T include:

(1) the Apple eSIM impact, should Apple be successful in striking wholesale agreements;

(2) the Google MVNO impact, which could strip the company of the last bastion of connectivity revenue; and

(3) a Wi-Fi first network from Comcast, coupled with a wholesale agreement with a carrier, which would enable a competitor and increase pricing pressure.

Does AT&T have a sustainable spectrum advantage compared with other carriers?

AT&T is behind Verizon in spectrum and out of spectrum in numerous major markets, according to our vendor checks. However, with additional density investment, it is reasonably well positioned to benefit from the combination of coverage layer (700 MHz and 850 MHz) and capacity layer (1,700 MHz and 1,900 MHz and soon-to-be-confirmed 2,300 MHz) spectrum and will focus on LTE and LTE Advanced, as well as refarming 850 MHz/1,900 MHz spectrum for additional coverage and capacity. Yet this nonstandard LTE band will cost more capex and take longer to implement. In the short run, aggressive cell splitting is expected, and metro Wi-Fi and small-cell solutions with economical backhaul are becoming available, allowing for greater surgical reuse of existing spectrum. Sprint’s differentiation through Clearwire spectrum in FY16 is only likely to modestly affect AT&T relative to Verizon. Furthermore, with 70% to 80% of wireless data traffic on Wi-Fi and only 20% of capacity utilized, we expect AT&T to focus on Wi-Fi to manage data usage growth.

Conclusions:

We expect the wireless segment to continue to be challenged by a resurgent T-Mobile USA. We are less bullish on near-term improvements in capex intensity, due to cultural challenges associated with the much-needed migration to software-centric networks, coupled with the need to upgrade its fiber plant aggressively to improve its competitive positioning and lay the foundation for efficiency improvement.

Google’s Internet Access for Emerging Markets – Managed WiFi Network for India Railways

Introduction:

Speaking at the NFV World Congress in San Jose, CA, on Thursday, April 21, 2016, Geng Lin, head of global engineering in emerging markets at Google Access (part of Google parent company Alphabet), described his division’s strategy for improving Internet access and affordability in developing countries. He then cited India RailTel WiFi as a real-world “case study” of extending Internet access to millions of new users via a managed WiFi network designed and developed by the Google Access division.

Internet Access in Developing Countries:

There are 3.2B unique Internet users worldwide, of which 2B are in developing countries. Yet 4B people in developing countries remain offline, which is two-thirds of that population. India is the most promising market for Google Access; other markets include Indonesia, Brazil, and sub-Saharan Africa.
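The “two-thirds” figure follows from the other numbers in this paragraph, assuming the 2B online and 4B offline together make up the whole population of developing countries. A minimal Python check under that assumption:

```python
# Figures quoted above, in billions of people.
WORLD_INTERNET_USERS_B = 3.2
DEVELOPING_ONLINE_B = 2.0
DEVELOPING_OFFLINE_B = 4.0

# Assumes online + offline covers the entire developing-country population.
developing_population_b = DEVELOPING_ONLINE_B + DEVELOPING_OFFLINE_B
offline_share = DEVELOPING_OFFLINE_B / developing_population_b
developing_share_of_users = DEVELOPING_ONLINE_B / WORLD_INTERNET_USERS_B

print(f"Implied developing-country population: {developing_population_b:.1f}B")
print(f"Offline share: {offline_share:.0%}")
print(f"Developing countries' share of Internet users: {developing_share_of_users:.1%}")
```

The same numbers also imply that developing countries already account for 62.5% of the world's Internet users.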

Internet Access for the Emerging Markets/Regions:

Three methods were proposed for Internet access in developing nations/regions*:

- Fiber/Wireline combined with terrestrial wireless for high population density areas.

- Aerial wireless (balloons) combined with terrestrial wireless for medium density areas.

- Aerial wireless (balloons) combined with satellite/space communications for low density areas.

*Geng’s comment (via email): “Each of the transmission technologies noted above can be used for both access and backhaul for the respective density areas.”

India Has Great Growth Potential for Internet Access:

India is a key emerging market for Google Access as there’s only 20% Internet penetration in a country of 1.2B people. Mobile users comprise 60% of the Indian population, but many only have cellular voice/text with no Internet access. There are only 200M smartphone users (16% of the total population) in India. With 100M new Internet users in the last year (2015) and many more wanting connectivity in coming years, there’s huge potential to significantly grow the number of broadband Internet users in India.
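The India percentages can be turned into absolute numbers with a similar Python sketch. Using the 1.2B population quoted here, 200M smartphone users works out to about 16.7% of the population, slightly above the quoted 16%. The absolute counts below (Internet users, mobile users, mobile users without a smartphone) are implied by the stated percentages, not separately reported.

```python
POPULATION_M = 1_200          # 1.2B people, in millions
INTERNET_PENETRATION = 0.20   # "only 20% Internet penetration"
MOBILE_SHARE = 0.60           # "Mobile users comprise 60%"
SMARTPHONE_USERS_M = 200

internet_users_m = POPULATION_M * INTERNET_PENETRATION
mobile_users_m = POPULATION_M * MOBILE_SHARE
smartphone_share_pct = 100 * SMARTPHONE_USERS_M / POPULATION_M

# Mobile users without a smartphone, the group the text describes as mostly voice/text.
non_smartphone_mobile_m = mobile_users_m - SMARTPHONE_USERS_M

print(f"Implied Internet users: {internet_users_m:.0f}M")
print(f"Implied mobile users: {mobile_users_m:.0f}M")
print(f"Smartphone share of population: {smartphone_share_pct:.1f}%")
print(f"Mobile users without a smartphone: {non_smartphone_mobile_m:.0f}M")
```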

RailTel WiFi Project:

Last fall, Google announced in a blog post that it was partnering with state-owned India Railways and India’s RailTel (which owns a pan-India fiber optic network with exclusive right of way along railway tracks) to provide WiFi-based Internet access in 400 Indian train stations.

The WiFi to be deployed is much more than “best effort” transport, Geng said. It’s a highly reliable, managed WiFi network: “This is a WiFi service that operates as a highly managed service. You could think of it as carrier class,” he added.

Many new Indian Internet users are expected each week when the managed WiFi network is fully deployed. The service was turned on in January 2016 in Mumbai’s central station, according to a WSJ article. More train stations have been added this month (see Sidebar below). When fully deployed, it will be the world’s largest WiFi network!

Sidebar- from the referenced WSJ article:

“Everyone who comes here will get high quality, high-definition streaming,” said Gulzar Azad, head of access programs for Google in India. He said 100,000 people pass through Mumbai Central station daily.

At the station, users will be able to get high-speed Internet for the first hour. Then the speed slows to “normal,” Mr. Azad said. The Wi-Fi service is available on all the station’s platforms, but cuts off as soon as one steps outside the station.

Passengers at Mumbai’s railway station try out Google’s free Wi-Fi service on Jan. 22, 2016.

Composition of Google’s Managed WiFi Network:

Two critical technologies are used in Google’s managed WiFi network in India (and other countries in the future):

1. Cloud based Evolved Packet Core (EPC) control plane, which can massively scale to accommodate millions of new users.

2. Cloud platform based Service Operations for service level modeling, monitoring, and reporting (i.e. OSS type functions). Google’s cloud-based service operations is also delivering advanced analytics and service level monitoring to improve the customer experience.

The third piece of this managed network is the WiFi Radio Access Network (RAN), which is composed of WiFi Access Points (APs) and wireless LAN controllers. It’s fully automated, with minimal staff required for maintenance.

Google is leveraging India Railways’ 45,000 km fiber optic backbone network for WiFi backhaul to Indian ISPs (not disclosed). There’s evidently fiber optic cable to each train station in India that will have managed WiFi access.

–>Google combines and integrates the Wi-Fi RAN with its cloud-based EPC to provide the total access network, which is connected to India RailTel’s fiber optic backbone to reach the ISPs’ points of presence.

Attributes of WiFi based Internet Access (Google-India Railtel):

- Google’s service operational cost is expected to be sub $ per month per subscriber. Geng’s clarification comment via email: “What I described was the service operation cost. However, we didn’t calculate the cost of fiber bandwidth etc. which we’re not able to know until we have more data.”

- Users in India are flocking to the service, which is currently being offered at no charge. Long-term, Google plans to offer a paid service, but it will also offer some level of WiFi service for free.

- End to end service stability has been superb so far, with 99.94% availability in the last week, according to a report Geng said he printed out Thursday morning. That makes for a great Internet user experience!

- 10 WiFi enabled train stations have been deployed since January 2016, with a total of 100 stations to be WiFi enabled by the end of 2016. The list of train stations is here.

- “The network is now live in 10 key stations across the country and will enable about 1.5 million people to access high-speed Internet service. We are scaling up our efforts to roll out the network quickly to cover some of smaller stations where connectivity is much more limited,” Google India head of access project Gulzar Azad told PTI.

- The aggregated WiFi train traffic has grown by 700% in the past month.

- Current average usage time is about 50 minutes per day or 366M bytes per user. “This is more intensive usage than an LTE network user,” Lin said.

- Users can download HD videos and use multimedia apps on their mobile devices without interruption.

- 400 WiFi-equipped train stations, with a total of 10M daily Internet users in India, are projected in coming years as this project nears completion.
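Several of the metrics in this list can be translated into more intuitive units. The Python sketch below rests on three interpretations that are assumptions, not statements from the source: “366M bytes” means decimal megabytes (10^6 bytes), the 50 minutes and 366M bytes describe the same daily usage, and “grown by 700%” means an eightfold increase.

```python
# Metrics from the list above.
AVAILABILITY = 0.9994          # 99.94% over the last week
BYTES_PER_USER_DAY = 366e6     # "366M bytes", assumed to be decimal megabytes
MINUTES_PER_USER_DAY = 50
TRAFFIC_GROWTH_PCT = 700       # "grown by 700% in the past month"

MINUTES_PER_WEEK = 7 * 24 * 60

# Unavailability over the one-week measurement window.
weekly_downtime_min = (1 - AVAILABILITY) * MINUTES_PER_WEEK

# Average throughput while a user is active: bits transferred / seconds online.
avg_throughput_mbps = BYTES_PER_USER_DAY * 8 / (MINUTES_PER_USER_DAY * 60) / 1e6

# An increase of 700% means the new level is 1 + 7 = 8 times the old one.
growth_factor = 1 + TRAFFIC_GROWTH_PCT / 100

print(f"Downtime implied by 99.94% over a week: {weekly_downtime_min:.1f} minutes")
print(f"Average throughput while active: {avg_throughput_mbps:.2f} Mbit/s")
print(f"Traffic growth factor: {growth_factor:.0f}x")
```

Under these readings, 99.94% availability over a week allows about six minutes of downtime, and the reported usage corresponds to an average of roughly 1 Mbit/s sustained over a 50-minute session.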

Author’s Notes:

1. It should be noted that Google’s free WiFi will only work at the railroad platforms and is not meant for use within the trains. According to Marian Croak, Google VP for Access and Emerging Markets, the company sees a potential of 10 million users accessing its WiFi each day by the end of 2016 from across these railway stations. Google wants to make sure that these 10 million users get broadband quality experience when using its managed Wi-Fi network.

2. In an effort to compete with Amazon’s AWS, Google’s been on a growth spurt with its Cloud Platform (AKA the global Google Compute Engine), which manages and controls this WiFi network. Last month the company said it is adding 12 regions (i.e., data centers) by the end of 2017.

3. Google’s huge infrastructure of advanced data centers is a big driver of capital expenditures, which hit nearly $10 billion last year. Many of those data centers are used to create Google’s Cloud Platform.

Conclusions – Looking to the Future:

In closing, Lin said:

- Google’s cloud based EPC and cloud based service operation will scale to tens of millions of users for this WiFi Railtel project.

- The network architecture is extensible to possibly reach hundreds of millions or even a billion users by leveraging Google’s cloud data center platform.

Geng’s comment via email: “While conceptually correct, this perhaps is still a bit early to make a strong defensible statement.”

- The cloud EPC and cloud based service operation could also be offered as a wholesale service on the Google Compute Engine to incumbent carriers (not disclosed) that Google is now partnering with for the WiFi RailTel project.

End Note:

Revenue from what Google/Alphabet calls “Other Bets” or “Moon Shots,” including its Google Fiber business, driverless (autonomous) cars and the Nest thermostat, was $166 million, more than double what it was in the first quarter of 2015. However, losses for “Other Bets” rose to $802 million in the last quarter, from $633 million. Presumably, the “Other Bets” include Internet access via balloons and the managed WiFi network for India RailTel/India Railways.

The majority of those costs, according to Alphabet CFO Ruth Porat, are driven by the expense of building out Google Fiber. Alphabet plans to increase spending on bets that show promise, ask outside investors to contribute where it makes sense, perhaps around driverless cars, and cut down on bets where multiple teams are pursuing a similar objective.

Google CEO Sundar Pichai cited the development of machine learning and artificial intelligence as key factors driving the company’s growth. It remains to be seen whether the company can turn any of these research initiatives into a real profit-making business.
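The change in “Other Bets” figures quoted in this End Note works out as follows in a short Python sketch. It uses only the figures in the text; the $83M ceiling on year-ago revenue is implied by “more than double,” not reported.

```python
LOSS_NOW_M = 802     # "Other Bets" loss, latest quarter (USD millions)
LOSS_PRIOR_M = 633   # comparison-period loss quoted in the text
REVENUE_NOW_M = 166  # "Other Bets" revenue, latest quarter

loss_increase_pct = 100 * (LOSS_NOW_M - LOSS_PRIOR_M) / LOSS_PRIOR_M

# "More than double" means the year-ago revenue was below half of today's figure.
prior_revenue_ceiling_m = REVENUE_NOW_M / 2

print(f"Increase in Other Bets losses: {loss_increase_pct:.1f}%")
print(f"Year-ago revenue was below: ${prior_revenue_ceiling_m:.0f}M")
```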

Comment by Michael Howard, co-founder of Infonetics and Principal Analyst at IHS:

When I spoke with Geng afterward, he observed the following:

1. Google’s WiFi service differs from Facebook’s (FB) similar free network initiatives, Terragraph and Project ARIES:

a] Google’s WiFi provides full Internet access;

b] FB has a walled garden with access limited to a FB-chosen set of websites.

–>Geng said Google will not do location- or subscriber-based advertising. I think he hinted that FB does this or will be doing it in their network?

2. Passengers will now want to spend more time in the stations, and RailTel ridership will increase.

My observation:

1. Google makes the large majority of its revenue from search, which this service will open up to more people.

2. Google and FB are in a race to capture the billions of people on the planet who are not yet connected to the Internet; thus Google’s service is designed to differ from FB’s.

Update- Sept 27, 2016:

Google today announced a collection of updates aimed at helping get ‘the next billion’ internet users in India and emerging markets online. One of the more subtle yet interesting components to that push is the launch of Google Station, a project to enable free public Wi-Fi hotspots, which is now open for new partners.

In India alone, Google estimates that 10,000 people go online for the first time each hour, while in Southeast Asia the figure is 3.8 million per month.

The company made a big push on the free Wi-Fi initiative, and today it revealed that it now covers 50 stations, providing internet access to 3.5 million people each month (up from 1.5 million in June). Google and RailTel are targeting 400 stations nationwide, and Google has now opened the program up to other public organizations.

Google Station is aimed at all manner of public venues, from malls to bus stops, city centers and cafes, and not only in India, Google said.

“We’re just getting started and are looking for a few strategic, forward-thinking partners to work with on this effort,” it added in a statement.

For all the excitement around how Google is disrupting U.S. internet access with Project Fi and Google Fiber, Google Station has the potential to go even further by giving the hundreds of millions who lack decent-quality internet access a reliable way to get online regularly for the first time. That is potentially life-changing for many.

No doubt the project is in its early days, but it has the potential to do an incredible amount of good — and hopefully without running into conflicts of interest, as the free internet project from Facebook did.

https://techcrunch.com/2016/09/27/google-station-free-wifi-hotspots/

DISH Network’s Spectrum Strategy & Business Outlook, by David Dixon of FBR & Co.

Overview:

We continue to anticipate that DISH will opportunistically acquire spectrum in the incentive auction, taking advantage of VZ’s and T’s likely limited participation due to their greater emphases on spectrum reuse and weaker balance sheets (due to the AWS-3 auction). We believe DISH’s spectrum portfolio is progressively devaluing as the industry embraces low-cost, unlicensed and shared spectrum, namely, the 3.5 GHz spectrum band, to solve metro density challenges.

3.5 GHz spectrum is moving more quickly than expected. An ecosystem is quickly building around 150 MHz of shared 3.5 GHz spectrum, which can be deployed in 2017 ahead of the priority licenses to be auctioned in 2017. Contrary to consensus, 150 MHz of 3.5 GHz spectrum should be a significant “5G metro hotspot” game changer. We expect this trend to meaningfully shift the spectrum supply curve, leading to a devaluation of DISH’s spectrum.

What is the value creation potential of DISH’s wireless spectrum portfolio?

DISH has assembled a potentially solid spectrum position, but the market values this spectrum too highly today. First, it needs to be combined with PCS, G, H, and AWS-4 bands to be optimal. Second, DISH is positioning its spectrum as downlink only; but, with the advent of the smartphone camera and enterprise mobility, uplink and downlink traffic will become more balanced. Third, in light of declining wireless revenues, the wireless industry is undergoing a major strategic rethink with respect to spectrum utilization (i.e., use of unlicensed spectrum for low-cost, small cell deployments, where 80% of traffic is occurring). The key to valuation is how soon the buyer of spectrum needs to move and the appetite for regulatory approval. AT&T and Verizon appear to be prime candidates, with major spectrum challenges in major markets, but they have the highest degree of regulatory risk and are in the midst of a strategic shift regarding their spectrum utilization paths going forward. Furthermore, even if there were appetite for a deal, we do not think a deal will be successful for either of the two major wireless operators until Sprint and/or T-Mobile US become significantly stronger operators. While the AWS-3 auction provided important market direction in valuing DISH’s spectrum portfolio, DISH continues to face increased erosion of its pay TV customer base and needs to move quickly, in our view, despite extended buildout milestones. Post-SoftBank and post-Clearwire, Sprint is well positioned on capacity for four or five years and does not need to move quickly; T-Mobile is well positioned on spectrum to manage capacity needs for four to five years, according to Ericsson, so we believe DISH should move to acquire T-Mobile ahead of a Comcast MVNO launch. But we see more strategic alliance opportunities between T-Mobile and Comcast or Google.

What is the long-term outlook for DISH Network’s organic business?

DISH faces a continued, competitive ARPU and churn disadvantage to cable operators and can only resell broadband. Network trials confirm major challenges with the fixed-broadband business model. We see a more challenging cash flow outlook as a result. Today, as the business slows, margin expansion comes from lower success-based installation costs; but fixed costs rise when DISH loses customers. DISH has to do something soon on the strategic front, particularly as AT&T is positioning to deploy fiber deeper across its access networks in additional markets, potentially covering an incremental 10 million to 15 million homes.

We believe increased competitive challenges in the pay TV market are not adequately balanced by DISH’s options in the wireless arena, which may take longer than expected to achieve. While a bearish signal, an acquisition of a wireless operator is a possible scenario, with T-Mobile being a potential target, in our view. However, we think that a spectrum lease appears to be the more likely outcome, but this may take longer to achieve.

Hyper Scale Mega Data Centers: Time is NOW for Fiber Optics to the Compute Server

Introduction:

Brad Booth, Principal Architect, Microsoft Azure Cloud Networking, presented an enlightening and informative keynote talk on Thursday, April 14th at the Open Server Summit in Santa Clara, CA. The discussion highlighted the tremendous growth in cloud computing/storage, which is driving the need for hyper network expansion within mega data centers (e.g. Microsoft Azure, Amazon, Google, Facebook and other large-scale service providers).

Abstract:

At 10 Gb/s and above, electrical signals cannot travel beyond the box unless designers use expensive, low-loss materials. Optical signaling behaves better but requires costly cables and connectors. For 25 Gb/s technology, datacenters have been able to stick with electrical signaling and copper cabling by keeping the servers and the first switch together in the rack. However, for the newly proposed 50 Gb/s technology, this solution is likely to be insufficient. The tradeoffs are complex here since most datacenters don’t want to use fiber optics to the servers. The winning strategy will depend on bandwidth demands, datacenter size, equipment availability, and user experience.

Overview of Key Requirements:

- To satisfy cloud customer needs, the mega data center network should be built for high performance and resiliency. Unnecessary network elements/equipment don’t provide value (so should be eliminated or at least minimized).

- It’s critically important to plan for future network expansion (scaling up) in terms of more users and higher speeds/throughput.

- For a hyper scale cloud data center (CDC), the key elements are: bandwidth, power, security, and cost.

- “Crop rotation” was also mentioned, but that’s really an operational issue of replacing or upgrading CDC network elements/equipment approximately every 3-4 years (as Brad stated in a previous talk).

Channel Bandwidth & Copper vs Fiber Media:

Brad noted what many transmission experts have already observed: as bit rates continue to increase, we are approaching the Shannon limit on the capacity of a given channel. To increase channel capacity, we need to either increase the bandwidth of the channel or improve its signal-to-noise (S/N) ratio. However, as the bit rate increases over copper or fiber media, the S/N ratio decreases. Therefore, the bandwidth of the channel needs to improve.

“Don’t ever count out copper” is a common phrase. Copper media bandwidth has continued to increase over short (intra-chassis/rack), medium (up to 100 m) and longer distances (e.g. vectored DSL/G.fast up to 5.5 km). While copper cable has a much higher signal loss than optical fiber, it’s inexpensive and avoids the Electrical-to-Optical (server transmit), Optical-to-Electrical (server receive) and Optical-to-Electrical-to-Optical (OEO, for a switch) conversions that are needed when fiber optic cable is the transmission medium.
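To make the trade-off concrete, here is the Shannon-Hartley formula, C = B·log2(1 + S/N), as a short Python sketch. The 10 GHz bandwidth and the SNR values are hypothetical, chosen only for illustration, not figures from Brad's talk. Capacity grows linearly with bandwidth B but only logarithmically with S/N, which is why a channel whose S/N is falling has to gain bandwidth to go faster.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley capacity C = B * log2(1 + S/N) of an ideal noisy channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert S/N from dB to a power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


# Hypothetical channel: 10 GHz of bandwidth evaluated at a few S/N values.
for snr_db in (10, 20, 30):
    capacity = shannon_capacity_bps(10e9, snr_db)
    print(f"S/N {snr_db} dB -> {capacity / 1e9:.1f} Gb/s")
```

At high S/N, each extra 10 dB adds only about log2(10) ≈ 3.3 bits/s per Hz, whereas doubling B doubles C outright.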

Fiber Optics is a much lower loss media than copper with significantly higher bandwidth. However, as noted in a recent ComSoc Community blog post, Bell Labs said optical channel speeds are approaching the Shannon capacity limits.

Brad said there’s minimal difference in the electronics associated with fiber transmission, with the exception of the EO, OE or OEO conversions needed.

A current industry push to reach a cost of under $1 per Gb/s of speed would enable on-board optics to compete strongly with copper-based transmission in a mega data center (cloud computing and/or large network service providers).
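The $1 per Gb/s target is simple arithmetic: component price divided by line rate. The sketch below assumes two hypothetical 100G module prices (illustrative only, not market data) and checks each against the target.

```python
def cost_per_gbps(module_price_usd: float, line_rate_gbps: float) -> float:
    """Price of one optical module divided by its line rate, in $ per Gb/s."""
    if line_rate_gbps <= 0:
        raise ValueError("line rate must be positive")
    return module_price_usd / line_rate_gbps


TARGET_USD_PER_GBPS = 1.0  # the industry target cited in the text

# Hypothetical module prices, for illustration only.
for price, rate in [(400.0, 100.0), (90.0, 100.0)]:
    c = cost_per_gbps(price, rate)
    verdict = "meets" if c < TARGET_USD_PER_GBPS else "misses"
    print(f"${price:.0f} {rate:.0f}G module: ${c:.2f} per Gb/s ({verdict} the target)")
```

This is a per-module figure; a point-to-point link needs a module at each end, so a per-link comparison with copper would double it.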

Optics to the Server:

Fiber optics is certainly a more reliable and more speed-scalable medium than copper. Even assuming the cost per Gb/s decreases to under $1 as expected, there are operational challenges in running fiber optics (rather than twinax copper) between (compute) servers and (Ethernet) switches. They include installation, thermal/power management and cleaning.

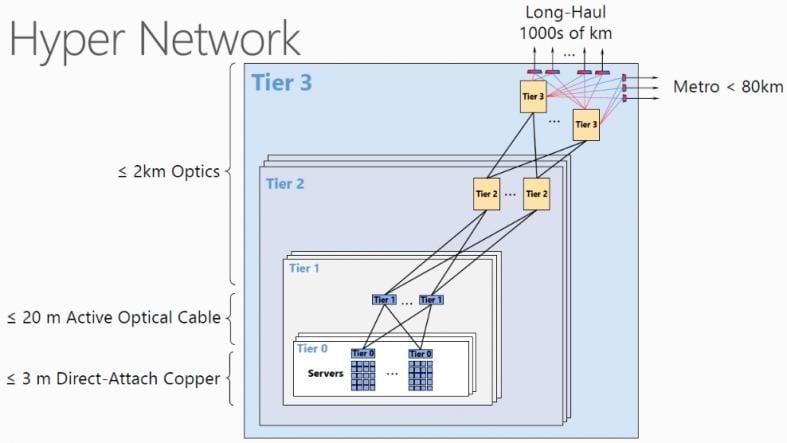

In the diagram above, each compute server rack has a Top of Rack (ToR) switch that connects to two Tier 1 switches for redundancy and possible load balancing. Similarly, each Tier 1 switch interconnects with two Tier 2 switches.

Figure (courtesy of Microsoft) illustrates various network segments within a contemporary Mega Data Center which uses optical cables between a rack of compute servers and a Tier 1 switch, and longer distance optical interconnects (at higher speeds) for switch to switch communications.
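The dual-homed arrangement in the diagram can be modeled as a small map from each switch to its uplinks. This Python sketch assumes, purely for illustration, a single shared pair of Tier 1 switches and a single pair of Tier 2 switches; the switch names and rack count are hypothetical. It lists every ToR-to-Tier 2 path and shows that losing one Tier 1 switch still leaves a path.

```python
from __future__ import annotations

# Hypothetical switch names; the real fabric's size and naming are not given.
TIER1 = ["T1-a", "T1-b"]
TIER2 = ["T2-a", "T2-b"]


def build_fabric(num_racks: int) -> dict[str, list[str]]:
    """Map each switch to its uplinks: every ToR is dual-homed to two Tier 1
    switches, and every Tier 1 switch uplinks to two Tier 2 switches."""
    fabric: dict[str, list[str]] = {f"ToR-{r}": list(TIER1) for r in range(num_racks)}
    for t1 in TIER1:
        fabric[t1] = list(TIER2)
    return fabric


def paths_to_tier2(fabric: dict[str, list[str]], tor: str) -> list[tuple[str, str, str]]:
    """Enumerate every ToR -> Tier 1 -> Tier 2 path."""
    return [(tor, t1, t2) for t1 in fabric[tor] for t2 in fabric[t1]]


def surviving_paths(fabric: dict[str, list[str]], tor: str, failed: set[str]):
    """Keep only the paths that avoid every failed switch."""
    return [p for p in paths_to_tier2(fabric, tor) if not failed.intersection(p)]


fabric = build_fabric(num_racks=4)
print(len(paths_to_tier2(fabric, "ToR-0")))             # 4 paths with all switches up
print(len(surviving_paths(fabric, "ToR-0", {"T1-a"})))  # 2 paths survive one Tier 1 failure
```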

Brad suggested that a new switch/server topology would be in order when there’s a large-scale move from twinax copper direct attach cables to fiber optics. The server to Top of Rack (ToR) switch topology would be replaced by a distributed mesh topology where Tier 1 switches (first hop to/from the server) connect to a rack of servers via fiber optic cable. Brad said Single Mode Fiber (SMF), which had previously been used primarily in WANs, was a better choice than Multi Mode Fiber (MMF) for intra-data center interconnects up to 500 m. Katherine Schmidke of Facebook said the same thing at events that were summarized at this blog site.

Using SMF at those distances, the Tier 1 switch could be placed in a centralized location rather than at the top of each rack, as today’s ToR switches are. A key consideration for this distributed optical interconnection scenario is moving the EO and OE conversions closer to the ASIC used for data communications within each optically interconnected server and switch.

Another advantage of on-board optics is shorter PCB (printed circuit board) trace lengths, which increase reliability and circuit density while decreasing signal loss (which increases with distance).

However, optics are very temperature sensitive and operate best below about 70 degrees Celsius. Enhanced thermal management techniques will be necessary to control the temperature and the heat generated by power consumption.
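Why shorter traces help can be seen with a deliberately simple linear loss model. The 1.0 dB per inch figure and both trace lengths below are hypothetical; real loss depends on the board material, the signaling frequency and vias, so treat this only as an illustration of the trend.

```python
def trace_loss_db(length_in: float, loss_db_per_in: float) -> float:
    """Insertion loss of a PCB trace under a simple linear (dB per inch) model."""
    return length_in * loss_db_per_in


LOSS_DB_PER_IN = 1.0  # hypothetical loss for some material at some frequency

front_panel = trace_loss_db(10.0, LOSS_DB_PER_IN)  # ASIC to front-panel cage (assumed length)
on_board = trace_loss_db(2.0, LOSS_DB_PER_IN)      # ASIC to on-board optics (assumed length)
print(f"front panel: {front_panel:.1f} dB, on-board: {on_board:.1f} dB")

# Fraction of signal power that survives the trace: 10^(-loss/10)
print(f"power delivered: {10 ** (-front_panel / 10):.3f} vs {10 ** (-on_board / 10):.3f}")
```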

Industry Preparedness for Optical Interconnects:

- The timeline will be driven by I/O technology: speed vs. power vs. cost.

- At OFC 2016, 100 Gb/s PAM-4 transmission (pulse amplitude modulation with 4 discrete levels per signal element) was successfully demonstrated.

- Growing cloud computing market should give rise to a huge volume increase in cloud resident compute servers and the speeds at which they’re accessed.

- For operations, a common standardized optics design is greatly preferred to a one-of-a-kind “snowflake” implementation. Simplified installation (and connectivity) is also a key requirement.

- Optics to the compute server enables a more centralized switching topology, which was the mainstay of local area networking prior to 10 Gb/s Ethernet. It enables an architecture where the placement of the ToR or Tier 0 switch is not dictated by the physical medium (Direct Attach Copper/twinax cabling).

- The Consortium for On-Board Optics (COBO) is a member-driven standards-setting organization with 45 members, developing specifications for interchangeable and interoperable optical modules that can be mounted onto printed circuit boards.

COBO was founded to develop a set of industry standards to facilitate interchangeable and interoperable optical modules that can be mounted or socketed on a network switch or network controller motherboard. Modules based on the COBO standard are expected to reduce the size of the front-panel optical interfaces to facilitate higher port density and also to be more power efficient because they can be placed closer to the network switch chips.
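The PAM-4 demonstration mentioned in the list above works by carrying two bits per symbol on one of four amplitude levels. This sketch assumes a Gray-coded mapping onto levels -3, -1, +1, +3 (a common convention, so neighboring levels differ by a single bit) and ignores FEC and line-coding overhead, so real links run at a somewhat higher symbol rate than the idealized figure printed here.

```python
# Gray-coded PAM-4 mapping (assumed convention): adjacent levels differ in one bit.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): +1, (1, 0): +3}


def pam4_encode(bits: list[int]) -> list[int]:
    """Map each pair of bits to one of four amplitude levels."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def symbol_rate_gbaud(bit_rate_gbps: float, bits_per_symbol: int = 2) -> float:
    """Idealized symbol rate: bit rate divided by bits per symbol (no overhead)."""
    return bit_rate_gbps / bits_per_symbol


print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))  # [-3, -1, 1, 3]
print(symbol_rate_gbaud(100.0))               # 50.0
```

Halving the symbol rate relative to two-level (NRZ) signaling is what eases the channel bandwidth requirement, at the cost of a smaller gap between adjacent levels.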

Is the DC Market Ready for Optics to the Server?

That will depend on when all the factors itemized above are realized. In this author’s opinion, cost per Gb/s transmitted will be the driving factor. If so, within the next 12 to 18 months we’ll see deployments of SMF-based optical interconnects between servers and Tier 1 switches at distances up to 500 m in cloud and large service provider data centers (DCs).

Of course, as the server to Tier 1 switch distance extends to as much as 500 m (from very short twinax connections), there’s a higher probability of a failure or defect. While fault detection, isolation and repair are important issues in any network, they’re especially urgent for hyper-scale data centers. Because such DCs are designed with redundancy of key network elements, however, a single failure is not as catastrophic.
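Redundancy is what keeps a single failure from being catastrophic. A rough sketch, assuming independent failures (optimistic, since shared power or cabling faults correlate them) and a hypothetical 1% unavailability per uplink path, shows how dual-homing shrinks the chance of losing connectivity.

```python
def outage_probability(p_fail: float, redundant_paths: int) -> float:
    """Probability that all independent, equally unreliable paths are down at once."""
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be a probability")
    return p_fail ** redundant_paths


P_FAIL = 0.01  # hypothetical: each uplink path unavailable 1% of the time
print(f"single-homed: {outage_probability(P_FAIL, 1):.4f}")
print(f"dual-homed:   {outage_probability(P_FAIL, 2):.6f}")
```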

This author believes that the availability of lower-cost SMF optics will open up a host of new options for building large DCs that can scale to support higher speeds (100G or even 400G) in the future without requiring additional cabling investments.

Here’s a quote from Brad in a March 21, 2016 COBO update press release:

“COBO’s work is important to make the leap to faster networks with the density needed by today’s data centers. COBO’s mission is a game-changer for the industry, and we’re thrilled with the growth and impact our member organizations have been able to achieve in just one year,” said Brad Booth, President of COBO. “We believe 2016 will be an important year as we drive to create standards for the data center market while investigating specifications for other market spaces.”

Addendum on SMF:

The benefits of SMF DC infrastructures include:

- Flexible reach from 500 m to 10 km within a single DC

- Investment protection by supporting speeds such as 40GbE, 100GbE and 400GbE on the same fiber plant

- Cable is not cost prohibitive: SMF is less expensive than MMF

- Easy to terminate in the field with LC connectors when compared to MTP connectors

References:

http://cobo.azurewebsites.net/

http://www.gazettabyte.com/home/2015/11/25/cobo-looks-inside-and-beyond-the-data-centre.html

Internet of Things (IoT) Use Cases & Verizon IoT Report


Verizon and AT&T have created proprietary developer platforms to spur IoT applications, and their extensive wired and wireless networks, accelerated by 5G, will help support the next wave of advances.

This week’s release of Verizon’s “State of the Market: Internet of Things 2016” denotes a sea change for IoT. The focus today, Verizon notes, has shifted from merely forecasting the potential of IoT technology. Rather, mainstream adoption and integration across varied business sectors is unleashing an array of IoT use cases. These practical IoT applications are making a substantive, real-world impact and represent a boon for businesses as well. As Verizon’s data confirms, the IoT opportunity is substantial. From 2014 to 2015 alone, IoT network connections experienced marked growth, including gains in energy/utilities (58 percent), home monitoring (50 percent), transportation (49 percent), smart cities (43 percent), agriculture (33 percent) and healthcare/pharma (26 percent).

Read more at: https://www.ustelecom.org/blog/business-case-iot

“We are beginning to see a more even distribution of IoT spend across regions as the momentum of this market drives enterprises to adopt IoT solutions globally,” said Carrie MacGillivray, vice president, IoT and Mobile research at IDC. “Vendors must be mindful to having global IoT product strategies, but at the same time, they must have a regional strategy to ensure that local requirements and regulations are met.”

FCC Wants to Revamp Oversight of Bulk Data Service Provided to Businesses

Details of the new FCC plan hadn’t yet been made public as of Thursday evening. The agency said it would vote on the plan at its April 28th meeting. Yet even before the proposal emerged, industry groups began battling each other over how far it should go and which sectors should be swept up.

Smaller telecom companies reached an understanding with giant telco Verizon Communications Inc. to recommend replacing the current patchwork of regulation with “a permanent policy framework for all dedicated services,” one that would be “technology-neutral,” according to a joint statement. The current regulatory system has been criticized for focusing on older market players and technologies, leaving them feeling disproportionately targeted.

Verizon and INCOMPAS (which represents competitive carriers) urged the FCC to adopt a “permanent policy framework” on access to broadband business services.

http://www.multichannel.com/news/fcc/verizon-clecs-strike-deal-special-access/403939

The Wall Street Journal reported that the cable industry said that such a move would target their firms. Some cable companies fear they might now face more regulation than in the past, since they represent a newer technology.

“The FCC should reject any call to impose new, onerous regulations on an industry that is stepping up to offer meaningful choices to business customers,” the National Cable & Telecommunications Association said in its own statement. “The FCC will not achieve competition if it burdens new…entrants with regulation.”

The so-called special-access market has proved to be a particularly difficult regulatory puzzle for the FCC to solve, at a time of rapid transformation in the telecom industry generally.

Some critics believe the FCC went too far in deregulating the market in 1999, the last time the agency made a major policy pronouncement.

For years, telecom companies such as Sprint Corp. and Level 3 Communications Inc. have griped that the big phone companies like AT&T Inc. and Verizon Communications Inc. have taken unfair advantage of their power in the market. AT&T and Verizon, along with CenturyLink Inc. and Frontier Communications Corp., dominate the special access market because they effectively control the wires that were built by the legacy AT&T monopoly, which was broken up by the government in 1984.

Some smaller companies, for example, accuse the carriers of requiring them to make large volume commitments or face big fees. Sprint, which uses special access to connect its cell towers, says it has had to pay huge termination fees to the larger carriers when it switched several thousand cell towers to alternative providers.

AT&T and the other large carriers have denied the allegations and said the market is generally competitive.

In addition, companies of all sizes have complained that the FCC deregulatory scheme adopted in 1999 was both overly complicated and ineffective at determining areas where the market still needed stronger oversight. As a result, the FCC already has taken some steps toward a new system of stronger oversight.

Adding to the problems, the 1996 Telecommunications Act (which has been a complete flop since the dot-com bust) gives the FCC authority to police competitive behavior in the telecom market, but the agency’s jurisdiction over these types of contracts primarily covers older technologies. AT&T, Verizon and other carriers have invested in newer network technologies that aren’t subject to FCC oversight in this way.

AT&T Looks to Public-Private Partnerships for GigaFiber Expansion, especially in North Carolina

Reversing its previous policy of trying to block municipal broadband projects, AT&T now says it is eager to work with more municipalities to support the deployment of gigabit broadband access. That would fulfill its commitment to the Federal Communications Commission (FCC) to increase its fiber footprint, which was a condition of AT&T’s buying DirecTV (see below). For residential broadband, fiber to the home (AT&T’s GigaPower) is only available in selected greenfield deployments and in cities where Google Fiber has been announced and/or is commercially available.

During a Q&A at Light Reading’s Gigabit Cities Live conference, Vanessa Harrison, president of AT&T’s North Carolina operation, said the U.S. mega-carrier favors projects that build on existing company assets. According to Light Reading’s Carol Wilson, Ms. Harrison said that AT&T likes to be invited to participate as a private company in municipal fiber-to-the-premises networks by a city or county government that is clear on what it needs and expects from a fiber network operator.

“We look for areas that demonstrate a demand, where there is infrastructure that is in our traditional service territory — where we can expand our facilities, enhance our facilities and deploy new facilities. And we also look for adoptability — areas where it is easy to adopt,” Ms. Harrison said.

AT&T’s Fiber Build-Out Promise to FCC:

Under the FCC’s terms of approval for the DirecTV acquisition, AT&T agreed to build out fiber to 12.5 million homes nationwide and is doing that across its existing footprint. North Carolina is home to seven cities on the AT&T GigaPower roadmap, some of which pre-date the DirecTV deal, and that’s in part because the state has long had policies that encourage private investment, Harrison said.

More recently, the public-private partnership North Carolina Next-Generation Network (NCNGN) brought together six municipalities and four major universities that invited private operators to compete to provide a gigabit network, and chose AT&T as their network operator. That’s the kind of public-private partnership invitation that AT&T likes, because it clearly spells out what the local communities need. Communication between potential partners is one of the most important factors, according to Ms. Harrison.

“We look to come to the table and say, ‘Here are the facilities we have. How can we partner together to meet your need?’” she said. It’s important for communities to do their own needs assessment and know what they are looking for in a fiber network, and also what resources an operator is expected to bring to the effort.

“Deploying fiber is a big job and it takes a lot of time, and a lot of resources including a number of employees,” she noted.

It’s important that the municipality’s needs be clear upfront, so expectations can be correctly set, but the city must also recognize its responsibilities. That includes the ability to issue permits in a timely fashion, for example, which can strain municipal department staff.

Note that AT&T GigaPower has been available in Huntersville, NC to residential and small business customers since October 2015.

Addendum:

On March 15th, North Carolina voters overwhelmingly approved borrowing $2 billion to pay for a laundry list of infrastructure projects, collectively known as Connect NC. Almost half of the bond money is intended for projects within the UNC System, and another $350 million is for the community college system.

In a co-authored editorial in the February 22nd News & Observer, AT&T’s Vanessa Harrison wrote:

“The bond package will support new research, technology and innovation across all of our universities and community colleges……The investments in our state from Connect NC are critical to sustained economic growth and continued success in our global economy. Connect NC investments will benefit all North Carolinians. Whether you are an alum of North Carolina’s university or community college system, have or had a child in the system, or simply want our state to have the best-skilled workforce in the country, you will benefit from Connect NC.”

Analysis: Frontier Completes Acquisition of Verizon Wireline Operations in 3 States

Introduction & Backgrounder:

Over the past decade, Verizon (VZ) had acquired GTE and MCI Worldcom’s wireline networks and also built out its own fiber to residential customer premises for triple play services (FiOS for true broadband Internet, pay TV and voice). In February 2014, VZ bought out Vodafone to become the 100% owner of Verizon Wireless. That gave the huge telco a much larger stake in the U.S. wireless carrier market, which remains its focus today and for the foreseeable future, with Verizon’s CEO Lowell McAdam talking about 5G trials while saying VZ will deploy 5G in a live network years before the ITU-R standard is completed! Last September, VZ announced it would sell wireline assets to Frontier Communications. That deal was completed today (April 1st).

The REALLY Big News:

NO APRIL FOOL’s JOKE: Frontier Communications announced completion of its $10.54 billion acquisition of Verizon’s wireline operations, which provide services to residential, commercial and wholesale customers in California, Texas and Florida. The acquisition includes approximately 3.3 million voice connections, 2.1 million broadband connections, and 1.2 million FiOS® video subscribers, as well as the related incumbent local exchange carrier businesses. New customers will begin receiving monthly bills starting in mid-April.

From Verizon’s website:

The sale does not include the services, offerings or assets of other Verizon businesses, such as Verizon Wireless and Verizon Enterprise Solutions.

The transaction concentrates Verizon’s landline operations in contiguous northeast markets – which will enhance the efficiency of the company’s marketing, sales and service operations across its remaining landline footprint. It also allows Verizon to further strengthen and extend its industry leadership position in the U.S. wireless market, while returning value to Verizon’s shareholders. As previously announced, Verizon is using the proceeds of the transaction to pay down debt.

Approximately 9,400 Verizon employees who served customers in California, Florida and Texas are continuing employment with Frontier.

From the Business Wire Press Release:

“This is a transformative acquisition for Frontier that delivers first-rate assets and important new opportunities given our dramatically expanded scale,” said Daniel J. McCarthy, Frontier’s President and Chief Executive Officer. “It significantly expands our presence in three high-growth, high-density states, and improves our revenue mix by increasing the percentage of our revenues coming from segments with the most promising growth potential.”

Frontier will take on approximately 9,400 employees from Verizon. “Our new colleagues know their markets, their customers and their business extremely well,” McCarthy said. “As valued members of the Frontier team, they will ensure continuity of existing customer relationships.”

Analysis – Frontier:

Frontier has now just about doubled in size as the result of this Verizon acquisition. The shift by Frontier is also noteworthy because of the massive consolidation currently underway in the US broadband and pay-TV markets. Frontier is about to become one of the few significantly-sized triple play service providers that isn’t a cable company (Comcast, TW Cable, Charter, etc.).

Arris Group Inc. (Nasdaq: ARRS) CEO Bob Stanzione expressed his opinion last fall that Frontier would invest in expanding the FiOS plant, and Frontier President and CEO Daniel McCarthy has been vocal about his plan to expand TV and broadband service to new customers. (See Video: Frontier’s Final Frontier and Frontier Gives Telco TV a Boost.)

Most recently, Frontier Director of Strategic Planning David Curran talked to Light Reading about Frontier’s evaluation of open networking technologies, and the company’s particular interest in CORD implementations — the idea of a Central Office Re-architected as a Data Center (CORD). With possible migrations to new open networking technologies, Frontier hopes to be able to better scale to higher broadband speeds and higher numbers of subscribers. (See Frontier Checks Out CORD, SDN and also Project CORD for GPON LTE, G.FAST DSL Modems & Mobile Networks)

Analysis – Verizon:

Light Reading reports that Verizon’s grand FiOS experiment that began more than a decade ago has wilted in recent years as Verizon has transferred its focus to wireless projects, and, more critically, wireless profits.

Verizon hasn’t given up on wireline altogether. The company did just spend $1.8 billion to acquire XO Communications and further build up its fiber network. Plus, Verizon is reportedly close to wrapping up an RFP process that will decide the vendors for a planned fiber upgrade project using next-generation PON technologies. (See Verizon Bags XO for $1.8B and Verizon Preps Next Major Broadband Upgrade.)

However, Verizon’s wireline strategy appears to center more on enterprise services and cellular backhaul, rather than broadband residential services. The telco has said it remains committed to its FiOS footprint on the East Coast, but with even the director of FiOS TV promoting Verizon’s Go90 OTT mobile video service over her own product, it’s hard to know how seriously to take the company’s official position. (See FiOS TV Director Cuts the Cord.)

In an FCC filing last year, Florida Power & Light argued against Verizon selling wireline assets to Frontier:

“Verizon has made it clear it intends to be out of the wireline business within the next ten years, conveying this clear intent to regulated utilities in negotiations over joint use issues and explaining that Verizon no longer wants to be a pole owner. Indeed, the current proposed [$10.54 billion sale of Verizon facilities in Florida, Texas and California] proves this point.”

and later……………………………..