Hyper Scale Mega Data Centers: Time is NOW for Fiber Optics to the Compute Server

Introduction:

Brad Booth, Principal Architect, Microsoft Azure Cloud Networking, presented an enlightening and informative keynote talk on Thursday, April 14th at the Open Server Summit in Santa Clara, CA. The discussion highlighted the tremendous growth in cloud computing/storage, which is driving the need for hyper network expansion within mega data centers (e.g., Microsoft Azure, Amazon, Google, Facebook, and other large-scale service providers).

Abstract:

At 10 Gb/s and above, electrical signals cannot travel beyond the box unless designers use expensive, low-loss materials. Optical signaling behaves better but requires costly cables and connectors. For 25 Gb/s technology, datacenters have been able to stick with electrical signaling and copper cabling by keeping the servers and the first switch together in the rack. However, for the newly proposed 50 Gb/s technology, this solution is likely to be insufficient. The tradeoffs are complex here since most datacenters don’t want to use fiber optics to the servers. The winning strategy will depend on bandwidth demands, datacenter size, equipment availability, and user experience.

Overview of Key Requirements:

- To satisfy cloud customer needs, the mega data center network should be built for high performance and resiliency. Unnecessary network elements/equipment don’t provide value (so should be eliminated or at least minimized).

- It’s critically important to plan for future network expansion (scaling up) in terms of more users and higher speeds/throughput.

- For a hyper scale cloud data center (CDC), the key elements are: bandwidth, power, security, and cost.

- “Crop rotation” was also mentioned, but that’s really an operational issue of replacing or upgrading CDC network elements/equipment approximately every 3-4 years (as Brad stated in a previous talk).

Channel Bandwidth & Copper vs Fiber Media:

Brad noted what many transmission experts have already observed: as bit rates continue to increase, we are approaching the Shannon limit, the maximum capacity of a channel with a given bandwidth and signal-to-noise ratio. To increase channel capacity, we need to either increase the bandwidth of the channel or improve the signal-to-noise (S/N) ratio. However, as the bit rate increases over copper or fiber media, the S/N ratio decreases. Therefore, the bandwidth of the channel needs to increase.
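The tradeoff Brad described follows from the Shannon-Hartley relation, C = B * log2(1 + S/N). The short Python sketch below is only an illustration of that formula; the function names and the 10 GHz bandwidth and S/N values in the demo loop are assumptions chosen for the example, not figures from the talk.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio from decibels to a linear power ratio."""
    return 10.0 ** (snr_db / 10.0)


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley capacity C = B * log2(1 + S/N), in bits per second."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    if snr_linear < 0:
        raise ValueError("S/N ratio cannot be negative")
    return bandwidth_hz * math.log2(1.0 + snr_linear)


if __name__ == "__main__":
    # Hypothetical channel: 10 GHz of bandwidth at several assumed S/N values.
    for snr_db in (30, 20, 10):
        capacity = shannon_capacity_bps(10e9, db_to_linear(snr_db))
        print(f"S/N = {snr_db} dB -> capacity = {capacity / 1e9:.1f} Gb/s")
```

Capacity grows linearly with bandwidth but only logarithmically with S/N, which is why a falling S/N ratio has to be offset by more channel bandwidth.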

“Don’t ever count out copper” is a common phrase. Copper media bandwidth has continued to increase over short (intra-chassis/rack), medium (up to 100m), and longer distances (e.g. Vectored DSL/G.fast up to 5.5 km). While copper cable has a much higher signal loss than optical fiber, it’s inexpensive and avoids the Electrical to Optical (server transmit), Optical to Electrical (server receive), and Optical to Electrical to Optical (OEO, for a switch) conversions that are needed when fiber optic cable is used as the transmission medium.

Fiber optics is a much lower-loss medium than copper, with significantly higher bandwidth. However, as noted in a recent ComSoc Community blog post, Bell Labs said optical channel speeds are approaching the Shannon capacity limits.

Brad said there’s minimal difference in the electronics associated with fiber transmission, with the exception of the EO, OE or OEO conversions needed.

A current industry push to bring the cost of optics under $1 per Gb/sec would enable on-board optics to compete strongly with copper-based transmission in a mega data center (cloud computing and/or large network service providers).
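To make the $1 per Gb/sec target concrete, the sketch below divides a module price by its line rate. The helper names and any prices passed to them are hypothetical and only illustrate the arithmetic; they are not vendor figures.

```python
def cost_per_gbps(module_price_usd: float, line_rate_gbps: float) -> float:
    """Return the cost in US dollars per Gb/sec of line rate."""
    if line_rate_gbps <= 0:
        raise ValueError("line rate must be positive")
    return module_price_usd / line_rate_gbps


def meets_target(module_price_usd: float, line_rate_gbps: float,
                 target_usd_per_gbps: float = 1.0) -> bool:
    """True if the module is strictly under the target cost per Gb/sec."""
    return cost_per_gbps(module_price_usd, line_rate_gbps) < target_usd_per_gbps


if __name__ == "__main__":
    # Hypothetical 100 Gb/sec modules at assumed prices.
    for price in (250.0, 90.0):
        print(price, cost_per_gbps(price, 100.0), meets_target(price, 100.0))
```

Under this measure, a 100 Gb/sec module would have to sell for less than $100 to meet the target.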

Optics to the Server:

For sure, fiber optics is a more reliable and speed scalable medium than copper. Even assuming the cost per Gb/sec decreases to under $1 as expected, there are still operational challenges in running fiber optics (rather than twinax copper) between (compute) servers and (Ethernet) switches. They include installation, thermal/power management, and cleaning.

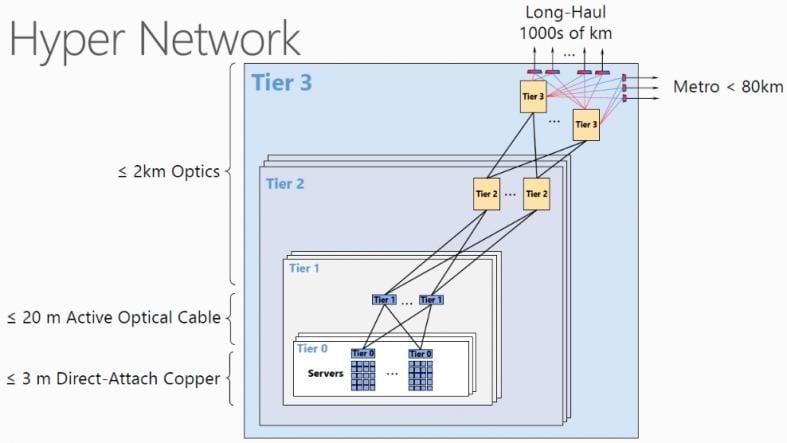

In the diagram, each compute server rack has a Top of Rack (ToR) switch which connects to two Tier 1 switches for redundancy and possible load balancing. Similarly, each Tier 1 switch interconnects with two Tier 2 switches.

Figure (courtesy of Microsoft) illustrates various network segments within a contemporary Mega Data Center which uses optical cables between a rack of compute servers and a Tier 1 switch, and longer distance optical interconnects (at higher speeds) for switch to switch communications.
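The dual-homed arrangement described above (each ToR switch connected to two Tier 1 switches, each Tier 1 switch connected to two Tier 2 switches) can be modeled as a small graph. The following is a minimal sketch under assumed names and sizes (`build_fabric`, four racks, two switches per tier); it is not Microsoft's actual topology. It checks that the loss of any single Tier 1 or Tier 2 switch leaves all racks connected.

```python
from collections import deque


def build_fabric(num_racks: int = 4, num_tier1: int = 2, num_tier2: int = 2) -> dict:
    """Build an undirected adjacency map for a dual-homed ToR/Tier 1/Tier 2 fabric."""
    if num_tier1 < 2 or num_tier2 < 2:
        raise ValueError("dual homing needs at least two switches per tier")
    links: dict = {}

    def connect(a: str, b: str) -> None:
        links.setdefault(a, set()).add(b)
        links.setdefault(b, set()).add(a)

    for rack in range(num_racks):
        for k in range(2):  # each ToR uplinks to two distinct Tier 1 switches
            connect(f"tor{rack}", f"t1-{(rack + k) % num_tier1}")
    for sw in range(num_tier1):
        for k in range(2):  # each Tier 1 uplinks to two distinct Tier 2 switches
            connect(f"t1-{sw}", f"t2-{(sw + k) % num_tier2}")
    return links


def reachable(links: dict, start: str, failed=frozenset()) -> set:
    """Breadth-first search from start, skipping any failed switches."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in links[node]:
            if neighbor not in seen and neighbor not in failed:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def survives_single_upstream_failure(links: dict) -> bool:
    """True if every ToR can still reach every other ToR after any one
    Tier 1 or Tier 2 switch fails."""
    tors = {n for n in links if n.startswith("tor")}
    upstream = [n for n in links if not n.startswith("tor")]
    first_tor = sorted(tors)[0]
    return all(tors <= reachable(links, first_tor, failed={dead})
               for dead in upstream)


if __name__ == "__main__":
    print(survives_single_upstream_failure(build_fabric()))
```

Losing both Tier 1 switches that serve a rack would still isolate it; the check covers single failures only.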

Brad suggested that a new switch/server topology would be in order when there’s a large-scale move from twinax copper direct attach cables to fiber optics. The server to Top of Rack (ToR) switch topology would be replaced by a distributed mesh topology where Tier 1 switches (first hop to/from server) connect to a rack of servers via fiber optic cable. Brad said Single Mode Fiber (SMF), which had previously been used primarily in WANs, was a better choice than Multi Mode Fiber (MMF) for intra data center interconnects up to 500 m. Katharine Schmidtke of Facebook said the same thing at events that were summarized at this blog site.

Using SMF at those distances, the Tier 1 switch could be placed in a centralized location, rather than at the top of each rack as with today’s ToR switches. A key consideration for this distributed optical interconnection scenario is to move the EO and OE conversion closer to the ASIC used for data communications within each optically interconnected server and switch.

Another advantage of on-board optics is shorter PCB (printed circuit board) trace lengths, which increase reliability and circuit density while decreasing signal loss (which grows with trace length).

However, optics are very temperature sensitive. They operate best at temperatures below 70 degrees Fahrenheit. Enhanced thermal management techniques will be necessary to control the temperature and dissipate the heat generated by power consumption.

Industry Preparedness for Optical Interconnects:

- The timeline will be driven by I/O technology: speed vs power vs cost.

- At OFC 2016, 100 Gb/sec PAM-4 signaling (pulse amplitude modulation with 4 discrete amplitude levels per symbol) was successfully demonstrated.

- Growing cloud computing market should give rise to a huge volume increase in cloud resident compute servers and the speeds at which they’re accessed.

- For operations, a common standardized optics design is greatly preferred to a 1 of a kind “snowflake” type of implementation. Simplified installation (and connectivity) is also a key requirement.

- Optics to the compute server enables a more centralized switching topology, which was the mainstay of local area networking prior to 10 Gb/sec Ethernet. It enables an architecture where the placement of the ToR or Tier 0 switch is not dictated by the physical medium (the Direct Attach Copper/twinax cabling).

- The Consortium for On-Board Optics (COBO) is a member-driven standards-setting organization with 45 members that is developing specifications for interchangeable and interoperable optical modules that can be mounted onto printed circuit boards.

COBO was founded to develop a set of industry standards to facilitate interchangeable and interoperable optical modules that can be mounted or socketed on a network switch or network controller motherboard. Modules based on the COBO standard are expected to reduce the size of the front-panel optical interfaces to facilitate higher port density and also to be more power efficient because they can be placed closer to the network switch chips.
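For readers unfamiliar with the PAM-4 signaling mentioned in the list above, each symbol takes one of four amplitude levels and so carries two bits, which halves the symbol rate needed for a given bit rate. The sketch below is illustrative only: the Gray-coded bit-to-level mapping and the level values (-3, -1, +1, +3) are a common textbook convention assumed here, not details from the OFC demonstration, and the rate helper ignores coding and FEC overhead.

```python
# Assumed Gray-coded mapping: adjacent levels differ by exactly one bit.
PAM4_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
PAM4_BITS = {level: pair for pair, level in PAM4_LEVELS.items()}


def pam4_encode(bits: list) -> list:
    """Map a bit sequence (even length) to PAM-4 amplitude levels, 2 bits per symbol."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM-4 needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(symbols: list) -> list:
    """Map PAM-4 amplitude levels back to the original bit sequence."""
    bits = []
    for level in symbols:
        bits.extend(PAM4_BITS[level])
    return bits


def symbol_rate_gbaud(bit_rate_gbps: float, bits_per_symbol: int = 2) -> float:
    """Symbol rate needed for a given bit rate, ignoring coding/FEC overhead."""
    return bit_rate_gbps / bits_per_symbol


if __name__ == "__main__":
    data = [0, 0, 0, 1, 1, 1, 1, 0]
    symbols = pam4_encode(data)
    print(symbols, pam4_decode(symbols) == data, symbol_rate_gbaud(100.0))
```

The price of packing two bits into each symbol is smaller spacing between levels, so a PAM-4 link needs a better S/N ratio than two-level signaling at the same symbol rate.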

Is the DC Market Ready for Optics to the Server?

That will depend on when all the factors itemized above are realized. In this author’s opinion, cost per Gb/sec transmitted will be the driving factor. If so, then within the next 12 to 18 months we’ll see deployments of SMF-based optical interconnects between servers and Tier 1 switches at distances of up to 500 m in cloud and large service provider data centers (DCs).

Of course, as the server to Tier 1 switch distance extends up to 500 m (from very short twinax connections), there’s a higher probability of a failure or defect. Fault detection, isolation, and repair are important issues in any network, and they’re especially urgent at the scale of hyper-scale data centers. However, because such DCs are designed with redundancy of key network elements, a single failure is not as catastrophic.

This author believes that the availability of lower-cost SMF optics will open up a host of new options for building large DCs that can scale to support higher speeds (100G or even 400G) in the future without requiring additional cabling investments.

Here’s a quote from Brad in a March 21, 2016 COBO update press release:

“COBO’s work is important to make the leap to faster networks with the density needed by today’s data centers. COBO’s mission is a game-changer for the industry, and we’re thrilled with the growth and impact our member organizations have been able to achieve in just one year,” said Brad Booth, President of COBO. “We believe 2016 will be an important year as we drive to create standards for the data center market while investigating specifications for other market spaces.”

Addendum on SMF:

The benefits of SMF DC infrastructures include:

- Flexible reach from 500m to 10km within a single DC

- Investment protection by supporting speeds such as 40GbE, 100GbE and 400GbE on the same fiber plant

- Cable is not cost prohibitive: SMF is less expensive than MMF

- Easy to terminate in the field with LC connectors when compared to MTP connectors

References:

http://cobo.azurewebsites.net/

http://www.gazettabyte.com/home/2015/11/25/cobo-looks-inside-and-beyond-the-data-centre.html