AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

The AI boom is changing how data centers are built and where they’re located, and it’s already reshaping U.S. energy infrastructure, according to Barron’s. Energy companies increasingly cite AI power consumption as a leading contributor to new demand, because the AI compute servers in data centers require a tremendous amount of power to process large language models (LLMs). This was explained in detail in a recent IEEE Techblog post.

Fast Company reports that “The surge in AI is straining the U.S. power grid.” AI is pushing energy demand significantly higher than anyone anticipated. “The U.S. electric grid is not prepared for significant load growth,” Grid Strategies warned. AI is a major part of the problem when it comes to increased demand. Not only are industry leaders such as OpenAI, Amazon, Microsoft, and Google building, or looking for sites on which to build, enormous data centers to house the infrastructure required to power large language models, but smaller companies in the space are also making huge energy demands, as the Washington Post reports.

Georgia Power, the state’s chief energy provider, recently had to increase its projected winter megawatt demand by as much as 38%. That is due in part to Georgia’s incentive policy for computer operations, something officials are now rethinking. Meanwhile, Portland General Electric in Oregon recently doubled its five-year forecast for new electricity demand.

Electricity demand was so great in Virginia that Dominion Energy was forced to halt connections to new data centers for about three months in 2022. Dominion says it expects demand in its service territory to grow by nearly 5% annually over the next 15 years, which would almost double the total amount of electricity it generates and sells. To prepare, the company is building the biggest offshore wind farm in the U.S., some 25 miles off Virginia Beach, and is adding solar energy and battery storage. It has also proposed investing in new gas generation and is weighing whether to delay retiring some natural gas plants and one large coal plant.
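A quick back-of-the-envelope check shows that Dominion’s two numbers hang together. The Python sketch below assumes, for illustration only, a constant 5% growth rate in every year (real load growth will not be that smooth); the function name is hypothetical.

```python
def compound_growth_factor(annual_rate: float, years: int) -> float:
    """Return the multiplier after `years` of constant `annual_rate` growth."""
    return (1.0 + annual_rate) ** years


# Assumption: a constant 5% per year, standing in for Dominion's "nearly 5%".
factor = compound_growth_factor(0.05, 15)
print(f"Growth factor after 15 years: {factor:.2f}x")
```

At exactly 5% the multiplier comes out to about 2.08x, so a rate of “nearly 5%” is consistent with demand that “almost doubles.”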

Also in 2022, the CEO of data center giant Digital Realty said on an earnings call that Dominion had warned its big customers about a “pinch point” that could prevent it from supplying new projects until 2026.

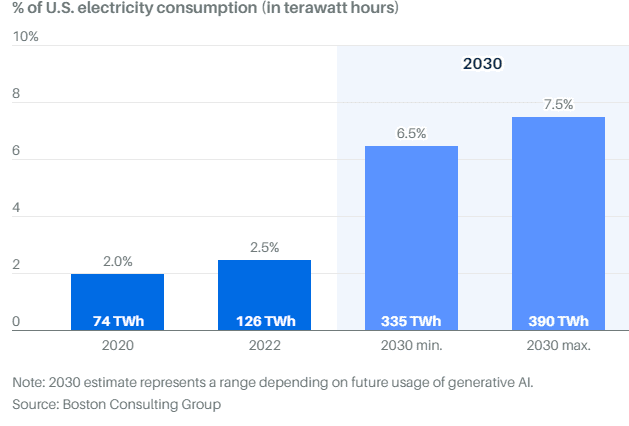

AES, another Virginia-based utility, recently told investors that data centers could account for up to 7.5% of total U.S. electricity consumption by 2030, citing data from Boston Consulting Group. The company is largely betting its growth on its ability to deliver renewable power to data centers in the coming years.

New data centers coming online in its regions “represent the potential for thousands of megawatts of new electric load—often hundreds of megawatts for just one project,” Sempra Energy told investors on its earnings call last month. The company operates public utilities in California and Texas and has cited AI as a major factor in its growth.

There are also environmental concerns. Large federal subsidies are pushing the industry toward cleaner generation such as solar, but many of those projects are not yet online. Meanwhile, utility companies are lobbying to delay the shutdown of fossil fuel plants (and some are hoping to bring more online) to meet the surge in demand.

“Annual peak demand growth forecasts appear headed for growth rates that are double or even triple those in recent years,” Grid Strategies wrote. “Transmission planners need long-term forecasts of both electricity demand and sources of electricity supply to ensure sufficient transmission will be available when and where it’s needed. Such a failure of planning could have real consequences for investments, jobs, and system reliability for all electric customers.”

According to Boston Consulting Group, U.S. data-center electricity consumption is expected to triple, from 126 terawatt-hours in 2022 to 390 terawatt-hours by 2030. That’s equivalent to the usage of 40 million U.S. homes, the firm says. Much of the data-center growth is being driven by new applications of generative AI. As AI dominates the conversation, it’s likely to bring renewed focus on the nation’s energy grid. Siemens Energy CEO Christian Bruch told shareholders at the company’s recent annual meeting that electricity needs will soar with the growing use of AI. “That means one thing: no power, no AI. Or to put it more clearly: no electricity, no progress.”
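BCG’s figures can be cross-checked the same way. The sketch below computes the growth multiple, the compound annual growth rate (CAGR) it implies over the eight years from 2022 to 2030, and the average annual consumption per home implied by the “40 million homes” comparison. It assumes smooth compound growth between the two endpoints, which BCG does not claim; the function names are illustrative.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def kwh_per_home(total_twh: float, homes: float) -> float:
    """Average annual consumption per home, in kWh, implied by a TWh total."""
    kwh_per_twh = 1e9  # 1 TWh = 10^9 kWh
    return total_twh * kwh_per_twh / homes


# Endpoints from the BCG estimate quoted above.
start_twh, end_twh = 126.0, 390.0
years = 2030 - 2022

print(f"Multiple: {end_twh / start_twh:.2f}x")
print(f"Implied CAGR: {implied_cagr(start_twh, end_twh, years):.1%}")
print(f"Per home: {kwh_per_home(end_twh, 40e6):,.0f} kWh/yr")
```

This prints a multiple of about 3.10x, an implied CAGR of roughly 15.2% per year, and about 9,750 kWh per home per year.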

The technology sector has already shown how quickly AI can recast long-held assumptions. Chips, for instance, driven by Nvidia, have replaced software as tech’s hottest commodity. Nvidia has said that the trillion dollars invested in global data-center infrastructure will eventually shift from traditional servers with central processing units, or CPUs, to AI servers with graphics processing units, or GPUs. GPUs are better suited to the parallel computations needed for AI.

For AI workloads, Nvidia says that two GPU servers can do the work of a thousand CPU servers at a fraction of the cost and energy. Still, the superior performance of GPUs is leading to more aggregate power usage as developers find innovative new ways to use AI.

The overall increase in power consumption will come on two fronts: more GPUs sold each year and a higher power draw from each GPU. Research firm 650 Group expects AI server shipments to rise from one million units last year to six million units in 2028. According to Gartner, most AI GPUs will draw 1,000 watts of electricity by 2026, up from roughly 650 watts on average today.
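To see how the two fronts compound, a rough sketch can multiply the growth in annual shipments by the growth in per-GPU power draw, since aggregate power scales with units times watts per unit. This is an order-of-magnitude illustration, not a forecast. It assumes, hypothetically, that the number of GPUs per server stays fixed, so server counts stand in for GPU counts. It also treats the two forecasts as if they covered the same period, which they do not exactly (650 Group’s runs to 2028, Gartner’s to 2026). Finally, it counts only one year’s shipments, not the installed base, and ignores cooling overhead.

```python
def relative_power_growth(units_before: float, units_after: float,
                          watts_before: float, watts_after: float) -> float:
    """Growth multiplier for the aggregate power draw of newly shipped hardware.

    Aggregate power scales with (number of units) x (power per unit), so the
    overall multiplier is the product of the two individual multipliers.
    """
    return (units_after / units_before) * (watts_after / watts_before)


# Inputs from the text: 1M -> 6M AI servers (650 Group) and
# ~650 W -> 1,000 W per GPU (Gartner).
# Hypothetical assumption: GPUs per server stay constant.
multiplier = relative_power_growth(1e6, 6e6, 650.0, 1000.0)
print(f"Aggregate power of one year's shipments grows ~{multiplier:.1f}x")
```

Under these assumptions the power drawn by a year’s worth of shipped AI hardware grows roughly ninefold (about 9.2x), which is why the two trends together matter more than either alone.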

Ironically, data-center operators will use AI technology to address the power demands. “AI can be used to improve efficiency, where you’re modeling temperature, humidity, and cooling,” says Christopher Wellise, vice president of sustainability for Equinix, one of the nation’s largest data-center companies. “It can also be used for predictive maintenance.” Equinix states that using AI modeling at one of its data centers has already improved energy efficiency by 9%.

Data centers will also install more effective cooling systems. A leading provider of power and cooling infrastructure equipment says that AI servers generate five times more heat than traditional CPU servers and require ten times more cooling per square foot. AI server maker Super Micro estimates that switching from traditional air cooling to liquid cooling can reduce operating expenses by more than 40%.

But cooling, AI efficiency, and other technologies won’t fully solve the problem of satisfying AI’s energy demands. Certain regions could face issues with their local grid. Historically, the two most popular areas to build data centers were Northern Virginia and Silicon Valley. The regions’ proximity to major internet backbones enabled quicker response times for applications, which is also helpful for AI. (Northern Virginia was home to AOL in the 1990s. A decade later, Silicon Valley was hosting most of the country’s online platforms.)

Today, each region faces challenges around power capacity and data-center availability. Both areas are years away from making the grid upgrades that would be needed to run more data centers, according to DigitalBridge, an asset manager that invests in digital infrastructure. DigitalBridge CEO Marc Ganzi says the tightness in Northern Virginia and Northern California is driving data-center construction into other markets, including Atlanta; Columbus, Ohio; and Reno, Nev. All three areas offer better power availability than Silicon Valley and Northern Virginia, though their network quality is slightly inferior for now. Reno also offers better access to renewable energy sources such as solar and wind.

Ultimately, Ganzi says the obstacle facing the energy sector—and future AI applications—is the country’s decades-old electric transmission grid. “It isn’t so much that we have a power issue. We have a transmission infrastructure issue,” he says. “Power is abundant in the United States, but it’s not efficiently transmitted or efficiently distributed.”

Yet that was one of the prime objectives of the Smart Grid initiative, which apparently has been a total failure! Do you think IEEE can revive that initiative with a focus on power consumption and cooling in AI data centers?

References:

https://www.barrons.com/articles/ai-chips-electricity-usage-2f92b0f3

https://www.supermicro.com/en/solutions/liquid-cooling

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

3 thoughts on “AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem”


Bloomberg: AI Needs So Much Power That Old Coal Plants Are Sticking Around

In a 30-square-mile patch of northern Virginia that’s been dubbed “data center alley,” the boom in artificial intelligence is turbocharging electricity use. Struggling to keep up, the power company that serves the area temporarily paused new data center connections at one point in 2022. Virginia’s environmental regulators considered a plan to allow data centers to run diesel generators during power shortages, but backed off after it drew strong community opposition.

In the Kansas City area, a data center and an electric-vehicle battery factory, both under construction, will need so much energy that the local provider put off plans to close a coal-fired power plant.

This is how it is in much of the US, where electric utilities and regulators have been caught off guard by the biggest jump in demand in a generation. One of the things they didn’t properly plan for is AI, an immensely power-hungry technology that uses specialized microchips to process mountains of data. Electricity consumption at US data centers alone is poised to triple from 2022 levels, to as much as 390 terawatt-hours by the end of the decade, according to Boston Consulting Group. That’s equal to about 7.5% of the nation’s projected electricity demand. “We do need way more energy in the world than we thought we needed before,” Sam Altman, chief executive officer of OpenAI, whose ChatGPT tool has become a global phenomenon, said at the World Economic Forum in Davos, Switzerland, last week. “We still don’t appreciate the energy needs of this technology.”
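As a consistency check, the two BCG numbers together imply the size of the national total they are measured against. The sketch below simply divides the data-center figure by its stated share. The resulting total is derived here; it is not a figure quoted by BCG or Bloomberg, and the function name is hypothetical.

```python
def implied_total(part: float, share: float) -> float:
    """Total implied when `part` is stated to be `share` (a fraction) of it."""
    if not 0.0 < share <= 1.0:
        raise ValueError("share must be a fraction in (0, 1]")
    return part / share


# 390 TWh described as about 7.5% of projected U.S. demand (BCG, via Bloomberg).
total_twh = implied_total(390.0, 0.075)
print(f"Implied total U.S. demand: ~{total_twh:,.0f} TWh")
```

Taken at face value, the two figures imply total projected U.S. demand of roughly 5,200 TWh.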

For decades, US electricity demand rose by less than 1% annually. But utilities and grid operators have doubled their annual forecasts for the next five years to about 1.5%, according to Grid Strategies, a consulting firm that based its analysis on regulatory filings. That’s the highest since the 1990s, before the US stepped up efforts to make homes and businesses more energy efficient.
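To put the shift from under 1% to about 1.5% a year in perspective, the sketch below compounds both rates over the five-year forecast window. It uses 1% as a stand-in for the historical “less than 1%” trend, an assumption that slightly overstates past growth.

```python
def cumulative_growth(annual_rate: float, years: int) -> float:
    """Fractional increase after compounding `annual_rate` for `years`."""
    return (1.0 + annual_rate) ** years - 1.0


# Assumption: 1% approximates the historical "less than 1%" annual growth.
scenarios = [("historical (~1%)", 0.01), ("new forecast (~1.5%)", 0.015)]
for label, rate in scenarios:
    print(f"{label}: +{cumulative_growth(rate, 5):.1%} over five years")
```

Over five years the difference is roughly +5.1% versus +7.7% cumulative growth, about half again as much new load for the grid to absorb.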

https://www.bloomberg.com/news/articles/2024-01-25/ai-needs-so-much-power-that-old-coal-plants-are-sticking-around

The artificial intelligence boom is driving a sharp rise in electricity use across the United States, catching utilities and regulators off guard. In northern Virginia’s “data center alley,” demand is so high that the local utility temporarily halted new data center connections in 2022. Nationwide, electricity consumption at data centers alone could triple by 2030 to 390 terawatt-hours. Add in new electric-vehicle battery factories, chip plants, and other clean-tech manufacturing spurred by federal incentives, and demand over the next five years is forecast to rise by about 1.5% per year, the fastest rate since the 1990s. Unable to keep pace, some utilities are scrambling to revise projections and reconsider earlier plans to close fossil fuel plants, even as the Biden administration pushes for more renewable energy. Some older coal power plants will stay online until the grid adds more generating capacity. The result could be higher emissions in the near term and a risk of rolling blackouts if infrastructure continues to lag behind demand.

The situation is especially dire in Virginia, the world’s largest data center hub. The state’s largest utility, Dominion Energy, was forced to pause new data center connections for three months in 2022 due to surging demand in Loudoun County. Though connections have resumed, Dominion expects demand to almost double over the next 15 years. With data centers, EV factories, and other power-hungry tech continuing to expand rapidly, experts warn that the US electricity grid is poorly equipped to handle the spike. Substantial investments in new transmission lines and generation are urgently needed to avoid turning businesses away or causing blackouts in some regions. Though many tech companies aim to power their operations with clean energy, factories are increasingly open to any available power source.

https://www.techpowerup.com/318326/ai-power-consumption-surge-strains-us-electricity-grid-coal-powered-plants-make-a-comeback

I asked Google’s Gemini for its thoughts on AI power consumption. It suggests that AI will account for between 0.5% and 5% of total U.S. power consumption by 2030. It will be interesting to see how the grid adjusts to the increases from AI, EVs, and the other new developments cited above. I suspect there will be clever adaptations.

Gemini Response to the Question of the AI Impact on the Grid in 2030

Predicting AI’s impact on power consumption in 2030 is complex. Here’s a breakdown:

* **Total U.S. Power Consumption (Placeholder):** Estimating a moderate increase, it could reach around 4,000 Terawatt-hours (TWh) in 2030.

* **AI’s Contribution Range:** This is uncertain, with expert opinions suggesting a range of 0.5% to 5% of total U.S. power consumption in 2030.

* **Calculation:** This translates to a range of 20 TWh (low estimate) to 200 TWh (high estimate) attributed to AI in 2030.

**Key Points:**

* These are estimates based on current trends and might vary in reality.

* Data centers housing AI are significant power consumers, and their efficiency is crucial.

* AI could also reduce overall consumption by optimizing energy use in various sectors.

* Research is ongoing for more energy-efficient AI algorithms and hardware.

Overall, AI’s influence on power consumption in 2030 is a double-edged sword. It has the potential to be both a major consumer of energy and a contributor to more efficient energy use.

**Sources:**

* U.S. Power Consumption Placeholder: While a definitive forecast isn’t available, rising global data center consumption due to AI is expected https://www.bloomberg.com/news/articles/2023-05-18/ai-data-center-boom-will-spur-energy-crisis-ampere-computing-ceo-says

* AI Contribution Range:

    * Low Estimate (0.5%): By 2030, AI Could Consume Up to 3.5% of the World’s Electricity: https://www.rockingrobots.com/

    * High Estimate (5%): 1 Artificial Intelligence-Powered Super Trend You Might Be Missing: https://www.fool.com/investing/2024/02/07/1-artificial-intelligence-powered-super-trend-you/
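The arithmetic in Gemini’s breakdown can be reproduced directly. The sketch below takes Gemini’s own 4,000 TWh placeholder total and its 0.5% to 5% range as inputs; both are Gemini’s assumptions rather than independent forecasts, and the function name is hypothetical.

```python
def ai_consumption_range(total_twh: float, low_share: float,
                         high_share: float) -> tuple[float, float]:
    """Return (low, high) AI consumption in TWh for a share range of a total."""
    if not 0.0 <= low_share <= high_share <= 1.0:
        raise ValueError("shares must satisfy 0 <= low <= high <= 1")
    return total_twh * low_share, total_twh * high_share


# Gemini's inputs: a 4,000 TWh placeholder total and a 0.5%-5% AI share.
low_twh, high_twh = ai_consumption_range(4000.0, 0.005, 0.05)
print(f"AI consumption in 2030: {low_twh:.0f} to {high_twh:.0f} TWh")
```

This reproduces Gemini’s 20 TWh to 200 TWh range.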