Generative AI

Big tech firms target data infrastructure software companies to increase AI competitiveness

- Meta announced Friday a $14.3 billion deal for a 49% stake in data-labeling company Scale AI. Its 28-year-old co-founder and CEO will join Meta as an AI advisor.

- Salesforce announced plans last month to buy data integration company Informatica for $8 billion. It will enable Salesforce to better analyze and assimilate scattered data from across its internal and external systems before feeding it into its in-house AI system, Einstein AI, executives said at the time.

- IT management provider ServiceNow said in May it was buying data catalogue platform Data.world, which will allow ServiceNow to better understand the business context behind data, executives said when it was announced.

- IBM announced it was acquiring data management provider DataStax in February to manage and process unstructured data before feeding it to its AI platform.

Gartner: Gen AI nearing trough of disillusionment; GSMA survey of network operator use of AI

Global IT spending is expected to total $5.61 trillion in 2025, an increase of 9.8% from 2024, according to the latest forecast by Gartner, Inc.

“While budgets for CIOs are increasing, a significant portion will merely offset price increases within their recurrent spending,” said John-David Lovelock, Distinguished VP Analyst at Gartner. “This means that, in 2025, nominal spending versus real IT spending will be skewed, with price hikes absorbing some or all of budget growth. All major categories are reflecting higher-than-expected prices, prompting CIOs to defer and scale back their true budget expectations.”

GenAI Will Influence IT Spending, but IT Spending Won’t Be on GenAI Itself:

Segments including data center systems, devices and software will see double-digit growth in 2025, largely due to generative AI (GenAI) hardware upgrades (see Table 1). However, these upgraded segments will not differentiate themselves in terms of functionality yet, even with new hardware.

Table 1. Worldwide IT Spending Forecast (Millions of U.S. Dollars)

| Segment | 2024 Spending | 2024 Growth (%) | 2025 Spending | 2025 Growth (%) |
|---|---|---|---|---|
| Data Center Systems | 329,132 | 39.4 | 405,505 | 23.2 |
| Devices | 734,162 | 6.0 | 810,234 | 10.4 |
| Software | 1,091,569 | 12.0 | 1,246,842 | 14.2 |
| IT Services | 1,588,121 | 5.6 | 1,731,467 | 9.0 |
| Communications Services | 1,371,787 | 2.3 | 1,423,746 | 3.8 |
| Overall IT | 5,114,771 | 7.7 | 5,617,795 | 9.8 |

Source: Gartner (January 2025)
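The growth percentages in Table 1 can be re-derived from the spending columns. The sketch below is only an illustration of that arithmetic, not part of Gartner's methodology: the figures are copied from Table 1, while the names `TABLE_1`, `growth_pct` and `check_table` are hypothetical helpers introduced here.

```python
# Figures copied from Table 1 (millions of U.S. dollars).
# Each entry: segment -> (2024 spending, 2025 spending, published 2025 growth %).
TABLE_1 = {
    "Data Center Systems": (329_132, 405_505, 23.2),
    "Devices": (734_162, 810_234, 10.4),
    "Software": (1_091_569, 1_246_842, 14.2),
    "IT Services": (1_588_121, 1_731_467, 9.0),
    "Communications Services": (1_371_787, 1_423_746, 3.8),
    "Overall IT": (5_114_771, 5_617_795, 9.8),
}


def growth_pct(previous: float, current: float) -> float:
    """Year-over-year growth in percent."""
    return (current / previous - 1.0) * 100.0


def check_table(table: dict) -> dict:
    """Return the recomputed 2025 growth per segment, rounded to one decimal."""
    return {
        name: round(growth_pct(prev, curr), 1)
        for name, (prev, curr, _published) in table.items()
    }


if __name__ == "__main__":
    computed = check_table(TABLE_1)
    for name, (_prev, _curr, published) in TABLE_1.items():
        print(f"{name:24s} computed {computed[name]:5.1f}%  published {published:5.1f}%")
```

Run as a script, it prints the recomputed and published growth side by side for each segment, which is a quick way to spot a mistyped cell when a table has been copied by hand.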

“GenAI is sliding toward the trough of disillusionment, which reflects CIOs’ declining expectations for GenAI, but not their spending on this technology,” said Lovelock. “For instance, the new AI-ready PCs do not yet have ‘must have’ applications that utilize the hardware. While both consumers and enterprises will purchase AI-enabled PCs, tablets and mobile phones, those purchases will not be overly influenced by the GenAI functionality.”

Spending on AI-optimized servers will reach $202 billion in 2025, easily double the spending on traditional servers.

“IT services companies and hyperscalers account for over 70% of spending in 2025,” said Lovelock. “By 2028, hyperscalers will operate $1 trillion dollars’ worth of AI optimized servers, but not within their traditional business model or IaaS Market. Hyperscalers are pivoting to be part of the oligopoly AI model market.”

Gartner’s IT spending forecast methodology relies heavily on rigorous analysis of the sales by over a thousand vendors across the entire range of IT products and services. Gartner uses primary research techniques, complemented by secondary research sources, to build a comprehensive database of market size data on which to base its forecast.

More information on the forecast can be found in the complimentary Gartner webinar “IT Spending Forecast, 4Q24 Update: GenAI’s Impact on a $7 Trillion IT Market.”

………………………………………………………………………………………………………….

Gartner’s 2025 forecast for IT spending is consistent with the market research firm’s predictions from late last year that the move to AI is driving a surge in spending on data center infrastructure and IT services in Europe. IT spending across the continent will come in at US$1.28 trillion in 2025, the firm said. Presumably it takes a little longer to gather the data necessary for predictions across the whole world.

……………………………………………………………………………………………………

Separately, Citi analysts expect 2025 growth to be largely driven by continued AI spending, as data center capital expenditure for the biggest cloud service providers is forecast to increase by 40% this year.

……………………………………………………………………………………………………

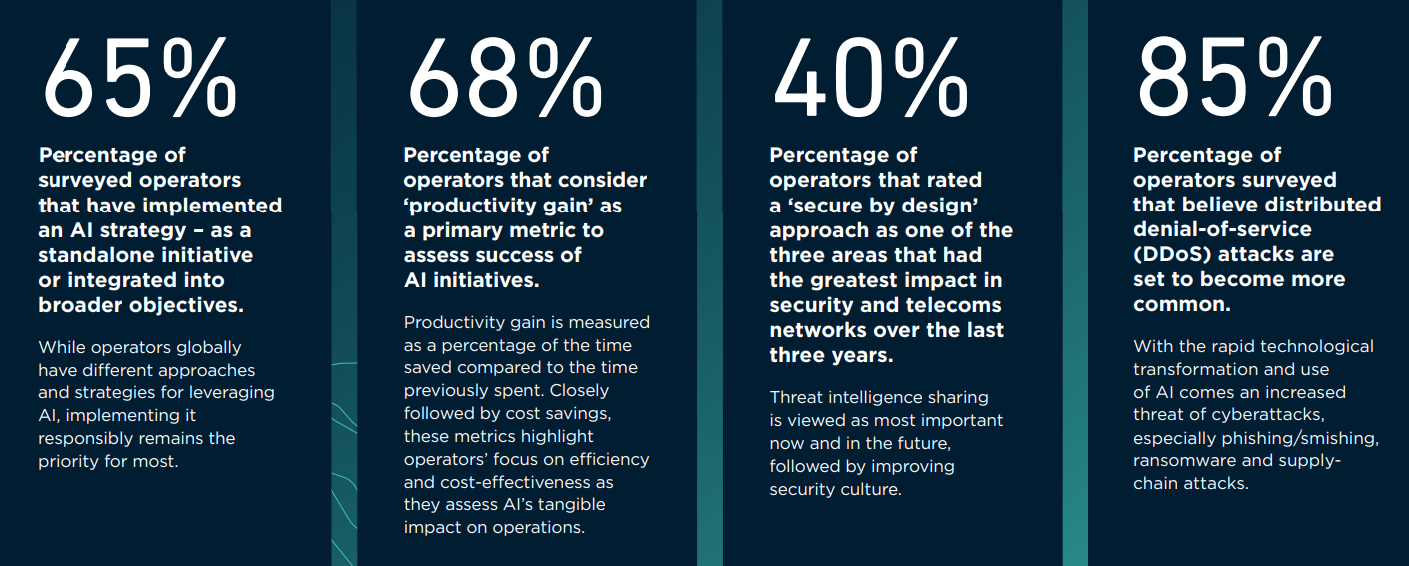

In a recent survey of network operators, GSMA found that telcos are allocating more resources to in-house and external AI capabilities and projects, but only a subset are spending more than 15% of their digital budgets on AI. Nearly half of operators are dedicating 5% to 15% of their digital budgets to AI, covering a range of categories, including data systems, large language models and infrastructure upgrades, the GSMA survey found. That AI money is also being allocated to AI teams, tools and partnerships, said GSMA. The association, which primarily represents mobile operators, has been asked for more details about the size, scope and methodology of its latest study.

AI Status at Network Operators:

References:

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

AI wave stimulates big tech spending and strong profits, but for how long?

Telco spending on RAN infrastructure continues to decline as does mobile traffic growth

FT: New benchmarks for Gen AI models; Neocloud groups leverage Nvidia chips to borrow >$11B

The Financial Times reports that technology companies are rushing to redesign how they test and evaluate their Gen AI models, as current AI benchmarks appear to be inadequate. AI benchmarks are used to assess how well an AI model can generate content that is coherent, relevant, and creative. This can include generating text, images, music, or any other form of content.

OpenAI, Microsoft, Meta and Anthropic have all recently announced plans to build AI agents that can execute tasks autonomously on behalf of humans. To do this effectively, the AI systems must be able to perform increasingly complex actions, using reasoning and planning.

Current public AI benchmarks, such as HellaSwag and MMLU, use multiple-choice questions to assess common sense and knowledge across various topics. However, researchers argue this method is now becoming redundant and models need more complex problems.
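To make the multiple-choice format concrete, here is a minimal sketch of how such a benchmark is typically scored: the model picks one option per question and the score is the share of correct picks. It is not the actual HellaSwag or MMLU harness; `Question`, `accuracy`, `always_first` and the two sample questions are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Question:
    prompt: str
    choices: Sequence[str]
    answer_index: int  # index of the correct choice


def accuracy(questions: Sequence[Question],
             predict: Callable[[Question], int]) -> float:
    """Fraction of questions for which `predict` returns the correct index."""
    if not questions:
        raise ValueError("need at least one question")
    correct = sum(1 for q in questions if predict(q) == q.answer_index)
    return correct / len(questions)


def always_first(question: Question) -> int:
    """Trivial stand-in for a model: always picks the first choice."""
    return 0


# Hypothetical sample items, not taken from any real benchmark.
SAMPLE = (
    Question("2 + 2 = ?", ("4", "5", "3", "22"), 0),
    Question("Capital of France?", ("Rome", "Paris", "Madrid", "Berlin"), 1),
)

if __name__ == "__main__":
    print(f"accuracy: {accuracy(SAMPLE, always_first):.2f}")
```

Because the score is a single bounded fraction, a benchmark of this kind stops separating models once the best ones all answer nearly every question correctly, which is one way to read the researchers' concern.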

“We are getting to the era where a lot of the human-written tests are no longer sufficient as a good barometer for how capable the models are,” said Mark Chen, senior vice-president of research at OpenAI. “That creates a new challenge for us as a research world.”

The SWE-bench Verified benchmark was updated in August to better evaluate autonomous systems based on feedback from companies, including OpenAI. It uses real-world software problems sourced from the developer platform GitHub and involves supplying the AI agent with a code repository and an engineering issue, then asking it to fix the issue. The tasks require reasoning to complete.

“It is a lot more challenging [with agentic systems] because you need to connect those systems to lots of extra tools,” said Jared Kaplan, chief science officer at Anthropic.

“You have to basically create a whole sandbox environment for them to play in. It is not as simple as just providing a prompt, seeing what the completion is and then evaluating that.”

Another important factor when conducting more advanced tests is to make sure the benchmark questions are kept out of the public domain, in order to ensure the models do not effectively “cheat” by generating the answers from training data, rather than solving the problem.
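One common way to look for this kind of leakage is to measure word n-gram overlap between a benchmark question and candidate training text. The sketch below assumes simple whitespace tokenization and an illustrative default of n=5; it is not a method described in the FT report, and `ngrams` and `overlap_ratio` are hypothetical helper names.

```python
from typing import Iterable, Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Set of lowercase word n-grams in `text` (whitespace tokenization)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(question: str, corpus_docs: Iterable[str], n: int = 5) -> float:
    """Share of the question's n-grams that appear in any corpus document.

    A ratio near 1.0 suggests the question may have leaked into the corpus;
    a ratio near 0.0 suggests it has not, at least not verbatim.
    """
    question_grams = ngrams(question, n)
    if not question_grams:
        return 0.0
    seen = set()
    for doc in corpus_docs:
        seen |= ngrams(doc, n) & question_grams
    return len(seen) / len(question_grams)


if __name__ == "__main__":
    q = "the quick brown fox jumps over the lazy dog"
    docs = ["a quick brown fox jumps high", "unrelated training text"]
    print(f"overlap: {overlap_ratio(q, docs, n=3):.2f}")
```

A check like this only catches near-verbatim copies; paraphrased or translated questions would pass unnoticed, which is part of why keeping test sets private matters.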

The need for new benchmarks has also led to efforts by external organizations. In September, the start-up Scale AI announced a project called “Humanity’s Last Exam”, which crowdsourced complex questions from experts across different disciplines that required abstract reasoning to complete.

Meanwhile, the Financial Times recently reported that Wall Street’s largest financial institutions had loaned more than $11bn to “neocloud” groups, backed by their possession of Nvidia’s AI GPU chips. These companies include names such as CoreWeave, Crusoe and Lambda, and provide cloud computing services to tech businesses building AI products. They have acquired tens of thousands of Nvidia’s graphics processing units (GPUs) through partnerships with the chipmaker. With capital expenditure on data centres surging in the rush to develop AI models, Nvidia’s AI GPU chips have become a precious commodity.


…………………………………………………………………………………………………………………………………

The $3tn tech group’s allocation of chips to neocloud groups has given Wall Street lenders the confidence to lend these companies billions of dollars, which are then used to buy more Nvidia chips. Nvidia is itself an investor in neocloud companies that in turn are among its largest customers. Critics have questioned the ongoing value of the collateralised chips as new advanced versions come to market, or if the current high spending on AI begins to retract. “The lenders all coming in push the story that you can borrow against these chips and add to the frenzy that you need to get in now,” said Nate Koppikar, a short seller at hedge fund Orso Partners. “But chips are a depreciating, not appreciating, asset.”

References:

https://www.ft.com/content/866ad6e9-f8fe-451f-9b00-cb9f638c7c59

https://www.ft.com/content/fb996508-c4df-4fc8-b3c0-2a638bb96c19

https://www.ft.com/content/41bfacb8-4d1e-4f25-bc60-75bf557f1f21

Tata Consultancy Services: Critical role of Gen AI in 5G; 5G private networks and enterprise use cases

Reuters & Bloomberg: OpenAI to design “inference AI” chip with Broadcom and TSMC

AI adoption to accelerate growth in the $215 billion Data Center market

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

AI winner Nvidia faces competition with new super chip delayed

Tata Consultancy Services: Critical role of Gen AI in 5G; 5G private networks and enterprise use cases

“In the realm of network management, Generative AI could play a critical role in predicting 5G data flow patterns and optimizing performance, ultimately improving the customer experience. Similarly, in the area of security, Gen AI could be pivotal in identifying and predicting threats before they occur, strengthening overall network security,” Mayank Gupta, Global Head (Sales and Strategy Network Solutions and Services) at Tata Consultancy Services (TCS), told the Economic Times of India.

5G adoption has been happening in stages, he said. When asked about the 5G monetization challenges, Gupta opined that one of the main challenges has been fully understanding the business case or use case required by the industry. “It is not the traditional ‘design, deploy and get paid’ model. Instead, you have to engage with the industry, collaborate with various stakeholders and take an ecosystem approach. It is essential to understand the specific business needs, define the problem and then build solutions around that,” Gupta said.

“Two years ago, 5G was moving at a very slow pace, but it has gained significant momentum. Globally, the U.S. and Canada are leading the way, while countries like South Korea and Brazil are accelerating their adoption, largely due to the liberalization of their spectrum. From a deployment standpoint, mobile private networks are among the first areas seeing significant progress, with deployments growing rapidly,” Gupta said. “The focus is shifting from just connectivity to a broader transformation of operational technology,” he added.

Over 50% of 5G mobile data is now coming from the enterprise sector, which has become a major focus. Different countries prioritize different use cases based on their needs.


…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

References:

Tata Communications launches global, cloud-based 5G Roaming Lab

5G Made in India: Bharti Airtel and Tata Group partner to implement 5G in India

LightCounting & TÉRAL RESEARCH: India RAN market is buoyant with 5G rolling out at a fast pace

Reliance Jio in talks with Tesla to deploy private 5G network for the latter’s manufacturing plant in India

Communications Minister: India to be major telecom technology exporter in 3 years with its 4G/5G technology stack

India to set up 100 labs for developing 5G apps, business models and use-cases

Adani Group to launch private 5G network services in India this year

Reuters & Bloomberg: OpenAI to design “inference AI” chip with Broadcom and TSMC

Bloomberg reports that OpenAI, the fast-growing company behind ChatGPT, is working with Broadcom Inc. to develop a new artificial intelligence chip specifically focused on running AI models after they’ve been trained, according to two people familiar with the matter. The two companies are also consulting with Taiwan Semiconductor Manufacturing Company (TSMC), the world’s largest contract chip manufacturer. OpenAI has been planning a custom chip and working on its uses for the technology for around a year, the people said, but the discussions are still at an early stage. The company has assembled a chip design team of about 20 people, led by top engineers who have previously built Tensor Processing Units (TPUs) at Google, including Thomas Norrie and Richard Ho (head of hardware engineering).

Reuters reported on OpenAI’s ongoing talks with Broadcom and TSMC on Tuesday. OpenAI has been working for months with Broadcom to build its first AI chip focusing on inference (responding to user requests), according to sources. Demand right now is greater for training chips, but analysts have predicted the need for inference chips could surpass them as more AI applications are deployed.

OpenAI has examined a range of options to diversify chip supply and reduce costs. OpenAI considered building everything in-house and raising capital for an expensive plan to build a network of chip manufacturing factories known as “foundries.”


OpenAI may continue to research setting up its own network of foundries, or chip factories, one of the people said, but the startup has realized that working with partners on custom chips is a quicker, attainable path for now. Reuters earlier reported that OpenAI was pulling back from the effort of establishing its own chip manufacturing capacity. The company has dropped the ambitious foundry plans for now due to the costs and time needed to build a network, and plans instead to focus on in-house chip design efforts, according to sources.

OpenAI, which helped commercialize generative AI that produces human-like responses to queries, relies on substantial computing power to train and run its systems. As one of the largest purchasers of Nvidia’s graphics processing units (GPUs), OpenAI uses AI chips both for training, where the model learns from data, and for inference, where it applies what it has learned to make predictions or decisions based on new information. Reuters previously reported on OpenAI’s chip design endeavors. The Information reported on talks with Broadcom and others.

The Information reported in June that Broadcom had discussed making an AI chip for OpenAI. As one of the largest buyers of chips, OpenAI’s decision to source from a diverse array of chipmakers while developing its customized chip could have broader tech sector implications.

Broadcom is the largest designer of application-specific integrated circuits (ASICs) — chips designed to fit a single purpose specified by the customer. The company’s biggest customer in this area is Alphabet Inc.’s Google. Broadcom also works with Meta Platforms Inc. and TikTok owner ByteDance Ltd.

When asked last month whether he has new customers for the business, given the huge demand for AI training, Broadcom Chief Executive Officer Hock Tan said that he will only add to his short list of customers when projects hit volume shipments. “It’s not an easy product to deploy for any customer, and so we do not consider proof of concepts as production volume,” he said during an earnings conference call.

OpenAI’s services require massive amounts of computing power to develop and run — with much of that coming from Nvidia chips. To meet the demand, the industry has been scrambling to find alternatives to Nvidia. That’s included embracing processors from Advanced Micro Devices Inc. and developing in-house versions.

OpenAI is also actively planning investments and partnerships in data centers, the eventual home for such AI chips. The startup’s leadership has pitched the U.S. government on the need for more massive data centers and CEO Sam Altman has sounded out global investors, including some in the Middle East, to finance the effort.

“It’s definitely a stretch,” OpenAI Chief Financial Officer Sarah Friar told Bloomberg Television on Monday. “Stretch from a capital perspective but also my own learning. Frankly we are all learning in this space: Infrastructure is destiny.”

Currently, Nvidia’s GPUs hold over 80% of the AI chip market. But shortages and rising costs have led major customers like Microsoft, Meta, and now OpenAI, to explore in-house or external alternatives.

References:

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

AI winner Nvidia faces competition with new super chip delayed

The Clear AI Winner Is: Nvidia!

Strong AI spending should help Nvidia make its own ambitious numbers when it reports earnings at the end of the month (its 2Q-2024 ended July 31st). Analysts are expecting nearly $25 billion in data center revenue for the July quarter, about what that business was generating annually a year ago. But the latest results won’t quell the growing concern investors have with the pace of AI spending among the world’s largest tech giants, and how it will eventually pay off.

In March, Nvidia unveiled its Blackwell chip series, succeeding its earlier flagship AI chip, the GH200 Grace Hopper Superchip, which was designed to speed generative AI applications. The NVIDIA GH200 NVL2 fully connects two GH200 Superchips with NVLink, delivering up to 288GB of high-bandwidth memory, 10 terabytes per second (TB/s) of memory bandwidth, and 1.2TB of fast memory. The GH200 NVL2 offers up to 3.5X more GPU memory capacity and 3X more bandwidth than the NVIDIA H100 Tensor Core GPU in a single server for compute- and memory-intensive workloads. The GH200 meanwhile combines an H100 chip [1.] with an Arm CPU and more memory.


Note 1. The Nvidia H100 sits in a 10.5-inch graphics card, which is then bundled into a server rack alongside dozens of other H100 cards to create one massive data center computer.

This week, Nvidia informed Microsoft and another major cloud service provider of a delay in the production of its most advanced AI chip in the Blackwell series, the Information website said, citing a Microsoft employee and another person with knowledge of the matter.

…………………………………………………………………………………………………………………………………………

Nvidia Competitors Emerge – but are their chips ONLY for internal use?

In addition to AMD, Nvidia has several big tech competitors that are currently not in the merchant market semiconductor business. These include:

- Huawei has developed the Ascend series of chips to rival Nvidia’s AI chips, with the Ascend 910B chip as its main competitor to Nvidia’s A100 GPU chip. Huawei is the second largest cloud services provider in China, just behind Alibaba and ahead of Tencent.

- Microsoft has unveiled an AI chip called the Azure Maia AI Accelerator, optimized for artificial intelligence (AI) tasks and generative AI, as well as the Azure Cobalt CPU, an Arm-based processor tailored to run general purpose compute workloads on the Microsoft Cloud.

- Last year, Meta announced it was developing its own AI hardware. This past April, Meta announced its next generation of custom-made processor chips designed for its AI workloads. The latest version significantly improves performance compared to the last generation and helps power its ranking and recommendation ads models on Facebook and Instagram.

- Also in April, Google revealed the details of a new version of its data center AI chips and announced an Arm-based central processor. Google’s 10-year-old Tensor Processing Units (TPUs) are one of the few viable alternatives to the advanced AI chips made by Nvidia, though developers can only access them through Google’s Cloud Platform and not buy them directly.

As demand for generative AI services continues to grow, it’s evident that GPU chips will be the next big battleground for AI supremacy.

References:

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

https://www.nvidia.com/en-us/data-center/grace-hopper-superchip/

https://www.theverge.com/2024/2/1/24058186/ai-chips-meta-microsoft-google-nvidia/archives/2

https://news.microsoft.com/source/features/ai/in-house-chips-silicon-to-service-to-meet-ai-demand/

Vodafone: GenAI overhyped, will spend $151M to enhance its chatbot with AI

GenAI is probably the most “overhyped” technology for many years in the telecom industry, said Vodafone Group’s chief technology officer (CTO) Scott Petty at a press briefing this week. “Hopefully, we are reaching the peak of those inflated expectations, because we are about to drop into a trough of disillusionment,” he said.

“This industry is moving too quickly,” Petty explained. “The evolution of particularly GPUs and the infrastructure means that by the time you’d actually bought them and got them installed you’d be N minus one or N minus two in terms of the technology, and you’d be spending a lot of effort and resource just trying to run the infrastructure and the LLMs that sit around that.”

Partnerships with hyper-scalers remain Vodafone’s preference, he said. Earlier this year, Vodafone and Microsoft signed a 10-year strategic agreement to use Microsoft GenAI in Vodafone’s network.

Vodafone is planning to invest some €140 million ($151 million) in artificial intelligence (AI) systems this year to improve the handling of customer inquiries, the company said on July 4th. Vodafone said it is investing in advanced AI from Microsoft and OpenAI to improve its chatbot, dubbed TOBi, so that it can respond faster and resolve customer issues more effectively.

The chatbot was introduced into Vodafone’s customer service five years ago and is equipped with the real voice of a Vodafone employee.

The new system, which is called SuperTOBi in many countries, has already been introduced in Italy and Portugal and will be rolled out in Germany and Turkey later this month with other markets to follow later in the year, Vodafone said in a press release.

According to the company, SuperTOBi “can understand and respond faster to complex customer enquiries better than traditional chatbots.” The new bot will assist customers with various tasks, such as troubleshooting hardware issues and setting up fixed-line routers, the company said.

Vodafone is not about to expose its data to publicly available models like ChatGPT. Nor will the UK-based telco create large language models (LLMs) on its own. Instead, a team of 50 data scientists is fine-tuning LLMs from suppliers such as Anthropic and those available through Google’s Vertex AI. Vodafone can expose information to those LLMs by dipping into its 24-petabyte data “ocean,” created with Google. Secure containers within public clouds ensure private information is securely cordoned off and unavailable to others.

According to Petty’s estimates, the performance speed of LLMs has improved by a factor of 12 in the last nine months alone, while operational costs have decreased by a factor of six. A telco that invested nine months ago would already have outdated and expensive technology. Petty, moreover, is not the only telco CTO wary of plunging into Nvidia’s GPU chips.

“This is a very weird moment in time where power is very expensive, natural resources are scarce and GPUs are extremely expensive,” said Bruno Zerbib, the CTO of France’s Orange, at the 2024 Mobile World Congress in Barcelona, Spain. “You have to be very careful with your investment because you might buy a GPU product from a famous company right now that has a monopolistic position.”

Petty thinks LLM workloads may eventually need to be processed outside hyper-scalers’ facilities. “To really create the performance that we want, we are going to need to push those capabilities further toward the edge of the network,” he said. “It is not going to be the hype cycle of the back end of 2024. But in 2025 and 2026, you’ll start to see those applications and capabilities being deployed at speed.”

“The time it takes for that data to get up and back will dictate whether you’re happy as a consumer to use that interface as your primary interface, and the investment in latency is going to be critically important,” said Petty. “We’re fortunate that 5G standalone drives low latency capability, but it’s not deployed at scale. We don’t have ubiquitous coverage. We need to make sure that those things are available to enable those applications.”

Data from Ericsson supports that view, showing that 5G population coverage is just 70% across Europe, compared with 90% in North America and 95% in China. The figure for midband spectrum – considered a 5G sweet spot that combines decent coverage with high-speed service – is as low as 30% in Europe, against 85% in North America and 95% in China.

Non-standalone (NSA) 5G, which connects a 5G radio access network (RAN) to a 4G core (EPC), is “dominating the market,” said Ericsson.

Vodafone has pledged to spend £11 billion (US$14 billion) on the rollout of a nationwide standalone 5G network in the UK if authorities bless its proposed merger with Three. With more customers, additional spectrum and a bigger footprint, the combined company would be able to generate healthier returns and invest in network improvements, the company said. But a UK merger would not aid the operator in Europe’s four-player markets.

Petty believes a “pay for search” economic model may emerge using GenAI virtual assistants. “This will see an evolution of a two-sided economic model that probably didn’t get in the growth of the Internet in the last 20 years,” he said, but it would not be unlike today’s market for content delivery networks (CDNs).

“Most CDNs are actually paid for by the content distribution companies – the Netflixes, the TV sports – because they want a great experience for their users for the paid content they’ve bought. When it’s free content, maybe the owner of that content is less willing to invest to build out the capabilities in the network.”

Like other industry executives, Petty must hope the debates about net neutrality and fair contribution do not plunge telcos into a long disillusionment trough.

References:

Vodafone CTO: AI will overhaul 5G networks and Internet economics (lightreading.com)

Vodafone UK report touts benefits of 5G SA for Small Biz; cover for proposed merger with Three UK?

Data infrastructure software: picks and shovels for AI; Hyperscaler CAPEX

For many years, data volumes have been accelerating. By 2025, global data volumes are expected to reach 180 zettabytes (1 zettabyte=1 sextillion bytes), up from 120 zettabytes in 2023.

In the age of AI, data is viewed as the currency for large language models (LLMs) and AI-enabled offerings. Therefore, tools to integrate, store and process data are a growing priority amongst enterprises.

The median size of datasets required to train AI models increased from 5.9 million data points in 2010 to 750 billion in 2023, according to BofA Global Research.

In BofA’s survey, data streaming/stream processing and data science/ML were selected as key use cases in regard to AI, with 44% and 37% of respondents citing usage, respectively. Further, AI enablement is accelerating data to the cloud. Gartner estimates that 74% of the data management market will be deployed in the cloud by 2027, up from 60% in 2023.

Data infrastructure software [1.] represents a top spending priority for the IT department. Survey respondents cite that data infrastructure represents 35% of total IT spending, with budgets expected to grow 9% for the next 12 months. No surprise that the public cloud hyper-scaler platforms were cited as top three vendors. Amazon AWS data warehouse/data lake offerings, Microsoft Azure database offerings, and Google BigQuery are chosen by 45%, 39% and 35% of respondents, respectively.

Note 1. Data infrastructure software refers to databases, data warehouses/lakes, data pipelines, data analytics and other software that facilitate data management, processing and analysis.

………………………………………………………………………………………………………………..

The top three factors for evaluating data infrastructure software vendors are security, enterprise capabilities (e.g., architecture scalability and reliability) and depth of technology.

BofA’s Software team estimates that the data infrastructure industry (e.g., data warehouses, data lakes, unstructured databases, etc.) is currently a $96bn market that could reach $153bn in 2028. Hyperscalers including Amazon and Google are among the top recipients of those dollars and, in turn, those companies spend big on hardware.

Key takeaways:

- Data infrastructure is the largest and fastest-growing segment of software ($96bn per BofA’s bottom-up analysis, 17% CAGR).

- AI/cloud represent enduring growth drivers. Data is the currency for LLMs, positioning data vendors well in this new cycle.

- BofA survey (150 IT professionals) suggests best-of-breed vendors (MongoDB, Snowflake and Databricks) are seeing the highest expected growth in spend.
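The text quotes a $96bn market today, $153bn in 2028 and a 17% CAGR, but does not state the base year. The sketch below applies the standard compound annual growth rate formula, (end / start)^(1 / years) - 1, for a few candidate horizons; the horizons tried are assumptions made for illustration, not something stated by BofA, and `cagr` is a hypothetical helper name.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% a year)."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    START_BN, END_BN = 96.0, 153.0  # market size figures quoted in the text
    for years in (3, 4, 5):  # candidate horizons; the base year is not given
        print(f"{years} years: {cagr(START_BN, END_BN, years) * 100:.1f}% a year")
```

Under these assumptions, only the three-year horizon lands near the quoted 17%; longer horizons imply noticeably slower annual growth.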

………………………………………………………………………………………………………….

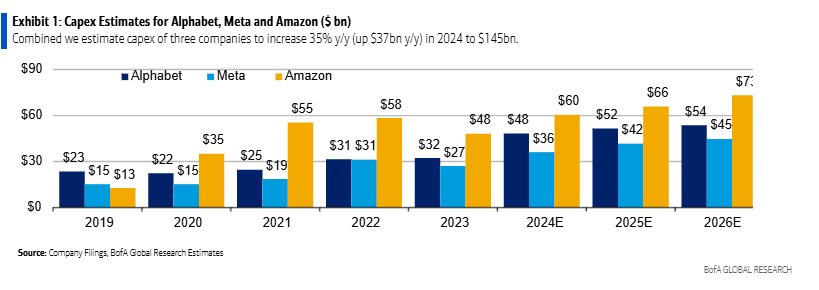

BofA analyst Justin Post expects server and equipment capex for mega-cap internet companies (Amazon, Alphabet/Google, Meta/Facebook) to rise 43% y/y in 2024 to $145bn, which represents $27bn of the $37bn y/y total capex growth. Despite the spending surge, Mr. Post thinks these companies will keep free cash flow margins stable at 22% y/y before increasing in 2025. The technical infrastructure-related capex spend at these three companies is expected to see a steep rise in 2024, with the majority of the increase for servers and equipment.

Notes:

- Alphabet categorizes its technical infrastructure assets under the line item ‘Information Technology Assets’.

- Amazon takes a much broader categorization and includes servers, networking equipment, retail-related heavy equipment and fulfillment equipment under ‘Equipment’.

- Meta gives more details and separately reports Server & Networking, and Equipment assets.

In 2024, BofA estimates CAPEX for the three hyperscalers as follows:

- Alphabet’s capex for IT assets will increase by $12bn y/y to $28bn.

- Meta’s server, network and equipment asset spend is expected to increase $7bn y/y to $22bn, following a big ramp in 2023.

- Amazon’s equipment spend is expected to increase $8bn y/y to $41bn (driven by AWS, with retail flattish). Amazon will see less relative growth due to retail equipment capex leverage in this line.
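As a rough consistency check, the three per-company estimates above can be summed and compared with the aggregate figures quoted earlier. This is a minimal sketch using only the numbers in the list; the class and variable names are illustrative, and it makes no attempt to reconcile the line-item totals with the $145bn headline figure, which may cover a broader definition of capex.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapexEstimate:
    """One company's 2024 estimate from the list above (USD billions)."""
    company: str
    increase_bn: float    # y/y increase in 2024
    total_2024_bn: float  # estimated 2024 spend on the relevant line item

    @property
    def total_2023_bn(self) -> float:
        # Prior-year spend implied by the 2024 total and the y/y increase.
        return self.total_2024_bn - self.increase_bn


estimates = [
    CapexEstimate("Alphabet", 12.0, 28.0),
    CapexEstimate("Meta", 7.0, 22.0),
    CapexEstimate("Amazon", 8.0, 41.0),
]

total_increase_bn = sum(e.increase_bn for e in estimates)
total_2024_bn = sum(e.total_2024_bn for e in estimates)
total_2023_bn = sum(e.total_2023_bn for e in estimates)
growth = total_increase_bn / total_2023_bn

print(f"Combined y/y increase: ${total_increase_bn:.0f}bn")
print(f"Combined 2024 spend:   ${total_2024_bn:.0f}bn")
print(f"Implied y/y growth:    {growth:.1%}")
```

The combined increase matches the $27bn cited above, and the implied growth of about 42% is close to the quoted 43%, with the small gap plausibly due to rounding in the per-company figures.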

On a relative scale, Meta’s capex spend (% of revenue) remains the highest in the group, and the company has materially stepped up its AI-related capex investments since 2022 (in-house supercomputer, LLM, leading computing power, etc.). We think it’s interesting that Meta is spending almost as much as the hyperscalers on capex, which should lead to some interesting internal AI capabilities and the potential to build a “marketing cloud” for its advertisers.

From 2016-22, the sector headcount grew 26% on average. In 2023, headcount decreased by 9%. BofA expects just 3% average annual job growth from 2022-2026. Moreover, AI tools will likely drive higher employee efficiency, helping offset higher depreciation.

…………………………………………………………………………………………………………

Source for all of the above information: BofA Global Research

NTT & Yomiuri: ‘Social Order Could Collapse’ in AI Era

From the Wall Street Journal:

Japan’s largest telecommunications company and the country’s biggest newspaper called for speedy legislation to restrain generative artificial intelligence, saying democracy and social order could collapse if AI is left unchecked.

Nippon Telegraph and Telephone, or NTT, and Yomiuri Shimbun Group Holdings made the proposal in an AI manifesto to be released Monday. Combined with a law passed in March by the European Parliament restricting some uses of AI, the manifesto points to rising concern among American allies about the AI programs U.S.-based companies have been at the forefront of developing.

The Japanese companies’ manifesto, while pointing to the potential benefits of generative AI in improving productivity, took a generally skeptical view of the technology. Without giving specifics, it said AI tools have already begun to damage human dignity because the tools are sometimes designed to seize users’ attention without regard to morals or accuracy.

Unless AI is restrained, “in the worst-case scenario, democracy and social order could collapse, resulting in wars,” the manifesto said.

It said Japan should take measures immediately in response, including laws to protect elections and national security from abuse of generative AI.

A global push is under way to regulate AI, with the European Union at the forefront. The EU’s new law calls on makers of the most powerful AI models to put them through safety evaluations and notify regulators of serious incidents. It also is set to ban the use of emotion-recognition AI in schools and workplaces.

The Biden administration is also stepping up oversight, invoking emergency federal powers last October to compel major AI companies to notify the government when developing systems that pose a serious risk to national security. The U.S., U.K. and Japan have each set up government-led AI safety institutes to help develop AI guidelines.

Still, governments of democratic nations are struggling to figure out how to regulate AI-powered speech, such as social-media activity, given constitutional and other protections for free speech.

NTT and Yomiuri said their manifesto was motivated by concern over public discourse. The two companies are among Japan’s most influential in policy. The government still owns about one-third of NTT, formerly the state-controlled phone monopoly.

Yomiuri Shimbun, which has a morning circulation of about six million copies according to industry figures, is Japan’s most widely-read newspaper. Under the late Prime Minister Shinzo Abe and his successors, the newspaper’s conservative editorial line has been influential in pushing the ruling Liberal Democratic Party to expand military spending and deepen the nation’s alliance with the U.S.

The two companies said their executives have been examining the impact of generative AI since last year in a study group guided by Keio University researchers.

The Yomiuri’s news pages and editorials frequently highlight concerns about artificial intelligence. An editorial in December, noting the rush of new AI products coming from U.S. tech companies, said “AI models could teach people how to make weapons or spread discriminatory ideas.” It cited risks from sophisticated fake videos purporting to show politicians speaking.

NTT is active in AI research, and its units offer generative AI products to business customers. In March, it started offering these customers a large-language model it calls “tsuzumi” which is akin to OpenAI’s ChatGPT but is designed to use less computing power and work better in Japanese-language contexts.

An NTT spokesman said the company works with U.S. tech giants and believes generative AI has valuable uses, but he said the company believes the technology has particular risks if it is used maliciously to manipulate public opinion.

…………………………………………………………………………………………………………….

From the Japan News (Yomiuri Shimbun):

Challenges: Humans cannot fully control Generative AI technology

・ While the accuracy of results cannot be fully guaranteed, it is easy for people to use the technology and understand its output. This often leads to situations in which generative AI “lies with confidence” and people are “easily fooled.”

・ Challenges include hallucinations, bias and toxicity, retraining through input data, infringement of rights through data scraping and the difficulty of judging created products.

・ Journalism, research in academia and other sources have provided accurate and valuable information by thoroughly examining what information is correct, allowing them to receive some form of compensation or reward. Such incentives for providing and distributing information, which have ensured authenticity and trustworthiness, may collapse.

A need to respond: Generative AI must be controlled both technologically and legally

・ If generative AI is allowed to go unchecked, trust in society as a whole may be damaged as people grow distrustful of one another and incentives are lost for guaranteeing authenticity and trustworthiness. There is a concern that, in the worst-case scenario, democracy and social order could collapse, resulting in wars.

・ Meanwhile, AI technology itself is already indispensable to society. If AI technology is dismissed as a whole as untrustworthy due to out-of-control generative AI, humanity’s productivity may decline.

・ Based on the points laid out in the following sections, measures must be realized to balance the control and use of generative AI from both technological and institutional perspectives, and to make the technology a suitable tool for society.

Point 1: Confronting the out-of-control relationship between AI and the attention economy

・ Any computer’s basic structure, or architecture, including that of generative AI, positions the individual as the basic unit of user. However, due to computers’ tendency to be overly conscious of individuals, there are such problems as unsound information spaces and damage to individual dignity due to the rise of the attention economy.

・ There are concerns that the unstable nature of generative AI is likely to amplify the above-mentioned problems further. In other words, it cannot be denied that there is a risk of worsening social unrest due to a combination of AI and the attention economy, with the attention economy accelerated by generative AI. To understand such issues properly, it is important to review our views on humanity and society and critically consider what form desirable technology should take.

・ Meanwhile, the out-of-control relationship between AI and the attention economy has already damaged autonomy and dignity, which are essential values that allow individuals in our society to be free. These values must be restored quickly. In doing so, autonomous liberty should not be abandoned, but rather an optimal solution should be sought based on human liberty and dignity, verifying their rationality. In the process, concepts such as information health are expected to be established.

Point 2: Legal restraints to ensure discussion spaces to protect liberty and dignity, and the introduction of technology to cope with related issues

・ Ensuring spaces for discussion in which human liberty and dignity are maintained has not only superficial economic value, but also a special value in terms of supporting social stability. The out-of-control relationship between AI and the attention economy is a threat to these values. If generative AI develops further and is left unchecked like it is currently, there is no denying that the distribution of malicious information could drive out good things and cause social unrest.

・ If we continue to be unable to sufficiently regulate generative AI — or if we at least allow the unconditional application of such technology to elections and security — it could cause enormous and irreversible damage as the effects of the technology will not be controllable in society. This implies a need for rigid restrictions by law (hard laws that are enforceable) on the usage of generative AI in these areas.

・ In the area of education, especially compulsory education for those age groups in which students’ ability to make appropriate decisions has not fully matured, careful measures should be taken after considering both the advantages and disadvantages of AI usage.

・ The protection of intellectual property rights — especially copyrights — should be adapted to the times in both institutional and technological aspects to maintain incentives for providing and distributing sound information. In doing so, the protections should be made enforceable in practice, without excessive restrictions to developing and using generative AI.

・ These solutions cannot be maintained by laws alone, but rather, they also require measures such as Originator Profile (OP), which is secured by technology.

Point 3: Establishment of effective governance, including legislation

・ The European Union has been developing data-related laws such as the General Data Protection Regulation, the Digital Services Act and the Digital Markets Act. It has been developing regulations through strategic laws with awareness of the need to both control and promote AI, positioning the Artificial Intelligence Act as part of such efforts.

・ Japan does not have such a strategic and systematic data policy. It is expected to require a long time and involve many obstacles to develop such a policy. Therefore, in the long term, it is necessary to develop a robust, strategic and systematic data policy and, in the short term, individual regulations and effective measures aimed at dealing with AI and attention economy-related problems in the era of generative AI.

・ However, it would be difficult to immediately introduce legislation, including individual regulations, for such issues. Without excluding consideration of future legislation, the handling of AI must be strengthened by soft laws, both for data (basic) and generative AI (applied), that offer a co-regulatory approach that identifies stakeholders. Given the speed of technological innovation and the complexity of value chains, it is expected that an agile framework such as agile governance, rather than governance based on static structures, will be introduced.

・ In risk areas that require special caution (see Point 2), hard laws should be introduced without hesitation.

・ In designing a system, attention should be paid to how effectively it protects the people’s liberty and dignity, as well as to national interests such as industry, based on the impact on Japan of extraterritorial enforcement to the required extent and other countries’ systems.

・ As a possible measure to balance AI use and regulation, a framework should be considered in which the businesses that interact directly with users in the value chain, the middle B in “B2B2X,” where X is the user, reduce and absorb risks when generative AI is used.

・ To create an environment that ensures discussion spaces in which human liberty and dignity are maintained, it is necessary to ensure that there are multiple AIs of various kinds and of equal rank, that they keep each other in check, and that users can refer to them autonomously, so that users do not have to depend on a specific AI. Such moves should be promoted from both institutional and technological perspectives.

Outlook for the Future:

・ Generative AI is a technology that cannot be fully controlled by humanity. However, it is set to enter an innovation phase (changes accompanying social diffusion).

・ In particular, measures to ensure a healthy space for discussion, which constitutes the basis of human and social security (democratic order), must be taken immediately. Legislation (hard laws) is needed, mainly for creating zones of generative AI use (strong restrictions for elections and security).

・ In addition, from the viewpoint of ecosystem maintenance (including the dissemination of personal information), it is necessary to consider optimizing copyright law in line with the times, in a manner compatible with using generative AI itself, from both institutional and technological perspectives.

・ However, as it takes time to revise the law, the following steps must be taken: the introduction of rules and joint regulations mainly by the media and various industries, the establishment and dissemination of effective technologies, and making efforts to revise the law.

・ In this process, the most important thing is to protect the dignity and liberty of individuals in order to achieve individual autonomy. Those involved will study the situation, taking into account critical assessments based on the value of community.

References:

‘Joint Proposal on Shaping Generative AI’ by The Yomiuri Shimbun Holdings and NTT Corp.

Major technology companies form AI-Enabled Information and Communication Technology (ICT) Workforce Consortium

MTN Consulting: Generative AI hype grips telecom industry; telco CAPEX decreases while vendor revenue plummets

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

The AI boom is changing how data centers are built and where they’re located, and it’s already sparking a reshaping of U.S. energy infrastructure, according to Barron’s. Energy companies increasingly cite AI power consumption as a leading contributor to new demand. That is because AI compute servers in data centers require a tremendous amount of power to process large language models (LLMs). That was explained in detail in this recent IEEE Techblog post.

Fast Company reports that “The surge in AI is straining the U.S. power grid.” AI is pushing demand for energy significantly higher than anyone was anticipating. “The U.S. electric grid is not prepared for significant load growth,” Grid Strategies warned. AI is a major part of the problem when it comes to increased demand. Not only are industry leaders such as OpenAI, Amazon, Microsoft, and Google either building or looking for locations on which to build enormous data centers to house the infrastructure required to power large language models, but smaller companies in the space are also making huge energy demands, as the Washington Post reports.

Georgia Power, the chief energy provider for that state, recently had to increase its projected winter megawatt demand by as much as 38%. That’s due in part to the state’s incentive policy for computer operations, something officials are now rethinking. Meanwhile, Portland General Electric in Oregon recently doubled its five-year forecast for new electricity demand.

Electricity demand was so great in Virginia that Dominion Energy was forced to halt connections to new data centers for about three months in 2022. Dominion says it expects demand in its service territory to grow by nearly 5% annually over the next 15 years, which would almost double the total amount of electricity it generates and sells. To prepare, the company is building the biggest offshore wind farm in the U.S. some 25 miles off Virginia Beach and is adding solar energy and battery storage. It has also proposed investing in new gas generation and is weighing whether to delay retiring some natural gas plants and one large coal plant.
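The claim that growth of nearly 5% a year for 15 years would almost double Dominion’s output is a compound-growth statement, and it can be sanity-checked in a few lines. This is a sketch that assumes steady compounding at a constant annual rate; the function names are illustrative.

```python
def growth_multiple(rate: float, years: int) -> float:
    """Total multiple after compounding `rate` annually for `years`."""
    return (1.0 + rate) ** years


def rate_to_reach(multiple: float, years: int) -> float:
    """Constant annual rate needed to reach `multiple` in `years`."""
    return multiple ** (1.0 / years) - 1.0


YEARS = 15

# Exactly 5% a year slightly more than doubles the starting level.
multiple_at_5pct = growth_multiple(0.05, YEARS)

# The rate that doubles the starting level in exactly 15 years.
rate_for_doubling = rate_to_reach(2.0, YEARS)

print(f"5.0% for {YEARS} years -> {multiple_at_5pct:.2f}x")
print(f"Rate needed to double in {YEARS} years: {rate_for_doubling:.2%}")
```

Under that assumption, a rate a little below 5% (about 4.7%) gives an exact doubling over 15 years, which is consistent with the "nearly 5%" and "almost double" wording.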

Also in 2022, the CEO of data center giant Digital Realty said on an earnings call that Dominion had warned its big customers about a “pinch point” that could prevent it from supplying new projects until 2026.

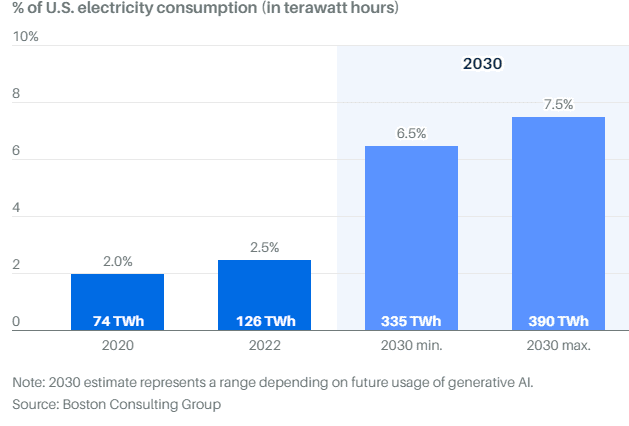

AES, another Virginia-based utility, recently told investors that data centers could comprise up to 7.5% of total U.S. electricity consumption by 2030, citing data from Boston Consulting Group. The company is largely betting its growth on the ability to deliver renewable power to data centers in the coming years.

New data centers coming on line in its regions “represent the potential for thousands of megawatts of new electric load—often hundreds of megawatts for just one project,” Sempra Energy told investors on its earnings call last month. The company operates public utilities in California and Texas and has cited AI as a major factor in its growth.

There are also environmental concerns. While there is a push to move to cleaner energy production methods, such as solar, due to large federal subsidies, many are not yet online. And utility companies are lobbying to delay the shutdown of fossil fuel plants (and some are hoping to bring more online) to meet the surge in demand.

“Annual peak demand growth forecasts appear headed for growth rates that are double or even triple those in recent years,” Grid Strategies wrote. “Transmission planners need long-term forecasts of both electricity demand and sources of electricity supply to ensure sufficient transmission will be available when and where it’s needed. Such a failure of planning could have real consequences for investments, jobs, and system reliability for all electric customers.”

According to Boston Consulting Group, the data-center share of U.S. electricity consumption is expected to triple from 126 terawatt hours in 2022 to 390 terawatt hours by 2030. That’s the equivalent usage of 40 million U.S. homes, the firm says. Much of the data-center growth is being driven by new applications of generative AI. As AI dominates the conversation, it’s likely to bring renewed focus on the nation’s energy grid. Siemens Energy CEO Christian Bruch told shareholders at the company’s recent annual meeting that electricity needs will soar with the growing use of AI. “That means one thing: no power, no AI. Or to put it more clearly: no electricity, no progress.”
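The Boston Consulting Group figures can be unpacked with simple arithmetic: how large the increase is, and what the 40-million-home comparison implies per household. This is a sketch using only the numbers quoted above; the variable names are illustrative, and the per-home figure is derived here rather than taken from the firm.

```python
# Figures quoted in the text.
CONSUMPTION_2022_TWH = 126.0
CONSUMPTION_2030_TWH = 390.0
HOMES_EQUIVALENT = 40_000_000

KWH_PER_TWH = 1e9  # 1 terawatt hour = one billion kilowatt hours

# How many times larger the 2030 projection is than the 2022 level.
growth_ratio = CONSUMPTION_2030_TWH / CONSUMPTION_2022_TWH

# Annual consumption per home implied by the 40-million-home comparison.
kwh_per_home = CONSUMPTION_2030_TWH * KWH_PER_TWH / HOMES_EQUIVALENT

print(f"2030 vs 2022: {growth_ratio:.2f}x")
print(f"Implied usage per home: {kwh_per_home:,.0f} kWh per year")
```

The ratio works out to just over three, matching "triple," and the implied usage of roughly 9,750 kWh per home per year is in the range commonly cited for an average U.S. household.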

The technology sector has already shown how quickly AI can recast long-held assumptions. Chips, for instance, driven by Nvidia, have replaced software as tech’s hottest commodity. Nvidia has said that the trillion dollars invested in global data-center infrastructure will eventually shift from traditional servers with central processing units, or CPUs, to AI servers with graphics processing units, or GPUs. GPUs are better able to power the parallel computations needed for AI.

For AI workloads, Nvidia says that two GPU servers can do the work of a thousand CPU servers at a fraction of the cost and energy. Still, the better performance capabilities of GPUs are leading to more aggregate power usage as developers find innovative new ways to use AI.

The overall power consumption increase will come on two fronts: an increase in the number of GPUs sold per year and a higher power draw from each GPU. Research firm 650 Group expects AI server shipments will rise from one million units last year to six million units in 2028. According to Gartner, most AI GPUs will draw 1,000 watts of electricity by 2026, up from the roughly 650 watts on average today.
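The two fronts described above multiply together, which a back-of-the-envelope sketch makes concrete. Several assumptions are needed that are not in the source: the number of GPUs per server is held constant at a hypothetical 8, the 2026 wattage projection is applied to 2028 shipments, only newly shipped servers are counted (not the installed base), and CPU, memory and cooling overhead are ignored.

```python
# Figures quoted in the text.
SERVERS_LAST_YEAR = 1_000_000   # AI server shipments last year (650 Group)
SERVERS_2028 = 6_000_000        # projected AI server shipments in 2028
WATTS_PER_GPU_TODAY = 650.0     # rough average draw today (Gartner)
WATTS_PER_GPU_FUTURE = 1_000.0  # projected draw by 2026 (Gartner)

# ASSUMPTION (not in the source): GPUs per server, held constant over time.
GPUS_PER_SERVER = 8


def shipped_gpu_power_gw(servers: int, watts_per_gpu: float) -> float:
    """Peak GPU power of one year's shipments, in gigawatts."""
    return servers * GPUS_PER_SERVER * watts_per_gpu / 1e9


power_today_gw = shipped_gpu_power_gw(SERVERS_LAST_YEAR, WATTS_PER_GPU_TODAY)
power_future_gw = shipped_gpu_power_gw(SERVERS_2028, WATTS_PER_GPU_FUTURE)

# The ratio does not depend on the assumed GPUs-per-server value.
power_multiple = power_future_gw / power_today_gw

print(f"GPU power shipped last year: {power_today_gw:.1f} GW")
print(f"GPU power shipped in 2028:   {power_future_gw:.1f} GW")
print(f"Multiple: {power_multiple:.1f}x")
```

Under these assumptions, a sixfold rise in shipments combined with a roughly 1.5x rise in per-GPU draw gives about a ninefold increase in the GPU power shipped each year. The absolute gigawatt values depend entirely on the assumed GPU count per server.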

Ironically, data-center operators will use AI technology to address the power demands. “AI can be used to improve efficiency, where you’re modeling temperature, humidity, and cooling,” says Christopher Wellise, vice president of sustainability for Equinix, one of the nation’s largest data-center companies. “It can also be used for predictive maintenance.” Equinix states that using AI modeling at one of its data centers has already improved energy efficiency by 9%.

Data centers will also install more-effective cooling systems. A leading provider of power and cooling infrastructure equipment says that AI servers generate five times more heat than traditional CPU servers and require ten times more cooling per square foot. AI server maker Super Micro estimates that switching to liquid cooling from traditional air-based cooling can reduce operating expenses by more than 40%.

But cooling, AI efficiency, and other technologies won’t fully solve the problem of satisfying AI’s energy demands. Certain regions could face issues with their local grid. Historically, the two most popular areas to build data centers were Northern Virginia and Silicon Valley. The regions’ proximity to major internet backbones enabled quicker response times for applications, which is also helpful for AI. (Northern Virginia was home to AOL in the 1990s. A decade later, Silicon Valley was hosting most of the country’s online platforms.)

Today, each region faces challenges around power capacity and data-center availability. Both areas are years away from making the grid upgrades that would be needed to run more data centers, according to DigitalBridge, an asset manager that invests in digital infrastructure. DigitalBridge CEO Marc Ganzi says the tightness in Northern Virginia and Northern California is driving data-center construction into other markets, including Atlanta; Columbus, Ohio; and Reno, Nev. All three areas offer better power availability than Silicon Valley and Northern Virginia, though the network quality is slightly inferior as of now. Reno also offers better access to renewable energy sources such as solar and wind.

Ultimately, Ganzi says the obstacle facing the energy sector—and future AI applications—is the country’s decades-old electric transmission grid. “It isn’t so much that we have a power issue. We have a transmission infrastructure issue,” he says. “Power is abundant in the United States, but it’s not efficiently transmitted or efficiently distributed.”

Yet efficient transmission and distribution was one of the prime objectives of the Smart Grid initiative, which apparently has been a total failure! Do you think IEEE can revive that initiative with a focus on power consumption and cooling in AI data centers?

References:

https://www.barrons.com/articles/ai-chips-electricity-usage-2f92b0f3

https://www.supermicro.com/en/solutions/liquid-cooling

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions