
AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

The AI boom is changing how data centers are built and where they’re located, and it’s already sparking a reshaping of U.S. energy infrastructure, according to Barron’s. Energy companies increasingly cite AI power consumption as a leading contributor to new demand. That is because AI compute servers in data centers require a tremendous amount of power to process large language models (LLMs). That was explained in detail in this recent IEEE Techblog post.

Fast Company reports that “The surge in AI is straining the U.S. power grid.” AI is pushing demand for energy significantly higher than anyone was anticipating. “The U.S. electric grid is not prepared for significant load growth,” Grid Strategies warned. AI is a major part of the problem when it comes to increased demand. Not only are industry leaders such as OpenAI, Amazon, Microsoft, and Google either building or looking for locations on which to build enormous data centers to house the infrastructure required to power large language models, but smaller companies in the space are also making huge energy demands, as the Washington Post reports.

Georgia Power, the state’s chief energy provider, recently had to increase its projected winter megawatt demand by as much as 38%. That’s due in part to the state’s incentive policy for computer operations, something officials are now rethinking. Meanwhile, Portland General Electric in Oregon recently doubled its five-year forecast for new electricity demand.

Electricity demand was so great in Virginia that Dominion Energy was forced to halt connections to new data centers for about three months in 2022. Dominion says it expects demand in its service territory to grow by nearly 5% annually over the next 15 years, which would almost double the total amount of electricity it generates and sells. To prepare, the company is building the biggest offshore wind farm in the U.S. some 25 miles off Virginia Beach and is adding solar energy and battery storage. It has also proposed investing in new gas generation and is weighing whether to delay retiring some natural gas plants and one large coal plant.
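The "almost double" figure follows from compound growth. Here is a minimal sketch, assuming a constant annual growth rate over the full 15 years. The function name and the sample rates bracketing "nearly 5%" are illustrative assumptions, not Dominion figures.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Factor by which demand grows after `years` of constant compound growth."""
    return (1.0 + annual_rate) ** years

# Hypothetical rates bracketing "nearly 5%"; only the 15-year horizon is from the text.
for rate in (0.045, 0.047, 0.05):
    print(f"{rate:.1%} per year for 15 years -> {growth_multiple(rate, 15):.2f}x")
```

At exactly 5% the multiple is a little over 2x, while slightly lower rates land just under 2x. That is consistent with "nearly 5%" producing "almost double."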

Also in 2022, the CEO of data center giant Digital Realty said on an earnings call that Dominion had warned its big customers about a “pinch point” that could prevent it from supplying new projects until 2026.

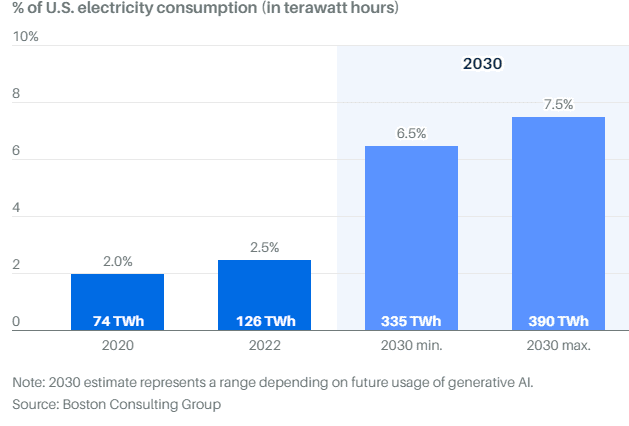

AES, another Virginia-based utility, recently told investors that data centers could comprise up to 7.5% of total U.S. electricity consumption by 2030, citing data from Boston Consulting Group. The company is largely betting its growth on the ability to deliver renewable power to data centers in the coming years.

New data centers coming on line in its regions “represent the potential for thousands of megawatts of new electric load—often hundreds of megawatts for just one project,” Sempra Energy told investors on its earnings call last month. The company operates public utilities in California and Texas and has cited AI as a major factor in its growth.

There are also environmental concerns. While there is a push, helped by large federal subsidies, to move to cleaner energy production methods such as solar, many of those projects are not yet online. Meanwhile, utility companies are lobbying to delay the shutdown of fossil fuel plants (and some are hoping to bring more online) to meet the surge in demand.

“Annual peak demand growth forecasts appear headed for growth rates that are double or even triple those in recent years,” Grid Strategies wrote. “Transmission planners need long-term forecasts of both electricity demand and sources of electricity supply to ensure sufficient transmission will be available when and where it’s needed. Such a failure of planning could have real consequences for investments, jobs, and system reliability for all electric customers.”

According to Boston Consulting Group, the data-center share of U.S. electricity consumption is expected to triple from 126 terawatt hours in 2022 to 390 terawatt hours by 2030. That’s the equivalent usage of 40 million U.S. homes, the firm says. Much of the data-center growth is being driven by new applications of generative AI. As AI dominates the conversation, it’s likely to bring renewed focus on the nation’s energy grid. Siemens Energy CEO Christian Bruch told shareholders at the company’s recent annual meeting that electricity needs will soar with the growing use of AI. “That means one thing: no power, no AI. Or to put it more clearly: no electricity, no progress.”
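The Boston Consulting Group figures above can be sanity-checked with simple arithmetic. The sketch below uses only the numbers quoted in the text. The implied annual growth rate is a derived illustration that assumes smooth compounding from 2022 to 2030, which the source does not state.

```python
DC_TWH_2022 = 126.0      # data-center consumption in 2022, TWh (from the text)
DC_TWH_2030 = 390.0      # projected consumption in 2030, TWh (from the text)
HOMES = 40_000_000       # U.S. homes in the firm's comparison (from the text)

growth_factor = DC_TWH_2030 / DC_TWH_2022
# 1 TWh = 1,000,000 MWh, so this is annual MWh per home implied by the comparison.
mwh_per_home = DC_TWH_2030 * 1_000_000 / HOMES
# Assumption: smooth compound growth over the 8 years from 2022 to 2030.
implied_annual_growth = growth_factor ** (1 / 8) - 1

print(f"Growth factor: {growth_factor:.2f}x")
print(f"Implied usage per home: {mwh_per_home:.2f} MWh/year")
print(f"Implied compound annual growth: {implied_annual_growth:.1%}")
```

The ratio comes out slightly above 3x, which matches "triple," and the home comparison implies just under 10 MWh per home per year.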

The technology sector has already shown how quickly AI can recast long-held assumptions. Chips, for instance, driven by Nvidia, have replaced software as tech’s hottest commodity. Nvidia has said that the trillion dollars invested in global data-center infrastructure will eventually shift from traditional servers with central processing units, or CPUs, to AI servers with graphics processing units, or GPUs. GPUs are better able to power the parallel computations needed for AI.

For AI workloads, Nvidia says that two GPU servers can do the work of a thousand CPU servers at a fraction of the cost and energy. Still, the better performance capabilities of GPUs are leading to more aggregate power usage as developers find innovative new ways to use AI.

The overall power consumption increase will come on two fronts: an increase in the number of GPUs sold per year and a higher power draw from each GPU. Research firm 650 Group expects AI server shipments will rise from one million units last year to six million units in 2028. According to Gartner, most AI GPUs will draw 1,000 watts of electricity by 2026, up from the roughly 650 watts on average today.
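The two fronts multiply rather than add. A rough sketch of the combined effect follows, under loudly stated assumptions: the number of GPUs per AI server stays constant, and Gartner's 2026 per-GPU figure still holds in 2028. Neither assumption comes from the source, which quotes the two forecasts separately.

```python
SHIPMENTS_LAST_YEAR = 1_000_000   # AI servers shipped last year (650 Group, from the text)
SHIPMENTS_2028 = 6_000_000        # AI servers expected in 2028 (650 Group, from the text)
WATTS_TODAY = 650.0               # average draw per AI GPU today (Gartner, from the text)
WATTS_2026 = 1000.0               # expected draw per AI GPU by 2026 (Gartner, from the text)

shipment_factor = SHIPMENTS_2028 / SHIPMENTS_LAST_YEAR
power_factor = WATTS_2026 / WATTS_TODAY
# Assumption: GPUs per server is unchanged, so the two factors simply multiply.
combined_factor = shipment_factor * power_factor

print(f"Shipments: {shipment_factor:.1f}x, per-GPU power: {power_factor:.2f}x")
print(f"Power draw of each year's new shipments: about {combined_factor:.1f}x")
```

Under these assumptions, the power drawn by a year's worth of newly shipped AI hardware grows by roughly nine times, far more than either factor alone suggests.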

Ironically, data-center operators will use AI technology to address the power demands. “AI can be used to improve efficiency, where you’re modeling temperature, humidity, and cooling,” says Christopher Wellise, vice president of sustainability for Equinix, one of the nation’s largest data-center companies. “It can also be used for predictive maintenance.” Equinix states that using AI modeling at one of its data centers has already improved energy efficiency by 9%.

Data centers will also install more-effective cooling systems. A leading provider of power and cooling infrastructure equipment says that AI servers generate five times more heat than traditional CPU servers and require ten times more cooling per square foot. AI server maker Super Micro estimates that switching to liquid cooling from traditional air-based cooling can reduce operating expenses by more than 40%.

But cooling, AI efficiency, and other technologies won’t fully solve the problem of satisfying AI’s energy demands. Certain regions could face issues with their local grid. Historically, the two most popular areas to build data centers were Northern Virginia and Silicon Valley. The regions’ proximity to major internet backbones enabled quicker response times for applications, which is also helpful for AI. (Northern Virginia was home to AOL in the 1990s. A decade later, Silicon Valley was hosting most of the country’s online platforms.)

Today, each region faces challenges around power capacity and data-center availability. Both areas are years away from making the grid upgrades that would be needed to run more data centers, according to DigitalBridge, an asset manager that invests in digital infrastructure. DigitalBridge CEO Marc Ganzi says the tightness in Northern Virginia and Northern California is driving data-center construction into other markets, including Atlanta; Columbus, Ohio; and Reno, Nev. All three areas offer better power availability than Silicon Valley and Northern Virginia, though the network quality is slightly inferior as of now. Reno also offers better access to renewable energy sources such as solar and wind.

Ultimately, Ganzi says the obstacle facing the energy sector—and future AI applications—is the country’s decades-old electric transmission grid. “It isn’t so much that we have a power issue. We have a transmission infrastructure issue,” he says. “Power is abundant in the United States, but it’s not efficiently transmitted or efficiently distributed.”

Yet that was one of the prime objectives of the Smart Grid initiative, which apparently has been a total failure! Do you think IEEE can revive that initiative with a focus on power consumption and cooling in AI data centers?

References:

https://www.barrons.com/articles/ai-chips-electricity-usage-2f92b0f3

https://www.supermicro.com/en/solutions/liquid-cooling

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Introduction:

Many generative AI tools rely on large language models (LLMs), a type of natural-language processing model, to first learn and then make inferences about languages and linguistic structures (such as code or legal-case prediction) used throughout the world. Companies that use LLMs include Anthropic (now collaborating with Amazon), Microsoft, OpenAI, Google, Amazon/AWS, Meta (FB), SAP, and IQVIA. Examples of LLMs include Google’s BERT, Amazon’s Bedrock, Falcon 40B, Meta’s Galactica, OpenAI’s GPT-3 and GPT-4, Google’s LaMDA, Hugging Face’s BLOOM, and Nvidia’s NeMo LLM.

The training process of the Large Language Models (LLMs) used in generative artificial intelligence (AI) is a cause for concern. LLMs can consume many terabytes of data and use over 1,000 megawatt-hours of electricity.

Alex de Vries, a Ph.D. candidate at VU Amsterdam and founder of the digital-sustainability blog Digiconomist, published a report in Joule predicting that current AI technology could be on track to annually consume as much electricity as the entire country of Ireland (29.3 terawatt-hours per year).
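To compare that annual energy figure with the megawatt and gigawatt capacities quoted elsewhere, it helps to convert it to an average continuous power draw. A minimal sketch, assuming a non-leap year and perfectly flat consumption; the function name is illustrative.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours, ignoring leap years

def average_power_gw(annual_twh: float) -> float:
    """Convert annual energy in TWh to average continuous power in GW."""
    return annual_twh * 1000.0 / HOURS_PER_YEAR  # 1 TWh = 1,000 GWh

ireland_gw = average_power_gw(29.3)
print(f"29.3 TWh/year is about {ireland_gw:.2f} GW of continuous draw")
```

On that basis, Ireland-scale consumption corresponds to a little over 3 GW drawn around the clock.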

“As an already massive cloud market keeps on growing, the year-on-year growth rate almost inevitably declines,” John Dinsdale, chief analyst and managing director at Synergy, told CRN via email. “But we are now starting to see a stabilization of growth rates, as cloud provider investments in generative AI technology help to further boost enterprise spending on cloud services.”

Hardware vs Algorithmic Solutions to Reduce Energy Consumption:

Roberto Verdecchia is an assistant professor at the University of Florence and the first author of a paper published on developing green AI solutions. He says that de Vries’s predictions may even be conservative when it comes to the true cost of AI, especially when considering the non-standardized regulation surrounding this technology. AI’s energy problem has historically been approached through optimizing hardware, says Verdecchia. However, continuing to make microelectronics smaller and more efficient is becoming “physically impossible,” he added.

In the paper, published in the journal WIREs Data Mining and Knowledge Discovery, Verdecchia and colleagues highlight several algorithmic approaches that experts are taking instead. These include improving data-collection and processing techniques, choosing more-efficient libraries, and improving the efficiency of training algorithms. “The solutions report impressive energy savings, often at a negligible or even null deterioration of the AI algorithms’ precision,” Verdecchia says.


Another Solution – Data Centers Powered by Alternative Energy Sources:

The immense amount of energy needed to power these LLMs, like the one behind ChatGPT, is creating a new market for data centers that run on alternative energy sources such as geothermal, nuclear, and flared gas, a byproduct of oil production. The supply of grid electricity, which currently powers the vast majority of data centers, is already strained by existing demands on the country’s electric grids. AI could consume up to 3.5% of the world’s electricity by 2030, according to an estimate from IT research and consulting firm Gartner.

Amazon, Microsoft, and Google were among the first to explore wind and solar-powered data centers for their cloud businesses, and are now among the companies exploring new ways to power the next wave of AI-related computing. But experts warn that given their high risk, cost, and difficulty scaling, many nontraditional sources aren’t capable of solving near-term power shortages.

Exafunction, maker of the Codeium generative AI-based coding assistant, sought out energy startup Crusoe Energy Systems for training its large-language models because it offered better prices and availability of graphics processing units, the advanced AI chips primarily produced by Nvidia, said the startup’s chief executive, Varun Mohan.

AI startups are typically looking for five to 25 megawatts of data center power, or as much as they can get in the near term, according to Pat Lynch, executive managing director for commercial real-estate services firm CBRE’s data center business. Crusoe will have about 200 megawatts by year’s end, said Crusoe Chief Executive Chase Lochmiller. Training one AI model like OpenAI’s GPT-3 can use up to 10 gigawatt-hours, roughly equivalent to the amount of electricity 1,000 U.S. homes use in a year, University of Washington research estimates.
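The training-energy comparison can be unpacked with a short calculation. The per-home figure uses only the numbers in the text. The second part is a hypothetical illustration: it assumes a facility runs at a constant, full power draw devoted entirely to one training run, which real deployments will not match exactly.

```python
TRAINING_ENERGY_GWH = 10.0   # upper estimate for a GPT-3-class training run (from the text)
HOMES = 1_000                # U.S. homes in the comparison (from the text)

# 1 GWh = 1,000 MWh, so this is the annual MWh per home implied by the comparison.
mwh_per_home = TRAINING_ENERGY_GWH * 1000.0 / HOMES

def days_at_full_draw(energy_gwh: float, power_mw: float) -> float:
    """Days needed to consume `energy_gwh` at a constant `power_mw` draw."""
    hours = energy_gwh * 1000.0 / power_mw
    return hours / 24.0

print(f"Implied usage per home: {mwh_per_home:.1f} MWh/year")
# The 5 MW and 25 MW bounds are the startup range quoted by CBRE in the text.
for mw in (5, 25):
    days = days_at_full_draw(TRAINING_ENERGY_GWH, mw)
    print(f"{mw} MW at full draw: about {days:.0f} days to use 10 GWh")
```

Under those assumptions, the power range that startups are asking for corresponds to training runs lasting from a few weeks to a few months.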

Major cloud providers capable of providing multiple gigawatts of power are also continuing to invest in renewable and alternative energy sources to power their data centers, and use less water to cool them down. By some estimates, data centers account for 1% to 3% of global electricity use.

An Amazon Web Services spokesperson said the scale of its massive data centers means it can make better use of resources and be more efficient than smaller, privately operated data centers. Amazon says it has been the world’s largest corporate buyer of renewable energy for the past three years.

Jen Bennett, a Google Cloud leader in technology strategy for sustainability, said the cloud giant is exploring “advanced nuclear” energy and has partnered with Fervo Energy, a startup beginning to offer geothermal power for Google’s Nevada data center. Geothermal, which taps heat under the earth’s surface, is available around the clock and not dependent on weather, but comes with high risk and cost.

“Similar to what we did in the early days of wind and solar, where we did these large power purchase agreements to guarantee the tenure and to drive costs down, we think we can do the same with some of the newer energy sources,” Bennett said.

References:

https://aws.amazon.com/what-is/large-language-model/

https://spectrum.ieee.org/ai-energy-consumption

https://www.crn.com/news/cloud/microsoft-aws-google-cloud-market-share-q3-2023-results/6

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

SK Telecom and Deutsche Telekom to Jointly Develop Telco-specific Large Language Models (LLMs)

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions