Cignal AI & Dell’Oro: Optical Network Equipment Market Decline Continues

Executive Summary & Overview:

Does anyone remember the fiber optic build-out boom of the late 1990s to early 2001? And the subsequent bust, from which the industry still has not recovered!

Fast forward to today, when we hear more and more about huge fiber demand from mega cloud service providers and Internet companies for intra- and inter-data center connections, as well as about the huge amount of fiber backhaul needed for small cells and cell towers.

Yet two respected market research firms, Cignal AI and Dell’Oro Group, both say that optical network transport equipment revenue declined yet again.

Cignal AI said: “global spending on optical network equipment dropped for a third consecutive quarter, led by a larger than normal seasonal decline in China and weakening trends in EMEA.” However, Cignal AI (Andrew Schmitt) stated that “North American spending increased again quarter-over-quarter, with positive results reported by most vendors. Spending on Metro WDM continues to grow at the expense of LH WDM.”

Dell’Oro Group reported in a press release: “revenues for Optical Transport equipment in North America continued to decline in the third quarter of 2017.”

“Optical Transport equipment purchases in North America was about 10 percent lower in the first nine months of 2017,” said Jimmy Yu, Vice President at Dell’Oro Group. “This has been one of the more challenging years for optical equipment manufacturers selling into North America. However, a few vendors in the region performed really well considering the tough market environment. For the first nine months of the year, Ciena was able to hold revenues steady, Cisco was able to grow revenues 14 percent, and Fujitsu experienced only a slight revenue decline,” Mr. Yu added.

Please see the Editor’s Notes below for additional optical network equipment market insight and vendor perspective.

…………………………………………………………………………………………………………

Cignal AI Report Summary:

- North American spending increased again quarter-over-quarter, with positive results reported by most vendors. Spending on Metro WDM continues to grow at the expense of LH WDM.

- EMEA revenue fell sharply though this was the result of weakness at larger vendors – smaller vendors performed better. As in North America, LH WDM bore the brunt of the decline.

- Last quarter was the weakest YoY revenue growth recorded in China in over 4 years as momentum from 2Q17 spending failed to continue into the third quarter. Spending trends in the region remain difficult to predict.

- Revenue in the rest of Asia (RoAPAC) eased following breakout results in India during 2Q17, though spending remains at historically high levels.

- Quarterly coherent 100G+ port shipments broke 100k units for the first time on a global basis. 100G+ port shipments in China were flat QoQ and are substantially up YoY.

Cignal AI’s October 29, 2017 Optical Customer Markets Report discovered an unexpected weakness in 2017 optical transport equipment spending from cloud and co-location (colo) operators (see Cignal AI Reports Unexpected Drop in Cloud and Colo Spending). This surprising trend was later supported by public comments from Juniper and Applied Optoelectronics.

Contact Info:

Cignal AI – 225 Franklin Street FL26 Boston, MA – 02110 – (617) 326-3996


…………………………………………………………………………………………………………

Editor’s Notes:

1. One prominent Optical Transport Network Equipment vendor evidently feels the effect of the market slowdown. On November 8, 2017, Infinera reported a GAAP net loss for the quarter of $(37.2) million, or $(0.25) per share, compared to a net loss of $(42.8) million, or $(0.29) per share, in the second quarter of 2017, and a net loss of $(11.2) million, or $(0.08) per share, in the third quarter of 2016.

Infinera also announced it is implementing a plan to restructure its worldwide operations in order to reduce its expenses and establish a more cost-efficient structure that better aligns its operations with its long-term strategies. As part of this restructuring plan, Infinera will reduce headcount, rationalize certain products and programs, and close a remote R&D facility.

2. Astonishingly, there’s an India-based optical network equipment vendor on the rise. Successful homegrown Indian telecom vendors are hard to come by, which makes Bengaluru-based Tejas Networks something of an anomaly. Founded 17 years ago (in 2000), Tejas is one of India’s few hardware producers.

Tejas Networks India Ltd. has made a name for itself in the optical networking market, especially within India, which looks poised for a boom in this sector (mainly due to fiber backhaul of 4G and 5G mobile data traffic). Nearly two-thirds of its sales come from India, with the rest earned overseas.

“We are growing at 35% year-on-year and we hope to grow by at least 20% over the next two to three years,” says Sanjay Nayak, the CEO and managing director of Tejas, during an interview with Light Reading. “Overseas, we mainly target south-east Asian, Latin America and African markets.” Telcos in these markets have similar concerns to those in India, explains Nayak, making it easy for Tejas to address their demands.

“R&D is in our DNA and we believe that unless you come up with a differentiated product the market will not take you seriously,” says Nayak. “We have a huge advantage as an Indian player … [which] allows us to provide the product at a lesser price.”

Nayak believes that the experience of developing solutions for the problems faced by Indian telcos has helped the company to address overseas markets as well.

“Our products do very well for networks evolving from TDM to packet, which is a key concern of the Indian telcos,” he explains. “We realized that the US-based service providers were facing a similar problem of cross connect, which we were able to resolve. So, as we say, you can address any market if you are able to handle the Indian market.”

Read more at: http://www.communicationstoday.co.in/daily-news/17152-india-s-tejas-eyes-bigger-slice-of-optical-market

3. The long-haul optical transport market is dominated by OTN (Optical Transport Network) equipment (which this editor worked on from 2000 to 2002 as a consultant to Ciena, NEC, and other optical network equipment and chip companies).

The OTN wraps client payloads (video, image, data, voice, etc.) into containers or “wrappers” that are transported across wide area fiber optic networks. That helps maintain the native payload structure and management information. OTN offers key benefits such as reduced transport cost and optimal utilization of the optical spectrum.
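To make the “wrapper” idea concrete, here is a minimal sketch of an OTUk-style frame, simplified from ITU-T G.709: 4 rows of 4080 byte columns, with overhead in columns 1 to 16, client payload in columns 17 to 3824 and forward error correction in columns 3825 to 4080. The sketch only places client bytes into the payload area and leaves overhead and FEC as zero placeholders; real framers also fill in alignment, monitoring and Reed-Solomon FEC bytes. The rate table reproduces the nominal G.709 OTU1 to OTU4 line rates.

```python
# Simplified OTUk-style wrapper (after ITU-T G.709): 4 rows x 4080 byte columns.
# Columns 1-16: overhead, 17-3824: OPU payload, 3825-4080: FEC.
# Overhead and FEC are left as zero bytes in this sketch.
ROWS, COLS = 4, 4080
PAYLOAD_FIRST_COL, PAYLOAD_LAST_COL = 17, 3824  # 1-indexed, inclusive
PAYLOAD_PER_ROW = PAYLOAD_LAST_COL - PAYLOAD_FIRST_COL + 1  # 3808 bytes
PAYLOAD_PER_FRAME = ROWS * PAYLOAD_PER_ROW  # 15232 bytes

# Nominal OTUk line rate = 255 / denominator x reference client rate (Gb/s).
OTU_RATE_FACTORS = {
    1: (238, 2.48832),
    2: (237, 9.95328),
    3: (236, 39.81312),
    4: (227, 99.5328),
}


def wrap_client(data: bytes) -> list:
    """Place client bytes into the payload area of successive frames."""
    frames = []
    for start in range(0, max(len(data), 1), PAYLOAD_PER_FRAME):
        chunk = data[start:start + PAYLOAD_PER_FRAME].ljust(PAYLOAD_PER_FRAME, b"\x00")
        frame = bytearray(ROWS * COLS)  # overhead and FEC stay zero here
        for row in range(ROWS):
            offset = row * COLS + PAYLOAD_FIRST_COL - 1
            frame[offset:offset + PAYLOAD_PER_ROW] = chunk[row * PAYLOAD_PER_ROW:(row + 1) * PAYLOAD_PER_ROW]
        frames.append(frame)
    return frames


def unwrap_client(frames: list, length: int) -> bytes:
    """Recover the original client bytes from the payload areas."""
    out = bytearray()
    for frame in frames:
        for row in range(ROWS):
            offset = row * COLS + PAYLOAD_FIRST_COL - 1
            out += frame[offset:offset + PAYLOAD_PER_ROW]
    return bytes(out[:length])


def otu_line_rate_gbps(k: int) -> float:
    """Nominal OTUk line rate in Gb/s."""
    denominator, client_rate = OTU_RATE_FACTORS[k]
    return 255 / denominator * client_rate
```

Only about 93% of each frame (3808 of 4080 columns) carries client data; the rest is the management and FEC “envelope” that lets the payload cross the network intact. OTU2, for example, works out to about 10.709 Gb/s.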

By technology, the OTN equipment market includes both WDM and DWDM. The service segment includes network maintenance and support services as well as network design and optimization services. By component, the market is divided into optical switch and optical transport; by end user, it is classified into government and enterprises.

According to Allied Market Research, OTN equipment market leaders include: Adtran, Inc., ADVA Optical Networking, Advanced Micro Devices Inc., Fujitsu, Huawei Technologies, ZTE Corporation, Belkin Corporation, Ciena Corporation, Coriant, and Allied Telesyn.

Above illustration courtesy of Allied Market Research

………………………………………………………………………………………………..

Note that Cisco offers OTN capability on its Network Convergence System (NCS) 4000 400 Gbps Universal line card. Despite that and other OTN-capable gear, Cisco is not covered in the above-mentioned Allied Market Research OTN report.

………………………………………………………………………………………………………………….

Global Switching & Router Market Report:

Separately, Synergy Research Group said in a press release that:

Worldwide switching and router revenues were well over $11 billion in Q3 and were $44 billion for the last four quarters, representing 3% growth on a rolling annualized basis. Ethernet switching is the largest of the three segments, accounting for almost 60% of the total, and it is also the segment with by far the highest growth rate, propelled by aggressive growth in the deployment of 100 GbE and 25 GbE switches.
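As a rough sanity check on the quoted figures: the release does not spell out Synergy’s method, so the sketch below assumes the common definition of “rolling annualized” growth (the trailing four-quarter total compared with the four quarters before it) and backs out what the $44 billion and 3% figures imply.

```python
def rolling_annualized_growth(quarterly_revenue: list) -> float:
    """Trailing four-quarter total vs. the four quarters before it (assumed definition)."""
    if len(quarterly_revenue) < 8:
        raise ValueError("need at least eight quarters of revenue")
    latest = sum(quarterly_revenue[-4:])
    prior = sum(quarterly_revenue[-8:-4])
    return latest / prior - 1


def implied_prior_total(latest_total: float, growth: float) -> float:
    """Back out the previous four-quarter total from a trailing total and its growth rate."""
    return latest_total / (1 + growth)


# Using the two figures quoted by Synergy ($44B trailing total, 3% growth):
prior_total = implied_prior_total(44.0, 0.03)  # roughly $42.7B
ethernet_upper_bound = 0.60 * 44.0             # "almost 60%" means just under $26.4B
print(f"prior four quarters ~ ${prior_total:.1f}B; Ethernet switching < ${ethernet_upper_bound:.1f}B")
```

In other words, 3% growth on a $44 billion base means the market added only about $1.3 billion year over year, which is why the faster-growing Ethernet switching segment matters so much to the overall number.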

In Q3 North America remained the biggest region accounting for over 41% of worldwide revenues, followed by APAC, EMEA and Latin America. The APAC region has been the fastest growing and this was again the case in Q3, with growth being driven in large part by spending in China, which benefited Huawei in particular.

Cisco’s share of the total worldwide switching and router market was 51%, with shares in the individual segments ranging from 63% for enterprise routers to 38% for service provider routers. Cisco is followed by Huawei, Juniper, Nokia and HPE. Their overall switching and router market shares were in the 4-10% range in Q3. There is then a reasonably long tail of other vendors, with Arista and H3C being the most prominent challengers.

![S&R Q317[1]](http://www.globenewswire.com/news-release/2017/11/27/1206112/0/en/photos/494670/0/494670.jpg?lastModified=11%2F27%2F2017%2004%3A20%3A07&size=3)

“The big picture is that total switching and router revenues are still growing and Cisco continues to control half of the market,” said John Dinsdale, a Chief Analyst at Synergy Research Group. “Some view SDN and NFV as existential threats to Cisco’s core business, with own-design networking gear from the hyperscale cloud providers posing another big challenge. While these are genuine issues which erode growth opportunities for networking hardware vendors, there are few signs that these are substantially impacting Cisco’s competitive market position in the short term.”

Contact Info:

To speak to a Synergy analyst or to find out more about how to access Synergy’s market data, please contact Heather Gallo at 775-852-3330 extension 101.

New ITU-T Standards for IMT 2020 (5G) + 3GPP Core Network Systems Architecture

New ITU-T standards related to “5G”:

ITU-T has reached first-stage approval (‘consent’ level) of three new international standards defining the requirements for IMT-2020 (“5G”) network systems as they relate to network operation, softwarization and fixed-mobile convergence.

The standards were developed by ITU-T’s standardization expert group for future networks, ITU-T Study Group 13.

Note: The first-stage approvals come in parallel with ITU-T Study Group 13’s establishment of a new ITU Focus Group to study machine learning in 5G systems.

End-to-end flexibility will be one of the defining features of 5G networks. This flexibility will result in large part from the introduction of network softwarization, the ability to create highly specialized network slices using advanced Software-Defined Networking (SDN), Network Function Virtualization (NFV) and cloud computing capabilities.
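Slicing is easier to picture with a concrete slice identifier. The ITU-T documents below describe slicing requirements; the identifier actually carried in the 5G core is 3GPP’s S-NSSAI (TS 23.501), an 8-bit Slice/Service Type (SST) plus an optional 24-bit Slice Differentiator (SD). The sketch below models and encodes it; the two example slices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Standardized Slice/Service Type values from 3GPP TS 23.501 (Release 15).
STANDARD_SST = {1: "eMBB", 2: "URLLC", 3: "MIoT"}


@dataclass(frozen=True)
class SNssai:
    """Single Network Slice Selection Assistance Information: SST plus optional SD."""
    sst: int                  # Slice/Service Type, 8 bits
    sd: Optional[int] = None  # Slice Differentiator, 24 bits, operator-defined

    def __post_init__(self):
        if not 0 <= self.sst <= 0xFF:
            raise ValueError("SST must fit in 8 bits")
        if self.sd is not None and not 0 <= self.sd <= 0xFFFFFF:
            raise ValueError("SD must fit in 24 bits")

    def to_bytes(self) -> bytes:
        """SST octet, followed by the three SD octets when an SD is present."""
        encoded = bytes([self.sst])
        if self.sd is not None:
            encoded += self.sd.to_bytes(3, "big")
        return encoded

    def slice_type(self) -> str:
        return STANDARD_SST.get(self.sst, "not standardized")


# Two hypothetical slices an operator might run on one shared infrastructure.
video_slice = SNssai(sst=1, sd=0x000001)
factory_slice = SNssai(sst=2, sd=0x00ABCD)
```

The SD lets an operator run several slices of the same type (for example, two eMBB slices for different customers) side by side.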

The three new ITU-T standards are the following:

- ITU Y.3101 “Requirements of the IMT-2020 network” describes the features of 5G networks necessary to ensure efficient 5G deployment and high network flexibility.

- ITU Y.3150 “High-level technical characteristics of network softwarization for IMT-2020” describes the value of slicing in both horizontal and vertical, application-specific environments.

- ITU Y.3130 “Requirements of IMT-2020 fixed-mobile convergence” calls for unified user identity, unified charging, service continuity, guaranteed support for high quality of service, control plane convergence and smart management of user data.

ITU’s work on “International Mobile Telecommunications for 2020 and beyond (IMT-2020)” defines the framework and overall objectives of the 5G standardization process as well as the roadmap to guide this process to its conclusion by 2020.

ITU’s Radiocommunication Sector (ITU-R) is coordinating the international standardization and identification of spectrum for 5G mobile development. ITU’s Telecommunications Standardization Sector (ITU-T) is playing a similar convening role for the technologies and architectures of the wireline elements of 5G systems.

ITU standardization work on the wireline elements of 5G systems continues to accelerate.

ITU-T Study Group 15 (Transport, access and home networks) is developing a technical report on 5G requirements associated with backbone optical transport networks. ITU-T Study Group 11 (Protocols and test specifications) is studying the 5G control plane, relevant protocols and related testing methodologies. ITU-T Study Group 5 (Environment and circular economy) has assigned priority to its emerging study of the environmental requirements of 5G systems.

ITU-T Study Group 13 (Future networks), ITU’s lead group for 5G wireline studies, continues to support the shift to software-driven network management and orchestration. The group is progressing draft 5G standards addressing subjects including network architectures, network capability exposure, network slicing, network orchestration, network management-control, and frameworks to ensure high quality of service.

……………………………………………………………………………………..

The “5G” wireline standards developed by ITU-T Study Group 13 and approved in 2017 include:

- ITU Y.3071 “Data Aware Networking (Information Centric Networking) – Requirements and Capabilities” will support ultra-low latency 5G communications by enabling proactive in-network data caching and limiting redundant traffic in core networks.

- ITU Y.3100 “Terms and definitions for IMT-2020 network” provides a foundational set of terminology to be applied universally across 5G-related standardization work.

- ITU Y.3111 “IMT-2020 network management and orchestration framework” establishes a framework and related principles for the design of 5G networks.

- ITU Y.3310 “IMT-2020 network management and orchestration requirements” describes the capabilities required to support emerging 5G services and applications.

- Supplement 44 to the ITU Y.3100 series “Standardization and open source activities related to network softwarization of IMT-2020” summarizes open-source and standardization initiatives relevant to ITU’s development of standards for network softwarization.
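The in-network caching idea behind Y.3071 can be illustrated with a name-keyed content store at a network node. This is a hypothetical sketch, not the mechanism Y.3071 specifies: a least-recently-used cache plus a `prefetch` hook standing in for “proactive” caching. The counter `upstream_fetches` counts requests that had to travel across the core network.

```python
from collections import OrderedDict
from typing import Callable


class ContentStore:
    """Name-keyed LRU content cache at a network node (hypothetical illustration)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._items = OrderedDict()
        self.hits = 0
        self.upstream_fetches = 0  # requests that had to cross the core network

    def _insert(self, name: str, data: bytes) -> None:
        self._items[name] = data
        self._items.move_to_end(name)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict least recently used

    def get(self, name: str, fetch_upstream: Callable[[str], bytes]) -> bytes:
        if name in self._items:
            self._items.move_to_end(name)
            self.hits += 1
            return self._items[name]
        self.upstream_fetches += 1
        data = fetch_upstream(name)
        self._insert(name, data)
        return data

    def prefetch(self, name: str, fetch_upstream: Callable[[str], bytes]) -> None:
        """Proactively pull content expected to be popular before anyone asks."""
        if name not in self._items:
            self.upstream_fetches += 1
            self._insert(name, fetch_upstream(name))


def origin(name: str) -> bytes:
    return f"content for {name}".encode()


store = ContentStore(capacity=2)
store.prefetch("/match/highlights", origin)
for request in ["/match/highlights", "/match/highlights", "/news/today", "/match/highlights"]:
    store.get(request, origin)
```

Here four user requests cause only two trips across the core (one prefetch, one miss), and the three requests for the prefetched item are served locally with no added core latency.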

Reference:

http://news.itu.int/5g-update-new-itu-standards-network-softwarization-fixed-mobile-convergence/

…………………………………………………………….

“5G” Core Network Functions & Service-Based Architecture:

The primary focus of ITU-R WP5D IMT-2020 standardization efforts is on the radio aspects (as per its charter). That includes the Radio Access Network (RAN)/Radio Interface Technology (RIT), spectral efficiency, latency, frequencies, etc.

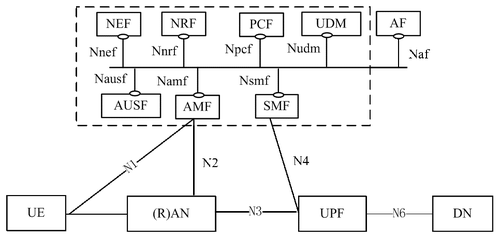

To actually deliver services over a 5G RAN, a system architecture and core network are required. The core network provides functions such as authentication, session management, mobility management, forwarding of user data, and (possibly) virtualization of network functions.

3GPP Technical Specification (TS) 23.501 — “System Architecture for the 5G System” — is more commonly referred to as the Service-Based Architecture (SBA) for the 5G Core network. It uses service-based interfaces between control-plane functions, while user-plane functions connect over point-to-point links. This is shown in the figure below. The service-based interfaces will use HTTP 2.0 over TCP in the initial release, with QUIC transport being considered for later 3GPP releases.
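To show what a “service-based interface” looks like in practice, here is a sketch of the request an SMF might send to register itself with the Network Repository Function (NRF). The resource path and profile fields follow the Nnrf_NFManagement API in 3GPP TS 29.510 as best recalled here and should be checked against the specification; the NRF address, IP address and service instance ID are hypothetical. The sketch only builds the request and does not send it.

```python
import json
import uuid

# Hypothetical NRF address; the path follows the Nnrf_NFManagement API (TS 29.510).
NRF_API_ROOT = "http://nrf.example.internal:8080"


def build_nf_register(api_root: str, nf_type: str, ipv4: str, service_names: list):
    """Return (method, url, body) for registering an NF profile with the NRF."""
    nf_instance_id = str(uuid.uuid4())
    profile = {
        "nfInstanceId": nf_instance_id,
        "nfType": nf_type,
        "nfStatus": "REGISTERED",
        "ipv4Addresses": [ipv4],
        "nfServices": [
            {
                "serviceInstanceId": f"{name}-0",  # hypothetical naming scheme
                "serviceName": name,
                "versions": [{"apiVersionInUri": "v1", "apiFullVersion": "1.0.0"}],
                "scheme": "http",
                "nfServiceStatus": "REGISTERED",
            }
            for name in service_names
        ],
    }
    url = f"{api_root}/nnrf-nfm/v1/nf-instances/{nf_instance_id}"
    return "PUT", url, json.dumps(profile)


method, url, body = build_nf_register(NRF_API_ROOT, "SMF", "10.0.0.5", ["nsmf-pdusession"])
```

Actually sending the request would need an HTTP/2-capable client, since these interfaces run over HTTP/2. Once registered, other network functions can discover the SMF’s `nsmf-pdusession` service through the NRF instead of relying on a statically configured point-to-point link.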

There are many aspects to this, but the Light Reading white paper “Service-Based Architecture for 5G Core Networks” highlights:

- How the idea of “network function services” (3GPP terminology) aligns with the micro-services based view of network service composition

- How operators may take advantage of decoupled control- and user-plane to scale performance

- How the design might enable operators to deploy 5GC functions at edge locations, such as central offices, stadiums or enterprise campuses
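The last two points fit together: the SMF (control plane) chooses which UPF (user plane) anchors a session, so UPFs can be placed at edge sites and scaled independently of the control functions. The selection policy, site names and load threshold below are hypothetical; real SMFs weigh many more inputs (for example, the data network and the slice).

```python
from dataclasses import dataclass


@dataclass
class Upf:
    name: str
    site: str    # deployment location, e.g. "core-dc", "central-office-3", "stadium"
    load: float  # current utilization, 0.0 (idle) to 1.0 (full)


def select_upf(upfs, user_site, max_load=0.8):
    """Hypothetical SMF policy: prefer a UPF at the user's site, else the least-loaded one."""
    available = [u for u in upfs if u.load < max_load]
    if not available:
        raise RuntimeError("no UPF has spare capacity")
    local = [u for u in available if u.site == user_site]
    return min(local or available, key=lambda u: u.load)


upfs = [
    Upf("upf-core", "core-dc", 0.30),
    Upf("upf-stadium", "stadium", 0.50),
    Upf("upf-co3", "central-office-3", 0.90),
]
```

A user at the stadium is anchored locally, while a user at the overloaded central office falls back to the core UPF. Adding user-plane capacity is then just a matter of appending another `Upf` entry, with no change to the control plane.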

The first 5G core standards (really specifications, because 3GPP is not a formal standards body) are scheduled to be included in 3GPP Release 15, which “freezes” in June 2018 and will be formally approved three months later. This will be a critical release for the industry that will set the development path of the 5G system architecture for years to come.

Download white paper: Service-Based Architecture for 5G Core Networks

Editor’s Note:

From http://www.3gpp.org/specifications:

“The 3GPP Technical Specifications and Technical Reports have, in themselves, no legal standing. They only become ‘official’ (standards) when transposed into corresponding publications of the Partner Organizations (or the national / regional standards body acting as publisher for the Partner).”

References:

http://www.lightreading.com/mobile/5g/5g-core-and-the-service-based-architecture/a/d-id/738456

https://img.lightreading.com/downloads/Service-Based-Architecture-for-5G-Core-Networks.pdf

LoRaWAN gains momentum: NEC LoRaWAN server + New Zealand nationwide network

1. NEC has launched a new network server that complies with LoRaWAN (the LoRa Alliance’s MAC-layer protocol, which runs over the LoRa PHY) to help telecom carriers accelerate the creation of new IoT services. Working through LoRaWAN gateways, the server implements device identification, data rate control and channel allocation for LoRaWAN-compliant sensor devices. It also mediates data processing between each sensor device and the application server. Because the server supports flexible multi-tenant, multi-device control designed around the varied service models of communication carriers, it can provide LoRaWAN network services to numerous companies and service providers.

LoRaWAN Network Server Connection (image by NEC Corp.)
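The “data rate control” a LoRaWAN network server performs is typically adaptive data rate (ADR): the server looks at recent uplink SNRs and tells the device, via the LinkADRReq MAC command, to use a faster spreading factor or lower transmit power when the link has margin to spare. NEC does not say which algorithm its server uses. The sketch below follows the reference approach Semtech has published (maximum SNR over recent uplinks, a fixed installation margin, 3 dB steps), with TX power simplified to dBm and assumed limits.

```python
import math

# Demodulation SNR floor per spreading factor (values commonly cited for Semtech LoRa radios).
REQUIRED_SNR_DB = {7: -7.5, 8: -10.0, 9: -12.5, 10: -15.0, 11: -17.5, 12: -20.0}


def adr_adjust(snr_history_db, sf, tx_power_dbm, installation_margin_db=10.0,
               min_sf=7, min_tx_dbm=2, max_tx_dbm=14, step_db=3.0):
    """Return a new (spreading factor, TX power) pair from recent uplink SNRs."""
    snr_margin = max(snr_history_db) - REQUIRED_SNR_DB[sf] - installation_margin_db
    n_step = math.floor(snr_margin / step_db)
    # Spare margin: first speed up (lower SF), then cut transmit power.
    while n_step > 0 and sf > min_sf:
        sf -= 1
        n_step -= 1
    while n_step > 0 and tx_power_dbm > min_tx_dbm:
        tx_power_dbm = max(min_tx_dbm, tx_power_dbm - step_db)
        n_step -= 1
    # Negative margin: raise transmit power (slowing down is left to the device).
    while n_step < 0 and tx_power_dbm < max_tx_dbm:
        tx_power_dbm = min(max_tx_dbm, tx_power_dbm + step_db)
        n_step += 1
    return sf, tx_power_dbm
```

For example, a device at SF12 whose best recent uplink arrived at +5 dB SNR has 15 dB of spare margin and is moved all the way to SF7, which shortens its airtime and saves battery.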

…………………………………………………………………………..

In addition, its WebAPI capability makes it interoperable with a wide range of service applications using LoRa. This facilitates the utilization of data collected from sensor devices.

“This new server enables new IoT services to be flexibly provided to telecommunications carriers in combination with gateways and end-devices,” said Shigeru Okuya, senior vice president of NEC.

“NEC aims to provide LoRaWAN compliant solutions to companies around the world in the coming years as part of accelerating the creation of new IoT services and improving user convenience,” he added.

“NEC’s network server integrated with Semtech’s LoRa (PHY) Technology will give operators a competitive advantage that will contribute to society,” said Marc Pegulu, General Manager and Vice President of Semtech’s Wireless and Sensing Products Group.

“LoRa Technology offers long-range, low-power capabilities for next-generation IoT applications in vertical markets, including smart cities, smart building, smart agriculture, smart metering, and smart supply chain and logistics.”

The new NEC LoRaWAN servers will start shipping to IoT WAN connectivity providers in December.

http://www.nec.com/en/press/201711/global_20171115_01.html

https://www.telecomasia.net/content/nec-unveils-lorawan-compliant-network-server

2. New Zealand Nationwide LoRaWAN by Spark

New Zealand’s Spark has contracted French IoT network solutions specialist Kerlink to support a nationwide LoRaWAN rollout in the twin island nation.

Spark, the leading digital services provider in New Zealand, has already deployed the low power wide area (LPWA) network in parts of the country, and has signed on initial customers including farmer co-operatives Farmlands and Ballance Agri-Nutrients.

These companies are using the network to provide farmers with real-time information about their operations through an array of sensors.

“Spark already has created use cases that will demonstrate the LoRaWAN network’s energy-efficient, geolocation connectivity that is well suited for both the wide-open spaces and urban centers of New Zealand,” Kerlink Asia Pacific sales director Arnaud Boulay said.

The vendor is providing IoT stations that support bidirectional data exchange and geolocation capability and operate on the 923-MHz industrial, scientific, and medical (ISM) radio band.
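LoRaWAN’s energy efficiency and range both come down to how long each uplink occupies the air. The sketch below computes LoRa time-on-air using the formula Semtech publishes in its SX127x radio documentation. It assumes 125 kHz bandwidth, coding rate 4/5, an 8-symbol preamble, explicit header and CRC on, and enables low-data-rate optimization when the symbol time exceeds 16 ms, as Semtech recommends.

```python
import math


def lora_time_on_air_ms(payload_bytes, sf, bw_hz=125_000, coding_rate=1,
                        preamble_symbols=8, explicit_header=True, crc=True,
                        low_data_rate_opt=None):
    """LoRa packet airtime in milliseconds (coding_rate 1..4 means 4/5..4/8)."""
    t_sym = (2 ** sf) / bw_hz  # symbol duration in seconds
    if low_data_rate_opt is None:
        low_data_rate_opt = t_sym > 0.016  # recommended when symbols exceed 16 ms
    t_preamble = (preamble_symbols + 4.25) * t_sym
    ih = 0 if explicit_header else 1
    de = 1 if low_data_rate_opt else 0
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    n_payload = 8 + max(math.ceil(numerator / (4 * (sf - 2 * de))) * (coding_rate + 4), 0)
    return (t_preamble + n_payload * t_sym) * 1000


for sf in (7, 10, 12):
    print(f"SF{sf}: {lora_time_on_air_ms(10, sf):.1f} ms for a 10-byte payload")
```

A 10-byte reading takes about 41 ms at SF7 but roughly 991 ms at SF12. That is why networks push each device toward the lowest spreading factor its link margin allows, and why regional airtime limits in the ISM band matter for sensor designs.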

Other early adopters include the National Institute of Water and Atmospheric Research (NIWA), and Spark is targeting customers in key sectors such as health, safety, transportation, asset tracking and smart cities.

Kerlink is a co-founder and board member of the LoRa Alliance, and has this year launched nationwide rollouts in India with Tata Communications and in Argentina.


http://www.4-traders.com/KERLINK-27472140/news/Kerlink-Supports-Sparks-Nationwide-IoT-Network-Rollout-In-New-Zealand-25559327/

https://www.telecomasia.net/content/spark-deploying-nationwide-lorawan-network

Qualcomm to ISPs: Mesh WiFi networking via IEEE 802.11ax is the future of smart homes

Mesh networking can centralize IoT and other devices in smart homes and make them easier to manage, according to Rahul Patel, head of Qualcomm’s Connectivity Business unit. Carrier-class mesh networking could resolve connection issues, said Patel, who strongly suggests that internet service providers (ISPs) offer a mesh networking service.

- Market research firm Gartner predicts that 8.4 billion connected “Things” will be in use in 2017, up 31 percent from 2016.

- A GSMA report “The Impact of the Internet of Things: The Connected Home” suggests that up to 50 connected or Internet of Things (IoT) devices will be in use in the average connected home by 2020.

According to Qualcomm’s Wi-Fi router consumer survey of 1,500 respondents from the UK, France, and Germany this year, 50% said they use a device in three different rooms simultaneously. [Those folks must have a lot of people living in their homes with separate rooms!]

Today, home broadband networks sometimes buckle under the weight of numerous mobile, IoT, and connected devices. Information streams can become confused, bottlenecks occur, and ISP throttling can degrade efficiency and reliability (expect more of this as the FCC has just repealed net neutrality rules).

Qualcomm’s mesh technologies, including Wi-Fi SON, are already used by vendors including Eero, Google Wi-Fi, TP-Link, Luma, and Netgear.

Qualcomm is directing its mesh Wi-Fi standards efforts within the IEEE 802.11ax task group, where it serves as co-vice chair. That specification is being designed to improve overall spectral efficiency, especially in dense deployment scenarios. It’s predicted to have a top speed of around 10 Gb/s and to operate in the existing 2.4 GHz and 5 GHz spectrum bands.
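The “around 10 Gb/s” figure is a PHY-layer peak, and it can be reproduced from the draft 802.11ax parameters: a 160 MHz channel (1960 data subcarriers), 1024-QAM (10 bits per subcarrier) at code rate 5/6, 8 spatial streams, and a 12.8 µs OFDM symbol with the shortest 0.8 µs guard interval. The sketch below does that arithmetic; real-world throughput is far lower once MAC overhead and contention are included.

```python
def he_phy_rate_mbps(data_subcarriers, bits_per_subcarrier, code_rate, spatial_streams,
                     symbol_us=12.8, guard_interval_us=0.8):
    """802.11ax (HE) PHY data rate in Mb/s: data bits per OFDM symbol / symbol duration."""
    symbol_duration_us = symbol_us + guard_interval_us
    bits_per_symbol = data_subcarriers * bits_per_subcarrier * code_rate * spatial_streams
    return bits_per_symbol / symbol_duration_us  # bits per microsecond == Mb/s


per_stream = he_phy_rate_mbps(1960, 10, 5 / 6, 1)
peak = he_phy_rate_mbps(1960, 10, 5 / 6, 8)
print(f"{per_stream:.0f} Mb/s per stream, {peak / 1000:.1f} Gb/s with 8 streams")
```

Each stream carries about 1,201 Mb/s, so eight streams reach roughly 9.6 Gb/s. A typical two-stream client device would top out near 2.4 Gb/s, which is one reason mesh products focus on aggregate capacity rather than single-device speed.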

Qualcomm has created a 12-stream mesh Wi-Fi platform powered by a quad-core iCMOS microprocessor with a 64-bit architecture.

Editor’s Note:

IEEE 802.11ax draft 3.0 is scheduled to go out for IEEE 802.11 Working Group Letter ballot in May 2018 with Sponsor Ballot scheduled for May 2019. Please see references for further details.

…………………………………………………………………………………………………………………….

Patel says that development is already in play to use the platform, and mesh will be the “next big thing” for the Wi-Fi industry, with products expected to appear in the market based on Qualcomm technologies in the second half of 2018.

Carrier-class mesh networking could be used to map entire neighborhoods, in which connectivity problems can be quickly detected and fixed without constant customer reports, complaints, and costly engineer footfall.

As consumers expect more from their home Wi-Fi, however, they also expect ISPs to make sure systems are in working order and deliver what they promise.

“The operator is shouldering the burden of fixing issues in the home,” Patel says. “If they don’t, cloud providers such as Google will take over.”

If ISPs do not rise to the challenge, consumers may choose to go to a cloud provider instead.

“That [home] traffic is getting piped into their clouds rather than BT or Sky, and so ISPs are losing out on the traffic they are piping into someone’s home,” Patel added. “You as an operation are perceived to be the one to support the Wi-Fi in the home.”

“If you (ISPs) don’t move fast, you lose out on the home becoming a cloud providers’ and have no control over what happens in the home,” Patel said.

References:

http://www.zdnet.com/article/mesh-networking-is-the-future-of-iot-smart-homes/

http://www.ieee802.org/11/Reports/tgax_update.htm

AT&T Deploys LTE Licensed Assisted Access (LTE-LAA) Technology; Plans 5G Evolution

AT&T has introduced a high-speed “4G” service in Indianapolis, IN, based on LTE Licensed Assisted Access (LTE-LAA), which aggregates unlicensed spectrum with AT&T’s licensed LTE spectrum. According to AT&T, it will provide theoretical gigabit speeds in some areas of the city. LTE-LAA reached a peak of 979 Mbps in a San Francisco, CA trial.

“Demand continues to grow at a rapid pace on our network,” said Bill Soards, President of AT&T Indiana, in a press release. “That’s why offering customers the latest technologies and increased wireless capacity by combining licensed and unlicensed spectrum is an important milestone.”

The U.S. mega telco recently announced plans to roll out its 5G Evolution program in Minneapolis. That initiative, which aims to provide networks with the capability to support 5G when it is ready, is already in use in parts of Indianapolis and in Austin, TX. It uses LTE-Advanced features such as 256 QAM, 4×4 MIMO and 3-way carrier aggregation.
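Why do 256 QAM, 4×4 MIMO and carrier aggregation add up to “gigabit-class” LTE? The rough arithmetic below multiplies resource elements per second by bits per symbol, spatial layers and carriers. The 25% control/reference-signal overhead and 0.93 code rate are assumed round numbers rather than 3GPP values, every carrier is assumed to be a full 20 MHz (100 resource blocks), and AT&T’s actual carrier mix is not stated in the release.

```python
def lte_peak_rate_mbps(carriers, layers, bits_per_symbol, resource_blocks=100,
                       overhead=0.25, code_rate=0.93):
    """Approximate LTE downlink peak rate in Mb/s (overhead and code rate are assumptions)."""
    subcarriers = resource_blocks * 12  # 12 subcarriers per resource block
    symbols_per_s = 14 * 1000           # normal cyclic prefix: 14 OFDM symbols per 1 ms subframe
    raw_bps = subcarriers * symbols_per_s * bits_per_symbol * layers
    return carriers * raw_bps * (1 - overhead) * code_rate / 1e6


baseline = lte_peak_rate_mbps(carriers=1, layers=2, bits_per_symbol=6)  # 2x2 MIMO, 64 QAM
upgraded = lte_peak_rate_mbps(carriers=3, layers=4, bits_per_symbol=8)  # 3CA, 4x4 MIMO, 256 QAM
print(f"baseline ~{baseline:.0f} Mb/s, upgraded ~{upgraded:.0f} Mb/s")
```

Each feature is a multiplier: 256 QAM carries 8 bits per symbol instead of 6 (a 1.33x gain), 4×4 MIMO doubles the layers, and three carriers triple the bandwidth. Under these assumptions the peak moves from roughly 140 Mb/s to about 1.1 Gb/s.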

AT&T says that it invested $350 million in its wired and wireless network infrastructure in Indianapolis between 2014 and 2016.

References:

http://about.att.com/story/commercial_lte_licensed_assisted_access_indianapolis.html

ITU-R WP5D: Guidelines for evaluation of radio interface technologies for IMT-2020

ITU-R Working Party 5D: draft new Report ITU-R M.[IMT-2020.EVAL]

“Guidelines for evaluation of radio interface technologies for IMT-2020”

Backgrounder:

Among other work items, the October 2017 ITU-R WP5D meeting discussed proposals to correct minor errors and insert editorial improvements in draft new Report ITU-R M.[IMT-2020.EVAL]. Proposals to introduce some corrections, including the range of working distance in Indoor Hotspot channel models, were agreed and reflected in the new version of the document.
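Why a channel model’s “range of working distance” matters: path-loss formulas are only fitted over a certain distance range, and evaluations that use them outside it produce meaningless numbers. As a stand-in (the ITU-R draft defines its own Indoor Hotspot models, which are not reproduced here), the sketch uses the indoor-office line-of-sight path-loss formula from 3GPP TR 38.901, which is stated to be valid for 3D distances of 1 m to 150 m.

```python
import math


def inh_los_path_loss_db(d3d_m: float, fc_ghz: float) -> float:
    """3GPP TR 38.901 InH-Office LOS path loss in dB; valid only for 1 m <= d3D <= 150 m."""
    if not 1.0 <= d3d_m <= 150.0:
        raise ValueError("d3D outside the model's working distance (1-150 m)")
    return 32.4 + 17.3 * math.log10(d3d_m) + 20 * math.log10(fc_ghz)


print(f"{inh_los_path_loss_db(10, 4.0):.1f} dB at 10 m and 4 GHz")
```

Rejecting out-of-range distances, rather than silently extrapolating, keeps different evaluation groups’ simulations comparable. Comparability is exactly what the corrections to the Indoor Hotspot working distance are meant to protect.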

Selected sections of this EVAL draft report follow (see NOTE at the end of the post and Comment in the box below it).

Introduction:

Resolution ITU-R 56 defines a new term “IMT-2020” applicable to those systems, system components, and related aspects that provide far more enhanced capabilities than those described in Recommendation ITU-R M.1645.

In this regard, International Mobile Telecommunications-2020 (IMT-2020) systems are mobile systems that include the new capabilities of IMT that go beyond those of IMT-Advanced.

Recommendation ITU-R M.2083 “IMT Vision – Framework and overall objectives of the future development of IMT for 2020 and beyond” identifies capabilities for IMT‑2020 which would make IMT-2020 more efficient, fast, flexible, and reliable when providing diverse services in the intended usage scenarios.

The usage scenario of IMT-2020 will extend to enhanced mobile broadband (eMBB), massive machine type communications (mMTC) and ultra-reliable and low latency communications (URLLC).

IMT-2020 systems support low to high mobility applications and much enhanced data rates in accordance with user and service demands in multiple user environments. IMT‑2020 also has capabilities for enabling massive connections for a wide range of services, and guarantee ultra‑reliable and low latency communications for future deployed services even in critical environments.

The capabilities of IMT-2020 include:

– very high peak data rate;

– very high and guaranteed user experienced data rate;

– quite low air interface latency;

– quite high mobility while providing satisfactory quality of service;

– enabling massive connection in very high density scenario;

– very high energy efficiency for network and device side;

– greatly enhanced spectral efficiency;

– significantly larger area traffic capacity;

– high spectrum and bandwidth flexibility;

– ultra high reliability and good resilience capability;

– enhanced security and privacy.

Scope:

These features enable IMT-2020 to address evolving user and industry needs.

The capabilities of IMT-2020 systems are being continuously enhanced in line with user and industry trends, and consistent with technology developments.

This Report provides guidelines for the procedure, the methodology and the criteria (technical, spectrum and service) to be used in evaluating the candidate IMT-2020 radio interface technologies (RITs) or Set of RITs (SRITs) for a number of test environments. These test environments are chosen to simulate closely the more stringent radio operating environments. The evaluation procedure is designed in such a way that the overall performance of the candidate RITs/SRITs may be fairly and equally assessed on a technical basis. It ensures that the overall IMT‑2020 objectives are met.

This Report provides, for proponents, developers of candidate RITs/SRITs and independent evaluation groups, the common evaluation methodology and evaluation configurations to evaluate the candidate RITs/SRITs and system aspects impacting the radio performance.

This Report allows a degree of freedom to encompass new technologies. The actual selection of the candidate RITs/SRITs for IMT-2020 is outside the scope of this Report.

The candidate RITs/SRITs will be assessed based on those evaluation guidelines. If necessary, additional evaluation methodologies may be developed by each independent evaluation group to complement the evaluation guidelines. Any such additional methodology should be shared between independent evaluation groups and sent to the Radiocommunication Bureau as information in the consideration of the evaluation results by ITU-R and for posting under additional information relevant to the independent evaluation group section of the ITU-R IMT-2020 web page (http://www.itu.int/en/ITU-R/study-groups/rsg5/rwp5d/imt-2020/Pages/submission-eval.aspx)

Evaluation guidelines:

IMT-2020 can be considered from multiple perspectives: users, manufacturers, application developers, network operators, service and content providers, and, finally, the usage scenarios – which are extensive. Therefore, candidate RITs/SRITs for IMT-2020 must be capable of being applied in a much broader variety of usage scenarios and supporting a much broader range of environments, significantly more diverse service capabilities as well as technology options. Consideration of every variation to encompass all situations is, however, not possible; nonetheless the work of the ITU-R has been to determine a representative view of IMT‑2020 consistent with the process defined in Resolution ITU-R 65, Principles for the process of future development of IMT‑2020 and beyond, and the key technical performance requirements defined in Report ITU-R M.[IMT-2020.TECH PERF REQ] – Minimum requirements related to technical performance for IMT-2020 radio interface(s).

The parameters presented in this Report are for the purpose of consistent definition, specification, and evaluation of the candidate RITs/SRITs for IMT-2020 in ITU-R in conjunction with the development of Recommendations and Reports such as the framework, key characteristics and the detailed specifications of IMT-2020. These parameters have been chosen to be representative of a global view of IMT-2020 but are not intended to be specific to any particular implementation of an IMT-2020 technology. They should not be considered as the values that must be used in any deployment of any IMT-2020 system nor should they be taken as the default values for any other or subsequent study in ITU or elsewhere.

Further consideration has been given in the choice of parameters to balancing the assessment of the technology with the complexity of the simulations while respecting the workload of an evaluator or a technology proponent.

This procedure deals only with evaluating radio interface aspects. It is not intended for evaluating system aspects (including those for satellite system aspects).

The following principles are to be followed when evaluating radio interface technologies for IMT‑2020:

− Evaluations of proposals can be through simulation, analytical and inspection procedures.

− The evaluation shall be performed based on the submitted technology proposals, and should follow the evaluation guidelines, using the evaluation methodology and the evaluation configurations defined in this Report.

− Evaluations through simulations contain both system-level and link-level simulations. Independent evaluation groups may use their own simulation tools for the evaluation.

− In case of evaluation through analysis, the evaluation is to be based on calculations which use the technical information provided by the proponent.

− In case of evaluation through inspection the evaluation is to be based on statements in the proposal.

The following options are foreseen for proponents and independent external evaluation groups doing the evaluations.

− Self-evaluation must be a complete evaluation (to provide a fully complete compliance template) of the technology proposal.

− An external evaluation group may perform complete or partial evaluation of one or several technology proposals to assess the compliance of the technologies with the minimum requirements of IMT-2020.

− Evaluations covering several technology proposals are encouraged.

Overview of characteristics for evaluation:

The characteristics chosen for evaluation are explained in detail in § 3 of Report ITU-R M.[IMT‑2020.SUBMISSION] – Requirements, evaluation criteria and submission templates for the development of IMT‑2020, including service aspect requirements, spectrum aspect requirements, and technical performance requirements, the last of which are based on Report ITU‑R M.[IMT-2020.TECH PERF REQ]. These are summarized in Table 6-1, together with their high-level assessment method:

− Simulation (including system-level and link-level simulations, according to the principles of the simulation procedure given in § 7.1 below).

− Analytical (via calculation or mathematical analysis).

− Inspection (by reviewing the functionality and parameterization of the proposal).

……………………………………………………………………………………………………………..

TABLE 6-1 Summary of evaluation methodologies:

| Characteristic for evaluation | High-level assessment method | Evaluation methodology in this Report | Related section of Reports ITU-R M.[IMT-2020.TECH PERF REQ] and ITU-R M.[IMT‑2020.SUBMISSION] |
|---|---|---|---|
| Peak data rate | Analytical | § 7.2.2 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.1 |
| Peak spectral efficiency | Analytical | § 7.2.1 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.2 |
| User experienced data rate | Analytical for single band and single layer; simulation for multi-layer | § 7.2.3 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.3 |
| 5th percentile user spectral efficiency | Simulation | § 7.1.2 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.4 |
| Average spectral efficiency | Simulation | § 7.1.1 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.5 |
| Area traffic capacity | Analytical | § 7.2.4 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.6 |
| User plane latency | Analytical | § 7.2.6 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.7.1 |
| Control plane latency | Analytical | § 7.2.5 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.7.2 |
| Connection density | Simulation | § 7.1.3 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.8 |
| Energy efficiency | Inspection | § 7.3.2 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.9 |
| Reliability | Simulation | § 7.1.5 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.10 |
| Mobility | Simulation | § 7.1.4 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.11 |
| Mobility interruption time | Analytical | § 7.2.7 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.12 |
| Bandwidth | Inspection | § 7.3.1 | Report ITU-R M.[IMT-2020.TECH PERF REQ], § 4.13 |
| Support of wide range of services | Inspection | § 7.3.3 | Report ITU-R M.[IMT-2020.SUBMISSION], § 3.1 |
| Supported spectrum band(s)/range(s) | Inspection | § 7.3.4 | Report ITU-R M.[IMT-2020.SUBMISSION], § 3.2 |

Section 7 defines the evaluation methodology for assessing each of these criteria.
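To give a feel for what an "Analytical" assessment in Table 6-1 involves, here is a minimal sketch of two such calculations. It assumes the commonly used forms peak data rate = sum over aggregated carriers of bandwidth × peak spectral efficiency, and area traffic capacity = TRxP density × bandwidth × average spectral efficiency; the authoritative definitions are in § 7.2 of the Report. The 20 Gbit/s and 30 bit/s/Hz figures are the downlink minimum requirements from the TECH PERF REQ report; every other input is a hypothetical example value.

```python
# Sketch of two analytical IMT-2020 calculations (assumed formulas, see lead-in).
# All inputs except the 20 Gbit/s / 30 bit/s/Hz requirement values are hypothetical.


def peak_data_rate_bps(carriers: list[tuple[float, float]]) -> float:
    """Sum of bandwidth (Hz) x peak spectral efficiency (bit/s/Hz) over aggregated carriers."""
    return sum(bw_hz * se for bw_hz, se in carriers)


def bandwidth_needed_hz(target_rate_bps: float, peak_se: float) -> float:
    """Bandwidth a single carrier would need to reach a target peak data rate."""
    return target_rate_bps / peak_se


def area_traffic_capacity(trxp_per_m2: float, bandwidth_hz: float, avg_se: float) -> float:
    """Area traffic capacity in bit/s/m^2 (TRxP density x bandwidth x average SE)."""
    return trxp_per_m2 * bandwidth_hz * avg_se


if __name__ == "__main__":
    # Hypothetical: two aggregated 400 MHz carriers, each at 30 bit/s/Hz peak.
    rate = peak_data_rate_bps([(400e6, 30.0), (400e6, 30.0)])
    print(f"Peak data rate: {rate / 1e9:.1f} Gbit/s")
    # Single-carrier bandwidth needed for 20 Gbit/s at 30 bit/s/Hz.
    print(f"Bandwidth needed: {bandwidth_needed_hz(20e9, 30.0) / 1e6:.1f} MHz")
    # Hypothetical indoor hotspot: one TRxP per 100 m^2, 200 MHz, 10 bit/s/Hz average.
    cap = area_traffic_capacity(1 / 100, 200e6, 10.0)
    print(f"Area traffic capacity: {cap / 1e6:.1f} Mbit/s/m^2")
```

The point of the second call is that meeting the 20 Gbit/s downlink target at the minimum peak spectral efficiency implies roughly 667 MHz of spectrum, which is why wide bandwidths feature so prominently in IMT-2020 proposals.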

………………………………………………………………………………………………………….

IMPORTANT NOTE: The excerpts shown above are not final and subject to change at future ITU-R WP 5D meetings. The remainder of this draft report is much too detailed for any tech blog. Note that development of the detailed specs for the RIT/SRIT chosen won’t start till early 2020!

………………………………………………………………………………………

References:

https://www.itu.int/en/ITU-R/study-groups/rsg5/rwp5d/imt-2020/Pages/submission-eval.aspx

……………………………………………………………………………………………..

For a glimpse at what ITU-T and 3GPP are doing to standardize the network aspects/core network for IMT-2020, please refer to this post:

New ITU-T Standards for IMT 2020 (5G) + 3GPP Core Network Systems Architecture

Also note that 3GPP’s New Radio spec (3GPP Release 15) is ONLY one candidate for the IMT-2020 Radio Interface Technology (RIT). Several others are expected.

Gartner Group: SD-WAN Survey Yields Surprises

by Danellie Young | Ted Corbett | Lisa Pierce

Introduction:

A Gartner-conducted software-defined (SD)-WAN survey has identified the key drivers for SD-WAN adoption and preferences for managed services from non-carrier providers. Despite its relative immaturity, the perceived benefits create incentives for IT leaders responsible for networking to leap into SD-WAN pilots now.

Editor’s Notes:

- Please refer to our report on IHS-Markit’s analysis of the SD-WAN market. Cisco and VMware are the top two vendors due to their recent acquisitions of Viptela and VeloCloud, respectively. Cisco also bought Meraki, which provides an SD-WAN solution as well as business WiFi networks.

- According to survey data from Nemertes Research, enterprises are not discarding their MPLS networks as they deploy SD-WANs. “Fully 78% of organizations deploying SD-WAN have no plan to completely drop MPLS from their WAN,” Nemertes’ John Burke reports. “However, most intend to reduce and restrict their use of it (MPLS), if not immediately then over the next few years.”

- “Although it brings a lot of benefits to the table, SD-WAN still uses the public Internet to connect your sites,” points out Network World contributor Mike C. Smith. “And once your packets hit the public Internet, you will not be able to guarantee low levels of packet loss, latency and jitter: the killers of real-time applications.”

…………………………………………………………………………………………………………………………..

Key Findings of Gartner Survey:

- Enterprise clients cite increased network availability, reliability and reduced WAN costs resulting from less-expensive transport as the top benefits of software-defined WAN.

- Enterprise clients are concerned about the large number of SD-WAN vendors and anticipate market consolidation, making some early choices risky.

- A lack of familiarity with the technology, the instability of the vendors, and skepticism about performance and reliability are the most common concerns when deploying SD-WAN.

- Nearly two-thirds of the organizations we surveyed prefer buying managed SD-WAN, demonstrating a preference for presales and postsales support. Their preferred type of managed service provider does not align with legacy carrier MSP adoption rates.

Recommendations:

To maximize new SD-WAN opportunities, infrastructure and operations leaders planning new networking architectures should:

- Include SD-WAN solutions on their shortlists if they’re aggressively migrating apps to the public cloud, building hybrid WANs, refreshing branch WAN equipment and/or renegotiating a managed network service contract.

- Consider a diverse range of SD-WAN management options; don’t just look at carrier offers to determine the best option available to meet enterprise requirements.

- Compare each vendor’s current features and roadmaps with enterprise requirements to develop a shortlist, and use pilots and customer references to confirm providers’ ability to deliver on the most desirable features and functionality.

- Focus pilots on specific, critical success factors and negotiate contract terms and conditions to support service configuration changes, fast site roll-out and granular application reporting.

- Negotiate flexible WAN or managed WAN services contract clauses to support evolution to SD-WAN when appropriate.

…………………………………………………………………………………….

Gartner has forecast SD-WAN to grow at a 59% compound annual growth rate (CAGR) through 2021 to become a $1.3 billion market (see Figure 1 and “Forecast: SD-WAN and Its Impact on Traditional Router and MPLS Services Revenue, Worldwide, 2016-2020”). Meanwhile, the overall branch office router market is forecast to post a −6.3% CAGR, and the legacy router segment a −28.1% CAGR, through 2020.
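Negative CAGRs compound just like positive ones, so the cumulative effect of the router forecasts is larger than the annual rates suggest. The sketch below assumes four compounding periods (2016 to 2020, matching the forecast title); that period count is an assumption, not something Gartner states here.

```python
def cumulative_factor(cagr: float, years: int) -> float:
    """End-of-period value relative to the start value, for a constant CAGR."""
    return (1.0 + cagr) ** years


if __name__ == "__main__":
    # Assumption: four annual compounding periods, 2016 -> 2020.
    for label, rate in [("Branch office routers", -0.063), ("Legacy routers", -0.281)]:
        factor = cumulative_factor(rate, 4)
        print(f"{label}: {factor:.2f}x the 2016 level")
```

Under that assumption, the branch router market ends up a bit over 20% smaller, while the legacy router segment shrinks to roughly a quarter of its 2016 size.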

SD-WAN equipment and services dramatically reduce the complexity associated with the management and configuration of WANs. They provide branch-office connectivity in a simpler and more cost-effective manner than traditional routers. These solutions distribute traffic across multiple WAN connections efficiently and dynamically, based on performance and/or application-based policies.
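The policy-based steering described above can be sketched as follows. This is a hypothetical illustration, not any vendor’s algorithm: the link measurements, the application thresholds, and the rule "cheapest link that meets the policy, otherwise the lowest-latency link" are all assumptions chosen to show the idea.

```python
from dataclasses import dataclass


@dataclass
class WanLink:
    """Measured performance of one WAN transport (hypothetical values)."""
    name: str
    latency_ms: float
    jitter_ms: float
    loss_pct: float
    cost_per_gb: float


@dataclass
class AppPolicy:
    """Per-application service-level thresholds (hypothetical)."""
    max_latency_ms: float
    max_jitter_ms: float
    max_loss_pct: float


def meets(link: WanLink, policy: AppPolicy) -> bool:
    """True if the link's current measurements satisfy every policy threshold."""
    return (link.latency_ms <= policy.max_latency_ms
            and link.jitter_ms <= policy.max_jitter_ms
            and link.loss_pct <= policy.max_loss_pct)


def select_link(links: list[WanLink], policy: AppPolicy) -> WanLink:
    """Cheapest link that satisfies the policy; if none does, the lowest-latency link."""
    eligible = [link for link in links if meets(link, policy)]
    if eligible:
        return min(eligible, key=lambda link: link.cost_per_gb)
    return min(links, key=lambda link: link.latency_ms)


if __name__ == "__main__":
    links = [
        WanLink("mpls", latency_ms=20, jitter_ms=2, loss_pct=0.1, cost_per_gb=10.0),
        WanLink("broadband", latency_ms=35, jitter_ms=8, loss_pct=0.5, cost_per_gb=1.0),
        WanLink("lte", latency_ms=60, jitter_ms=15, loss_pct=1.0, cost_per_gb=5.0),
    ]
    voice = AppPolicy(max_latency_ms=40, max_jitter_ms=5, max_loss_pct=0.5)
    backup = AppPolicy(max_latency_ms=200, max_jitter_ms=50, max_loss_pct=2.0)
    print("voice  ->", select_link(links, voice).name)   # mpls
    print("backup ->", select_link(links, backup).name)  # broadband
```

Voice traffic stays on MPLS because broadband’s jitter exceeds the voice threshold, while bulk backup traffic moves to the cheapest Internet link. This mirrors the Nemertes finding that enterprises keep MPLS while shifting other traffic off it.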

The survey data highlights that most of the respondent organizations are in the early stages of their SD-WAN projects. To qualify, respondents had to be involved in choosing, implementing and/or managing network services and equipment for their company’s sites, while their primary role in the organization was IT-focused or IT-business-focused. We intentionally searched for companies that plan to use or are using SD-WAN. Of those surveyed, 93% plan to use SD-WAN within two years or are piloting and deploying now, with approximately 73% in pilot or deployment mode. These results do not reflect actual market adoption rates, because Gartner estimates that between 1% and 5% of enterprises have deployed SD-WAN. Although the survey figures differ numerically from actual adoption, the qualitative feedback is compelling.

Figure: Survey responses by number of sites (respondents using SD-WAN; n = 21, a small sample size, so results are indicative; totals may not add up to 100% due to rounding).

Source: Gartner Group (November 2017)

……………………………………………………………………………………………………………………………….

SD-WAN Concerns

Enterprises cite their lack of deep technology familiarity as a key barrier to using SD-WAN. In fact, of those who plan for SD-WAN, nearly 50% have concerns about their lack of technical familiarity, followed by concerns over the stability of vendors and concerns about performance and reliability.

Editor’s Note: Surprisingly, enterprises don’t seem to be concerned with the lack of SD-WAN standards, which forces a single-vendor solution (i.e., vendor lock-in).

…………………………………………………………………………………

With more than 30 SD-WAN vendors in the market and consolidation accelerating, concern about vendor stability doesn’t come as a surprise.

Other key findings include:

- Vendor stability is a major concern. Among the 51% of respondents who selected performance and reliability as key drivers (n = 44), nearly half (45%) had concerns about the stability of the vendors.

- Many among the 50% who see agility as a key driver (n = 36) expressed concern about their lack of familiarity with the technology.

- Among organizations with fewer than 1,000 employees (n = 53), the most common concern is lack of familiarity with the technology (51%). Organizations with 1,000 to 9,999 employees (n = 38) find the ROI of the investment to be the most common challenge (50%).

- Among the EMEA respondents (n = 48), half were most concerned about the stability of the vendors, followed closely by concerns about proven performance and reliability.

To purchase the complete Gartner SD-WAN report, go to:

https://www.gartner.com/doc/3829464/survey-analysis-early-findings-yield

………………………………………………………………………………………………………………

References:

https://www.sdxcentral.com/sd-wan/definitions/software-defined-sdn-wan/

https://blogs.gartner.com/andrew-lerner/2017/06/03/sd-wan-is-going-mainstream/

Technology Insight for Software-Defined WAN [SD-WAN]

http://sd-wan.cloudgenix.com/Q217GartnerTechInsightforSD-WANSearch_registration.html

http://blog.ntt-sdwan.com/post/102ekiu/sd-wan-momentum-five-trends-to-look-out-for-in-2018

Broadband Forum’s vBG network spec targeted at SD-WANs; led by ONUG

Highlights of IDTechEx: IoT Connectivity Sessions and Exhibits: November 15-16, 2017

Introduction:

The Internet of Things (IoT) will connect existing systems and then augment those by connecting more things, thanks to wireless sensor networks and other technologies. Things on the ‘edge’ form mesh networks and can make their own automated decisions. This article reviews key messages from conference technical sessions on IoT connectivity and describes a new Wireless Mesh Sensor network which is an extension of IEEE 802.15.4.

Sessions Attended:

1. Overcoming Adoption Barriers To Achieve Mass IIoT Deployment, Iotium

Early adopters are realizing the complexities involved in scalable mass deployment of Industrial IoT. These include deployment complexities; security issues, from the hardware root of trust to the OS, network, cloud security and application vulnerabilities; and extensibility. The session focused on these three areas in depth to help attendees successfully deploy their own IIoT strategy.

2. Overcoming The Connectivity Challenge Limiting IoT Innovation, Helium

The hardware and application layers of IoT systems are supported by robust, mature markets, with devices tailored for any use case and pre-built infrastructure platforms from Microsoft, Google and AWS. But the connectivity layer, without which the entire system is useless, still has numerous challenges. Getting data from sensors to apps takes more knowledge and time than most staffs have. The speaker discussed a streamlined, secure approach to connectivity that aims to make building a wireless IoT network as easy as designing a mobile app, thereby removing the greatest barrier to mass IoT adoption.

3. Whitelabel The Future: How White Label Platforms Will Streamline The IoT Revolution, Pod Group

As expectations tend towards personalized, data-driven services, responding immediately to market changes is becoming a key differentiator, creating the need for mutual insight on both sides of the market. Whitelabelled platforms are an effective intermediary, allowing unprecedented levels of customer interaction and paving the way for truly end-to-end IoT systems.

Barriers to achieving a sustainable IoT business model:

- Businesses must have flexible resources and structures:

a) lacking tools to implement (new technology/billing)

b) organizational changes (retraining staff/expertise at top level)

- 55% of large enterprises are not pursuing IoT (Analysys Mason)

- Digital proficiency is lacking in 50% of companies (PricewaterhouseCoopers)

- IoT platforms can introduce users to systems as a whole and streamline management

There are several different types of IoT platforms:

- IoT Application Enablement Platform – in-field application (e.g., device) management

- Connectivity Management Platform (CMP) – management of network connections

- Back-end Infrastructure as a Service (IaaS) – hosting space and processing power

- Hardware-specific Platform – only works with one type of hardware

Many platforms are tied to a specific provider/device:

- ‘Agnostic’ platforms are ideal to integrate different types and retain adaptability (e.g., connectivity management integrating device management and billing capabilities).

- CMPs offer a range of services: managing global connections, introducing providers to clients, integration with hardware vendors, etc.

- CMPs focus on centralized network management, not on building new services.

- Application Enablement Platforms focus on device management/insight; their billing hierarchy enables new business services with additional layers, e.g., analytics.

What will the IoT landscape look like in the near future?

- Various connectivity technologies are competing, while platform technology and open source drive software/service innovation.

- A hybrid platform offers ease of management and a solid foundation for building recurring revenue from value-added services, ensuring the business is scalable and able to roll out services quickly.

- A capable platform shifts focus from day-to-day management to building new business models and recurring revenue streams.

- Whitelabel platforms help to implement new business models throughout the business, consolidate management of legacy and future systems, and build recurring revenue from end-to-end value-added services.

Choose the right platform for your business: ease of use, billing hierarchies and multi-technology integration are key to generating recurring revenue.

With a strong platform in place to future-proof devices and manage customer accounts and business, an enterprise can be part of the full IoT ecosystem, gaining value from every stage.

4. From Disappointing To Delightful: How To Build With IoT, Orange IoT Studio

Many engineers, designers and business folks want to work with IoT devices, but don’t know where to begin. The presentation covered which mistakes to avoid and which best practices to copy when integrating with IoT or building your own IoT products, and examined the consistent, systematic ways that IoT tends to fail and delight. The talk also explained what makes IoT unique and why it’s not at all easy to classify IoT platforms and devices.

5. Many Faces Of LPWAN (emphasizing LoRaWAN), Multi-Tech Systems

Until recently, most M2M and Internet of Things (IoT) applications have relied on high-speed cellular and wired networks for their wide area connectivity. Today, a number of IoT applications will continue to require higher bandwidth; others, however, may be better suited to low-power wide-area network options that not only complement traditional cellular networks, but also unlock new market potential by reducing costs and increasing the flexibility of solution deployments.

Low-Power Wide-Area Networks (LPWANs) are designed to allow long-range communications at low bit rates. LPWANs are ideally suited to connected objects such as sensors and “things” operating on battery power and communicating at low bit rates, which distinguishes them from the wireless WANs used for IT functions (such as Internet access).

Many alternative LPWAN specifications/standards have emerged. Some use licensed spectrum, such as 3GPP LTE Cat-M1 and NB-IoT, while other alternatives, such as LoRaWAN™, are based on a specification from the LoRa Alliance and use unlicensed industrial, scientific, and medical (ISM) radio bands.

IoT has many challenges – from choosing the right device, to adding connectivity and then managing those devices and the data they generate. Here are just a few IoT connectivity challenges:

- Long battery life (5+ yrs) requires low power WAN interface

- Low cost communications (much lower than cellular data plans)

- Range and in-building penetration

- Operation in outdoor and harsh environments

- Low cost infrastructure

- Robust communications

- Permits mobility

- Scalable to thousands of nodes/devices

- Low touch management and provisioning – Easy to attach assets

- Highly fragmented connectivity due to a proliferation of choices
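The first challenge in the list, a 5+ year battery life, is essentially an energy-budget calculation. The sketch below uses a simple two-state (sleep/transmit) current model; the battery capacity, currents, airtime, message rates and the 70% usable-capacity derating are all hypothetical values, not Multi-Tech figures, and the model ignores receive windows, self-discharge and temperature effects.

```python
SECONDS_PER_DAY = 86_400


def battery_life_years(capacity_mah: float, sleep_current_ua: float,
                       tx_current_ma: float, tx_seconds_per_msg: float,
                       msgs_per_day: int, usable_fraction: float = 0.7) -> float:
    """Estimate battery life from a two-state (sleep/transmit) current profile."""
    tx_time_s = tx_seconds_per_msg * msgs_per_day
    if tx_time_s >= SECONDS_PER_DAY:
        raise ValueError("transmit time exceeds one day")
    sleep_time_s = SECONDS_PER_DAY - tx_time_s
    # Daily charge in mA*s: transmit bursts plus sleep current (uA converted to mA).
    daily_mas = tx_current_ma * tx_time_s + (sleep_current_ua / 1000.0) * sleep_time_s
    daily_mah = daily_mas / 3600.0
    days = capacity_mah * usable_fraction / daily_mah
    return days / 365.25


if __name__ == "__main__":
    # Hypothetical sensor: 2400 mAh cell, 2 uA sleep, 40 mA for 1.5 s per uplink.
    print(f"Hourly reports:      {battery_life_years(2400, 2, 40, 1.5, 24):.1f} years")   # 10.3
    print(f"Every six minutes:   {battery_life_years(2400, 2, 40, 1.5, 240):.1f} years")  # 1.1
```

Under these assumptions, transmit time dominates the budget, so reporting ten times as often cuts battery life from about ten years to about one. That is why low bit-rate, infrequent-uplink LPWAN designs matter for the 5+ year target.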

Mike Finegan of Multi-Tech presented several LPWAN use case studies, including tank monitoring in Mt. Oso, CA; point-of-sale terminals, kiosks and vending machines; oil and gas; distributed energy resources; agriculture; and a real-time-controlled school traffic sign (T-Mobile using NB-IoT equipment from MultiTech; the first public NB-IoT demo in North America).

Mr. Finegan concluded by emphasizing the importance of security functions needed in an IoT Connectivity Platform. A “trusted IoT platform” should reduce attack vectors, provide secure and reliable end to end communications, and device to headquarters management services.

6. What Makes a City Smart? Totem Power

The talk outlined the framework necessary to build holistic infrastructure that leverages capabilities essential to realizing the full potential of smart cities, including curbside computing power, advanced energy resiliency and ubiquitous connectivity.

An interesting observation was that fiber trenches being dug to facilitate 5G backhaul for small cells and macro cells could accommodate electrical wiring for power distribution and charging of electric vehicles within the city limits.

………………………………………………………………………………………………

At its booth, Analog Devices/Linear Technology displayed SmartMesh®, a wireless mesh sensor network based on a now-proprietary extension of IEEE 802.15.4 [1]. SmartMesh® wireless sensor networking products are chips and pre-certified PCB modules complete with mesh networking software, enabling sensors to communicate in tough Industrial Internet of Things (IIoT) environments.

Note 1. IEEE 802.15.4 is a standard which defines the operation of low-rate wireless personal area networks (LR-WPANs) via PHY and MAC layers. It focuses on low-cost, low-speed ubiquitous communication between devices.
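To put "low-rate" in concrete terms, the sketch below computes on-air time for a frame on the standard IEEE 802.15.4 2.4 GHz O-QPSK PHY: 250 kb/s, a 5-octet synchronization header (preamble plus start-of-frame delimiter), a 1-octet PHY header, and a maximum 127-octet PHY payload. It covers the base standard only; SmartMesh’s proprietary extension may use different timing, and acknowledgements and inter-frame spacing are ignored.

```python
# Standard IEEE 802.15.4 2.4 GHz O-QPSK PHY parameters.
BIT_RATE_BPS = 250_000
SHR_OCTETS = 5          # 4-octet preamble + 1-octet start-of-frame delimiter
PHR_OCTETS = 1          # PHY header carrying the frame length
MAX_PSDU_OCTETS = 127   # maximum PHY payload (MAC frame) size


def frame_airtime_ms(psdu_octets: int) -> float:
    """Time on air for one PHY frame carrying psdu_octets of MAC frame."""
    if not 0 < psdu_octets <= MAX_PSDU_OCTETS:
        raise ValueError("PSDU must be 1..127 octets")
    bits = (SHR_OCTETS + PHR_OCTETS + psdu_octets) * 8
    return bits / BIT_RATE_BPS * 1000.0


if __name__ == "__main__":
    print(f"Max-size frame: {frame_airtime_ms(127):.3f} ms")  # 4.256 ms
    print(f"30-octet frame: {frame_airtime_ms(30):.3f} ms")
```

Even a maximum-size frame occupies the channel for only a few milliseconds, which is what lets battery-powered 802.15.4 nodes sleep almost all of the time.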

……………………………………………………………………………………………………….

Industrial Internet of Things (IIoT) wireless sensor networks (WSNs) must support a large number of nodes while meeting stringent communications requirements in rugged industrial environments. Such networks must operate reliably for more than ten years without intervention and be scalable to enable business growth and increasing data traffic over the lifetime of the network.
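One reason mesh networks can meet such reliability requirements is that a node can retry a packet, or send it to an alternate parent, at each hop. The sketch below shows the effect under a strong simplifying assumption that every attempt succeeds independently with the same probability; real industrial links are correlated, and the 0.9 per-attempt success rate and four-hop path are hypothetical.

```python
def hop_success(p_link: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent transmissions succeeds."""
    return 1.0 - (1.0 - p_link) ** attempts


def end_to_end_success(p_link: float, attempts_per_hop: int, hops: int) -> float:
    """Probability a packet crosses every hop, assuming hops are independent."""
    return hop_success(p_link, attempts_per_hop) ** hops


if __name__ == "__main__":
    # Hypothetical: 90% per-attempt link success, four hops to the gateway.
    print(f"1 attempt per hop:  {end_to_end_success(0.9, 1, 4):.4f}")  # 0.6561
    print(f"3 attempts per hop: {end_to_end_success(0.9, 3, 4):.4f}")
```

Under these assumptions, a single attempt per hop delivers only about two thirds of packets, while three attempts per hop (for example, retries spread across two parents) push delivery above 99%.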

More information on SmartMesh® is available from the Linear Technology Dust Networks page listed in the references below.

……………………………………………………………………………………………….

References:

https://www.idtechex.com/internet-of-things-usa/show/en/

https://www.idtechex.com/internet-of-things-usa/show/en/agenda

http://www.linear.com/dust_networks/

Ericsson Files 5G Patent; Teams up with Bharti Airtel on 5G Evolution & Massive MIMO

1. Ericsson Files End-to-End “5G” Patent Application:

Ericsson has filed what it is calling a “landmark” end-to-end “5G” patent application incorporating numerous inventions from the vendor.

The patent application combines the work of 130 Ericsson inventors and contains the vendor’s complementary suite of 5G innovations.

“[The application] contains everything you need to build a complete 5G network – from devices, the overall network architecture, the nodes in the network, methods and algorithms, but also shows how to connect all this together into one fully functioning network,” Ericsson principal researcher Dr Stefan Parkvall said.

“The inventions in this application will have a huge impact on industry and society: they will provide low latency with high performance and capacity. This will enable new use cases like the Internet of Things, connected factories and self-driving cars,” he added.

Ericsson has filed an international patent application with the World Intellectual Property Organization (WIPO) and a U.S. patent application with the U.S. Patent and Trademark Office (USPTO).

Reference:

https://www.telecomasia.net/content/ericsson-files-end-end-5g-patent-application

……………………………………………………………………………………..

2. Ericsson & Bharti Airtel “5G” Partnership:

“Ericsson will work with Bharti Airtel on creating a strategic roadmap for evolution of the (wireless) network to the next-gen 5G technology,” Ericsson Senior Vice President and Head of Market Area South East Asia, Oceania and India, Nunzio Mirtillo told reporters in New Delhi, India, according to the Economic Times (see references below).

On Friday, November 17th, Ericsson showcased the first live “5G” end-to-end demonstration in New Delhi using its 5G test bed and 5G New Radio (“NR”, specified by 3GPP).

Ericsson already has 36 similar memoranda of understanding (MoUs) with global telecom operators. Under these agreements, Ericsson is trialing 5G technology and other solutions across verticals with these telcos.

Ericsson had earlier said that a timely deployment plan for the 3.3-3.6 GHz band is crucial for the 5G network rollout in India. The band has already been identified as the primary band for the introduction of 5G services in the country before 2020.

Ericsson India Country Manager Nitin Bansal said, “Besides telcos, startups, enterprises and academia have to come together to develop 5G use cases in India.”

Mr. Bansal said that Ericsson, through its partnership with IIT-Delhi, is evaluating 5G use cases in the healthcare, automotive and education verticals.

Earlier this year, Bharti Airtel inked a similar pact with telecom gear maker Nokia to expand their partnership to areas like 5G technology standards and management of connected devices. It therefore remains to be seen whether both wireless network equipment vendors will supply base stations to Bharti Airtel.

India plans to roll out 5G services for consumers by 2020 and to achieve that objective, the government has this week set up a high-level forum that will evaluate and approve road maps and action plans to bring in the latest technology in the country.

References:

Operators around the globe gearing up for 5G: Ericsson survey

IHS-Markit: 15% Drop in Global Optical Network Equipment Sales; Cisco and VMware are SD-WAN market leaders

1. Optical Network Equipment Market:

IHS-Markit reports that the optical network equipment market slumped 15% sequentially and 3% year over year in the third quarter of 2017. Huawei was #1 in optical network sales, followed by Ciena, which ranked first in North America, where Huawei isn’t permitted to sell its gear.

Highlights:

- In the third quarter of 2017 (Q3 2017), the global optical equipment market declined 15 percent quarter over quarter and 3 percent year over year as North America, Latin America and EMEA (Europe, the Middle East and Africa) experienced significant reductions in spending; the Asia Pacific region was up 2 percent on a year-over-year basis

- The metro wavelength-division multiplexing (WDM) segment was down slightly in Q3 2017 from the prior quarter but increased 3 percent from a year ago; long-haul WDM declined 9 percent year over year

- Huawei remained the worldwide optical equipment market leader in Q3 2017; Ciena was the number-two optical equipment vendor by revenue globally and maintained its number-one position in North America

IHS-Markit analysis:

The worldwide optical equipment market declined 15 percent sequentially and 3 percent year over year in Q3 2017 as soft growth in the Asia Pacific region was not sufficient to offset the declines in EMEA, North America and Latin America.
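The 15 percent sequential decline and the much milder 3 percent annual decline are consistent once you solve for the prior quarter. The sketch below uses only the two reported percentages; the index base of 100 for Q3 2016 is a hypothetical normalization, not a revenue figure.

```python
def prior_quarter_vs_year_ago(qoq: float, yoy: float) -> float:
    """Ratio of the previous quarter to the same quarter a year earlier.

    Follows from: current = previous * (1 + qoq) = year_ago * (1 + yoy).
    """
    return (1.0 + yoy) / (1.0 + qoq)


if __name__ == "__main__":
    q3_2016 = 100.0                    # hypothetical index value
    q3_2017 = q3_2016 * (1 - 0.03)     # reported: down 3% year over year
    q2_2017 = q3_2017 / (1 - 0.15)     # reported: down 15% quarter over quarter
    print(f"Q3 2016 = {q3_2016:.1f}, Q2 2017 = {q2_2017:.1f}, Q3 2017 = {q3_2017:.1f}")
    print(f"Q2 2017 / Q3 2016 = {prior_quarter_vs_year_ago(-0.15, -0.03):.3f}")
```

In other words, Q2 2017 must have been roughly 14% above Q3 2016, so most of the sequential drop reflects a strong prior quarter plus the usual seasonal dip rather than a collapse relative to a year earlier.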

Recent performance in some corners of the optical components market has many in the industry looking to the market in China and questioning whether it can sustain the high investment levels seen over the past 18 months. While China was indeed down sharply sequentially as is typical for the quarter, it did manage to stay in growth territory on a year-over-year basis. Recent bid activity in China indicates that further significant investments in backbone and provincial networks are still ahead.

In Q3 2017, the WDM equipment segment declined 15 percent from the prior quarter and was down 2 percent from a year ago. The metro WDM segment fell slightly quarter over quarter, but increased 3 percent year over year, supporting our view that this will be the main growth vector for the market moving forward. The long-haul segment sank 23 percent quarter over quarter and was down 9 percent year over year. Subsea revenue also declined both sequentially and on a year-over-year basis in Q3 2017.

Huawei remained the optical equipment market leader in Q3 2017 despite a significant seasonal drop in revenue both sequentially and year over year. Tepid spending in Western Europe was responsible for a large part of Huawei’s overall decline in the quarter. Ciena moved back up to the number-two position worldwide for Q3 2017. The company continues to be the dominant optical equipment vendor in North America, and it also made notable progress outside its home market in Q3 2017 with strong year-over-year gains in EMEA, Latin America and Asia Pacific.

Analyst Quotes:

“The metro WDM segment fell slightly quarter over quarter, but increased 3% year over year, supporting our view that this will be the main growth vector for the market moving forward,” report author Heidi Adams said.

“Huawei remained the optical equipment market leader in Q3 2017 despite a significant seasonal drop in revenue both sequentially and year over year,” Adams said. “Tepid spending in Western Europe was responsible for a large part of Huawei’s overall decline in the quarter.”

Ciena “continues to be the dominant optical equipment vendor in North America, and it also made notable progress outside its home market in Q3 2017 with strong year-over-year gains in EMEA, Latin America and Asia Pacific,” Adams added.

Optical report synopsis:

The IHS Markit optical network hardware report tracks the global market for metro and long-haul WDM and Synchronous Optical Networking (SONET)/Synchronous Digital Hierarchy (SDH) equipment, Ethernet optical ports, SONET/SDH ports and WDM ports. The report provides market size, market share, forecasts through 2021, analysis and trends.

References:

https://technology.ihs.com/597065/optical-network-hardware-market-cools-off-in-q3-2017

…………………………………………………………………….

Related article:

Optical Networks Booming in India

…………………………………………………………………………………………………………………………………………………..

2. SD-WAN Market:

IHS Markit offered a much more bullish assessment for software-defined (SD) WAN vendors.

Consolidation and acquisitions are well underway in the software-defined wide area network (SD-WAN) market as vendors race to include SD-WAN technology in their offerings. Following Cisco’s acquisition of Viptela, VMware carried out its own acquisition of VeloCloud, the SD-WAN revenue leader in the first half of 2017, for an undisclosed amount.

“VMware and Cisco have acquired the two SD-WAN market share leaders, making the SD-WAN market a two-horse race for the number-one spot,” said Cliff Grossner, PhD and senior research director/advisor for cloud and data center markets at IHS Markit. “And we could see even more consolidation as vendors set out to add SD‑WAN to their capability sets, especially since the technology is key to supporting connectivity in the multi-clouds that enterprises are building.”

According to the IHS Markit Data Center and Enterprise SDN Hardware and Software Biannual Market Tracker, SD-WAN is currently a small market, totaling just $137 million worldwide in the first half of 2017 (H1 2017). However, global SD-WAN hardware and software revenue is forecast to reach $3.3 billion by 2021 as service providers partner with SD-WAN vendors to deploy overlay solutions — and as virtual network function (VNF)–based solutions become more closely integrated with carrier operations support systems (OSS) and business support systems (BSS).

“Currently, the majority of SD-WAN revenue is from appliances, with early deployments focused on rolling out devices at branch offices,” Grossner said. “Moving forward, we expect a larger portion of SD-WAN revenue to come from control and management software as users increasingly adopt application visibility and analytics services.”
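A rough way to read the $137 million (H1 2017) and $3.3 billion (2021) figures quoted above is to compute the growth rate they imply. IHS Markit did not publish a full-year 2017 number here, so the sketch assumes, purely for illustration, that full-year 2017 revenue is twice the H1 figure.

```python
def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Constant annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    h1_2017_musd = 137.0
    # Assumption: full-year 2017 is simply twice H1 (not an IHS Markit figure).
    fy2017_musd = 2 * h1_2017_musd
    rate = implied_cagr(fy2017_musd, 3300.0, years=4)  # 2017 -> 2021
    print(f"Implied CAGR 2017-2021: {rate:.0%}")
```

Under that assumption, the forecast implies annual growth in the mid-80% range, well above Gartner’s 59% CAGR, although the two firms size the market differently.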

More highlights from the IHS Markit data center and enterprise SDN report:

- Globally, data center and enterprise software-defined networking (SDN) revenue for in-use SDN-capable Ethernet switches, SDN controllers and SD-WAN increased 5.4 percent in H1 2017 from H2 2016, to $1.93 billion

- Based on in-use SDN revenue, Cisco was the number-one market share leader in the SDN market in H1 2017, followed by Arista, White Box, VMware and Hewlett Packard Enterprise

- Looking at the individual SDN categories in H1 2017, White Box was the front runner in bare metal switch revenue, VMware led the SDN controller market segment, Dell held 45 percent of branded bare metal switch revenue and Hewlett Packard Enterprise had the largest share of total SDN-capable (in-use and not-in-use) branded Ethernet switch ports

Reference: