Verizon Completes $1.8B Acquisition of XO Communications

Verizon has completed its $1.8 billion acquisition of XO Communications’ fiber business in a deal announced almost one year ago. The company expects to achieve synergies by incorporating XO’s fiber assets as part of its current network operations, with the total operating and expense savings estimated at over $1.5 billion. The XO takeover is expected to help boost Verizon’s enterprise and wholesale business, as well as improve backhaul for its mobile network. Integration of all XO operations and facilities is expected to commence immediately, Verizon said.

Verizon also says it will lease wireless frequencies from NextLink Wireless, a former XO affiliate, in a deal that gives the carrier an option to buy NextLink. That might help the mega telco with its plan to offer high-speed wireless broadband (4G or 5G).

In September, Verizon’s CFO Fran Shammo told the audience at a Goldman Sachs & Co. conference that Verizon’s fixed wireless 5G, with 1 Gbit/s connections to the home, will take on cable, “or any broadband connection for that matter,” and that deploying 5G will be much cheaper than deploying FiOS. (See Verizon CFO: Eat Our (Fixed) 5G Dust!)

Shammo also said that the company’s key advantage in deploying 5G is the rights to lease 28 GHz bandwidth from NextLink. “That covers 40% of the US,” he said then, adding “That’s all licensed for fixed wireless use for the time being, as per the Federal Communications Commission (FCC) rules. We’re hoping that’ll become mobile at some point down the road.”

AT&T in Advanced Discussions to Trial Project AirGig for High Speed Internet

AT&T has entered “advanced discussions” with electric utilities to test its Project AirGig wireless technology in at least two places by the fourth quarter, the telco said in a press release. The high-speed broadband technology relies on antennas placed on power lines to transmit wireless signals. One of the locations will be in the US. The others will be determined in the coming months, according to the company.

AT&T Chief Strategy Officer John Donovan told USA Today that an announcement about the power companies involved would come soon, in a matter of “days to weeks.” It will likely be in the south to avoid the variable of winter, Donovan added.

AT&T has described Project AirGig as a new way to deliver fast Internet service at a lower cost by sending signals over (but not through) existing power lines. The infrastructure associated with it is cheaper and easier to deploy than fiber, the company says. Whereas fiber internet requires digging up dirt to run cable, AirGig delivers service through relatively inexpensive plastic antennas mounted on top of power lines.

Preliminary Model of AirGig Antenna (side view). Photo courtesy of AT&T

…………………………………………………………………………

“We are looking forward to begin testing the possibilities of AT&T Labs’ invention for customers and utility companies,” said Andre Fuetsch, president of AT&T Labs and chief technology officer. “AT&T is focused on delivering a gigabit-per-second speed everywhere we can with our wired and wireless technologies. Project AirGig represents a key invention in our 5G Evolution approach. AT&T Labs is ‘writing the textbook’ for a new technology approach that has the potential to deliver benefits to utility companies and bring this multi-gigabit, low-cost internet connectivity anywhere there are power lines – big urban market, small rural town, globally.”

AT&T says it has been working on the technology for more than 10 years and has more than 200 patents associated with it. The potential that other companies would start sniffing around the patents prompted the public disclosure in September, Donovan said at the time.

“AT&T Labs engineers and scientists invented low-cost plastic antennas, a Radio Distributed Antenna System (RDAS), mmWave surface wave launchers and inductive power devices,” the press release noted.

Another 5G Partnership– Nokia and Orange

In almost 50 years of observing tech trends and markets, we have never seen anything hyped as much as 5G. It’s a market that doesn’t exist and won’t until 2021 at the earliest. That didn’t stop Verizon, AT&T, Telia and numerous other wireless telcos from announcing 5G trials this year!

The latest 5G partnership (whatever that means) is network equipment behemoth Nokia (a combo of Nokia-Siemens, Lucent and Alcatel) combining with Orange (formerly France Telecom) to progress 5G. Does that mean that Nokia will be the exclusive 5G network equipment provider to Orange? We doubt that!

As part of the agreement, the pair will focus on making the transition from 4G to 5G network connectivity painless, to pave the way for such innovations as smarter cities, connected vehicles and remote healthcare. This work will also include the application of ultra-broadband leveraging new frequency bands, cloud RAN and massive MIMO, IoT, end-to-end network slicing techniques and energy efficiency.

“In line with the Orange Essentials 2020 strategy, Orange places innovation at the heart of its drive to deliver an unmatched customer experience,” said Alain Maloberti, Senior Vice President, Orange Labs Networks.

“Working with Nokia, we are preparing the evolution of our networks from 4G to 5G, with multiple services on a single infrastructure to deliver a quality tailored for each service requirement. Our new services will enhance people’s lives and accelerate the digitization of vertical industries.”

“With our breadth of Radio, IP and Optics technologies, and the expertise of Bell Labs, Nokia is proud to be assisting Orange in the introduction of 5G and the application of the Future X Network paradigm,” said Marc Rouanne, Chief Innovation and Operating Officer at Nokia.

“Through this collaboration, we will test 5G applications for different industry segments and measure the benefits of extremely short latency and very high speeds. We are also delighted to be applying our world-class R&D expertise in Paris and Lannion in this project.”

Prior to the announcement, the two already had a relationship in place, namely a research partnership between Orange Labs and Nokia Bell Labs.

THE 5 KEYS TO 5G according to Nokia:

FBR: AT&T Earnings Analysis: International & Bundling Efforts; Fiber Investment Uptick

by David Dixon and Mike He, FBR & Co. (edited by Alan J Weissberger)

Summary and Recommendation:

AT&T reported largely mixed 4Q16 results, with both top-line and adjusted EBITDA (Earnings Before Interest, Taxes, Depreciation and Amortization) misses driven by fewer upgrades and continued legacy service weakness. Lower depreciation expenses helped lift adjusted EPS to $0.66. Strong international and entertainment revenues were key to offsetting ongoing weakness in consumer mobility and an anemic business solutions segment, which continues to be handicapped by weak legacy service demand. Promotional pricing of DIRECTV NOW (Internet TV package), with zero-rating [1], saw strong initial adoption, helping to offset churn from the 2G network shutdown.

With Ajit Pai recently named as chairman of the FCC, net neutrality is likely to be overturned; and zero-rating will likely be endorsed, paving the way for AT&T’s bundling strategy. Potential tax and regulatory reform should help the telcos attack cable’s historic advantage through a fiber-led investment uptick amid consolidation. So, while tax reform is accretive to cash flow, we believe much of this benefit will be absorbed by a higher capex trajectory.

Note 1. Zero-rating (also called toll-free data or sponsored data) is the practice of mobile network operators (MNO), mobile virtual network operators (MVNO), and Internet service providers (ISP) not to charge end customers for data used by specific applications or internet services through their network, in limited or metered mobile data plans.
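As a rough illustration of the definition in Note 1, the sketch below meters a subscriber's monthly usage against a capped plan while skipping traffic from zero-rated services. The service names, the usage figures, and the `billable_megabytes` helper are all hypothetical; this does not describe any operator's actual billing system.

```python
def billable_megabytes(usage_mb, zero_rated):
    """Sum the usage that counts against the data cap.

    usage_mb:   dict mapping a service name to megabytes used
    zero_rated: set of service names the operator does not charge for
    """
    return sum(mb for service, mb in usage_mb.items() if service not in zero_rated)


# Hypothetical month of usage, in megabytes per service.
usage = {"sponsored_video": 4000, "web": 1200, "email": 300}
zero_rated_services = {"sponsored_video"}

counted = billable_megabytes(usage, zero_rated_services)
total = billable_megabytes(usage, set())
print(f"counted against cap: {counted} MB of {total} MB used")
```

With these made-up numbers, the video traffic dominates total usage but none of it counts against the cap, which is why zero-rating matters for bundled video offers.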

Key Points:

■ 4Q16 results recap. Consolidated revenues declined 0.7% YOY, to $41.8B, in line with our estimate but below consensus’ estimate of $42.0B. By segment: Business solutions delivered revenues of $18.0B, entertainment and Internet services had revenues of $13.2B, consumer mobility had revenues of $8.4B, and international had revenues of $1.9B. Adjusted EBITDA was $12.2B, versus consensus’ estimate of $12.8B and our estimate of $13.1B. EBITDA margins expanded by a modest 6 bps YOY, to 29.1%. Consumer mobility postpaid net adds were 270,000, prepaid net adds were 406,000, and postpaid churn was 1.25%.

■ In-line FY17 guidance. Management provided FY17 revenue guidance of low single-digit growth, in line with consensus’ estimate of +1.6% YOY, with adjusted EPS growth in the mid-single-digit range, adjusted operating margin expansion, capex of $22B, and free cash flow of $18B.

■ Stay tuned for a fiber-induced investment uptick. Management would not take the bait on plans to extend fiber investment, but we think this is inevitable. Beyond the in-region Boston fiber deployment, our checks indicate that Verizon has issued fiber construction RFPs for the two-year buildout of an additional 20 to 30 cities. Not only would this likely invoke a competitive response from cable companies, it may also drive an acceleration and expansion in T’s fiber plans to deploy to 12.5 million homes (aside from any tax reform and the extension of bonus depreciation, which could both accelerate deployment).

Can AT&T drive earnings growth?

With the LTE network buildout complete and AT&T diversifying into Mexico to alleviate churn pressures, further changes to upgrade eligibility are likely. Smartphone activations remain significant. Strategic initiatives with Samsung and Google, coupled with support of the Windows Phone ecosystem by MSFT, NOK, and other OEMs, are key to lower wireless subsidy pressure but it is early days. We think AT&T will continue to consider pricing action to augment growth once the LTE network build is complete. But competitive intensity is likely to increase in FY16, so this will prove difficult absent consolidation or until T-Mobile US becomes spectrum challenged, which we think is still one year away and a function of T-Mobile’s commitment to continue network investment.

How will AT&T fare in the changing wireless landscape in 2017 and beyond?

Our strategic concerns for AT&T include:

(1) the Apple eSIM impact, should Apple be successful in striking wholesale agreements;

(2) the Google MVNO impact, which could strip the company of the last bastion of connectivity revenue; and

(3) a WiFi first network from Comcast, coupled with a wholesale agreement with a carrier, which would enable a competitor and increase pricing pressure.

Does AT&T have a sustainable spectrum advantage compared with other carriers?

AT&T is behind Verizon in spectrum and out of spectrum in numerous major markets, according to our vendor checks. However, with additional density investment, it is reasonably well positioned to benefit from the combination of coverage layer (700 MHz and 850 MHz) and capacity layer (1,700 MHz and 1,900 MHz and soon-to-be-confirmed 2,300 MHz) spectrum and will focus on LTE and LTE Advanced, as well as refarming 850 MHz/1,900 MHz spectrum for additional coverage and capacity. Yet this nonstandard LTE band will cost more capex and take longer to implement. In the short run, aggressive cell splitting is expected, and metro Wi-Fi and small-cell solutions with economic backhaul solutions are becoming available, allowing for greater surgical reuse of existing spectrum. Sprint’s differentiation through Clearwire spectrum is only likely to modestly affect AT&T relative to Verizon. Furthermore, with 70% to 80% of wireless data traffic on Wi-Fi and only 20% of capacity utilized, this suggests a focus in this area to manage data usage growth.

Conclusions:

We expect the wireless segment to continue to be challenged by T-Mobile US and a resurgent Sprint. We are less bullish on near-term improvements in capex intensity due to cultural challenges associated with the much-needed migration to software centric networks, coupled with the need to upgrade its fiber plant aggressively to improve its competitive positioning and lay the foundation for efficiency improvement.

…………………………………………………………………………………………………………………………………………………….

End Quote:

“2016 was a transformational year for AT&T, one in which we made tremendous progress toward our goal of becoming the global leader in telecom, media and technology,” said Randall Stephenson, AT&T Chairman and CEO. “We launched DIRECTV NOW, our innovative over-the-top streaming service. Our 5G evolution plans and improved spectrum position are paving the way for the next-generation of super-fast mobile and fixed networks. And we shook-up the industry with our landscape-changing deal to acquire Time Warner, the logical next step in our strategy to bring together world-class content with best-in-class distribution which will drive innovation and more choice for consumers.”

“At the same time, we performed at a high level in 2016 with growing revenues, expanding adjusted consolidated operating margins and solid adjusted earnings growth, and we hit our $1.5 billion DIRECTV cost-synergy target. We also delivered record cash from operations, which allowed us to return substantial value to investors and invest more in the U.S. economy.”

Reference:

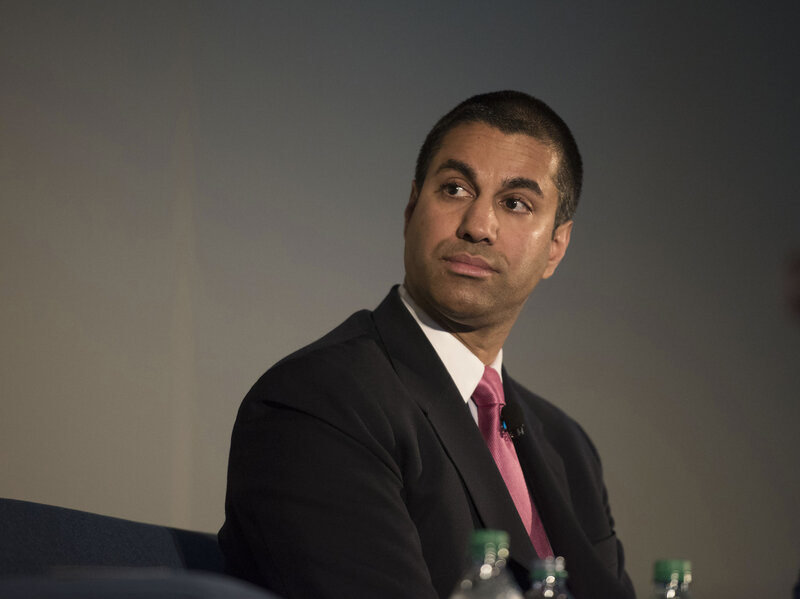

Now Official: Trump Picks Ajit Pai as new FCC Chairman

Trump Taps FCC Commissioner Ajit Pai to Be Agency Chairman

Pai has been critic of net neutrality, other Obama-era rules; wants to roll back regulations hindering investment

By JOHN D. MCKINNON, Wall Street Journal

President Donald Trump named Ajit Pai, a GOP member of the Federal Communications Commission, to be the agency’s chairman. The designation of Mr. Pai, a sitting commissioner, allows the new administration to hit the ground running in the telecommunications area, a huge and dynamic part of the economy. Mr. Pai brings a staunchly free-market approach that generally appears to jibe with Mr. Trump and his economic advisers.

Mr. Pai, a lawyer and former Senate aide, has been a frequent critic of new telecommunications rules pushed by the Obama administration, including its far-reaching net-neutrality regulations, designed to ensure that all internet traffic is treated equally. Mr. Pai, 44, has suggested in recent weeks that he intends to apply a “weed whacker” to various FCC rules that he thinks could hinder investment in new broadband capacity.

In a statement on Monday afternoon, Mr. Pai said he was “deeply grateful” to Mr. Trump. He said he looks forward to working with other policy makers, including Congress, to bring the “benefits of the digital age to all Americans.”

Mr. Pai likely would be able to move quickly on some of his priorities, such as rolling back aspects of the net-neutrality regulations. The five-member commission will have a 2-1 GOP majority, with two seats vacant, following Democratic Chairman Tom Wheeler’s already announced resignation.

But some steps likely would require legislation by Congress, which could take a while, given other priorities such as health-care and tax overhauls. Some observers believe Congress is more likely to take up major telecommunications changes next year.


……………………………………………………………………………………….

Editor’s Note:

Mr. Pai has been a commissioner at the FCC since 2012, when he was appointed by then-President Obama and confirmed by the Senate. He has long been critical of net neutrality, saying that the problem it’s trying to solve — big internet providers acting as gatekeepers to what we see and do online — doesn’t exist. He recently reiterated a prediction that the commission’s Open Internet Order, which established net neutrality, would be reversed or overturned in one way or another. He’ll now have the chance to play a role in that.

“On the day that the Title II Order was adopted, I said that ‘I don’t know whether this plan will be vacated by a court, reversed by Congress, or overturned by a future commission. But I do believe that its days are numbered,’” Pai said. “Today, I am more confident than ever that this prediction will come true.”


IHS Survey: SDN Live in the Data Center Taking Longer Than Expected

By Cliff Grossner, Ph.D., senior research director and advisor, cloud and data center research practice, IHS Markit

Editor’s Note:

We’ve been big skeptics of SDN in the Data Center, with the exception of the mega cloud companies (e.g., Google, Microsoft, Facebook, Alibaba, Baidu) that create their own specs and do all the infrastructure design themselves. We are even more skeptical of SD-WANs, where there are no definitive standards and hence no vendor interoperability.

Highlights:

- In the data center, lab trials for software-defined networking (SDN) are still dominant over production trials and live deployments

- Service provider bare metal switching deployments have stalled, but more bare metal switches are in use for SDN

- Cisco and Juniper were named as the top data center SDN vendors by respondent service providers

IHS Analysis:

IHS Markit’s data center SDN survey provides insight into how cloud and telecom service provider data centers are evolving for SDN.

Data center SDN deployments going slowly

Two-thirds of the service providers participating in our 2016 survey are still conducting lab trials of data center SDN, down only slightly from three-quarters in 2015.

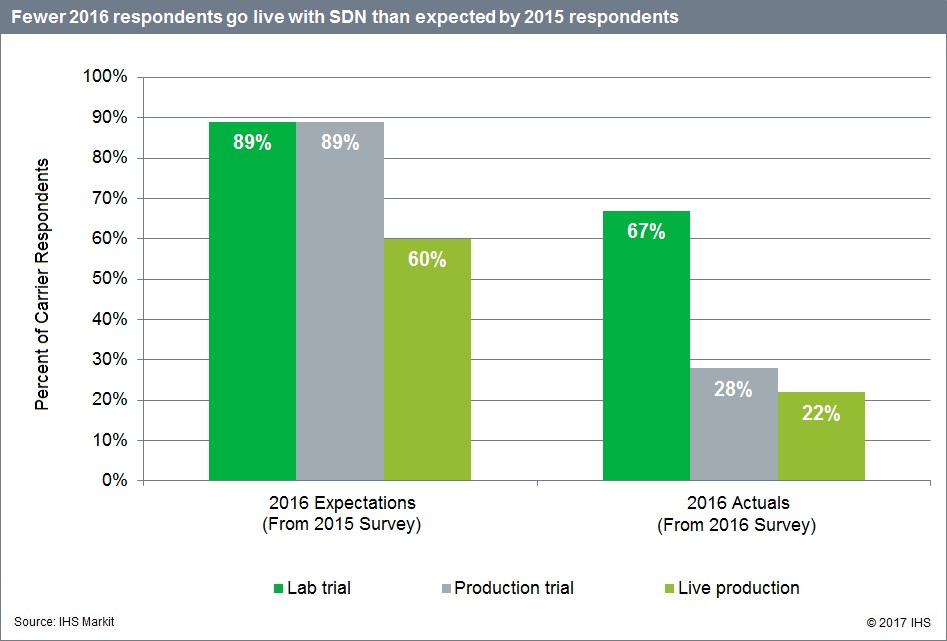

The number of respondents in production trials and live deployment in 2016 continued to be lower than expected by those taking part in the prior year’s study; see the chart below.

Getting to live production is taking more time than expected. This means that the most innovation-driven part of the market critical for new revenue—SDN controllers, data center orchestration and SDN applications—is still wide open. Although the leaders in the SDN service provider data center market are becoming clearer, especially for physical network equipment, we do not expect the market to solidify until live deployments ramp.

Bare metal switching stalls

Bare metal switch ports comprise, on average, 33 percent of respondents’ data center Ethernet switch ports (the same as in our 2015 study). And, on average, 24 percent of bare metal switch ports are in use for SDN, up from 12 percent in the 2015 survey.

The majority of bare metal switches are still being deployed at large cloud service providers (CSPs) because skilled programmers who are adept at dealing with bare metal switching are required to ensure success.

By 2018, bare metal Ethernet switch ports in the data center are expected to reach 41 percent among operators surveyed.

Service providers name Cisco and Juniper top SDN vendors

The leaders in the service provider data center SDN market are solidifying for physical network equipment.

In an open-ended question, we asked respondents whom they consider to be the top three data center SDN hardware/software product vendors. Cisco, the big player in enterprise networking, was identified as a top-three vendor by 72 percent of respondents, while Juniper was named by 39 percent of respondents.

Cisco continues to get traction with its SDN solutions, including with educational institutions providing online learning for students, CSPs deploying self-service portals and enterprises adopting hybrid cloud architectures. Juniper, meanwhile, is gaining footing with its Contrail Cloud and Contrail Networking solutions.

Data Center SDN Synopsis:

For its 30-page 2016 data center SDN survey, IHS Markit interviewed service providers that have deployed or plan to deploy or evaluate SDN by the end of 2018 about their plans to evolve their data centers and adopt new technologies over the next 2 years. Respondents were asked about SDN deployment drivers, barriers and timing; expected capex and opex changes with SDN; use cases; security technologies; applications; top-rated vendors; and more.

For information about purchasing this report, contact the sales department at IHS Markit in the Americas at (844) 301-7334; in Europe, Middle East and Africa (EMEA) at +44 1344 328 300; or in Asia-Pacific (APAC) at +604 291 3600.

Weightless SIG Hopes to Drive LPWA Standard in ETSI; Ubiik as IoT Game Changer?

The Weightless Special Interest Group (SIG) is expanding its effort to drive open standards in low-power, wide-area (LPWA) networks for the Internet of Things (IoT). LPWA is one of many areas where a plethora of competing standards has fragmented the market for IoT wide-area connectivity.

Three different Weightless specifications are available for LPWA networks: Weightless-N, Weightless-P and Weightless-W.

Each spec is designed to be deployed in different use cases depending on a number of key priorities. These are summarized in the table below:

| | Weightless-N | Weightless-P | Weightless-W |
| --- | --- | --- | --- |
| Directionality | 1-way | 2-way | 2-way |
| Feature set | Simple | Full | Extensive |
| Range | 5km+ | 2km+ | 5km+ |
| Battery life | 10 years | 3-8 years | 3-5 years |
| Terminal cost | Very low | Low | Low-medium |
| Network cost | Very low | Medium | Medium |

- If low cost is the priority and 1-way communication is adequate for your application, use Weightless-N

- If high performance is the priority, or 2-way communication is required, use Weightless-P

- If TV white space spectrum is available in the location where the network will be deployed and an extensive feature set is required, use Weightless-W

Source: http://www.weightless.org/about/which-weightless-standard
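The three rules of thumb above can be sketched as a small decision function. This is only an illustration: the function name, its parameters, and the order in which the rules are checked (Weightless-W first, then Weightless-P, then Weightless-N) are assumptions, since the Weightless SIG guidance does not say how to break ties when more than one rule applies.

```python
def pick_weightless_spec(two_way_required, high_performance,
                         tv_white_space_available, extensive_features):
    """Suggest a Weightless variant from the selection guidance quoted above.

    The precedence (W, then P, then N) is an assumption made for this sketch.
    """
    # Weightless-W: TV white space spectrum is available and an
    # extensive feature set is required.
    if tv_white_space_available and extensive_features:
        return "Weightless-W"
    # Weightless-P: high performance is the priority, or 2-way
    # communication is required.
    if high_performance or two_way_required:
        return "Weightless-P"
    # Weightless-N: low cost is the priority and 1-way communication suffices.
    return "Weightless-N"


# Example: a simple one-way sensor where low cost is the priority.
print(pick_weightless_spec(two_way_required=False, high_performance=False,
                           tv_white_space_available=False, extensive_features=False))
```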

……………………………………………………………………………………………….

In a November 1, 2016 press release, the Weightless SIG said “Ubiik emerges as IoT game changer.”

“Operating out of Taiwan and Japan, Ubiik is creating the next wave of smarter LPWAN products and is bringing substantive disruption to this dynamic technology space.

Showcased recently at CTIA in Las Vegas, Weightless offered an early insight into Ubiik’s revolutionary LPWAN technology offering a step change in performance expectations. Formed from the core team responsible for the groundbreaking LPWAN technology behind the Weightless-P open standard, Ubiik is set to deliver transformative change in every one of the key parameters that define IoT performance. Integrating cutting edge design philosophy with established, best in class cellular approaches Ubiik is building the high performance, carrier grade, industrial IoT infrastructure of the future.”

Ubiik hopes to roll out hardware in February for Weightless-P, the Weightless SIG’s third effort at defining a spec for LPWA networks. Fabien Petitgrand, chief technologist at Ubiik, said that the technology has an edge over current market leaders Sigfox and LoRa that will help it find traction in the still-emerging sector of IoT LPWA networks.

“We don’t expect significant revenue from meaningful deployment [of Weightless-P] for the next potentially two years,” he said. “Weightless-P is coming later to the game, but we are only at the beginning of this LPWA market.”

In a white paper, Ubiik makes the case that Weightless-P is the lowest-cost approach to applications such as smart meters. The technology could serve an area the size of San Diego with 542 base stations at a total cost of about $2.7 million per year. By contrast, Sigfox and LoRa would need 1,900 and 29,000 base stations and cost $9.5 million and $14.4 million a year, respectively, it said.
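To put the white paper's figures side by side, the short sketch below derives two ratios from them: annual cost relative to Weightless-P, and annual cost per base station. Only the base-station counts and annual costs come from the text above; the helper names are made up for illustration, and the per-station figure is simple division, not a number Ubiik itself reports.

```python
# Figures quoted above from the Ubiik white paper:
# technology -> (base stations, total annual cost in US dollars)
deployments = {
    "Weightless-P": (542, 2_700_000),
    "Sigfox": (1_900, 9_500_000),
    "LoRa": (29_000, 14_400_000),
}


def cost_ratios(data, baseline="Weightless-P"):
    """Annual cost of each technology as a multiple of the baseline's cost."""
    base_cost = data[baseline][1]
    return {name: cost / base_cost for name, (_, cost) in data.items()}


def cost_per_station(data):
    """Annual cost divided by the number of base stations."""
    return {name: cost / stations for name, (stations, cost) in data.items()}


ratios = cost_ratios(deployments)
per_station = cost_per_station(deployments)
for name in deployments:
    print(f"{name}: {ratios[name]:.1f}x baseline cost, "
          f"${per_station[name]:,.0f} per base station per year")
```

On these numbers, the claimed advantage comes from needing far fewer base stations rather than from cheaper ones: the implied per-station cost for Weightless-P is roughly the same as for Sigfox and well above that for LoRa.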

……………………………………………………………………………………………………………………………………………….

Weightless SIG chief executive William Webb makes the case that it’s still in the early days for a highly fragmented sector of LPWA networks. To realize predictions of 50 billion IoT nodes by 2020, vendors need to deploy nearly 13 million a day, but so far, market leaders in LPWA such as Sigfox and LoRa each have connected an estimated 7–10 million total to date.

“The message we are hearing very strongly is that the biggest problem in LPWA is the fragmentation of the industry,” said Webb. “If you are making a sensor what wireless chip do you put in it? Most people stop because they don’t want to use the wrong one. We are bumbling along the bottom,” Webb added.

Machina Research estimates that Sigfox now has public networks in the works or running in 26 countries, with LoRa following at 19 and Ingenu at 10. At CES, many top cellular carriers and module makers announced that they were ready to start trials of the (2nd generation) LTE Category-M (also known as LTE Category M1) intended for IoT LPWA networks.

“We project [that,] as of the end of 2017, the LPWA networks using unlicensed spectrum will collectively cover 32% of the world’s population with 11% for licensed LPWA,” which includes both cellular operators and other spectrum holders such as M2M Spectrum Networks, according to Machina Research analyst Aapo Markkanen.

………………………………………………………………………………………………………………………………………….

ETSI’s Task Group 28 has an informal subgroup called Low Throughput Networks (LTN) that will act as a “document rapporteur.” It expects to release a suite of LPWA specifications by the end of the year. The Weightless SIG plans to take their specs to ETSI for approval.

“The way ahead for us is to transfer our standards into ETSI and let Weightless act like the Wi-Fi Alliance, a public face for the ecosystem and a forum for regulator input and future directions,” Webb said.

Sigfox, Germany’s Fraunhofer Institute, and Weightless members such as Telensa are expected to make submissions to the ETSI process, which opens in March. The Weightless SIG will submit its specs to the group.

“Our intention is to produce a single LTN standard…that will consist in a set of three documents–LTN use cases and system requirements, LTN architecture and LTN protocols and interfaces,” said Benoit Ponsard, a representative from Sigfox who runs the ETSI subgroup.

“I suspect that [ETSI] will have a family of standards, and you can argue that that will defeat the aim of ending fragmentation, but we hope that a single-chip design could implement all of them, although not all at the same time,” Webb said.

Here’s a comparison of Weightless P vs. LoRaWAN vs. Sigfox from Ubiik. It considers capacity and range for a single LPWA base station.

…………………………………………………………………………………………………………………………………………………………

“The real success [of Weightless-P] will be if we can bring on more players,” said Webb. “We’re talking to quite a few semiconductor companies, and a number of them like Weightless as a vehicle for LPWA.”

The current effort marks the third change in direction for the Weightless SIG. It started in 2010 to work on a Weightless-W specification for the 700-MHz “white spaces” TV bands with technology from startup Neul.

When it became clear that those bands would not become available as expected, the group developed an ultra narrowband spec, called Weightless-N, using technology from NWave. It was a uni-directional link aimed at lowest cost and data rate to compete directly with Sigfox in the 800- to 900-MHz bands. But NWave struggled to gain traction, and Weightless ultimately transferred the technology to ETSI.

References:

http://www.weightless.org/about/the-argument-for-lpwan-in-the-internet-of-things

http://www.eetimes.com/document.asp?doc_id=1331207

https://en.wikipedia.org/wiki/LPWAN

http://internetofthingsagenda.techtarget.com/definition/LPWAN-low-power-wide-area-network

http://www.eetimes.com/document.asp?doc_id=1330671


451 Alliance Report: Corporate Cloud Computing Trends Multi-Cloud UPDATE

by 451 Alliance Staff

Update by the Editor on Multi-Cloud Adoption (April 2, 2017):

In the search for higher “fault tolerance,” few companies rely on a single cloud service provider. According to a recent study by Microsoft and 451 Research, nearly a third of organizations work with four or more cloud service providers (CSPs). By using multiple cloud services, enterprises increase their agility and automation. More and more companies are now re-strategizing and adopting a multicloud system.

“In computing, redundancy is a good thing,” says Mart van de Ven, director and consultant at Droste, a data consultancy. “If one provider is ‘nuked’, our services would not be affected because [as a company using multiple providers] we are also running our services on another provider.”

A study conducted by International Data Corporation (IDC) last year found that 86 per cent of enterprises predict they will require a multi-cloud strategy to support their business goals within the next two years.

A recent example of a company adopting a multi-cloud strategy is SNAP, the company with the popular Snapchat messaging and photo-sharing app that recently had a very successful IPO. It has agreed to buy $1 billion worth of Amazon cloud services over the next five years, according to its filing to the SEC in February 2017. This comes just days after SNAP revealed in another document related to its IPO that it has committed to buying $2 billion worth of Google Cloud services over the same five-year timeframe.

Other than serving as a back-up plan, the multi-cloud strategy means that different clouds from different providers can be used for separate tasks. It also enables companies to avoid vendor lock-in, so they can take advantage of price fluctuations and new offerings.

Lack of expertise in multiple clouds makes data management a challenge that businesses face as they grow in size and go global.

Last year, a survey of IT managers in Hong Kong, India and Singapore by IDG Connect and Rackspace showed that over half of respondents found monitoring and managing multiple and/or complex workloads their biggest multi-cloud challenge.

Choosing cloud vendors with global networks and strong cloud security capability has become essential for companies when they adopt different cloud vendors. For example, many multinational companies with businesses in China are using services from Alibaba Cloud, the cloud computing arm of Alibaba Group, to help manage their global IT infrastructure from one single account, and to gain full-fledged anti-distributed denial-of-service (anti-DDoS) support, with the company’s server on premises or on cloud.

“Companies are adopting multi-cloud by necessity,” van de Ven says.

Both the number of companies using multiple clouds and the number of clouds they’re using are growing. Finding the right expertise to manage clouds is a key concern, according to the 1,002 IT professionals surveyed by RightScale, a cloud management platform. No wonder multi-cloud management is already a fast-growing market.

According to global market research and consulting company MarketsandMarkets, the multi-cloud management market size is expected to grow from US$939.3 million last year to US$3.4 billion by 2021, at a compound annual growth rate of 29.6 per cent.
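The growth figures can be checked with the standard compound annual growth rate formula. In this sketch the five-year span is an assumption: it takes "last year" to mean 2016, relative to the April 2017 update, with 2021 as the end year. The function names are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value reached after compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years


start_musd = 939.3   # US$ million, market size "last year" (assumed to be 2016)
end_musd = 3400.0    # US$ million, forecast for 2021
years = 5            # assumed span, 2016 to 2021

implied_rate = cagr(start_musd, end_musd, years)
projected_musd = project(start_musd, 0.296, years)

print(f"implied CAGR: {implied_rate:.1%}")
print(f"US$939.3M compounded at 29.6% for {years} years: US${projected_musd:,.0f}M")
```

Under that assumption the implied rate comes out a little below the quoted 29.6 per cent, a gap that is consistent with the US$3.4 billion end figure being rounded.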

………………………………………………………………………………………………

Reliability and Cost are Most Important in Choosing a Cloud Computing Vendor

A December 2016 survey of 911 members of the 451 Global Digital Infrastructure Alliance focused on cloud computing trends, including overall spending, levels of adoption and key attributes. These are summarized below:

By Tracy Corbo

Overall IT Spending – Next 90 Days

We asked respondents about their organizations’ overall IT spending and found 38% expect it to increase over the next 90 days – unchanged from the October 2016 survey. Another 11% expect a decrease (up 2-pts from previously).

Cloud Spending – Next 90 Days

Cloud vs. Overall IT Spending. Cloud spending continues to outpace overall IT spending over the next 90 days, with cloud spending increases 6-pts stronger than overall IT.

Last 12+ Months. Focusing specifically on cloud spending, 44% of respondents expect their organizations’ cloud spending to increase over the next 90 days (down 2-pts from previous), compared with only 4% who expect a decrease. As the following chart shows, the level of cloud spending growth has remained extremely strong over the past year.

Cloud Adoption

SaaS (64%, up 1-pt) remains the most popular type of cloud computing in use, followed by Infrastructure as a Service (43%, up 4-pts) and On-Premises Private Cloud (34%, down 2-pts).

Level of Adoption. Respondents using cloud services were asked to describe the level of cloud adoption in their organizations. Overall adoption remains strong, with three in five respondents indicating broad or initial implementations of production applications. Broad Implementation of Production Applications (31%) is up 2-pts from the previous survey. Initial Implementations of Production Applications (31%) and Running Trials/Pilot Projects (15%) are both up 1-pt.

In contrast, Discovery and Evaluation (15%, down 2-pts) and Used for Test and Development Environments (9%) are down slightly.

Clouds Running Mission Critical Applications. Respondents were asked to describe the criticality of the majority of applications running on various cloud environments. More applications critical to business are running on On-Premises Private Cloud (70%) than on IaaS/Public Cloud (59%).

Cloud Vendors

This section looks at the vendors respondents are using across the different types of cloud.

On-Premises Private Cloud. The most popular vendor for on-premises private cloud is VMware vCloud (65%), with Cisco (33%) and Microsoft Cloud OS (30%) a more distant second and third.

IaaS. In terms of which vendors respondents are using to meet their IaaS needs, Amazon Web Services (56%) was the most popular choice, followed closely by Microsoft Azure

Key Vendor Attributes

Respondents were asked about the most important attributes they look for in a cloud provider. For on-premises private cloud vendors, Platform Reliability (66%) topped the list, followed by Value for Money/Cost (47%) and Technical Expertise (36%).

IaaS/Public Cloud Vendor Attributes. In a similar fashion, Service Reliability (60%) and Value for Money/Cost (55%) top the list, but Secure Services (45%) is third.

Cloud Usage Next Two Years

Looking ahead, respondents were asked to select which of the following best describes how their organization will use different on-premises and off-premises cloud environments over the next two years. Overall, 34% of respondents said they will focus primarily on a single cloud environment. Another 29% said they will have multiple cloud environments with little or no interoperability, and 25% said they will have multiple cloud environments that will migrate workloads or data between different cloud environments.

Company Size. A closer look at the 34% who selected single cloud environments shows clear differences by company size. That number is higher among smaller companies (40% for companies with 1-249 employees; 38% for companies with 250-999 employees) compared to just 26% among companies with 10,000+ employees.

Larger organizations favor multiple cloud environments. Among companies with 1,000 to 9,999 employees, 36% will have multiple clouds with little or no interoperability, while 29% of very large organizations (more than 10,000 employees) plan to migrate data between multiple cloud environments.

FCC seeks $10B in Spectrum Auction; Trump Team Plans to Restructure FCC Bureaus; Pai new FCC Chairman

FCC Forward Auction:

The Federal Communications Commission (FCC) now has a benchmark price of $10,054,676,822 for the forward portion of its wireless spectrum auction [1].

Note 1: In the reverse auction, TV broadcasters sold spectrum which is to be sold in the forward portion of the FCC auction. More at https://www.fcc.gov/general/learn-everything-about-reverse-auctions-now-learn-%E2%80%93-faqs

………………………………………………………………………………………………………

That’s a huge drop from the previous round. It appears a number of wireless operators might be able to meet the lowered benchmark price, especially if the average price in the top markets also meets the second condition for closing the auction successfully. It is a far cry from the $86 billion broadcaster asking price when the auction began, though that was for much more spectrum. One low-power TV advocate called it a “fire sale.”

The $10,054,676,822 is how much the government will have to pay to move broadcasters off 84 MHz of spectrum so it can offer it to forward auction bidders for wireless broadband. In practice, wireless companies will cover that cost if and when the auction finally closes for good. The figure is down from the $43 billion broadcasters wanted for 108 MHz in stage 3.

More info at: http://www.broadcastingcable.com/news/washington/spectrum-auction-tvs-new-exit-price-plummets-10b/162451

………………………………………………………………………………………………………

Trump Team Plots to Revamp FCC:

President-elect Donald Trump’s incoming administration reportedly has signed off on a plan to restructure and streamline the bureaus of the Federal Communications Commission.

“There is an opportunity now, not to be wasted, to make some fundamental changes in the FCC’s structure and the way it operates,” said Randolph May of the Free State Foundation.

To read more: http://www.broadcastingcable.com/news/washington/exclusive-trump-team-embraces-fcc-remake-blueprint/162479

Pai expected to be named FCC interim chairman:

Ajit Pai is expected to be named interim FCC chairman on Friday after Chairman Tom Wheeler’s departure, which coincides with Donald J. Trump’s presidential inauguration. White House spokesman Sean Spicer confirmed a meeting between Pai and President-elect Trump and referred to Mr. Pai as the current FCC chairman.

Pai is predicting a “more sober” regulatory approach after President-elect Donald Trump is sworn in, saying in a recent speech that the FCC will be “guided by evidence, sound economic analysis, and a good dose of humility” under its new leadership.

While undoing some of the actions taken by the FCC during the past eight years is likely to be the initial focus of the Trump administration in the coming months, Pai said that when attention turns to new regulations, the bar will be set high. “Proof of market failure should guide the next Commission’s consideration of new regulations,” he told the Free State Foundation. “And the FCC should only adopt a regulation if it determines that its benefits outweigh its costs.”

Having served as a Republican commissioner since 2012, Pai has won near-universal praise from broadcasters for his efforts to revitalize the AM band and his willingness to devote resources to radio-related issues. One Washington insider tells Inside Radio he believes the Commission (with Pai, fellow Republican Michael O’Rielly and Democrat Mignon Clyburn) could prove to be highly productive in the coming months. The first item up for a vote is doing away with a regulation requiring broadcasters to keep hard copies of emails and letters sent to the station in their public inspection file. The FCC is scheduled to vote on the change on Jan. 31.

AT&T CEO Stephenson Meets Trump With Time Warner Deal in Background

AT&T CEO Randall Stephenson recently met with President-elect Donald Trump at Trump Tower. AT&T’s proposed $85.4 billion acquisition of Time Warner was not a topic of discussion during the meeting, which centered primarily on job creation, wage increases and “the policies and the regulations that stand in the way of them creating further jobs,” said incoming White House press secretary Sean Spicer.

Even so, the Time Warner deal loomed large. “The No. 1 thing for AT&T right now is the merger, so it is obviously in the background and coloring every single one of these discussions,” said Gigi Sohn, a former senior adviser to Tom Wheeler, the chairman of the Federal Communications Commission. “The Trump people are smart enough to know that.”

The careful positioning of Thursday’s meeting illustrates the difficulties AT&T faces in pushing through the Time Warner deal. The acquisition, announced last October, is the first test for huge mergers in the new administration, which was voted in on a populist wave that is skeptical of such deals because of the job losses that often follow.

But beyond a barometer of antitrust, the deal is also being closely watched for Mr. Trump’s strong-arm approach to media — particularly to organizations whose coverage he dislikes. Mr. Trump repeatedly targeted CNN during the presidential campaign. This week, he criticized the network for reporting on a briefing by intelligence officials to the president and the president-elect about an unverified report linking him to a Russian campaign to influence the election.

The meeting between Mr. Trump and Mr. Stephenson, which included Bob Quinn, AT&T’s head of lobbying and regulatory affairs, appeared to do little to defuse tension. Right after the meeting, Mr. Trump took to Twitter to again attack CNN, which he said is in a “meltdown” and suffering from declining ratings.

The proposed AT&T-Time Warner deal also is set to face fewer regulatory eyes than originally thought. While the Justice Department is reviewing it, AT&T and Time Warner said last week that they did not plan to submit an application to the F.C.C. After reviewing the satellite and other telecommunications licenses for its television networks, Time Warner concluded they did not merit F.C.C. scrutiny, the company said in filings.

References: