Infonetics: Cloud is Top Priority for N. America Enterprise Network Spending

Infonetics Research released excerpts from its 2014 Network Equipment Spending and Vendor Leadership: North American Enterprise Survey, which explores businesses’ top networking initiatives, budget priorities and growth, investment drivers and barriers, and vendor preferences.

NETWORK EQUIPMENT SPENDING SURVEY HIGHLIGHTS:

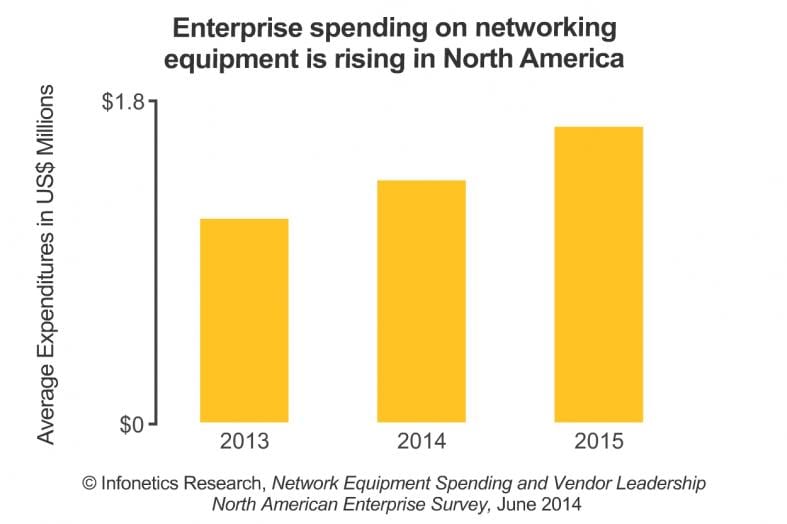

. Respondent companies spent, on average, over $1.1 million on networking equipment in 2013, and they expect to increase spending by 19% this year

. Wireless LAN (WLAN) budget allocations are on the rise among those surveyed, becoming the #2 investment area for 2014

. The economic outlook for North America is positive and companies are looking to capitalize on new opportunities, leading to an expenditure shift from headquarters to branch offices

. Around 3/4 of respondents consider Cisco the top network equipment vendor, an even better showing than in Infonetics’ survey last year

. Juniper has made major gains from last year, moving up 3 positions in respondent ratings
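To put the expected 19% increase in dollar terms, the short Python sketch below applies it to the 2013 average. It treats "over $1.1 million" as exactly $1.1 million, so the result is a floor, not a figure reported by the survey.

```python
# Rough 2014 projection from the survey's rounded averages.
# Assumption: "over $1.1 million" is treated as exactly $1.1M, so this is a floor.
SPEND_2013_USD = 1.1e6     # average 2013 networking-equipment spend per respondent
EXPECTED_GROWTH = 0.19     # expected 2014 increase reported by respondents

spend_2014 = SPEND_2013_USD * (1 + EXPECTED_GROWTH)
increase = spend_2014 - SPEND_2013_USD

print(f"Implied 2014 average spend: ${spend_2014 / 1e6:.2f}M (+${increase / 1e3:.0f}K)")
```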

ANALYST NOTE:

“Our just-finished survey on network equipment spending indicates the outlook for network equipment is healthy. Enterprises are expecting double-digit growth in their expenditures this year and next. How they are spending their budgets is changing, and allocations towards wireless LAN, network monitoring, and switches are growing. Greater spending on branch office infrastructure is also anticipated, a sign of confidence in continued economic expansion,” notes Matthias Machowinski, directing analyst for enterprise networks and video at Infonetics Research.

Machowinski adds: “One of the most interesting findings is that cloud has emerged as the number-1 networking initiative over the next 12 months. Companies are embracing the cloud in a services model as well as building their own cloud-architected data centers, and this means upgrades to network infrastructure.”

Author NOTE:

For several years, the issue of a standardized cloud network interface and architecture has been neglected. The alternatives include: public Internet, private line [to cloud service provider (CSP) Points of Presence (PoPs)], IP MPLS VPN, Ethernet for Private Cloud (a MEF initiative), and hybrids/combinations of the above. Each CSP offers a different set of interfaces and architectures, often via third parties. It’s amazing that this issue hasn’t drawn more attention from the official standards bodies. (IEEE started an initiative in this area in 2011, held one meeting, and then went dark, with no notice given to the meeting attendees, including this author.)

ABOUT THE SURVEY:

For its 27-page network equipment spending survey, Infonetics interviewed 207 North American organizations that have at least 100 employees about their networking equipment, plans, and purchase considerations over the next two years. The survey provides insights into networking initiatives, budget priorities and growth, investment drivers and barriers, and vendor preferences.

Vendors named in the survey include Alcatel-Lucent, Apple, Aruba, Avaya, Brocade, Cisco, D-Link, Dell, Extreme/Enterasys, HP, Huawei, IBM, Juniper, Linksys, Microsoft, Motorola, Oracle, Netgear, and others.

To buy the report, contact Infonetics: www.infonetics.com/contact.asp

More info at: http://www.infonetics.com/pr/2014/Enterprise-Network-Equipment-Spending-Survey-Highlights.asp

Public Cloud Services growing at 23%:

Separately, global spending on public cloud services reached US$45.7 billion last year and will experience a 23 percent compound annual growth rate through 2018, according to analyst firm IDC.

Some 86 percent of the 2013 total came from cloud software, which encompasses both SaaS (software as a service) applications and PaaS (platform as a service) offerings, with the remaining 14 percent generated by cloud infrastructure, IDC said on July 7th.

IDC ranked Amazon.com first in the PaaS market, with Salesforce.com and Microsoft tied for second place, followed by GXS and Google. Amazon was also No. 1 in the infrastructure category, followed by Rackspace, IBM, CenturyLink and Microsoft.

Geographically, the U.S. accounts for 68 percent of the overall public cloud market, but this figure will fall to 59 percent by 2018 as Western Europe’s take rises from 19 percent to 23 percent and growth picks up in emerging markets, IDC said.
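IDC's 2018 dollar forecast is not quoted here, but the 2013 total, the 23% CAGR and the regional shares imply one. The Python sketch below compounds the total over five years (2013 to 2018, an assumption about how "through 2018" is counted) and applies the quoted shares. The outputs are derived estimates, not IDC figures.

```python
# Project IDC's 2013 public cloud total forward at the quoted 23% CAGR.
# Assumption: five full compounding years (2013 -> 2018).
TOTAL_2013_BN = 45.7   # US$ billions, IDC
CAGR = 0.23
YEARS = 2018 - 2013

total_2018_bn = TOTAL_2013_BN * (1 + CAGR) ** YEARS

# Regional shares quoted by IDC: (2013 share, 2018 share).
REGION_SHARES = {"U.S.": (0.68, 0.59), "Western Europe": (0.19, 0.23)}

print(f"Implied 2018 total: ${total_2018_bn:.1f}B")
for region, (share_2013, share_2018) in REGION_SHARES.items():
    start = TOTAL_2013_BN * share_2013
    end = total_2018_bn * share_2018
    print(f"{region}: ${start:.1f}B (2013) -> ${end:.1f}B (2018)")
```

Under these assumptions, U.S. spending in dollars still more than doubles even as its share of the market falls from 68% to 59%.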

“We are at a pivotal time in the battle for leadership and innovation in the cloud. IDC’s Public Cloud Services Tracker shows very rapid growth in customer cloud service spending across 19 product categories and within eight geographic regions. Not coincidentally, we see vendors introducing many new cloud offerings and slashing cloud pricing in order to capture market share. Market share leadership will certainly be up for grabs over the next 2-3 years,” said Frank Gens, Senior Vice President and Chief Analyst at IDC.

The SaaS market – accounting for 72% of the total public cloud services market and forecast to grow at a 20% CAGR over the forecast period – is dominated by Enterprise Applications cloud solutions such as enterprise resource management (ERM) and customer relationship management (CRM), followed by Collaborative applications. System Infrastructure Software cloud solutions – the other major part of the SaaS market, including Security, Systems Management, and Storage Management cloud services – drove 21% of the 2013 SaaS market. From a competitive perspective, the SaaS service provider ecosystem is largely led by Salesforce.com followed by ADP and Intuit. Traditional software vendors Oracle and Microsoft hold the 4th and 5th positions, respectively.

The PaaS market – accounting for 14% of the market in 2013 with a forecast CAGR of 27% – is composed of a wide variety of highly strategic cloud app development, deployment, and management services. In 2013 and 2014, PaaS spending has been largely driven by Integration and Process Automation solutions, Data Management solutions, and Application Server Middleware services. From a market share standpoint, the 2013 PaaS market was led by Amazon.com, followed by Salesforce.com and Microsoft (both share the number 2 position). GXS and Google hold the 4th and 5th positions, respectively.
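IDC gives growth rates for SaaS (20%) and PaaS (27%), but the excerpts quoted here give none for cloud infrastructure, the remaining 14% of 2013 spending. The Python sketch below backs out the infrastructure growth rate that would reconcile the segment forecasts with the 23% overall CAGR. It is our derived estimate from IDC's rounded figures (five compounding years assumed), not an IDC forecast.

```python
# Implied segment sizes from IDC's quoted shares and CAGRs (2013 -> 2018).
# Assumptions: five compounding years; shares and CAGRs are IDC's rounded figures.
TOTAL_2013_BN = 45.7
TOTAL_CAGR = 0.23
YEARS = 5

# segment: (share of 2013 total, forecast CAGR)
SEGMENTS = {"SaaS": (0.72, 0.20), "PaaS": (0.14, 0.27)}

total_2018_bn = TOTAL_2013_BN * (1 + TOTAL_CAGR) ** YEARS
projected_2018 = {
    name: TOTAL_2013_BN * share * (1 + cagr) ** YEARS
    for name, (share, cagr) in SEGMENTS.items()
}

# Cloud infrastructure is the residual share; its implied CAGR is whatever
# reconciles the segment forecasts with the 23% overall rate.
iaas_2013_bn = TOTAL_2013_BN * (1 - sum(share for share, _ in SEGMENTS.values()))
iaas_2018_bn = total_2018_bn - sum(projected_2018.values())
iaas_cagr = (iaas_2018_bn / iaas_2013_bn) ** (1 / YEARS) - 1

for name, value in projected_2018.items():
    print(f"{name}: ${value:.1f}B in 2018")
print(f"Infrastructure (residual): ${iaas_2013_bn:.1f}B -> ${iaas_2018_bn:.1f}B, "
      f"implied CAGR {iaas_cagr:.0%}")
```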

More info at: http://www.idc.com/getdoc.jsp?containerId=prUS24977214

Qualcomm buys Wilocity to accelerate high speed WiFi chip development

Qualcomm announced on July 2nd that it had acquired Wilocity, a developer of 802.11ad WiGig chips. The companies previously collaborated on WiGig technology, a faster version of Wi-Fi. Qualcomm is integrating the Wilocity technology into its Snapdragon 810 chipsets for mobile devices, hoping to gain a competitive advantage against Broadcom. Qualcomm had previously purchased Atheros Communications in 2011 to get into the WiFi chip business.

Wilocity’s fast gigabit wireless-data capabilities will be included in sample products being shipped to customers, Qualcomm said in a statement. The company didn’t disclose the terms of its purchase of the startup, which is based in Caesarea, Israel.

SoC devices capable of the new higher-speed 802.11ad (WiGig) standard will go on sale next year, said Amir Faintuch, president of Qualcomm’s Atheros division, which has been an investor in Wilocity since 2008.

Sarah Reedy of Light Reading wrote: “Qualcomm is integrating super-fast 60GHz WiFi into its Snapdragon 810 chipsets, a move that it says will advance the entire WiFi ecosystem now that its acquisition of multi-gigabit wireless leader Wilocity is complete.”

“The tri-band reference design based on the Qualcomm Snapdragon 810 will enable 4K video streaming from the phone to the TV in the home, peer-to-peer content sharing, networking, wireless docking, and instantaneous cloud access.”

Companies like Cisco, Dell, Microsoft, and others plan to use the new integrated chipsets first in the connected home and enterprise. Cormac Conroy, Qualcomm’s VP of product management and engineering, says to expect the Snapdragon 810 platform to start shipping in smartphones and tablets in the second half of the year. He also calls small cells from companies like Cisco a “natural fit,” but those are further down the pipeline.

60GHz is an in-area technology: while it doesn’t necessarily require line of sight, it cannot penetrate walls. It’s ideal for open spaces of any size but can’t cover a whole home on its own. Qualcomm says its tri-band WiFi chips will combine the multi-gigabit performance of 802.11ad in the 60GHz band with 802.11ac in the 5GHz band and 802.11b/g/n in the 2.4GHz band, handing off between them so that connectivity is maintained everywhere.

“We think this is an important step in our overall vision and mission to deliver high-speed wireless connectivity, mobile computing, and networking,” Conroy says. “This announcement is not just ‘Qualcomm acquires a company.’ The message is Qualcomm and a number of leading partners think 60GHz will be a very important technology across multiple use cases.”

Read more at:

http://www.lightreading.com/components/comms-chips/qualcomm-advances-wig…

Highlights of Infonetics Global Telecom and Datacom Market Trends and Drivers report

Infonetics Research recently released excerpts from its latest Global Telecom and Datacom Market Trends and Drivers report, which analyzes global and regional market trends and conditions.

TELECOM AND DATACOM MARKET TRENDS:

. The International Monetary Fund (IMF) anticipates the world economy will expand 3.6% in 2014 (up 0.6 percentage points from 2013) amid recoveries in the UK and Germany and slowing growth in Japan, Russia, Brazil, and South Africa

. Mobile service revenue remains the main telecom/datacom growth engine worldwide, led by the unabated rise of mobile broadband

. To avoid falling into the role of pipe provider, many service providers are deploying or weighing new architectural options such as caching/content delivery networks, distributed BRAS/BNG, next-gen central offices, distributed mini data centers, and video optimization

. Software-defined networks (SDNs) and network functions virtualization (NFV) have the attention of nearly all service providers, who are on the long road to widespread deployments

. Big data is becoming more manageable: Operators are leveraging subscriber and network intelligence to support marketing and loyalty strategies, churn management, and automation/optimization of networks using SDN and NFV

. The cloud, mobility, BYOD, and virtualization are the top trends driving enterprise networking and communication technology spending, with North America leading the way

REPORT SYNOPSIS:

Infonetics’ market drivers report is published twice annually to provide analysis of global and regional market trends and conditions affecting service providers, enterprises, subscribers, and the global economy. The report assesses the state of the telecom industry, telling the story of what’s going on now and what’s expected in the near and long term, including spending trends, subscriber forecasts, macroeconomic drivers, and key economic statistics (e.g., unemployment, OECD indicators, GDP growth). The 44-page report is illustrated with charts, graphs, tables, and written analysis.

“Expect a slowdown in the Americas, but for a change, Europe will be in the telecom capex driver’s seat this year!” says Stéphane Téral, principal analyst for mobile infrastructure and carrier economics at Infonetics Research. “We’re forecasting global carrier capex to rise 4%, with EMEA as the growth engine despite unabated low-single-digit revenue declines all across Europe. After waiting for so many years to upgrade their networks, Europe’s ‘Big 5’ (Deutsche Telekom, Orange, Telecom Italia, Telefónica, and Vodafone) have decided it’s time to take the plunge.”

Co-author of the report Matthias Machowinski, Infonetics’ directing analyst for enterprise networks, adds: “Economic expansion in mature economies and falling unemployment in Europe are driving stronger growth in enterprise telecom and datacom expenditures this year. We expect the network infrastructure segment to be the main beneficiary of growing investments, followed by security. The communication segment will likely have another challenging year, as companies evaluate their deployment strategy going forward.”

To buy the report, contact Infonetics: www.infonetics.com/contact.asp

FCC Reveals DSL Speeds Lagging Cable/Fiber: Speeds Are Inconsistent and Often Below Advertised; AT&T U-Verse Speed Report

A new FCC report shows that while many U.S. Internet service providers (ISPs) increased performance between 2012 and 2013, not all of them delivered the speeds they advertised. The FCC report found that the average speed for subscribers is now 21.2 megabits per second (Mb/s), up roughly 36% from the 2012 average. Aside from CenturyLink, the DSL providers showed little or no improvement in broadband speeds.

Note: I have had AT&T U-Verse for 2 years, during which time the speeds have not improved. My June 20, 2014 test results were 12.69 Mb/s downstream and 1.93 Mb/s upstream: no change within the last 2 years, but above the advertised speed range for the access tier I subscribe to:

AT&T U-verse High Speed Internet Max: 6.1 Mb/sec – 12.0 Mb/sec

http://www.att.net/speedtiers

For 10 years prior to U-Verse, I had Pac Bell/SBC/AT&T DSL, which topped out at 3 Mb/s downstream and was NOT nearly as reliable as U-Verse Internet access. Both ran over the same old twisted-pair copper going into my home.

Upload speeds varied greatly among broadband providers. According to the report, most consumers download far more data than they upload. Frontier and Verizon, which market fiber-based services, offered upload speeds as fast as 25 Mbps and 35 Mbps, respectively; no other provider offered upload speeds of more than 10 Mbps. (AT&T doesn’t advertise upload speed ranges for U-Verse on its website.)

The FCC plans to write to the CEOs of the DSL providers and other companies that failed to consistently meet their advertised speeds, to find out why. An agency official singled out Windstream for having among the worst performance.

Consistency of speeds is also a problem, as some ISPs delivered approximately 60 percent of the speeds they promised 80 percent of the time to 80 percent of customers, according to the report. This is the first time the commission measured speed consistency in its annual report on the topic, and the results concerned FCC Chairman Tom Wheeler. “Consumers deserve to get what they pay for,” Wheeler said in a statement announcing the report Wednesday. “I’ve directed FCC staff to write to the underperforming companies to ask why this happened and what they will do to solve this.”

Please refer to this chart showing consistent download speed vs advertised speed 80% of time/80% of subscribers. All major U.S. Internet Service Providers (ISPs) are included:

The FCC report states that “Cablevision delivered 100% or better of advertised speed to 80% of our panelists 80% of the time during peak periods, and about half the ISPs delivered less than about 90% or better of the advertised speed for 80/80. However about one-third of the ISPs delivered only 60 percent or better of advertised speeds 80 percent of the time to 80 percent of the consumers.” This is a metric that the FCC expects ISPs to improve upon over the course of the next year. Do you think that will occur?
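The 80/80 metric is easiest to see in code. The FCC's full methodology (peak-hour window, test scheduling, panelist weighting) is not described in this post, so the Python sketch below captures only the core idea as we read it: first find, for each panelist, the speed met or exceeded in 80% of that panelist's tests; then find the percentage of advertised speed met or exceeded by 80% of panelists. The function names and test samples are hypothetical.

```python
from typing import Sequence


def level_met(values: Sequence[float], met_pct: int = 80) -> float:
    """Largest value v such that at least met_pct% of `values` are >= v."""
    ordered = sorted(values)
    # Up to this many samples may fall below v while still meeting met_pct%.
    allowed_below = (len(ordered) * (100 - met_pct)) // 100
    return ordered[allowed_below]


def consistent_speed_80_80(panelists: Sequence[Sequence[float]], advertised_mbps: float) -> float:
    """Percent of advertised speed delivered to 80% of panelists 80% of the time."""
    # Step 1: for each panelist, the speed met or exceeded in 80% of their tests.
    per_panelist_pct = [
        level_met(samples) / advertised_mbps * 100 for samples in panelists
    ]
    # Step 2: the percentage of advertised speed met or exceeded by 80% of panelists.
    return level_met(per_panelist_pct)


# Hypothetical peak-hour download tests (Mb/s) for four panelists on a 20 Mb/s tier.
samples = [
    [21, 20, 19, 22, 20],
    [18, 17, 19, 16, 18],
    [12, 20, 21, 20, 19],
    [10, 11, 12, 12, 11],
]
result = consistent_speed_80_80(samples, advertised_mbps=20.0)
print(f"80/80 consistent speed: {result:.0f}% of advertised")
```

In this made-up example, one panelist whose line rarely exceeds 11 Mb/s pulls the 80/80 figure down to 55%, even though the other three get 85% or more; with only four panelists, "80% of panelists" effectively means all of them. Real panels are far larger, so a few outliers weigh less.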

Those ISPs using DSL technology show little or no improvement in maximum speeds, with the sole exception of Qwest/CenturyLink, which this past year doubled its highest download speed within specific market areas. The reason may be that DSL, unlike cable and fiber technologies, is strongly dependent on the length of the copper wire (or “loop”) from the residence to the service provider’s terminating electronic equipment. Obtaining higher data speeds would therefore require companies to make significant capital investments across a market area to shorten the copper loops. By contrast, both fiber and cable technologies intrinsically support higher bandwidths and can reach even higher speeds with further incremental investment.

The FCC’s primary conclusions from this study:

1. Many ISPs now closely meet or exceed the speeds they advertise, but there continues to be room for improvement.

2. New metric this year – Consistency of speeds – also shows significant room for improvement.

3. Consumers are continuing to migrate to faster speed tiers.

4. Improvements in speed are not uniform across the speed tiers tested.

5. There were sharp differences in upload speeds.

The FCC report says that increasing consumer demand for broadband access is causing congestion on networks due to video streaming services like Netflix. As that demand increases, companies using digital subscriber line (DSL) service (based on copper wires) cannot compete with the growing speeds of cable and fiber Internet. An official said the FCC plans to act on that issue later this year after obtaining more information.

Providers using fiber-based broadband connected directly to consumers’ households delivered 113 percent of advertised download speeds and 114 percent of advertised upload speeds, making fiber the best-performing option available. But telecom companies have not invested in expanding fiber-optic networks, which is the only way to compete with the Internet speeds offered by cable companies, says Harold Feld, senior vice president of consumer advocacy group Public Knowledge. “We are ultimately heading to a cable monopoly because on the technology side the other technologies cannot keep up,” Feld says, citing arguments made by Susan Crawford, a former tech policy adviser for the Obama administration who is now a visiting professor at Harvard Law School. “We see people want faster speeds, and that DSL as a technology cannot keep up unless companies make very significant investments.”

This study may help the case for AT&T to purchase DirecTV, as the telecom giant has argued it needs money to upgrade its DSL network. Comcast networks performed well in the study, which may potentially weaken its argument that its customers need more Internet services through the purchase of Time Warner Cable.

References:

FCC Report

http://data.fcc.gov/download/measuring-broadband-america/2014/2014-Fixed…

FCC Charts – Measuring Broadband America

http://www.fcc.gov/measuring-broadband-america/charts

Articles

http://imarketreports.com/fcc-finds-dsl-speeds-arent-keeping-up-with-cab…

http://online.wsj.com/articles/fcc-report-on-broadband-speeds-dsl-lags-b…

http://www.usnews.com/news/blogs/data-mine/2014/06/19/fcc-reveals-incons…

Comment from Ken Pyle (viodi.com):

I find it interesting that at least two cable companies announced major increases in their speeds this week.

Comment from IEEE Discussion Group Member George Ginis (3 points):

Infonetics: Ethernet/IP MPLS VPN services reached $62.6B in 2013; Security Services to be Impacted by SDN/NFV

Infonetics Research released excerpts from its 2014 Ethernet and IP MPLS VPN Services report, which analyzes the market for wholesale and retail Ethernet services and managed and unmanaged layer 2 and layer 3 IP MPLS VPN services.

Worldwide revenue from Ethernet and IP MPLS VPN services last year totaled $62.6 billion, a 12% improvement from 2012, according to Infonetics. The market research firm credited cloud-based services for the increase. “Both segments are growing at a healthy clip and will continue to do so, with Ethernet services growing about twice as fast as IP MPLS VPNs through 2017,” said Michael Howard of Infonetics. See below for more quotes from Mr. Howard.

ETHERNET AND IP MPLS VPN MARKET HIGHLIGHTS

. The combined global Ethernet services and IP MPLS VPN services markets totaled $62.6 billion in 2013, up 12% from the year prior

. Keeping the momentum going are cloud services accessed via IP VPNs, Ethernet services, and mobile backhaul transport over Ethernet services

. Revenue from Ethernet services delivered on 10GE and 100GE is forecast by Infonetics to grow 300% between 2013 and 2018

. In 2013, Asia Pacific made up the biggest share of Ethernet services revenue and will continue to do so through 2018
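Two of the headline figures can be unpacked with simple arithmetic. The Python sketch below backs out the 2012 base from the 12% growth, and converts the 10GE/100GE forecast into an annual rate. It reads "grow 300%" as an increase of 300% (a 4x multiple, about 32% a year); if Infonetics meant 3x, the implied rate would be closer to 25% a year. Both outputs are derived estimates, not Infonetics figures.

```python
# Back out the 2012 base from the 2013 total and its 12% growth.
TOTAL_2013_BN = 62.6        # US$ billions, Ethernet + IP MPLS VPN services
GROWTH_2013 = 0.12
total_2012_bn = TOTAL_2013_BN / (1 + GROWTH_2013)

# Assumption: "grow 300%" means the 2018 value is 4x the 2013 value (1 + 3.00).
MULTIPLE = 1 + 3.00
YEARS = 2018 - 2013
implied_cagr = MULTIPLE ** (1 / YEARS) - 1

print(f"Implied 2012 total: ${total_2012_bn:.1f}B")
print(f"Implied 10GE/100GE services CAGR, 2013-2018: {implied_cagr:.1%}")
```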

“Ethernet services continued to gain momentum in 2013, easily outpacing IP MPLS VPN services. Both segments are growing at a healthy clip and will continue to do so, with Ethernet services growing about twice as fast as IP MPLS VPNs through 2017,” reports Michael Howard, principal analyst for carrier networks and co-founder of Infonetics Research.

Howard adds: “Software-defined networking (SDN) and network functions virtualization (NFV) technologies will change the way service providers operate their networks and, more important, how they deliver services. The biggest change will come in the types of security services offered over IP MPLS VPNs and Ethernet services (for example, firewalls, SSL VPNs, intrusion detection, and parental controls) and the pace at which they’re made available.”

From a related Infonetics report excerpt: “Historically, data centers have been protected by big-iron security solutions and complex webs of security appliances and load-balancing infrastructure,” says Jeff Wilson, principal analyst for security at Infonetics Research. “But as more providers virtualize their data centers and roll out SDNs and NFV, we anticipate a fairly significant revenue transition from hardware appliances to virtual appliances and purpose-built security solutions that interface directly with hypervisors, with SDN controllers via APIs, or orchestration platforms.”

Read more at: http://www.infonetics.com/pr/2014/2H13-Data-Center-Security-Products-Market-Highlights.asp and

http://www.infonetics.com/pr/2014/Ethernet-and-IP-MPLS-VPN-Services-Mark…

REPORT SYNOPSIS

Infonetics’ annual IP MPLS VPN and Ethernet services report provides market size, forecasts through 2018, analysis, and trends for wholesale and retail Ethernet services (Internet and WAN access, E-LINE, E-LAN services) by speed, and managed and unmanaged layer 2 and layer 3 IP MPLS VPN services. Data is presented by country (U.S., Canada) and region: North America, EMEA (Europe, Middle East, Africa), Asia Pacific, CALA (Caribbean, Latin America) and worldwide.

More info at: http://www.infonetics.com/pr/2014/Ethernet-and-IP-MPLS-VPN-Services-Market-Highlights.asp

To buy report, contact Infonetics: www.infonetics.com/contact.asp

NFV WEBINAR

Join Michael Howard June 19 at 11:00 AM EDT for NFV: An Easier Initial Target Than SDN?, a free, live event for operators exploring use cases, lessons learned, and recommendations for developing NFV projects. Attend live or access the replay at:

http://w.on24.com/r.htm?e=793989&s=1&k=BA88F4D4A551DA1913530B000C135707

AT&T begins GigaPower rollout in Winston-Salem & Durham, NC; Future expansion plans & rollout procedures

AT&T’s GigaPower, which competes with Google Fiber, is currently available in Austin, TX. This Tuesday, AT&T received approval from the city of Winston-Salem, N.C., to begin building out its FTTH-based GigaPower service. That comes two months after AT&T announced it was in talks with the North Carolina Next Generation Network to bring high-speed fiber to the state. Winston-Salem is one of six cities in North Carolina expected to receive AT&T’s GigaPower service. Another is Durham, NC, which was announced today: http://about.att.com/story/att_uverse_with_gigapower_fiber_network_coming_to_durham.html

The company expects GigaPower construction and deployment to begin in other communities this year. Metro areas being explored for GigaPower include: Atlanta, Augusta, Charlotte, Chicago, Cleveland, Dallas, Fort Lauderdale, Fort Worth, Greensboro, Houston, Jacksonville, Kansas City, Los Angeles, Miami, Nashville, Oakland, Orlando, Raleigh-Durham, San Antonio, San Diego, San Francisco, San Jose/Santa Clara, and St. Louis.

This blog post from Eric Small, AT&T Vice President of Fiber Broadband Planning, explains some of the more technical aspects of how the company is rolling out GigaPower: http://blogs.att.net/consumerblog/story/a7791741

Technical Outline of GigaPower Process:

· U-verse with GigaPower enables residents who sign up to enjoy speeds of up to 300 megabits per second (Mb/s)

o Within the next year, AT&T plans to boost speeds to up to 1 gigabit per second (Gb/s)

· Traditionally, U-verse uses a fiber network connection that starts at AT&T’s central switching office. Fiber cable from the office runs to a VRAD equipment box in the neighborhood, which then uses existing copper wires to transmit U-verse signals to homes

o GigaPower bypasses the VRAD, using a passive optical splitter to run fiber directly into homes

· Armored fiber ‘drop’ cable connects the customer home to the network

o Drop cable connects to the Optical Network Terminal (ONT) outside of the home. A CAT5e or CAT6 cable connects the ONT to the U-verse residential gateway inside the home.

o Cable splicing is done in the factory instead of in the field, saving time and money and enabling a higher-quality connection
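A passive optical splitter is what lets one feeder fiber serve many homes. As a rough illustration, the Python sketch below computes two properties of an ideal 1:N splitter: its power-division loss, 10·log10(N) dB, and the average downstream capacity per home if every home on the split were busy at once. The 2.488 Gb/s line rate (the GPON downstream rate) and the 1:32 split are assumptions for illustration only; neither this post nor the AT&T material quoted here says which PON technology or split ratio GigaPower uses. The sketch also converts tier speeds from megabits to megabytes per second, since broadband tiers are quoted in bits.

```python
import math

# Assumptions for illustration only (not stated by AT&T):
PON_DOWNSTREAM_GBPS = 2.488   # GPON downstream line rate (ITU-T G.984)
SPLIT_RATIO = 32              # hypothetical 1:32 passive split


def ideal_split_loss_db(ways: int) -> float:
    """Power-division loss of an ideal 1:N splitter (excess device loss ignored)."""
    return 10 * math.log10(ways)


def worst_case_share_mbps(line_rate_gbps: float, ways: int) -> float:
    """Average downstream capacity per home if every home on the split is busy at once."""
    return line_rate_gbps * 1000 / ways


def mbps_to_mbytes_per_s(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


print(f"1:{SPLIT_RATIO} ideal split loss: {ideal_split_loss_db(SPLIT_RATIO):.1f} dB")
print(f"Per-home share, all homes busy: "
      f"{worst_case_share_mbps(PON_DOWNSTREAM_GBPS, SPLIT_RATIO):.1f} Mb/s")
for tier_mbps in (300, 1000):
    print(f"{tier_mbps} Mb/s tier = {mbps_to_mbytes_per_s(tier_mbps):.1f} MB/s")
```

The all-busy share (under 80 Mb/s with these assumptions) is well below a 300 Mb/s tier. Shared PONs rely on traffic being bursty, so homes rarely all draw full speed at the same moment; a different PON technology or split ratio would change both numbers.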

Reference:

More information on GigaPower can be found at: http://about.att.com/mediakit/gigapower

Addendum:

As part of its proposed acquisition of DirecTV, AT&T told the FCC and Justice Department:

“AT&T commits to deploying high-speed broadband to 15 million new customers as part of the transaction, but only two million will receive high-speed fiber connections.”

AT&T said its U-Verse product, which supplies high-speed broadband and pay-TV services to almost six million customers, could at best cover only a quarter of the nation’s TV households.

http://online.wsj.com/news/articles/SB2000142405270230364270457961871127…

Infonetics: Microwave equipment market slides 17% sequentially in 1Q14 (10% according to Dell’Oro Group)

Infonetics Research released excerpts from its 1st quarter 2014 (1Q14) Microwave Equipment report, which tracks time-division multiplexing (TDM), Ethernet, and dual Ethernet/TDM microwave equipment.

MICROWAVE EQUIPMENT MARKET HIGHLIGHTS:

. Microwave equipment revenue totaled $1 billion worldwide in 1Q14, down 17% sequentially, and down 7% from the year-ago quarter

. Revenue for every microwave product segment (TDM, dual Ethernet/TDM, Ethernet, access, backhaul, and transport) declined in 1Q14 from 4Q13

. Backhaul continues to dominate the microwave market, while access and transport remain stable niche segments

. Ericsson held steady atop the microwave equipment revenue share leaderboard in 1Q14, while NEC leapfrogged Huawei to claim the #2 spot

. By 2018, the average revenue per unit (ARPU) for Ethernet-only microwave gear is anticipated to fall to around half its 2013 value
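The percentage changes above imply approximate revenue for the comparison quarters, and the ARPU forecast implies an annual rate of price erosion. The Python sketch below backs these out. Because "$1 billion" is a rounded figure, the derived values carry that rounding; the five-year span (2013 to 2018) for the ARPU decline is our reading of the forecast.

```python
# Back out prior-quarter revenue from Infonetics' rounded 1Q14 figures.
REV_1Q14_BN = 1.0        # "$1 billion" worldwide (rounded)
SEQ_CHANGE = -0.17       # 1Q14 vs 4Q13
YOY_CHANGE = -0.07       # 1Q14 vs 1Q13

rev_4q13_bn = REV_1Q14_BN / (1 + SEQ_CHANGE)
rev_1q13_bn = REV_1Q14_BN / (1 + YOY_CHANGE)

# Ethernet-only ARPU falling to about half of its 2013 value by 2018
# implies this average annual rate of change over five years.
arpu_annual_change = 0.5 ** (1 / 5) - 1

print(f"Implied 4Q13 revenue: ${rev_4q13_bn:.2f}B")
print(f"Implied 1Q13 revenue: ${rev_1q13_bn:.2f}B")
print(f"Implied Ethernet-only ARPU change per year: {arpu_annual_change:.1%}")
```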

MICROWAVE REPORT SYNOPSIS:

Infonetics’ quarterly microwave equipment report provides worldwide and regional market size, vendor market share, forecasts through 2018, analysis, and trends for Ethernet, TDM, and hybrid microwave equipment by spectrum, capacity, form factor, architecture, and line of sight. Vendors tracked: Alcatel-Lucent, Aviat Networks, Ceragon, DragonWave, Ericsson, Exalt, Huawei, Intracom, NEC, SIAE, ZTE, and others.

ANALYST NOTE:

“The proliferation of LTE-A upgrades and small cell deployments was not enough to stop the microwave equipment market from sliding downward in the first quarter of 2014, a casualty of continuing pricing pressures and inter-technology competition with wireline backhaul alternatives, particularly fiber-based solutions,” notes Richard Webb, directing analyst for mobile backhaul and small cells at Infonetics Research. “The seasonal decline was much more severe than usual, suggesting a deeper malaise in the market.”

Webb adds: “Even with the arrival of 5G towards 2018, the microwave backhaul market may be arriving at the limit of demand for increasing backhaul capacity from the cell site, as many will be more than adequately future-proofed by this point.”

To buy the report, contact Infonetics: http://www.infonetics.com/contact.asp

RELATED REPORT EXCERPTS:

. China’s huge LTE rollouts and EU network upgrades push carrier capex to $354B this year

. China Mobile increases capital intensity 6% year-over-year

. Carriers going gangbusters with WiFi and Hotspot 2.0

. Small cell market on track to increase 65% this year

. Alcatel-Lucent, Huawei and Cisco get top marks from operators in mobile backhaul survey

According to Dell’Oro Group, the point-to-point Microwave Transmission equipment market declined 10 percent during the trailing four-quarter period ending in the first quarter of 2014.

“Demand for microwave transmission equipment has been under pressure for quite some time with little relief in the first quarter of 2014,” said Jimmy Yu, Vice President of Microwave Transmission research at Dell’Oro Group. “We think things will improve as we enter the second half of the year, but this is largely dependent on improving conditions in two of the primary microwave market regions, Europe and India. On a brighter note, packet microwave sales achieved another quarter of positive growth. We estimate that for the trailing four quarter period ending in 1Q14, packet microwave revenue grew 13 percent year-over-year,” Yu added.

In line with market demand, nearly all major microwave vendors experienced a year-over-year revenue decline. Among the top vendors, Alcatel-Lucent’s microwave revenues fared the best, growing slightly above zero percent thanks to its strong market position in the Packet Microwave segment.

Microwave Transmission Market: $3.7 billion for trailing four-quarter period (2Q13 through 1Q14)

| Manufacturer | Rank | Growth Y/Y |
|---|---|---|
| Ericsson | 1 | -6% |
| Huawei | 2 | -15% |
| Alcatel-Lucent | 3 | +0% |
| NEC | 4 | -2% |
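Because the overall market fell 10%, a vendor whose revenue fell by less than that still gained share. The Python sketch below makes this explicit using only the market total and the vendor growth rates listed above (new share / old share = (1 + vendor growth) / (1 + market growth)); vendor revenue figures are not given here. Alcatel-Lucent's "+0%" is treated as exactly zero.

```python
# Relative share movement in the trailing four quarters ending 1Q14.
MARKET_TTM_BN = 3.7
MARKET_GROWTH = -0.10
VENDOR_GROWTH = {"Ericsson": -0.06, "Huawei": -0.15, "Alcatel-Lucent": 0.0, "NEC": -0.02}

# Implied market size for the prior trailing four quarters (2Q12 through 1Q13).
prior_market_ttm_bn = MARKET_TTM_BN / (1 + MARKET_GROWTH)

# A vendor's new share / old share = (1 + vendor growth) / (1 + market growth).
share_change = {
    vendor: (1 + growth) / (1 + MARKET_GROWTH) - 1
    for vendor, growth in VENDOR_GROWTH.items()
}

print(f"Implied prior-period market: ${prior_market_ttm_bn:.2f}B")
for vendor, change in share_change.items():
    print(f"{vendor}: relative share change {change:+.1%}")
```

Of the four vendors listed, only Huawei, whose revenue fell faster than the market, loses share under this calculation.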

The Dell’Oro Group Microwave Transmission Quarterly Report offers complete, in-depth coverage of the market with tables covering manufacturers’ revenue, ports/radio transceivers shipped, and average selling prices by capacity (low, high, and ultra high). The report tracks point-to-point TDM, Packet, and Hybrid Microwave as well as full indoor and full outdoor unit configurations. To purchase this report, contact Julie Learmond-Criqui at Dell’Oro Group.

For more information, contact Dell’Oro Group at +1.650.622.9400 or visit www.DellOro.com.

Infonetics: Global Carrier Router & Switch Market down 13% in quarter; Up only 2% YoY

Infonetics Research released vendor market share and analysis from its 1st quarter 2014 (1Q14) Service Provider Routers and Switches report.

CARRIER ROUTER AND SWITCH MARKET HIGHLIGHTS:

. The global carrier router and switch market, including IP edge and core routers and carrier Ethernet switches (CES), totaled $3.2 billion in 1Q14, down 13% from 4Q13, and up just 2% from the year-ago quarter

. Revenue for all product segments (IP edge routers, core routers, and CES) declined by double digits sequentially in 1Q14

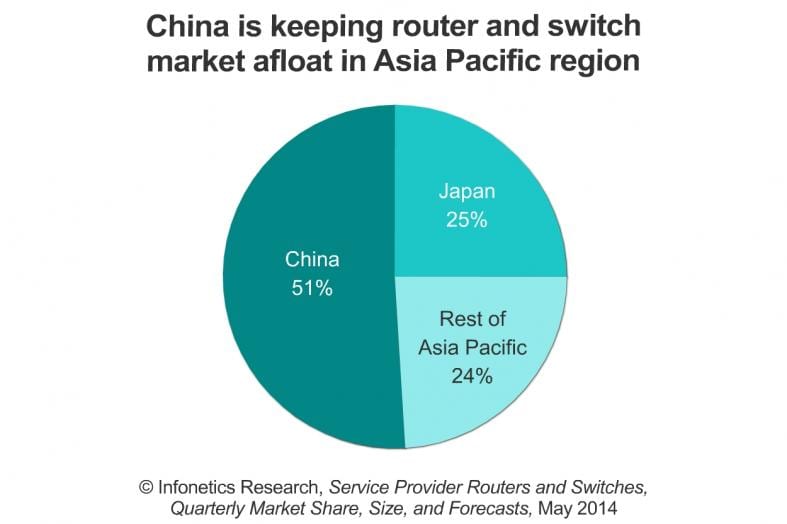

. Likewise, all major geographical regions (NA, EMEA, APAC, CALA) are also down from the prior quarter, though all but N. America are up from the same period a year ago

. The top 4 router and CES vendors stayed in dominant positions in 1Q14, but positions 2-4 played musical chairs: Cisco maintained its lead, Juniper rose to 2nd, Alcatel-Lucent rose to 3rd, and Huawei dropped to 4th

. Infonetics is projecting 5-year (2013-2018) CAGRs of 4.3% for edge routers, 2.9% for core routers, and 0.7% for CES
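The quarterly percentages and the 5-year CAGRs are easier to compare as dollar amounts and cumulative growth. The Python sketch below backs out the implied 4Q13 and 1Q13 revenue from the rounded $3.2 billion figure and compounds each segment CAGR over 2013 to 2018. These are derived estimates, not Infonetics' published numbers.

```python
# Back out comparison-quarter revenue from the rounded 1Q14 total.
REV_1Q14_BN = 3.2        # US$ billions, routers + carrier Ethernet switches
SEQ_CHANGE = -0.13       # 1Q14 vs 4Q13
YOY_CHANGE = 0.02        # 1Q14 vs 1Q13

rev_4q13_bn = REV_1Q14_BN / (1 + SEQ_CHANGE)
rev_1q13_bn = REV_1Q14_BN / (1 + YOY_CHANGE)

# Compound each 5-year CAGR to get total growth between 2013 and 2018.
CAGRS_2013_2018 = {
    "IP edge routers": 0.043,
    "IP core routers": 0.029,
    "Carrier Ethernet switches": 0.007,
}
cumulative_growth = {name: (1 + cagr) ** 5 - 1 for name, cagr in CAGRS_2013_2018.items()}

print(f"Implied 4Q13 revenue: ${rev_4q13_bn:.2f}B; implied 1Q13 revenue: ${rev_1q13_bn:.2f}B")
for name, growth in cumulative_growth.items():
    print(f"{name}: {growth:.1%} total growth 2013-2018")
```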

CARRIER ROUTER/SWITCH REPORT SYNOPSIS:

Infonetics’ quarterly service provider router and switch report provides worldwide, regional, China, and Japan market share, market size, forecasts through 2018, analysis, and trends for IP edge and core routers and carrier Ethernet switches. Vendors tracked: Alaxala, Alcatel-Lucent, Brocade, Ciena, Cisco, Coriant, Ericsson, Fujitsu, Hitachi, Huawei, Juniper, NEC, UTStarcom, ZTE, and others.

RELATED REPORT EXCERPTS:

. China’s huge LTE rollouts and EU network upgrades push carrier capex to $354B this year

. China Mobile increases capital intensity 6% year-over-year

. Networking ports hit $39 billion; 40G booming in the data center, 100G taking off in the core

. SDN and NFV moving from lab to field trials; Operators name top NFV use case

ANALYST NOTE:

“Last quarter, we identified the ‘SDN hesitation,’ where we believe the enormity of the coming software-defined networking and network functions virtualization (NFV) transformation is making carriers be more cautious with their spending. This hesitation reared its head in the first quarter of 2014, where global service provider router and switch revenue increased only 2% from the year-ago quarter,” notes Michael Howard, principal analyst for carrier networks and co-founder of Infonetics Research.

“We believe the current generation of high-capacity edge and core routers can be nursed along for a while as the detailed steps of the SDN-NFV transformation are defined by each service provider, and many of the largest operators in the world are involved, including AT&T, BT, Deutsche Telekom, Telefónica, NTT, China Telecom, and China Mobile,” continues Howard. “And there is intensifying focus on multiple CDNs (content delivery networks) and smart traffic management across various routes and alternative routes to make routers and optical gear cooperate more closely.”

To buy the report, contact Infonetics: http://www.infonetics.com/contact.asp

Editor’s Comment:

We’re not at all surprised by this lackluster growth in the carrier switch/router market, and we don’t think it will get any better any time soon. That’s because of all the confusion caused by vendors touting their “SDN/NFV” solutions, which don’t interoperate with those of other vendors. Moreover, we see carriers moving to higher-speed inter-office optical interconnects, which require fewer high-performance switch/routers and optical ports.

“Multi-Layer Capacity Planning for IP-Optical Networks,” published in the January 2014 issue of IEEE Communications Magazine, does a good job of explaining how carriers might save 40-60% of routing ports, transponders, and wavelengths in the core network and up to 25% in metro/regional networks. The authors are from Cisco and two well-regarded carriers, DT and Telefónica.

One of the key findings: “Applying this methodology to two real data sets provided by two large European network operators, showed significant savings in the total number of required router interfaces, transponders, and wavelengths, on the order of 40–60 percent in the core. In addition, if MLR-A is applied to the aggregation region, it should provide an additional gain up to 25 percent in metro/regional interfaces.”

If that’s the case, then fewer switch/routers will be sold to global carriers in the near future. Furthermore, prices are likely to drop precipitously due to white-label/ODM-built switch/routers that are compatible with various SDN/NFV/Open Networking schemes.

Cisco Embraces SDN, Commits (Again) to Leading the Shift – Scott Thompson, FBR Capital Markets

Scott Thompson’s report, edited by Alan J. Weissberger:

Introduction:

Cisco Live! 2014, the company’s premier annual developer/customer event in the U.S., seems to have helped frame Cisco as a company that is carefully and conscientiously navigating a rapidly shifting communications equipment landscape. The company appears to be gaining a reasonable amount of momentum with its new products and architectures, including its Nexus 9000 platform and CRS-X, and it appears that Cisco has joined the SDN “revolution” with a credible architecture story. While we expect there may be potential for near-term upside to consensus estimates, we remain cautious. We continue to believe that the longer-term architectural impacts of SDN could challenge Cisco’s business.

Nexus 9000 is the product to beat:

Cisco’s new Nexus 9000 platform seems to be one of the switches to beat in the data center networking space. We suspect Cisco has found a way to use the platform not only to deliver industry-leading pricing for 10G Ethernet ports, but also to leverage the product’s higher density and optical capacities to keep revenue consistent with Nexus 5000 and 7000 run-rates.

Cisco believes the gross margin mix on the products it recently released, or is about to release, is higher than that of past products. If immediately accretive, we believe this could help bolster management’s ability to keep consolidated gross margins (GMs) above 60%. The Nexus 9000 has several different configurations based on the Trident II chipset. While stand-alone Trident II silicon is standard in the low-end 9000 platform, Cisco’s custom ASICs add functionality such as VXLAN routing, extra memory, and expanded VLAN capacity, scaling to several times previous TCAM capacities. This flexible configuration not only blurs the line between switching and routing but also provides a full range of cost points, potentially allowing Cisco to lead the industry on price and protect its market share with less impact on margins than we had first expected.

Cisco’s Sales Team:

Cisco’s sales team is poised to gain traction with the Nexus 9000. It has been several quarters since the team has had an industry-leading networking product to ignite the sales engine. We expect that the Nexus 9000, and the series of products likely to follow it, could drive a significant switching upgrade cycle. These types of cycles often drive margin share gains for Cisco, and we expect this one to be no different.

Competitors likely to come under pressure from Cisco’s expected aggressive pricing and sales tactics could be Juniper, Brocade, and to some extent Alcatel-Lucent and Ciena. Time will tell how aggressively Cisco will cannibalize its existing product, but industry trends appear to encourage it.

Cisco shifts its focus to software-centric solutions:

Cisco Live marked a notable change in Cisco’s product strategy, from mostly hardware to a more hybrid combination of hardware, software, and cloud applications. With bold statements such as “infrastructure is a commodity,” CEO Chambers foreshadowed a shift in key product offerings: software and infrastructure as a service that delivers holistic solutions instead of stand-alone products.

We expect the Cisco ONE suite and smart licensing to be available in Fall 2014, with sales beginning to ramp as early as 2015.

Will white-box (low-cost, non-branded, ODM-built) hardware be a threat to Cisco in the next 6-18 months?

Although we believe white box may not take a sizable portion of market share away from Cisco, we believe it will likely put greater-than-expected pressure on pricing. We believe this to be one of Cisco’s main gross margin challenges in 2014 and 2015.

Will new and unexpected entrants into the data networking space take market share from Cisco in the next 2+ years?

Significant technical and strategic shifts are affecting the IT landscape. We expect these shifts to threaten Cisco, and an increasingly large number of other IT players, as they drive the industry toward consolidation.

TiECon 2014 Summary (Part 4): Highlights of IBM Keynote, “What Really Matters for IoT”

Introduction:

In our fourth and final article on TiECon 2014, we summarize the Internet of Things (IoT) market opportunities and advice for entrepreneurs described by Sandy Carter, IBM VP & General Manager of IBM Ecosystem Development, during her TiECon IoT keynote speech on May 16th. Consistent with the theme of TiECon, Ms. Carter addressed where IoT entrepreneurs should concentrate their time and what they need to do to succeed.

Sandy contrasted the common vs. unique aspects of the IoT, identified three customer use cases and several common roles, examined consumer vs. enterprise focus, and suggested where new innovation and investment can produce material improvements over the next several years. It was a very well researched and clearly presented talk.

Presentation Take-aways:

As one might expect, investment and opportunities in the IoT are expanding rapidly, and that growth is expected to continue for many years. By 2020, $300B in incremental revenue is forecast to come from IoT products and services (the source of that forecast was not identified).

According to Ms. Carter, three things are needed for that forecast to be realized:

- Focus on domain expertise, value drivers, and design (focus on outcomes, not devices).

- An integrated solution that includes data analytics and cognitive computing along with monitoring sensors.

- An ecosystem that includes many different types of vendors, service providers, software suppliers, and system integrators.

The IoT opportunity is segmented by vertical industry rather than being a single monolithic market (which confirms what all IoT market researchers have said to date). The top-ranked IoT industry verticals, in order of revenue opportunity, are: auto, healthcare, industrial, retail, asset management, utilities/energy, and home monitoring & control/building automation.

When selling IoT solutions, vendors and service providers must target the right buyer within the prospective customer. For example, the IoT solution provider should determine whether that buyer is the facility manager, the IT manager, or a department head. Value-driven use cases will also be important for the IoT provider to demonstrate solutions for the industry segment(s) relevant to the targeted customers.

IoT integrated solution opportunities identified were:

- Data collection at $87B

- Analytics at $237B

- Cognitive Computing at $370B

- Data analytics that are optimized for each customer was said to be a $180B opportunity in 2016.

Note: The timeframe for the first three forecasts was not mentioned during Sandy’s keynote. The source noted on the slide was a Harbor Research report, “Where will value be created in IoT,” April 7, 2014. Searching online, we could only find a 2013 forecast from that firm:

http://harborresearch.com/wp-content/uploads/2013/08/Harbor-Research_IoT-Market-Opps-Paper_2013.pdf

IoT Collaboration Examples:

1. IBM is working with Honda to collect data related to battery performance in electric vehicles (EVs) on the road. The objective is to analyze massive amounts of data to better determine how those EV batteries operate. To improve battery life and performance, the data will be optimized for decision making. The expected result is improved EV performance and customer satisfaction.

Other IoT opportunities for EV were said to be: device performance, maintenance contracts, warranties/recalls, and customer service.

2. IBM is working with the Flint River Basin Partnership to collect, analyze, and optimize data from various sensor readings. The objective is to make more informed irrigation scheduling decisions to conserve water, improve crop yields and mitigate the impact of future droughts. That will be done by analyzing and optimizing unstructured sensor data to predict irrigation needs based on weather conditions or crop health. The result of this collaboration will be the deployment of innovative conservation measures to enhance agricultural efficiency by up to 20 percent.

An extension of the above opportunities would be to utilize multiple protocols and technologies to collect and analyze data from many different devices, and then to merge and reconcile that unstructured data from multiple input sources.

3. Streetline (http://www.streetline.com/company/in-the-news/) has been working with the city of Los Angeles on an optimized parking solution. The system collects and analyzes parking spot data and makes decisions to optimize parking spot usage. This has reduced traffic congestion and increased motorist satisfaction. It has also generated more parking revenue for the city of L.A.

Note: Sandy predicted that over 100B sensor readings would be made daily by 2020 (she didn’t say whether that was global or just for the U.S.).

Suggested IoT Ecosystem Value for Entrepreneurs:

- End-to-end technology and business monitoring

- Raise capital/procure investment

- Scale globally, using one or more partner companies or a network of companies

- Mentoring as a recipe for success

- Cloud connect your thoughts (meaning that part of the solution is data analytics/number crunching/cognitive computing in the cloud)

- Address security and privacy concerns in the provided IoT system solution

The connected car was presented as an IoT ecosystem example. The IBM Watson Cloud, with over 1,700 developers, was given as an example of IoT cognitive computing (probably the best cloud solution available, in this author’s opinion). For more information on Watson: http://www.ibm.com/smarterplanet/us/en/ibmwatson/ecosystem.html

Summary and Conclusions:

What really matters (reiterated):

- Focus on domain knowledge as the driver of value. Concentrate on long-term growth, revenues, and profits.

- Offer an integrated solution: collect, analyze, and optimize data.

- Build an ecosystem with mentoring, capital and global scale.

Bottom line:

To succeed in the IoT marketplace, a company must provide an integrated solution (likely with one or more partner companies) that collects, analyzes, and optimizes unstructured data from “things,” and must then design, operate, and manage that solution.

From her keynote speech, it’s clear that Sandy Carter recognizes the opportunities the Internet of Things (IoT) offers both established corporations (like IBM) and entrepreneurs. She also provided valuable insight into what it takes to be successful in this large, emerging market.