Highlights of Infonetics Macrocell Mobile Backhaul Equipment, Services & Strategies, +MMW reports

Infonetics Research released excerpts from its latest Macrocell Mobile Backhaul Equipment and Services report, which tracks mobile backhaul equipment vendors, identifies market growth areas, and provides analysis of equipment, connections, and cell sites.

“The macrocell mobile backhaul equipment market continues to be driven by demand for capacity increases to support LTE deployment and 3G network expansion. But there will be few greenfield macro base station deployments and this, combined with increasing pressure on equipment pricing, inhibits revenue growth in the long term,” notes Richard Webb, directing analyst for mobile backhaul and small cells at Infonetics Research.

MACROCELL MOBILE BACKHAUL MARKET HIGHLIGHTS:

. The global macrocell mobile backhaul equipment market totaled $8.4 billion in 2013, and Infonetics expects this slow-growing, mature market to reach over $8.5 billion in 2014

. Microwave is anticipated to comprise 48% of mobile backhaul equipment spending in 2014 and trend downward slightly by 2018, in favor of wired solutions, predominantly fiber-based

. The ongoing HSPA/HSPA+ onslaught across the 3GPP world and growing LTE deployments are fueling Ethernet macrocell backhaul spending, especially microwave and Ethernet over fiber

. IP edge router revenue is expected to increase in 2014 and continue to grow through 2018, generating a 2013-2018 compound annual growth rate (CAGR) of 2.4%

. Infonetics forecasts a cumulative $45 billion to be spent on macrocell mobile backhaul equipment worldwide over the 5 years from 2014 to 2018

MACROCELL MOBILE BACKHAUL REPORT SYNOPSIS:

Infonetics’ biannual macrocell mobile backhaul report provides worldwide and regional market share, market size, forecasts through 2018, analysis, and trends for macrocell mobile backhaul equipment, connections, and installed cell sites by technology. The report also includes an Operator Strategies Tracker. Companies tracked: Accedian, Actelis, Adtran, Adva, Alcatel-Lucent, Aviat Networks, BridgeWave, Canoga Perkins, Ceragon, Ciena, Cisco, DragonWave, E-band, Ericsson-Redback, FibroLan, Huawei, Intracom, Ipitek, Juniper, MRV, NEC, Overture, RAD, SIAE, Siklu, Sub10 Systems, Telco Systems, Tellabs, Thomson, ZTE, others.

To buy the report, contact Infonetics:

www.infonetics.com/contact.asp

RELATED REPORT EXCERPTS

. Infonetics’ October Mobile Backhaul and Microwave research brief: http://www.infonetics.com/2014-newsletters/Mobile-Backhaul-Microwave-October.html

. Momentum building in millimeter wave market, ignited by outdoor small cells

. Mobile operators evaluating SDN and NFV for more flexible and cost-effective backhaul

. US$1 trillion to be spent on telecom and datacom equipment and software over next 5 years

. Infonetics releases Global Telecom and Datacom Market Trends and Drivers report

. Operators launching 4G-based femtocells to ratchet mobile broadband services

. Carriers going gangbusters with WiFi and Hotspot 2.0

Mobile operators evaluating SDN and NFV for more flexible and cost-effective backhaul:

Infonetics Research released excerpts from its 2014 Macrocell Backhaul Strategies: Global Service Provider Survey, which provides insights into operator plans for macrocell backhaul.

“The mobile network is evolving to incorporate small cells, distributed antenna systems (DASs), remote radio heads (RRHs), and WiFi, and though the macrocell layer still does the heavy lifting when it comes to traffic handling, the backhaul network behind all this is becoming increasingly complex,” notes Richard Webb, directing analyst for mobile backhaul and small cells at Infonetics Research. “Our macrocell backhaul study reveals the extent to which operators are looking at software-defined networking (SDN) and network functions virtualization (NFV) solutions to provide greater backhaul flexibility and cost-savings.”

MACROCELL BACKHAUL SURVEY HIGHLIGHTS

- Infonetics’ survey respondents have yet to scale their small cell deployments, but anticipate they will place over 20% of traffic from the macro network onto small cells by 2018

- When asked if or when they will introduce software-defined networking (SDN) into the backhaul network, 29% of respondents say they are deploying or plan to deploy SDN at some point, while the majority (63%) are evaluating it as a possibility with no set timeframe

- Among those surveyed, Ethernet on fiber will be the most-used technology for macrocell backhaul connections by 2016, followed by Ethernet-only microwave

- Downstream bandwidth is the top-ranked service-level agreement (SLA) metric, rated very important by 92% of operators surveyed, followed by jitter, latency, and upstream bandwidth

- Survey respondents not only seek vendors with strong macrocell backhaul product portfolios, but partners who can also support their strategies for holistic, flexible future-proofed networks

MOBILE BACKHAUL NFV WEBINAR

View on-demand analyst Richard Webb’s Making Mobile Backhaul Flexible with NFV and SDN, a webinar exploring how network functions virtualization (NFV) and software-defined networking (SDN) can be used to automate transport networks:

http://w.on24.com/r.htm?e=847425&s=1&k=C8E5B08CA6062C0E9F5C670CA7E7880E.

MACROCELL SURVEY SYNOPSIS:

For its 28-page macrocell backhaul survey, Infonetics interviewed purchase decision makers at 25 incumbent, mobile, competitive, and cable operators from EMEA, Asia, Latin America, and North America about their current and future plans for macrocell backhaul. The study provides insights into operator deployment strategies, technologies, capacity requirements, packet timing and synchronization methods, SLA metrics, and vendor preferences. The operators participating in the study control 29% of the world’s telecom capex.

To buy the report, contact Infonetics:

http://www.infonetics.com/contact.asp

Infonetics Research released excerpts from its latest Millimeter Wave Equipment report, which tracks licensed and unlicensed millimeter wave equipment by market application (access, backhaul, and transport).

“Although the millimeter wave market is still modest in scale at this point, the enhanced capacity capabilities delivered by this technology will be invaluable as a backhaul aggregation solution for small cell deployments as they scale up,” says Richard Webb, directing analyst for mobile backhaul and small cells at Infonetics Research.

Webb continues: “We expect millimeter wave to play a significant role in outdoor small cell backhaul, which will become the primary long-term market driver.”

MILLIMETER WAVE EQUIPMENT MARKET HIGHLIGHTS

- Infonetics projects the overall millimeter wave equipment market to grow to $755 million by 2018

- The outdoor small cell backhaul segment of the millimeter wave equipment market is forecast by Infonetics to outpace all other segments of the market, growing at a 101% CAGR from 2013 to 2018

- Licensed E-band 70–90GHz equipment accounted for 93% of millimeter wave sales in the first half of 2014 (1H14)

- 90% of millimeter wave equipment revenue in 1H14 came from mobile backhaul applications, up from 85% in the second half of 2013 (2H13)

- Currently, the top 3 players in millimeter wave gear are E-Band Communications, NEC, and Siklu (in alphabetical order), though Infonetics expects some consolidation to take place over the next 2 years as the market grows and more of the large mainstream microwave vendors move in from the wings

REPORT SYNOPSIS:

Infonetics’ biannual millimeter wave report provides worldwide and regional market size, vendor market share, forecasts through 2018, analysis, and trends for unlicensed V-band (57–64GHz), licensed E-band (70–90GHz), and W-band (75–110GHz) millimeter wave equipment by network application (access, backhaul, transport). The report tracks units, revenue, and ARPU and follows Aviat Networks, Ceragon, E-Band, Ericsson, Fujitsu, Huawei, Intracom, NEC, Remec (BridgeWave), SIAE, Siklu, Sub10 Systems, and others.

To buy the report, contact Infonetics:

Huawei Displays SDN and NFV Technologies at SDN & OpenFlow World Congress in Germany

Huawei today showcased its software-defined networking (SDN) and network functions virtualization (NFV) technologies at the SDN & OpenFlow World Congress in Dusseldorf, Germany. At the event, Huawei discussed how industry partners can best utilize these technologies to further the development of the telecom industry, with specific focus on how its SoftCOM strategy will continue to help address this industry trend.

The SDN World Congress was advertised as an opportunity to examine developments, debate the issues, and showcase the reality of SDN and NFV, and as another milestone occasion for the whole market: carriers, data centres, and enterprises. The conference also hosts an NFV Forum and Proof of Concept Zone, endorsed by ETSI as Forum Partner.

“Huawei’s SoftCOM will unleash the full potential of SDN and NFV, building an open and flexible network infrastructure for the ICT industry. We hope to work with all industry participants to create a future-oriented open network ecosystem in the cloud ICT era,” said Ken Wang (Shengqing), President of Global Marketing and Solutions, Huawei.

As ICT technologies become increasingly cloud-based, telecom carriers are faced with the pressure of increased development costs and sluggish revenue growth. In this new era of ICT, carriers must now focus on evolving their businesses to address these challenges and regain competency. Based on the concepts of cloud computing, SDN, and NFV, Huawei developed the future-oriented telecom network architecture strategy, SoftCOM, which aims to help reconstruct the telecom industry from the perspectives of network architecture, network functions, as well as service and operation and maintenance (O&M) models. The SoftCOM strategy enables telecom carriers to realize comprehensive network evolution and business transformation, thus creating and seizing new value opportunities through ICT integration. This approach will further help with establishing and advancing an open, interconnected, and innovative ecosystem, to increase and better leverage the aggregated industry value.

Focusing on technological development driven by business requirements, Huawei has experimented with numerous SDN and NFV innovations across all network fields and launched corresponding solutions to help carriers implement full-scale network and business transformation for the future. To date, Huawei has worked with more than 20 leading carriers around the world including Telefonica, China Mobile, China Unicom, and China Telecom, on over 60 joint SDN- and NFV-related innovation projects. In 2014, Huawei and global carriers executed several commercial SDN and NFV deployments, including the world’s first commercial SDN deployment in cooperation with China Telecom, the SDN-based Wo cloud project in cooperation with China Unicom, the SDN-based hybrid cloud project in cooperation with China Telecom, and an NFV-based vIMS joint project in Europe.

At the SDN & OpenFlow World Congress, Huawei revealed its new Flexible Ethernet solution and an innovative network architecture, MobileFlow, as well as several prototype systems of its new technologies. The Flexible Ethernet solution has the flexibility to support various dynamic software-configured combinations of different Ethernet sub-interfaces. It marks different data connections and services that require different data traffic, and matches them to their corresponding sub-interfaces accordingly. The Flexible Ethernet is an Ethernet revolution that will change the industry dominated by the traditional fixed Ethernet forever, and would greatly impact the future IP network architecture. In addition, the MobileFlow structure has realized unified deployment of mobile network control functions through cloud, while the previous plane model is only capable of service forwarding and processing. Through the southbound interface, the mobile controller is now able to conduct fine-grained forwarding control of the distributed forwarding devices, and also open the control capability to their application layers.

Huawei will continue to focus on the development of its SDN and NFV-based cloud technology, with the aim of facilitating the industry’s network structural transformation for the future.

About Huawei

Huawei is a leading global information and communications technology (ICT) solutions provider with the vision to enrich life through communication. Driven by customer-centric innovation and open partnerships, Huawei has established an end-to-end ICT solutions portfolio that gives our customers competitive advantages in telecom and enterprise networks, devices and cloud computing. Huawei’s 150,000 employees worldwide are committed to creating maximum value for telecom operators, enterprises and consumers. Our innovative ICT solutions, products and services have been deployed in over 170 countries and regions, serving more than one third of the world’s population. Founded in 1987, Huawei is a private company fully owned by its employees.

For more information:

Set Top Box Market is NOT Monolithic- Satellite, Cable, OTT, IPTV, etc from Infonetics + Research & Markets

I. Infonetics Research released excerpts from its 2nd quarter 2014 (2Q14) Set-Top Boxes and Pay TV Subscribers report, which tracks IP, cable, satellite, and digital terrestrial (DTT) set-top boxes (STBs) and over-the-top (OTT) media servers.

2Q14 SET-TOP BOX MARKET HIGHLIGHTS:

. Globally, set-top box (STB) revenue, including IP, cable, satellite, and DTT STBs and OTT media servers, is up 4% in 2Q14 from 1Q14, to $4.8 billion

. STB unit shipments grew 7% sequentially in 2Q14, but are down 3% from the year-ago 2nd quarter of 2013

. Cable STB revenue increased by 3% sequentially in 2Q14, and unit shipments grew 4% during this same period

. Arris, the worldwide STB market share leader, gained almost 2 share percentage points in 2Q14 over 1Q14

. Over-the-top (OTT) media servers are quickly becoming the STB of choice for pay TV providers in emerging markets such as China, where free video content is abundant and service providers are looking to bundle live streaming video with their own broadband offerings

. The worldwide STB market is forecast by Infonetics to be essentially flat, with a compound annual growth rate (CAGR) of -0.05% from 2013 to 2018, when it will total $19.2 billion

. Surprise forecast: In 2018, satellite STBs are expected to contribute the largest share of STB revenue, at 36%
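The forecast figures above can be turned into a couple of implied numbers. What follows is a minimal back-of-envelope sketch in Python, assuming the quoted $19.2 billion, -0.05% CAGR, and 36% satellite share are exact point values (they are rounded in the excerpt). The function name `base_value` is illustrative, and the derived figures are arithmetic on the quoted numbers, not Infonetics data.

```python
def base_value(end_value, cagr, years):
    """Back out the starting value from an end value and a compound annual growth rate."""
    return end_value / (1.0 + cagr) ** years

# Figures quoted in the highlights (billions of US dollars).
total_2018 = 19.2          # forecast STB market size in 2018
cagr_2013_2018 = -0.0005   # -0.05% per year
satellite_share = 0.36     # satellite share of 2018 revenue

# Derived values (assumption: the quoted figures are exact).
implied_2013 = base_value(total_2018, cagr_2013_2018, years=5)
satellite_2018 = satellite_share * total_2018

print(f"Implied 2013 market size: ${implied_2013:.2f}B")
print(f"Implied 2018 satellite STB revenue: ${satellite_2018:.2f}B")
```

Under these assumptions the market shrinks by only about $50 million over five years, which is why the forecast reads as flat.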

“The global set-top box (STB) market is in a fascinating period of mixed signals. While quarterly unit shipments are up, on a year-over-year basis shipments are down. And though nearly all STB product categories saw volume increases in 2Q14, satellite shipments continue a downward trend, while cable set-tops are growing due to an ongoing refresh cycle in North America and Europe,” says Infonetics’ Jeff Heynen, principal analyst for broadband access and pay TV.

STB REPORT SYNOPSIS:

Infonetics’ quarterly STB report provides worldwide and regional market size, vendor market share, forecasts through 2018, analysis, and trends for IP STBs; cable and satellite STBs (digital, hybrid, video gateways, media players); DTT STBs; and OTT media servers. The report also tracks telco IPTV and cable and satellite video subscribers. Vendors tracked: ADB, Apple, Arris, Changhong, Cisco, Coship, DVN, Echostar, Huawei, Humax, Jiuzhou, Kingvon, Netgem, Pace, Roku, Sagemcom, Samsung, Skyworth Digital, Technicolor, ZTE, others.

To buy report, contact Infonetics: www.infonetics.com/contact.asp

RELATED REPORT EXCERPTS:

. DOCSIS channels pass 1 million for first time in Q2; CCAP revenue up 42%

. Broadband aggregation equipment passes $2B in 2Q14 as operators ‘binge on broadband’

. Fixed broadband infrastructure investments are rebounding in Brazil

. OTT and multiscreen TV drive spending on broadcast and streaming video equipment

. 802.11ac routers to make up nearly a third of WiFi-enabled router shipments by 2015

II. Research and Markets has announced the addition of the “Global and China Set-Top Box (STB) Industry Report 2014” report to their offering.

The Global and China Set-Top Box (STB) Industry Report 2014 is a professional and in-depth study on the current state of the global set-top box industry with a focus on the Chinese market.

The report provides a basic overview of the industry including definitions, classifications, applications and industry chain structure. The set-top box market analysis is provided for both the international and Chinese domestic situations including development trends, competitive landscape analysis, key regions development status and a comparison analysis between the international and Chinese markets.

Development policies and plans are also discussed and manufacturing processes and cost structures analyzed. Set-Top box industry import/export consumption, supply and demand figures and cost price and production value gross margins are also provided.

The report focuses on thirteen industry players providing information such as company profiles, product picture and specification, capacity production, price, cost, production value and contact information. Upstream raw materials and equipment and downstream demand analysis is also carried out. The set-top box industry development trends and marketing channels are analyzed. Finally, the feasibility of new investment projects is assessed and overall research conclusions offered.

For more information visit http://www.researchandmarkets.com/research/7b85kd/global_and_china

Media Contact: Laura Wood, +353-1-481-1716

SIP trunking services market expected to grow 35% in 2014 & top $8 billion by 2018

Infonetics Research released excerpts from its new SIP Trunking Services market size and forecasts report, which tracks Session Initiation Protocol (SIP) trunking revenue, trunks, and average revenue per trunk.

SIP trunking is a means of synthesizing the Session Initiation Protocol and Voice over Internet Protocol (VoIP) to provide telephony services over data networks. The two leading SIP trunk providers are BroadVoice and 8×8, Inc.

SIP TRUNKING SERVICES MARKET HIGHLIGHTS:

. The global SIP trunking services market is on track to grow 35% in 2014, to $4.4 billion

. The biggest market for SIP trunking services is North America, although new geographic markets continue to open up, helping fuel growth

. More and more businesses are making the switch to SIP trunking, but they are not cutting over 100% of connections to SIP on day one; rather, SIP is typically being deployed at one or two sites to start

. Infonetics expects continued strong worldwide growth for SIP trunking over the next five years, forecasting the market to reach $8 billion in 2018

. SIP trunking is being pitched alongside hosted PBX and unified communications (UC) services as more businesses seek hybrid solutions
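The highlights give a 2014 growth rate and two revenue points, which imply a 2013 base and a slower growth path after 2014. Here is a minimal sketch in Python, assuming the $4.4 billion (2014) and $8 billion (2018) figures are exact point estimates. The function names are illustrative, and the derived values are back-of-envelope arithmetic rather than figures published by Infonetics.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate between two values that are `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def prior_year_value(current_value, growth_rate):
    """Back out last year's value from this year's value and its growth rate."""
    return current_value / (1.0 + growth_rate)

# Figures quoted in the highlights (billions of US dollars).
revenue_2014 = 4.4
revenue_2018 = 8.0
growth_2014 = 0.35

# Derived values (assumption: the quoted figures are exact).
cagr_2014_2018 = implied_cagr(revenue_2014, revenue_2018, years=4)
revenue_2013 = prior_year_value(revenue_2014, growth_2014)

print(f"Implied 2014-2018 CAGR: {cagr_2014_2018:.1%}")
print(f"Implied 2013 revenue: ${revenue_2013:.2f}B")
```

On these assumptions, reaching $8 billion in 2018 needs roughly 16% growth a year after 2014, well below the 35% expected for 2014 itself.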

“There is no denying the world is moving to IP, and SIP has become the de facto solution of choice for businesses for IP connections. In North America, slightly more than 20% of the installed business trunks are SIP trunking today, with significant upside opportunity,” notes Diane Myers, principal analyst for VoIP, UC, and IMS at Infonetics Research.

SIP REPORT SYNOPSIS:

Infonetics’ annual SIP trunking services report provides worldwide and regional market size, forecasts through 2018, analysis, and trends for SIP trunking revenue, trunks, and average revenue per trunk.

To buy the report, contact Infonetics:

www.infonetics.com/contact.asp

RELATED REPORT EXCERPTS:

. Infonetics’ October Enterprise Voice, Video, and UC research brief:

http://www.infonetics.com/2014-newsletters/Enterprise-Voice-Video-UC-October.html

. Over 3/4 of N. American businesses surveyed plan to use SIP trunking by 2016

. Shift to software and services in enterprise videoconferencing market tamps revenue growth

. Enterprise session border controller (eSBC) market up 8% in 2Q14

. PBX market struggles linger in first half of 2014; UC up 31% from year ago

. Cloud PBX and unified communication services a $12 billion market by 2018

References:

Analysis of the North American VoIP Access and SIP Trunking Services Market 2013-2020

http://www.businesswire.com/news/home/20141001006535/en/Research-Markets…

Infonetics: 100G+ coherent network equipment moving to the metro

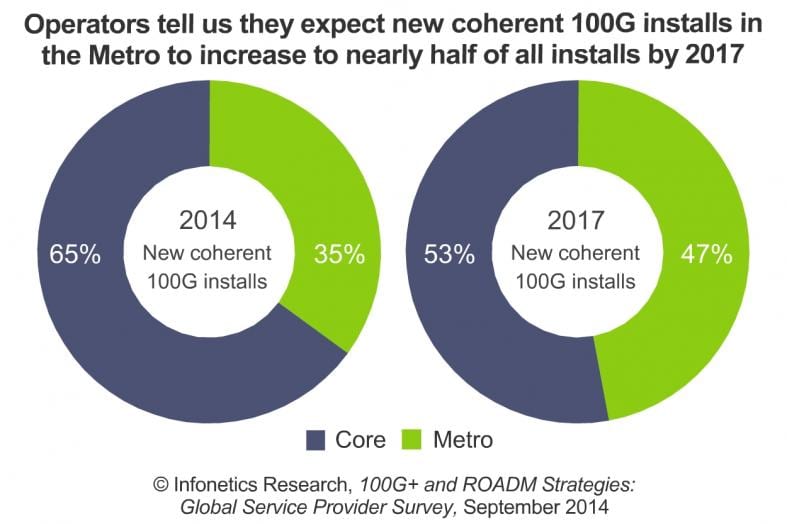

Infonetics Research released excerpts from its 2014 100G+ and ROADM Strategies: Global Service Provider Survey, which details the plans of operators transitioning optical transmission and switching equipment to higher-speed 100G+ wavelengths.

100G+ AND ROADM SURVEY HIGHLIGHTS:

. Respondent operators have reasonable expectations for pricing for coherent metro-regional and access applications versus the core

. When it comes to end-use applications for metro 100G, study participants view data center interconnect as the clear killer app for the next 12 months

. Respondents also expect a greater uptake of 100GE transport when compared to previous years’ Infonetics surveys

. Those surveyed expect 100G WDM to account for almost 40% of all metro wavelength deployments and 75% of the core network in 2017

. Pluggable CFP and CFP2 technology is perceived by respondents as a more important technology for the metro this year than it was last year

“The big battleground for vendors of 100G-and-beyond coherent equipment and components has shifted to the metro as winners for long reach core applications have been decided,” says Andrew Schmitt, principal analyst for carrier transport networking at Infonetics Research.

“There are two very distinct markets for coherent technology. One market is deploying long haul coherent today and will transition to metro in the coming years. But there is another, separate (network provider) customer type that is already fully committed to deploying coherent technology in the metro today,” Schmitt adds. “These two markets have major implications for the supply chain regarding product specialization and the relative lack of interest in flex coherent technology by some customers.”

ABOUT INFONETICS’ 100G/ROADM SURVEY:

For its 19-page 100G+ and ROADM (Reconfigurable Optical Add/Drop Multiplexer) strategies survey, Infonetics interviewed 31 incumbent, competitive, mobile, and cable operators from EMEA, North America, and Asia that have an optical transport network using WDM. Together, the respondents represent a significant 33% of the world’s telecom revenue and 36% of global capex. The survey covers 100G WDM price points, metro coherent wavelength deployment trends, end-use applications for metro 100G, metro and core technology adoption rates, and key technologies for optical transport.

To buy the report, contact Infonetics: www.infonetics.com/contact.asp

http://www.infonetics.com/pr/2014/100G-ROADM-Strategies-Survey-Highlight…

FREE OPTICAL WEBINAR AND WHITEPAPER:

Join Infonetics analyst Andrew Schmitt Oct. 28 at 11:00 A.M. EDT for Building More Efficient Networks with Multi-Layer Packet-Optical, an event examining how network operators are building more capable and efficient networks. Register to attend live or access the replay at:

http://w.on24.com/r.htm?e=846242&s=1&k=C66A31488593733FC7770651A4A1DD98

RELATED REPORTS & BLOG POSTS:

. Infonetics’ October Optical research brief:

http://www.infonetics.com/2014-newsletters/Optical-October.html

Top 10 Things to Look for in a Metro 100G Platform

http://www.cyaninc.com/blog/2014/09/09/top-10-things-to-look-for-in-a-me…

Fujitsu unveils 100G coherent CFP for metro networks

http://www.lightwaveonline.com/articles/2014/09/fujitsu-unveils-100g-coh…

Infinera Targets Cloud for 100G Network Between Data Centers

https://www.sdncentral.com/news/infinera-gets-cloud-happy-100g-network-d…

Open Networking NOT truly OPEN without industry accepted Standards & APIs; Role of OpenStack Foundation?

There are so many flavors of “SDN” you can’t keep track of them. The term “SDN” has come to ONLY mean “software control of a network.” That could be anything and is a far cry from the original definition, which included strict separation of the Control and Data planes, a “centralized controller” that computes paths for many packet forwarding engines (Data plane implementations), and a standardized API (OpenFlow v1.3) as the “southbound API” between the Control and Data planes.
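The original SDN model described above can be illustrated with a toy sketch in Python: one controller holds the whole topology and computes paths, while the switches only look up forwarding entries that the controller installed. The class names, the five-switch topology, and the `install_flow` method are hypothetical; `install_flow` merely stands in for a southbound message and is not the OpenFlow wire protocol.

```python
from collections import deque

class Switch:
    """Data plane only: forwards by table lookup and computes nothing itself."""
    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination -> next hop

    def install_flow(self, dst, next_hop):
        # Hypothetical stand-in for a southbound API call from the controller.
        self.flow_table[dst] = next_hop

    def forward(self, dst):
        return self.flow_table.get(dst)  # None means no matching entry

class Controller:
    """Control plane: holds the global topology and computes every path."""
    def __init__(self, links):
        self.adj = {}
        for a, b in links:
            self.adj.setdefault(a, []).append(b)
            self.adj.setdefault(b, []).append(a)
        self.switches = {name: Switch(name) for name in self.adj}

    def shortest_path(self, src, dst):
        # Breadth-first search gives a fewest-hops path.
        parents = {src: None}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            if node == dst:
                break
            for nbr in self.adj[node]:
                if nbr not in parents:
                    parents[nbr] = node
                    queue.append(nbr)
        if dst not in parents:
            return None
        path, node = [], dst
        while node is not None:
            path.append(node)
            node = parents[node]
        return path[::-1]

    def program_path(self, src, dst):
        # Push one forwarding entry to each switch along the computed path.
        path = self.shortest_path(src, dst)
        if path is None:
            return None
        for here, nxt in zip(path, path[1:]):
            self.switches[here].install_flow(dst, nxt)
        return path

links = [("s1", "s2"), ("s2", "s3"), ("s1", "s4"), ("s4", "s5"), ("s5", "s3")]
controller = Controller(links)
path = controller.program_path("s1", "s3")
print(path)
```

Switches that are not on the computed path receive no entry, which is the point of the centralized model: forwarding state exists only where the controller put it.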

What Guru Parulkar of Stanford University called “SDN Washing” (non-pure forms of SDN) has come to dominate the industry. There are network overlay models with multiple instances of Control planes, network virtualization (overlay of a logical network over a physical network) using VMware technology or VXLAN tags, and extensions of proprietary, closed network equipment to accommodate some degree of user control over the network configuration and paths.

I don’t think AT&T’s SDN WAN uses OpenFlow or even a centralized SDN controller to compute paths/routes for multiple data forwarding “engines.” Instead, they use a proprietary, dynamic routing protocol as described in this article: http://viodi.com/2014/09/18/atts-sdn-wan-as-the-network-to-access-deliver-cloud-services/

What Standards are Required for SDN:

IMHO, Open networking implies multi-vendor interoperability, which requires standards for functionality, protocols, APIs, and interfaces that are in the public domain. I believe the following standards are needed for SDN:

1. API/protocol between the Control Plane entity and the Data Plane entity (OpenFlow v1.3?)

2. API/protocol between the Control Plane entity and the Management/Orchestration/Automation entity. It’s sometimes referred to as the “Northbound API” or “Management plane API”

There’s also the OpenStack Networking API v2.0 (Neutron) reference.

Summary of Light Reading’s NFV and the Data Center Conference- 16 Sept 2014

Note: This is a condensed summary of two previously published articles on this excellent conference.

http://viodi.com/category/weissberger/

Introduction:

The telco data center [1] (DC) is likely to be the first place network operators deploy Network Virtualization/Network Functions Virtualization (NFV). That was the opening statement at the Light Reading conference on NFV and the Data Center, held Sept 16, 2014 in Santa Clara, CA. A network virtualized data center was defined by the conference host as a “cloudified DC which integrates virtualized telecom network functions utilizing Virtual Network Functions (VNF) or Distributed VNFs.”

Note 1. Larger network operators (e.g. AT&T, Verizon) already operate “telco DCs” for web hosting, storage, cloud computing, managed services, and back end network management/OSS/BSS. It will be easier for them (compared to those operators with no DC) to implement an NFV-based telco DC. See Heavy Reading Survey results below for more details on this topic.

Concepts and reference architectures from the ETSI NFV specifications group were predicted to alter the data center from a siloed IT-centric model (separate compute/storage/networking equipment) to a harmonized network and IT domain model, in which virtualized telecom functions, e.g. policy control and application orchestration, are added to the growing list of computing demands on servers. According to Light Reading, NFV will drive an entirely new set of storage, automation, management, and performance requirements, which are only now starting to be defined.

[One must assume that these VNFs will be implemented as software in the DC compute servers, perhaps with some hardware assist functionality. Realizing that vision will eliminate a lot of network equipment (hardware) in a telco’s DC and provide much more software control of network functions and services.]

Key industry trends discussed at this excellent 1 day conference included:

• The need for service providers to shorten their service delivery cycles and adopt agile approaches to delivering new services.

• The key role that automation of network processes will play in helping operators deliver more user control and network programmability.

• Taming network complexity remains a significant challenge.

• Services in the era of virtualization must still maintain security and reliability for which telecom has been known.

Key findings from Heavy Reading’s January 2014 multi-client study are presented. Next, we summarize the network operator keynote from CenturyLink. Part 2 will review the Orange and NTT Communications keynotes as well as our summary and conclusions.

Panel Discussion:

NFV requires operators to find new ways of looking at basic network attributes like performance, reliability and security. For example, performance metrics may change in migrating to NFV – from raw/aggregate performance to performance per cubic meter, or performance per watt. Virtualization will transform many ways of configuring and managing network resources.

However, a business case must be established for an operator to move towards network virtualization/NFV/VNFs. The cost and ROI must be justified. Heavy Reading analyst Roz Roseboro opined that projects which get funded are those that will affect the top line, meaning increased revenues from new and existing services. In that sense, NFV is more likely to get funding than SDN, because it will greatly help an operator increase service velocity/time to market and thereby realize more money. SDN is more about OPEX reductions and efficiency, she said.

CenturyLink Keynote: James Feger, VP of Network Strategy & Development

CenturyLink is counting on its Savvis acquisition to make it hugely successful in cloud computing and to build a “cloudified” telco DC for traditional network services. Acquired in 2011, Savvis is a separate vertical entity within CenturyLink (which includes the former Embarq, U.S. West, Qwest and other companies). CenturyLink has successfully integrated the cloud orchestration and software development of Tier 3, and the platform-as-a-service capabilities of AppFog, into its cloud computing capabilities.

According to Feger, “Cloud is not ‘rust resistant.’ It must be: programmable, self-service, and offer on-demand services.”

The CenturyLink Cloud process and operations involve the following attributes:

- Agile methodology

- 21- to 30-day release cycles

- DevOps team [2]

- Minimum viable product (not explained)

- Building block architecture which is API based

- Constant feedback to improve operations and services

Note 2: While network operations is traditionally a stand-alone function with dedicated staff, the DevOps model eliminates the hand-off from development to operations, keeps the developers in the feedback loop, and incentivizes developers to resolve problems or complications on their own instead of passing them to the Operations department.

The realization of the above cloud attributes is via open applications programming interfaces to software that exists above the physical network. Open source software will allow developers to offer their apps or services regardless of the underlying infrastructure, Feger said. He was firm in his view that “agility combined with our network platform is CenturyLink’s differentiator.”

Feger was quite honest during his talk. He confessed that the service cycles on the network side are still measured in months, not weeks or days. By incorporating the agile technology approaches of the CenturyLink Cloud and the use of a DevOps model, CenturyLink hopes to improve on that. But not this year or next.

“It will be a multi-year project to migrate our network to a cloud like set of capabilities, while minimizing (existing) customer disruptions.”

The take away here is that CenturyLink is attempting to leverage their highly regarded cloud capabilities to offer “cloud-like” L1 to L3 network services, e.g. IP MPLS VPN, Ethernet services, private line (e.g. T1/T3/OC3), broadband Internet access, video, and other wire-line services. Service delivery times must become a lot shorter, while programmability, orchestration, and automation are necessary components to make this happen.

Orange Keynote: Christos Kolias, Sr. Research Scientist, Orange – Silicon Valley

Christos first described the NFV concept and vision from his perspective as a founding member of the ETSI NFV specifications group. It’s a quantum shift from dedicated network equipment to “virtual appliances.”

In the NFV model, various types of dedicated network appliance boxes (e.g. message router, CDN equipment, Session Border Controller, WAN acceleration, Deep Packet Inspection (DPI), Firewall, Carrier grade IP Network Address Translation (NAT), Radio/Fixed Access Network Nodes, QoS monitor/tester, etc.) become “virtual appliances,” which are software entities that run on a high performance compute server.

In other words, higher layer network functions become software based virtual appliances, with multiple roles over the same commodity hardware and with remote operation possible. “It’s a very dynamic environment, where (software based) network functions can move around a lot. It’s extremely easy to scale,” according to Christos.

[One assumes that each such virtual appliance would have an open or proprietary API for orchestration, automation, and management of the particular function(s) performed.]

A few examples were cited for a network virtualized telco DC:

• Security functions: Firewalls, virus scanners, intrusion detection systems, spam protection

• Tunnelling gateway elements: IP-SEC/SSL VPN gateways

• Application-level optimization: Content Delivery Networks (CDNs), Cache Servers, Load Balancers, Application Accelerators, Application Delivery Controllers (ADCs)

• Traffic analysis/forensics: DPI, QoE measurement

• Traffic Monitoring: Service Assurance, SLA monitoring, Test and Diagnostics

Note: This author DESPISES TLAs (three-letter acronyms). In many cases, the TLA used in a presentation or talk is much more recognizable in another industry, e.g. ADC = Analog-to-Digital Converter rather than Application Delivery Controller. Hence, I’ve tried to spell out most acronyms in this and the preceding article on the NFV conference. It takes a lot of effort, as I’m not familiar with most of the TLAs used glibly by speakers.

Kolias said that the migration from network hardware to software based virtual appliances won’t be easy. Decoupling VNFs from the underlying hardware presents management challenges: services to NFV mapping, instantiating VNFs, allocating and scaling resources to VNFs, monitoring VNFs, and support of physical/software resources.

NFV components in a virtualized telco DC might include: server virtualization, management and orchestration of functions & services, service composition, automation, and scaling (up and/or down according to network load). There are lots of servers, storage elements, and L2/L3 switches in such a DC. There’s also: security hardware (firewalls, IDS/IPS), load balancers, IP NAT, ADC, monitoring, etc.
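To make “scaling (up and/or down according to network load)” concrete, here is a minimal sketch of one possible sizing rule. The function name, the per-instance capacity figure, and the minimum/maximum bounds are illustrative assumptions by this editor, not anything Kolias presented.

```python
import math


def instances_needed(load_gbps: float,
                     capacity_per_instance_gbps: float,
                     min_instances: int = 1,
                     max_instances: int = 100) -> int:
    """Return how many VNF instances a hypothetical orchestrator would run.

    Assumption: every instance of the virtual network function handles the
    same amount of traffic, and load is spread evenly across instances.
    """
    if capacity_per_instance_gbps <= 0:
        raise ValueError("capacity per instance must be positive")
    wanted = math.ceil(load_gbps / capacity_per_instance_gbps)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, wanted))


if __name__ == "__main__":
    # Load rises and then falls; the instance count follows it.
    for load in (0.5, 12.0, 47.0, 3.0):
        print(f"{load} Gbps -> {instances_needed(load, 10.0)} instance(s)")
```

A real orchestrator would also damp rapid up/down swings and account for instance start-up time; the sketch only shows the basic load-to-instance mapping.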

NFV in the Data Center will be more energy efficient, according to Kolias. “It’s the greenest choice for an operator,” Christos said. With many fewer hardware boxes, NFV can bring the most energy efficiency to a data center (less energy consumed and lower cooling requirements). That’s a top consideration for those massively power-hungry DC facilities. “You have to dispose of telecom hardware, but when we move things into software, it becomes more eco-friendly,” Kolias said. “So yes, there is absolutely a fit for NFV in the Data Center,” he concluded.

Christos thinks it’s probably easier and faster to implement NFV in a telco DC, because there’s less compliance/ regulation and it’s a less complex environment – both technically and operationally.

Service chaining was referred to as “service composition and insertion,” with policies determining the chain order. Customized service chains are possible with NFV, Kolias added. Ad-hoc, on-demand, secure virtual tenant networks are also possible, for example as tunnels/overlays using the VXLAN protocol (a specification co-authored by VMware, Arista, and Cisco, among others).
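As a rough illustration of “policies determining the chain order,” the sketch below runs a packet through an ordered list of software functions chosen per tenant. Everything in it (the toy firewall, DPI, and NAT functions, the tenant names, and the policy table) is hypothetical and only meant to show the idea of policy-driven ordering.

```python
from typing import Callable, Dict, List

Packet = Dict[str, object]
VNF = Callable[[Packet], Packet]


def firewall(pkt: Packet) -> Packet:
    # Toy rule: drop Telnet traffic (destination port 23).
    if pkt.get("dst_port") == 23:
        return {**pkt, "dropped": True}
    return pkt


def dpi(pkt: Packet) -> Packet:
    # Toy classification based only on the destination port.
    app = "web" if pkt.get("dst_port") in (80, 443) else "other"
    return {**pkt, "app": app}


def nat(pkt: Packet) -> Packet:
    # Rewrite the source address to a documentation-range public IP.
    return {**pkt, "src_ip": "203.0.113.1"}


# Catalog of available virtual network functions (hypothetical).
CATALOG: Dict[str, VNF] = {"firewall": firewall, "dpi": dpi, "nat": nat}

# Per-tenant policy: the list order is the chain order.
POLICIES: Dict[str, List[str]] = {
    "tenant-a": ["firewall", "dpi", "nat"],
    "tenant-b": ["firewall", "nat"],
}


def apply_chain(tenant: str, pkt: Packet) -> Packet:
    """Pass the packet through the tenant's chain, stopping if it is dropped."""
    trace: List[str] = []
    for name in POLICIES[tenant]:
        pkt = CATALOG[name](pkt)
        trace.append(name)
        if pkt.get("dropped"):
            break
    return {**pkt, "trace": trace}


if __name__ == "__main__":
    print(apply_chain("tenant-a", {"src_ip": "10.0.0.5", "dst_port": 443}))
    print(apply_chain("tenant-b", {"src_ip": "10.0.0.5", "dst_port": 23}))
```

Changing a tenant’s service chain is then a matter of editing its policy entry, which is the kind of customization Kolias described.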

Kolias also cited other benefits of “cloudification” — a term he admittedly hates. “For example, consolidating multiple physical network infrastructures in a cloud-based EPC (LTE Evolved Packet Core) can lead to less complexity in the network and produce better scalability and flexibility for service providers in support of new business models,” he noted.

Several other important points Christos made about NFV in the telco DC:

1. Virtual switches can be key functional blocks for management of multiple virtual switches and for programmable service chains.

2. The Control plane could become part of management and orchestration in a unified, policy-based management platform, e.g. OpenStack.

[That’s radically different from the pure SDN model (Open Networking Foundation), where the Control plane resides in a separate entity, which communicates with the Management/Orchestration platform (e.g. OpenStack) via a “Northbound” API.]

3. Hardware acceleration can play a role in Network Interface Cards (NICs) and specialized servers. However, they should be programmable.

4. Challenges include: performance (e.g. increased VM-VM traffic requirements), security, hybrid environments, and scaling.

APIs will be important for plug-n-play, especially for open platforms like Google, Facebook, Microsoft, e.g. WebRTC. They can enable a plethora of innovative (e.g. ad-hoc/customized) services and lead to new business models for the telcos. That would translate into monetization opportunities for service providers (e.g. from new residential and business/enterprise customers, virtual network operators (VNOs), and others).

Christos predicts that many service providers will move from function/service based networks to app-based models. They will deploy resources, including Virtual Network Functions (VNFs) on-demand, as an application when the user needs them. He predicted that smart mobile devices and the Internet of Things (IoT) will precipitate the adoption of APIs for telco apps.

Kolias summed up: “NFV can propel the move to the telco cloud. When this happens we will have succeeded as an NFV community! NFV removes the boundaries and constraints in your infrastructure. It breaks the barriers and opens up unlimited opportunities.”

NTT Com Keynote: Chris Eldredge, Executive VP of Data Center Services for NTT America (NA subsidiary of NTT Com)

Background: NTT Com is one of the largest global network providers in the world, in third place behind Verizon and AT&T. They provide global cloud services, managed services, and connectivity to the world’s biggest enterprises. NTT Com has a physical presence in 79 countries, $112B in revenues, and 242K employees. Its network covers 196 countries and regions. The company spent $2.5B in R&D last year, with a North American R&D center in Palo Alto, CA. Finally, they claim to be the #1 global data center and IP backbone network provider in the world. [Chris said Equinix has more total square footage in their data centers than NTT, but they don’t have the IP backbone network.]

NTT Com’s enterprise customers mostly use cloud for development and test applications. “It’s bursty in nature. They turn it up and turn it down,” Eldredge said. It’s also used for OTT broadcasts of sporting events and concerts. On January 1, 2014, NTT spun up 200,000 virtual machines (VMs) to meet demand for Europeans watching soccer matches on their mobile devices. After the soccer match was over, those VMs were de-activated.

With the Virtela acquisition, NTT Com has recently deployed their version of NFV capabilities in both their DCs and global network along with SDN based provisioning.

“SDN/NFV is a more scalable network technology that NTT Com is now using to provide cloud and managed services to a broad range of clients,” Eldredge said. “It allows us to specialize and provide custom solutions for our customers,” he added.

The NFV (higher layer) services NTT Com is now offering include: virtual firewall, network-hosted application accelerator, Secure Sockets Layer (SSL) VPN, IP-SEC gateway, an automated customer portal (for full control of services, self deployment, self management, and full visibility), and on-premises hardware-based managed services that provide a fully integrated managed solution for NTT Com customers.

The above NFV enabled services can be easily applied, monitored and rapidly changed. NTT Com can customize applications performance and service levels for specific users and profiles. In conclusion, Chris said that “NFV has become the next phase of the virtualized DC, extending the enterprise DC into the cloud.” [Such an extension, by definition, would be a hybrid cloud.]

In answer to this author’s question on when and if NTT Com would use NFV to deliver pure (L1-L3) network connectivity services, Chris confessed that it wasn’t on their roadmap at this time.

Summary and Conclusions:

Operators are planning for NFV and some – like NTT Com – already have implemented several NFV enabled services. Examples of NFV capabilities were clearly stated by Kolias of Orange Silicon Valley and Eldredge of NTT Com. It starts with higher layer (L5-L7) network functions/capabilities, cloud and managed services. However, it will take considerable time before the entire network is virtualized. “NFV everywhere by 2020” is too aggressive, according to some. And don’t expect mainstream connectivity functions (including Carrier Ethernet services, private lines, circuit switching, etc) to be virtualized anytime soon.

Early NFV adopters will be challenged as they work through internal issues like breaking down their organizational silos and adapting their business models to a quicker, more agile manner of provisioning and controlling network resources and services.

What happens to the network IT guy when the majority of network equipment disappears and is transformed into virtual appliances? Who maintains a compute server that’s also implementing many higher layer networking functions? What troubleshooting tools will be available for NFV entities?

Automation and self service are crucial for the network operator to deploy services quicker and hence realize more revenues. CenturyLink’s Feger said it best: “If you’re on a nine-month release strategy, your network isn’t really programmable.”

“Agility is an asset. You can only tame complexity,” noted Heavy Reading analyst and event host Jim Hodges, who quoted Brocade’s Kelly Herrell from an earlier presentation. “As an industry, we realize complexity is an inherent part of what we’re doing, but it’s something we have to address.”

Infonetics: Data center and enterprise SDN market soars 192% year-over-year; Largest SDN in China

Infonetics Research released excerpts from its 2014 Data Center and Enterprise SDN Hardware and Software report, which defines and sizes the market for software-defined networks (SDN).

SDN MARKET HIGHLIGHTS:

. Vendors are seeding the market with SDN-capable Ethernet switches in the data center and enterprise LAN

. The leaders in the SDN market will be solidified during the next 2 years, as 2014 lab trials give way to live production deployments

. Bare metal switches are the top use case for SDN-capable switches that are in use for SDN in the data center, and are anticipated to account for 31% of total SDN-capable switch revenue by 2018

. Infonetics forecasts the “real” market for SDN (that is, in-use for SDN Ethernet switches and controllers) to reach $9.5 billion in 2018

. The adoption of SDN network virtualization overlays (NVOs) is expected to go mainstream by 2018

ANALYST NOTE:

“There is no longer any question about software-defined networking (SDN) playing a role in data center and enterprise networks. Data center and enterprise SDN revenue, including SDN-capable Ethernet switches and SDN controllers, was up 192% year-over-year (2013 over 2012),” reports Cliff Grossner, Ph.D., directing analyst for data center, cloud, and SDN at Infonetics Research. “The early SDN explorers (NEC in Japan and pure-play SDN startups in North America) were joined in 2013 by the majority of traditional switch vendors and server virtualization vendors offering a wide selection of SDN products.”

“Even more eye opening,” continues Grossner, “in-use for SDN Ethernet switch revenue, including branded Ethernet switches, virtual switches, and bare metal switches, grew more than 10-fold in 2013 from the prior year, driven by significant increases in white box bare metal switch deployments by very large cloud service providers such as Google and Amazon.”

ENTERPRISE SDN AND DATA CENTER REPORT SYNOPSIS:

Infonetics’ annual data center and enterprise SDN report provides worldwide and regional market size, forecasts through 2018, analysis, and trends for SDN controllers and Ethernet switches in use for SDN. Notably, the report tracks and forecasts SDN controllers and Ethernet switches in-use for SDN separately from SDN-capable Ethernet switches. The report also includes significant SDN vendor announcements. Vendors tracked: Alcatel-Lucent, Big Switch, Brocade, Cisco, Cumulus, Dell, Extreme, HP, Huawei, IBM, Juniper, Midokura, NEC, Pica8, Plexxi, PLUMgrid, Vello Systems, VMware, others.

To buy the report, contact Infonetics: www.infonetics.com/contact.asp

RELATED ARTICLE:

Huawei and 21Vianet Collaborate to Launch China’s Largest Commercial SDN Network

RELATED REPORT:

87% of medium and large N. American enterprises surveyed by Infonetics intend to have SDN live in the data center by 2016 http://www.infonetics.com/pr/2014/SDN-Strategies-Survey-Highlights.asp

“Software-defined networking (SDN) spells opportunity for existing and new vendors, and the time to act is now. The leaders in the SDN market serving the enterprise will be solidified during the next two years as lab trials give way to live production deployments in 2015 and significant growth by 2016. The timelines for businesses moving from lab trials to live production for the data center and LAN are almost identical,” notes Cliff Grossner, Ph.D., directing analyst for data center, cloud, and SDN at Infonetics Research.

“There’s still some work to do on the part of SDN vendors. Expectations for SDN are clear, but there are still serious concerns about the maturity of the technology and the business case. Vendors need to work with their lead enterprise customers to complete lab trials and provide public demonstrations of success.”

SDN SURVEY HIGHLIGHTS (July 2014):

- Infonetics’ enterprise respondents are expanding the number of data center sites and LAN sites they operate over the next 2 years and are investing significant capital on servers and LAN Ethernet switching equipment

- A majority of survey respondents are currently conducting data center SDN lab trials or will do so this year; 45% are planning to have SDN in live production in the data center in 2015, growing to 87% in 2016

- Respondents’ plans for LAN SDN are nearly identical to their data center plans

- Among respondents, the top drivers for deploying SDN are improving management capabilities and improving application performance, while potential network interruptions and interoperability with existing network equipment are the leading barriers

- Meanwhile, enabling hybrid cloud is dead last on the list of drivers, a sign that SDN vendors have some work to do in educating enterprises that SDN can be an important enabler of hybrid cloud architectures

- On average, 17% of respondents’ data center Ethernet switch ports are on bare metal switches, and only 21% of those are in-use for SDN

- Nearly ¼ of businesses surveyed are ready to consider non-traditional network vendors for their SDN applications and orchestration software
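The bare metal figures in the list above can be combined into a single number: if 17% of data center Ethernet switch ports are on bare metal switches and 21% of those are in use for SDN, then only about 3.6% of all ports are bare metal ports actually running SDN. The small sketch below does that multiplication. Note the assumption (mine, not Infonetics’): it treats the two survey averages as if they applied to the same pool of ports, so the result is a rough reading rather than a reported statistic.

```python
def share_of_all_ports(bare_metal_share: float, in_use_for_sdn_share: float) -> float:
    """Fraction of all switch ports that are bare metal AND in use for SDN.

    Both arguments are fractions between 0 and 1.
    """
    return bare_metal_share * in_use_for_sdn_share


if __name__ == "__main__":
    overall = share_of_all_ports(0.17, 0.21)
    print(f"Bare metal ports in use for SDN: {overall:.1%} of all ports")
```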

RELATED RESEARCH:

- 87% of medium/large N.A. enterprises say they intend to have SDN in data center by 2016 (see above summary)

- $1 trillion to be spent on telecom and datacom equipment and software over next 5 years

- SDN and NFV to bring about shift in data center security investments

Summary of Michael Howard’s NFV migration talk at 2014 Hot Interconnects:

http://viodi.com/2014/09/05/2014-hot-interconnects-hardware-for-software-defined-networks-nfv-part-ii/

INFONETICS WEBINARS:

Visit www.infonetics.com/infonetics-events to register for upcoming webinars, view recent webinars on-demand, or learn about sponsoring a webinar.

. Making Your Network Run Hotter With SDN (View on-demand)

. NFV: An Easier Initial Target Than SDN? (View on-demand)

. SDN and NFV Roundup of Trials and Deployments (Oct. 23: Learn More)

. SDN: vSwitch or ToR, Where is the Network Intelligence, and Why? (Oct. 30: Sponsor)

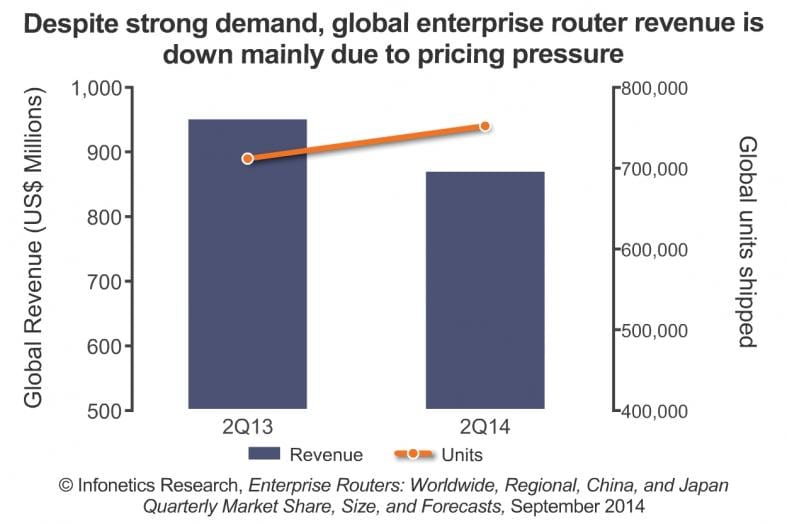

Enterprise Router Market Declines 9% while Service Provider Edge Router Market gains 4% Y-o-Y

Infonetics Research released excerpts from its 2nd quarter 2014 (2Q14) Enterprise Routers report, which tracks high end, mid-range, branch office, and low-end/SOHO router revenue and ports. There was no seasonal rebound in enterprise router sales in 2Q14, as the market was down 9% from one year ago.

2Q14 ENTERPRISE ROUTER MARKET HIGHLIGHTS:

. Worldwide enterprise router revenue totaled $867 million in 2Q14, just a 1% sequential gain in a quarter that typically sees a strong seasonal pickup; unit shipments are still growing, up 6% year-over-year

. Meanwhile, enterprise router sales dipped 9% in 2Q14 from the year-ago quarter (2Q13)

. The good news in 2Q14: demand for higher-performance routers was strong; high-end and mid-range router unit shipments were up by double digits year-over-year, while branch office and low-end routers posted more muted growth

. Asia Pacific is once again the top-performing region for enterprise routers in 2Q14; North American sales tumbled 19% year-over-year, and EMEA sales fell 11%

. U.S. vendor performance for enterprise routers was mostly down on a year-over-year basis in 2Q14, while Chinese vendors gained as preferences in China shift to local vendors Huawei and ZTE as well as the H3C division of HP
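For readers who want the dollar figures behind those percentages, the sketch below backs out the approximate revenue in the prior quarter and the year-ago quarter from the $867 million reported for 2Q14. The helper name is my own, and because the published percentages are rounded, the implied figures are approximations rather than numbers from the Infonetics report.

```python
def prior_value(current: float, pct_change: float) -> float:
    """Back out the earlier value, given the current value and the percent
    change measured from the earlier value to the current one."""
    return current / (1 + pct_change / 100.0)


if __name__ == "__main__":
    revenue_2q14 = 867.0  # $ millions, from the highlights above
    implied_1q14 = prior_value(revenue_2q14, +1)  # 1% sequential gain
    implied_2q13 = prior_value(revenue_2q14, -9)  # 9% year-over-year decline
    print(f"Implied 1Q14 revenue: ~${implied_1q14:.0f}M")
    print(f"Implied 2Q13 revenue: ~${implied_2q13:.0f}M")
```

On those assumptions, 1Q14 comes out near $858 million and 2Q13 near $953 million.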

ANALYST NOTE:

“For the second quarter in a row, enterprise router sales disappointed, and revenue is now trending downward. Demand for routers is still strong, as indicated by rising unit shipments, but discount pressure, preferences for local and lower-cost vendors in China, and lower public sector sales drove down revenue,” notes Matthias Machowinski, directing analyst for enterprise networks and video at Infonetics Research.

ENTERPRISE ROUTER REPORT SYNOPSIS:

Infonetics’ quarterly enterprise router report provides worldwide and regional market size, vendor market share, forecasts through 2018, analysis, and trends for high-end, mid-range, branch office, and low-end/SOHO router revenue and ports. Vendors tracked: Adtran, Alcatel-Lucent, Brocade, Cisco, HP, Huawei, Juniper, NEC, OneAccess, Yamaha, ZTE, others.

To buy the report, contact Infonetics: www.infonetics.com/contact.asp

RELATED REPORT EXCERPTS:

. Cloud is our number-one networking initiative, say enterprises in Infonetics’ latest survey

. Tight battle for 2nd after Cisco in Infonetics’ enterprise networking infrastructure scorecard

. $1 trillion to be spent on telecom and datacom equipment and software over next 5 years

. Infonetics releases Global Telecom and Datacom Market Trends and Drivers report

. Enterprise router market off to a rough start, plunges 14% sequentially

In sharp contrast to the enterprise router market, Dell’Oro Group reports that the Service Provider Edge Router market grew to its highest level ever, gaining four percent in the second quarter of 2014 versus the year-ago period, contributing to a record quarter for the Service Provider Router market overall.

“Demand drivers varied by country as all regions grew versus last year,” said Alam Tamboli, Senior Analyst at Dell’Oro Group. “In the United States, demand for routers in the backhaul for LTE networks has been one of the primary motives for investment in recent years; however, this quarter service providers in the region also invested heavily into fixed networks. In much of the world, routers used for LTE mobile backhaul networks continued to drive investment in the edge,” Tamboli added.

The Top Four SP Router Vendors Combined Accounted for Over 93% of the Market:

#1 Cisco Systems: Remained the first-ranked vendor with increased revenue into EMEA and Asia.

#2 Alcatel-Lucent: Achieved record Service Provider Edge Router sales, increasing revenue in every major region.

#3 Juniper Networks: Delivered a record quarter in Edge Router revenues driven primarily by sales into North America.

#4 Huawei Technologies: Saw a shift in demand as Service Providers in its domestic market, China, focused on mobile backhaul and cut back on routers for fiber deployments.

More info at: http://www.delloro.com/news/service-provider-edge-router-market-reaches-record-levels

2014 Hot Interconnects: Death of the God Box….or maybe NOT?

Introduction:

We compare and contrast two keynote speeches from opening day (Aug 26th) of the 2014 IEEE Hot Interconnects conference, held at Google’s campus in Mt View, CA. The focus is on disruptive innovation giving rise to radically new hardware... or not?

Abstract: The God Box is Dead, by J.R. Rivers of Cumulus Networks

The maturing landscape in both interconnect technology and consumer expectations is leading a time of innovation in network capacity and utility. Complex systems are being realized by loosely coupling available and emerging open components with relevant, consumable technologies enjoying rapid times to deployment. This talk will highlight the historical precedence and discuss these implications on future system architectures.

Presentation Summary:

In his 2014 Hot Interconnects keynote talk, J.R. Rivers, co-founder and CEO of Cumulus Networks, said: “The God Box is dead.” By that he meant not to expect any new revolutionary pieces of hardware (in the vein of the IBM 360 mainframe in the 1960s or Apple’s iPhone in 2007) anytime in the near future. “The IT industry is unlikely to create a new piece of hardware that will elevate a market that is mature,” he added.

Author’s Note: The first time I heard about a “God Box” was during the 1998-2000 fiber optic buildout boom. Many start-ups were making “Multi-Service Provisioning Platforms” (MSPPs), which they claimed could do any and all networking functions. Hence, MSPPs were referred to as “God Boxes.” After the fiber and dot com bust in 2001-2002, many of the new age carriers (CLECs) went bankrupt. They (and not the ILECs) had planned to run fiber to commercial buildings in support of either n x G/10G Ethernet OR SONET/SDH OC12 or OC48 access. After their demise, most of the MSPP companies disappeared.

Among the reasons Rivers cited to support his position:

1. The decline of research: Government investment in research is declining, while corporate R&D for most companies is much more Design than Research (Google is a likely exception). Venture capital and corporate research are evolutionary, seeking incremental improvements in existing technologies and products.

2. The (semiconductor?) supply chain is mature. [No clarification provided]

3. Components improve (in price-performance, power, size, etc.) faster than they can be re-invented. Microprocessors vs. network processors was given as an example, where the former have evolved to narrow the performance gap with the latter (due to the continuation of Moore’s law).

Other observations and advice for researchers and new product developers:

1. “Go places no man has ever gone before. Don’t rehash a design just to make incremental improvements.”

2. Aim at a broad market, especially for components. Niche markets disappear quicker than most think.

3. Track a benchmark rather than just the current generation of silicon. To clarify this point, J.R. wrote in a post conference email: “The point here is that many companies work on a piece of technology based on the current version of that technology (for example my CPU is way better than today’s i7) without taking into account likely evolutions in the current form as well as planning a roadmap based on what the incumbents are likely to build towards. A recent example of this in the interconnect world is the Fulcrum Microelectronics story.”

4. New silicon needs a 4x speed improvement over what’s available today, e.g. in switch silicon (Broadcom), network processors (Cavium), or I/O and bus interconnect (PLX Technology, recently acquired by Avago Technologies). If that can’t be achieved, the newly targeted silicon won’t gain significant market share.

For a systems developer, time to market and flexibility are vital to success. The hardware produced should facilitate rapid provisioning of services and be able to run various types of software/firmware.

J.R. shared an experience he had as a systems designer at Cisco where he helped develop their Nuova switches: “After releasing some recent ASIC-heavy products, Cisco found that what really mattered to customers was the provisioning system and the software. In the end, that provisioning system could have sat on industry-standard servers and been almost as successful,” Rivers said.

Q&A:

ONF Executive Director Dan Pitt (Alan’s IEEE colleague for 30+ years) asked J.R. to please tell us how his talk applied to Cumulus Networks, a very innovative software company. Mr. Rivers respectfully declined to do so, even though the audience (by a show of hands) indicated they were very interested in Cumulus. In fact, it was one of the reasons I attended the first day of the conference! Rivers said he’d talk to audience members off line about Cumulus, but then he disappeared as the morning break began (at least I couldn’t find him then or later).

Prof. David Patterson (UC Berkeley) challenged Rivers by saying that when Moore’s law ends (perhaps in 2020), there’ll be a lot more opportunities for tech innovation.

Abstract: Flash and Chips: A Case for Solid-State Warehouse-Scale Computers (WSC) using Custom Silicon, by David Patterson, PhD and Professor UC Berkeley

The 3G-WSC design emphasis will shift from hardware cost-performance and energy-efficiency to easing application engineering. The reliance on flash memory for long-term storage will create a solid-state server that has both much faster and more consistent storage latency and bandwidth. Custom SoCs connected by optical links will enable servers in a 3G-WSC to have better network interfaces and be one to two orders of magnitude larger than the servers of today, which should simplify both application development and WSC operations. Thus, a WSC of 2020 will be composed of ~400 3G-WSC servers instead of ~100,000 4U servers.

Such a 3G-WSC server would certainly be considered a supercomputer, but unlike those for high performance computing, it will be multiprogrammed (for both interactive and batch applications), be fault tolerant so as to be available 24×7, and be tail tolerant to deliver predictable response times.
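A quick back-of-the-envelope check on the abstract’s server counts: replacing ~100,000 servers with ~400 implies each new server stands in for roughly 250 of today’s, or a bit over two orders of magnitude. The sketch below does that arithmetic. It assumes (my assumption, not the abstract’s) that the two configurations deliver comparable total capacity, so that the ratio of server counts reflects the per-server size difference.

```python
import math


def consolidation(old_servers: int, new_servers: int) -> tuple[float, float]:
    """Return (ratio, orders_of_magnitude) for a server consolidation."""
    ratio = old_servers / new_servers
    return ratio, math.log10(ratio)


if __name__ == "__main__":
    ratio, orders = consolidation(100_000, 400)
    print(f"Each new server replaces ~{ratio:.0f} old ones "
          f"(~{orders:.1f} orders of magnitude)")
```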

Presentation Highlights:

Prof. Patterson noted that new market opportunities happen when existing engineering technologies change. One recent example is server architecture changes with virtualization and the move to cloud resident data centers.

The Professor observed that Moore’s law (doubling of transistors per same size die every 18 to 24 months) has slowed to a three-year doubling period and will slow to five+ years before it becomes defunct for SRAMs and DRAMs in 2020. Flash memories may or may not perpetuate Moore’s law.
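To see how much the doubling period matters, the sketch below compares transistor growth over a fixed span for the doubling periods mentioned above (18 to 24 months, three years, five years). The six-year window and the function name are illustrative choices of mine, not figures from the talk.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if the count doubles every
    `doubling_period_years`."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    span = 6  # years, an arbitrary comparison window
    for period in (1.5, 2.0, 3.0, 5.0):
        print(f"Doubling every {period} years -> "
              f"{growth_factor(span, period):.1f}x in {span} years")
```

Over six years, an 18-month doubling period gives 16x, a two-year period 8x, a three-year period 4x, and a five-year period only about 2.3x.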

[During my interview with him at 2014 Flash Memory Summit, Professor Simon Sze (see Note 1) said that Moore’s law for SRAM/DRAMs actually stopped around 2000 or 2001, but continued for Flash memories.]

Note 1. History Session @ Flash Memory Summit, Aug 7th, Santa Clara, CA: Interview with Simon Sze, Co-Inventor of the Floating Gate Transistor by Alan J Weissberger

http://ithistory.org/blog/?p=2163

When Moore’s law is dead or slowing to a crawl, there will be new opportunities for innovative custom silicon, according to Prof. Patterson. “When scaling (of transistors) stops, custom chip costs will drop,” he said. More ASICs will then be developed, supported by new hardware description languages that facilitate new designs.

All that will give rise to the next generation of Warehouse Scale Computers (WSC). It will be imperative to address “tail tolerance” in order to build new computing machines with predictable response times for all operations. Then, we actually may see new God boxes emerge, the UC Berkeley Professor concluded.
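The talk summary does not spell out why “tail tolerance” matters, but a commonly cited argument goes like this: when one request fans out to many servers and must wait for all of them, even a rare slow response on any single server makes the whole request slow. The sketch below computes that probability. The 1% slow-response rate and the assumption that servers behave independently are mine, for illustration only.

```python
def prob_any_slow(fan_out: int, p_slow: float = 0.01) -> float:
    """Probability that at least one of `fan_out` independent servers
    responds slowly, when each is slow with probability `p_slow`."""
    if fan_out < 0 or not 0.0 <= p_slow <= 1.0:
        raise ValueError("fan_out must be >= 0 and p_slow within [0, 1]")
    return 1.0 - (1.0 - p_slow) ** fan_out


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(f"fan-out {n:>4}: {prob_any_slow(n):.1%} of requests hit a slow server")
```

With a fan-out of 100, roughly 63% of requests would touch at least one slow server under these assumptions, which is why predictable per-operation response times become a design requirement at warehouse scale.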

In a post conference email, Prof. Patterson wrote: “The God Box is not dead. The iPhone created the smartphone phenomenon in 2007 and the iPad led to the tablet in 2010, both of which outsell PCs. The past is prelude, and the recent past suggests more God Boxes are coming in the near future.”

This author agrees as there are likely to be more instances of disruptive tech innovation in the coming years.

Reference:

http://www.hoti.org/hoti22/keynotes/

Addendum:

In a post conference email, Mr Rivers responded to Prof Patterson’s comment about the iPhone:

“Prof. Patterson’s comments around iPhone and iPad as related to the PC… the point he missed is that in both the smartphone and tablets, Apple’s hardware innovations are not their differentiators and that world is rife with industry standard hardware… there is not a God Box in that industry anymore (I’ll hold up the iPhone as a GodBox for the first 4 years of existence).”

Three other articles on the excellent 2014 Hot Interconnects conference are at: viodi.com