ETSI NFV evolution, containers, kubernetes, and cloud-native virtualization initiatives

Backgrounder:

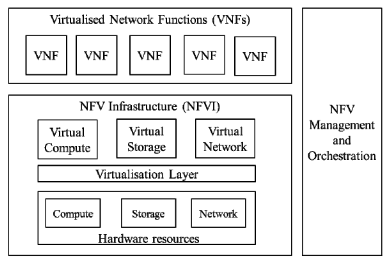

NFV, as conceived by ETSI in November 2012, has changed radically. While the virtualization and automation concepts remain intact, the implementation now envisioned is completely different. Neither Virtual Network Functions (VNFs) [1.] nor Management and Orchestration (MANO) [2.] was commercially successful, owing to telcos’ move to a cloud-native architecture. Moving beyond virtualization to a fully cloud-native design helps push to a new level the efficiency and agility needed to rapidly deploy the innovative, differentiated offers that markets and customers demand. An important distinguishing feature of the cloud-native approach is that it uses Containers [3.] rather than VNFs implemented as VMs.

Note 1. Virtual network functions (VNFs) are software applications that deliver network functions such as directory services, routers, firewalls, load balancers, and more. They are deployed as virtual machines (VMs). VNFs are built on top of NFV infrastructure (NFVI), including a virtual infrastructure manager (VIM) like OpenStack® to allocate resources like compute, storage, and networking efficiently among the VNFs.

Note 2. Management and Orchestration (MANO) is a framework for how VNFs are provisioned and configured, and for how the infrastructure that VNFs run on is deployed. MANO has been superseded by Kubernetes, as described below.

Note 3. Containers are units of a software application that package code together with all of its dependencies, so that they can run independently and reliably from one environment to another. Advantages of Containers include faster deployment and a much smaller footprint, both of which can improve resource utilization and lower resource consumption.

An article which compares Containers to VMs is here.

High Level NFV Framework:

Kubernetes Defined:

Each application consisted of many of these “container modules,” also called Pods, so a way to manage them was needed. Many different container orchestration systems were developed, but the one that became most popular was an open source project called Kubernetes, which assumed the role of MANO. Kubernetes ensured declarative interfaces at each level and defined a set of building blocks/intents (“primitives”) in terms of API objects. These objects are representations of various resources such as Pods, Secrets, Deployments, and Services. Kubernetes ensured that its design was loosely coupled, which made it easy to extend to support the varying needs of different workloads while still following an intent-based framework.
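To make the declarative, intent-based idea above more concrete, here is a minimal Python sketch of a reconciliation step: the operator states a desired replica count, and a loop computes the actions needed to move the observed state toward it. This is not the Kubernetes API; `DeploymentIntent`, `reconcile`, and the pod names are hypothetical stand-ins chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DeploymentIntent:
    """Hypothetical, simplified stand-in for a Deployment-like API object."""
    name: str
    replicas: int  # desired number of running pods


def reconcile(intent: DeploymentIntent, running_pods: List[str]) -> List[str]:
    """Return the actions needed to move the observed state toward the intent."""
    actions: List[str] = []
    diff = intent.replicas - len(running_pods)
    if diff > 0:
        # Too few pods: start the missing ones (naming scheme is illustrative).
        first_new = len(running_pods)
        actions += [f"start {intent.name}-{first_new + i}" for i in range(diff)]
    elif diff < 0:
        # Too many pods: stop the surplus pods at the end of the list.
        actions += [f"stop {pod}" for pod in running_pods[diff:]]
    return actions


intent = DeploymentIntent(name="upf", replicas=3)
print(reconcile(intent, ["upf-0"]))  # ['start upf-1', 'start upf-2']
```

The point of the sketch is that the caller never issues "start" or "stop" commands directly; it only declares the target, and the same function works whether the system is under or over the desired count.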

The traditional ETSI MANO framework as defined in the context of virtual machines along with 3GPP management functions.

ETSI MANO Framework and Kubernetes and associated constructs

Source of both diagrams: Amazon Web Services

…………………………………………………………………………………………………………………………………………………………………………………………………………………………….

ETSI NFV at 2023 MWC-Shanghai Conference:

During the 2023 MWC-Shanghai conference, ETSI hosted a roundtable discussion of its NFV and cloud-native virtualization initiatives. There were presentations from China Telecom, China Mobile, China Unicom, SKT, AIS, and NTT DOCOMO. Apparently, telcos want to leverage opportunities in cloud-based microservices and network resource management, but it also has become clear that there are “challenges.”

Three recurring themes during the roundtable were the following:

1) the best approach to implementing containerization (i.e., Virtual Machine (VM)-based containers versus bare-metal containers), which has replaced the Virtual Network Function (VNF) concept;

2) the lack of End-to-End (E2E) automation;

3) the friction and cost that is incurred from the presence of various incompatible fragmented solutions and products.

Regarding the best approach to implementing containerization, most attendees suggested that having a single, unified, backward-compatible platform for managing both bare-metal and virtualized resource pools would be advantageous. Their top three concerns when selecting between VM-based containers and bare-metal containers were performance, resource consumption, and security.

…………………………………………………………………………………………………………………………………………………………………………………………………………….

ETSI NFV Evolution:

The level of achievement and the real benefits of NFV may not be equal among all service providers worldwide, partly because of the particular use cases and contexts in which they operate. Even so, based on the ETSI NFV architecture, service providers have been able to build ultra-large-scale telco cloud infrastructures based on cross-layer and multi-vendor interoperability. For example, one of the world’s largest telco clouds based on the ETSI NFV standard architecture includes distributed infrastructure of multiple centralized regions and hundreds of edge data centers, with a total of more than 100,000 servers. In addition, some network operators have achieved very high ratios of virtualization (i.e., the number of virtualized network functions compared to legacy ATCA-based network elements) in their targeted network systems, e.g., above 70% in the case of 4G and 5G core network systems. ETSI NFV standards also continue to provide essential value for wider-scale multi-vendor interoperability, including in the hyperscaler ecosystem, as exemplified by recent announcements of support for ETSI NFV specifications in telco network management service solutions.

ETSI ISG NFV Release 5, initiated in 2021, had “consolidation and ecosystem” as its slogan. It aimed to address further operational issues in areas such as energy efficiency, configuration management, fault management, multi-tenancy, and network connectivity, and to consider new use cases or technologies developed by other organizations in the ecosystem.

Work on ETSI NFV Release 6 has started. It will focus on: 1) new challenges, 2) architecture evolution, and 3) additional infrastructure work items.

Key changes include:

- The broadening of virtualization technologies beyond traditional Virtual Machines (VMs) and containers (e.g., micro VM, Kata Containers, and WebAssembly)

- Creation of declarative intent-driven network operations

- Integrating heterogeneous hardware, Application Programming Interfaces (APIs), and cloud platforms through a unified management framework

All changes aim for simplification and automation within the NFV architecture. The developments are preceded by recent announcements of standards-based applications by hyperscalers: Amazon Web Services (AWS) Telco Network Builder (TNB) and Microsoft Azure Operator Nexus (AON) are two new NFV-Management and Orchestration (NFV-MANO)-compliant platforms for automating deployment of network services (including the core and Radio Access Network (RAN)) through the hybrid cloud.

As more network operators and vendors leverage the potential of OS container virtualization (container) technologies for deploying telecom networks, the ETSI ISG NFV has also studied how to enhance its specifications to support this trend. During this work, the community found ways to reuse the VNF modeling and the existing NFV management and orchestration (NFV-MANO) interfaces to address both OS container and VM virtualization technologies, thereby ensuring that the VNF modeling embraces the cloud-native network function (CNF) concept, a term now commonly used in the industry.

This has been achieved despite OS container and VM technologies having somewhat different management logic and resource descriptions. However, diverse and quickly changing open source solutions make it hard to define unified and standardized specifications. Nevertheless, because both kinds of virtualization technology can and will continue to play a major role in fulfilling the broad set of telecom network use cases, efforts are needed to evolve them further and to complement them with other, newer virtualization technologies (e.g., unikernels).

Furthermore, driven by new application scenarios and different workload requirements (e.g., video, Cloud RAN, etc.), new requirements for deploying diversified heterogeneous hardware resources in the NFV system are becoming a reality. For example, to support high-performance VNFs, requirements for heterogeneous acceleration hardware resources such as DPUs, GPUs, NPUs, FPGAs, and AI ASICs are being brought forward. In another example, to support the ubiquitous deployment of edge devices in the future, other types of heterogeneous hardware resources, such as integrated edge devices and specialized access devices, are also starting to be considered.
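One way to picture what managing heterogeneous hardware means for placement is the small Python sketch below: each node advertises the accelerators it has free, and a workload is placed on the first node that satisfies every request. This is an illustrative assumption, not an ETSI NFV or Kubernetes interface; `Node`, `place`, the node names, and the accelerator labels are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    # Free accelerator counts by type, e.g. {"gpu": 2}; labels are illustrative.
    accelerators: Dict[str, int] = field(default_factory=dict)


def place(workload_needs: Dict[str, int], nodes: List[Node]) -> Optional[str]:
    """Return the name of the first node that satisfies every accelerator request."""
    for node in nodes:
        fits = all(node.accelerators.get(kind, 0) >= count
                   for kind, count in workload_needs.items())
        if fits:
            # Reserve the accelerators so later placements see the reduced pool.
            for kind, count in workload_needs.items():
                node.accelerators[kind] = node.accelerators.get(kind, 0) - count
            return node.name
    return None  # no node in the pool can host this workload


pool = [Node("edge-1", {"dpu": 1}), Node("core-1", {"gpu": 2, "dpu": 1})]
print(place({"gpu": 1}, pool))   # core-1
print(place({"fpga": 1}, pool))  # None
```

A first-fit rule is used here only because it is the simplest to read; a real scheduler would weigh many more factors, such as locality, cost, and utilization.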

NFV architectures have evolved and will continue to evolve, especially with the rise of Artificial Intelligence (AI) and Machine Learning (ML) automation. Data centers, whether cloud-based or “on-premises,” are becoming complex, heterogeneous environments. In addition to Central Processing Units (CPUs), complementary Graphics Processing Units (GPUs) handle parallel processing functions for accelerated computing tasks of all kinds. AI, Deep Learning (DL), and big data analytics applications are underpinned by GPUs. However, as data centers have expanded in complexity, Data Processing Units (DPUs) have become the third member of this data-centric accelerated computing model. The DPU helps orchestrate and direct the data around the data center and other processing nodes on the network.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

References:

Updates in ETSI NFV for Accelerating the Transition to Cloud (abiresearch.com)

Omdia and Ericsson on telco transitioning to cloud native network functions (CNFs) and 5G SA core networks

Virtual Network Function Orchestration (VNFO) Market Overview: VMs vs Containers

6 thoughts on “ETSI NFV evolution, containers, kubernetes, and cloud-native virtualization initiatives”


This is a good summary of the NFV activity, an activity I was involved in from the first meeting. IMHO, the ISG made two basic mistakes. First, they should have simply referenced the current cloud state of the art for management and orchestration, rather than going their own way. Second, they should have avoided making a virtual network function map 1:1 to actual devices. The former meant they couldn’t keep pace with cloud evolution, and the second meant that they were locked into functions that were nothing more than hosted device feature sets, when the cloud could have enabled a much richer vision.

Many thanks for your comments, Tom, as you were a pioneer in leading the ETSI NFV ISG.

Do you think their new “cloud-native” approach in Release 5 and 6 will be successful? In particular ETSI NFV Release 6 will focus on: 1) new challenges, 2) architecture evolution, and 3) additional infrastructure work items. That is very vague, IMHO.

Tom

I read your views with great interest.

Can you share your thoughts on what is missing in the so-called “cloud-native” network functions of the day? Also, do you think that “presumption that a virtual network function is a hosted-software equivalent of a physical device” persists due to the network function OEM landscape getting less competitive, especially in the cellular mobile telephony infrastructure space, or is there any other reason(s)? Thanks

The biggest problem that the ISG created was the 1:1 VNF to PNF mapping presumption. What it did was to model VNFs as complex, monolithic, processes because that’s what devices are. A router is not stateless, for example. That means that a distributed, scalable, “virtual function” was much more difficult to construct. It also meant that it was essential to consider the question of how scalability and resiliency could be achieved when network devices are typically connected to something, and the connections themselves may not be portable. If you need traffic to/from a given trunk, you need something sitting at the termination. Some sort of efficient meshing should have been discussed and it was not.

The second issue relates to the first. The early work of the ISG tended to focus on one of two things–higher-level services or elements of 5G. The problem with the first of the two was that these higher-level services are too price-sensitive to make sense except for business users. The economic value of a service, meaning its potential to create benefit for both buyer and seller, should have been assessed. For the second, 5G, the challenge was that NFV didn’t really fully address lifecycle management of a shared-among-users infrastructure element.

The third issue is management. The functional E2E diagram done in late spring 2013 was a diagram about how to manage boxes, and even there it reflected older polled-status SNMP-like relationships rather than event-driven relationships. It never considered how the model would be impacted by a bunch of services/users sharing a resource. Who mediates the management? How do you avoid having management status polling swamp the devices?

Relative to the role that vendor/OEM contributions in the virtual function space played, much of the early resistance to abandoning the VNF:PNF relationship was that the suppliers of CPE software didn’t want to unbundle their software and allow composition from more primitive elements. In addition, if you looked at the whole notion of virtual CPE for businesses or (though it was impractical for residential users IMHO) consumers, the goals of the operator, the user, and the supplier of the current PNFs were all incompatible. If a VNF replaced a PNF, there were more players who wanted to make a profit and any profit made would have to come from displacing the PNF supplier in favor of a VNF. That supplier would also likely have to supply the VNF, so they set their pricing higher to ensure they didn’t lose revenue, and the whole process collapsed.

Hope I answered the questions without overkill!

Tom

For those who want more info on my views at the time (2013) I refer you to the following link to the submission of the PoC I presented, the first to be offered and approved by the ISG: https://www.cimicorp.com/CloudNFVPoCAsApproved.pdf

Thanks, Tom

ABI Research:

An Impact, but Not a Resurgence

Despite the rise of cloud-native telco networks, ETSI’s planned changes will likely have market impact due to the existing market penetration of NFV architectures. ABI Research forecasts that for Packet Gateway (PGW) and Serving Gateway (SGW) network functions, Cloud-native Network Functions (CNFs) will outpace Virtual Network Functions (VNFs) by a 43% Compound Annual Growth Rate (CAGR) (from 2021 to 2026, globally). These numbers confirm the buzz surrounding cloud-native solutions and their potential for market growth. Yet, for the same PGW and SGW network functions, the total number of cloud-native deployments by 2026 will still only be 36% that of virtual. This indicates the large stock of NFV architectures susceptible to impact by ETSI’s changes. The costs and opportunities associated with those impacts may be dependent upon the compatibility of current and next-phase NFV frameworks, which remains to be seen.
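As a rough illustration of what a 43% CAGR compounds to over the 2021 to 2026 window, the Python sketch below applies the standard compound-growth formula. It assumes the figure can be read as a plain annual growth rate applied for five years; the excerpt does not give the underlying deployment counts, so no absolute numbers are implied, and the function names are only illustrative.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` annually for `years` years."""
    return (1.0 + cagr) ** years


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from `start` to `end`."""
    return (end / start) ** (1.0 / years) - 1.0


# Five compounding periods between 2021 and 2026 (assumed reading of the forecast).
print(round(growth_multiple(0.43, 5), 2))  # 5.98
```

Under that reading, a 43% CAGR corresponds to roughly a sixfold increase over five years, which helps explain how a fast-growing category can still be only a fraction of a large installed base.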

The rollout of AWS TNB and Microsoft AON is consistent with ETSI’s plans for NFV development due to its NFV-MANO compliance. This reveals the kind of progress in coordination between Information Technology (IT) hyperscalers and telcos that is envisioned by ETSI’s Release-6 design, all toward integrating heterogeneous technologies and cloud platforms in a unified NFV framework. Yet, this consistency and compliance should not be interpreted as a resurgence or re-trending of ETSI NFV led by hyperscalers; rather, the compliance is to redirect the existing stock of telco network technologies toward hyperscalers’ public cloud services. The coincidence of ETSI’s planning and these new network automation services is nevertheless informative for future markets.

Acceleration to Hybrid and Multi-Cloud

NFV-MANO compliance signals that the IT industry is transitioning from de facto standards and norms governing software development toward the telecoms industry’s explicit international standards (e.g., ITU-T’s Telecommunication Management Network (TMN)), which will improve interoperability, especially for NFV architectures. This will open new opportunities for progression to the hybrid cloud, which may be pursued for advantages in automation, storage, and flexibility for load, further supporting edge compute. It also opens new opportunities for multi-cloud among several MANO-compliant cloud vendors, helping to prevent vendor lock-in.

Early signs of IT-telco coordination are auspicious for the fulfillment and future uptake of ETSI’s other planned developments, especially the broadening of virtualization technologies and shift to declarative intent-driven network operations. For instance, both network automation services offer Kubernetes-based containerization and support VNF and CNF architectures. While operations are not declarative intent-based, Google’s competing Telecom Network Automation service is; such competition may stimulate developments in this direction. With the head start in coordination among hyperscalers and telcos, ETSI’s plans appear viable within the designated time frame.

Despite the good news for the continuous relevance of ETSI NFV, this will not likely impede investment in the transition to cloud-native architectures. First, cloud-native advantages over NFV persist in reducing costs of Virtual Machine (VM) overhead, energy consumption, and boot time. Second, as ETSI plans indicate, NFV architecture is broadening to accommodate cloud-native technologies. Those technologies will remain key drivers of growth withstanding the strategic use of NFV standards for expanding cloud services. Moreover, hyperscalers will not likely wait for NFV to complete its current workplan to introduce these features. The ongoing drive toward public and hybrid cloud developments, even for 5G core networks, means that NFV will likely create a viable, scalable, and robust platform, but too late for it to be considered for current networks.

https://www.abiresearch.com/market-research/insight/7782363-updates-in-etsi-nfv-for-accelerating-the-t/