Will AI clusters be interconnected via InfiniBand or Ethernet: NVIDIA doesn’t care, but Broadcom sure does!

InfiniBand, long used as an HPC interconnect, currently dominates AI networking, accounting for about 90% of deployments. That is largely due to its very low latency and an architecture that reduces packet loss. Packet loss slows AI training workloads, which are already expensive and time-consuming. This is probably why Microsoft chose to run InfiniBand when building out its data centers to support machine learning workloads. However, InfiniBand tends to lag Ethernet in terms of top speeds. Nvidia’s very latest Quantum InfiniBand switch tops out at 25.6 Tb/s (51.2 Tb/s when counted bidirectionally) with 400 Gb/s ports. By comparison, Ethernet switching hit 51.2 Tb/s nearly two years ago and can support 800 Gb/s port speeds.
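To make the Ethernet figures above concrete, here is a minimal Python sketch of how aggregate switch capacity translates into a port count. The function name `port_count` is hypothetical, and the calculation is simple division: it assumes every port runs at the same speed and ignores real-world constraints such as SerDes lane layout or port breakout options.

```python
def port_count(switch_capacity_gbps: int, port_speed_gbps: int) -> int:
    """Return how many whole ports of a given speed fit in a switch's capacity.

    Illustrative only: assumes all ports run at the same speed.
    """
    return switch_capacity_gbps // port_speed_gbps


# 51.2 Tb/s is the Ethernet switching capacity quoted in the text.
ETHERNET_SWITCH_GBPS = 51_200

for speed_gbps in (800, 400):
    ports = port_count(ETHERNET_SWITCH_GBPS, speed_gbps)
    print(f"{speed_gbps} Gb/s ports: {ports}")
```

Under these assumptions, a 51.2 Tb/s switch works out to 64 ports at 800 Gb/s or 128 ports at 400 Gb/s.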

While InfiniBand currently has the edge, several factors point to increased Ethernet adoption for AI clusters in the future. Recent innovations are addressing Ethernet’s shortcomings compared to InfiniBand:

- Lossless Ethernet technologies

- RDMA over Converged Ethernet (RoCE)

- Ultra Ethernet Consortium’s AI-focused specifications

Some real-world tests have shown Ethernet offering up to a 10% improvement in job completion performance across all packet sizes compared to InfiniBand in complex AI training tasks. By 2028, it’s estimated that (1) 45% of generative AI workloads will run on Ethernet (up from <20% now) and (2) 30% will run on InfiniBand (up from <20% now).

In a lively session at Broadcom’s VMware Explore event, panelists were asked how best to network together the GPUs and other data center infrastructure needed to deliver AI. Broadcom’s Ram Velaga, SVP and GM of the Core Switching Group, was unequivocal: “Ethernet will be the technology to make this happen.” In his opening remarks, Velaga asked the audience, “Think about…what is machine learning and how is that different from cloud computing?” Cloud computing, he said, is about driving utilization of CPUs; with ML, it’s the opposite.

“No one…machine learning workload can run on a single GPU…No single GPU can run an entire machine learning workload. You have to connect many GPUs together…so machine learning is a distributed computing problem. It’s actually the opposite of a cloud computing problem,” Velaga added.
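A rough sketch can show why connecting many GPUs makes the network matter. The Python below estimates how long one gradient synchronization takes using ring all-reduce, a common collective algorithm; the choice of ring all-reduce is an assumption made for illustration, not something Velaga described. The function name, GPU count, and gradient size are hypothetical, and the model counts bandwidth only, ignoring latency, congestion, packet loss, and any overlap with compute.

```python
def ring_allreduce_seconds(num_gpus: int, gradient_bytes: int, link_gbps: float) -> float:
    """Estimate the bandwidth-only time of one ring all-reduce.

    In a ring all-reduce, each GPU sends (and receives) 2 * (N - 1) / N
    times the gradient size over its link.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    bytes_on_wire = 2 * (num_gpus - 1) / num_gpus * gradient_bytes
    return bytes_on_wire * 8 / (link_gbps * 1e9)


# Hypothetical job: 8 GPUs exchanging 1 GB of gradients per training step.
for link_gbps in (400, 800):
    seconds = ring_allreduce_seconds(8, 1_000_000_000, link_gbps)
    print(f"{link_gbps} Gb/s link: {seconds * 1000:.1f} ms per synchronization")
```

In this simplified model, doubling the per-GPU link speed halves the synchronization time, and that cost is paid on every training step.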

Nvidia (which acquired Israeli fabless interconnect chip maker Mellanox [1.] in a deal announced in 2019) says, “InfiniBand provides dramatic leaps in performance to achieve faster time to discovery with less cost and complexity.” Velaga disagrees, saying “InfiniBand is expensive, fragile and predicated on the faulty assumption that the physical infrastructure is lossless.”

Note 1. Mellanox specialized in switched fabrics for enterprise data centers and high performance computing, where high data rates and low latency are required, such as in a computer cluster.

…………………………………………………………………………………………………………………………………………..

Ethernet, on the other hand, has been the subject of ongoing innovation and advancement, Velaga said. He cited the following selling points:

- Pervasive deployment

- Open and standards-based

- Highest Remote Direct Memory Access (RDMA) performance for AI fabrics

- Lowest cost compared to proprietary tech

- Consistent across front-end, back-end, storage and management networks

- High availability, reliability and ease of use

- Broad silicon, hardware, software, automation, monitoring and debugging solutions from a large ecosystem

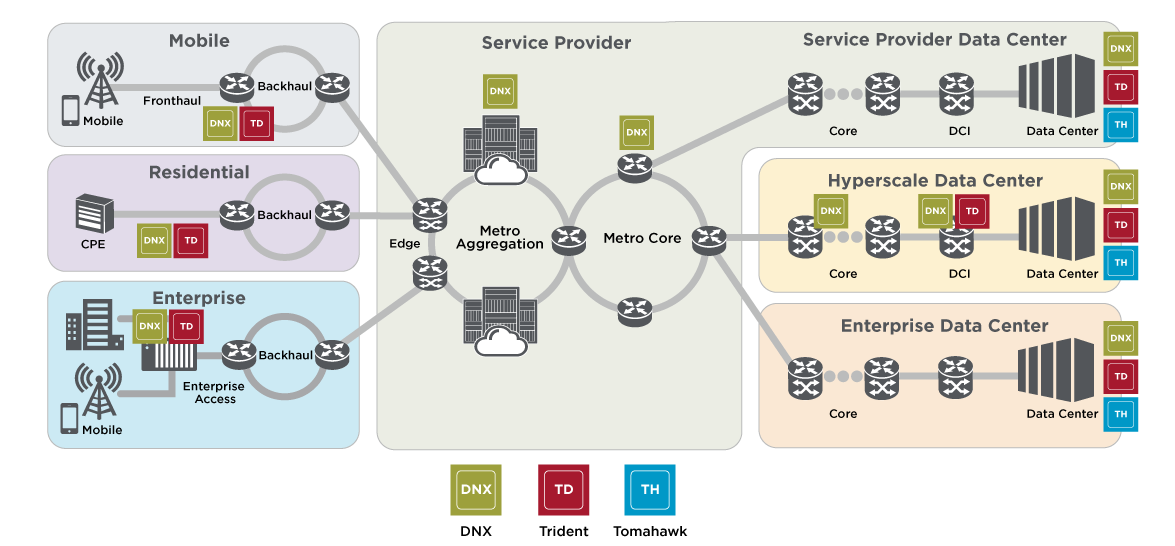

To that last point, Velaga said, “We steadfastly have been innovating in this world of Ethernet. When there’s so much competition, you have no choice but to innovate.” InfiniBand, he said, is “a road to nowhere.” It should be noted that Broadcom (which now owns VMWare) is the largest supplier of Ethernet switching chips for every part of a service provider network (see diagram below). Broadcom’s Jericho3-AI silicon, which can connect up to 32,000 GPU chips together, competes head-on with InfiniBand!

Image Courtesy of Broadcom

………………………………………………………………………………………………………………………………………………………..

Conclusions:

While InfiniBand currently dominates AI networking, Ethernet is rapidly evolving to meet AI workload demands. The future will likely see a mix of both technologies, with Ethernet gaining significant ground due to its improvements, cost-effectiveness, and widespread compatibility. Organizations will need to evaluate their specific needs, considering factors like performance requirements, existing infrastructure, and long-term scalability when choosing between InfiniBand and Ethernet for AI clusters.

–>Well, it turns out that Nvidia’s Mellanox division in Israel makes BOTH InfiniBand AND Ethernet chips, so they win either way!

…………………………………………………………………………………………………………………………………………………………………………..

References:

https://www.perplexity.ai/search/will-ai-clusters-run-on-infini-uCYEbRjeR9iKAYH75gz8ZA

https://www.theregister.com/2024/01/24/ai_networks_infiniband_vs_ethernet/

Broadcom on AI infrastructure networking—’Ethernet will be the technology to make this happen’

https://www.nvidia.com/en-us/networking/products/infiniband/

https://www.nvidia.com/en-us/networking/products/ethernet/

Part1: Unleashing Network Potentials: Current State and Future Possibilities with AI/ML

Using a distributed synchronized fabric for parallel computing workloads- Part II

Part-2: Unleashing Network Potentials: Current State and Future Possibilities with AI/ML

Comments:


Reuters: Broadcom shares slump as revenue target fails to impress investors counting on AI boost

Broadcom’s shares (AVGO.O) closed down 10% on Friday after the chipmaker’s tepid revenue forecast spooked investors betting on robust demand for AI chips to drive strong growth.

Chipmakers are bearing the brunt of Wall Street’s lofty expectations after a months-long rally in shares of semiconductor firms, as investors bet heavily on the hardware that supports generative AI technology.

Broadcom posted a big decline in quarterly revenue from its broadband and non-AI networking divisions on Thursday, while a hike in its annual forecast for AI chip sales failed to impress growth-hungry investors who have driven a more than 35% increase in its shares so far this year.

https://www.reuters.com/technology/broadcom-shares-slump-revenue-target-disappoints-investors-hoping-big-ai-boost-2024-09-06/

Dec 3, 2024 remarks by Nvidia’s Colette Kress – Executive Vice President and Chief Financial Officer:

Our Networking business is one of the most important additions that we added when we went to a datacenter scale. The ability to think through, not just the time where the data, the work is being done at a data processing or the use of the compute and/or the GPU is essential to think through the Networking’s position inside of that datacenter.

So we have two different offerings. We have InfiniBand and InfiniBand had tremendous success with many of the largest supercomputers in the world for decades. And that has been very important in terms of the size of data, the size of speed of data going through. It had different views in terms of how to deal with the traffic that will be there.

Ethernet, a great configuration that is the standard for many of the enterprises. But Ethernet was not built for AI. Ethernet was just built for the networking inside of datacenters. So we are taking some of the best of the breeds of what you see in terms of inter InfiniBand and creating Ethernet for AI. That allows customers now, both the choice between those. We can be full end-to-end systems with InfiniBand and now you have your choice in terms of what we do with Ethernet.

Both of these things are a growth option for us. In terms of this last quarter, we had some timing issues. But now, what you will see in terms of the continuation of our Networking will definitely grow. With our designs in terms of Networking with our compute, we have some of the strongest clusters that are being built and also using our Networking. That connection that we have done has been a very important part of the work that we’ve done since the acquisition of Mellanox. Folks do represent and understand our use of networking and how that can help their overall system as a whole.

https://seekingalpha.com/article/4741811-nvidia-corporation-nvda-ubs-global-technology-conference-transcript