CES 2025: Intel announces edge compute processors with AI inferencing capabilities

At CES 2025 today, Intel unveiled the new Intel® Core™ Ultra (Series 2) processors, designed to revolutionize mobile computing for businesses, creators and enthusiast gamers. Intel said “the new processors feature cutting-edge AI enhancements, increased efficiency and performance improvements.”

“Intel Core Ultra processors are setting new benchmarks for mobile AI and graphics, once again demonstrating the superior performance and efficiency of the x86 architecture as we shape the future of personal computing,” said Michelle Johnston Holthaus, interim co-CEO of Intel and CEO of Intel Products. “The strength of our AI PC product innovation, combined with the breadth and scale of our hardware and software ecosystem across all segments of the market, is empowering users with a better experience in the traditional ways we use PCs for productivity, creation and communication, while opening up completely new capabilities with over 400 AI features. And Intel is only going to continue bolstering its AI PC product portfolio in 2025 and beyond as we sample our lead Intel 18A product to customers now ahead of volume production in the second half of 2025.”

Intel also announced new edge computing processors, designed to provide scalability and superior performance across diverse use cases. Intel Core Ultra processors were said to deliver remarkable power efficiency, making them ideal for AI workloads at the edge, with performance gains that surpass competing products in critical metrics like media processing and AI analytics. Those edge processors are targeted at compute servers running in hospitals, retail stores, factory floors and other “edge” locations that sit between big data centers and end-user devices. Such locations are becoming increasingly important to telecom network operators hoping to sell AI capabilities, private wireless networks, security offerings and other services to those enterprise locations.

Intel edge products launching today at CES include:

- Intel® Core™ Ultra 200S/H/U series processors (code-named Arrow Lake).

- Intel® Core™ 200S/H series processors (code-named Bartlett Lake S and Raptor Lake H Refresh).

- Intel® Core™ 100U series processors (code-named Raptor Lake U Refresh).

- Intel® Core™ 3 processor and Intel® Processor (code-named Twin Lake).

“Intel has been powering the edge for decades,” said Michael Masci, VP of product management in Intel’s edge computing group, during a media presentation last week. According to Masci, AI is beginning to expand the edge opportunity through inferencing [Note 1]. “Companies want more local compute. AI inference at the edge is the next major hotbed for AI innovation and implementation,” he added.

Note 1. Inferencing in AI refers to the process in which a trained AI model makes predictions or decisions based on new data, rather than on the data it was trained on. It is essentially AI’s ability to apply learned knowledge to fresh inputs in real time. Edge computing plays a critical role in inferencing because it brings the computation closer to users. That lowers latency (much faster AI responses) and can also reduce bandwidth costs and improve privacy and security.
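To make the idea of inferencing concrete, here is a minimal Python sketch. It assumes a tiny logistic model whose weights are invented for illustration; the names `WEIGHTS`, `BIAS`, `predict` and `classify` are hypothetical and do not come from Intel or Nvidia. The point is only that inference applies already-learned parameters to new inputs and never updates them.

```python
import math

# Hypothetical parameters standing in for the result of an earlier training run.
# They are illustrative values, not taken from any real model.
WEIGHTS = [0.8, -0.5]
BIAS = 0.1


def predict(features):
    """Inference step: apply the fixed, already-learned weights to a new input."""
    score = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-score))  # logistic output in (0, 1)


def classify(features, threshold=0.5):
    """Turn the model's probability into a yes/no decision."""
    return predict(features) >= threshold


# Fresh inputs the model has not seen before; no weights change while scoring them.
for sample in ([2.0, 0.0], [0.0, 5.0]):
    print(sample, round(predict(sample), 3), classify(sample))
```

Running a function like `predict` on a server inside the store, hospital or factory, rather than sending each input to a distant data center, is what removes the network round trip from every response.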

Editor’s Note: Intel’s edge compute business – the one pursuing AI inferencing – is in its Client Computing Group (CCG) business unit. Intel’s chips for telecom operators reside inside its NEX business unit.

Intel’s Masci specifically called out Nvidia’s GPU chips, claiming Intel’s new silicon lineup supports up to 5.8x faster performance and better usage per watt. Indeed, Intel claims its “Core™ Ultra 7 processor uses about one-third fewer TOPS (Trillions of Operations Per Second) than Nvidia’s Jetson AGX Orin, but beats its competitor with media performance that is up to 5.6 times faster, video analytics performance that is up to 3.4x faster and performance per watt per dollar up to 8.2x better.”
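The claim as quoted does not say how “performance per watt per dollar” is calculated. One plausible reading, sketched below in Python, is workload throughput divided by power draw and then by purchase price. The function names and every number in the example are made-up placeholders, not measurements of any Intel or Nvidia product.

```python
def perf_per_watt_per_dollar(throughput, watts, price_usd):
    """Throughput normalized by both power draw and purchase price (assumed definition)."""
    if watts <= 0 or price_usd <= 0:
        raise ValueError("watts and price_usd must be positive")
    return throughput / watts / price_usd


def relative_advantage(a, b):
    """How many times better device a scores than device b on the metric."""
    return perf_per_watt_per_dollar(**a) / perf_per_watt_per_dollar(**b)


# Made-up placeholder figures, NOT measurements of any real product.
device_a = {"throughput": 120.0, "watts": 30.0, "price_usd": 400.0}
device_b = {"throughput": 100.0, "watts": 50.0, "price_usd": 1000.0}

print(f"{relative_advantage(device_a, device_b):.1f}x")
```

Under this reading, the ratio is computed from measured throughput on a given workload rather than from peak TOPS, and a modest throughput lead is multiplied by any advantage in power draw and price. That is how a chip with a lower TOPS rating could still come out ahead on the metric.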

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

However, Nvidia’s AI chips have been used for inference for quite some time. Company officials last month confirmed that 40% of Nvidia’s revenues come from AI inference, rather than AI training efforts in big data centers. Colette Kress, Nvidia Executive Vice President and Chief Financial Officer, said, “Our architecture allows an end-to-end scaling approach for them to do whatever they need to in the world of accelerated computing and AI. And we’re a very strong candidate to help them, not only with that infrastructure, but also with the software.”

“Inference is super hard. And the reason why inference is super hard is because you need the accuracy to be high on the one hand. You need the throughput to be high so that the cost could be as low as possible, but you also need the latency to be low,” explained Nvidia CEO Jensen Huang during his company’s recent quarterly conference call.

“Our hopes and dreams is that someday, the world does a ton of inference. And that’s when AI has really succeeded, right? It’s when every single company is doing inference inside their companies for the marketing department and forecasting department and supply chain group and their legal department and engineering, and coding, of course. And so we hope that every company is doing inference 24/7.”

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

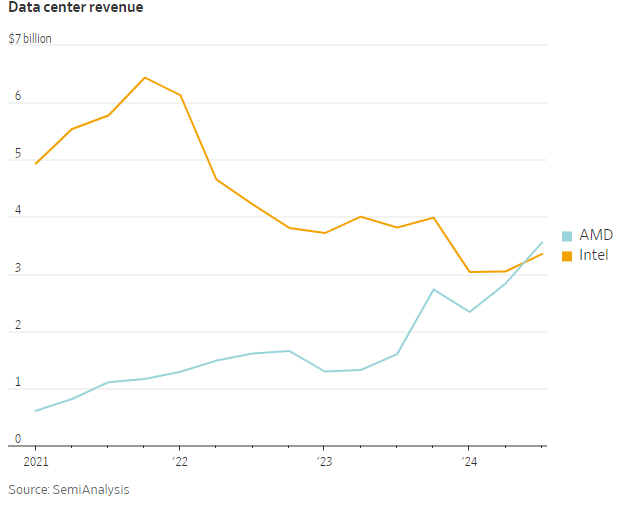

Sadly for its many fans (including this author), Intel continues to struggle in both data center processors and AI/GPU chips. The Wall Street Journal recently reported that “Intel’s perennial also-ran, AMD, actually eclipsed Intel’s revenue for chips that go into data centers. This is a stunning reversal: In 2022, Intel’s data-center revenue was three times that of AMD.”

Even worse for Intel, more and more of the chips that go into data centers are GPUs and Intel has minuscule market share of these high-end chips. GPUs are used for training and delivering AI. The WSJ notes that many of the companies spending the most on building out new data centers are switching to chips that have nothing to do with Intel’s proprietary architecture, known as x86, and are instead using a combination of a competing architecture from ARM and their own custom chip designs. For example, more than half of the CPUs Amazon has installed in its data centers over the past two years were its own custom chips based on ARM’s architecture, Dave Brown, Amazon vice president of compute and networking services, said recently.

This displacement of Intel is being repeated all across the big providers and users of cloud computing services. Microsoft and Google have also built their own custom, ARM-based CPUs for their respective clouds. In every case, companies are moving in this direction because of the kind of customization, speed and efficiency that custom silicon supports.

References:

https://www.intel.com/content/www/us/en/newsroom/news/2025-ces-client-computing-news.html#gs.j0qbu4

https://www.wsj.com/tech/intel-microchip-competitors-challenges-562a42e3

Massive layoffs and cost cutting will decimate Intel’s already tiny 5G network business

WSJ: China’s Telecom Carriers to Phase Out Foreign Chips; Intel & AMD will lose out

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

FT: Nvidia invested $1bn in AI start-ups in 2024

AI winner Nvidia faces competition with new super chip delayed

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

One thought on “CES 2025: Intel announces edge compute processors with AI inferencing capabilities”

At CES 2025, Nvidia said its latest generation AI processor series, Blackwell, is now in full production. Analysts had been concerned that Blackwell was running into production issues.

“Blackwell is in full production,” CEO Jensen Huang said. “Every single cloud service provider now has systems up and running. We have systems here (at CES 2025) from 15 computer makers.”

Huang also introduced the Nvidia Blackwell GeForce RTX 50 Series graphics processing units and a family of laptop computers that use versions of the new GPUs.

The top-of-the-line Nvidia GeForce RTX 5090 GPU has 92 billion transistors and delivers 3,352 trillion AI operations per second (TOPS).

“The GPU is just a beast,” Huang said.

The RTX 50 series desktop GPUs will be available starting Jan. 30. Notebook computers with the new GPUs will be available in March. The products are designed for gamers, creators and developers.