Nvidia’s annual AI developer conference (GTC) used to be a relatively modest affair, drawing about 9,000 people in its last year before the Covid outbreak. But the event, now unofficially dubbed “AI Woodstock,” is expected to bring in more than 25,000 in-person attendees.

Nvidia’s Blackwell AI chips, the main showcase of last year’s GTC (GPU Technology Conference), have only recently started shipping in high volume following delays related to the mass production of their complicated design. Blackwell is expected to be the main anchor of Nvidia’s AI business through next year. Analysts expect Nvidia Chief Executive Jensen Huang to showcase a revved-up version of that family called Blackwell Ultra at his keynote address on Tuesday.

March 18th Update: The next Blackwell Ultra NVL72 systems, which offer 1.5 times the memory and twice the bandwidth, will be used to accelerate the building of AI agents, physical AI, and reasoning models, Huang said. Blackwell Ultra will be available in the second half of this year. The Rubin AI chip is expected to launch in late 2026, and Rubin Ultra is slated for 2027.

Nvidia watchers are especially eager to hear more about the next generation of AI chips, called Rubin, which Nvidia has only teased in prior events. Ross Seymore of Deutsche Bank expects the Rubin family to show “very impressive performance improvements” over Blackwell. Atif Malik of Citigroup notes that Blackwell delivered 30 times the performance of the company’s previous generation on AI inferencing, the stage in which trained AI models generate output. “We don’t rule out Rubin seeing similar improvement,” Malik wrote in a note to clients this month.

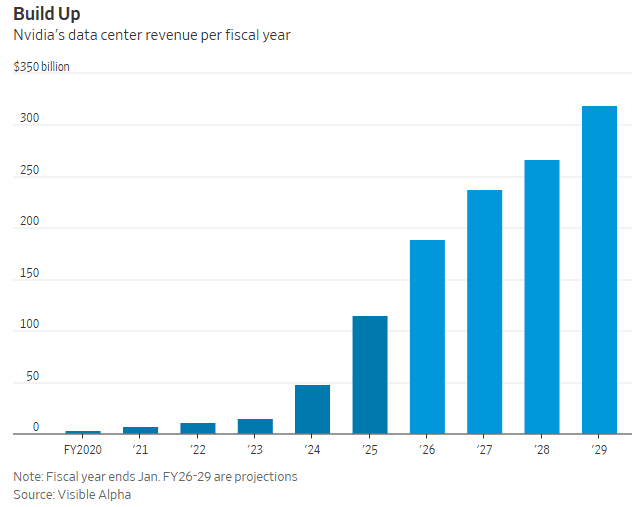

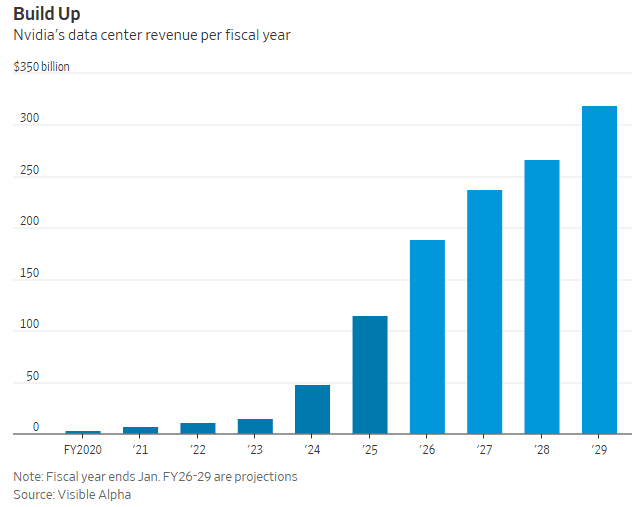

Rubin products aren’t expected to start shipping until next year. But much is already expected of the lineup; analysts forecast Nvidia’s data-center business will hit about $237 billion in revenue for the fiscal year ending in January of 2027, more than double its current size. The same segment is expected to eclipse $300 billion in annual revenue two years later, according to consensus estimates from Visible Alpha. That would imply an average annual growth rate of 30% over the next four years, for a business that has already exploded more than sevenfold over the last two years.

Nvidia has also been haunted by worries about competition from in-house chips designed by its biggest customers, such as Amazon and Google. Another concern has been the efficiency breakthroughs claimed by Chinese AI startup DeepSeek, which would seemingly lessen the need for the types of AI chip clusters that Nvidia sells for top dollar.

…………………………………………………………………………………………………………………………………………………………………………………………………………………….

Telecom Sessions of Interest:

Wednesday Mar 19 | 2:00 PM – 2:40 PM PDT

Delivering Real Business Outcomes With AI in Telecom [S73438]

Chris Penrose | VP & Head of Business Development Telco | NVIDIA

Andy Markus | SVP and Chief Data and Artificial Intelligence Officer | AT&T

Kaniz Mahdi | Director Technology, AWS Industries | Amazon Web Services

Anil Kumar | VP, Head of AI Center | Verizon

Hans Bendik Jahren | VP Network and Infrastructure | Telenor

In this session, executives from three leading telcos will share their unique journeys of embedding AI into their organizations. They’ll discuss how AI is driving measurable value across critical areas such as network optimization, customer experience, operational efficiency, and revenue growth. Gain insights into the challenges and lessons learned, key strategies for successful AI implementation, and the transformative potential of AI in addressing evolving industry demands.

Thursday Mar 20 | 11:00 AM – 11:40 AM PDT

AI-RAN in Action [S72987]

Soma Velayutham | VP, AI and Telecoms | NVIDIA

Aji Ed | VP & Head of CloudRAN, Mobile Networks | Nokia

Ryuji Wakikawa | VP, Research Institute of Advanced Technology | SoftBank

Freddie Södergren | VP and Head of Technology & Strategy | Ericsson

Karri Kuoppamaki | SVP, Advanced and Emerging Technologies | T-Mobile

Two AI trends are driving the need for AI-enabled infrastructure at the edge of the 5G/6G radio access network (RAN). First, inferencing for generative AI and AI agents requires AI compute infrastructure to be distributed from the edge to central clouds. Second, the RAN itself is evolving into an AI-native infrastructure. AI-RAN brings these trends together, providing an accelerated computing infrastructure that runs both radio signal processing and AI workloads. In the past six months, both SoftBank in Japan and T-Mobile in the United States have taken leading roles in transforming their networks to AI-RAN. Our panel will explore the motivations and practicalities of delivering a commercial-grade AI-RAN network that improves return on investment and increases spectral efficiency, network capacity, and network utilization.

Thursday Mar 20 | 9:00 AM – 9:40 AM PDT

How Indonesia Delivered a Telco-led Sovereign AI Platform for 270M Users [S73440]

Anissh Pandey | Sr. Director NCP Asia Pacific | NVIDIA

Lilach Ilan | Global Head of Business Development – Telco Operations | NVIDIA

Munjal Shah | Co-Founder and CEO | Hippocratic AI

Vikram Sinha | CEO | Indosat Ooredoo Hutchison

Senthil Ramani | Global Lead – Data & AI | Accenture

Sovereign AI enables nations to create their own AI and participate in the new global economy. Nations are partnering with telcos to build the foundational AI platform and ecosystem that their universities, startups, enterprises, and government agencies need to create AI. Indosat, a major telecoms provider with over 100 million customers, working with Lintasarta, GoTo, Accenture, and Hippocratic AI, has deployed a Sovereign AI Factory and a collection of LLMs for Indonesia through the NVIDIA Cloud Partner program. We’ll explore how Indosat set up the sovereign cloud and launched its own foundational large language model, Sahabat.ai, for the 277 million native Bahasa speakers using NVIDIA NeMo and NIM microservices. We’ll also discuss how partners like Accenture and Hippocratic AI are using the NVIDIA AI platform to accelerate AI use-case deployment in critical Indonesian sectors, including banking and healthcare.

Thursday Mar 20 | 3:00 PM – 3:40 PM PDT

Driving 6G Development With Advanced Simulation Tools [S72994]

CC Chong | Senior Director, Aerial Product Management | NVIDIA

Balaji Raghothaman | Chief Technologist, 6G | Keysight Technologies

Arien Sligar | Senior Principal Product Specialist | Ansys, Inc.

Tommaso Melodia | William Lincoln Smith Professor | Northeastern University

The NVIDIA Aerial Omniverse Digital Twin (AODT) is a platform that combines NVIDIA accelerated computing, NVIDIA Omniverse, and NVIDIA Aerial to provide a comprehensive, scalable, and flexible solution for 6G research and development. It enables researchers and developers to customize, program, and test 6G networks in near-real time, with AI/ML in the loop. It also lets them simulate and optimize network performance and quality of service based on site-specific data and system-level simulation. Keysight and Ansys have both adopted the platform in their advanced simulation tools. Keysight is leveraging our state-of-the-art 3D electromagnetic ray-tracing model and radio access network (RAN) modules for advanced 5G/6G end-to-end simulations. Ansys has integrated its PerceiveEM Solver into AODT to use NVIDIA AI/ML with the full-stack RAN in the loop, accelerating and simplifying 6G research and development. AODT has also enabled academic researchers to explore the potential of cloud- and AI-native networks for 6G system research, design, and development.

Thursday Mar 20 | 2:00 PM – 2:40 PM PDT

Defining AI-Native RAN for 6G [S72985]

Chris Dick | Senior Distinguished Engineer, 5G/6G | NVIDIA

Ardavan Tehrani | Director Engineering | Samsung Research

Moe Win | Professor | MIT

Jim Shea | CEO | DeepSig, Inc.

Kai Mao | Head of AI-RAN Strategy for Fujitsu Network Business | Fujitsu

The telecoms industry is working to integrate AI into the design, operation, and optimization of 6G networks to enable unprecedented levels of automation, efficiency, and adaptability. The AI-native 6G radio access network (RAN) embodies this expectation and makes AI a foundational element of the 6G stack. In this session, leading telecoms industry stakeholders will outline how they’re advancing 6G research, with AI integration, to revolutionize connectivity, performance, and societal transformation. They’ll explore how the AI-native 6G RAN can deliver extreme levels of radio access performance, integrating technologies like deep learning and edge computing, promoting sustainability, enhancing public safety, and driving economic growth through advanced applications like smart cities, digital twins, and immersive communication.

Thursday Mar 20 | 4:00 PM – 4:40 PM PDT

Pushing Spectral Efficiency Limits on CUDA-accelerated 5G/6G RAN [S72990]

Vikrama Ditya | Senior Director of Software, Aerial | NVIDIA

Tommaso Balercia | Principal Engineer, Aerial | NVIDIA

Yuan Gao | Senior Software Engineer, 5G – Algorithms | NVIDIA

The demand for mobile broadband continues to grow, and the radio access networks (RAN) delivering it are correspondingly asked to support an extraordinary rate of innovation. RANs based on software-defined platforms are key to sustaining that rate of innovation. Among software-defined platforms, GPU-based ones make it possible to introduce complex algorithms in pursuit of new levels of spectral efficiency. This is due both to the ease with which GPUs can be programmed and to their computational efficiency. Moreover, GPU-based 5G and 6G RAN stacks can run coherently with the code of a new class of simulation tools: digital twins. Because the GPU can efficiently run both physics simulation chains and the 5G/6G RAN stack, simulations can reach new scales and levels of accuracy. Such simulation environments, in turn, enable the creation and detailed characterization of new algorithms. In this talk, we will demonstrate how a series of key algorithms that harness the GPU's computational power push the boundary of system spectral efficiency. The characterization is performed in a digital twin environment in which the radio environment closely resembles real field conditions.
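Spectral efficiency, the figure of merit this talk targets, is delivered throughput divided by occupied bandwidth, measured in bit/s/Hz. As a point of reference that is not taken from the session, the sketch below computes it and compares it with the Shannon bound log2(1 + SNR) for a single-antenna AWGN link. The 100 MHz carrier, 500 Mbit/s throughput and 20 dB SNR are hypothetical numbers chosen only for illustration.

```python
import math


def spectral_efficiency(throughput_bps: float, bandwidth_hz: float) -> float:
    """Achieved spectral efficiency in bit/s/Hz."""
    return throughput_bps / bandwidth_hz


def shannon_bound(snr_db: float) -> float:
    """Shannon capacity per Hz of a single-antenna AWGN link: log2(1 + SNR)."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB to a linear power ratio
    return math.log2(1 + snr_linear)


# Hypothetical example inputs, not figures from the GTC session
bandwidth_hz = 100e6    # 100 MHz carrier
throughput_bps = 500e6  # 500 Mbit/s delivered cell throughput
snr_db = 20.0           # average signal-to-noise ratio

achieved = spectral_efficiency(throughput_bps, bandwidth_hz)
bound = shannon_bound(snr_db)
print(f"achieved: {achieved:.2f} bit/s/Hz, Shannon bound: {bound:.2f} bit/s/Hz")
```

With these inputs, the link reaches 5.0 bit/s/Hz against a bound of about 6.66 bit/s/Hz. Multi-antenna (MIMO) systems can exceed the single-link figure by sending parallel spatial layers. For that reason, the single-antenna bound is only a rough yardstick for multi-antenna 5G cells.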

Thursday Mar 20 | 4:00 PM – 4:40 PM PDT

Enable AI-Native Networking for Telcos with Kubernetes [S72993]

Erwan Gallen | Senior Principal Product Manager | Red Hat

Elad Blatt | Global Head Business Development Telco Networking | NVIDIA

Ahmed Guetari | VP and GM, Service Providers | F5

As telcos transform their networks for the age of AI, using the BlueField-3 (BF3) DPU engines has been challenging for developers and clients alike. Yet BF3 is a critical part of deploying and securing an accelerated compute cluster for this networking-for-AI infrastructure. The DOCA Platform Framework (DPF) simplifies this task, providing life cycle management (LCM) and provisioning for both the BF3, as a platform, and the services running on it as Kubernetes (K8s) containers. DPF is deployed via two network operators in the Kubernetes environment, which let you deploy and service-chain NVIDIA and third-party services. With this, for the first time, independent software vendors (ISVs), operating system (OS) vendors, and developers can easily deploy and orchestrate services and onboard these tools into their environments. In this session, OS and ISV partner companies that have adopted DPF will share their experience, what they have been able to achieve, and what comes next.

Monday Mar 17 | 3:00 PM – 4:45 PM PDT

Automate 5G Network Configurations With NVIDIA AI LLM Agents and Kinetica Accelerated Database [DLIT72350]

Maria Amparo Canaveras Galdon | Senior Solutions Architect Generative AI | NVIDIA

Swastika Dutta | Solutions Architect Generative AI | NVIDIA

Shibani Likhite | Solutions Architect | NVIDIA

Nick Reamaroon | Solutions Architect | NVIDIA

Anna Daccache | Solutions Architect Generative AI | NVIDIA

Learn how to create AI agents using LangGraph and NVIDIA NIM to automate 5G network configurations. You’ll deploy LLM agents to monitor real-time network quality of service (QoS) and dynamically respond to congestion by creating new network slices. LLM agents will process logs to detect when QoS falls below a threshold, then automatically trigger a new slice for the affected user equipment. Using graph-based models, the agents understand the network configuration, identifying impacted elements. This ensures efficient, AI-driven adjustments that consider the overall network architecture.

We’ll use the OpenAirInterface 5G lab to simulate the 5G network, demonstrating how AI can be integrated into real-world telecom environments. You’ll also gain practical knowledge on using Python with LangGraph and NVIDIA AI endpoints to develop and deploy LLM agents that automate complex network tasks.
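The control loop the lab describes is to watch QoS in the logs, detect a drop below a threshold, and trigger a new slice for the affected user equipment. Its rule-based core can be sketched in plain Python without the LLM or LangGraph layers. Everything here is hypothetical: the log format, the `QosSample` fields, the 50 Mbit/s threshold and the `request_slice` stub are illustrative assumptions. None of them are OpenAirInterface, LangGraph or NIM APIs.

```python
from dataclasses import dataclass


@dataclass
class QosSample:
    ue_id: str              # hypothetical user-equipment identifier
    throughput_mbps: float  # measured downlink throughput


def parse_log_line(line: str) -> QosSample:
    """Parse a hypothetical log line of the form 'ue=<id> thr=<Mbit/s>'."""
    fields = dict(part.split("=", 1) for part in line.split())
    return QosSample(ue_id=fields["ue"], throughput_mbps=float(fields["thr"]))


def find_degraded_ues(lines: list[str], threshold_mbps: float) -> list[str]:
    """Return the UEs whose most recent sample falls below the QoS threshold."""
    latest: dict[str, float] = {}
    for line in lines:
        sample = parse_log_line(line)
        # Later log lines overwrite earlier ones, so only the newest sample counts
        latest[sample.ue_id] = sample.throughput_mbps
    return sorted(ue for ue, thr in latest.items() if thr < threshold_mbps)


def request_slice(ue_id: str) -> dict:
    """Hypothetical stub for the tool call an agent would make to create a slice."""
    return {"action": "create_slice", "ue": ue_id}


logs = [
    "ue=ue-001 thr=85.0",
    "ue=ue-002 thr=62.5",
    "ue=ue-002 thr=31.0",
    "ue=ue-003 thr=12.4",
]
actions = [request_slice(ue) for ue in find_degraded_ues(logs, threshold_mbps=50.0)]
print(actions)
```

In the lab's design, an LLM agent decides when to call a tool like `request_slice` and reasons over a graph of the network configuration to find impacted elements. Here that decision is a fixed threshold rule, which keeps the sketch deterministic but gives up the agent's ability to weigh the wider network architecture.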

Prerequisite: Python programming.

………………………………………………………………………………………………………………………………………………………………………………………………………..

References:

NVIDIA and Telecom Industry Leaders to Develop AI-Native Wireless Networks for 6G:

T-Mobile, MITRE, Cisco, ODC and Booz Allen Hamilton to Collaborate on Development of AI-Native Network Stack for 6G on NVIDIA AI Aerial Platform

The goal is to develop AI-RAN technology further, using AI to improve radio networks and deploy AI applications at the edge — running network and AI workloads together.

“The end goal is to have a fully integrated AI-native wireless network that sets new benchmarks in spectral efficiency, power efficiency, operational efficiency, security, cost-effectiveness and new opportunities for revenue generation,” NVIDIA’s Ronnie Vasishta said. “This will be a scalable solution ready for global deployments.”

On Tuesday, Nvidia launched new additions to its Aerial Research portfolio, a suite of research tools for developing, training, simulating, and deploying wireless networks. The additions include the Omniverse Digital Twin Service, for simulating 5G and 6G infrastructure from single towers to entire cities; the Aerial Commercial Test Bed on Nvidia MGX; and upgrades in Sionna 1.0, its 5G and 6G physical-layer research software.

Nvidia has achieved a 40% performance improvement in network simulations using the new tools and expects those gains to translate to the real world, Vasishta said.

References:

https://nvidianews.nvidia.com/news/nvidia-and-telecom-industry-leaders-to-develop-ai-native-wireless-networks-for-6g

https://www.fierce-network.com/cloud/nvidia-gtc-top-telco-takeaways-ai-ran-6g-ai-factories-and-oh-those-cute-robots

Ultimately, the biggest development from GTC was Nvidia’s aggressive product road map. During his keynote, CEO Jensen Huang announced that the company’s Blackwell Ultra AI server, available later this year, would outperform the current model by 50%. Then he said that the Vera Rubin AI server, scheduled for the second half of 2026, would be 3.3 times faster than Blackwell Ultra. The showstopper was the unveiling of the Rubin Ultra AI server—set for late 2027—with 14 times the performance of Blackwell Ultra. That figure drew gasps from the audience.
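The Rubin figures were quoted relative to Blackwell Ultra, while Blackwell Ultra was quoted relative to the current Blackwell server. Chaining the multiples therefore puts all four products on one baseline. The quick sketch below treats the quoted speedups as simple multiplicative factors. That is an assumption: Nvidia's comparisons may rest on different workloads or numeric precisions for each figure.

```python
# Speedups as quoted in the keynote, each relative to the product named.
BLACKWELL_ULTRA_VS_BLACKWELL = 1.5     # "outperform the current model by 50%"
RUBIN_VS_BLACKWELL_ULTRA = 3.3         # Vera Rubin vs. Blackwell Ultra
RUBIN_ULTRA_VS_BLACKWELL_ULTRA = 14.0  # Rubin Ultra vs. Blackwell Ultra


def relative_to_blackwell() -> dict[str, float]:
    """Re-express each quoted speedup relative to the current Blackwell server."""
    return {
        "Blackwell": 1.0,
        "Blackwell Ultra": BLACKWELL_ULTRA_VS_BLACKWELL,
        "Vera Rubin": BLACKWELL_ULTRA_VS_BLACKWELL * RUBIN_VS_BLACKWELL_ULTRA,
        "Rubin Ultra": BLACKWELL_ULTRA_VS_BLACKWELL * RUBIN_ULTRA_VS_BLACKWELL_ULTRA,
    }


for name, factor in relative_to_blackwell().items():
    print(f"{name}: {factor:.2f}x")
```

Under that assumption, Vera Rubin works out to roughly 5 times the current Blackwell server (1.5 × 3.3 = 4.95) and Rubin Ultra to 21 times (1.5 × 14).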

Nvidia made the case that the overall market opportunities for AI and AI data center infrastructure are expanding rapidly. Huang expects the industry will spend roughly $500 billion on data center capital expenditures this year, rising to more than $1 trillion by 2028, with Nvidia’s GPU chip business gaining a larger share of the spending in the coming years.
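Huang's two data points imply a compound annual growth rate, CAGR = (end / start)^(1 / years) − 1. The sketch below assumes "this year" means 2025 (the year of this GTC) and treats 2025 to 2028 as three years of compounding. Both are our assumptions about the endpoints, not figures Huang gave.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# ~$500B of data-center capex in 2025 rising to $1T in 2028 (three years, assumed)
rate = cagr(500e9, 1e12, years=3)
print(f"implied growth: {rate:.1%} per year")
```

Doubling over three years works out to about 26% a year. Huang said "more than" $1 trillion, so that figure is a floor on the implied growth rate rather than a point estimate.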

Part of that growth will come from the rising number of Nvidia GPUs inside data centers. The largest GPU clusters, so-called superclusters, have grown from 16,000 GPUs to more than 100,000 GPUs during the past year. Huang told me he’s confident that several one-million-GPU clusters would be built by 2027.

“Almost the entire world got it wrong,” Huang said. “The amount of computation we need at this point as a result of agentic AI, as a result of reasoning, is easily 100 times more than we thought we needed this time last year.”

https://www.barrons.com/articles/nvidia-stock-buy-ai-robotics-6f39cdc6?mod=hp_LEDE_B_1

June 18, 2025: Deloitte launches Agentic AI Blueprint to help unlock US$150 billion in value for telecom organizations

Deloitte announced today the launch of its Agentic AI Blueprint for Telcos to help telecom organizations scale and accelerate their AI transformation. With telcos facing rising complexity and margin pressure, the industry is primed to benefit from the transformational impact of AI. While traditional AI delivers incremental improvements, agentic AI can enable true automation—empowering intelligent systems to reason, act, and adapt across core telco functions like network management, service delivery, and customer care.

https://www.prnewswire.com/news-releases/deloitte-launches-agentic-ai-blueprint-to-help-unlock-us150-billion-in-value-for-telecom-organizations-302484415.html

Deloitte’s Agentic AI Blueprint can give telcos a clear, scalable path to move beyond pilots and deliver agentic AI solutions that can help boost efficiency and drive value. The solution enables telecom teams to identify and implement high-impact AI use cases (such as network management, billing, and customer service) within their existing infrastructure. Built on TM Forum’s widely adopted enhanced telecoms operation map (eTOM), powered by AI and Deloitte’s proprietary industry data, it helps accelerate deployment and maintain consistency with industry standards.

“Agentic AI presents a US$150 billion opportunity for the telecom industry—and the race to capture that value has already begun. To move beyond pilots and drive modernization, telcos can utilize a clear, scalable path to embed AI across their operations. Deloitte’s Agentic AI Blueprint provides organizations the tools to help build and scale agentic AI solutions quickly, and efficiently.”

— Baris Sarer, Telecom, Media and Entertainment, and Technology Industry AI leader, Deloitte Consulting LLP

The blueprint in action: A telecom-specific approach

The Agentic AI Blueprint helps telecom executives pinpoint where AI can have the most impact and design agentic workflows that help align with their operations. It includes a model for prioritizing AI use cases based on impact, a library of telecom-specific workflows aligned to TM Forum’s eTOM framework, automated process engineering tools, and practical playbooks for design and implementation. Additionally, the blueprint leverages Deloitte’s relationships with leading cloud and AI technology providers to help support integration.

“Deloitte’s Agentic AI Blueprint will provide TM Forum members with a clear path-to-production solution using TM Forum’s Open Digital Architecture (ODA), an industry-standard framework, designed to enable agile, modular, and open digital business capabilities. As we introduce our own ODA AI-Native Integration Blueprint, we believe such resources will collectively accelerate the implementation of Agentic AI use cases. Together, we’re helping telecom organizations to quickly and strategically integrate Agentic AI into their operations to deliver intuitive, intelligent experiences that will turn everyday customer interactions into lasting loyalty.”