Author: Vincent Rodriguez

From LPWAN to Hybrid Networks: Satellite and NTN as Enablers of Enterprise IoT – Part 2

By Afnan Khan (ML Engineer) and Mehsam Bin Tahir (Data Engineer)

Introduction:

This is the second of two articles on the impact of the Internet of Things (IoT) on the UK telecom industry. The first is:

Enterprise IoT and the Transformation of UK Telecom Business Models – Part 1

Executive Summary:

Early Internet of Things (IoT) deployments relied heavily on low-power wide-area networks (LPWANs) to deliver low-cost connectivity for distributed devices. While these technologies enabled initial IoT adoption, they struggled to deliver sustainable commercial returns for telecom operators. In response, attention has shifted towards hybrid terrestrial–satellite connectivity models that integrate Non-Terrestrial Networks (NTN) directly into mobile network architectures. In 2026, satellite connectivity is increasingly positioned not as a universal coverage solution but as a resilience and continuity layer for enterprise IoT services (Ofcom, 2025).

The Commercial Limits of LPWAN-Based IoT:

LPWAN technologies enabled low-cost connectivity for specific IoT use cases but were typically deployed outside mobile core architectures. This limited their ability to support quality of service guarantees, enterprise-grade security and integrated billing models. As a result, LPWAN deployments often remained fragmented and failed to scale into durable enterprise business models, restricting their long-term commercial value for telecom operators (Ofcom, 2025).

Satellite and NTN as Integrated Mobile Extensions:

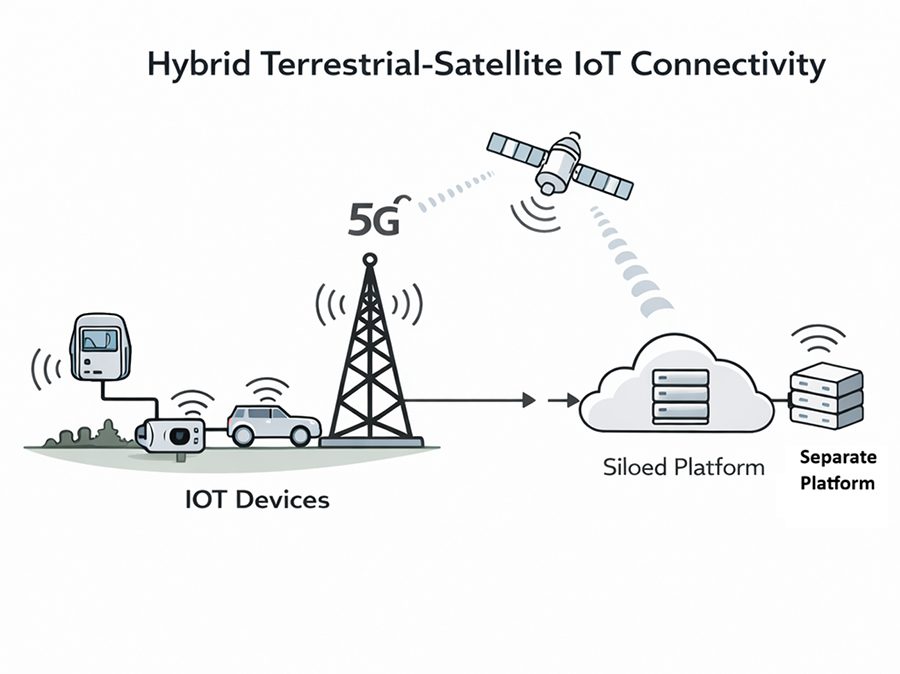

In contrast, satellite and NTN connectivity extends existing mobile networks rather than operating as a parallel IoT layer. When non-terrestrial connectivity is integrated into 5G core infrastructure, telecom operators are able to deliver managed IoT services with consistent security, performance and billing models across both terrestrial and remote environments. This architectural shift allows satellite connectivity to be packaged as part of a unified enterprise service rather than sold as a standalone or niche connectivity product (3GPP, 2023). Figure 1 illustrates this hybrid terrestrial–satellite model, showing how satellite connectivity functions as an extension of mobile networks to support continuous IoT services across urban, rural and remote environments.

Figure 1: Hybrid terrestrial–satellite connectivity supporting continuous IoT services across urban, rural and remote environments.
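The "unified enterprise service" point can be made concrete with a small example. This is a minimal sketch that assumes a hypothetical subscription object. It credits IoT usage to one enterprise account whether the traffic arrived over terrestrial 5G or over the satellite extension. The class names and the `"terrestrial"`/`"ntn"` labels are illustrative only. They are not a 3GPP charging interface or any operator's billing API.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class UsageRecord:
    device_id: str
    access: str        # hypothetical label: "terrestrial" or "ntn"
    megabytes: float


@dataclass
class EnterpriseSubscription:
    """One managed-service subscription covering every access type (illustrative)."""
    customer: str
    usage_by_access: dict = field(default_factory=lambda: defaultdict(float))

    def record(self, rec: UsageRecord) -> None:
        # Usage is credited to the same subscription whether it arrived over
        # terrestrial 5G or the satellite (NTN) extension; only the tag differs.
        self.usage_by_access[rec.access] += rec.megabytes

    def total_mb(self) -> float:
        return sum(self.usage_by_access.values())


sub = EnterpriseSubscription("offshore-operator")
for r in [UsageRecord("buoy-1", "terrestrial", 12.5),
          UsageRecord("buoy-1", "ntn", 2.5),
          UsageRecord("buoy-2", "ntn", 1.0)]:
    sub.record(r)
print(sub.total_mb())  # 16.0
```

A per-access breakdown is kept, so satellite usage could still be rated differently within the same bill.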

Industrial Use Cases and Hybrid Connectivity

In sectors such as offshore energy, agriculture, logistics and remote infrastructure monitoring, IoT deployments prioritise coverage continuity and service resilience over peak data throughput. Hybrid terrestrial–satellite connectivity enables operators to offer coverage guarantees and service level agreements that LPWAN-based models could not reliably support. In 2026, Virgin Media O2 launched satellite-enabled services aimed at supporting rural connectivity and improving resilience for IoT-dependent applications, reflecting a broader operator strategy to monetise non-terrestrial coverage where reliability is a core requirement (Real Wireless, 2025).
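The resilience argument can be quantified with a standard availability calculation. Assume the terrestrial and satellite paths fail independently. A service that can use either path is then unavailable only when both are down, so A_hybrid = 1 - (1 - A_t)(1 - A_s). The sketch below uses illustrative availabilities of 99% terrestrial and 95% satellite. These figures are hypothetical and are not taken from the cited sources. Real outages are often correlated (shared power, backhaul or weather), which reduces the benefit.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def hybrid_availability(a_terrestrial: float, a_satellite: float) -> float:
    """Availability when either path can carry the traffic.

    Assumes terrestrial and satellite outages are statistically independent,
    which real deployments only approximate.
    """
    return 1 - (1 - a_terrestrial) * (1 - a_satellite)


def downtime_minutes_per_year(availability: float) -> float:
    return (1 - availability) * MINUTES_PER_YEAR


a = hybrid_availability(0.99, 0.95)              # hypothetical inputs
print(round(a, 6))                               # 0.9995
print(round(downtime_minutes_per_year(0.99)))    # 5256
print(round(downtime_minutes_per_year(a)))       # 263
```

Under these assumptions, a satellite path of only modest availability cuts expected downtime from roughly 88 hours to roughly 4.4 hours a year. That difference is the kind of gap an operator could price into a service level agreement.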

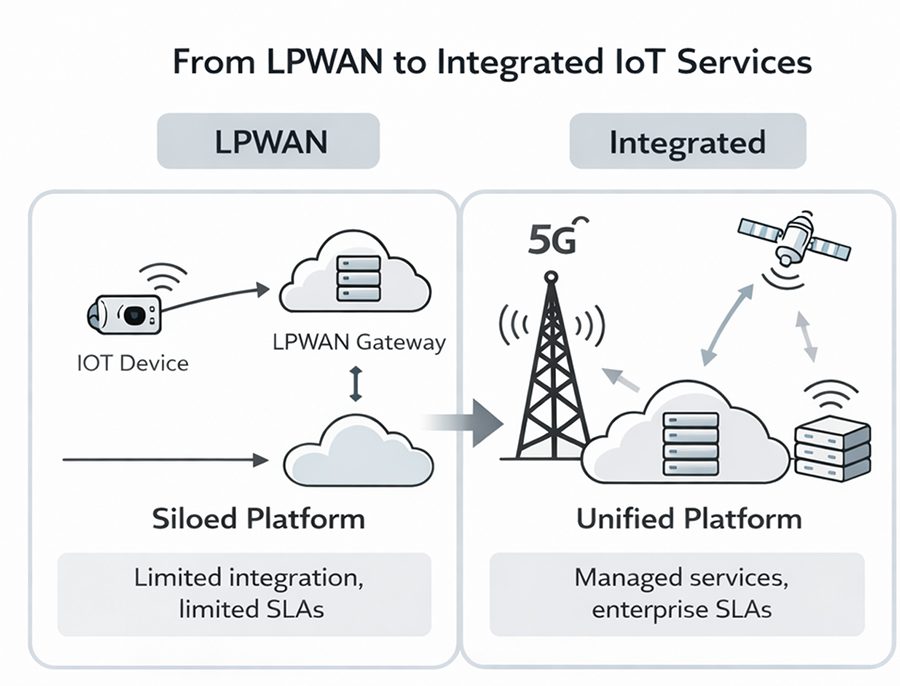

The commercial implications of this transition are further illustrated in Figure 2, which contrasts siloed LPWAN deployments with integrated mobile and satellite IoT services delivered through a unified network core.

Figure 2: Transition from siloed LPWAN deployments to integrated mobile and satellite IoT services delivered through a unified network core.

Satellite Connectivity and Enterprise IoT at Scale:

The UK Space Agency has identified hybrid terrestrial–satellite connectivity as an enabling layer for remote industrial operations, environmental monitoring and agricultural IoT systems. UK-based firms such as Open Cosmos are contributing to this model by integrating Low Earth Orbit satellite connectivity with existing mobile core networks. This approach allows telecom operators to deliver end-to-end managed connectivity for enterprise customers without deploying separate IoT network stacks, converting coverage limitations from a cost burden into chargeable, service-based revenue opportunities (Open Cosmos, 2024; UK Space Agency, 2025).

Conclusion

In 2026, IoT is reshaping the UK telecom sector primarily by enabling new revenue models rather than by driving incremental network expansion. Following the limited commercial success of LPWAN-based IoT strategies, satellite and Non-Terrestrial Network integration is increasingly deployed as an extension of mobile networks to provide coverage continuity and service guarantees for industrial and remote use cases. When integrated into 5G core architectures, satellite connectivity enables telecom operators to monetise resilience and reliability as part of managed enterprise services rather than offering standalone connectivity. Taken together, these developments show that satellite and NTN integration has become a critical enabler of scalable, enterprise-led IoT business models in the UK (Ofcom, 2025; 3GPP, 2023).

…………………………………………………………………………………………………………………………………………………………………………

References:

Ofcom. (2025). Connected Nations UK report.

https://www.ofcom.org.uk

Real Wireless. (2025). Satellite to mobile connectivity and the UK market.

https://real-wireless.com

UK Space Agency. (2025). Connectivity and space infrastructure briefing.

https://www.gov.uk/government/organisations/uk-space-agency

Open Cosmos. (2024). Satellite solutions for IoT and Earth observation.

https://open-cosmos.com

3GPP. (2023). Non-Terrestrial Networks (NTN) support in 5G systems.

https://www.3gpp.org/news-events/ntn


Enterprise IoT and the Transformation of UK Telecom Business Models – Part 1

By Afnan Khan (ML Engineer) and Raabia Riaz (Data Scientist)

Introduction:

This is the first of two articles on the impact of the Internet of Things (IoT) on the UK telecom industry. The second is:

From LPWAN to Hybrid Networks: Satellite and NTN as Enablers of Enterprise IoT – Part 2

Executive Summary:

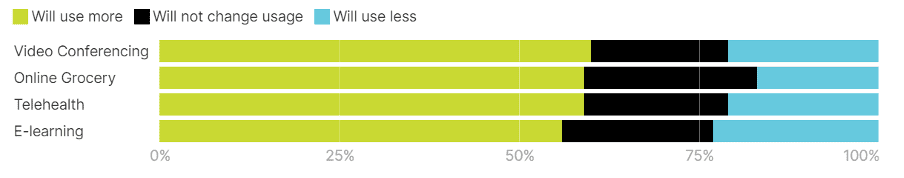

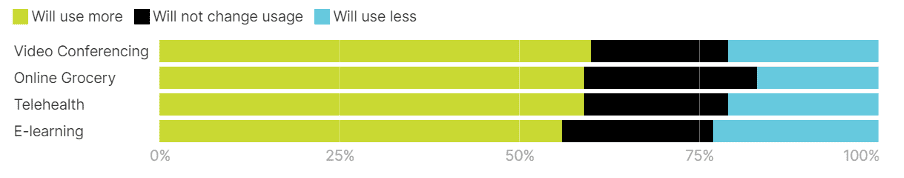

In 2026, the Internet of Things (IoT) is fundamentally changing the UK telecom sector by enabling new business models rather than simply driving incremental network upgrades.

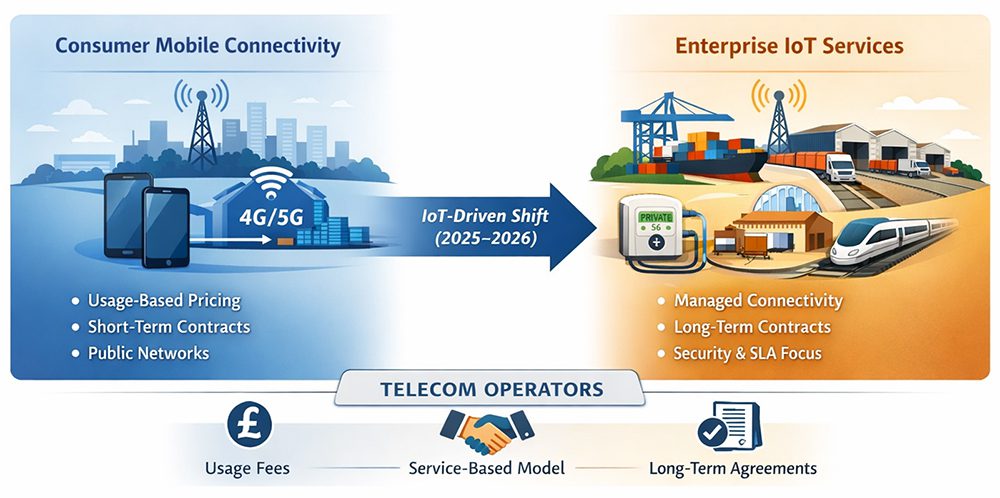

As consumer mobile markets show limited year-on-year growth between 2025 and 2026, telecom operators have prioritised IoT-led enterprise services as a source of new revenue (Ofcom, 2025; GSMA, 2024). Investment has shifted away from consumer-facing upgrades towards private networks, managed connectivity and long-term service contracts for industry and infrastructure. This change reflects a broader move from usage-based connectivity towards service-based delivery.

IoT and Enterprise Connectivity through Private 5G:

Figure 1: Transition from consumer mobile connectivity to enterprise IoT services in the UK telecom sector, highlighting the shift towards managed connectivity and long-term service contracts.

The growth of private 5G and managed enterprise networks represents one of the clearest IoT-driven business shifts. Industrial customers increasingly require predictable performance, low latency and enhanced security, which are not consistently available through public mobile networks. 5G Standalone architecture enables features such as network slicing and low-latency communication, allowing operators to sell connectivity as a managed service rather than a commodity product (Mobile UK, 2024).
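Network slicing is what lets an operator package these guarantees per customer. In 5G, a slice is identified by an S-NSSAI. This is an 8-bit Slice/Service Type (SST) plus an optional 24-bit Slice Differentiator (SD). 3GPP TS 23.501 standardizes SST values such as 1 (eMBB), 2 (URLLC), 3 (MIoT) and 4 (V2X). The sketch below packs an S-NSSAI value as the SST octet followed by the SD octets. The SD values and their mapping to port use cases are hypothetical. A full NAS information element also carries length and roaming-related fields, which are omitted here.

```python
from dataclasses import dataclass
from typing import Optional

# Standardized Slice/Service Type values (subset of 3GPP TS 23.501).
SST_EMBB, SST_URLLC, SST_MIOT, SST_V2X = 1, 2, 3, 4


@dataclass(frozen=True)
class SNssai:
    sst: int                    # Slice/Service Type, 8 bits
    sd: Optional[int] = None    # Slice Differentiator, 24 bits, optional

    def __post_init__(self):
        if not 0 <= self.sst <= 0xFF:
            raise ValueError("SST must fit in 8 bits")
        if self.sd is not None and not 0 <= self.sd <= 0xFFFFFF:
            raise ValueError("SD must fit in 24 bits")

    def to_bytes(self) -> bytes:
        # SST octet, followed by the three SD octets when an SD is present.
        out = bytes([self.sst])
        if self.sd is not None:
            out += self.sd.to_bytes(3, "big")
        return out


# Hypothetical enterprise slices: the SD separates customers or applications
# that share the same standardized slice type.
port_automation = SNssai(SST_URLLC, sd=0x000101)
asset_tracking = SNssai(SST_MIOT, sd=0x000102)
print(port_automation.to_bytes().hex())  # 02000101
print(asset_tracking.to_bytes().hex())   # 03000102
```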

In the UK, this model is visible in projects such as the Port of Felixstowe private 5G trials supporting automated port operations and asset tracking (BT Group, 2023), the Liverpool City Region 5G programme focused on connected logistics (DCMS, 2022), the West Midlands 5G transport and connected vehicle projects (WM5G, 2023) and Network Rail 5G rail monitoring trials supporting safety and asset management (Network Rail, 2024). These deployments are typically delivered through long-term enterprise contracts.

Together, these projects illustrate how connectivity is increasingly sold as a managed operational capability embedded within enterprise workflows rather than priced through consumer-style data usage, as illustrated in Figure 1.

IoT and Long-Term Infrastructure Revenue:

IoT enables telecom operators to participate in long-term infrastructure-based revenue models. The UK national smart meter programme illustrates this shift. By the third quarter of 2025, more than 40 million smart and advanced meters had been installed across Great Britain, with around 70% operating in smart mode (Department for Energy Security and Net Zero, 2025).

These systems rely on continuous, secure connectivity over long lifecycles. The Data Communications Company network processes billions of encrypted messages each month, creating sustained demand for resilient connectivity (DCC, 2024). Ofcom has linked the growth of such systems to increased regulatory focus on network resilience where connectivity underpins critical national infrastructure, while the National Cyber Security Centre has highlighted security risks associated with large IoT deployments (Ofcom, 2025; NCSC, 2024).
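A quick calculation puts these figures in perspective. It uses the stated 40 million installed meters and the roughly 70% smart-mode share. It also takes 1 billion messages a month as a conservative reading of "billions" (an assumption, since the exact DCC volume is not given here) and averages that over a 30-day month.

```python
SECONDS_PER_30_DAY_MONTH = 30 * 24 * 60 * 60  # 2,592,000


def meters_in_smart_mode(installed: float, smart_share: float) -> float:
    return installed * smart_share


def average_messages_per_second(messages_per_month: float) -> float:
    # Flat average over a 30-day month; real traffic is burstier than this.
    return messages_per_month / SECONDS_PER_30_DAY_MONTH


print(round(meters_in_smart_mode(40e6, 0.70)))   # 28000000
print(round(average_messages_per_second(1e9)))   # 386
```

That is roughly 28 million meters in smart mode. Even at the lower bound, it is an average of several hundred encrypted messages per second around the clock, before accounting for daily peaks.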

For telecom operators, these deployments favour long-term service contracts and regulated infrastructure partnerships over short-term retail revenue models.

Conclusions:

In 2026, IoT is transforming the UK telecom sector primarily by reshaping how connectivity is monetised rather than by driving incremental network upgrades. As consumer mobile markets show limited growth, telecom operators have increasingly aligned investment with enterprise IoT demand through private 5G deployments and long-term infrastructure connectivity. These models prioritise predictable performance, security and service continuity over mass-market scale. Private 5G projects across ports, transport networks and logistics hubs demonstrate how IoT demand has accelerated the commercial adoption of 5G Standalone capabilities, allowing operators to sell connectivity as a managed operational service embedded within enterprise workflows (Mobile UK, 2024). At the same time, national smart infrastructure programmes such as smart metering illustrate how IoT supports long-duration connectivity contracts that favour regulated partnerships and resilient network design over short-term retail revenue (Department for Energy Security and Net Zero, 2025; DCC, 2024). Taken together, these developments indicate that IoT is no longer an adjunct to UK telecom networks. Instead, it has become a central driver of enterprise-led, service-based business models that align network investment with stable, long-term revenue streams and critical infrastructure requirements.

…………………………………………………………………………………………………………………………………………………………..

References:

BT Group. (2023). BT and Hutchison Ports trial private 5G at the Port of Felixstowe.

https://www.bt.com/about/news/2023/bt-hutchison-ports-5g-felixstowe

Data Communications Company. (2024). Annual report and accounts 2023–24.

https://www.smartdcc.co.uk/our-company/our-performance/annual-reports/

Department for Digital, Culture, Media and Sport. (2022). Liverpool City Region 5G Testbeds and Trials Programme.

https://www.gov.uk/government/publications/5g-testbeds-and-trials-programme

Department for Energy Security and Net Zero. (2025). Smart meter statistics in Great Britain Q3 2025.

https://www.gov.uk/government/collections/smart-meters-statistics

GSMA. (2024). The Mobile Economy Europe.

https://www.gsma.com/mobileeconomy/europe/

Mobile UK. (2024). Unleashing the power of 5G Standalone.

https://www.mobileuk.org

National Cyber Security Centre. (2024). Cyber security principles for connected places.

https://www.ncsc.gov.uk

Network Rail. (2024). 5G on the railway connectivity trials.

https://www.networkrail.co.uk

Ofcom. (2025). Connected Nations UK report.

https://www.ofcom.org.uk


Automating Fiber Testing in the Last Mile: An Experiment from the Field

By Said Yakhyoev with Sridhar Talari & Ajay Thakur

The December 23, 2025 IEEE ComSoc Tech Blog post on AI-driven data center buildouts[1] highlights the urgent need to scale optical fiber and related equipment. While much of the industry focus is on manufacturing capacity and high-density components inside data centers, a different bottleneck is emerging downstream: a sprawling last-mile network that demands testing, activation, and long-term maintenance. The AI-driven fiber demand coincided with historic federal broadband programs to bring fiber to the premises of millions of customers[2]. This not only adds near-term pressure on fiber supply chains but also creates a longer-term operational challenge: efficiently servicing hundreds of thousands of new fiber endpoints in the field.

As standard-setting bodies and vendors introduce optimized products and automation inside data centers, similar future-proofing is needed in the last-mile outside plant. This post presents an example of such innovation from a field perspective, based on hands-on experimentation with a robotic tool designed to automate fiber testing inside existing Fiber Distribution Hubs (FDHs).

In the central office, DSLAMs (which terminate copper lines) and Optical Line Terminals (OLTs) in Passive Optical Networks (PONs) aggregate subscribers and automate testing and provisioning. FDHs, by contrast, function as passive patch panels[3] that deliberately omit electronics to reduce cost. Between an OLT and the subscriber, the passive distribution network remains fixed. As a result, accessing individual ports at a local FDH, and anything downstream of it, remains a manual process. Once a network is active, DSLAMs and OLTs can electronically manage thousands of subscribers efficiently, but during construction this manual access is a bottleneck. There are likely tens of thousands of FDHs deployed nationwide.

Consider this problem from a technician’s perspective: suburban and urban Fiber to the Home (FTTH) networks are often deployed using a hub-and-spoke architecture centered around FDHs. These cabinets carry between 144 and 432 ports serving customers in a neighborhood, and each line must be tested bidirectionally[4]. In practice, this typically requires two technicians: one stationed at the FDH to move the test equipment between ports, and another at the customer location or terminal.

Testing becomes difficult during inclement weather. Counterintuitively, the technician stationed at the hub—often standing still for long periods—is more exposed than technicians moving between poles in vehicles. In addition to discomfort, there is a real economic penalty: either a skilled technician is tied up performing repetitive port switching, or an additional helper must be assigned. Above all, dependence on both favorable weather and helper availability makes testing schedules unpredictable and slows network completion.

To mitigate this bottleneck, we developed and tested Machine2 (M2)—a compact, gantry-style robotic tool that remotely connects an optical test probe inside an FDH, allowing a single technician to perform bidirectional testing independently.

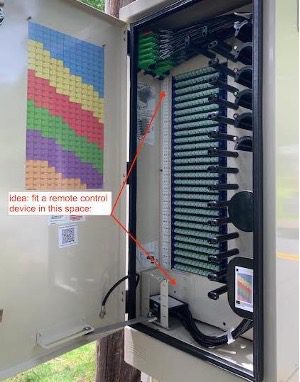

M2 was designed to retrofit into a commonly deployed 288-port Clearfield FDH used in rural and small-town networks. The available space in front of the patch panel—approximately 9.5 × 28 × 4 inches—constrained the design to a flat Cartesian mechanism capable of navigating between ports and inserting a standard SC connector. Despite the simple design, integrating M2 into an unmodified FDH in the field proved more challenging than expected. Several real-world constraints shaped the redesign.
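For a flat Cartesian mechanism, the first step in reaching a port is mapping its number to nominal gantry coordinates. The sketch below assumes a hypothetical regular grid of 12 columns by 24 rows with a fixed pitch. The actual Clearfield cassette geometry and M2's control code are not described in this post. The loosely constrained SC connectors make open-loop positioning unreliable. A map like this would therefore provide only the coarse move, before any vision-based correction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PanelLayout:
    """Hypothetical patch-panel geometry; real FDH cassettes differ."""
    columns: int               # ports per row
    rows: int
    pitch_x_mm: float          # horizontal spacing between port centres
    pitch_y_mm: float          # vertical spacing between rows
    origin_x_mm: float = 0.0   # gantry coordinate of port 1
    origin_y_mm: float = 0.0

    @property
    def port_count(self) -> int:
        return self.columns * self.rows

    def port_to_xy(self, port: int) -> tuple[float, float]:
        """Map a 1-based port number to nominal gantry (x, y) in millimetres."""
        if not 1 <= port <= self.port_count:
            raise ValueError(f"port must be in 1..{self.port_count}")
        row, col = divmod(port - 1, self.columns)
        return (self.origin_x_mm + col * self.pitch_x_mm,
                self.origin_y_mm + row * self.pitch_y_mm)


layout = PanelLayout(columns=12, rows=24, pitch_x_mm=12.5, pitch_y_mm=10.0)
print(layout.port_count)       # 288
print(layout.port_to_xy(1))    # (0.0, 0.0)
print(layout.port_to_xy(14))   # (12.5, 10.0)
```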

Images: (a) FDH cabinet, showing the space available to fit an automated switch; (b) M2 installed for dry-run testing.

Space and geometry constraints: The patch panel occupies roughly 80% of the available volume, leaving only a narrow strip for motors, electronics, and cable routing. This forced compromises in pulley placement, leadscrew length, and motor orientation, limiting motion and requiring multiple iterations. The same constraints also limited battery size, making energy efficiency a primary design concern.

Port aiming: The patch panel is composed of cassettes with loosely constrained SC connectors. Small variations in connector position led to unreliable insertions. After repeated attempts, small misalignments accumulated, rendering the system ineffective without corrective feedback.

Communications reliability: A specialized cellular modem intended for IoT applications performed poorly for command-and-control. Message latency ranged from 1.5 seconds to over 12 seconds – and in some cases minutes – making real-time control impractical. In rural areas of Connecticut and Vermont, cellular coverage was also inconsistent or absent. As a result, the effort was put on hold from 2022 to 2024.

When the project resumed, an unexpected solution emerged. A low-cost consumer mobile hotspot proved more reliable than the specialized modem when cellular signal was available, providing predictable latency and stable Wi-Fi connectivity inside the FDH—even with the all-metal cabinet door closed and locked.

To further reduce latency, we explored using the fiber under test itself as a communication channel, a kind of temporary orderwire. When a two-piece Optical Loss Test Set (OLTS) is connected across an intact fiber, the devices indicate link readiness via an LED. By tapping this status signal, M2 can infer when a technician at the far end disconnects the meter and automatically connects to the next port. While this cue-based mode is limited, it enables near-zero-latency coordination and rapid testing of multiple ports without spoken or typed commands, which proved effective for common field workflows.
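The cue-based mode amounts to watching for a falling edge on the OLTS link-ready LED. The post does not say how M2 reads the LED. This sketch therefore assumes it is sampled as a boolean at a fixed rate and debounced over a hypothetical three consecutive samples. Each debounced ON-to-OFF transition is treated as "far end disconnected, move to the next port".

```python
from typing import Iterable, Iterator


def port_advance_events(led_samples: Iterable[bool],
                        stable_samples: int = 3) -> Iterator[int]:
    """Yield the sample index at which to advance to the next port.

    A debounced 'link ready' LED going from ON to OFF is taken to mean that the
    far-end technician has disconnected the meter.
    """
    state = False   # debounced LED state
    run = 0         # consecutive samples disagreeing with `state`
    for i, sample in enumerate(led_samples):
        sample = bool(sample)
        if sample != state:
            run += 1
            if run >= stable_samples:
                state = sample
                run = 0
                if not state:       # debounced ON -> OFF transition
                    yield i
        else:
            run = 0                 # glitch shorter than the debounce window


samples = [0, 0, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0]
print(list(port_advance_events(samples)))  # [11, 18]
```

The single-sample dropout at index 6 is ignored. Only the two sustained ON-to-OFF transitions trigger a port advance.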

A second breakthrough came from addressing port aiming with vision. Standard computer-vision techniques such as edge detection were sufficient to micro-adjust the probe position at individual ports. To detect and avoid dust caps, M2 also uses a lightweight edge-ML[5] model trained to recognize caps under varying illumination. Using only 30 positive and 30 negative training images, the model correctly detected caps in over 80% of cases.
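The sketch below shows how simple edge processing can yield a positioning correction. It computes image gradients with NumPy (a stand-in for a Sobel or Canny step) and keeps the strongest edge pixels. It then returns the offset of their centroid from the image center. The threshold and the synthetic test image are hypothetical, and M2's actual vision pipeline is not published here. Converting the pixel offset into gantry steps would also need a camera calibration factor, which is not shown. The dust-cap classifier is not reproduced.

```python
import numpy as np


def edge_centroid_offset(gray: np.ndarray,
                         rel_threshold: float = 0.5) -> tuple[float, float]:
    """Return (dx, dy) in pixels from the image centre to the centroid of strong edges.

    Edge pixels are those whose gradient magnitude is at least
    rel_threshold times the maximum magnitude in the image.
    """
    gy, gx = np.gradient(gray.astype(float))   # derivatives along rows, columns
    magnitude = np.hypot(gx, gy)
    if magnitude.max() == 0:
        raise ValueError("no edges found")
    ys, xs = np.nonzero(magnitude >= rel_threshold * magnitude.max())
    cy = (gray.shape[0] - 1) / 2
    cx = (gray.shape[1] - 1) / 2
    return float(xs.mean() - cx), float(ys.mean() - cy)


img = np.zeros((21, 21))
img[12:17, 5:10] = 1.0               # bright synthetic "connector" off-centre
print(edge_centroid_offset(img))     # (-3.0, 4.0)
```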

In our experience, lightweight vision models proved sufficient for practical field tasks, suggesting that accessibility—not sophistication—may drive adoption of automation in outside-plant environments.

What building M2 revealed:

- Overcoming communications issues led to an intriguing idea: optical background communication, in which modulated laser light subtly changes the ambient illumination inside the FDH so that a camera can detect the change and extract instructions.

- M2 also proved useful beyond testing. For example, in a verify-as-you-splice workflow, M2 can lase a specific fiber as confirmation before splicing. Interactive port illumination and detection allow a single technician to troubleshoot complex situations.
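The optical background channel is only an idea at this stage. One simple way it could work is on-off keying. A laser toggles a faint change in illumination, and the camera records the mean brightness of each frame. Bits are then recovered by thresholding. The sketch assumes a known number of frames per bit, bit boundaries aligned to the first frame, and a noise-free example. A real link would also need synchronization and error detection.

```python
import numpy as np


def decode_ook(frame_means, frames_per_bit: int) -> list[int]:
    """Decode on-off keyed bits from per-frame mean brightness.

    frame_means: one mean-intensity value per camera frame.
    Each bit is assumed to span `frames_per_bit` frames, aligned to frame 0.
    """
    x = np.asarray(frame_means, dtype=float)
    threshold = (x.min() + x.max()) / 2   # midpoint between dark and lit levels
    n_bits = len(x) // frames_per_bit
    bits = []
    for b in range(n_bits):
        chunk = x[b * frames_per_bit:(b + 1) * frames_per_bit]
        bits.append(int(chunk.mean() > threshold))
    return bits


# Ambient level ~100, laser adds ~4 counts; 3 frames per bit (all hypothetical).
means = [104, 104, 103, 100, 101, 100, 104, 103, 104,
         104, 104, 104, 100, 100, 101]
print(decode_ook(means, frames_per_bit=3))  # [1, 0, 1, 1, 0]
```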

The comparison below is illustrative and reflects observed workflows rather than controlled benchmarking.

Illustrative comparison of testing workflows in our experience

| Criterion | Human helper (remote) | M2 |
|---|---|---|
| Connect next port | 1–1.5 s | 2.5–4 s |
| Connect random / distant port | 8–24 s | ~11–30 s |
| Ease of deployment | Requires flat ground, fair weather, ground-level FDH | ~15 min setup; requires software familiarity |
| Functionality | Highly adaptable | Limited to 2–3 functions |
| Economics | Inefficient for small networks | Well-suited for small and medium networks |
| Independence factor | Low; requires two people | High; largely weather-independent |
| Best use | Variable builds, high adaptability | Repetitive builds, independent workflows |
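The per-port figures in the table can be turned into a rough estimate of switching overhead for one full pass through the 288-port FDH that M2 was designed for. The estimate counts only the time to connect each port and assumes one reconnection per port. It excludes measurement, travel and setup time. It is therefore a partial estimate, not a benchmark.

```python
def sequential_switching_time_s(ports: int,
                                per_port_s: tuple[float, float]) -> tuple[float, float]:
    """Min/max cumulative port-switching time for a sequential pass, in seconds."""
    lo, hi = per_port_s
    return ports * lo, ports * hi


PORTS = 288                                   # FDH size M2 was built for
human = sequential_switching_time_s(PORTS, (1.0, 1.5))
m2 = sequential_switching_time_s(PORTS, (2.5, 4.0))
print([t / 60 for t in human])                # [4.8, 7.2] minutes
print([t / 60 for t in m2])                   # [12.0, 19.2] minutes
```

On these assumptions, M2's slower switching adds something on the order of ten minutes to a sequential pass. That is small compared with the cost of assigning a second person, which fits the table's emphasis on independence rather than raw speed.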

Early insights for OSP vendors and standards

Building M2 revealed two broader lessons relevant to operators and vendors. First, there are now practical opportunities for automation to enter outside-plant workflows following developments in the power industry and datacenters[6]. Second, infrastructure design choices can facilitate this transition.

More spacious or reconfigurable FDH cabinets would simplify retrofitting active devices. Standardized attachment points on cabinets, terminals and pluggable components would allow mechanized or automated fiber management, reducing the risk of damage in dense installations.

Fiducial marks are among the lowest-cost adaptations. QR marks conveying dimensions and part architecture would help machines easily determine part orientation and position. Although such marks are already common in industry, it may be time to adopt them more broadly in telecom infrastructure maintenance.
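A fiducial mark only helps if its payload is machine-readable. Decoding the QR image itself could use an existing library such as OpenCV's `QRCodeDetector`. The sketch below covers the next step: parsing a hypothetical `TYPE;key=value;...` payload that encodes part type, revision and port-grid dimensions. No such payload convention appears in the cited sources, so the format and field names are assumptions.

```python
def _coerce(value: str):
    """Return value as int or float when numeric, otherwise unchanged."""
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value


def parse_fiducial(payload: str) -> dict:
    """Parse a hypothetical 'TYPE;key=value;...' payload read from a QR mark."""
    part_type, *fields = payload.split(";")
    info = {"type": part_type}
    for item in fields:
        key, sep, value = item.partition("=")
        if not sep:
            raise ValueError(f"malformed field: {item!r}")
        info[key] = _coerce(value)
    return info


mark = parse_fiducial("FDH;rev=B;cols=12;rows=24;pitch_x_mm=12.5;pitch_y_mm=10")
print(mark["cols"] * mark["rows"])   # 288
```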

Aerial terminals may benefit the most from machine-friendly design. Standardized port spacing and swing-out or hinged caps would significantly simplify autonomous or remotely assisted connections. Such cooperative interfaces could enable standoff connections without requiring a technician to climb a pole, improving safety and reducing access costs. Retrofitting aerial infrastructure to make it robot-friendly has been recommended[7] by the power industry, and the same is needed for broadband infrastructure.

Conclusion

A growing gap is emerging between rapidly evolving data-center infrastructure and the more traditional telecom networks downstream. As fiber density increases, testing, activation, and maintenance of last-mile networks are likely to become bottlenecks. One way ISPs and vendors can future-proof outside-plant infrastructure is by proactively incorporating automation- and robot-friendly design features. M2 is one practical example that helps inform how such transitions might begin.

Short video clip from our early field trial in Massachusetts:

https://youtube.com/shorts/MiDoQd_S6Kw

References:

[1] IEEE ComSoc Technology Blog, "How will fiber and equipment vendors meet the increased demand for fiber optics in 2026 due to AI data center buildouts?," Dec. 23, 2025.

[2] U.S. Dept. of Commerce Office of Inspector General, "NTIA Broadband Programs: Semiannual Status Report," Washington, DC, USA, Rep. no. OIG-25-031-I, Sept. 24, 2025.

[3] For an overview of FTTH architecture, see Fiber Optic Association (FOA), "FTTH Network Design Considerations," and Fiber Optic Association (FOA), "FTTH and PON Applications."

[4] Corning Optical Communications, "Corning Recommended Fiber Optic Test Guidelines," Hickory, NC, USA, Application Engineering Note LAN-1561-AEN, Feb. 2020.

[5] See the easy-to-use edge-computing tools available from Edge Impulse.

[6] See state-of-the-art indoor optical switches such as ROME from NTT-AT and G5 from Telescent.

[7] Andrew Phillips, "Autonomous overhead transmission line inspection robot (TI) development and demonstration," IEEE PES General Meeting, 2014.

About the Author:

Said Yakhyoev is a fiber optic technician with LightStep LLC in Colorado and a developer of the experimental Machine2 (M2) platform for automating fiber testing in outside-plant networks.

The author acknowledges the use of AI-assisted tools for language refinement and formatting.

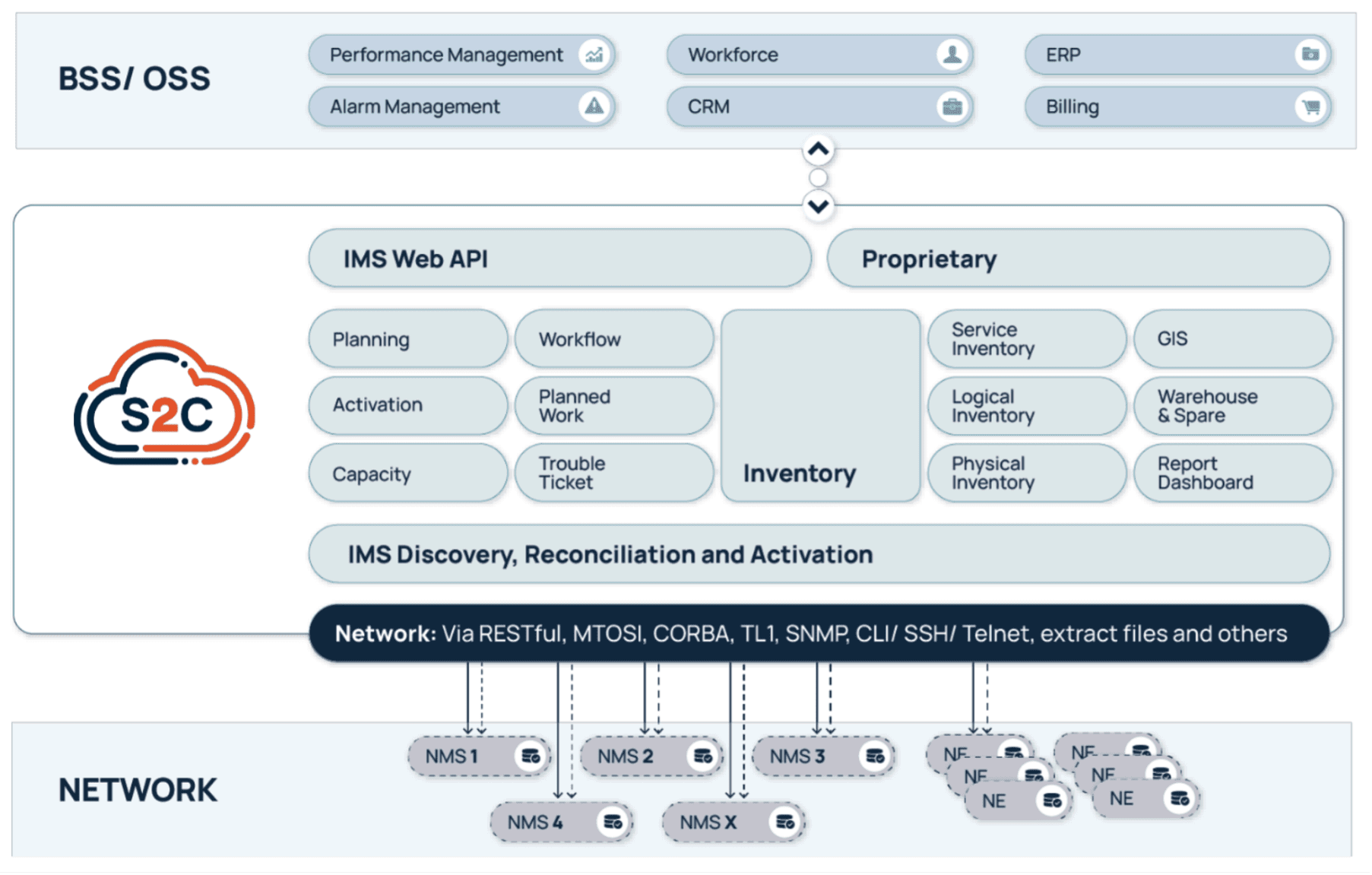

VC4 Advances OSS Transformation with an Efficient and Reliable AI enabled Network Inventory System

By Juhi Rani assisted by IEEE Techblog editors Ajay Lotan Thakur and Sridhar Talari Rajagopal

Introduction:

This year, 2025, VC4 (an Operational Support System (OSS) software provider headquartered in the Netherlands) has brought sharp industry focus to a challenge that many in telecom experience: operators and carriers still struggle with broken, unreliable, and disconnected inventory systems. While many companies are demoing AI, intent-based orchestration, and autonomous networks, VC4’s newly branded offering, Service2Create (or S2C, as it’s known to some), is refreshingly grounded. As the industry has learnt very quickly, feeding bad data into AI is a “no-no”: no orchestration or autonomous network will work accurately if the OSS is built on flawed data. The age-old saying “Garbage in, garbage out” comes to mind.

VC4’s platform, Service2Create (S2C), is a next-generation OSS inventory system that supports the evolving needs of telecom operators looking to embrace AI, automate workflows, and run leaner, smarter operations. It builds on more than two decades of experience with inventory management solutions (IMS). By focusing on inventory accuracy and network transparency, S2C gives operators a foundation they can trust.

Inventory: The Most Underestimated Barrier to Transformation

A TM Forum post observes that operators across the world are making huge investments in digital transformation, but many are slowed by a problem closer to the ground: not knowing exactly what is deployed in the network, where it is, and how it is interconnected.

VC4 calls this the “silent blocker” to OSS evolution.

Poor, misaligned inventory undermines everything. It breaks service activations, triggers unnecessary truck rolls, causes billing mismatches, and frustrates assurance teams. Field engineers often discover real-world conditions that don’t match what’s in the system, while planners and support teams struggle to keep up. And the problem doesn’t stop with network data.

In many cases, customer records were also out of date or incomplete, and unknown inventory could add to the problem. Details such as line types, distance from the central office, or whether loading coils were present often didn’t match reality. For years, this was one of the biggest issues for operators: customer databases and network systems rarely aligned, and updates often took weeks or months. Engineers had to double-check every record before activating a service, which slowed delivery and increased errors. It was a widespread problem across the industry, and one that many operators have been trying to fix ever since.

Over time, some operators tried to close this gap with data audits and manual reconciliation projects, but those fixes never lasted long. Networks change every day, and by the time a cleanup was finished, the data was already out of sync again.

Modern inventory systems take a different approach by keeping network and customer data connected in real time. They:

- Continuously sync with live network data so records stay accurate.

- Automatically validate what’s in the field against what’s stored in the system.

- Update both customer and network records when new services are provisioned.

In short, this is network auto-discovery and reconciliation, something Service2Create does exceptionally well. The same process also handles unknown records, duplicate records, and records with naming inconsistencies or variations.

It is achieved through continuous network discovery that maps physical and logical assets, correlates them against live service models, and runs automated reconciliation to detect discrepancies such as unknown elements, duplicates, or naming mismatches. Operators can review and validate these findings, ensuring that the inventory always reflects the true, real-time network state. A more detailed explanation can be found in the VC4 Auto Discovery & Reconciliation guide which can be downloaded for free.
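The reconciliation step can be illustrated with a deliberately simple comparison of element names between what discovery reports and what the inventory holds. This is not VC4's algorithm, which the article does not detail. It assumes hypothetical element names and a normalization rule that ignores case and separators. Production reconciliation would also match on attributes such as serial numbers, interfaces and topology.

```python
import re


def normalize(name: str) -> str:
    """Canonical form for comparison: lower-case, separators and whitespace removed."""
    return re.sub(r"[\s\-_.]", "", name).lower()


def reconcile(discovered: list[str], inventory: list[str]) -> dict:
    """Compare discovered element names against inventory records."""
    inv_by_key: dict[str, list[str]] = {}
    for rec in inventory:
        inv_by_key.setdefault(normalize(rec), []).append(rec)
    disc_by_key = {normalize(d): d for d in discovered}

    return {
        # seen on the network but absent from inventory
        "unknown": sorted(d for k, d in disc_by_key.items() if k not in inv_by_key),
        # held in inventory but not seen by discovery
        "missing": sorted(r for k, recs in inv_by_key.items()
                          if k not in disc_by_key for r in recs),
        # several inventory records for one canonical name
        "duplicates": sorted(k for k, recs in inv_by_key.items() if len(recs) > 1),
        # same element, spelled differently in inventory
        "naming_mismatches": sorted((disc_by_key[k], r)
                                    for k, recs in inv_by_key.items()
                                    if k in disc_by_key
                                    for r in recs if r != disc_by_key[k]),
    }


report = reconcile(
    discovered=["AMS-CORE-01", "ams-agg-07", "RTR_Utrecht_3"],
    inventory=["ams-core-01", "AMS-AGG-07", "AMS_AGG_07", "rtr-rotterdam-2"],
)
for category, items in report.items():
    print(category, items)
```

Operators would then review each category, as the text describes, rather than applying fixes blindly.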

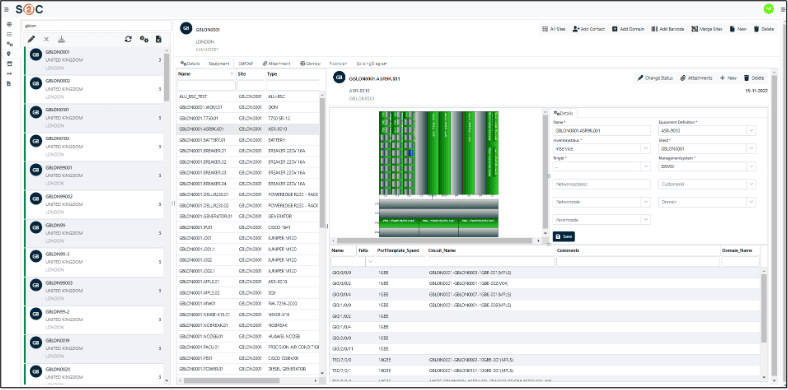

Service2Create: Unified, Reconciled, and AI-Ready

Service2Create is designed to reflect the actual, current state of the network across physical, logical, service and virtual layers. Whether operators are managing fiber rollout, mobile backhaul, IP/MPLS cores, or smart grids, S2C creates a common source of truth. It models infrastructure end-to-end, automates data reconciliation using discovery, and integrates with orchestration platforms and ticketing tools.

To make the difference clearer, the table below compares Service2Create with the older inventory systems still used by many operators. Traditional tools depend on manual updates and disconnected data sources, while Service2Create keeps everything synchronized and validated in real time.

Comparison between legacy inventory tools and Service2Create (S2C)

| Feature | Legacy OSS Tools | VC4 Service2Create (S2C) |
|---|---|---|
| Data reconciliation | Manual or periodic | Automated and continuous |
| Inventory accuracy | Often incomplete or outdated | Real-time and verified |
| Integration effort | Heavy customization needed | Standard API-based integration |
| Update cycle | Days or weeks | Completed in hours |
| AI readiness | Low; needs data cleanup | High, with consistent and normalized data |

What makes it AI-ready isn’t just compatibility with new tools; it’s data integrity. VC4 understands that AI and automation only perform well when they’re fed accurate, reliable, and real-time data. Without that, AI is flying blind.

Built-in Geographic Information System (GIS) capabilities help visualize the network in geographic context, while no/low-code workflows and APIs support rapid onboarding and customization. More than software, S2C behaves like a data discipline framework for telecom operations.

Service2Create gives operators a current, trusted view of their network, improving accuracy and reducing the time it takes to keep systems aligned.

AI is Reshaping OSS… But only if the Data is Right

AI is driving the next wave of OSS transformation, from automated fault resolution and dynamic provisioning to predictive maintenance and AI-guided assurance. But it’s increasingly clear that AI doesn’t replace the need for accuracy; it demands it.

In 2025, one common thread across operators and developers was this: telcos want AI to reduce costs, shorten response times, and simplify networks. According to a GSMA analysis, many operators continue to struggle as their AI systems depend on fragmented and incomplete datasets, which reduces overall model accuracy.

VC4’s message is cutting through: AI is only as useful as the data that feeds it. Service2Create ensures the inventory is trustworthy, reconciled daily with the live network, and structured in a way AI tools can consume. It’s the difference between automating chaos and enabling meaningful, autonomous decisions.

Service2Create has been adopted by operators across Europe and Asia. In national fiber networks, it’s used to coordinate thousands of kilometers of rollout and maintenance. In mixed fixed-mobile environments, it synchronizes legacy copper, modern fiber, and 5G transport into one unified model.

Designed for Operational Reality

VC4 didn’t build Service2Create for greenfield labs or ideal conditions. The platform is designed for real-world operations: brownfield networks, legacy system integrations, and hybrid IT environments. Its microservices-based architecture and API-first design make it modular and scalable, while its no/low-code capabilities allow operators to adapt it without long customization cycles. See the diagram below.

S2C is deployable in the cloud or on-premises and integrates smoothly with Operational Support System / Business Support System (OSS/BSS) ecosystems including assurance, CRM, and orchestration. The result? Operators don’t have to rip and replace their stack – they can evolve it, anchored on a more reliable inventory core.

What Industry Analysts are Saying

In 2025, telco and IT industry experts are also emphasizing that AI’s failure to deliver consistent ROI in telecom is often due to unreliable base systems. One IDC analyst summed it up: “AI isn’t failing because the models are bad, it’s failing because operators still don’t know what’s in their own networks.”

A senior architect from a Tier 1 European CSP added, “We paused a closed-loop automation rollout because our service model was based on inventory we couldn’t trust. VC4 was the first vendor we saw this year that has addressed this directly and built a product around solving it.”

This year the takeaway is clear: clean inventory isn’t a nice-to-have. It’s step one.

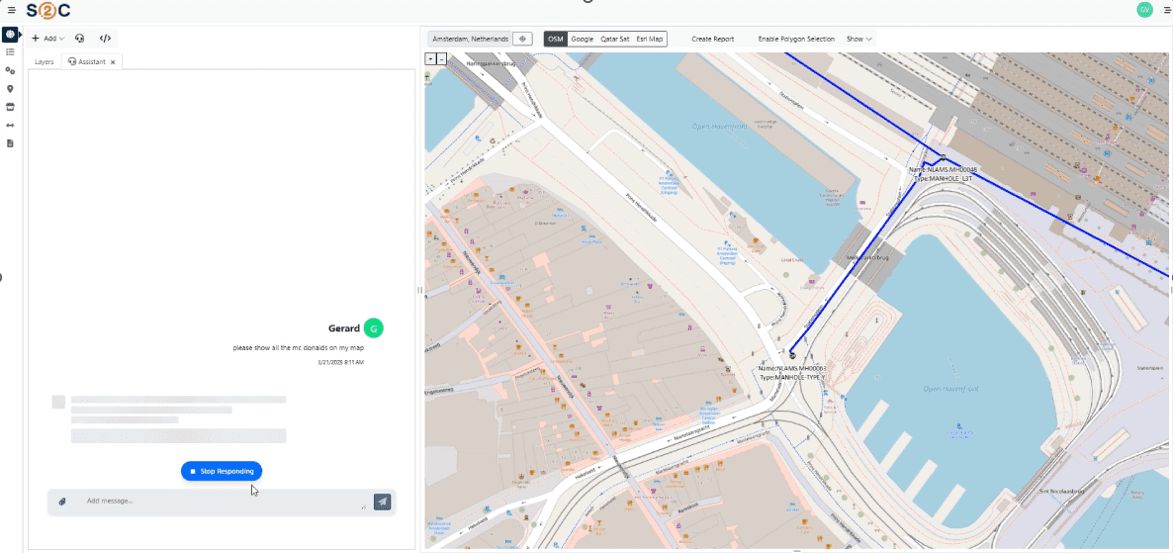

Looking Ahead: AI-Driven Operations Powered by Trusted Inventory

VC4 is continuing to enhance Service2Create with capabilities that support AI-led operations. S2C now includes AI-powered natural language interfaces delivered through Model Context Protocol (MCP) servers, giving users a new and simpler way to access their data. Simply ask for what you need in plain language and receive instant, accurate results from your systems of record.
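MCP is built on JSON-RPC 2.0, and an MCP client invokes a server-side tool with a `tools/call` request. The sketch below shows, under stated assumptions, the kind of message an AI client might send after translating a question such as “Show me network capacity issues in Amsterdam”. The tool name `query_inventory` and its argument keys are hypothetical, since the article does not document S2C’s actual MCP tools.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments: what a model might produce after parsing
# "Show me network capacity issues in Amsterdam".
request = build_tool_call(1, "query_inventory", {"city": "Amsterdam", "topic": "capacity issues"})
print(request)
```

The language model’s job is to pick the tool and fill in the arguments; the MCP server then runs the precise query against the system of record and returns structured results.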

The S2C platform now offers multiple synchronized access methods:

- Natural Language Interface
  - Ask questions in plain language: “Show me network capacity issues in Amsterdam”
  - AI translates requests into precise system queries
  - No training required – productive from day one

- Direct API Access via MCP
  - Programmatic access using Language Integrated Query (LINQ) expressions
  - Perfect for integrations and automated workflows
  - Industry-standard authentication through an identity provider (IdP)

- S2C Visual Platform
  - Full-featured GUI for power users
  - Parameterized deeplinks for instant component access
  - Low/no-code configuration capabilities

- Hybrid Workflows
  - Start with AI chat, graduate to power tools
  - AI generates deeplinks to relevant S2C dashboards
  - Export to Excel/CSV for offline analysis
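Parameterized deeplinks are ordinary URLs whose query string selects the component or view to open. The sketch below builds one with Python’s standard `urllib.parse.urlencode`; the base URL and the parameter names `city` and `layer` are hypothetical placeholders, not S2C’s real link format.

```python
from urllib.parse import urlencode

# Hypothetical base URL; S2C's real deeplink format is not documented in the article.
BASE_URL = "https://s2c.example.com/dashboard/capacity"


def build_deeplink(base_url: str, **params: str) -> str:
    """Append URL-encoded query parameters; sorting keys keeps links stable and comparable."""
    query = urlencode(sorted(params.items()))
    return f"{base_url}?{query}"


link = build_deeplink(BASE_URL, city="Amsterdam", layer="transport")
print(link)  # https://s2c.example.com/dashboard/capacity?city=Amsterdam&layer=transport
```

Encoding the parameters (rather than concatenating raw strings) matters when an AI assistant generates the link, because values such as site names may contain spaces or reserved characters.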

What It All Comes Down To

Digital transformation sounds exciting on a conference stage, but in the trenches of telecom operations, it starts with simpler questions. Do you know what’s on your network? Can you trust the data? Can your systems work together?

That’s what Service2Create is built for. It helps operators take control of their infrastructure, giving them the confidence to automate when ready and the clarity to troubleshoot when needed.

VC4’s approach isn’t flashy. It’s focused. And that’s what makes it so effective, a direction supported by coverage from Subseacables.net, which reported on VC4’s partnership with AFR-IX to automate and modernize network operations across the Mediterranean.

………………………………………………………………………………………………………………….

About the Author:

Juhi Rani is an SEO specialist at VC4 B.V. in the Netherlands. She has successfully directed and supervised teams, evaluated employee skills and knowledge, identified areas of improvement, and provided constructive feedback to increase productivity and maintain quality standards.

Juhi earned a B. Tech degree in Electronics and Communications Engineering from RTU in Jaipur, India in 2015.

……………………………………………………………………………………………………………………………………………………………………..

Ajay Lotan Thakur and Sridhar Talari Rajagopal are esteemed members of the IEEE Techblog Editorial Team.

Emerging Cybersecurity Risks in Modern Manufacturing Factory Networks

By Omkar Ashok Bhalekar with Ajay Lotan Thakur

Introduction

With the advent of Industry 5.0 and ongoing advancements in Industry 4.0, the manufacturing landscape faces a revolutionary challenge: it must not only use environmental resources sustainably but also continually adapt its industrial security posture to tackle modern threats. Technologies such as the Internet of Things (IoT) in manufacturing, private 4G/5G, cloud-hosted applications, edge computing, and real-time streaming telemetry are fueling smart factories and making them more productive.

Although this evolution facilitates industrial automation, innovation, and high productivity, it also greatly enlarges the footprint exposed to cyberattacks. Industrial cybersecurity is essential for mission-critical manufacturing operations; it is a cornerstone for safeguarding factories and avoiding major downtime.

With the rapid convergence of IT and OT (Operational Technology), a hack or data breach can cause operational disruptions such as line-down situations, halted production lines, theft or loss of critical data, and severe financial damage to an organization.

Industrial Networking

Why does modern manufacturing demand cybersecurity? Below are a few reasons why cybersecurity is essential in modern manufacturing:

- Convergence of IT and OT: Industrial control systems (ICS) that used to be isolated or air-gapped are now interconnected and hence vulnerable to breaches.

- Enlarged Attack Surface: Every device or component in the factory which is on the network is susceptible to threats and attacks.

- Financial Loss: Cyberattacks such as WannaCry, or targeted attacks that crash systems into the Blue Screen of Death (BSOD), can cost millions of dollars and result in a complete shutdown of operations.

- Disruptions in Logistics Networks: Supply chains can be severely disrupted by cyberattacks, causing shortages of essential parts.

- Legislative Compliance: Guidance and standards from bodies such as CISA and NIST, along with the ISA/IEC 62443 series, are proving crucial and are increasingly mandated as frameworks to safeguard industries.

It is important to understand and adapt to changing trends in the cybersecurity domain, especially when so much is at stake. Historically, humanity has learned from its past mistakes: progress moves at a fast pace, but external threats can halt that progress if they are not taken into account.

This attitude of adaptability needs to become an integral part of the mindset and practices in cybersecurity and should not be limited to industrial security; such practices can scale across other technological fields. Moreover, securing industries does not just mean physical security; it also opens avenues for cybersecurity experts to learn and innovate in applications and software such as Manufacturing Execution Systems (MES), which are crucial for critical operations.

Greatest Cyberattacks in Manufacturing of All Time:

Understanding the categories and scale of attacks that have historically hampered the manufacturing domain is pivotal. This section highlights some real-world cybersecurity incidents.

Ransomware (Colonial Pipeline, y.2021):

This attack caused severe fuel and gasoline shortages along the U.S. East Coast after attackers gained access through compromised employee credentials.

Cause: The root cause was compromised VPN account credentials. A VPN account that had not been used for a long time and lacked multi-factor authentication (MFA) was breached; its credentials had appeared in a password leak on the dark web. The ransomware group DarkSide exploited this entry point to gain access to Colonial Pipeline’s IT systems. The attackers did not initially penetrate operational technology systems; however, the interdependence of IT and OT systems still caused operational impacts. Once inside, the attackers escalated privileges and exfiltrated 100 GB of data within two hours, then deployed ransomware to encrypt critical business systems. Colonial Pipeline proactively shut down the pipeline, fearing lateral movement into OT networks.

Effect: The pipeline, which supplies nearly 45% of the fuel consumed on the U.S. East Coast, was shut down for six days. Mass fuel shortages occurred across several U.S. states, leading to public panic and fuel hoarding. Colonial Pipeline paid a $4.4 million ransom, of which approximately $2.3 million was later recovered by the FBI. The attack led to a Presidential Executive Order on Cybersecurity and heightened regulation of critical infrastructure cybersecurity. It exposed how vulnerabilities in business IT networks can lead to real-world impacts on critical infrastructure, even when OT is not directly targeted.

Industrial Sabotage (Stuxnet, y.2009):

This unprecedented software worm infiltrated a critical nuclear facility and sabotaged its uranium-enrichment centrifuges, physically destroying a significant share of them.

Cause: Nation-state-developed malware that specifically targeted Industrial Control Systems (ICS) with an unprecedented level of sophistication. Stuxnet was reportedly developed jointly by the U.S. (NSA) and Israel (Unit 8200) under an operation code-named “Olympic Games”. Its target was Iran’s uranium enrichment program at the Natanz nuclear facility. The worm was introduced via USB drives, bridging the facility’s air-gapped network, and exploited four Windows zero-day vulnerabilities, an unprecedented number at the time. It specifically targeted Siemens Step7 software running on Windows, which controls Siemens S7-300 PLCs, and identified the systems controlling the centrifuges used for uranium enrichment. It then reprogrammed the PLCs to intermittently change the centrifuges’ rotational speed, causing mechanical stress and failure while reporting normal operation to operators. It used rootkits at both the Windows and PLC levels to remain stealthy.

Effect: Destroyed approximately 1,000 IR-1 centrifuges (roughly 10% of those installed at Natanz) and set back Iran’s nuclear program by an estimated one to two years. It introduced a new era of cyberwarfare in which malware caused physical destruction, and it raised global awareness of vulnerabilities in industrial control systems (ICS). Iran responded by accelerating its cyber capabilities, forming the Iranian Cyber Army. ICS/SCADA security became a top global priority, especially in the energy and defense sectors.

Update Tampering (SolarWinds Orion Supply-Chain Attack, y.2020):

Attackers injected malicious code into legitimate software updates, which were installed by as many as 18,000 customers.

Cause: A compromise of the SolarWinds build environment led to a supply chain attack. Attackers from the group known as Cozy Bear (APT29), linked to Russia’s foreign intelligence service, gained access to SolarWinds’ development pipeline and inserted malicious code into Orion Platform updates released between March and June 2020. Customers who downloaded these updates installed malware known as SUNBURST, a backdoor hidden in one of Orion’s digitally signed DLLs. Over 18,000 customers were potentially affected, including about 100 high-value targets. After the initial exploit, the attackers used manual lateral movement, privilege escalation, and custom command-and-control (C2) infrastructure to exfiltrate data.

Effect: The breach reached major U.S. government agencies, including DHS, DoE, DoJ, Treasury, and the State Department. It also affected major corporations such as Cisco, Intel, Microsoft, and FireEye; FireEye discovered the breach after noticing unusual two-factor authentication activity. The attack exposed critical supply chain vulnerabilities and demonstrated how a single point of compromise could enable nationwide espionage. It prompted Executive Order 14028 on Improving the Nation’s Cybersecurity, Zero Trust mandates, and widespread adoption of Software Bill of Materials (SBOM) practices.

Spyware (Pegasus, y.2016-2021):

Cause: Zero-click and zero-day exploits leveraged by NSO Group’s Pegasus spyware, which is sold to governments. Pegasus can infect phones without any user interaction, a technique known as a zero-click exploit. It exploits vulnerabilities in WhatsApp, iMessage, and browsers such as Safari on iOS, as well as zero-day vulnerabilities on Android devices. It has been delivered via SMS, WhatsApp messages, or silent push notifications. Once installed, it provides complete surveillance capability, including access to the microphone, camera, GPS, calls, photos, texts, and encrypted apps. The zero-click iOS exploit ForcedEntry allowed complete compromise of an iPhone and bypassed Apple’s BlastDoor sandbox. The malware is extremely stealthy, often removing itself after execution, and has also bypassed Android’s hardened security modules.

Effect: Pegasus has been used by multiple governments to surveil activists, journalists, lawyers, opposition leaders, and even heads of state. The 2021 Pegasus Project, led by Amnesty International and Forbidden Stories, revealed a leaked list of 50,000 phone numbers of potential targets. High-profile individuals, including international journalists and their associates, the French president, and Indian opposition figures, were allegedly targeted, which triggered legal and political fallout. NSO Group was blacklisted by the U.S. Department of Commerce, and Apple filed a lawsuit against NSO Group in 2021. The case renewed debates over the ethics and regulation of commercial spyware.

Other common types of attacks:

Phishing and Smishing: These attacks send links by email (phishing) or SMS (smishing) that appear legitimate but are crafted by bad actors for financial gain or identity theft.

Social Engineering: Shoulder surfing may sound trivial, yet it is a time-tested technique that has outsmarted even expert security personnel and led to data and credential leaks. Rather than relying on technical vulnerabilities, social engineering targets human psychology to gain access to or break into systems. The attacker manipulates people into revealing confidential information using techniques such as reconnaissance, engagement, baiting, or quid pro quo offers.

Security Runbook for Manufacturing Industries:

To continually strengthen industrial security postures and preserve critical manufacturing operations, the following 11 security procedures and tactics, based on established frameworks, aim to provide comprehensive, 360-degree protection:

A. Incident Handling Tactics (First Line of Defense): Teams should continuously improve incident response with the help of documentation and response apps. Coordination between teams, clear communication, root cause analysis, and reference documentation are the keys to successful incident response.

B. Zero Trust Principles (Never Trust, Always Verify): Use strong device management tools to ensure all end devices are compliant, for example through trusted certificates, network access control (NAC), and enforcement policies. Perform regular and random checks on users’ data-access patterns, and assign role-based policies that limit access to critical resources.

C. Secure Communication and Data Protection: Use endpoint or cloud-based security sessions with IPsec VPN tunnels so that all traffic can be controlled and monitored. All user data must be encrypted using data protection and recovery software such as BitLocker.

D. Secure IT Infrastructure: Harden network equipment such as switches, routers, and wireless access points (WAPs) with 802.1X (dot1x), port security, and EAP-TLS or PEAP. Implement edge-based monitoring solutions to detect anomalies, and deploy redundant network infrastructure to minimize mean time to repair (MTTR).

E. Physical Security: Locks, badge readers, or biometric systems are a must for all critical rooms and network cabinets. A security operations center (SOC) can help monitor for internal theft or sabotage.

F. North-South and East-West Traffic Isolation: Safety traffic and external traffic can be rate-limited using firewalls or edge compute devices. Complete (100%) isolation is an ideal that is rarely achieved in practice, so measures must be taken to continuously monitor for any security gaps (punch-holes).

G. Industrial Hardware for Industrial Applications: Use appropriate industrial-grade, IP67- or IP68-rated network equipment to avoid breakdowns due to environmental factors. Localized industrial firewalls can provide the desired granularity at the edge, thereby reducing the need to follow the Purdue model strictly.

H. Next-Generation Firewalls with Application-Level Visibility: Incorporate stateful, application-aware firewalls, which provide more control over zones and policies and can differentiate applications by their behavioral characteristics. Deploy tools that can perform deep packet inspection and serve as intrusion detection and prevention (IDS/IPS) platforms.

I. Threat and Traffic Analyzers: Tools such as network traffic analyzers can provide Layer 1 to Layer 7 security monitoring by detecting and responding to malicious traffic patterns. Self-healing networks use automation and monitoring tools to detect traffic anomalies and remediate non-compliant network states.

J. Information Security and Software Management: Companies must maintain a repository of trusted certificates, software, and releases, and regularly push patches for critical bugs. Keep constant track of release notes and CVEs (Common Vulnerabilities and Exposures) for all vendor software.

K. Idiot-Proofing (How NOT to Get Hacked): Regular training that familiarizes employees with cyberattacks and terms such as cryptojacking or honeynets can help create awareness. Encourage employees and workers to voice their opinions and resolve their questions about security threats, and provide a platform for them to do so.
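Item B’s role-based, deny-by-default idea can be sketched in a few lines. This is a minimal illustration under stated assumptions: the roles, resources, and the `device_compliant` flag (standing in for certificate and NAC posture checks) are hypothetical, and a real deployment would obtain them from an identity provider and a central policy engine.

```python
# Hypothetical role-to-permission map; real deployments would source this from an
# identity provider and a central policy engine.
ROLE_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "operator": {("hmi", "read"), ("hmi", "write")},
    "engineer": {("hmi", "read"), ("plc", "read"), ("plc", "write")},
    "auditor": {("hmi", "read"), ("plc", "read")},
}


def is_allowed(role: str, resource: str, action: str, device_compliant: bool) -> bool:
    """Evaluate every request; anything not explicitly granted is denied."""
    if not device_compliant:
        # Endpoint failed posture checks (e.g. certificate or NAC): deny regardless of role.
        return False
    # Unknown roles get an empty permission set, so they are denied by default.
    return (resource, action) in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("engineer", "plc", "write", device_compliant=True))   # True
print(is_allowed("operator", "plc", "write", device_compliant=True))   # False
print(is_allowed("engineer", "plc", "write", device_compliant=False))  # False
```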
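Item F mentions rate limiting traffic; a token bucket is one common way to implement it, though the runbook does not prescribe a specific algorithm. The sketch below takes the current time as an argument to keep it deterministic; in practice you would pass `time.monotonic()`. The rate and capacity values in the usage are arbitrary assumptions.

```python
class TokenBucket:
    """Token-bucket rate limiter: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = 0.0

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill according to elapsed time, never exceeding the bucket capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # over the limit: drop or queue the packet/request


bucket = TokenBucket(rate=1.0, capacity=2.0)
print([bucket.allow(now=0.0) for _ in range(3)])  # [True, True, False]
print(bucket.allow(now=1.0))                      # True
```

The capacity bounds how large a burst is tolerated, while the rate sets the sustained throughput, which is why the two are tuned separately for safety traffic and external traffic.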
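Item I’s anomaly detection can take many forms. One simple, hedged example is a z-score test: flag traffic samples that deviate from a baseline by more than a chosen number of standard deviations. The baseline values and the threshold of 3 are illustrative assumptions; production traffic analyzers use far richer models.

```python
from statistics import mean, stdev


def find_anomalies(baseline: list[float], samples: list[float], threshold: float = 3.0) -> list[int]:
    """Return indices of samples whose z-score against the baseline exceeds the threshold."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two baseline points
    if sigma == 0:
        # Perfectly flat baseline: any deviation at all is anomalous.
        return [i for i, x in enumerate(samples) if x != mu]
    return [i for i, x in enumerate(samples) if abs(x - mu) / sigma > threshold]


baseline = [100, 102, 98, 101, 99, 100, 103, 97]  # e.g. packets per second on a PLC link
samples = [101, 250, 99, 90]
print(find_anomalies(baseline, samples))  # [1, 3]
```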
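For item J, one routine control is checking downloaded releases against the vendor-published SHA-256 checksum before deployment. The sketch below uses only the standard library; the function names are illustrative. Note the limit of this control: it detects tampering in transit or on a mirror, but not a compromised vendor build such as the SolarWinds incident, where the malicious update was legitimately signed.

```python
import hashlib
import hmac
import io
from typing import BinaryIO


def sha256_hex(stream: BinaryIO, chunk_size: int = 65536) -> str:
    """Hash a binary stream in chunks so large firmware images need not fit in memory."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def verify_release(stream: BinaryIO, published_sha256: str) -> bool:
    """Compare against the vendor-published SHA-256 (case- and whitespace-insensitive)."""
    return hmac.compare_digest(sha256_hex(stream), published_sha256.strip().lower())


# Usage: with a real file, pass open(path, "rb") instead of an in-memory stream.
sample = io.BytesIO(b"abc")
print(verify_release(sample, "BA7816BF8F01CFEA414140DE5DAE2223B00361A396177A9CB410FF61F20015AD"))  # True
```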

Current Industry Perspective and Software Response

In response to the escalating tide of cyberattacks in manufacturing, from the Triton malware striking industrial safety controls to LockerGoga shutting down production at Norsk Hydro, there has been a sea change in how the software industry supports operational resilience. Security companies are combining cutting-edge threat detection with ICS/SCADA systems, delivering purpose-built solutions such as zero-trust network access, behavior-based anomaly detection, and encrypted machine-to-machine communications. Companies such as Siemens and Claroty are leading the way by building security in by design rather than treating it as an afterthought. A prime example is Dragos, whose OT-specific threat intelligence and incident response solutions have become a focal point in the fight against nation-state attacks and ransomware operations targeting critical infrastructure.

Bridging the Divide between IT and OT: A Two-Way Street

With the intensification of OT and IT convergence, perimeter-based defense is no longer sufficient. Manufacturers are embracing emerging strategies such as Cybersecurity Mesh Architecture (CSMA) and applying IT-centric philosophies such as DevSecOps within the OT environment to foster secure-by-default deployment habits. The trend also draws attention to IEC 62443 conformity and to NIST-based risk assessment frameworks tailored to manufacturing. With legacy PLCs now networked and exposed to internet-borne threats, companies are embracing micro-segmentation, secure remote access, and real-time monitoring solutions that unify security across both environments. Schneider Electric, for example, is helping manufacturers securely link IT/OT systems with scalable cybersecurity programs.

Conclusion

In a nutshell, modern manufacturing, unlike in the past, is not just about fast input-output systems that can scale and be productive; it is an ecosystem in which cybersecurity and manufacturing operate in harmony. Just as the healthcare system is considered critical to human well-being, secure modern factories are considered essential to manufacturing. The many cyberattacks on critical infrastructure such as pipelines, nuclear plants, and power grids over the past 30 years not only demand the world’s attention but also call for regulatory standards that every manufacturing entity must follow.

As humanity sprints toward the next industrial revolution, it is imperative to make industrial cybersecurity a keystone of upcoming critical manufacturing facilities and a strong foundation for operational excellence. Now is the right time to invest in industrial security: the leaders who choose to be “Cyberfacturers” will survive to tell the tale, and the rest may serve as stark reminders of what happens when pace outruns security.

References

- https://www.cisa.gov/topics/industrial-control-systems

- https://www.nist.gov/cyberframework

- https://www.isa.org/standards-and-publications/isa-standards/isa-standards-committees/isa99

- https://www.cisa.gov/news-events/news/attack-colonial-pipeline-what-weve-learned-what-weve-done-over-past-two-years

- https://www.law.georgetown.edu/environmental-law-review/blog/cybersecurity-policy-responses-to-the-colonial-pipeline-ransomware-attack/

- https://www.hbs.edu/faculty/Pages/item.aspx?num=63756

- https://spectrum.ieee.org/the-real-story-of-stuxnet

- https://www.washingtonpost.com/world/national-security/stuxnet-was-work-of-us-and-israeli-experts-officials-say/2012/06/01/gJQAlnEy6U_story.html

- https://www.kaspersky.com/resource-center/definitions/what-is-stuxnet

- https://www.malwarebytes.com/stuxnet

- https://www.zscaler.com/resources/security-terms-glossary/what-is-the-solarwinds-cyberattack

- https://www.gao.gov/blog/solarwinds-cyberattack-demands-significant-federal-and-private-sector-response-infographic

- https://www.rapid7.com/blog/post/2020/12/14/solarwinds-sunburst-backdoor-supply-chain-attack-what-you-need-to-know/

- https://www.fortinet.com/resources/cyberglossary/solarwinds-cyber-attack

- https://www.amnesty.org/en/latest/research/2021/07/forensic-methodology-report-how-to-catch-nso-groups-pegasus/

- https://citizenlab.ca/2023/04/nso-groups-pegasus-spyware-returns-in-2022/

- https://thehackernews.com/2023/04/nso-group-used-3-zero-click-iphone.html

- https://www.securityweek.com/google-says-nso-pegasus-zero-click-most-technically-sophisticated-exploit-ever-seen/

- https://www.nist.gov/itl/smallbusinesscyber/guidance-topic/phishing

- https://www.blumira.com/blog/social-engineering-the-human-element-in-cybersecurity


- https://www.dragos.com/cyber-threat-intelligence/

- https://mesh.security/security/what-is-csma/

- https://download.schneider-electric.com/files?p_Doc_Ref=IT_OT&p_enDocType=User+guide&p_File_Name=998-20244304_Schneider+Electric+Cybersecurity+White+Paper.pdf

- https://insanecyber.com/understanding-the-differences-in-ot-cybersecurity-standards-nist-csf-vs-62443/

- https://blog.se.com/sustainability/2021/04/13/it-ot-convergence-in-the-new-world-of-digital-industries/

About Author:

Omkar Bhalekar is a senior network engineer and technology enthusiast specializing in data center architecture, manufacturing infrastructure, and sustainable solutions, with extensive experience in designing resilient industrial networks and building smart factories and AI data centers with scalable networks. He is also the author of the book Autonomous and Predictive Networks: The future of Networking in the Age of AI and co-author of Quantum Ops – Bridging Quantum Computing & IT Operations. Omkar writes to simplify complex technical topics for engineers, researchers, and industry leaders.

Countdown to Q-day: How modern-day Quantum and AI collusion could lead to The Death of Encryption

By Omkar Ashok Bhalekar with Ajay Lotan Thakur

Behind the quiet corridors of research laboratories and the whir of supercomputer data centers, a stealth revolution is gathering force, one with the potential to reshape the very building blocks of cybersecurity. At its heart are qubits, the building blocks of quantum computing, and the accelerant force of generative AI. Combined, they form a double-edged sword capable of breaking today’s encryption and opening the door to an era of both vast opportunity and unprecedented danger.

Modern Cryptography is Fragile

Modern-day computer security relies on the intractability of certain mathematical problems. RSA encryption, first described in 1977 by Rivest, Shamir, and Adleman, relies on the premise that factoring a 2048-bit number into its primes is computationally infeasible for ordinary computers (RSA paper, 1978). Similarly, the Diffie-Hellman key exchange, described by Whitfield Diffie and Martin Hellman in 1976, enables secure key exchange over an insecure channel based on the discrete logarithm problem (Diffie-Hellman paper, 1976). Elliptic-Curve Cryptography (ECC), proposed independently by Victor Miller and Neal Koblitz in 1985, is based on the hardness of the elliptic-curve discrete logarithm problem and resists brute-force attacks with much smaller key sizes for the same level of security (Koblitz ECC paper, 1987).
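To make the dependence on factoring concrete, here is textbook RSA with tiny primes (the classic p = 61, q = 53 teaching example). It is a toy for illustration only: real RSA uses 2048-bit or larger moduli and padding such as OAEP. Whoever can factor n can recompute the private exponent, which is why any efficient factoring method breaks RSA.

```python
from math import gcd, isqrt

# Toy textbook RSA with tiny primes: for illustration only, never for real use.
p, q = 61, 53
n = p * q                 # public modulus (3233)
phi = (p - 1) * (q - 1)   # 3120; computing this requires knowing the factors of n
e = 17                    # public exponent, must be coprime with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)       # private exponent via modular inverse (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)
assert pow(ciphertext, d, n) == message  # decryption recovers the message


def recover_private_key(n: int, e: int) -> int:
    """Factor n by trial division, then rebuild d exactly as the key owner did.
    Feasible only because n is tiny; infeasible classically for 2048-bit moduli."""
    for candidate in range(2, isqrt(n) + 1):
        if n % candidate == 0:
            f1, f2 = candidate, n // candidate
            return pow(e, -1, (f1 - 1) * (f2 - 1))
    raise ValueError("n has no nontrivial factor")


print(recover_private_key(n, e) == d)  # True
```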

But quantum computing flips the script. A sufficiently powerful quantum computer running Shor’s Algorithm could factor large numbers and compute discrete logarithms exponentially faster than the best known classical methods, rendering RSA and ECC insecure. Meanwhile, Grover’s Algorithm gives attackers a quadratic speedup when brute-forcing the keys of symmetric systems like AES, effectively halving their security level in bits.

What would take classical computers centuries or millennia, quantum computers of the right scale could reduce to days or even hours. One widely cited estimate (Gidney and Ekerå, 2019) suggests that cracking RSA-2048 with Shor’s Algorithm could take about 20 million noisy physical qubits running for roughly eight hours, and such estimates have continued to fall.
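Only one step of Shor’s algorithm is quantum: finding the period r of f(x) = a^x mod N. The remaining steps are classical number theory. The sketch below shows that classical reduction, with the quantum period-finding step replaced by brute force, which is precisely the exponentially expensive part a quantum computer would accelerate. It is a teaching sketch for tiny N, not an implementation of the quantum algorithm.

```python
from math import gcd
from typing import Optional, Tuple


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.
    This is the step Shor's algorithm performs efficiently on a quantum computer.
    Assumes gcd(a, n) == 1, otherwise no such r exists."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_part(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Classical reduction from the period of a mod n (with 1 < a < n) to factors of n."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g              # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                   # odd period: retry with another a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                   # a**(r/2) = -1 (mod n): retry with another a
    return gcd(y - 1, n), gcd(y + 1, n)


print(shor_classical_part(15, 7))  # (3, 5)
print(shor_classical_part(21, 2))  # (7, 3)
```

For N = 15 and a = 7 the period is 4, so y = 7² mod 15 = 4, and gcd(3, 15) = 3 and gcd(5, 15) = 5 recover the factors.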

Generative AI adds fuel to the fire

While quantum computing threatens to undermine encryption itself, generative AI is playing an equally insidious and no less revolutionary role. By automating activities such as malware development, phishing email creation, and synthetic identity generation, generative AI models, including large language models and diffusion-based image synthesizers, are lowering the bar for sophisticated cyberattacks.

Even worse, generative AI can be applied to model and probe vulnerabilities in implementations of cryptography, including post-quantum cryptography. It can help train reinforcement learning agents that optimize side-channel attacks or profile quantum circuits to uncover new behaviors.

With quantum computing on the horizon, generative AI is both a sophisticated research tool and a potential weapon. On the one hand, security researchers use generative AI to produce, examine, and predict vulnerabilities in cryptographic systems to inform the development of quantum-resistant algorithms. On the other hand, malicious actors exploit its ability to automate the production of complex attack vectors such as advanced malware, phishing attacks, and synthetic identities, drastically lowering the barrier to high-impact cyberattacks. This dual use of generative AI shortens the timeline for adversaries to exploit broken or transitional cryptographic infrastructure, effectively narrowing the window defenders have to deploy quantum-safe security solutions.

Real-World Implications

The impact of broken cryptography is real, and it puts the foundations of everyday life at risk:

1. Online Banking (TLS/HTTPS)

When you use your bank’s website, the “https” in the address bar signifies communication encrypted with TLS (Transport Layer Security). Most TLS deployments rely on RSA or ECC to exchange session keys securely and to authenticate servers. A quantum attack on those key exchanges would expose the session keys, allowing an attacker to decrypt recorded traffic, including sensitive banking data.

2. Cryptocurrencies

Bitcoin, Ethereum, and other cryptocurrencies use ECDSA (Elliptic Curve Digital Signature Algorithm) to sign transactions. If quantum computers can break ECDSA, an attacker could forge signatures and steal digital assets. In fact, researchers have already simulated scenarios in which a quantum computer could extract private keys from public keys exposed on the blockchain, enabling theft of funds.

3. Government Secrets and Intelligence Archives

National security agencies around the world rely heavily on encryption algorithms such as RSA and AES to protect sensitive information, including secret messages, intelligence briefs, and critical infrastructure data. Of these, AES-256 is considered secure even against quantum computers: as a symmetric-key cipher, it faces only Grover’s quadratic speedup, so a brute-force attack still demands an enormous amount of resources and time. Conversely, asymmetric algorithms like RSA and ECC, which underpin most public key infrastructures, are fundamentally vulnerable to quantum attacks that can solve the hard mathematical problems they rely on for security.

Such a disparity creates a huge security gap. Information that is well protected today may not remain so once sufficiently powerful quantum computers become available, a scenario often referred to as the “harvest now, decrypt later” threat. Intelligence agencies and adversaries alike could be quietly collecting and storing encrypted communications, confident that quantum technology will eventually be able to decrypt this stockpile of sensitive information. The Snowden disclosures brought this threat into the limelight by revealing that the NSA collects and retains vast amounts of global internet traffic, such as diplomatic cables, military orders, and personal communications. These repositories of encrypted data, unreadable today, are a hidden vulnerability: when Q-Day (the arrival of practical quantum computers capable of defeating RSA and ECC) comes, the confidentiality of decades’ worth of sensitive communications could be irretrievably lost.

Such a compromise would have apocalyptic consequences for national security and geopolitical stability, exposing classified negotiations, intelligence operations, and war plans to adversaries. Such a specter has compelled governments and security entities to accelerate the transition to post-quantum cryptography standards and explore quantum-resistant encryption schemes in an effort to safeguard the confidentiality and integrity of information in the era of quantum computing.
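The asymmetry between AES and RSA can be made concrete with a rough operation count. For a k-bit key, an ideal Grover search needs on the order of (π/4)·2^(k/2) iterations, compared with about 2^(k−1) guesses on average for classical brute force:

$$
N_{\text{Grover}}(k) \approx \frac{\pi}{4}\,2^{k/2}
\quad\Rightarrow\quad
N_{\text{Grover}}(128) \approx 2^{64}, \qquad N_{\text{Grover}}(256) \approx 2^{128}.
$$

This is why AES-128 is often said to fall to roughly 64-bit security against a quantum attacker, while AES-256 retains roughly 128-bit security. The estimate ignores error-correction overhead and the fact that Grover’s algorithm parallelizes poorly, both of which make a real attack harder still. Shor’s algorithm, by contrast, solves factoring and discrete logarithms in polynomial time, so no practical increase in RSA or ECC key size restores their security.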

Arms Race Toward Post-Quantum Cryptography

In response, organizations like NIST are leading the development of post-quantum cryptographic standards, selecting algorithms believed to be quantum-resistant. But migration is glacial. Retrofitting billions of devices and services with new cryptographic foundations is a logistical nightmare. The process involves not merely software updates but hardware upgrades, re-certification, interoperability testing, and compatibility testing with worldwide networks and critical infrastructure systems, all while minimizing downtime and security vulnerabilities.

Building a quantum computer large enough to factor RSA-2048 is an enormous task. By current estimates, it would require thousands of error-corrected logical qubits, which translates into millions of physical qubits with very low error rates. Today’s largest quantum processors have on the order of a thousand noisy physical qubits, and their error rates are too high to sustain complex computations over long periods. However, with continued progress in quantum error correction, materials research, and qubit coherence times, specialists warn that practical quantum decryption capability may arrive sooner than most organizations are prepared for.

This transition period, when old and new cryptographic environments coexist, is when danger is greatest. Attackers can use generative AI to seek out hybrid environments where legacy encryption is still in use by automating the identification of old crypto implementations, producing targeted exploits en masse, and orchestrating multi-step attacks that overwhelm conventional security monitoring and patching mechanisms.
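One well-known way to reason about slow migration together with the “harvest now, decrypt later” threat is Mosca’s inequality: if x is how long data must stay confidential, y is how long migration to post-quantum cryptography will take, and z is the time until a cryptographically relevant quantum computer (CRQC) exists, then data is at risk whenever x + y > z. The sketch below encodes that check; the year values in the usage are hypothetical inputs, not forecasts.

```python
def mosca_at_risk(shelf_life_years: float, migration_years: float, years_to_crqc: float) -> bool:
    """Mosca's inequality: data is exposed if x + y > z.

    x = shelf_life_years  (how long the data must remain confidential)
    y = migration_years   (time needed to migrate to post-quantum cryptography)
    z = years_to_crqc     (time until a cryptographically relevant quantum computer)
    """
    return shelf_life_years + migration_years > years_to_crqc


# Hypothetical inputs for illustration only.
print(mosca_at_risk(10, 5, 12))  # True: 10 + 5 > 12, so start migrating now
print(mosca_at_risk(2, 3, 12))   # False: 2 + 3 <= 12
```

The useful takeaway is that long-lived secrets (x) and slow migrations (y) can put data at risk today, even if Q-Day itself is years away.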

Preparing for the Convergence

To defend against this coming storm, security strategy must evolve:

- Inventory Cryptographic Assets: Firms must take stock of where and how encryption is being used across their environments.

- Adopt Crypto-Agility: Systems must be designed to switch between encryption algorithms easily, without a full redesign.

- Test Against Quantum Threats: Use AI tools to stress-test encryption schemes against quantum-like threats.

- Adopt PQC and Zero-Trust Models: Shift toward quantum-resistant cryptography and toward architectures that treat breach as the default assumption.
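Crypto-agility is largely an architectural habit: store an algorithm label alongside protected data and look the implementation up in a registry chosen by configuration, so callers never hard-code a primitive. The sketch below assumes that pattern. Because post-quantum algorithms are not in Python’s standard library, it uses two HMAC variants from `hashlib`/`hmac` purely as stand-ins (symmetric MACs are not the algorithms Shor’s algorithm threatens). The registry, `CURRENT_ALGORITHM`, `protect`, and `verify` are hypothetical names.

```python
import hashlib
import hmac

# Hypothetical registry: algorithm labels mapped to implementations.
# Adopting or retiring an algorithm only touches this table and the config value.
MAC_ALGORITHMS = {
    "hmac-sha256": hashlib.sha256,
    "hmac-sha3-256": hashlib.sha3_256,
}

# Chosen by configuration rather than hard-coded in every caller.
CURRENT_ALGORITHM = "hmac-sha3-256"


def protect(key: bytes, message: bytes, alg: str = CURRENT_ALGORITHM) -> str:
    """Return a self-describing tag such as 'hmac-sha3-256:<hex digest>'."""
    digest = hmac.new(key, message, MAC_ALGORITHMS[alg]).hexdigest()
    return f"{alg}:{digest}"


def verify(key: bytes, message: bytes, tagged: str) -> bool:
    """Verify a tag with whichever registered algorithm produced it."""
    alg, _, digest = tagged.partition(":")
    if alg not in MAC_ALGORITHMS:
        return False  # unknown or retired algorithm
    expected = hmac.new(key, message, MAC_ALGORITHMS[alg]).hexdigest()
    return hmac.compare_digest(expected, digest)


legacy_tag = protect(b"demo-key", b"payload", "hmac-sha256")
new_tag = protect(b"demo-key", b"payload")
print(verify(b"demo-key", b"payload", legacy_tag), verify(b"demo-key", b"payload", new_tag))
```

Old and new tags verify side by side during a migration. Retiring an algorithm then means re-protecting the affected data and deleting one registry entry, rather than editing every caller.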

In Summary

Quantum computing is not merely a looming threat; it is a countdown to a new cryptographic arms race. Generative AI has already reshaped the cyber threat landscape, and combined with quantum computing, it becomes a force multiplier. This two-front challenge requires more than incremental adjustment; it requires a cybersecurity paradigm shift.

Panic will not help us. Preparation will.

Abbreviations

RSA – Rivest, Shamir, and Adleman

ECC – Elliptic-Curve Cryptography

AES – Advanced Encryption Standard

TLS – Transport Layer Security

HTTPS – Hypertext Transfer Protocol Secure

ECDSA – Elliptic Curve Digital Signature Algorithm

NSA – National Security Agency

NIST – National Institute of Standards and Technology

PQC – Post-Quantum Cryptography

References

- https://arxiv.org/abs/2104.07603

- https://www.researchgate.net/publication/382853518_CryptoGenSec_A_Hybrid_Generative_AI_Algorithm_for_Dynamic_Cryptographic_Cyber_Defence

- https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5185525

- https://www.classiq.io/insights/shors-algorithm-explained

- https://www.theguardian.com/world/interactive/2013/nov/01/snowden-nsa-files-surveillance-revelations-decoded

- https://arxiv.org/abs/2307.00691

- https://secureframe.com/blog/generative-ai-cybersecurity

- https://www.techtarget.com/searchsecurity/news/365531559/How-hackers-can-abuse-ChatGPT-to-create-malware

- https://arxiv.org/abs/1802.07228

- https://www.technologyreview.com/2019/05/30/65724/how-a-quantum-computer-could-break-2048-bit-rsa-encryption-in-8-hours/

- https://arxiv.org/pdf/1710.10377

- https://thequantuminsider.com/2025/05/24/google-researcher-lowers-quantum-bar-to-crack-rsa-encryption/

- https://csrc.nist.gov/projects/post-quantum-cryptography

***Google’s Gemini was used in this post to paraphrase some sentences and add context.***

About Author:

Omkar Bhalekar is a senior network engineer and technology enthusiast specializing in data center architecture, manufacturing infrastructure, and sustainable solutions, with extensive experience in designing resilient industrial networks and building smart factories and AI data centers with scalable networks. He is also the author of the book Autonomous and Predictive Networks: The future of Networking in the Age of AI and co-author of Quantum Ops – Bridging Quantum Computing & IT Operations. Omkar writes to simplify complex technical topics for engineers, researchers, and industry leaders.

Liquid Dreams: The Rise of Immersion Cooling and Underwater Data Centers

By Omkar Ashok Bhalekar with Ajay Lotan Thakur

As demand for data keeps rising, driven by generative AI, real-time analytics, 8K streaming, and edge computing, data centers face an escalating dilemma: how to maintain performance without overheating. Traditional air-cooled server rooms, once adequate for straightforward web hosting and storage, are being stretched to their thermal limits by modern compute-intensive workloads. While the world’s digital backbone runs hot, innovators are diving deep, all the way to the ocean floor. Say hello to immersion cooling and undersea data farms, two technologies poised to revolutionize how the world stores and processes data.

Heat Is the Silent Killer of the Internet

In every data center, heat is the unobtrusive enemy. When racks of high-performance GPUs, CPUs, and ASICs all run at the same time, they generate massive amounts of heat. The old approach, built on gigantic HVAC systems and chilled-air manifolds, is reaching its technological and environmental limits.

In the majority of installations, 35–40% or more of total energy consumption goes to simply cooling the hardware rather than running it. As model sizes and inference loads explode (think ChatGPT, DALL·E, or Tesla FSD), traditional cooling infrastructure cannot keep up without costly upgrades or environmental degradation. This is driving a paradigm shift.
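As a rough consistency check, a cooling share can be translated into Power Usage Effectiveness (PUE), the ratio of total facility energy to the energy delivered to IT equipment. The sketch assumes that everything not spent on cooling (or on an optional extra overhead share you pass in) reaches the IT load; `implied_pue` is a hypothetical helper, not a standard formula name.

```python
def implied_pue(cooling_share: float, other_overhead_share: float = 0.0) -> float:
    """PUE = total facility energy / IT equipment energy.

    Both shares are fractions of *total* facility energy; whatever remains
    is assumed to reach the IT equipment.
    """
    it_share = 1.0 - cooling_share - other_overhead_share
    if not 0.0 < it_share <= 1.0:
        raise ValueError("shares must leave a positive fraction for the IT load")
    return 1.0 / it_share


for share in (0.35, 0.40):
    print(f"cooling share {share:.0%} -> PUE of at least {implied_pue(share):.2f}")
```

A 35–40% cooling share alone implies a PUE of roughly 1.54 to 1.67; any other overhead (lighting, power conversion losses) pushes it higher.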

Liquid cooling is not an option everywhere because of missing infrastructure, expense, and geography, so we still must rely on every player in the ecosystem to up the ante on energy efficiency. The burden spans multiple domains: chip manufacturers need to deliver far greater performance per watt through advanced semiconductor design, and software developers need to write code that is fundamentally low-power by optimizing algorithms and reducing computational overhead.

Beyond these foundational improvements, memory manufacturers are designing low-power solutions, system manufacturers are building more efficient power-delivery networks, and cloud operators are streamlining their data center operations while increasing their use of renewable energy. As Microsoft Chief Environmental Officer Lucas Joppa said, “We need to think about sustainability not as a constraint, but as an innovative driver that pushes us to build more efficient systems across every layer of the stack of technology.”

However, despite these multifaceted efficiency gains, thermal management remains a significant bottleneck with a profound impact on overall system performance and energy consumption. Ineffective cooling can force processors to throttle, undercutting the gains from better chips and optimized software. The result is a self-defeating loop in which inefficient thermal management cancels out efficiency gains elsewhere in the system.

In this blog post, we address the cooling side of energy consumption and consider how future thermal management technology can act as an efficiency multiplier across the entire computing infrastructure. We explore how effective cooling strategies not only reduce the energy consumed by the cooling components themselves but also let the rest of the system operate at peak efficiency.

What Is Immersion Cooling?

Immersion cooling cools servers by submerging them in carefully engineered, non-conductive fluids (typically dielectric liquids) that transfer heat much more efficiently than air. Because these fluids do not conduct electricity, they can contact the electronics directly without causing short circuits or corrosion.

Two general types exist:

- Single-phase immersion, with the fluid remaining liquid and transferring heat by convection.

- Two-phase immersion, in which the fluid boils at a low temperature on the hot components, and the resulting vapor condenses and returns to the bath in a closed loop.
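The difference between the two types can be illustrated with a simplified steady-state energy balance. Single-phase fluid absorbs heat by warming up (Q = ṁ·c_p·ΔT), while two-phase fluid absorbs heat mainly as latent heat of vaporization (Q = ṁ·h_fg). The fluid properties in the example are hypothetical placeholders, not figures for any real product, and the model ignores pump, heat-exchanger, and condenser details.

```python
def single_phase_flow_kg_s(heat_load_w: float, specific_heat_j_per_kg_k: float,
                           temp_rise_k: float) -> float:
    """Coolant mass flow needed when the liquid only warms up: Q = m_dot * c_p * dT."""
    return heat_load_w / (specific_heat_j_per_kg_k * temp_rise_k)


def two_phase_boiloff_kg_s(heat_load_w: float, latent_heat_j_per_kg: float) -> float:
    """Fluid that must boil (and later condense) per second: Q = m_dot * h_fg."""
    return heat_load_w / latent_heat_j_per_kg


# Hypothetical 50 kW tank with placeholder fluid properties.
HEAT_LOAD_W = 50_000
print(single_phase_flow_kg_s(HEAT_LOAD_W, specific_heat_j_per_kg_k=2_000, temp_rise_k=10))  # 2.5
print(two_phase_boiloff_kg_s(HEAT_LOAD_W, latent_heat_j_per_kg=100_000))                    # 0.5
```

In the two-phase case the fluid stays near its boiling point, so component temperatures remain roughly constant while the condenser removes the same heat as the vapor turns back into liquid.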

According to Vertiv’s research, liquid cooling improves the energy efficiency of both IT and facility systems in high-density data centers compared with air cooling. In Vertiv’s fully optimized scenario, introducing liquid cooling produced a 10.2% reduction in total data center power and an improvement of more than 15% in Total Usage Effectiveness (TUE).

Total Usage Effectiveness is calculated as:

TUE = ITUE × PUE

where ITUE = (total energy into the IT equipment) / (total energy into the compute components), and PUE (Power Usage Effectiveness) = (total facility energy) / (total energy into the IT equipment).
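The TUE formula can be sketched in a few lines. Because PUE divides total facility energy by IT equipment energy, the IT-equipment terms cancel, and TUE reduces to total facility energy divided by the energy that actually reaches the compute components. The kWh figures below are hypothetical and are not Vertiv’s data.

```python
def pue(facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    return facility_kwh / it_equipment_kwh


def itue(it_equipment_kwh: float, compute_kwh: float) -> float:
    """ITUE: IT equipment energy / compute-component energy.

    The gap covers in-server overheads such as fans and power supplies.
    """
    return it_equipment_kwh / compute_kwh


def tue(facility_kwh: float, it_equipment_kwh: float, compute_kwh: float) -> float:
    """Total Usage Effectiveness = ITUE x PUE (equivalently facility / compute energy)."""
    return itue(it_equipment_kwh, compute_kwh) * pue(facility_kwh, it_equipment_kwh)


# Hypothetical site: 1,500 kWh in total, 1,000 kWh to IT gear, 800 kWh to compute.
print(pue(1_500, 1_000), itue(1_000, 800), tue(1_500, 1_000, 800))  # 1.5 1.25 1.875
```

This structure suggests one reason TUE can improve more than total power: liquid cooling can reduce in-server overheads such as fans (lowering ITUE) while also cutting facility cooling (lowering PUE).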

Reimagining Data Centers Underwater

Imagine shipping an entire data center in a steel capsule and sinking it to the ocean floor. That’s no longer sci-fi.

Microsoft’s Project Natick demonstrated the concept by deploying a sealed underwater data center off the Orkney Islands, powered entirely by renewable energy and cooled by the surrounding seawater. Over its two-year lifespan, the submerged facility showed:

- A server failure rate one-eighth that of a comparable land-based data center.

- No need for on-site human intervention.

- Efficient, passive cooling by natural sea currents.

Why underwater? Seawater is an open, large-scale heat sink, and underwater environments are naturally less prone to temperature fluctuations, dust, vibration, and power surges. Coastal metropolitan areas are among the biggest consumers of cloud services, and most lie within 100 miles of a viable deployment site, so placing data centers offshore could dramatically reduce latency.
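To put the 100-mile figure in perspective, propagation delay over optical fiber can be estimated from the speed of light divided by the fiber’s refractive index. The sketch assumes a typical index of about 1.47 and a fiber route exactly as long as the quoted distance. Real routes are longer and add switching and queuing delay, so treat the result as a lower bound. `fiber_rtt_ms` is a hypothetical helper.

```python
SPEED_OF_LIGHT_M_S = 299_792_458
FIBER_REFRACTIVE_INDEX = 1.47   # assumed typical value for silica fiber
METERS_PER_MILE = 1_609.344


def fiber_rtt_ms(route_miles: float, refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Round-trip propagation delay in milliseconds over a fiber route."""
    speed_in_fiber_m_s = SPEED_OF_LIGHT_M_S / refractive_index
    one_way_s = route_miles * METERS_PER_MILE / speed_in_fiber_m_s
    return 2 * one_way_s * 1_000


print(f"{fiber_rtt_ms(100):.2f} ms")  # 1.58 ms
```

At this distance, propagation adds only about 1.6 ms of round-trip delay, small compared with routing to a data center hundreds or thousands of miles inland.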

Why This Tech Matters Now

Data centers already account for about 2–3% of the world’s electricity, and with the rapid growth of AI and metaverse workloads, that share is expected to rise. AI training and generative inference workloads can consume up to 10x the power per rack of conventional server workloads, putting tremendous pressure on cooling equipment and sustainability goals. Legacy air cooling is reaching its thermal and density limits, which makes immersion cooling a critical enabler of future scalability. According to Submer, a Barcelona-based immersion cooling company, immersion cooling can reduce the energy consumed by cooling systems by up to 95% and enable higher rack density, offering a path to sustainable data center growth under AI-driven demand.
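Submer’s “up to 95%” figure applies to cooling energy, not total energy. Combining it with the 35–40% cooling share cited earlier gives a rough upper bound on whole-facility savings. The sketch assumes the IT load itself is unchanged and that the full 95% cut is achieved; `remaining_facility_energy` is a hypothetical helper.

```python
def remaining_facility_energy(cooling_share: float, cooling_cut: float) -> float:
    """Fraction of the original facility energy left after a cooling-energy cut.

    cooling_share: fraction of total facility energy spent on cooling (e.g. 0.40).
    cooling_cut:   fractional reduction in cooling energy (e.g. 0.95 for 95%).
    Assumes the IT load does not change.
    """
    non_cooling = 1.0 - cooling_share
    return non_cooling + cooling_share * (1.0 - cooling_cut)


for share in (0.35, 0.40):
    saving = 1.0 - remaining_facility_energy(share, 0.95)
    print(f"cooling share {share:.0%}: total facility energy down by about {saving:.0%}")
```

Under these assumptions, total facility energy falls by about 33% to 38%: substantial, but well short of 95%, because the IT load itself still has to be powered.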

Advantages & Challenges

Immersion and submerged data centers possess several key advantages:

- Sustainability – Lower energy consumption and lower carbon footprints are paramount as ESG (Environmental, Social, Governance) goals become business necessities.

- Scalability & Efficiency – Immersion allows more density per square foot, reducing real estate and overhead facility expenses.

- Reliability – Liquid-cooled and underwater systems suffer fewer mechanical failures thanks to less thermal stress, fewer moving parts, and less oxidation.

- Security & Autonomy – Sealed underwater pods and autonomous liquid-cooled systems are physically difficult to access and tamper with, and they can be monitored and updated remotely, making them well suited to zero-trust environments.

While immersion cooling and submerged data centers offer clear advantages, they also face challenges and limitations:

- Maintenance and Accessibility Challenges – Both options complicate hardware maintenance. Immersion cooling requires components to be carefully removed from the dielectric fluid, cleaned, and reinserted, whereas underwater data centers offer extremely poor physical access: entire modules must be retrieved for repairs, which means longer downtime.

- High Initial Costs and Deployment Complexity – Building immersion tanks or underwater enclosures requires significant capital investment in specially designed equipment, infrastructure, and deployment techniques. Underwater data centers also demand marine engineering, watertight modules, and intricate site preparation.

- Environmental and Regulatory Concerns – Both approaches raise environmental issues and regulatory obligations. Immersion systems must comply with fluid waste disposal regulations, while underwater data centers require marine environmental impact assessments, permits, and ongoing ecosystem protection measures.

- Technology Maturity and Operational Risks – Both are relatively immature technologies with little historical data on long-term performance and reliability. Potential problems include fluid leaks in immersion systems and physical damage or biofouling in underwater installations, which makes large-scale adoption uncertain.

Industry Momentum

Various companies are leading the charge:

- GRC (Green Revolution Cooling) and Submer offer immersion cooling solutions to hyperscalers and enterprises.

- Iceotope offers precision liquid cooling for HPC. Alibaba, Google, and Meta are testing immersion cooling at scale to support AI and ML clusters.

- Through Project Natick, Microsoft has researched the commercial viability of underwater data centers as modular, off-grid facilities.

Hyperscalers are starting to design entire zones of their new data centers specifically for liquid-cooled GPU pods, while smaller edge data centers are adopting immersion tech to run quietly and efficiently in urban environments.

The Future of Data Centers: Autonomous, Sealed, and Everywhere

Looking ahead, the trend is clear: data centers are becoming more intelligent, compact, and environmentally integrated. We’re entering an era where:

- AI-based DCIM software predicts and prevents failures in real time.

- Edge nodes with immersion cooling can be located almost anywhere, from smart factories to offshore oil rigs.

- Entire data centers might be built as prefabricated modules, inserted into oceans, deserts, or even space.

The general principle? Compute must not be limited by land, heat, or humans.

Final Thoughts

In the fight to enable the digital future, air is a luxury. Immersed in liquid or bolted to the seafloor, data centers are shifting to cool smarter, not harder.

Underwater installations and liquid cooling are no longer out-there ideas; they’re lifelines to a scalable, sustainable web.

So tomorrow’s “Cloud” won’t be in the sky; it will hum quietly under the sea.

References

- https://news.microsoft.com/source/features/sustainability/project-natick-underwater-datacenter/

- https://www.researchgate.net/publication/381537233_Advancement_of_Liquid_Immersion_Cooling_for_Data_Centers

- https://en.wikipedia.org/wiki/Project_Natick

- https://theliquidgrid.com/underwater-data-centers/

- https://www.sunbirddcim.com/glossary/submerged-server-cooling

- https://www.vertiv.com/en-us/solutions/learn-about/liquid-cooling-options-for-data-centers/

- https://submer.com/immersion-cooling/

About Author: