Big tech spending on AI data centers and infrastructure vs the fiber optic buildout during the dot-com boom (& bust)

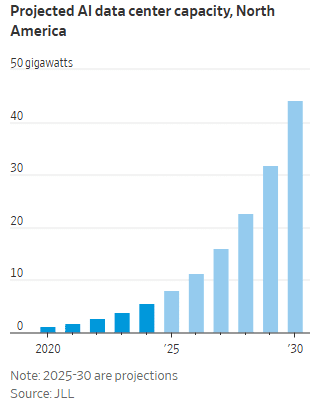

Big Tech plans to spend between $364 billion and $400 billion on AI data centers, specialized AI hardware such as GPUs, and supporting cloud computing/storage capacity. The final 2Q 2025 GDP report, released last week, reveals a surge in data center infrastructure spending from $9.5 billion in early 2020 to $40.4 billion in the second quarter of 2025. The surge is largely due to an unprecedented investment boom driven by artificial intelligence (AI) and cloud computing, and it highlights a monumental shift in capital expenditure by major tech companies.

Yet there are huge uncertainties about how far AI will transform scientific discovery and hypercharge technological advance. Tech financial analysts worry that enthusiasm for AI has turned into a bubble reminiscent of the mania around the internet’s infrastructure build-out boom from 1998 to 2000. During that period, telecom network providers spent over $100 billion blanketing the country with fiber optic cables, based on the belief that the internet’s growth would be so explosive that such massive investments were justified. The “talk of the town” during those years was the “All Optical Network,” with ultra-long haul optical transceivers, photonic switches and optical add/drop multiplexers. 27 years later, it still has not been realized anywhere in the world.

The resulting massive optical network overbuilding made telecom the hardest hit sector of the dot-com bust. Industry giants toppled like dominoes, including Global Crossing, WorldCom, Enron, Qwest, PSINet and 360networks.

However, a key difference between then and now is that today’s tech giants (e.g. hyperscalers) produce far more cash than the fiber builders did in the 1990s. Also, AI is immediately available to anyone who has a high-speed internet connection (via desktop, laptop, tablet or smartphone), unlike in the late 1990s, when internet users (consumers and businesses) first had to obtain high-speed wireline access via cable modems, DSL or (in very few areas) fiber to the premises.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Today, the once-boring world of chips and data centers has become a raging multi-hundred-billion-dollar battleground where Silicon Valley giants attempt to one-up each other with spending commitments and sci-fi names. Meta CEO Mark Zuckerberg teased his planned “Hyperion” mega-data center with a social-media post showing it would be the size of a large chunk of Manhattan.

OpenAI’s Sam Altman calls his data-center effort “Stargate,” a reference to the 1994 movie about an interstellar time-travel portal. Company executives this week laid out plans that would require at least $1 trillion in data-center investments, and Altman recently committed the company to pay Oracle an average of approximately $60 billion a year for AI compute servers in data centers in coming years. That’s despite the fact that Oracle is not a major cloud service provider and OpenAI will not have the cash on hand to pay Oracle.

In fact, OpenAI is on track to realize just $13 billion in revenue from all its paying customers this year and won’t be profitable until at least 2029 or 2030. The company projects its total cash burn will reach $115 billion by 2029. The majority of its revenue comes from subscriptions to premium versions of ChatGPT, with the remainder from selling access to its models via its API. Although about 700 million people, roughly 9% of the world’s population, are weekly users of ChatGPT (as of August, up from 500 million in March), it’s estimated that over 90% use the free version. Also this past week:

- Nvidia plans to invest up to $100 billion to help OpenAI build data center capacity with millions of GPUs.

- OpenAI revealed an expanded deal with Oracle and SoftBank, scaling its “Stargate” project to a $400 billion commitment across multiple phases and sites.

- OpenAI deepened its enterprise reach with a formal integration into Databricks — signaling a new phase in its push for commercial adoption.

Nvidia is supplying capital and chips. Oracle is building the sites. OpenAI is anchoring the demand. It’s a circular economy that could come under pressure if any one player falters. And while the headlines came fast this week, the physical buildout will take years to deliver — with much of it dependent on energy and grid upgrades that remain uncertain.

Another AI darling is CoreWeave, a company that provides GPU-accelerated cloud computing platforms and infrastructure. From its founding in 2017 until its pivot to cloud computing in 2019, CoreWeave was an obscure cryptocurrency miner with fewer than two dozen employees. Flooded with money from Wall Street and private-equity investors, it has morphed into a computing goliath with a market value bigger than General Motors or Target.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Massive AI infrastructure spending will require tremendous AI revenue for pay-back:

David Cahn, a partner at venture-capital firm Sequoia, estimates that the money invested in AI infrastructure in 2023 and 2024 alone requires consumers and companies to buy roughly $800 billion in AI products over the life of these chips and data centers to produce a good investment return. Analysts believe most AI processors have a useful life of between three and five years.

This week, consultants at Bain & Co. estimated the wave of AI infrastructure spending will require $2 trillion in annual AI revenue by 2030. By comparison, that is more than the combined 2024 revenue of Amazon, Apple, Alphabet, Microsoft, Meta and Nvidia, and more than five times the size of the entire global subscription software market.

Morgan Stanley estimates that last year there was around $45 billion of revenue for AI products. The sector makes money from a combination of subscription fees for chatbots such as ChatGPT and money paid to use these companies’ data centers. How the tech sector will cover the gap is “the trillion dollar question,” said Mark Moerdler, an analyst at Bernstein. Consumers have been quick to use AI, but most are using free versions, Moerdler said. Businesses have been slow to spend much on AI services, except for the roughly $30 a month per user for Microsoft’s Copilot or similar products. “Someone’s got to make money off this,” he said.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Why this time is different (?):

AI cheerleaders insist that this boom is different from the dot-com era. If AI continues to advance to the point where it can replace a large swath of white collar jobs, the savings will be more than enough to pay back the investment, backers argue. AI executives predict the technology could add 10% to global GDP in coming years.

“Training AI models is a gigantic multitrillion dollar market,” Oracle chairman Larry Ellison told investors this month. The market for companies and consumers using AI daily “will be much, much larger.”

The financing behind the AI build-out is complex, with debt layered on at nearly every level. This “debt-fueled arms race” involves large technology companies, startups, and private credit firms seeking innovative ways to fund the development of data centers and acquire powerful hardware, such as Nvidia GPUs. The debt runs through the whole AI ecosystem, from the large tech giants to smaller cloud providers and specialized hardware firms.

Alphabet, Microsoft, Amazon, Meta and others create their own AI products, and sometimes sell access to cloud-computing services to companies such as OpenAI that design AI models. The four “hyperscalers” alone are expected to spend nearly $400 billion on capital investments next year, more than the cost of the Apollo space program in today’s dollars. Some build their own data centers, and some rely on third parties to erect the mega-size warehouses tricked out with cooling equipment and power.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Echoes of bubbles past:

History is replete with technology bubbles that pop. Optimism over an invention—canals, electricity, railroads—prompts an investor stampede premised on explosive growth. Overbuilding follows, and investors eat giant losses, even when a new technology permeates the economy. Predicting when a boom turns into a bubble is notoriously hard. Many inflate for years. Some never pop, and simply stagnate.

The U.K.’s 19th-century railway mania was so large that over 7% of the country’s GDP went toward blanketing the country with rail. Between 1840 and 1852, the railway system nearly quintupled to 7,300 miles of track, but it only produced one-fourth of the revenue builders expected, according to Andrew Odlyzko, PhD, an emeritus University of Minnesota mathematics professor who studies bubbles. He calls the unbridled optimism in manias “collective hallucinations,” where investors, society and the press follow herd mentality and stop seeing risks.

He knows from firsthand experience as a researcher at Bell Labs in the 1990s. Then, telecom giants and upstarts raced to speculatively plunge tens of millions of miles of fiber cables into the ground, spending the equivalent of around 1% of U.S. GDP over half a decade.

Backers compared the effort to the highway system, to the advent of electricity and to discovering oil. The prevailing belief at the time, he said, was that internet use was doubling every 100 days. But in reality, for most of the 1990s boom, traffic doubled every year, Odlyzko found.
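To make the size of that misjudgment concrete, here is a minimal sketch that converts each doubling period into an annual growth multiple. It assumes steady exponential growth and a 365-day year; the function name is illustrative and not taken from Odlyzko's work.

```python
def annual_growth_factor(doubling_period_days: float, days_per_year: float = 365.0) -> float:
    """Return how many times traffic multiplies in one year,
    assuming it doubles every `doubling_period_days` days."""
    return 2.0 ** (days_per_year / doubling_period_days)


# Figures from the text: the belief (doubling every 100 days)
# versus what Odlyzko found (doubling roughly every year).
believed = annual_growth_factor(100)
observed = annual_growth_factor(365)

print(f"Believed growth: about {believed:.1f}x per year")
print(f"Observed growth: about {observed:.1f}x per year")
print(f"Overestimate after one year: about {believed / observed:.1f}x")
```

Under these assumptions the popular belief implied traffic growing more than twelvefold a year, against an observed doubling, and the gap compounds with every additional year of planning horizon.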

The force of the mania led executives across the industry to focus on hype more than unfavorable news and statistics, pouring money into fiber until the bubble burst.

“There was a strong element of self interest,” as companies and executives all stood to benefit financially as long as the boom continued, Odlyzko said. “Cautionary signs are disregarded.”

Kevin O’Hara, a co-founder of upstart fiber builder Level 3, said banks and stock investors were throwing money at the company, and executives believed demand would rocket upward for years. Despite worrying signs, executives focused on the promise of more traffic from uses like video streaming and games.

“It was an absolute gold rush,” he said. “We were spending about $110 million a week” building out the network.

When reality caught up, Level 3’s stock dropped 95%, while giants of the sector went bust. Much of the fiber sat unused for over a decade. Ultimately, the growth of video streaming and other uses in the early 2010s helped soak up much of the oversupply.

Worrying signs:

There are growing, worrying signs that the optimism about AI won’t pan out.

- MIT Media Lab (2025): The “State of AI in Business 2025” report found that 95% of custom enterprise AI tools and pilots fail to produce a measurable financial impact or reach full-scale production. The primary issue identified was a “learning gap” among leaders and organizations, who struggle to properly integrate AI tools and redesign workflows to capture value.

- A University of Chicago economics paper found AI chatbots had “no significant impact on workers’ earnings, recorded hours, or wages” at 7,000 Danish workplaces.

- Gartner (2024–2025): The research and consulting firm has reported that 85% of AI initiatives fail to deliver on their promised value. Gartner also predicts that by the end of 2025, 30% of generative AI projects will be abandoned after the proof-of-concept phase due to issues like poor data quality, lack of clear business value, and escalating costs.

- RAND Corporation (2024): In its analysis, RAND confirmed that the failure rate for AI projects is over 80%, which is double the failure rate of non-AI technology projects. Cited obstacles include cost overruns, data privacy concerns, and security risks.

OpenAI’s release of GPT-5 in August was widely viewed as an incremental improvement, not the game-changing thinking machine many expected. Given the high cost of developing it, the release fanned concerns that generative AI models are improving at a slower pace than expected. Each new AI model (GPT-4, GPT-5) costs significantly more than the last to train and release to the world, often three to five times the cost of the previous one, say AI executives. That means the payback has to be even higher to justify the spending.

Another hurdle: The chips in the data centers won’t be useful forever. Unlike the dot-com boom’s fiber cables, the latest AI chips rapidly depreciate in value as technology improves, much like an older model car. And they are extremely expensive.

“This is bigger than all the other tech bubbles put together,” said Roger McNamee, co-founder of tech investor Silver Lake Partners, who has been critical of some tech giants. “This industry can be as successful as the most successful tech products ever introduced and still not justify the current levels of investment.”

Other challenges include the growing strain on global supply chains, especially for chips, power and infrastructure. As for economy-wide gains in productivity, few of the biggest listed U.S. companies are able to describe how AI is changing their businesses for the better. Equally striking is the minimal euphoria some Big Tech companies display in their regulatory filings. Meta’s Form 10-K last year reads: “[T]here can be no assurance that the usage of AI will enhance our products or services or be beneficial to our business, including our efficiency or profitability.” That is a very shaky basis on which to conduct a $300bn capex splurge.

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

Conclusions:

Big tech spending on AI infrastructure has been propping up the U.S. economy, with some projections indicating it could fuel nearly half of the 2025 GDP growth. However, this contribution primarily stems from capital expenditures, and the long-term economic impact is still being debated. George Saravelos of Deutsche Bank notes that economic growth is not coming from AI itself but from building the data centers to generate AI capacity.

Once those AI factories have been built, with needed power supplies and cooling, will the productivity gains from AI finally be realized? How globally disseminated will those benefits be? Finally, what will be the return on investment (ROI) for the big spending AI companies like the hyperscalers, OpenAI and other AI players?

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

References:

https://www.wsj.com/tech/ai/ai-bubble-building-spree-55ee6128

https://www.ft.com/content/6c181cb1-0cbb-4668-9854-5a29debb05b1

https://www.cnbc.com/2025/09/26/openai-big-week-ai-arms-race.html

“You should expect OpenAI to spend trillions of dollars on things like data center construction in the not-too-distant future,” Mr. Altman recently said, referring to the massive computing facilities that power the company’s A.I. technologies. “You should expect a bunch of economists to wring their hands and say ‘This is so crazy. It’s so reckless’ or whatever. And we’ll just be like: ‘You know what? Let us do our thing.’”

Operating a chatbot is significantly more expensive than serving up an ordinary website. And the technology does not necessarily lend itself to the tried-and-true way of making money on a search engine: digital advertising.

OpenAI sells a version of ChatGPT for $20 a month, and according to the company, it at least pays for the cost of its own delivery. But those subscribers account for fewer than 6% of the people who now use ChatGPT.

The free version is still in the red, as OpenAI has not yet begun to experiment with ads. Google, on the other hand, generates $54 billion in ad revenue each quarter from its search engine, which is used by about 2 billion people each day.

Amazon, Google, Meta, Microsoft and OpenAI plan to spend more than $325 billion combined on giant data centers this year. That is $100 billion more than the annual budget of Belgium. Over time, about 10% of the infrastructure will be used to build A.I. technologies while 80 to 90% will be used to deliver these technologies to customers, according to Amazon’s chief executive, Andy Jassy.

Unless companies like Amazon, Google and OpenAI continue to improve these technologies, adoption may be slower than expected.

Almost eight in 10 businesses have started to use generative A.I., but just as many have said it has “no significant bottom-line impact,” according to research from McKinsey & Company.

“The house of cards is going to start crumbling,” said Sasha Luccioni, a researcher at the artificial intelligence start-up Hugging Face. “The amount of money being spent is not proportionate to the money that’s coming in.”

Meta and various start-ups, including Character.AI and Elon Musk’s xAI, are beginning to offer A.I. bots that provide a new kind of companionship. People can interact with these bots on social networks in much the same way they interact with friends. “The average person wants more connectivity, connection, than they have,” Mr. Zuckerberg said in a recent podcast interview.

Dario Amodei, the chief executive of Anthropic, one of OpenAI’s primary rivals, believes that in just a few years — perhaps as soon as next year — artificial intelligence will be like having a “country of geniuses in a data center” that can work together in solving the biggest scientific problems that our society faces.

Technologists like Mr. Amodei believe this kind of technology will change life as we know it. Last year, in a 14,000-word essay, he said that A.I. could eventually cure cancer, end poverty and even bring world peace.

He predicted that within a decade, A.I. would double the life span of the average person to 150 years.

How close is it to reality? It is unclear how these technologies will be built — or if they are even possible. James Manyika, Google’s senior vice president of research, labs, technology and society, said that as Google pursues loftier goals, it will create technologies that can be used right away. As an example, he points to AlphaFold, a system developed by Google that can help accelerate drug discovery in small but important ways and recently won the Nobel Prize for chemistry.

A Google spinoff called Isomorphic Labs aims to make money by helping drug companies use this kind of technology. Executives like Mr. Zuckerberg and Demis Hassabis, the head of Google’s DeepMind research lab, say their companies are pursuing artificial general intelligence, or A.G.I., shorthand for a machine that can match the powers of the human brain, or an even more powerful technology called superintelligence.

Many technologists are determined to chase the largest goal they can imagine: superintelligence. Technologists have pursued this dream since the 1950s.

Terms like A.G.I. and superintelligence are hard to pin down. Scientists cannot even agree on a way of defining human intelligence. But a machine that truly matches the powers of the human brain is many years away, perhaps decades or more.

No one has articulated how companies will make money from this kind of technology. As tech companies spend hundreds of billions on new data centers, they are taking a leap of faith.

This leap is fueled by the same brew that often drives the moguls of Silicon Valley, said Oren Etzioni, the founding chief executive of the Allen Institute for AI: greed, ego and fear of being unseated by an unexpected breakthrough. “If I had to give a one-word answer,” said Mr. Etzioni, “it would be FOMO.”

Mr. Altman said that as he and his rivals chase these lofty goals, some investors may be overspending. Researchers might develop ways of building A.I. using far less hardware. People might not want the A.I. technologies these companies are building. The rapid improvement of A.I. technologies over the last several years might slow or even hit a wall. The entire economy might shift for unrelated reasons.

“Some of our competitors will flame out and some will do really well, and that’s just how capitalism works,” Mr. Altman said. “I do suspect that someone is going to lose a phenomenal amount of money.”

https://www.nytimes.com/2025/09/16/technology/what-exactly-are-ai-companies-trying-to-build-heres-a-guide.html

OpenAI and partners added another five data centers to its Stargate project, raising the cost to around $400 billion, and also announced a partnership with Nvidia, with the AI chip firm committing $100 billion investment in OpenAI’s next-generation infrastructure.

In China, Alibaba vowed to scale up its AI investment plan, currently worth $53 billion, while European hyperscaler startup Nscale raised $1.1 billion.

These sorts of numbers have brought comparisons to the dotcom era, and the bandwidth bubble in particular.

Analysts acknowledge the similarities but also point out that, unlike most dotcom companies, AI firms are generating revenue on real business models.

Even so, consultancy Bain & Co has calculated that with the growth rate of AI compute demand running at more than double Moore’s Law, the industry is not going to be able to fund the required capex. This level of growth would require annual investment of around $500 billion – or around 1.25 Stargates.

To fund that, AI companies would have to generate $2 trillion in revenue, but Bain estimates that even the most aggressive take-up of enterprise AI would raise only $1.2 trillion, leaving an $800 billion spending gap.

This methodology does not include revenue from consumer AI services – but $800 billion is a lot of subscriptions – or ads. OpenAI’s revenue is estimated at just $13 billion this year.
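The Bain arithmetic quoted above can be checked in a few lines. This is only a back-of-the-envelope sketch using the figures cited in this article (in billions of U.S. dollars); the variable names are illustrative, and the last ratio is a simple comparison, not a forecast.

```python
# All figures in billions of U.S. dollars, as quoted in the text.
required_revenue = 2_000    # annual AI revenue Bain says is needed
best_case_revenue = 1_200   # Bain's most aggressive enterprise AI take-up
annual_capex = 500          # annual investment implied by compute demand growth
stargate_cost = 400         # approximate cost of the expanded Stargate project
openai_revenue = 13         # OpenAI's estimated revenue this year

funding_gap = required_revenue - best_case_revenue
stargates_per_year = annual_capex / stargate_cost
gap_in_openai_revenues = funding_gap / openai_revenue

print(f"Funding gap: ${funding_gap} billion")
print(f"Annual capex in Stargates: {stargates_per_year:.2f}")
print(f"Gap as a multiple of OpenAI's revenue: about {gap_in_openai_revenues:.0f}x")
```

On these numbers, closing the gap would take additional revenue equal to roughly 60 times what OpenAI is estimated to bring in this year.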

Meanwhile, China is pushing ahead with its own version of Stargate that is premised on the idea that both capital and compute power are limited.

As detailed by the Financial Times, it is building a string of AI data center hubs across the country to create a national “computing power” network. It follows a well-established model: the central government issues broad directions, local governments dole out incentives, and big tech firms do the work.

The scheme involves one hub in eastern China servicing the Yangtze River Delta, an Inner Mongolia facility that supports Beijing, and another two in southern and central China respectively to supply Guangzhou and Chongqing.

The three big telcos and Huawei have the job of building and operating the AI data centers.

Outside this government-led project, China’s tech heavyweights are also pouring private funds into cloud and computing infrastructure. According to Bloomberg Intelligence, capex on AI infrastructure and services by Alibaba, Tencent, Baidu Inc. and JD.com could top $32 billion in 2025, up from below $13 billion in 2023.

State-owned investment bank CICC has a much bigger figure, estimating capital spending of 500 billion Chinese yuan (US$70 billion). Even so, it is around a fifth of the torrent of cash being tipped into US data centers.

To compensate for the funding imbalance, Chinese analysts point to DeepSeek as an illustration of how their scarcity-driven innovation path can create breakthroughs.

They also note that while the US has top universities and capital, China has its own deep talent bench with strong engineering capabilities and a high-tech industry built on consumer apps development.

https://www.lightreading.com/ai-machine-learning/ai-keeps-attracting-torrents-of-cash

Massive infrastructure investment from the likes of Microsoft (MSFT), Alphabet (GOOG), Amazon (AMZN) and Meta (META) has been a primary source of fuel for the AI rally. Their spending has caused revenue at chipmakers like Nvidia (NVDA), Broadcom (AVGO) and Micron (MU) to explode, and underpinned the narrative that AI demand is insatiable.

The hyperscalers will all update investors on their capital expenditure plans when they report quarterly results in late October and early November. Alphabet and Meta each lifted their capex forecasts in their most recent earnings reports, while Microsoft and Amazon said they would continue to invest heavily in infrastructure throughout the year.

https://finance.yahoo.com/news/ai-stocks-fueled-bull-market-194514237.html

Neoclouds are emerging as a distinct category of cloud providers built specifically for AI. Their core attributes include GPU-as-a-service models, ultra-low-latency networking, and software stacks optimised for large-scale AI workloads. Unlike traditional infrastructure-as-a-service or platform-as-a-service offerings, neoclouds provide capabilities tailored to AI, from training and inference to fine-tuning and full model hosting.

Global and European players are already establishing themselves in this space, bringing new competition to hyperscalers and regional providers. For the wider ecosystem, the implications are clear. Neoclouds introduce new types of tenants, push facilities toward higher-density requirements, and require earlier supplier engagement than conventional cloud builds.

According to Kristina Lesnjak, EMEA Research Manager, “AI is rapidly reshaping the foundations of cloud infrastructure, driving demand for GPU scale and ultra-low latency that neoclouds are uniquely positioned to deliver. With record levels of investment flowing into the UK’s digital infrastructure and leading technology firms aligning around AI, the momentum behind this transformation has never been stronger.”

https://www.dcbyte.com/news-blogs/ai-neocloud-uk-infrastructure/

It seems a week doesn’t go by that a massive AI deal isn’t announced. OpenAI (OPAI.PVT), Nvidia (NVDA), AMD (AMD), Oracle (ORCL), Broadcom (AVGO), and a raft of other companies are spending billions investing in each other and each other’s hardware.

OpenAI is the company making the most waves in the dealmaking space of late. The ChatGPT developer signed a massive contract in September with Nvidia that will see the chipmaker invest up to $100 billion in the company in exchange for OpenAI purchasing upwards of 10 gigawatts of GPUs over several years. Nvidia said that the partnership will allow OpenAI to deploy “at least 10 gigawatts” of compute capacity from the chipmaker’s AI systems to train and run the ChatGPT maker’s next generation of artificial intelligence models.

The first phase of the partnership kicks off in the second half of 2026, when Nvidia will begin deploying its next-generation Vera Rubin superchips.

One month later, OpenAI unveiled an agreement with Nvidia rival AMD that will see the Sam Altman-helmed AI firm purchase shares equivalent to roughly 10% of the chip company in exchange for upwards of 6 gigawatts of GPUs.

As with the Nvidia deal, AMD will supply OpenAI with its next-generation MI450 AI chips beginning in the second half of 2026.

OpenAI wasn’t done making moves just yet, though. This week, it let fly with its latest news, a 10 gigawatt transaction with Broadcom through which the two will co-develop custom chips to run OpenAI’s AI models and services.

OpenAI will design the accelerators and systems, and Broadcom will develop and deploy them.

All told, OpenAI has signed deals worth up to 26 gigawatts of GPUs over the life of the three agreements. One gigawatt of electricity is enough to power 800,000 homes, according to Reuters. Those 26 gigawatts then work out to roughly 20.8 million homes.
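The gigawatt-to-homes conversion above is a simple tally, sketched here with the per-deal figures cited in this article and the Reuters rule of thumb of 800,000 homes per gigawatt. The dictionary and constant names are illustrative.

```python
# Rule of thumb cited in the text (Reuters): homes powered by one gigawatt.
HOMES_PER_GIGAWATT = 800_000

# Upper-bound compute capacity per deal, in gigawatts, as reported in the text.
deals_gw = {
    "Nvidia": 10,
    "AMD": 6,
    "Broadcom": 10,
}

total_gw = sum(deals_gw.values())
equivalent_homes = total_gw * HOMES_PER_GIGAWATT

print(f"Total capacity: {total_gw} GW")
print(f"Equivalent homes: {equivalent_homes / 1e6:.1f} million")
```

The tally covers only the three chip agreements; the Oracle capacity described separately in this article is not included.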

OpenAI has also signed an agreement with Oracle as part of the company’s Stargate Project. Worth more than $300 billion, the deal, announced in July and fully revealed in September, is one of the AI giant’s most ambitious ventures and will give OpenAI an additional 4.5 gigawatts of GPUs over the next five years.

……………………………………………………………………………………………………………………

Oracle isn’t just working with OpenAI. On Tuesday, the cloud computing company said it will purchase upwards of 50,000 AMD GPUs starting with its MI450 chips in the second half of 2026.

Oracle also reportedly signed an earlier deal to purchase $40 billion worth of Nvidia chips for OpenAI’s Stargate Project, according to the Financial Times.

https://finance.yahoo.com/news/nvidia-stock-jumps-on-100-billion-openai-investment-as-huang-touts-biggest-ai-infrastructure-project-in-history-171740509.html

Like other large tech companies, Google is pouring tens of billions of dollars into AI development. It lifted its estimates for capital expenditures this year to a range of $91 billion to $93 billion, up from $52.5 billion in 2024.

The company said it expects a substantial increase in capital expenditures next year. Much of the money will be used to build data centers to develop and run AI models.

Google’s cloud division, which sells computing power from its data centers, has grown as a result of the race to develop AI. The cloud unit had $15.2 billion in quarterly revenue, up 34% from the same quarter last year.

“The gen AI paradox”:

In a June 2025 study by consulting firm McKinsey, almost 80% of companies reported using generative A.I., but about the same number reported that the tools had not significantly affected their earnings. At the heart of this paradox is an imbalance between “horizontal” (enterprise-wide) copilots and chatbots—which have scaled quickly but deliver diffuse, hard-to-measure gains—and more transformative “vertical” (function-specific) use cases—about 90% of which remain stuck in pilot mode.

https://www.mckinsey.com/capabilities/quantumblack/our-insights/seizing-the-agentic-ai-advantage

It’s unlikely that big established companies will be able to substitute A.I. for large numbers of workers over the next year or two. One reason is that big companies are by their nature plodding and bureaucratic when reimagining their work processes. The McKinsey report observed that many companies’ flirtation with A.I. had involved “a proliferation of disconnected micro-initiatives” that suffered from “limited coordination.” A study released this summer by researchers at M.I.T. reached a similar conclusion, finding that industries other than technology and media showed “little structural change” as a result of A.I.

https://www.nytimes.com/2025/11/07/business/layoffs-ai-replacement.html

From Nov 8, 2025 NY Times:

According to an investor offering sheet obtained by DealBook, Blackstone is on the cusp of closing a $3.46 billion commercial-mortgage-backed securities (C.M.B.S.) offering to refinance debt held by QTS, the biggest player in the artificial intelligence infrastructure market. It would be the largest deal of its type this year in a fast-accelerating market. (Blackstone declined to comment.)

The bonds would be backed by 10 data centers in six markets (including Atlanta, Dallas and Norfolk, Va.) that together consume enough energy to power Burlington, Vt., for half a decade.

Blackstone’s offering is part of the latest push in the A.I. infrastructure financing blitz. According to McKinsey, $7 trillion in data center investment will be required by 2030 to keep up with projected demand. Google, Meta, Microsoft and Amazon have together spent $112 billion on capital expenditures in the past three months alone.

The sheer scale of spending is spooking investors: Meta’s stock tumbled 11 percent after the company revealed its aggressive capital expenditure plans last week, and tech stocks have sold off this week on overvaluation fears.

Now, the tech giants are turning to financing maneuvers that may add to the risk. To obtain the capital they need, hyperscalers have leveraged a growing list of complex debt-financing options, including corporate debt, securitization markets, private financing and off-balance-sheet vehicles. That shift is fueling speculation that A.I. investments are turning into a game of musical chairs whose financial instruments are reminiscent of the 2008 financial crisis.

Big tech companies are looking for new sources of financing. While Meta, Microsoft, Amazon and Google previously relied on their own cash flow to invest in data centers, more recently they’ve turned to loans. To diversify their debt, they’re repackaging much of it as asset-backed securities (A.B.S.). About $13.3 billion in A.B.S. backed by data centers has been issued across 27 transactions this year, a 55 percent increase over 2024.
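As a rough check on those A.B.S. figures, the short Python sketch below backs out what they imply. It assumes the "55 percent increase" is measured against a comparable 2024 total (the article does not say whether that is full-year or year-to-date), and the helper name `implied_base` is purely illustrative.

```python
def implied_base(current: float, pct_increase: float) -> float:
    """Back out the prior-period value from a current value and a percent increase."""
    return current / (1 + pct_increase / 100)

# Figures quoted in the article (in $ billions).
abs_2025 = 13.3      # data-center A.B.S. issued so far this year
growth_pct = 55      # stated increase over 2024
deals_2025 = 27      # number of transactions this year

# Assumption: the 55% compares like-for-like totals.
abs_2024 = implied_base(abs_2025, growth_pct)
avg_deal_2025 = abs_2025 / deals_2025

print(f"Implied 2024 issuance: ${abs_2024:.1f} billion")
print(f"Average 2025 deal size: ${avg_deal_2025 * 1000:.0f} million")
```

Under that assumption, the implied 2024 figure is roughly $8.6 billion, and the average 2025 deal is just under $500 million.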

If investors want to buy data center A.B.S., they have two options, according to Sarah McDonald, a senior vice president in the capital solutions group at Goldman Sachs: They can invest in a data center that has one tenant, like a hyperscaler, or in a co-location data center, which has thousands of smaller tenants. The former is an investment-grade tenant with a long-term lease, but the risk is highly concentrated; the latter is most likely renting out to noninvestment-grade tenants with short-term leases, but the investment is extremely diversified.

Digital infrastructure “is something that investors have a huge appetite for,” McDonald said.

Despite the increase in popularity, data center securities are just a small slice of the A.B.S. market, which is dominated by credit card, auto, consumer and student loans.

Blackstone’s $3.46 billion C.M.B.S. offering may seem like small potatoes compared with some other debt-fueled deals, such as Meta’s $30 billion corporate offering to finance its data center in Louisiana. But it’s unprecedented for the C.M.B.S. market, where issuance for data-center-backed deals was just $3 billion for all of 2024.

“They realize how much cash they’re going to need, so they’re getting the C.M.B.S. market comfortable with this type of asset,” said Dan McNamara, the founder and chief investment officer of Polpo Capital, a hedge fund that focuses on C.M.B.S. He added that while most traders in the market were well versed in assets like office space or industrial buildings, with data centers, “it’s not traditional ‘bricks and sticks’ commercial real estate.”

To complicate matters further, the share of single-asset-single-borrower securities (S.A.S.B.) — for example, the assets inside the bond being sold are all from the same company or a single data center — is rising, with 13 percent of all S.A.S.B. deals coming from data centers, according to Goldman Sachs.

“It’s one company, and these assets are quite similar. If there’s a problem with A.I. data centers, like if their current chips are obsolete in five years, you could have big losses in these deals,” McNamara said. “That’s the knock on S.A.S.B.: When things go bad, they go really bad.”

https://www.nytimes.com/2025/11/08/business/dealbook/debt-has-entered-the-ai-boom.html

Laura Chambers, CEO of Mozilla—maker of the Firefox browser—sees the situation as a classic, straightforward bubble. Funding is abundant, it is easier than ever to make low-grade products, and most AI companies are running at a loss, she says. “Yes. It’s really easy to build a whole bunch of stuff, and so people are building a whole bunch of stuff, but not all of that will have traction. So the amount of stuff coming out versus the amount of stuff that’s going to [be sustainable] is probably higher than it’s ever been. I mean, I can build an app in four hours now. That would have taken me six months to do before. So there’s a lot of junk being built very, very quickly, and only a part of that will come through. So that’s one piece of the bubble,” she said.

“I think the most interesting piece is monetization, though. All the AI companies, all these AI browsers, are running at a massive loss. At some point that isn’t sustainable, and so they’re going to have to figure out how to monetize.”

Babak Hodjat, chief AI officer at Cognizant, said he believed diminishing returns were setting in for large language models. The DeepSeek launch earlier this year, in which a Chinese company released an LLM comparable to ChatGPT for a fraction of the cost, was a good example of this. Building AI was once a huge, expensive, and difficult undertaking. But today, many AI use-cases (such as custom-built, task-specific AI agents) don’t need huge models underpinning them, he said.

“The bulk of the money that you see—and people talk about a bubble—is going into commercial companies that are actually building large language models. I think that technology is starting to be commoditized. You don’t really need to use that big of a large language model, but those guys are taking money because they need a lot of compute capacity. They need a lot of data. And their valuation is based on, you know, bigger is better. Which is not necessarily the case,” he told Fortune.

https://www.aol.com/finance/tech-execs-admit-ai-bubble-141038315.html

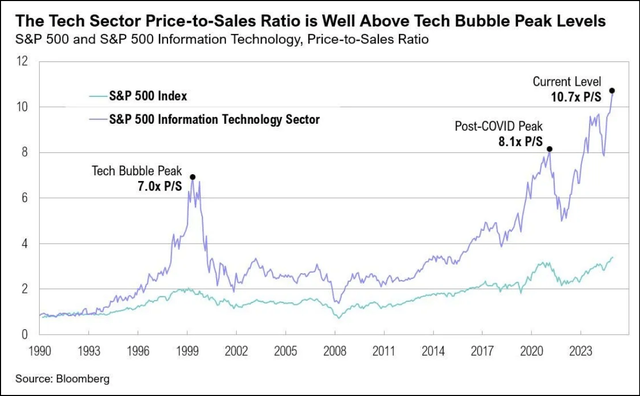

Fears of an AI bubble fueled stock market volatility in recent weeks as big tech valuations stretched and Wall Street’s top executives warned of a market correction. Global AI-related investments are projected to exceed $4 trillion by 2030, reflecting investor zeal to capitalize on a technology that surged in popularity after ChatGPT was launched three years ago. Record spending by big tech persists despite skepticism about a near-term payoff as many organizations report zero measurable return from billions in capital investment. Tech sector valuations have soared to dot-com era levels – and, based on price-to-sales ratios, well beyond them. Some of AI’s biggest proponents acknowledge the fact that valuations are overinflated, including OpenAI chairman Bret Taylor:

“AI will transform the economy… and create huge amounts of economic value in the future,” Taylor told The Verge. “I think we’re also in a bubble, and a lot of people will lose a lot of money.”

Alphabet’s (GOOG) (GOOGL) Sundar Pichai sees “elements of irrationality” in the current scale of AI investing, not unlike the excessive investment during the dot-com boom. If the AI bubble bursts, Pichai added, no company will be immune, including Alphabet, despite its breakthrough technology, Gemini, fueling gains.

Big tech and AI start-ups like OpenAI and Anthropic are leaning heavily on circular deals and debt to finance AI spending, which has raised eyebrows on Wall Street.

The top five hyperscalers have raised a record $108B worth of debt in 2025 – more than 3x the average over the past nine years, according to data compiled by Bloomberg Intelligence. Moreover, some firms are turning to off-balance-sheet entities, including special-purpose vehicles (SPVs). These structures have a checkered history going back to Enron, D.A. Davidson analyst Gil Luria observed. A slowdown in AI demand could render the debt tied to these SPVs “worthless,” Luria warned, potentially triggering another financial crisis.

AI’s rapid expansion has masked some underlying economic issues. As US investment in AI-related industries surged over the past five years, non-AI investment remained stagnant, a Deutsche Bank research report revealed. And, outside of data centers, economic demand has been weak. In fact, the US would be close to a recession this year without technology-related spending, the report added. Meanwhile, a front-loading of chip orders has helped offset some of the tariff-driven constraints on global trade.

https://seekingalpha.com/article/4848572-5-top-stocks-for-ai-fatigue

SoftBank races to fulfill $22.5 billion funding commitment to OpenAI by year-end, sources say

Japan’s SoftBank Group is racing to close a $22.5 billion funding commitment to OpenAI by year-end through an array of cash-raising schemes, including a sale of some investments, and could tap its undrawn margin loans borrowed against its valuable ownership in chip firm Arm Holdings, sources said.

The “all-in” bet on OpenAI is among the biggest yet by SoftBank CEO Masayoshi Son, as the Japanese billionaire seeks to improve his firm’s position in the race for artificial intelligence. To come up with the money, Son has already sold SoftBank’s entire $5.8 billion stake in AI chip leader Nvidia (NVDA.O) and offloaded $4.8 billion of its T-Mobile US stake.

Son has slowed most other dealmaking at SoftBank’s Vision Fund to a crawl, and any deal above $50 million now requires his explicit approval, two of the sources told Reuters.

Son’s firm is working to take public its payments app operator, PayPay. The initial public offering, originally expected this month, was pushed back due to the 43-day-long U.S. government shutdown, which ended in November. PayPay’s market debut, likely to raise more than $20 billion, is now expected in the first quarter of next year, according to one direct source and another person familiar with the efforts.

The Japanese conglomerate is also looking to cash out some of its holdings in Didi Global (92Sy.D), the operator of China’s dominant ride-hailing platform, which is looking to list its shares in Hong Kong after a regulatory crackdown forced it to delist in the U.S. in 2021, a source with direct knowledge said. Investment managers at SoftBank’s Vision Fund are being directed toward the OpenAI deal, two of the above sources said.

https://www.reuters.com/business/media-telecom/softbank-races-fulfill-225-billion-funding-commitment-openai-by-year-end-sources-2025-12-19/