
Custom AI Chips: Powering the next wave of Intelligent Computing

by the Indxx team of market researchers with Alan J Weissberger

The Market for AI Related Semiconductors:

Several market research firms and banks forecast that revenue from AI-related semiconductors will grow at about 18% annually over the next few years—five times faster than non-AI semiconductor market segments.

- IDC forecasts that global AI hardware spending, including chip demand, will grow at an annual rate of 18%.

- Morgan Stanley analysts predict that AI-related semiconductor revenue at one specific company, Taiwan Semiconductor (TSMC), will grow at an 18% annual rate.

- Infosys notes that data center semiconductor sales are projected to grow at an 18% CAGR.

- MarketResearch.biz and the IEEE IRDS predict an 18% annual growth rate for AI accelerator chips.

- Citi also forecasts aggregate chip sales for potential AI workloads to grow at a CAGR of 18% through 2030.

AI-focused chips are expected to represent nearly 20% of global semiconductor demand in 2025, contributing approximately $67 billion in revenue [1]. A separate estimate projects that the global AI chip market will reach $40.79 billion in 2025 [2] and continue expanding rapidly toward $165 billion by 2030.
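As a rough cross-check, the growth rate implied by the $40.79 billion (2025) and $165 billion (2030) figures can be computed with the standard compound annual growth rate formula. This is only a sketch: the function name `implied_cagr` is illustrative, and treating the span as exactly five years is an assumption.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the text, in USD billions.
market_2025 = 40.79
market_2030 = 165.0

# Assumption: 2025 -> 2030 is treated as five full years of compounding.
cagr = implied_cagr(market_2025, market_2030, years=5)
print(f"Implied CAGR 2025-2030: {cagr:.1%}")
```

Under these assumptions the implied rate comes out at roughly 32% per year, well above the 18% figures quoted earlier. The gap most likely reflects different sources and different definitions of the "AI chip" market, so the numbers should not be compared directly.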

…………………………………………………………………………………………………………………………………………………

Types of AI Custom Chips:

Artificial intelligence is advancing at a speed that traditional computing hardware can no longer keep pace with. To meet the demands of massive AI models, lower latency, and higher computing efficiency, companies are increasingly turning to custom AI chips: purpose-built processors optimized for neural networks, training, and inference workloads.

These AI chips range from Application Specific Integrated Circuits (ASICs) and Field-Programmable Gate Arrays (FPGAs) to Neural Processing Units (NPUs) and Google’s Tensor Processing Units (TPUs). They are optimized for core AI tasks like matrix multiplications and convolutions, delivering far higher performance-per-watt than CPUs or GPUs. This efficiency is key as AI workloads grow exponentially with the rise of Large Language Models (LLMs) and generative AI.

OpenAI – Broadcom Deal:

Perhaps the biggest custom AI chip effort is the OpenAI partnership with Broadcom, a multi-year, multi-billion dollar deal announced in October 2025. In this arrangement, OpenAI will design the hardware and Broadcom will develop custom chips that integrate AI model knowledge directly into the silicon for efficiency.

Here’s a summary of the partnership:

- OpenAI designs its own AI processors (accelerators) and systems, embedding its AI insights directly into the hardware. Broadcom develops and deploys these custom chips and the surrounding infrastructure, using its Ethernet networking solutions to scale the systems.

- Massive Scale: The agreement covers 10 gigawatts (GW) of AI compute, with deployments expected over four years, potentially extending to 2029.

- Cost Savings: This custom silicon strategy aims to significantly reduce costs compared to off-the-shelf Nvidia or AMD chips, potentially saving 30-40% on large-scale deployments.

- Strategic Goal: The collaboration allows OpenAI to build tailored hardware to meet the intense demands of developing frontier AI models and products, reducing reliance on other chip vendors.

AI Silicon Market Share of Key Players:

- Nvidia, with its extremely popular AI GPUs and CUDA software ecosystem, is expected to maintain its market leadership. It currently holds an estimated 86% share of the AI GPU market segment according to one source [2]. Others put Nvidia’s AI chip market share between 80% and 92%.

- AMD holds a smaller, but growing, AI chip market share, with estimates placing its discrete GPU market share around 4% to 7% in early to mid-2025. AMD is projected to grow its AI chip division significantly, aiming for a double-digit share with products like the MI300X. In response to the extraordinary demand for advanced AI processors, AMD’s Chief Executive Officer, Dr. Lisa Su, presented a strategic initiative to the Board of Directors: to pivot the company’s core operational focus towards artificial intelligence. Dr. Su articulated the view that the “insatiable demand for compute” represented a sustained market trend. AMD’s strategic reorientation has yielded significant financial returns; AMD’s market capitalization has nearly quadrupled, surpassing $350 billion [1]. Furthermore, the company has successfully executed high-profile agreements, securing major contracts to provide cutting-edge silicon solutions to key industry players, including OpenAI and Oracle.

- Intel accounts for approximately 1% of the discrete GPU market, but is focused on expanding its presence in the AI training accelerator market with its Gaudi 3 platform, where it aims for an 8.7% share by the end of 2025. The former microprocessor king has recently invested heavily in both its design and manufacturing businesses and is courting customers for its advanced data-center processors.

- Qualcomm, which is best known for designing chips for mobile devices and cars, announced in October that it would launch two new AI accelerator chips. The company said the new AI200 and AI250 are distinguished by their very high memory capabilities and energy efficiency.

Big Tech Custom AI chips vs Nvidia AI GPUs:

Big tech companies, including Google, Meta, Amazon, and Apple, are designing their own custom AI silicon to reduce costs, accelerate performance, and scale AI across industries. Yet nearly all rely on TSMC for manufacturing, thanks to its leadership in advanced chip fabrication technology [3].

- Google recently announced Ironwood, its 7th-generation Tensor Processing Unit (TPU), a major AI chip for LLM training and inference. Google claims more than 4x the training and inference performance of the previous-generation (6th gen) Trillium TPU. Ironwood scales to super-pods of up to 9,216 interconnected chips, providing huge computational power for demanding AI workloads like Gemini. It is optimized for high-volume, low-latency AI inference, handling complex thinking models and real-time chatbots efficiently. By powering complex AI at scale for Google Cloud and major partners like Meta, Ironwood challenges Nvidia’s dominance.

- Meta is in advanced talks to rent and purchase large quantities of Google’s custom AI chips (TPUs), starting with cloud rentals in 2026 and moving to direct purchases for its data centers in 2027, according to a TechRadar article. The multi-billion dollar deal would help Meta diversify beyond Nvidia and give it access to Google’s specialized silicon for workloads like AI model inference, signaling a major shift in big tech’s chip strategy that could reshape AI infrastructure.

- According to a Wall Street Journal report published on December 2, 2025, Amazon’s new Trainium3 custom AI chip presents a challenge to Nvidia’s market position by providing a more affordable option for AI development. Amazon said Trainium3, produced by AWS’s Annapurna Labs custom-chip design business, is four times as fast as its previous generation of AI chips and can reduce the cost of training and operating AI models by up to 50% compared with systems that use equivalent graphics processing units (GPUs). AWS acquired Israeli startup Annapurna Labs in 2015 and began designing chips to power AWS’s data-center servers, including network security chips, central processing units, and later its AI processor series, known as Inferentia and Trainium. “The main advantage at the end of the day is price performance,” said Ron Diamant, an AWS vice president and the chief architect of the Trainium chips. He added that his main goal is giving customers more options for different computing workloads. “I don’t see us trying to replace Nvidia,” Diamant said.

- Interestingly, many of the biggest buyers of Amazon’s chips are also Nvidia customers. Chief among them is Anthropic, which AWS said in late October is using more than one million Trainium2 chips to build and deploy its Claude AI model. Nvidia announced a month later that it was investing $10 billion in Anthropic as part of a massive deal to sell the AI firm computing power generated by its chips.


Other AI Silicon Facts and Figures:

- Edge AI chips are forecast to reach $13.5 billion in 2025, driven by IoT and smartphone integration.

- AI accelerators based on ASIC designs are expected to grow by 34% year-over-year in 2025.

- Automotive AI chips are set to surpass $6.3 billion in 2025, thanks to advancements in autonomous driving.

- Google’s TPU v5p reached 30% faster matrix math throughput in benchmark tests.

- U.S.-based AI chip startups raised over $5.1 billion in venture capital in the first half of 2025 alone.

Conclusions:

Custom silicon is now essential for deploying AI in real-world applications such as automation, robotics, healthcare, finance, and mobility. As AI expands across every sector, these purpose-built chips are becoming the true backbone of modern computing, driving a hardware race that is just as important as advances in software. More and more AI firms are seeking to diversify their suppliers by buying chips and other hardware from companies other than Nvidia. Cloud providers gain advantages such as cost-effectiveness, specialization, lower power consumption, and strategic independence from developing their own in-house AI silicon. By developing their own chips, hyperscalers can create a vertically integrated AI stack (hardware, software, and cloud services) optimized for their specific internal workloads and cloud platforms. This allows them to tailor performance precisely to their needs, potentially achieving better total cost of ownership (TCO) than with general-purpose Nvidia GPUs.

However, Nvidia is convinced it will retain a huge lead in selling AI silicon. In a post on X, Nvidia wrote that it was “delighted by Google’s success with its TPUs,” before adding that Nvidia “is a generation ahead of the industry” and is “the only platform that runs every AI model and does it everywhere computing is done.” The company said its chips offer “greater performance, versatility, and fungibility” than more narrowly tailored custom chips made by Google and AWS.

The race is far from over, but we can surely expect to see more competition in the AI silicon arena.

………………………………………………………………………………………………………………………………………………………………………………….

Links for Notes:

2. https://sqmagazine.co.uk/ai-chip-statistics/

3. https://www.ibm.com/think/news/custom-chips-ai-future

References:

https://www.wsj.com/tech/ai/amazons-custom-chips-pose-another-threat-to-nvidia-8aa19f5b

https://www.wsj.com/tech/ai/nvidia-ai-chips-competitors-amd-broadcom-google-amazon-6729c65a

AI infrastructure spending boom: a path towards AGI or speculative bubble?

OpenAI and Broadcom in $10B deal to make custom AI chips

Reuters & Bloomberg: OpenAI to design “inference AI” chip with Broadcom and TSMC

RAN silicon rethink – from purpose built products & ASICs to general purpose processors or GPUs for vRAN & AI RAN

Dell’Oro: Analysis of the Nokia-NVIDIA-partnership on AI RAN

Cisco CEO sees great potential in AI data center connectivity, silicon, optics, and optical systems

Expose: AI is more than a bubble; it’s a data center debt bomb

China gaining on U.S. in AI technology arms race- silicon, models and research

Amazon’s Jeff Bezos at Italian Tech Week: “AI is a kind of industrial bubble”

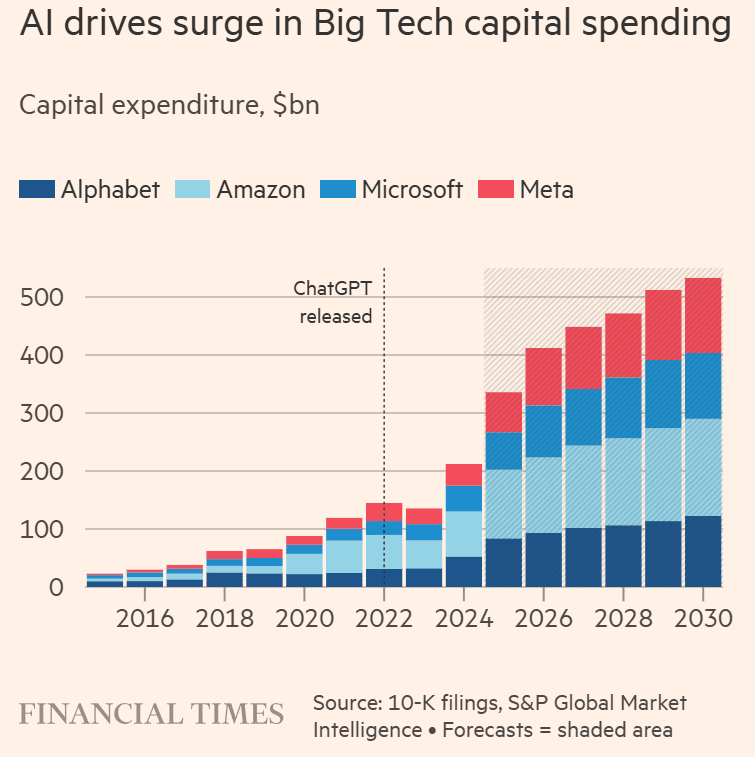

Tech firms are spending hundreds of billions of dollars on advanced AI chips and data centers, not just to keep pace with a surge in the use of chatbots such as ChatGPT, Gemini and Claude, but to make sure they’re ready to handle a more fundamental and disruptive shift of economic activity from humans to machines. The final bill may run into the trillions. The financing is coming from venture capital, debt and, lately, some more unconventional arrangements that have raised concerns among top industry executives and financial asset management firms.

At Italian Tech Week in Turin on October 3, 2025, Amazon founder Jeff Bezos said of artificial intelligence: “This is a kind of industrial bubble, as opposed to financial bubbles.” He distinguished it from “bad” financial or housing bubbles, which cause harm. His comparison of the current AI boom to a historical “industrial bubble” highlights that, while speculative, it is rooted in real, transformative technology.

“It can even be good, because when the dust settles and you see who are the winners, societies benefit from those investors,” Bezos said. “That is what is going to happen here too. This is real, the benefits to society from AI are going to be gigantic.”

He noted that during bubbles, everything (both good and bad investments) gets funded. “Investors have a hard time in the middle of this excitement, distinguishing between the good ideas and the bad ideas,” Bezos said of the AI industry. “And that’s also probably happening today,” he added.

- A “good” kind of bubble: He explained that during industrial bubbles, excessive funding flows to both good and bad ideas, making it hard for investors to distinguish between them. However, the influx of capital spurs significant innovation and infrastructure development that ultimately benefits society once the bubble bursts and the strongest companies survive.

- Echoes of the dot-com era: Bezos drew a parallel to the dot-com boom of the 1990s, where many internet companies failed, but the underlying infrastructure—like fiber-optic cable—endured and led to the creation of companies like Amazon.

- Gigantic benefits: Despite the market frothiness, Bezos reiterated that AI is “real” and its benefits to society “are going to be gigantic.”

- Sam Altman (OpenAI): The OpenAI CEO has stated that he believes “investors as a whole are overexcited about AI.” In August, he told reporters the AI market was in a bubble. When bubbles happen, “smart people get overexcited about a kernel of truth,” Altman warned, drawing parallels with the dot-com boom. Still, he said his personal belief is “on the whole, this would be a huge net win for the economy.”

- David Solomon (Goldman Sachs): Also speaking at Italian Tech Week, the Goldman Sachs CEO warned that a lot of capital deployed in AI would not deliver returns and that a market “drawdown” could occur.

- Mark Zuckerberg (Meta): The Meta CEO has acknowledged that the rapid development of, and surging investment in, AI could form a bubble, potentially outpacing practical productivity and returns and risking a market crash. However, he would rather “misspend a couple hundred billion dollars” on AI development than be late to the technology.

- Lisa Shalett (Morgan Stanley Wealth Management): The chief investment officer warned that the AI stock boom was showing “cracks” and was likely closer to its end than its beginning. The firm cited concerns over increasing speculative investment and over free cash flow growth for the major cloud providers, or “hyperscalers,” which has turned negative. This is viewed as a key signal of the AI capital expenditure cycle’s maturity. Some analysts estimate this growth could shrink by about 16% over the next year.

Bezos’s remarks come as some analysts express growing fears of an impending AI market crash.

- Underlying technology is real: Unlike purely speculative bubbles, the AI boom is driven by a fundamental technology shift with real-world applications that will survive any market correction.

- Historical context: Some analysts believe the current AI bubble is on a much larger scale than the dot-com bubble due to the massive influx of investment.

- Significant spending: Business spending on AI is already at historic levels and is fueling economic growth, so a collapse in that spending could cause a broader economic slowdown.

- Potential for disruption: The AI industry faces risks such as diminishing returns for costly advanced models, increased competition, and infrastructure limitations related to power consumption.

Ian Harnett argues that the current bubble may be approaching its “endgame.” He wrote in the Financial Times:

“The dramatic rise in AI capital expenditure by so-called hyperscalers of the technology and the stock concentration in US equities are classic peak bubble signals. But history shows that a bust triggered by this over-investment may hold the key to the positive long-run potential of AI.

Until recently, the missing ingredient was the rapid build-out of physical capital. This is now firmly in place, echoing the capex boom seen in the late-1990s bubble in telecommunications, media and technology stocks. That scaling of the internet and mobile telephony was central to sustaining ‘blue sky’ earnings expectations and extreme valuations, but it also led to the TMT bust.”

Today’s AI capital expenditure (capex) is increasingly being funded by debt, marking a notable shift from the previous reliance on cash reserves. Tech giants initially used their substantial cash flows for AI infrastructure, but their massive and escalating spending has led them to rely more heavily on external financing to cover costs.

This is especially true of Oracle, which will have to increase its capex by almost $100 billion over the next two years for its deal to build out AI data centers for OpenAI. That’s an annualized growth rate of some 47%, even though Oracle’s free cash flow has already fallen into negative territory for the first time since 1990. According to a recent note from KeyBanc Capital Markets, Oracle may need to borrow $25 billion annually over the next four years.

This comes at a time when Oracle is already carrying substantial debt and is highly leveraged. As of the end of August, the company had around $82 billion in long-term debt, with a debt-to-equity ratio of roughly 450%. By comparison, Alphabet, the parent company of Google, reported a ratio of 11.5%, while Microsoft’s stood at about 33%. In July, Moody’s revised Oracle’s credit outlook to negative while affirming its Baa2 senior unsecured rating. This negative outlook reflects the risks associated with Oracle’s significant expansion into AI infrastructure, which is expected to lead to elevated leverage and negative free cash flow due to high capital expenditures. Caveat Emptor!
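To put the Oracle figures above in perspective, the short sketch below works through the arithmetic. It is a back-of-the-envelope illustration only. It assumes that none of the existing debt is repaid, that the KeyBanc borrowing estimate is realized in full, and that the quoted debt-to-equity ratio can be approximated as long-term debt divided by equity. The variable names are illustrative and do not come from any filing.

```python
# Figures quoted in the text, in USD billions.
long_term_debt = 82.0     # Oracle long-term debt as of the end of August
annual_borrowing = 25.0   # KeyBanc estimate of possible new borrowing per year
borrowing_years = 4       # horizon of the KeyBanc estimate
debt_to_equity = 4.50     # roughly 450%

# Assumption: the quoted ratio is approximately long-term debt / equity.
implied_equity = long_term_debt / debt_to_equity

# Assumption: all estimated borrowing occurs and no existing debt is repaid.
cumulative_borrowing = annual_borrowing * borrowing_years
pro_forma_debt = long_term_debt + cumulative_borrowing
debt_multiple = pro_forma_debt / long_term_debt

print(f"Implied equity:        ${implied_equity:.1f}B")
print(f"Cumulative borrowing:  ${cumulative_borrowing:.0f}B")
print(f"Pro forma debt:        ${pro_forma_debt:.0f}B ({debt_multiple:.2f}x current)")
```

Under these assumptions, four years of borrowing at the estimated pace would add about $100 billion and more than double the current long-term debt load.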

References:

https://fortune.com/2025/10/04/jeff-bezos-amazon-openai-sam-altman-ai-bubble-tech-stocks-investing/

https://www.ft.com/content/c7b9453e-f528-4fc3-9bbd-3dbd369041be

Can the debt fueling the new wave of AI infrastructure buildouts ever be repaid?

AI Data Center Boom Carries Huge Default and Demand Risks

Big tech spending on AI data centers and infrastructure vs the fiber optic buildout during the dot-com boom (& bust)

Gartner: AI spending >$2 trillion in 2026 driven by hyperscalers data center investments

Will the wave of AI generated user-to/from-network traffic increase spectacularly as Cisco and Nokia predict?

Analysis: Cisco, HPE/Juniper, and Nvidia network equipment for AI data centers

RtBrick survey: Telco leaders warn AI and streaming traffic to “crack networks” by 2030

https://fortune.com/2025/09/19/zuckerberg-ai-bubble-definitely-possibility-sam-altman-collapse/

https://finance.yahoo.com/news/why-fears-trillion-dollar-ai-130008034.html