Data Center Network Equipment

Dell’Oro: Data Center Switch market declined 9% YoY; SD-WAN market increased at slower rate than in 2019

Market research firm Dell’Oro Group reported today that the worldwide Data Center Switch market recorded its first decline in nine years, dropping 9 percent year-over-year in the first quarter. 1Q 2020 revenue level was also the lowest in three years. The softness was broad-based across all major branded vendors, except Juniper Networks and white box vendors. Revenue from white box vendors was propelled mainly by strong demand from Google and Amazon.

“The COVID-19 pandemic has created some positive impact on the market as some customers pulled in orders in anticipation of supply shortage and elongated lead times,” said Sameh Boujelbene, Senior Director at Dell’Oro Group. “Yet this upside dynamic was more than offset by the pandemic’s more pronounced negative impact on customer demand as they paused purchases due to macro-economic uncertainties. Supply constraints were not major headwinds during the first quarter but expected to become more apparent in the next quarter,” added Boujelbene.

Additional highlights from the 1Q 2020 Ethernet Switch – Data Center Report:

- The revenue decline was broad-based across all regions but was less pronounced in North America.

- We expect revenue in the market to decline by a high single-digit percentage in 2020, despite some pockets of strength from certain segments.

The Dell’Oro Group Ethernet Switch – Data Center Quarterly Report offers a detailed view of the market, including Ethernet switches for server access, server aggregation, and data center core. (Software is addressed separately.) The report contains in-depth market and vendor-level information on manufacturers’ revenue; ports shipped; average selling prices for both Modular and Fixed Managed and Unmanaged Ethernet Switches (1000 Mbps, 10, 25, 40, 50, 100, 200, and 400 GE); and regional breakouts. To purchase these reports, please contact us by email at [email protected].

…………………………………………………………………………………………………………………………………………………

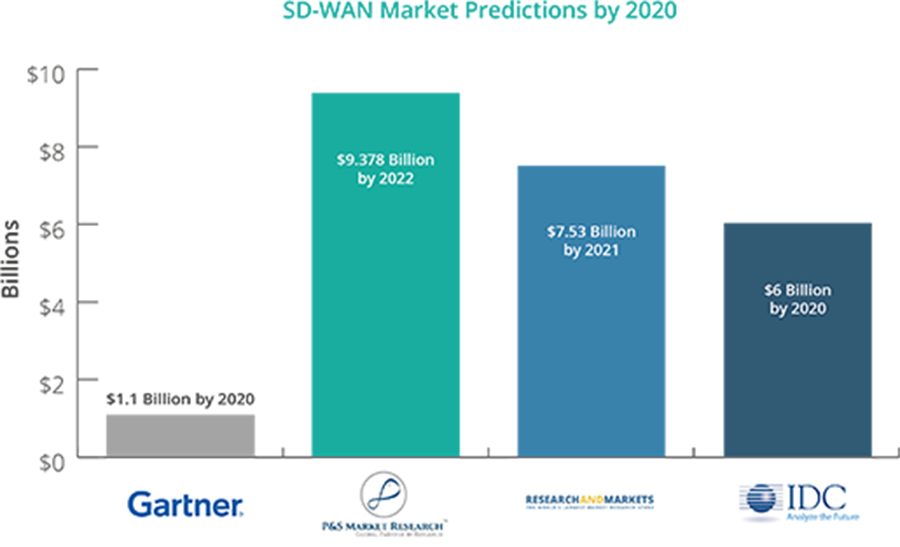

Separately, Dell’Oro Group reported that the market for software-defined (SD)-WAN equipment grew 24% year-over-year in the first quarter, significantly below the 64% growth seen in 2019. Citing supply chain issues created by the coronavirus pandemic, the market research firm’s Shin Umeda predicted the market will still post double-digit growth in 2020 despite “macroeconomic uncertainty.”

- Supply chain disruptions accounted for the majority of the Service Provider (SP) Router and CES Switch market decline in 1Q 2020.

- The SP Router and CES market in China showed a modest decline in 1Q 2020, but upgrades for 5G infrastructure are expected to drive strong demand over the rest of 2020.

Omdia: High-speed data-center Ethernet adapter market at $1.7 billion in 2019

Executive Summary:

The market for Ethernet adapters with speeds of 25 gigabits (25GE) and faster deployed by enterprises, cloud service providers and telecommunication network providers at data centers topped $1 billion for the first time in 2019, according to Omdia.

The total Ethernet adapter market size stood at $1.7 billion for the year. This result was in line with Omdia’s long term server and storage connectivity forecast. Factors driving that forecast include the growth in data sets, such as those computed by analytics algorithms looking for patterns, and the adoption of new software technologies like AI and ML which must examine large data sets to be effective, driving larger movement of data.

“Server virtualization and containerization reached new highs in 2019 and drove up server utilization. This increased server connectivity bandwidth requirements, and the need for higher speed Ethernet adapters,” said Vlad Galabov, principal analyst for data center IT at Omdia. “The popularization of data-intensive workloads, like analytics and AI, were also strong drivers for higher speed adapters in 2019.”

25GE Ethernet adapters represented more than 25 percent of total data-center Ethernet adapter ports and revenue in 2019, as reported by Omdia’s Ethernet Network Adapter Equipment Market Tracker. Omdia also found that the price per 25GE port continued to decline. A single 25GE port cost an average of $81 in 2019, a decrease of $9 from 2018.

Despite representing a small portion of the market, 100GE Ethernet adapters are increasingly deployed by cloud service providers and enterprises running high-performance computing clusters. Shipments and revenue for 100GE Ethernet adapter ports both grew by more than 60 percent in 2019. Each 100GE adapter port is also becoming more affordable. In 2019, an individual 100GE Ethernet adapter port cost $321 on average, a decrease of $34 from 2018.
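The per-port price figures above can be turned into percentage declines and a rough cost per gigabit. The following is only a sketch: the 2018 prices are derived from the stated decreases, and the function names are illustrative rather than anything Omdia publishes.

```python
# Average per-port prices for 2019 (USD) and the stated decrease from 2018,
# both taken from the paragraphs above.
PRICE_2019 = {"25GE": 81, "100GE": 321}
DECREASE_FROM_2018 = {"25GE": 9, "100GE": 34}
SPEED_GBPS = {"25GE": 25, "100GE": 100}


def percent_decline(price_2019, decrease):
    """Year-over-year decline as a percentage of the (derived) 2018 price."""
    price_2018 = price_2019 + decrease
    return 100.0 * decrease / price_2018


def cost_per_gigabit(price, speed_gbps):
    """Average port price divided by the nominal port speed."""
    return price / speed_gbps


for port, price in PRICE_2019.items():
    decline = percent_decline(price, DECREASE_FROM_2018[port])
    per_gbit = cost_per_gigabit(price, SPEED_GBPS[port])
    print(f"{port}: {decline:.1f}% cheaper than 2018, ${per_gbit:.2f} per Gb/s")
```

Under these figures both port types fell by roughly a tenth year over year, and the cost per gigabit of a 100GE port is close to that of a 25GE port.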

“Cloud service providers (CSPs) are leading the transition to faster networks as they run multi-tenant servers with a large number of virtual machines and/or containers per server. This is driving high traffic and bandwidth needs,” Galabov said. “Omdia expects telcos to invest more in higher speeds going forward—including 100GE—driven by network function virtualization (NFV) and increased bandwidth requirements from HD video, social media, AR/VR and expanded IoT use cases.”

The Ethernet outlook:

Omdia expects Ethernet adapter revenue to grow 21 percent on average each year through 2024. Despite the COVID-19 lockdown, the Ethernet adapter market is set to remain close to this growth curve in 2020.

Ethernet adapters that can provide complete on-card processing of network, storage or memory protocols, data-plane offload or that can offload server memory access will account for half of the total market revenue in 2020, or $1.1 billion. Ethernet adapters that have an onboard field-customizable processor, such as a field-programmable gate array (FPGA) or system on chip (SoC), will account for slightly more than a quarter of 2020 adapter revenue, totaling $557 million. Adapters that only provide Ethernet connectivity will make up a minority share of the market, at just $475 million.
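As a rough cross-check of the outlook figures, the segment revenues can be converted into shares and the 21 percent average growth rate compounded forward. This is a sketch under two assumptions that the source does not state explicitly: the $1.1 billion figure is treated as exactly 1,100 million, and 2019 ($1.7 billion) is used as the base year for the forecast.

```python
# 2020 revenue by adapter class as quoted above, in millions of USD.
SEGMENTS_2020_MUSD = {
    "offload": 1100,            # "$1.1 billion", rounded in the source
    "programmable": 557,
    "connectivity_only": 475,
}


def segment_shares(segments):
    """Return each segment's fraction of the summed segment revenue."""
    total = sum(segments.values())
    return {name: value / total for name, value in segments.items()}


def project(base, annual_growth, years):
    """Compound a base value forward at a constant annual growth rate."""
    return base * (1 + annual_growth) ** years


shares = segment_shares(SEGMENTS_2020_MUSD)
for name, share in shares.items():
    print(f"{name}: {share:.1%}")

# Assumption: 2019 revenue of $1.7 billion is the starting point.
for year in range(2020, 2025):
    print(year, f"${project(1.7, 0.21, year - 2019):.2f} billion")
```

The computed shares line up with the "half", "slightly more than a quarter" and "minority" descriptions in the text.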

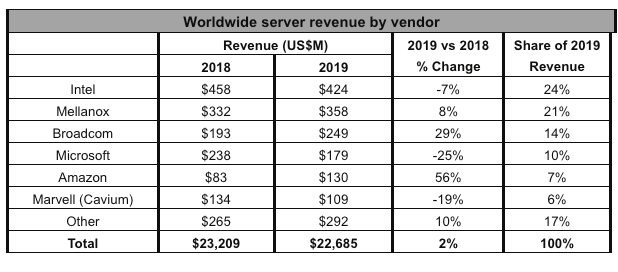

Intel maintains lead:

Looking at semiconductor vendor market share, Intel held 24 percent of the 2019 Ethernet adapter market, shipping adapters worth $424 million in 2019. This represents a 2.5-point decrease from 2018 that Omdia attributes to the aging Intel Ethernet adapter portfolio which consists primarily of 1GE and 10GE adapters with Ethernet connectivity only. Intel indicated it will introduce adapters with offload functionality in 2020 that will help it remain competitive in the market.

Mellanox (now part of NVIDIA) captured 21 percent of the 2019 Ethernet adapter market, a 1-point increase compared to 2018. The vendor reported strong growth of its 25GE and 100GE offload adapters driven by strong cloud service provider demand and growing demand among enterprises for 25GE networking.

Broadcom was the third largest Ethernet adapter vendor in 2019, commanding a 14 percent share of the market, an increase of 3 points from 2018. Broadcom’s revenue growth was driven by strong demand for high-speed offload and programmable adapters at hyperscale CSPs.
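The share figures above allow a back-of-the-envelope estimate of the total market and of each vendor's 2018 share. This is only a sketch: the shares are rounded in the source, so the derived revenue figures for Mellanox and Broadcom are approximations, not numbers reported by Omdia.

```python
# 2019 shares (percent) and point changes versus 2018, as quoted above.
SHARE_2019 = {"Intel": 24.0, "Mellanox": 21.0, "Broadcom": 14.0}
POINT_CHANGE = {"Intel": -2.5, "Mellanox": 1.0, "Broadcom": 3.0}
INTEL_REVENUE_MUSD = 424  # the only vendor revenue stated in the text


def implied_total(revenue, share_pct):
    """Total market size implied by one vendor's revenue and share."""
    return revenue / (share_pct / 100.0)


def share_2018(vendor):
    """2018 share recovered by undoing the stated point change."""
    return SHARE_2019[vendor] - POINT_CHANGE[vendor]


def estimated_revenue(vendor, total):
    """Approximate vendor revenue from its rounded share of the total."""
    return total * SHARE_2019[vendor] / 100.0


total = implied_total(INTEL_REVENUE_MUSD, SHARE_2019["Intel"])
print(f"Implied 2019 market: ${total:,.0f} million")
for vendor in SHARE_2019:
    print(vendor, f"2018 share {share_2018(vendor):.1f}%,",
          f"2019 revenue about ${estimated_revenue(vendor, total):,.0f} million")
```

The implied total is in line with the $1.7 billion market size cited earlier.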

Amazon AWS and Microsoft Azure continued to use in-house-developed Ethernet adapters. Given their large scale and the high value of their high-speed offload and programmable adapters, the companies cumulatively deployed Ethernet adapters worth over $300 million, according to Omdia. This made Microsoft and Amazon, respectively, the fourth and fifth largest makers of Ethernet adapters in 2019. As both service providers deploy 100GE adapters in larger numbers in 2020, Omdia expects them to continue to be key trendsetters in the market going forward.

About Omdia:

Omdia is a global technology research powerhouse, established following the merger of the research division of Informa Tech (Ovum, Heavy Reading and Tractica) and the acquired IHS Markit technology research portfolio*.

We combine the expertise of over 400 analysts across the entire technology spectrum, analyzing 150 markets publishing 3,000 research solutions, reaching over 14,000 subscribers, and covering thousands of technology, media & telecommunications companies.

Our exhaustive intelligence and deep technology expertise allow us to uncover actionable insights that help our customers connect the dots in today’s constantly evolving technology environment and empower them to improve their businesses – today and tomorrow.

………………………………………………………………………………………………………………………………………………..

Omdia is a registered trademark of Informa PLC and/or its affiliates. All other company and product names may be trademarks of their respective owners. Informa PLC registered in England & Wales with number 8860726, registered office and head office 5 Howick Place, London, SW1P 1WG, UK. Copyright © 2020 Omdia. All rights reserved.

*The majority of IHS Markit technology research products and solutions were acquired by Informa in August 2019 and are now part of Omdia.

TMR: Data Center Networking Market sees shift to user-centric & data-oriented business + CoreSite DC Tour

TMR Press Release edited by Alan J Weissberger, followed by CoreSite Data Center Talk & Tour for IEEE ComSocSCV and Power Electronics members

TMR Executive Summary and Forecast:

The global data center networking market is expected to emerge as highly competitive due to rising demand for networking components.

The major players operating in the global data center networking market include Hewlett Packard Enterprise, Cisco Systems, Inc., Arista Networks, Microsoft Corporation, and Juniper Networks. The key players are also pursuing business strategies such as mergers and acquisitions to improve their existing technologies, and they are investing heavily in research and development to sustain their lead in the market. In addition, these firms aim to improve their product portfolios in order to expand their global reach and gain an edge over their competitors.

The global data center networking market is likely to gain momentum as firms rapidly shift to more user-centric and data-oriented business models. According to a recent report by Transparency Market Research (TMR), the global data center networking market is expected to grow at a steady CAGR of 15.5% over the forecast period from 2017 to 2025. In 2016, the global market was valued at around US$63.05 bn, and it is projected to reach around US$228.40 bn by 2025.
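The forecast numbers can be sanity-checked with the standard compound annual growth rate formula. This is a sketch that assumes nine compounding periods, from the 2016 valuation to the 2025 projection; TMR does not spell out its exact convention.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def future_value(start_value, rate, years):
    """Value after compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1.0 + rate) ** years


# Figures quoted above, in billions of US dollars.
VALUE_2016 = 63.05
VALUE_2025 = 228.40
YEARS = 2025 - 2016  # assumption: nine compounding periods

print(f"Implied CAGR: {cagr(VALUE_2016, VALUE_2025, YEARS):.1%}")
print(f"2016 value grown at 15.5%: {future_value(VALUE_2016, 0.155, YEARS):.1f} bn")
```

With nine periods the implied rate comes out close to the stated 15.5%, which suggests the two endpoint values and the CAGR are mutually consistent.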

On the basis of component, the global data center networking market is segmented into services, software, and hardware. Among these, the hardware segment led the market in 2016 with around 52.0% of revenue. Nevertheless, the software segment, which is projected to post a higher CAGR than the other segments, is also expected to emerge as a key contributor to market growth. Geographically, North America was estimated to lead the global market in 2016. Nevertheless, Asia Pacific is likely to register the leading CAGR of 17.3% over the forecast period from 2017 to 2025.

Rising Demand for Networking Solutions to Propel Growth in Market

Increased demand for networking solutions has created a need for firms to turn their data centers into collective, automated resource centers, which provide greater flexibility to shift workloads from any cloud and so improve operational efficiency.

The rising number of internet users across the globe requires high-speed interfaces. Companies depend heavily on data centers to decrease operational costs and improve productivity.

Nevertheless, virtualization and rising demand for end-use gadgets are the major restraints likely to hamper growth in the data center networking market in the coming years. Rising usage of mobile devices and cloud services is also hindering steady progress in the market.

Popularity of Big Data to Add to Market Development in Future:

The rising popularity of big data and cloud services among both industry and consumers is anticipated to fuel development in the global data center networking market. Advantages such as low operational costs, flexibility, better security and safety, and improved performance are likely to boost market growth.

Disaster recovery and business continuity have simplified data center networking, saving companies both money and time. Financial advantages along with technology are likely to drive demand for data center networking and cloud computing.

Companies rely on data center solution providers to help them operate efficiently and effectively, with better productivity, higher profit, and lower prices. These goals require high-end networking technologies and upgraded server performance. They also require proper integration between a simplified networking framework and the servers to reach an optimum level of performance.

The study presented here is based on a report by Transparency Market Research (TMR) titled “Data Center Networking Market (Component Type – Hardware, Software, and Services; Industry Vertical – Telecommunications, Government, Retail, Media and Entertainment, BFSI, Healthcare, and Education) – Global Industry Analysis, Size, Share, Growth, Trends and Forecast 2017 – 2025.”

Get PDF Brochure at:

https://www.transparencymarketresearch.com/sample/sample.php?flag=B&rep_id=21257

Request PDF Sample of Data Center Networking Market:

https://www.transparencymarketresearch.com/sample/sample.php?flag=S&rep_id=21257

About TMR:

Transparency Market Research is a next-generation market intelligence provider, offering fact-based solutions to business leaders, consultants, and strategy professionals.

Our reports are single-point solutions for businesses to grow, evolve, and mature. Our real-time data collection methods, along with the ability to track more than one million high-growth niche products, are aligned with your aims. The detailed and proprietary statistical models used by our analysts offer insights for making the right decision in the shortest span of time. For organizations that require specific but comprehensive information, we offer customized solutions through ad hoc reports. These requests are delivered with the right combination of fact-oriented problem-solving methodologies and existing data repositories.

TMR believes that combining solutions for client-specific problems with the right research methodology is the key to helping enterprises reach the right decision.

Contact

Mr. Rohit Bhisey

Transparency Market Research

State Tower

90 State Street,

Suite 700,

Albany, NY – 12207

United States

Tel: +1-518-618-1030

USA – Canada Toll Free: 866-552-3453

Email: [email protected]

Website: https://www.transparencymarketresearch.com

Research Blog: http://www.europlat.org/

………………………………………………………………………………………………………..

CoreSite Data Center Tour:

On May 23, 2019, IEEE ComSocSCV and IEEE Power Electronics members were treated to a superb talk and tour of the CoreSite Multi-Tenant Data Center (MTDC) in Santa Clara, CA.

CoreSite is a Multi-Tenant Data Center owner that competes with Equinix. CoreSite offers the following types of Network Access for their MTDC colocation customers:

•Direct Access to Tier-1 and Eyeball Networks

•Access to Broad Range of Network Services (Transit/Transport/Dark Fiber)

•Direct Access to Public Clouds (Amazon, Microsoft, Google, etc)

•Direct Access to Optical Ethernet Fabrics

………………………………………………………….

CoreSite also provides POWER distribution and backup on power failures:

•Standby Generators

•Large Scale UPS

•Resilient Design

•Power Quality

•A/B Power Delivery

•99.999% Uptime

….and PHYSICAL SECURITY:

•24/7 OnSite Security Personnel

•Dual-Authentication Access

•IP DVR for All Facility Areas

•Perimeter Security

•Equipment Check-In/Out Process

•Access-Control Policies (Badge Deactivation, etc)

……………………………………………………………………………………………………..

There are 28 network operators and cloud service providers that have brought fiber into the CoreSite Santa Clara MTDC campus. The purpose of that is to enable customers to share fiber network/cloud access at a much higher speed and lower cost than would otherwise be realized via premises-based network/cloud access.

While the complete list of network and cloud service providers could not be disclosed, the network providers mentioned included Verizon, AT&T, CenturyLink, and Zayo. In addition, AWS Direct Connect, Microsoft Azure ExpressRoute, Alibaba Cloud, Google Cloud interconnection and other unnamed cloud providers were said to provide direct fiber-to-cloud connectivity for CoreSite’s Santa Clara MTDC customers.

Here’s how network connectivity is achieved within and outside the CoreSite MTDC:

The SMF or MMF from each customer’s colocation cage is physically routed (under the floor) to a fiber wiring cross-connect/patch panel maintained by CoreSite. The output fibers are then routed to a private room where the network/cloud providers maintain their own fiber optic gear (fiber optic multiplexers/switches, DWDM transponders and other fiber transmission equipment), which connects to the outside plant fiber optic cable(s) for each network/cloud services provider.

Outside plant fiber fault detection and restoration are done by each network/cloud provider, either via a mesh topology fiber optic network or via 1:1 or N:1 hot standby. CoreSite’s responsibility ends when it delivers the fiber to the provider cages. It does, however, have network engineers who are responsible for maintenance and troubleshooting in the DC when necessary.

As an alternative to private lines or private IP connections, CoreSite offers its Interconnect Gateway (SM), which provides enterprise customers with a dedicated, high-performance interconnection solution between their cloud and network service providers, while establishing a flexible IT architecture that allows them to adapt to market demands and rapidly evolving technologies.

CoreSite’s gateway directly integrates enterprises’ WAN architecture into CoreSite’s native cloud and carrier ecosystem using high-speed fiber and virtual interconnections. This solution includes:

-Private network connectivity to the CoreSite data center

-Dedicated cabinets and network hardware for routing, switching, and security

-Direct fiber and virtual interconnections to cloud and network providers

-Technical integration, 24/7/365 monitoring and management from a certified CoreSite Solution Partner

-Industry-leading SLA

Facebook’s F16 achieves 400G effective intra DC speeds using 100GE fabric switches and 100G optics, Other Hyperscalers?

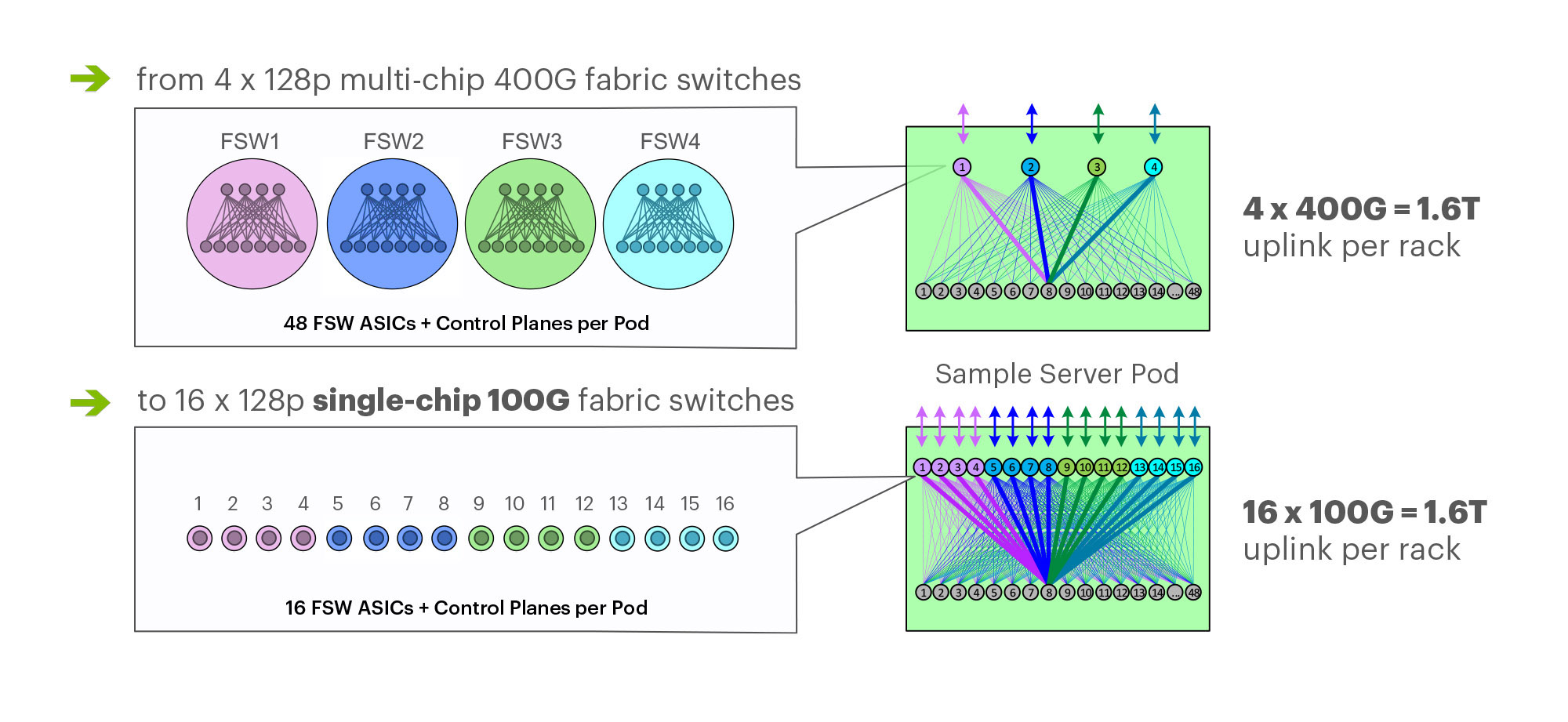

On March 14th at the 2019 OCP Summit, Omar Baldonado of Facebook (FB) announced a next-generation intra-data center (DC) fabric/topology called the F16. It has 4x the capacity of their previous DC fabric design using the same Ethernet switch ASIC and 100GE optics. FB engineers developed the F16 using mature, readily available 100G CWDM4-OCP optics (contributed by FB to OCP in early 2017), which in essence gives their data centers the same desired 4x aggregate capacity increase as 400G optical link speeds, but using 100G optics and 100GE switching.

F16 is based on the same Broadcom ASIC that was the candidate for a 4x-faster 400G fabric design – Tomahawk 3 (TH3). But FB uses it differently: Instead of four multichip-based planes with 400G link speeds (radix-32 building blocks), FB uses the Broadcom TH3 ASIC to create 16 single-chip-based planes with 100G link speeds (optimal radix-128 blocks). Note that 400G optical components are not easy to buy inexpensively at Facebook’s large volumes. 400G ASICs and optics would also consume a lot more power, and power is a precious resource within any data center building. Therefore, FB built the F16 fabric out of 16 128-port 100G switches, achieving the same bandwidth as four 128-port 400G switches would.
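The bandwidth equivalence described above is simple arithmetic, sketched below. The function name is illustrative; the plane counts, port counts and link speeds are the ones given in the text.

```python
def aggregate_gbps(units, ports_per_unit, port_speed_gbps):
    """Total bandwidth of `units` switches (or planes), in Gb/s."""
    return units * ports_per_unit * port_speed_gbps


# F16: sixteen 128-port switches at 100G per port.
f16 = aggregate_gbps(16, 128, 100)

# The 400G alternative: four 128-port switches at 400G per port.
alt_400g = aggregate_gbps(4, 128, 400)

# The same ASIC used as a radix-128 block at 100G or a radix-32 block at 400G.
asic_as_100g = aggregate_gbps(1, 128, 100)
asic_as_400g = aggregate_gbps(1, 32, 400)

print(f16, alt_400g)                # both 204800 Gb/s
print(asic_as_100g, asic_as_400g)   # both 12800 Gb/s
```

The two designs deliver the same aggregate bandwidth; the difference is that F16 reaches it with single-chip 100G switches instead of multichip 400G ones.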

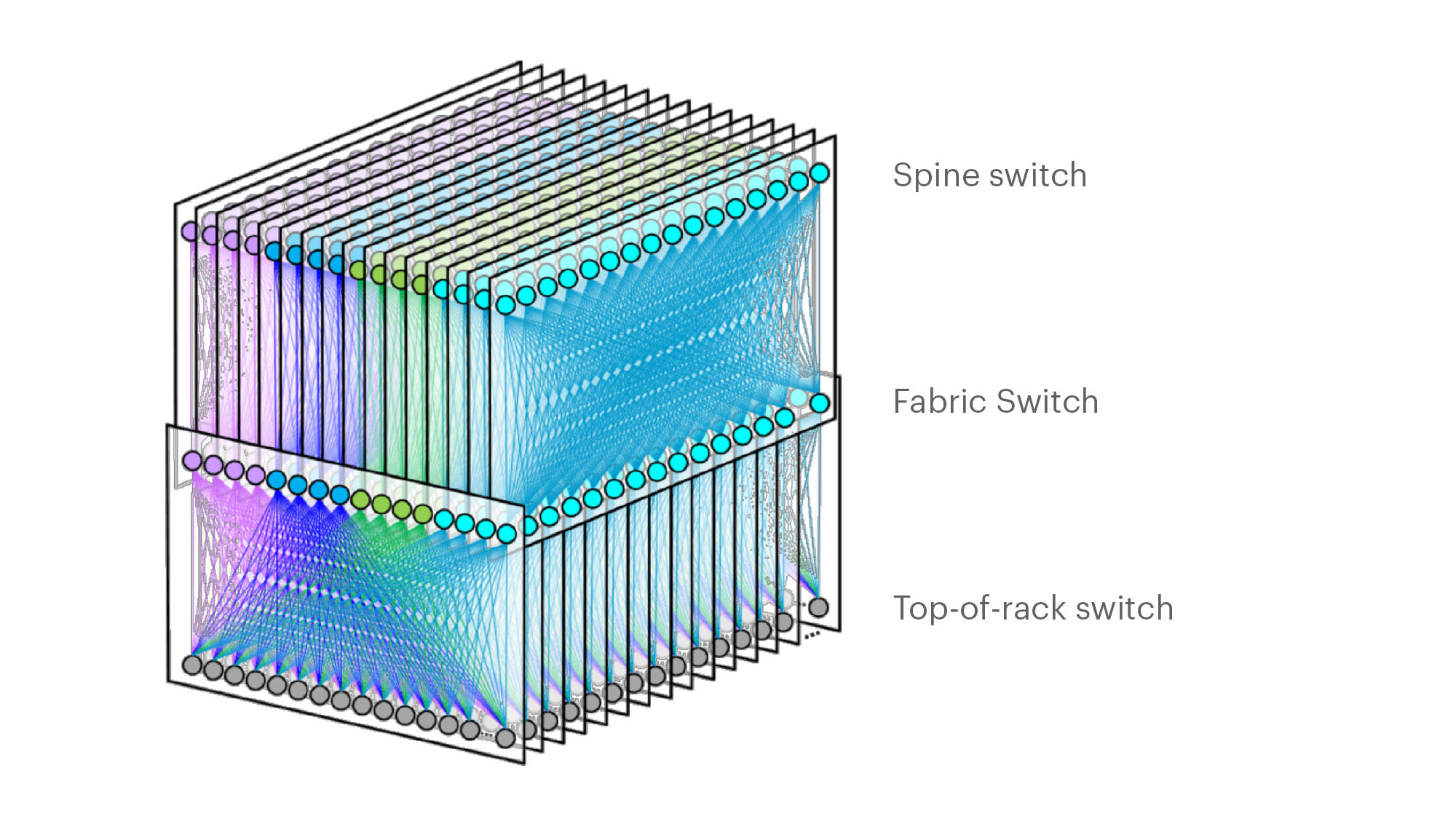

Below are some of the primary features of the F16 (also see two illustrations below):

-Each rack is connected to 16 separate planes. With FB Wedge 100S as the top-of-rack (TOR) switch, there is 1.6T uplink bandwidth capacity and 1.6T down to the servers.

-The planes above the rack comprise sixteen 128-port 100G fabric switches (as opposed to four 128-port 400G fabric switches).

-As a new uniform building block for all infrastructure tiers of fabric, FB created a 128-port 100G fabric switch, called Minipack – a flexible, single ASIC design that uses half the power and half the space of Backpack.

-Furthermore, a single-chip system allows for easier management and operations.
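The rack-level figures in the list above follow from the plane count. A minimal sketch, assuming one 100G uplink from the top-of-rack switch to each of the 16 planes (the text gives the 1.6T totals but not the per-link breakdown):

```python
PLANES = 16                 # each rack connects to 16 separate planes
UPLINK_SPEED_GBPS = 100     # assumption: one 100G link per plane
DOWNLINK_TOTAL_GBPS = 1600  # "1.6T down to the servers"


def uplink_capacity_gbps(planes, link_speed_gbps):
    """Total TOR uplink bandwidth across all planes."""
    return planes * link_speed_gbps


def oversubscription(downlink_gbps, uplink_gbps):
    """Ratio of server-facing to fabric-facing bandwidth (1.0 = non-blocking)."""
    return downlink_gbps / uplink_gbps


uplink = uplink_capacity_gbps(PLANES, UPLINK_SPEED_GBPS)
print(f"Uplink: {uplink / 1000:.1f}T")
print(f"Oversubscription: {oversubscription(DOWNLINK_TOTAL_GBPS, uplink):.1f}:1")
```

Under that assumption the rack has equal bandwidth up to the fabric and down to the servers.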

Facebook F16 data center network topology

………………………………………………………………………………………………………………………………………………………………………………………………..

Multichip 400 Gb/sec pod fabric switch topology vs. FB’s single-chip (Broadcom ASIC) F16 at 100 Gb/sec

…………………………………………………………………………………………………………………………………………………………………………………………………..

In addition to Minipack (built by Edgecore Networks), FB also jointly developed Arista Networks’ 7368X4 switch. FB is contributing both Minipack and the Arista 7368X4 to OCP. Both switches run FBOSS – the software that binds together all FB data centers. Of course the Arista 7368X4 will also run that company’s EOS network operating system.

FB said in a blog post that F16 is more scalable and simpler to operate and evolve, leaving its DCs better equipped to handle increased intra-DC throughput for the next few years. “We deploy early and often,” Baldonado said during his OCP 2019 session (video below). “The FB teams came together to rethink the DC network, hardware and software. The components of the new DC are F16 and HGRID as the network topology, Minipack as the new modular switch, and FBOSS software which unifies them.”

This author was very impressed with Baldonado’s presentation: excellent content and flawless delivery, with insights into and motivation for FB’s DC design methodology and testing!

References:

https://code.fb.com/data-center-engineering/f16-minipack/

………………………………………………………………………………………………………………………………….

Other Hyperscale Cloud Providers move to 400GE in their DCs?

Large hyperscale cloud providers initially championed 400 Gigabit Ethernet because of their endless thirst for networking bandwidth. Like so many other technologies that start at the highest end with the most demanding customers, the technology will eventually find its way into regular enterprise data centers. However, we’ve not seen any public announcement that it’s been deployed yet, despite its potential and promise!

Some large changes in IT and OT are driving the need to consider 400 GbE infrastructure:

- Servers are more packed in than ever. Whether it is hyper-converged, blade, modular or even just dense rack servers, the density is increasing. And every server features dual 10 Gb network interface cards or even 25 Gb.

- Network storage is moving away from Fibre Channel and toward Ethernet, increasing the demand for high-bandwidth Ethernet capabilities.

- The increase in private cloud applications and virtual desktop infrastructure puts additional demands on networks as more compute is happening at the server level instead of at the distributed endpoints.

- IoT and massive data accumulation at the edge are increasing bandwidth requirements for the network.

400 GbE can be split via a multiplexer into smaller increments, with the most popular options being 2 x 200 Gb, 4 x 100 Gb or 8 x 50 Gb. At the aggregation layer, as these new higher-speed connections increase the bandwidth per port, we will see a reduction in port density and simpler cabling requirements.
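The breakout options and the cabling point can be illustrated with a few lines of arithmetic. This is a sketch: the 3.2 Tb/s aggregation target is a hypothetical figure chosen for illustration, and counting ports is only one reading of the port-density remark above.

```python
import math

PARENT_SPEED_GBPS = 400

# (lanes, per-lane speed in Gb/s) for the breakout options named above.
BREAKOUTS = [(2, 200), (4, 100), (8, 50)]


def is_valid_breakout(lanes, lane_speed_gbps, parent_gbps=PARENT_SPEED_GBPS):
    """A breakout is valid when the lanes add up to the parent port speed."""
    return lanes * lane_speed_gbps == parent_gbps


def ports_needed(target_gbps, port_speed_gbps):
    """Minimum number of ports (and cables) to carry `target_gbps`."""
    return math.ceil(target_gbps / port_speed_gbps)


for lanes, speed in BREAKOUTS:
    print(f"{lanes} x {speed} Gb valid: {is_valid_breakout(lanes, speed)}")

TARGET_GBPS = 3200  # hypothetical aggregation-layer requirement
for speed in (100, 400):
    print(f"{speed}G ports for {TARGET_GBPS} Gb/s: {ports_needed(TARGET_GBPS, speed)}")
```

For the same aggregate bandwidth, moving from 100G to 400G ports cuts the number of ports and cables by a factor of four.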

Yet despite these advantages, none of the U.S.-based hyperscalers have announced that they have deployed 400GE within their DC networks as a backbone or to connect leaf-spine fabrics. We suspect they are all using 400G for Data Center Interconnect, but we don’t know what optics are used or whether the framing and OAM are Ethernet or OTN.

…………………………………………………………………………………………………………………………………………………………………….

In February, Google said it plans to spend $13 billion in 2019 to expand its data center and office footprint in the U.S. The investments include expanding the company’s presence in 14 states. The dollar figure surpasses the $9 billion the company spent on such facilities in the U.S. last year.

In the blog post, CEO Sundar Pichai wrote that Google will build new data centers or expand existing facilities in Nebraska, Nevada, Ohio, Oklahoma, South Carolina, Tennessee, Texas, and Virginia. The company will establish or expand offices in California (the Westside Pavilion and the Spruce Goose Hangar), Chicago, Massachusetts, New York (the Google Hudson Square campus), Texas, Virginia, Washington, and Wisconsin. Pichai predicts the activity will create more than 10,000 new construction jobs in Nebraska, Nevada, Ohio, Texas, Oklahoma, South Carolina, and Virginia. The expansion plans will put Google facilities in 24 states, including data centers in 13 communities. Yet there is no mention of what data networking technology or speed the company will use in its expanded DCs.

I believe Google is still designing all its own IT hardware (compute servers, storage equipment, switch/routers, and Data Center Interconnect gear other than the PHY layer transponders). It has announced designs for many AI processor chips that presumably go into IT equipment which it uses internally but doesn’t sell to anyone else. So Google does not appear to be using any OCP-specified open source hardware. That is in line with Amazon AWS, but in contrast to Microsoft Azure, which actively participates in OCP with its open-sourced SONiC now running on over 68 different hardware platforms.

It’s no secret that Google has built its own Internet infrastructure since 2004 from commodity components, resulting in nimble, software-defined data centers. The resulting hierarchical mesh design is standard across all its data centers. The hardware is dominated by Google-designed custom servers and Jupiter, the switch Google introduced in 2012. With its economies of scale, Google contracts directly with manufacturers to get the best deals. Google’s servers and networking software run a hardened version of the Linux open source operating system. Individual programs have been written in-house.

IHS Markit: Service Provider Data Center Growth Accelerates + Gartner on DC Networking Market Drivers

Service Provider Data Center Growth Accelerates, by Cliff Grossner, Ph.D., IHS Markit

Service providers are investing in their data centers (DCs) to improve scalability, deploy applications rapidly, enable automation, and harden security, according to the Data Center Strategies and Leadership Global Service Provider Survey from IHS Markit. Respondents are considering taking advantage of new options from server vendors such as ARM-based servers and parallel compute co-processors, allowing them to better match servers to their workloads. The workloads most deployed by service provider respondents were IT applications (including financial and on-line transaction processing), followed by ERP and generic VMs on VMware ESXi and Microsoft Hyper-V. Speed and support for network protocol virtualization and SDN are top service provider DC network requirements.

“Traditional methods for network provisioning to provide users with a quality experience, such as statically assigned priorities (QoS) in the DC network, are no longer effective. The DC network must be able to recognize individual application traffic flows and rapidly adjust priority to match the dynamic nature of application traffic in a resource-constrained world. New requirements for applications delivered on demand, coupled with the introduction of virtualization and DC orchestration technology, has kicked off an unprecedented transformation that began on servers and is now reaching into the DC network and storage,” said Cliff Grossner, Ph.D., senior research director and advisor for cloud and data center at IHS Markit, a world leader in critical information, analytics and solutions.

“Physical networks will always be needed in the DC to provide the foundation for the high-performance connectivity demanded of today’s applications. Cisco, Juniper, Huawei, Arista, and H3C were identified as the top five DC Ethernet switch vendors by service provider respondents ranking the top three vendors in each of eight selection criteria. These Ethernet switch providers have a long history as hardware vendors. When selecting a vendor, respondents are heavily weighing factors such as product reliability, service and support, pricing model, and security,” said Grossner.

More Service Provider Data Center Strategies Highlights:

· Respondents indicate they expect a 1.5x increase in the average number of physical servers in their DCs by 2019.

· Top DC investment drivers are scalability (a driver for 93% of respondents), rapid application deployment (87%), automation (73%), and security (73%).

· On average 90% of servers are expected to be running hypervisors or containers by 2019, up from 74% today.

· Top DC fabric features are high speed and support for network virtualization protocols (80% of respondents each), and SDN (73%).

· 100% of respondents intend to increase investment in SSD, 80% in software defined storage, and 67% in NAS.

· The workloads most deployed by respondents were generic IT applications (53% of respondents), followed by ERP and generic VMs (20%).

· Cisco and Juniper are tied for leadership with on average 58% of respondents placing them in the top three across eight categories. Huawei is #3 (38%), Arista is #4 (28%), and H3C is #5 (18%).

Data Center Network Research Synopsis:

The IHS Markit Data Center Networks Intelligence Service provides quarterly worldwide and regional market size, vendor market share, forecasts through 2022, analysis and trends for (1) data center Ethernet switches by category [purpose-built, bare metal, blade, and general purpose], port speed [1/10/25/40/50/100/200/400GE] and market segment [enterprise, telco and cloud service provider], (2) application delivery controllers by category [hardware-based appliance, virtual appliance], (3) software-defined WAN (SD-WAN) [appliances and control and management software], (4) FC SAN switches by type [chassis, fixed], and (5) FC SAN HBAs. Vendors tracked include A10, ALE, Arista, Array Networks, Aryaka, Barracuda, Cisco, Citrix, CloudGenix, CradlePoint, Dell, F5, FatPipe, HPE, Huawei, Hughes, InfoVista, Juniper, KEMP, Nokia (Nuage), Radware, Riverbed, Silver Peak, Talari, TELoIP, VMware, ZTE and others.

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

The following information was collected by Alan J Weissberger from various subscription only websites:

Gartner Group says the data center networking market is primarily driven by three factors:

- Refresh of existing data center networking equipment that is at its technological or support limits

- The expansion of capacity (i.e., physical buildouts) within existing locations

- The desire to increase agility and automation to an existing data center

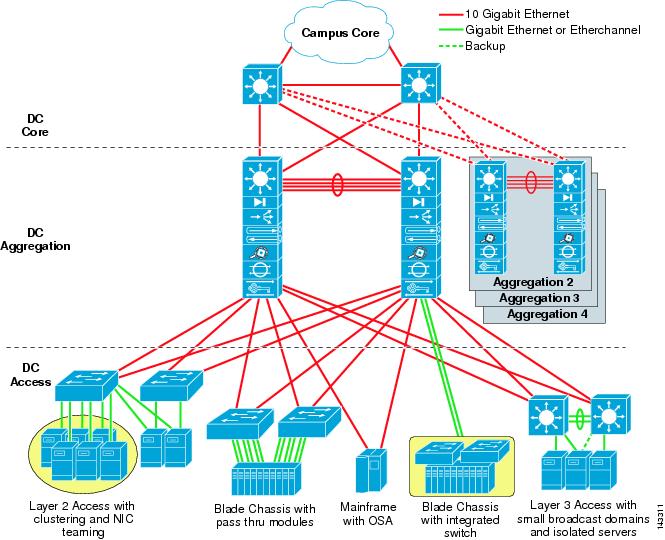

Data center networking solutions are characterized by the following elements:

- Physical interfaces: Physical interfaces to plug-in devices are a very common component of products in this market. 10G is now the most common interface speed we see in enterprise data center proposals. However, we are also rapidly seeing the introduction of new Ethernet connectivity options at higher speeds (25 GbE, 50 GbE and 100 GbE). Interface performance is rarely an issue for new implementations, and speeds and feeds are less relevant as buying criteria for the majority of enterprise clients, when compared to automation and ease of operations (see “40G Is Dead — Embrace 100G in Your Data Center!” ).

- Physical topology and switches: The spine-and-leaf (folded Clos) topology is the most common physical network design, proposed by most vendors. It has replaced the historical three-tier design (access, aggregation, core). The reduction in physical switching tiers is better-suited to support the massive east-west traffic flows created by new application architectures (see “Building Data Center Networks in the Digital Business Era” and “Simplify Your Data Center Network to Improve Performance and Decrease Costs” ). Vendors deliver a variety of physical form factors for their switches, including fixed-form factor and modular or chassis-based switches. In addition, this includes software-based switches such as virtual switches that reside inside of physical virtualized servers.

- Switching/infrastructure management: Ethernet fabric provides management for a collection of switches as a single construct, and programmable fabrics include an API. Fabrics are commonly adopted as logical control planes for spine-and-leaf designs, replacing legacy protocols like Spanning Tree Protocol (STP) and enabling better utilization of all the available paths. Fabrics automate several tasks affiliated with managing a data center switching infrastructure, including autodiscovery of switches, autoconfiguration of switches, etc. (see “Innovation Insight for Ethernet Switching Fabric” ).

- Automation and orchestration: Automation and orchestration are increasingly important to buyers in this market, because enterprises want to improve speed to deliver data center network infrastructure to business, including on-demand capability. This includes support and integration with popular automation tools (such as Ansible, Chef and Puppet), integration with broader platforms like VMware vRA, inclusion of published/open APIs, as well as support for scripting tools like Python (see “Building Data Center Networks in the Digital Business Era” ).

- Network overlays: Network overlays create a logical topology abstracted from the underlying physical topology. We see overlay tunneling protocols like VXLAN used with virtual switches to provide Layer 2 connectivity on top of scalable Layer 3 spine-and-leaf designs, enabling support of multiple tenants and more granular network partitioning (microsegmentation), to increase security within the data center. Overlay products also typically provide an API to enable programmability and integration with orchestration platforms.

- Public cloud extension/hybrid cloud: An emerging capability of data center products is the ability to provide visibility, troubleshooting, configuration and management for workloads that exist in a public cloud provider’s infrastructure. In this case, vendors are not providing the underlying physical infrastructure within the cloud provider network, but provide capability to manage that infrastructure in a consistent manner with on-premises/collocated workloads.
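As a rough illustration of the spine-and-leaf sizing trade-offs described above, the sketch below computes a leaf switch's oversubscription ratio and the server-port ceiling of a two-tier fabric. The port counts are hypothetical and not taken from Gartner. It also assumes each leaf has exactly one uplink to every spine, which is common but not universal.

```python
from fractions import Fraction


def oversubscription(downlinks: int, downlink_gbps: int,
                     uplinks: int, uplink_gbps: int) -> Fraction:
    """Ratio of server-facing bandwidth to spine-facing bandwidth on one leaf."""
    return Fraction(downlinks * downlink_gbps, uplinks * uplink_gbps)


def max_server_ports(ports_per_spine: int, downlinks_per_leaf: int) -> int:
    """Server-port ceiling of a two-tier fabric.

    Assumes one uplink from every leaf to every spine, so the number of
    leaves is capped by the port count of a single spine switch.
    """
    return ports_per_spine * downlinks_per_leaf


# Hypothetical leaf: 48 x 25GE server ports and 6 x 100GE uplinks (6 spines).
ratio = oversubscription(48, 25, 6, 100)
print(f"oversubscription {ratio.numerator}:{ratio.denominator}")  # 2:1

# Hypothetical 32-port spines: at most 32 leaves of 48 server ports each.
print(max_server_ports(32, 48))  # 1536
```

Adding spines lowers the oversubscription ratio, while the spine port count bounds how many leaves (and therefore servers) the fabric can hold before a third tier is needed.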

You can see user reviews for Data Center Networking vendors here.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

In a new report, HTF Market Intelligence says that the global data center colocation market will see huge growth by 2025.

The key players are focusing heavily on innovation in production technologies to improve efficiency and shelf life. The best long-term growth opportunities for this sector can be captured by ensuring ongoing process improvements and financial flexibility to invest in the optimal strategies. The company profile section covers players such as NTT Communications Corporation, Dupont Fabros Technology, Inc., Digital Realty Trust, Inc., Cyxtera Technologies, Inc., Cyrusone Inc., Level 3 Communications Inc., Equinix, Inc., Global Switch, AT&T, Inc., Coresite Realty Corporation, China Telecom Corporation Limited, Verizon Enterprise Solutions, Inc., Interxion Holding NV, Internap Corporation & KDDI Corporation. It includes each company's basic information, such as legal name, website, headquarters, market position, historical background and top 5 closest competitors by market capitalization / revenue, along with contact information. Each player's/manufacturer's revenue figures, growth rate and gross profit margin are provided in an easy-to-understand tabular format for the past 5 years, with a separate section on recent developments such as mergers, acquisitions or new product/service launches.

Browse the Full Report at: https://www.htfmarketreport.com/reports/1125877-global-data-center-colocation-market-6

IHS Markit: CSPs accelerate high speed Ethernet adapter adoption; Mellanox doubles switch sales

by Vladimir Galabov, senior analyst, IHS Markit

Summary:

High speed Ethernet adapter ports (25GE to 100GE) grew 45% in 1Q18, tripling compared to 1Q17, with cloud service provider (CSP) adoption accelerating the industry transition. 25GE represented a third of adapter ports shipped to CSPs in 1Q18, doubling compared to 4Q17. Telcos follow CSPs in their transition to higher networking speeds and while they are ramping 25GE adapters, they are still using predominantly 10GE adapters, while enterprises continue to opt for 1GE, according to the Data Center Ethernet Adapter Equipment report from IHS Markit.

“We expect higher speeds (25GE+) to be most prevalent at CSPs out to 2022, driven by high traffic and bandwidth needs in large-scale data centers. By 2022 we expect all Ethernet adapters at CSP data centers to be 25GE and above. Tier 1 CSPs are currently opting for 100GE at ToR with 4x25GE breakout cables for server connectivity,” said Vladimir Galabov, senior analyst, IHS Markit. “Telcos will invest more in higher speeds, including 100GE out to 2022, driven by NFV and increased bandwidth requirements from HD video, social media, AR/VR, and expanded IoT use cases. By 2022 over two thirds of adapters shipped to telcos will be 25GE and above.”

CSP adoption of higher speeds drives data center Ethernet adapter capacity (measured in 1GE port equivalents) shipped to CSPs to hit 60% of total capacity by 2022 (up from 55% in 2017). Telco will reach 23% of adapter capacity shipped by 2022 (up from 15% in 2017) and enterprise will drop to 17% (down from 35% in 2017).
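The "1GE port equivalents" measure above weights each shipped port by its speed, so a single 100GE port counts as much as one hundred 1GE ports. A minimal sketch of that weighting follows. The shipment figures are invented for illustration and are not IHS Markit data, and the function names are hypothetical.

```python
from typing import Dict


def capacity_in_1ge(ports_by_speed: Dict[int, int]) -> int:
    """Convert {speed in GE: ports shipped} into 1GE port equivalents."""
    return sum(speed * count for speed, count in ports_by_speed.items())


def capacity_shares(segments: Dict[str, Dict[int, int]]) -> Dict[str, float]:
    """Each segment's fraction of total capacity shipped."""
    totals = {name: capacity_in_1ge(ports) for name, ports in segments.items()}
    grand_total = sum(totals.values())
    return {name: total / grand_total for name, total in totals.items()}


# Hypothetical shipments (thousands of ports), keyed by port speed in GE.
shipments = {
    "csp": {25: 300, 100: 50},         # 350 ports  -> 12,500 equivalents
    "telco": {10: 400, 25: 40},        # 440 ports  ->  5,000 equivalents
    "enterprise": {1: 1500, 10: 100},  # 1,600 ports ->  2,500 equivalents
}

for name, share in capacity_shares(shipments).items():
    print(f"{name}: {share:.1%}")
```

In this made-up data the enterprise segment ships the most ports yet accounts for the smallest share of capacity, which is the effect the capacity-based figures are meant to capture.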

“Prices per 1GE ($/1GE) are lowest for CSPs as higher speed adapters result in better per gig economies. Large DC cloud environments with high compute utilization requirements continually tax their networking infrastructure, requiring CSPs to adopt high speeds at a fast rate,” Galabov said.

Additional data center Ethernet adapter equipment market highlights:

· Offload NIC revenue was up 6% QoQ and up 55% YoY, hitting $160M in 1Q18. Annual offload NIC revenue will grow at a 27% CAGR out to 2022.

· Programmable NIC revenue was down 5% QoQ and up 14% YoY, hitting $26M in 1Q18. Annual programmable NIC revenue will grow at a 58% CAGR out to 2022.

· Open compute Ethernet adapter form factor revenue was up 11% QoQ and up 56% YoY, hitting $54M in 1Q18. By 2022, 21% of all ports shipped will be open compute form factor.

· In 1Q18, Intel was #1 in revenue market share (34%), Mellanox was #2 (23%), and Broadcom was #3 (14%).

Data Center Compute Intelligence Service:

The quarterly IHS Markit “Data Center Compute Intelligence Service” provides analysis and trends for data center servers, including form factors, server profiles, market segments and servers by CPU type and co-processors. The report also includes information about Ethernet network adapters, including analysis by adapter speed, CPU offload, form factors, use cases and market segments. Other information includes analysis and trends of multi-tenant server software by type (e.g., server virtualization and container software), market segments and server attach rates. Vendors tracked in this Intelligence Service include Broadcom, Canonical, Cavium, Cisco, Cray, Dell EMC, Docker, HPE, IBM, Huawei, Inspur, Intel, Lenovo, Mellanox, Microsoft, Red Hat, Supermicro, SuSE, VMware, and White Box OEM (e.g., QCT and WiWynn).

………………………………………………………………………………………………………………………………………….

Mellanox Ethernet Switches for the Data Center:

In the Q1 2018 earnings call, Mellanox reported that its Ethernet switch product line revenue more than doubled year over year. Mellanox Spectrum Ethernet switches are getting strong traction in the data center market. The recent inclusion in the Gartner Magic Quadrant is yet another milestone. There are a few underlying market trends that are driving this strong adoption.

Network Disaggregation has gone mainstream

Mellanox Spectrum switches are based off its highly differentiated homegrown silicon technology. Mellanox disaggregates Ethernet switches by investing heavily in open source technology, software and partnerships. In fact, Mellanox is the only switch vendor that is part of the top-10 contributors to open source Linux. In addition to native Linux, Spectrum switches can run Cumulus Linux or Mellanox Onyx operating systems. Network disaggregation brings transparent pricing and provides customers a choice to build their infrastructure with the best silicon and best fit for purpose software that would meet their specific needs.

25/100GbE is the new 10/40GbE

25/100GbE infrastructure provides better RoI and the market is adopting these newer speeds at record pace. Mellanox Spectrum silicon outperforms other 25GbE switches in the market in terms of every standard switch attribute.

IHS Markit: On-premises Enterprise Data Center (DC) is alive and flourishing!

by Cliff Grossner, PhD, IHS Markit

Editor’s Note: Cliff and his IHS Markit team interviewed IT decision-makers in 151 North American organizations that operate data centers (DCs) and have at least 101 employees.

………………………………………………………………………………………………………………………………………………….

Summary:

While enterprises have been adopting cloud services for a number of years now, they are also continuing to make significant investments in their on-premises data center infrastructure. We are seeing a continuation of the enterprise DC growth phase signaled by last year’s respondents and confirmed by respondents to this study. Enterprises are transforming their on-premises DC to a cloud architecture, making the enterprise DC a first-class citizen as enterprises build their multi-clouds.

The on-premises DC is evolving with server diversity set to increase, the DC network moving to higher speeds, and increased software defined storage with solid state drive (SSD) adoption, according to the Data Center Strategies and Leadership North American Enterprise Survey.

“Application architectures are evolving with the increased adoption of software containers and micro-services coupled with a Dev/Ops culture of rapid and frequent software builds. In addition, we see new technologies such as artificial intelligence (AI) and machine learning (ML) incorporated into applications. These applications consume network bandwidth in a very dynamic and unpredictable manner and make new demands on servers for increased parallel computation,” said Cliff Grossner Ph.D., senior research director and advisor for cloud and data center at IHS Markit (Nasdaq: INFO), a world leader in critical information, analytics and solutions.

“New software technologies are driving more diverse compute architectures. An example is the development of multi-tenant servers (VMs and software containers), which is requiring new features in CPU silicon to support these technologies. AI and ML have given rise to a market for specialized processors capable of high degrees of parallelism (such as GPGPUs and the Tensor Processing Unit from Google). We can only expect this trend to continue and new compute architectures emerging in response,” said Grossner.

More Data Center Strategies Highlights

· Respondents expect a greater than 2x increase in the average number of physical servers in their DCs by 2019.

· Top DC investment drivers are security and application performance (75% of respondents) and scalability (71%).

· 9% of servers are expected to be 1-socket by 2019, up from 3% now.

· 73% of servers are expected to be running hypervisors or containers by 2019, up from 70% now.

· Top DC fabric features are high speed (68% of respondents), automated VM movement (62%), and support for network virtualization protocols (62%).

· 53% of respondents intend to increase investment in software defined storage, 52% in NAS, and 42% in SSD.

· 30% of respondents indicated they are running general purpose IT applications, 22% are running productivity applications such as Microsoft Office, and 18% are running collaboration tools such as email, SharePoint, and unified communications in their data centers.

· Cisco, Dell, HPE, Juniper, and Huawei were identified as the top 5 DC Ethernet switch vendors by respondents ranking the top 3 vendors in each of 8 selection criteria.

IHS Markit: Data Center Ethernet switch revenue reached $2.8B in 1Q18

by Devan Adams and Cliff Grossner, PhD, IHS Markit

DC Ethernet switch revenue reached $2.8B in 1Q18; Programmable switch silicon gets key validation

In 1Q18, Data Center (DC) Ethernet switch revenue reached $2.8B, up 12% over 1Q17; bare metal and purpose-built switch revenues grew 35% and 15% YoY, respectively. Bare metal switches are now widely available from both traditional and white box vendors. Purpose-built switches embedded with data plane programmable silicon from Broadcom, Cavium, and Barefoot Networks continue to expand. Over the past 18 months Arista, the #2 DC switching vendor, has released several switches with programmable silicon from each of the 3 manufacturers mentioned above.

“Bare metal switch shipments continue their long-term growth as hyper-scale and tier 2 cloud service providers (CSPs), telcos adopting NFV, and large enterprises increase their deployments worldwide”, says Devan Adams, MBA, Senior Analyst, Cloud and Data Center Research Practice, IHS Markit.

“A mix of bare metal and purpose-built switches using programmable silicon from a handful of chip vendors continues to displace traditional switches in the market,” he added.

By CY22, we expect 25GE data center Ethernet switch ports to represent 16% of DC ports shipped, up from 6% in CY17; and 100GE ports to reach 35% of DC switch ports shipped, up from 9% in CY17. Ethernet switch manufacturers continue to enhance their portfolios with new 25GE and 100GE models. Dell began shipping its 1st 25GE branded bare metal switch at the end of CY17; Arista has released several switches, including four 100GE and a 25GE model, since February 2018; and Juniper has introduced numerous switches, including two 100GE and two 25GE models, since the start of 2018.

“We believe 25GE will have a noticeable negative effect on the growth of 10GE, as CSPs favor 25GE for server connectivity and 100GE at the access and core layer; as a result, 100GE top-of-rack (ToR) switches connecting to 25GE server ports and the availability of 100GE bare metal switches will continue to drive additional 100GE deployments” says Devan Adams.

More Data Center Network Market Highlights

· 25GE and 100GE data center switching port shipments saw triple-digit growth year over year in 1Q18.

· 200/400GE deployments edge closer, shipments expected to begin in 2019.

· F5 garnered 46% ADC market share in 1Q18 with revenue up 4% QoQ. Citrix had the #2 spot with 29% of revenue, and A10 (9%) rounded out the top 3 market share spots.

· 1Q18 ADC revenue declined 4% from 4Q17 to $453M and declined 4% over 1Q17.

· Virtual ADC appliances stood at 31% of 1Q18 ADC revenue.

Data Center Network Equipment Report Synopsis

The IHS Markit Data Center Network Equipment market tracker is part of the Data Center Networks Intelligence Service and provides quarterly worldwide and regional market size, vendor market share, forecasts through 2022, analysis and trends for (1) data center Ethernet switches by category [purpose built, bare metal, blade and general purpose], port speed [1/10/25/40/50/100/200/400GE] and market segment [enterprise, telco and cloud service provider], (2) application delivery controllers by category [hardware-based appliance, virtual appliance], and (3) software-defined WAN (SD-WAN) [appliances and control and management software]. Vendors tracked include A10, ALE, Arista, Array Networks, Aryaka, Barracuda, Cisco, Citrix, CloudGenix, CradlePoint, Dell, F5, FatPipe, HPE, Huawei, Hughes, InfoVista, Juniper, KEMP, Nokia (Nuage), Radware, Riverbed, Silver Peak, Talari, TELoIP, VMware, ZTE and others.

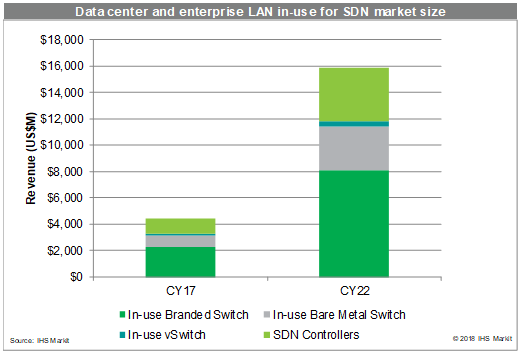

IHS Markit: Data Center and enterprise LAN SDN market totaled $4.4 Billion in 2017

Data Center & Enterprise SDN Hardware and Software Market Tracker from IHS Markit:

Highlights:

- Global data center and enterprise LAN software-defined networking (SDN) market revenue—including SDN-in-use bare metal and branded Ethernet switches and SDN controllers—is anticipated to reach $15.8 billion by 2022.

- Bare metal switch revenue came to $790 million in 2017, representing 26 percent of total in-use SDN-capable Ethernet switch revenue.

- Data center SDN controller revenue totaled $1.2 billion in 2017, and is expected to hit $4 billion by 2022, when it will represent 17 percent of data center and enterprise LAN SDN revenue.

- Cisco is number-one with 21 percent of 2017 in-use SDN revenue, VMware comes in at number-two with 19 percent, Arista at number-three with 15 percent, White Box at number-four with 12 percent and Huawei at number-five with 7 percent.

Notes:

- White Box is No. 1 in bare metal switch revenue, VMware leads the SDN controller market segment, Dell owned 44 percent of branded bare metal switch revenue in the second half of 2017, and HPE has the largest share of total SDN-capable (in-use and not-in-use) branded Ethernet switch ports.

- White box also ranked No. 1 in data center server revenue, pulling in 21 percent of the market in the first quarter, or $3.8 billion. Dell EMC dropped to second place in revenue at 20 percent ($3.6 billion), followed by Hewlett Packard Enterprise (HPE) at 18 percent ($3.2 billion).

IHS Markit analysis:

The data center and enterprise LAN market is maturing. In-use SDN revenue totaled $4.4 billion worldwide in 2017, and is expected to hit $15.8 billion by 2022. Innovations continue, with bare metal switch vendors announcing 400GE switches with new programmable silicon that incorporates an on-chip ARM processor to support artificial intelligence (AI) and machine learning (ML) and allows data plane protocols to be modified without changing silicon. Also of note, Edgecore Networks (Taiwan) contributed its 400GE switch design to the Open Compute Project (OCP).
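For readers who want the growth rate implied by those two figures, the sketch below computes a compound annual growth rate (CAGR). It assumes five annual compounding periods between 2017 and 2022. The rate is derived here from the revenue figures quoted above and is not a number published by IHS Markit.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# In-use SDN revenue, in billions of US dollars, from the paragraph above.
rate = implied_cagr(start=4.4, end=15.8, years=2022 - 2017)
print(f"implied CAGR: {rate:.1%}")  # roughly 29% per year
```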

SDN controllers for the data center also continue to add functionality, with increased support for interconnecting multiple on-premises data centers, and with support for interoperation between cloud service provider (CSP) data centers and enterprises as they build multi-clouds. Other notable enhancements include features that support container networking and integration with container management software such as Kubernetes.

Data center and enterprise LAN SDN is now mainstream and the market is expected to grow.

“Not all the $15.8 billion in revenue in 2022 is new. SDN-capable Ethernet switch revenue is existing revenue, and a portion of SDN controller revenue is displaced from the Ethernet switch market due to reduced port ASPs,” said Cliff Grossner, Ph.D., senior research director, IHS Markit. “Some network operators will elect to use bare metal Ethernet switches when deploying SDNs and rely on SDN controllers for advanced control plane features,” Cliff added.

About the report

The IHS Markit Data Center & Enterprise SDN Hardware & Software Market Tracker provides market size, market share, forecasts, analysis and trends for SDN controllers; SDN-capable bare metal Ethernet switches and branded Ethernet switches; and SD-WAN appliances and control and management software. Vendors tracked include Arista, Aryaka, Cisco, Citrix, CloudGenix, Dell EMC, Fatpipe, HPE, Huawei, InfoVista, Juniper, NEC, Riverbed, Silver Peak, Talari, VMware, White Box, ZTE and others.

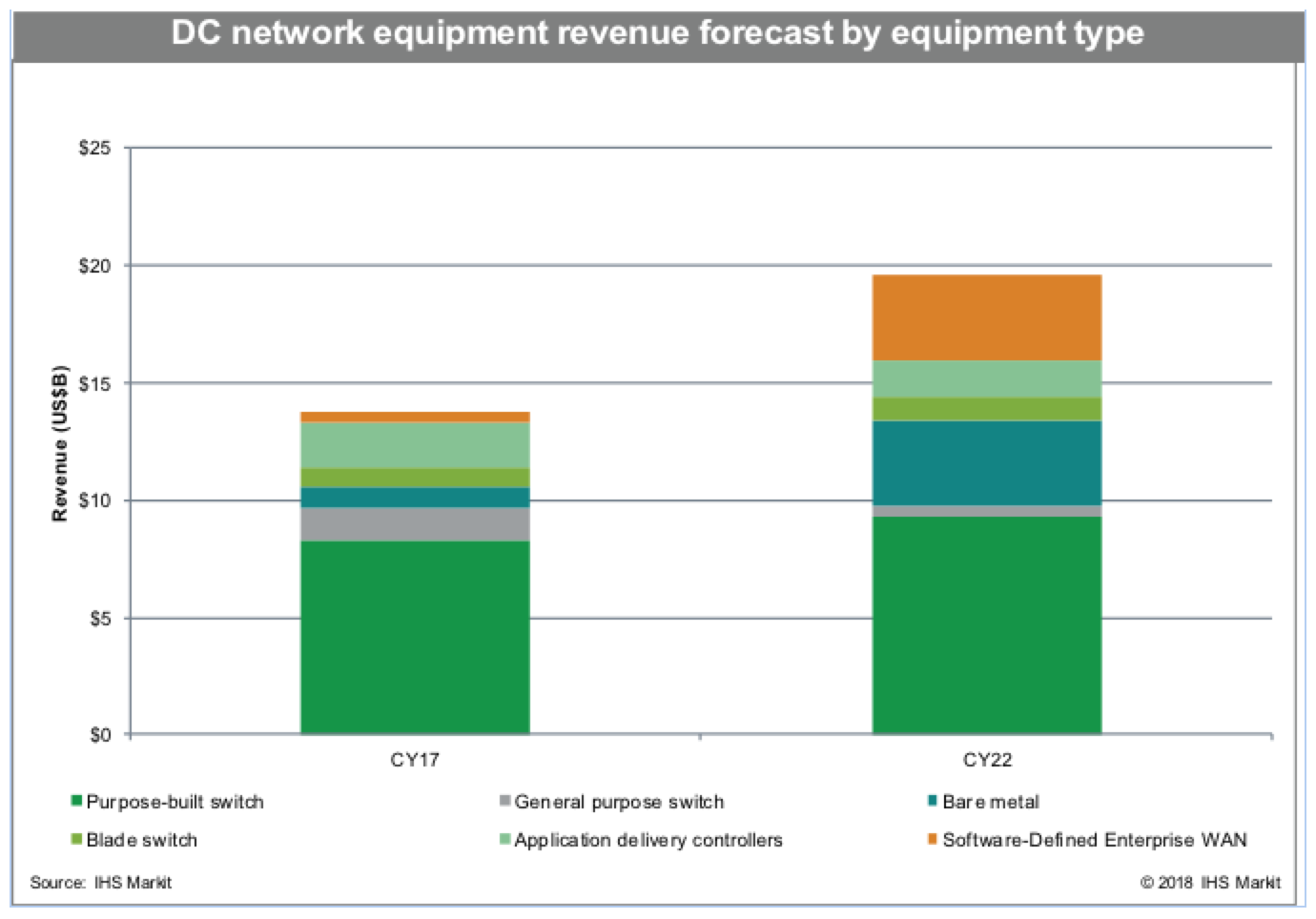

IHS Markit: Data center network equipment revenue reached $13.7 billion in 2017

Data center network equipment revenue, including data center Ethernet switches, application delivery controllers (ADCs) and software-defined enterprise WAN (SD-WAN), totaled $13.7 billion in 2017, increasing 13 percent over the previous year.

In the short term, investment in physical infrastructure is still driving data center network equipment revenue growth. However, in 2018 and 2019 the effect of server virtualization will slow the market, with fewer — but higher capacity — servers reducing the need for data center Ethernet switch ports, along with the move to virtual ADCs.

“The adoption of lower-priced bare metal switches will cause revenue growth to slow,” said Clifford Grossner, Ph.D., senior research director and advisor, cloud and data center research practice, IHS Markit. “The ongoing shift to the cloud not only moves network equipment out of the enterprise data center, but also requires less equipment, as the cloud represents data center consolidation on a wide scale.”

Data center network equipment highlights

- Data center network equipment revenue was on the rise, year over year, in all regions: North America and Europe, Middle-East and Africa (EMEA) each increased 10 percent in 2017; Asia Pacific (APAC) was up 23 percent; and Caribbean and Latin America (CALA) rose 2 percent.

- 25GE and 100GE data center switching ports increased three-fold year over year.

- New 200/400GE developments are underway, and shipments are expected to begin in 2019.

- Long-term growth in the data center network equipment market is expected to slow to 6 percent in 2022, as SD-WAN revenue growth slows due to the migration from the enterprise data center to the cloud.

- SD-WAN revenue is anticipated to reach $3.6 billion by 2022; the next wave for SD-WAN includes increased analytics, with artificial intelligence (AI) and machine learning (ML) providing multi-cloud connectivity.

Revenue results from key segments

- Data center Ethernet switch revenue rose 13 percent over the previous year, reaching $11.4 billion in 2017.

- SD-WAN market revenue hit $444.1 million for the full-year 2017.

- Bare metal switch revenue was up 60 percent year over year in the fourth quarter of 2017.

- ADC revenue was down 5 percent year over year in 2017.

Research synopsis

The IHS Markit Data Center Networks Intelligence Service provides quarterly worldwide and regional market size, vendor market share, forecasts through 2022, analysis and trends for data center Ethernet switches by category (purpose-built, bare metal, blade, and general purpose), port speed (1/10/25/40/50/100/200/400GE) and market segment (enterprise, telco and cloud service provider). The intelligence service also covers application delivery controllers by category (hardware-based appliances, virtual appliances), SD-WAN (appliances and control and management software), FC SAN switches by type (chassis, fixed) and FC SAN HBAs.

Vendors tracked include A10, ALE, Arista, Array Networks, Aryaka, Barracuda, Broadcom, Cavium, Cisco, Citrix, CloudGenix, Dell, F5, FatPipe, HPE, Huawei, InfoVista, Juniper, KEMP, Radware, Riverbed, Silver Peak, Talari, TELoIP, VMware, ZTE and others.