Cloud Data Centers

Data infrastructure software: picks and shovels for AI; Hyperscaler CAPEX

For many years, data volumes have been accelerating. By 2025, global data volumes are expected to reach 180 zettabytes (1 zettabyte=1 sextillion bytes), up from 120 zettabytes in 2023.

In the age of AI, data is viewed as the currency for large language models (LLMs) and AI-enabled offerings. Demand for tools to integrate, store and process data is therefore a growing priority among enterprises.

The median size of datasets required to train AI models increased from 5.9 million data points in 2010 to 750 billion in 2023, according to BofA Global Research.

In BofA’s survey, data streaming/stream processing and data science/ML were selected as key AI-related use cases, with 44% and 37% of respondents citing usage, respectively. Further, AI enablement is accelerating the migration of data to the cloud. Gartner estimates that 74% of the data management market will be deployed in the cloud by 2027, up from 60% in 2023.

Data infrastructure software [Note 1] represents a top spending priority for the IT department. Survey respondents report that data infrastructure represents 35% of total IT spending, with budgets expected to grow 9% over the next 12 months. Unsurprisingly, the public cloud hyperscaler platforms were cited as the top three vendors: Amazon AWS data warehouse/data lake offerings, Microsoft Azure database offerings, and Google BigQuery were chosen by 45%, 39% and 35% of respondents, respectively.

Note 1. Data infrastructure software refers to databases, data warehouses/lakes, data pipelines, data analytics and other software that facilitate data management, processing and analysis.

………………………………………………………………………………………………………………..

The top three factors for evaluating data infrastructure software vendors are security, enterprise capabilities (e.g., architecture scalability and reliability) and depth of technology.

BofA’s Software team estimates that the data infrastructure industry (e.g., data warehouses, data lakes, unstructured databases, etc.) is currently a $96bn market that could reach $153bn in 2028. The team’s proprietary survey revealed that data infrastructure is 35% of total IT spending, with budgets expected to grow 9% over the next 12 months. Hyperscalers including Amazon and Google are among the top recipients of those dollars and, in turn, those companies spend heavily on hardware.

Key takeaways:

- Data infrastructure is the largest and fastest growing segment of software ($96bn per our bottom-up analysis, 17% CAGR).

- AI/cloud represent enduring growth drivers. Data is the currency for LLMs, positioning data vendors well in this new cycle.

- BofA survey (150 IT professionals) suggests best-of-breed vendors (MongoDB/MDB, Snowflake/SNOW and Databricks) are seeing the highest expected growth in spend.
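The market-size figures above ($96bn today, $153bn in 2028) can be related to a compound annual growth rate with a short calculation. The sketch below is illustrative only: the text does not state the base year or the period that the 17% CAGR covers, so the compounding horizons tried here are assumptions, and `implied_cagr` is a helper name introduced for this example.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value in `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


market_now_bn = 96.0    # current market size cited in the text ($bn)
market_2028_bn = 153.0  # 2028 projection cited in the text ($bn)

# The base year is not stated in the text, so several horizons are tried (assumption).
for years in (3, 4, 5):
    rate = implied_cagr(market_now_bn, market_2028_bn, years)
    print(f"{years} years of compounding: {rate:.1%} per year")
```

Under these assumptions, only a three-year horizon gives a rate close to the 17% quoted in the takeaways. Longer horizons imply slower annual growth, so the quoted CAGR may refer to a different base or period than "currently".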

………………………………………………………………………………………………………….

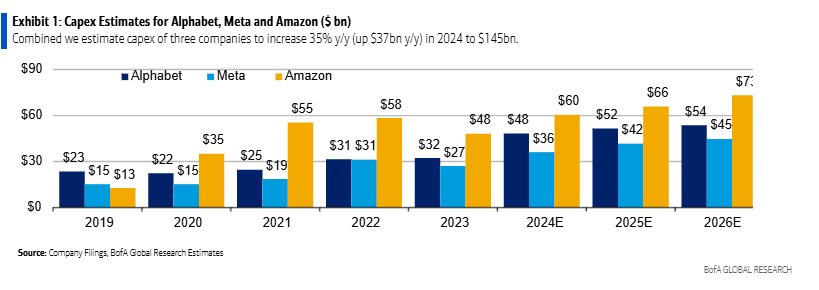

BofA analyst Justin Post expects server and equipment capex for mega-cap internet companies (Amazon, Alphabet/Google, Meta/Facebook) to rise 43% y/y in 2024 to $145bn, which represents $27bn of the $37bn y/y total capex growth. Despite the spending surge, Mr. Post thinks these companies will keep free cash flow margins stable y/y at 22% in 2024 before they increase in 2025. Technical infrastructure capex at these three companies is expected to rise steeply in 2024, with the majority of the increase going to servers and equipment.

Notes:

- Alphabet categorizes its technical infrastructure assets under the line item ‘Information Technology Assets’.

- Amazon takes a much broader categorization and includes servers, networking equipment, retail-related heavy equipment and fulfillment equipment under ‘Equipment’.

- Meta gives more details and separately reports Server & Networking, and Equipment assets.

In 2024, BofA estimates CAPEX for the three hyperscalers as follows:

- Alphabet: capex for IT assets is expected to increase by $12bn y/y to $28bn.

- Meta: following a big ramp in 2023, server, network and equipment asset spend is expected to increase $7bn y/y to $22bn.

- Amazon: equipment spend is expected to increase $8bn y/y to $41bn (driven by AWS, with retail flattish). Amazon will see less relative growth due to retail equipment capex leverage in this line.
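As a quick cross-check, the three per-company estimates above can be summed. This is a minimal sketch using only the rounded figures in the list. The combined total and the growth rate it prints are derived here and are not quoted from BofA.

```python
# 2024 estimates quoted above: (2024 spend, y/y increase), both in $bn.
capex_2024 = {
    "Alphabet (IT assets)": (28, 12),
    "Meta (server, network and equipment)": (22, 7),
    "Amazon (equipment)": (41, 8),
}


def summarize(estimates):
    """Return (combined 2024 spend, combined increase, implied y/y growth)."""
    total_2024 = sum(spend for spend, _ in estimates.values())
    total_increase = sum(increase for _, increase in estimates.values())
    total_2023 = total_2024 - total_increase
    return total_2024, total_increase, total_increase / total_2023


total_2024, total_increase, growth = summarize(capex_2024)
print(f"combined 2024 spend: ${total_2024}bn")
print(f"combined y/y increase: ${total_increase}bn")
print(f"implied y/y growth: {growth:.0%}")
```

The combined increase comes to $27bn, which matches the server and equipment growth figure cited earlier. The implied growth rate lands near the quoted 43%, with the small gap plausibly due to rounding in the inputs.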

On a relative scale, Meta capex spend (% of revenue) remains highest in the group and the company has materially stepped up its AI-related capex investments since 2022 (in-house supercomputer, LLM, leading computing power, etc.). We think it’s interesting that Meta is spending almost as much as the hyperscalers on capex, which should likely lead to some interesting internal AI capabilities, and potential to build a “marketing cloud” for its advertisers.

From 2016-22, the sector headcount grew 26% on average. In 2023, headcount decreased by 9%. BofA expects just 3% average annual job growth from 2022-2026. Moreover, AI tools will likely drive higher employee efficiency, helping offset higher depreciation.

…………………………………………………………………………………………………………

Source for all of the above information: BofA Global Research

WSJ: China’s Telecom Carriers to Phase Out Foreign Chips; Intel & AMD will lose out

China’s largest telecom firms were ordered earlier this year to phase out foreign computer chips from their networks by 2027, the Wall Street Journal (WSJ) reported in its Saturday print edition. The news confirms and expands on reports from recent months. The move will hit U.S. processor makers Intel and Advanced Micro Devices (AMD). Asia Financial reported in late March that these retaliatory bans would cost the U.S. chip firms billions.

The deadline given by China’s Ministry of Industry and Information Technology (MIIT) aims to accelerate efforts by Beijing to halt the use of such core chips in its telecom infrastructure. The regulator ordered state-owned mobile operators to inspect their networks for the prevalence of non-Chinese semiconductors and draft timelines to replace them, according to people familiar with the matter.

In the past, efforts to get the industry to wean itself off foreign semiconductors have been hindered by the lack of good domestically made chips. Chinese telecom carriers’ procurements show they are switching more to domestic alternatives, a move made possible in part because local chips’ quality has improved and their performance has become more stable, the people said.

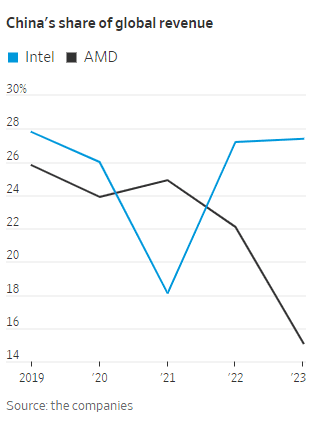

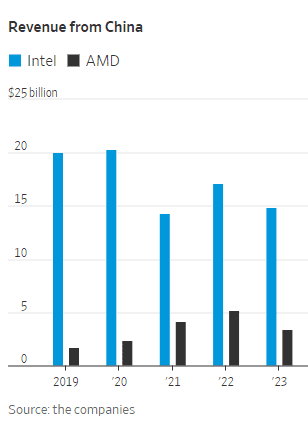

Such an effort will hit Intel and AMD the hardest, they said. The two chip makers have in recent years provided the bulk of the core processors used in networking equipment in China and the world.

China’s MIIT, which oversees the regulation of the wireless, broadcasting and communication industries, didn’t respond to WSJ’s request for comment. China Mobile and China Telecom, the nation’s two biggest telecom carriers by revenue, also didn’t respond.

In March 2024, the Financial Times reported that China is seeking to forbid the use of Intel and AMD chips, as well as Microsoft’s operating system, in government computers and servers in favor of local hardware and software. The latest purchasing rules represent China’s most significant step yet to build up domestic substitutes for foreign technology and echo moves in the US as tensions increase between the two countries. Among the 18 approved processors were chips from Huawei and state-backed group Phytium. Both are on Washington’s export blacklist. Chinese processor makers are using a mixture of chip architectures including Intel’s x86, Arm and homegrown ones, while operating systems are derived from open-source Linux software.

Beijing’s desire to wean China off American chips where there are homemade alternatives is the latest installment of a U.S.-China technology war that is splintering the global landscape for network equipment, semiconductors and the internet. American lawmakers have banned Chinese telecom equipment over national-security concerns and have restricted U.S. chip companies including AMD and Nvidia from selling their high-end artificial-intelligence chips to China.

China has also published procurement guidelines discouraging government agencies and state-owned companies from purchasing laptops and desktop computers containing Intel and AMD chips. Requirements released in March give the Chinese entities eight options for central processing units, or CPUs, they can choose from. AMD and Intel were listed as the last two options, behind six homegrown CPUs.

Computers with the Chinese chips installed are preapproved for state buyers. Those powered by Intel and AMD chips require a security evaluation with a government agency, which hasn’t certified any foreign CPUs to date. Making chips for PCs is a significant source of sales for the two companies.

China Mobile and China Telecom are also key customers of both chip makers in China, buying thousands of servers for their data centers in the country’s mushrooming cloud-computing market. These servers are also critical to telecommunications equipment working with base stations and storing mobile subscribers’ data, often viewed as the “brains” of the network. Intel and AMD have the lion’s share of the overall global market for CPUs used in servers, according to data from industry researcher TrendForce. In 2024, Intel will likely hold 71% of the market, while AMD will have 23%, TrendForce estimates. The researcher doesn’t break out China data.

China’s localization policies could diminish Intel and AMD’s sales in the country, one of the most important markets for semiconductor firms. China is Intel’s largest market, accounting for 27% of the company’s revenue last year, Intel said in its latest annual report in January. The U.S. is its second-largest market. Its customers also include global electronics makers that manufacture in China.

In the report, Intel highlighted the geopolitical risk it faced from elevated U.S.-China tensions and China’s localization push. “We could face increased competition as a result of China’s programs to promote a domestic semiconductor industry and supply chains,” the report said.

References:

https://www.wsj.com/tech/china-telecom-intel-amd-chips-99ae99a9 (paywall)

https://www.ft.com/content/7bf0f79b-dea7-49fa-8253-f678d5acd64a

China Mobile & China Unicom increase revenues and profits in 2023, but will slash CAPEX in 2024

GSMA: China’s 5G market set to top 1 billion this year

MIIT: China’s Big 3 telcos add 24.82M 5G “package subscribers” in December 2023

China’s telecom industry business revenue at $218B or +6.9% YoY

Google Cloud infrastructure enhancements: AI accelerator, cross-cloud network and distributed cloud

Google is selling broad access to its most powerful artificial-intelligence technology for the first time as it races to make up ground in the lucrative cloud-software market. The cloud giant now has a global network of 38 cloud regions, with a goal to operate entirely on carbon-free energy 24/7 by 2030.

At the Google Cloud Next conference today, Google Cloud announced several key infrastructure enhancements for customers, including:

- Cloud TPU v5e: Google’s most cost-efficient, versatile, and scalable purpose-built AI accelerator to date. Now, customers can use a single Cloud TPU platform to run both large-scale AI training and inference. Cloud TPU v5e scales to tens of thousands of chips and is optimized for efficiency. Compared to Cloud TPU v4, it provides up to a 2x improvement in training performance per dollar and up to a 2.5x improvement in inference performance per dollar.

- A3 VMs with NVIDIA H100 GPU: A3 VMs powered by NVIDIA’s H100 GPU will be generally available next month. They are purpose-built with high-performance networking and other advances to enable today’s most demanding gen AI and large language model (LLM) innovations. This allows organizations to achieve three times better training performance over the prior-generation A2.

- GKE Enterprise: This enables multi-cluster horizontal scaling, which is required for the most demanding, mission-critical AI/ML workloads. Customers are already seeing productivity gains of 45%, while decreasing software deployment times by more than 70%. Starting today, the benefits that come with GKE, including autoscaling, workload orchestration, and automatic upgrades, are now available with Cloud TPU v5e.

- Cross-Cloud Network: A global networking platform that helps customers connect and secure applications across clouds. It is open, workload-optimized, and offers ML-powered security to deliver zero trust. Designed to enable customers to gain access to Google services more easily from any cloud, Cross-Cloud Network reduces network latency by up to 35%.

- Google Distributed Cloud: Designed to meet the unique demands of organizations that want to run workloads at the edge or in their data center. In addition to next-generation hardware and new security capabilities, the company is also enhancing the GDC portfolio to bring AI to the edge, with Vertex AI integrations and a new managed offering of AlloyDB Omni on GDC Hosted.

“Google’s launch on Tuesday puts it ahead of Microsoft in making AI-powered office software easily available for all customers,” wrote WSJ’s Miles Kruppa. Google will also open up availability to its large PaLM 2 model, which supports generative AI features, plus AI technology by Meta Platforms and startup Anthropic, reported Kruppa.

The efforts are Google’s latest attempt to spark growth in the cloud business, an important part of CEO Sundar Pichai’s attempts to reduce dependence on its cash-cow search engine. Recent advances in AI, and the computing resources they require, have added extra urgency to turn the technology into profitable products.

Google’s infrastructure and software offerings produce $32 billion in annual sales, about 10% of total revenue at parent company Alphabet. Its cloud unit turned a quarterly operating profit for the first time this year. That still leaves Google firmly in third place in the cloud behind AWS and Microsoft Azure. However, Google Cloud revenue is growing faster, at 31%, than its two bigger cloud rivals.

Google will make widely available its current large PaLM 2 model, which powers many of the company’s generative-AI features. It was previously only available for handpicked customers. The company also will make available AI technology developed by Meta Platforms and the startup Anthropic, in which it is an investor.

Google Cloud CEO Thomas Kurian gave the keynote speech at the Google Cloud Next 2023 conference. Image credit: Alphabet (parent company of Google)

……………………………………………………………………………………………………………………………

Google Cloud’s comprehensive AI platform, Vertex AI, enables customers to build, deploy and scale machine learning (ML) models. It has seen tremendous usage, with the number of gen AI customer projects growing more than 150 times from April to July this year. Customers have access to more than 100 foundation models, including third-party and popular open-source versions, in its Model Garden. They are all optimized for different tasks and different sizes, including text, chat, images, speech, software code, and more.

Google also offers industry-specific models like Sec-PaLM 2 for cybersecurity, to empower global security providers like Broadcom and Tenable; and Med-PaLM 2 to assist leading healthcare and life sciences companies including Bayer Pharmaceuticals, HCA Healthcare, and Meditech.

Partners including Box, Canva, Salesforce, UKG, and many others are also using Vertex AI to build their own features for customers. At Next ’23, Google announced:

- DocuSign is working with Google to pilot how Vertex AI could be used to help generate smart contract assistants that can summarize, explain and answer what’s in complex contracts and other documents.

- SAP is working with Google to build new solutions utilizing SAP data and Vertex AI that will help enterprises apply gen AI to important business use cases, like streamlining automotive manufacturing or improving sustainability.

- Workday’s applications for Finance and HR are now live on Google Cloud, and the company is working with Google to develop new gen AI capabilities within the flow of Workday, as part of its multi-cloud strategy. This includes the ability to generate high-quality job descriptions and to bring Google Cloud gen AI to app developers via the skills API in Workday Extend, while helping to ensure the highest levels of data security and governance for customers’ most sensitive information.

In addition, many of the world’s largest consulting firms, including Accenture, Capgemini, Deloitte, and Wipro, have collectively planned to train more than 150,000 experts to help customers implement Google Cloud GenAI.

………………………………………………………………………………………………………………………

“The computing capabilities are improving a lot, but the applications are improving even more,” said Character Technologies CEO Noam Shazeer, who pushed for Google to release a chatbot to the public before leaving the company in 2021. “There will be trillions of dollars worth of value and product chasing tens of billions of dollars worth of hardware.”

………………………………………………………………………………………………………………

References:

https://cloud.google.com/blog/topics/google-cloud-next/welcome-to-google-cloud-next-23

https://www.wsj.com/tech/ai/google-chases-microsoft-amazon-cloud-market-share-with-ai-tools-a7ffc449

https://cloud.withgoogle.com/next

Microsoft acquires Lumenisity – hollow core fiber high speed/low latency leader

Executive Summary:

Microsoft announced it has acquired Lumenisity® Limited, a leader in next-generation hollow core fiber (HCF) solutions. Lumenisity’s innovative and industry-leading HCF product can enable fast, reliable and secure networking for global, enterprise and large-scale organizations.

The acquisition will expand Microsoft’s ability to further optimize its global cloud infrastructure and serve Microsoft’s Cloud Platform and Services customers with strict latency and security requirements. The technology can provide benefits across a broad range of industries including healthcare, financial services, manufacturing, retail and government.

Organizations within these sectors could see significant benefit from HCF solutions as they rely on networks and datacenters that require high-speed transactions, enhanced security, increased bandwidth and high-capacity communications. For the public sector, HCF could provide enhanced security and intrusion detection for federal and local governments across the globe. In healthcare, because HCF can accommodate the size and volume of large data sets, it could help accelerate medical image retrieval, facilitating providers’ ability to ingest, persist and share medical imaging data in the cloud. And with the rise of the digital economy, HCF could help international financial institutions seeking fast, secure transactions across a broad geographic region.

Types of Hollow Core Fiber:

Various types of hollow-core photonic bandgap fibers:

(a) Photonic crystal fiber featuring small hollow core surrounded by a periodic array of large air holes.

(b) Microstructured fiber featuring medium-sized hollow core surrounded by several rings of small air holes separated by nano-size bridges.

(c) Bragg fiber featuring large hollow core surrounded by a periodic sequence of high and low refractive index layers.

Lumenisity HCF benefits:

Lumenisity’s hollow core fiber technology replaces the standard glass core in a fiber cable with an air-filled chamber. According to Microsoft, light travels through air 47% faster than through glass. Lumenisity’s next generation of HCF uses a proprietary design where light propagates in an air core, which has significant advantages over traditional cable built with a solid core of glass, including:

- Increased overall speed and lower latency as light travels through HCF 47% faster than standard silica glass.

- Enhanced security and intrusion detection due to Lumenisity’s innovative inner structure.

- Lower costs, increased bandwidth and enhanced network quality due to elimination of fiber nonlinearities and broader spectrum.

- Potential for ultra-low signal loss enabling deployment over longer distances without repeaters.
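The 47% speed advantage cited above translates directly into propagation delay. The sketch below is a rough illustration, not a model of Lumenisity's product: the refractive indices are assumed values (about 1.47 for solid silica fiber, which reproduces the 47% figure, and an idealized 1.0 for an air core), and `one_way_latency_us` is a helper name introduced for this example.

```python
C_VACUUM_M_PER_S = 299_792_458  # speed of light in vacuum (exact, by SI definition)

# Assumed effective refractive indices (not taken from the source):
N_SOLID_CORE = 1.47   # typical figure for silica fiber; gives the ~47% speed gap
N_HOLLOW_CORE = 1.0   # idealized air core; real hollow core fiber is slightly above 1


def one_way_latency_us(distance_km: float, refractive_index: float) -> float:
    """Propagation delay in microseconds over distance_km of fiber."""
    speed_m_per_s = C_VACUUM_M_PER_S / refractive_index
    return distance_km * 1_000 / speed_m_per_s * 1e6


for km in (1, 40, 1_000):
    solid = one_way_latency_us(km, N_SOLID_CORE)
    hollow = one_way_latency_us(km, N_HOLLOW_CORE)
    print(f"{km:>5} km: solid core {solid:8.1f} us, hollow core {hollow:8.1f} us, "
          f"saved {solid - hollow:7.1f} us")
```

Under these assumptions the saving is roughly 1.6 microseconds per kilometre one way. It only counts propagation time in the fiber and ignores switching, amplification and protocol overheads.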

Lumenisity was formed in 2017 as a spinoff from the world-renowned Optoelectronics Research Centre (ORC) at the University of Southampton to commercialize breakthroughs in the development of hollow core optical fiber. In 2021 and 2022, the company won the Best Fibre Component Product for their NANF® CoreSmart® HCF cable in the European Conference on Optical Communication (ECOC) Exhibition Industry Awards. As part of the Lumenisity acquisition, Microsoft plans to utilize the organization’s technology and team of industry-leading experts to accelerate innovations in networking and infrastructure.

Lumenisity said: “We are proud to be acquired by a company with a shared vision that will accelerate our progress in the hollow-core space. This is the end of the beginning, and we are excited to start our new chapter as part of Microsoft to fulfill this technology’s full potential and continue our pursuit of unlocking new capabilities in communication networks.”

………………………………………………………………………………………………………………………………………………………………..

Analysis:

The purchase is also noteworthy in light of Microsoft’s other recent acquisitions in the telecommunications sector, which include Affirmed Networks, Metaswitch Networks and AT&T’s core network operations (including 5G SA Core Network).

Microsoft isn’t the only company interested in HCF technology and Lumenisity. Both BT in the UK and Comcast in the US have tested Lumenisity’s offerings.

Comcast announced in April it was able to support speeds in the range of 10 Gbit/s to 400 Gbit/s over a 40km “hybrid” connection in Philadelphia that utilized legacy fiber and the new hollow core fiber. Comcast worked with Lumenisity.

“As we continue to develop and deploy technology to deliver 10G, multigigabit performance to tens of millions of homes, hollow core fiber will help to ensure that the network powering those experiences is among the most advanced and highest performing in the world,” said Comcast networking chief Elad Nafshi in the release issued in April.

References:

https://www.datacenterdynamics.com/en/news/microsoft-acquires-hollow-core-fiber-firm-lumenisity

Comcast Deploys Advanced Hollowcore Fiber With Faster Speed, Lower Latency

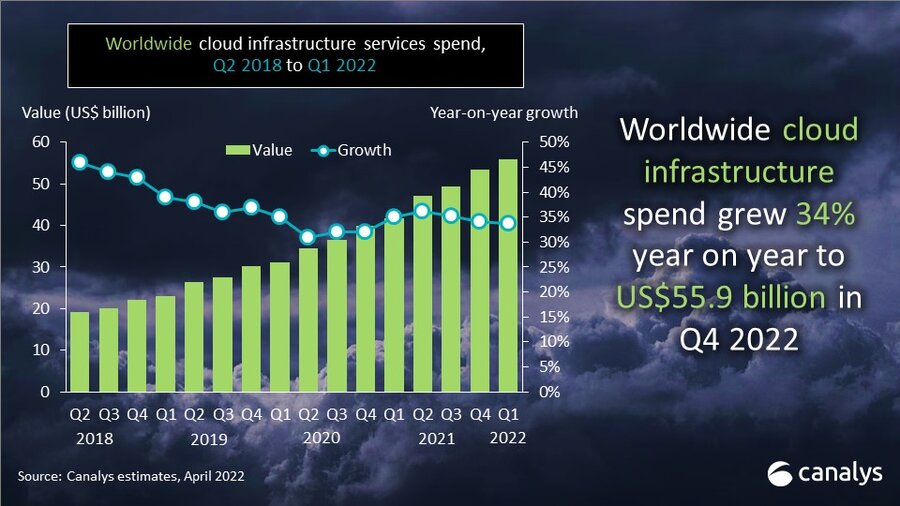

Canalys: Global cloud services spending +33% in Q2 2022 to $62.3B

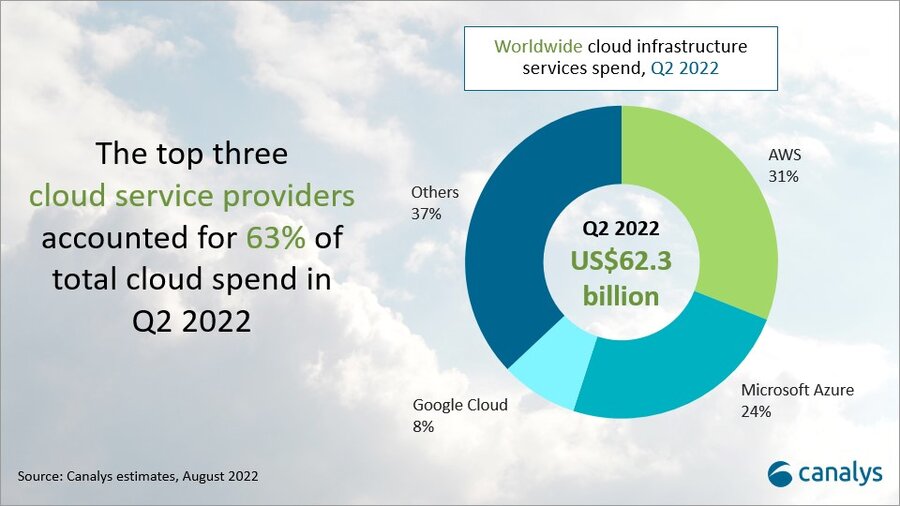

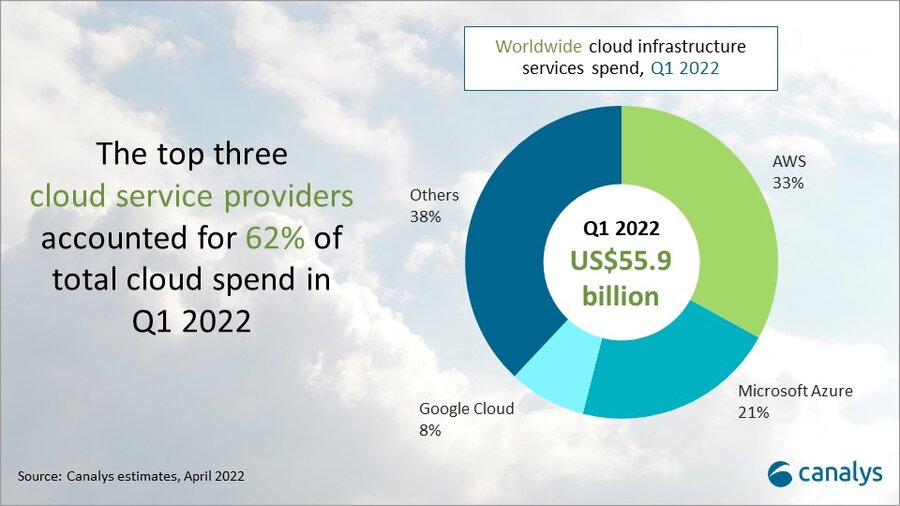

According to market research firm Canalys, cloud infrastructure services continued to be in high demand in Q2 2022. Worldwide cloud spending increased 33% year on year to US$62.3 billion, driven by a range of factors, including demand for data analytics and machine learning, data center consolidation, application migration, cloud-native development and service delivery. The growing use of industry-specific cloud applications also contributed to the broader horizontal use cases seen across IT transformation. The latest Canalys data shows expenditure was over US$6 billion more than in the previous quarter and US$15 billion more than in Q2 2021.

The top three vendors in Q2 2022, Amazon Web Services (AWS), Microsoft Azure and Google Cloud, together accounted for 63% of global spending and collectively grew 42%. The key to increasing global market share is continually growing and upgrading cloud data center infrastructure, which all three of the big cloud service providers are working on.

- AWS accounted for 31% of total cloud infrastructure services spend in Q2 2022, making it the leading cloud service provider. It grew 33% on an annual basis.

- Microsoft Azure was the second largest cloud service provider in Q2, with a 24% market share after growing 40% annually.

- Google Cloud grew 45% in the latest quarter and accounted for an 8% market share.
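The market shares above can be turned into approximate dollar figures using the Q2 2022 total of US$62.3 billion. This is a back-of-the-envelope sketch: Canalys does not publish these per-vendor dollar amounts in the text, the shares are rounded, and the prior-year total is inferred from the 33% growth rate.

```python
total_q2_2022_bn = 62.3  # worldwide cloud infrastructure services spend, Q2 2022 (US$bn)
yoy_growth = 0.33        # year-on-year growth of the total, as cited
shares = {"AWS": 0.31, "Microsoft Azure": 0.24, "Google Cloud": 0.08}


def implied_spend(total_bn, share_by_vendor):
    """Approximate spend per vendor implied by rounded market shares."""
    return {vendor: total_bn * share for vendor, share in share_by_vendor.items()}


spend = implied_spend(total_q2_2022_bn, shares)
top3_share = sum(shares.values())
prior_year_total_bn = total_q2_2022_bn / (1 + yoy_growth)

for vendor, amount in spend.items():
    print(f"{vendor}: about US${amount:.1f}bn")
print(f"top three combined share: {top3_share:.0%}")
print(f"implied Q2 2021 total: about US${prior_year_total_bn:.1f}bn")
```

The three shares sum to the 63% quoted for the top vendors, and the implied year-ago total is consistent with the statement that spending was about US$15 billion higher than in Q2 2021.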

In the next year, AWS plans to launch 24 new availability zones in eight regions, and Microsoft plans to launch 10 new cloud regions. Google Cloud, which accounted for 8% of Q2 cloud spend, recently announced Latin America expansion plans.

The hyperscale battle between leader AWS and challenger Microsoft Azure continues to intensify, with Azure closing the gap on its rival. Fueling this growth, Microsoft had a record number of larger multi-year deals in both the US$100 million-plus and US$1 billion-plus segments. Microsoft also said it will extend the depreciable useful life of its server and network equipment from four years to six, citing efficiency improvements in how it uses the technology.

A diverse go-to-market ecosystem, combined with a broad portfolio and a wide range of software partnerships, is enabling Microsoft to stay hot on the heels of AWS in the race to be #1 in cloud services.

“Cloud remains the strong growth segment in tech,” said Canalys VP Alex Smith. “While opportunities abound for providers large and small, the interesting battle remains right at the top between AWS and Microsoft. The race to invest in infrastructure to keep pace with demand will be intense and test the nerves of the companies’ CFOs as both inflation and rising interest rates create cost headwinds.”

Both AWS and Microsoft are continuing to roll out infrastructure. AWS has plans to launch 24 availability zones across eight regions, while Microsoft plans to launch 10 new regions over the next year. In both cases, the providers are increasing investment outside of the US as they look to capture global demand and ensure they can provide low-latency and high data sovereignty solutions.

“Microsoft announced it would extend the depreciable useful life of its server and network equipment from four to six years, citing efficiency improvements in how it is using technology,” said Smith. “This will improve operating income and suggests that Microsoft will sweat its assets more, which helps investment cycles as the scale of its infrastructure continues to soar. The question will be whether customers feel any negative impact in terms of user experience in the future, as some services will inevitably run on legacy equipment.”
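The effect on operating income can be illustrated with simple straight-line depreciation. This is a simplified sketch: the equipment cost is a hypothetical number (not a Microsoft figure), salvage value is assumed to be zero, and a real change in accounting estimate would be applied to the remaining book value of existing assets rather than to their original cost.

```python
def straight_line_annual(cost: float, useful_life_years: int, salvage: float = 0.0) -> float:
    """Annual straight-line depreciation expense."""
    return (cost - salvage) / useful_life_years


equipment_cost_m = 1_200.0  # hypothetical server/network fleet cost, in $m

old_expense = straight_line_annual(equipment_cost_m, 4)  # four-year useful life
new_expense = straight_line_annual(equipment_cost_m, 6)  # six-year useful life
reduction = 1 - new_expense / old_expense

print(f"annual expense at 4 years: ${old_expense:.0f}m")
print(f"annual expense at 6 years: ${new_expense:.0f}m")
print(f"reduction in annual expense: {reduction:.1%}")
```

Whatever the cost, moving from four to six years cuts the annual straight-line charge by one third, which is why the change lifts operating income in the near term while spreading the expense over more years.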

Beyond the capacity investments, software capabilities and partnerships will be vital to meet customers’ cloud demands, especially when considering the compute needs of highly specialized services across different verticals.

“Most companies have gone beyond the initial step of moving a portion of their workloads to the cloud and are looking at migrating key services,” said Canalys Research Analyst Yi Zhang. “The top cloud vendors are accelerating their partnerships with a variety of software companies to demonstrate a differentiated value proposition. Recently, Microsoft pointed to expanded services to migrate more Oracle workloads to Azure, which in turn are connected to databases running in Oracle Cloud.”

Canalys defines cloud infrastructure services as those that provide infrastructure-as-a-service and platform-as-a-service, either on dedicated hosted private infrastructure or shared public infrastructure. This excludes software-as-a-service expenditure directly, but includes revenue generated from the infrastructure services being consumed to host and operate them.


Dish Network & Nokia: world’s first 5G SA core network deployed on public cloud (AWS)

Dish Network is just a month into the commercial launch of its cloud-native 5G core network, but is already planning how it will expand that architecture to take advantage of multicloud and hybrid cloud environments.

During a Dish-Nokia fireside chat this Tuesday (sponsored by Nokia) on LinkedIn, Jitin Bhandari, CTO and VP, Cloud and Network Services at Nokia, interviewed Sidd Chenumolu, VP of technology development and network services at Dish Wireless, who provided some insight into the carrier’s current use of Amazon Web Services (AWS) public cloud resources.

Chenumolu said Dish’s 5G core was currently using three of AWS’ four public regions, was deployed in “multiple availability zones and almost all the local zones, but most were deployed with Nokia applications across AWS around the country.”

[AWS Outposts GM Joshua Burgin had previously explained to SDxCentral that Dish would be using a mixture of AWS Regions, Local Zones, and Outposts, specifically the smaller form factor AWS Outposts servers, to power its network. This includes the deployment of single 1U Outpost servers, some with an accelerator card, to run network functions in single-digit milliseconds at cell sites, he said in a phone interview.]

AWS Local Zones, which are built on Outpost racks and span 15 locations around the U.S., some of which were deployed to meet Dish’s demands, run Dish’s less latency-sensitive functions, Burgin explained. Dish’s operations and business support systems will run on AWS Regions.
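The three-tier split Burgin describes can be summarized as a small lookup. This is only an illustrative sketch of the placement as reported above: the workload class names are hypothetical labels introduced here, and nothing in it reflects Dish's or AWS's actual tooling or APIs.

```python
from enum import Enum


class Tier(Enum):
    OUTPOSTS_SERVER = "AWS Outposts server at the cell site"
    LOCAL_ZONE = "AWS Local Zone"
    REGION = "AWS Region"


# Hypothetical workload classes; the mapping follows the description in the text.
PLACEMENT = {
    "latency_critical_network_function": Tier.OUTPOSTS_SERVER,    # single-digit ms at cell sites
    "less_latency_sensitive_network_function": Tier.LOCAL_ZONE,
    "oss_bss": Tier.REGION,                                       # operations/business support systems
}


def place(workload_class: str) -> Tier:
    """Return the deployment tier for a workload class, per the reported split."""
    try:
        return PLACEMENT[workload_class]
    except KeyError:
        raise ValueError(f"unknown workload class: {workload_class!r}") from None


for name in PLACEMENT:
    print(f"{name} -> {place(name).value}")
```

The point of the split is that only functions with the tightest latency budgets need hardware at the cell site. Everything else can run further from the radio, where capacity is cheaper to pool.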

“How do we deploy 5G SA core network on multi-cloud,” Chenumolu asked but did not answer. He then started to turn the tables and interview Bhandari via a series of questions.

Chenumolu did not provide an update on Dish’s use of AWS’ Wavelength platform, which the cloud giant initially launched in partnership with Verizon to marry the network operators’ 5G networks with AWS’ edge compute service. Burgin had previously stated that support “could come down the line.”

The usual hype and back slapping/praise with glib expressions like “disintegrated disruptor, uncharted territory, automate learning with AI, cloud RAN,” etc. characterized the session.

References:

https://www.linkedin.com/video/event/urn:li:ugcPost:6945794807772438528/

https://www.sdxcentral.com/articles/news/dish-eyes-5g-multicloud-hybrid-cloud-expansion/2022/07/

AWS, Microsoft Azure, Google Cloud account for 62% – 66% of cloud spending in 1Q-2022

New data from Synergy Research Group shows that Q1 enterprise spending on cloud infrastructure services was approaching $53 billion. That is up 34% from the first quarter of 2021, making it the eleventh time in twelve quarters that the year-on-year growth rate has been in the 34-40% range.

To the surprise of no one, Amazon AWS continues to lead with its worldwide market share remaining at 33%. For the third consecutive quarter its annual growth came in above the growth of the overall market.

Microsoft Azure continues to gain almost two percentage points of market share per year while Google Cloud’s annual market share gain is approaching one percentage point.

In aggregate all other cloud providers have grown their revenues by over 150% since the first quarter of 2018, though their collective market share has plunged from 48% to 36% as their growth rates remain far below the market leaders.
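Revenue growth and a shrinking share can coexist only if the overall market grew faster, and the figures above imply by how much. The sketch below treats "over 150%" as exactly 150% (a 2.5x multiple), so its outputs are lower bounds. It also assumes everything outside the "all other" group belongs to the market leaders. `implied_growth` is a helper name introduced for this example.

```python
def implied_growth(others_multiple: float, others_share_start: float, others_share_end: float):
    """Return (total market multiple, leaders' revenue multiple) implied by the inputs.

    market = others_revenue / others_share, so the market multiple is the
    others' revenue multiple scaled by the ratio of their start and end shares.
    """
    market_multiple = others_multiple * others_share_start / others_share_end
    leaders_multiple = market_multiple * (1 - others_share_end) / (1 - others_share_start)
    return market_multiple, leaders_multiple


# Figures from the text: +150% revenue (2.5x), share down from 48% to 36% since Q1 2018.
market_x, leaders_x = implied_growth(2.5, 0.48, 0.36)
print(f"implied total market growth: at least {market_x:.2f}x")
print(f"implied growth for the leaders' group: at least {leaders_x:.2f}x")
```

Under these assumptions the total market grew by a bit more than 3.3x over the period and the leaders' combined revenue by about 4.1x, which is how the other providers could more than double revenue and still lose twelve points of share.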

Synergy estimates that quarterly cloud infrastructure service revenues (including IaaS, PaaS and hosted private cloud services) were $52.7 billion, with trailing twelve-month revenues reaching $191 billion. Public IaaS and PaaS services account for the bulk of the market and those grew by 37% in Q1. The dominance of the major cloud providers is even more pronounced in public cloud, where the top three control 71% of the market. Geographically, the cloud market continues to grow strongly in all regions of the world.

“While the level of competition remains high, the huge and rapidly growing cloud market continues to coalesce around Amazon, Microsoft and Google,” said John Dinsdale, a Chief Analyst at Synergy Research Group. “Aside from the Chinese market, which remains totally dominated by local Chinese companies, other cloud providers simply cannot match the scale and geographic reach of the big three market leaders. As Amazon, Microsoft and Google continue to grow at 35-50% per year, other non-Chinese cloud providers are typically growing in the 10-20% range. That can still be an attractive proposition for those smaller providers, as long as they focus on regional or service niches where they can differentiate themselves from the big three.”

…………………………………………………………………………………………………………………..

Separately, Canalys estimates global cloud infrastructure services spending increased 34% to US$55.9 billion in Q1 2022, as organizations prioritized digitalization strategies to meet market challenges. That was over US$2 billion more than in the previous quarter and US$14 billion more than in Q1 2021.
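As a quick consistency check on the Canalys numbers, the Q1 2021 base can be backed out from the growth rate. This is a minimal sketch using only the two figures quoted above (34% growth and US$55.9 billion); the helper name is hypothetical and the inputs are rounded, so the output is approximate.

```python
def prior_period_value(current, growth_rate):
    """Back out the earlier value from the current value and the growth rate."""
    return current / (1 + growth_rate)


q1_2022 = 55.9  # US$ billions, as reported by Canalys
q1_2021 = prior_period_value(q1_2022, 0.34)
increase = q1_2022 - q1_2021

print(f"Implied Q1 2021 spending: about US${q1_2021:.1f} billion")
print(f"Implied year-on-year increase: about US${increase:.1f} billion")
```

The implied increase of a little over US$14 billion lines up with the "US$14 billion more than in Q1 2021" stated in the text.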

The top three cloud service providers have benefited from increased adoption and scale, collectively growing 42% year on year and accounting for 62% of global customer spend.

Cloud-enabled business transformation has become a priority as organizations face global supply chain issues, cybersecurity threats and geopolitical instability. Organizations of all sizes and vertical markets are turning to cloud to ensure flexibility and resilience in the face of these challenges.

SMBs, in particular, have driven investment in cloud infrastructure services to support workload migration, data storage services and cloud-native application development. At the same time, infrastructure hardware shortages and the threat of further price inflation have spurred many large enterprises to invest in large-scale, multi-year cloud contracts to lock in upfront discounts with the hyperscalers.

All the major cloud providers have seen a significant increase in order backlogs as a result, which now total several hundred billion dollars worldwide. This in turn is driving the importance of cloud marketplaces as a sales channel for third-party software and security, as businesses seek to burn down these cloud commitments, further fueling infrastructure consumption.

“Cloud has continued to be a hot market and transformation strategies are emphasizing digital resiliency to face the market challenges of today and tomorrow,” said Canalys Research Analyst Blake Murray. “To be effective in resiliency planning, customers are turning to channel partners with the technical and consulting skills to help them effectively embrace hyper-scaler cloud services.”

Top cloud partners are doubling down on certification efforts and skills recruitment around hyper-scaler cloud services.

Global systems integrators, including Accenture, Atos, Deloitte, HCL Technologies, TCS, Kyndryl, Tech Mahindra and Wipro, are building practices with tens of thousands of cloud engineers and consultants. This has also included acquisitions of cloud application development and migration specialists, as well as the launch of new dedicated cloud services brands.

Smaller consultants, resellers, service providers and distributors are pursuing similar strategies as mid-market and SMB customers also demand support with cloud adoption.

“As the use cases for cloud infrastructure services expand so does the potential complexity, and we see that hybrid and multi-cloud deployments are commonplace in the market,” said Canalys Research Analyst Yi Zhang. “The hyperscalers are investing in rapid channel development and partners are responding as the opportunities grow.”

…………………………………………………………………………………………………………….

About Synergy Research Group:

Synergy provides quarterly market tracking and segmentation data on IT and Cloud related markets, including vendor revenues by segment and by region. Market shares and forecasts are provided via Synergy’s uniquely designed online database SIA ™, which enables easy access to complex data sets. Synergy’s Competitive Matrix ™ and CustomView ™ take this research capability one step further, enabling our clients to receive on-going quantitative market research that matches their internal, executive view of the market segments they compete in.

About Canalys:

Canalys is an independent analyst company that strives to guide clients on the future of the technology industry and to think beyond the business models of the past. We deliver smart market insights to IT, channel and service provider professionals around the world. We stake our reputation on the quality of our data, our innovative use of technology and our high level of customer service.

References:

https://www.canalys.com/newsroom/global-cloud-services-Q1-2022

………………………………………………………………………………………………………………………………………..

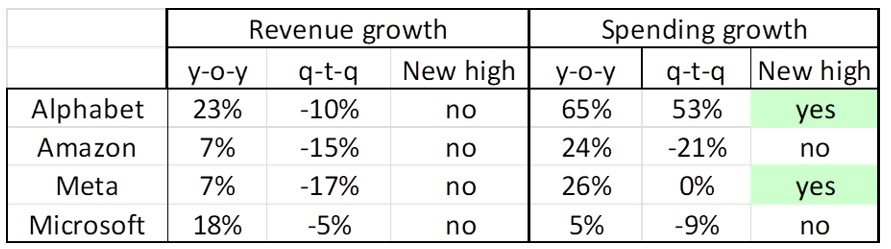

May 6, 2022 Update from Light Counting:

ICPs (Internet Cloud Providers) have grown spending at double-digit rates year-over-year for many quarters, and Q1 2022 looks like it will be no exception: the combined spending of Alphabet, Amazon, Meta, and Microsoft increased 29% versus Q1 2021. What is surprising is that Alphabet, not Meta, showed the fastest growth, with a 65% increase to more than $9.5 billion, a new record. Alphabet’s big increase was not fueled by spending on infrastructure, however, but by the closing of purchases of office facilities in New York, London, and Poland, which the company said added $4 billion to total spending in the quarter. We expect Alphabet’s Q2 capex will return from the stratosphere to the $5 billion range it has been running at. If Alphabet’s real estate spending is removed, Q1 capex for the group of four was up only 15% compared to Q1 2021, at the low end of the typical range for the Top 15 ICPs. While ICP spending appears on track to continue growing at double-digit rates this year, Q1 revenues were decidedly ‘off’ for the four majors that have reported, with no records set, and two of the four (Amazon and Meta) growing sales at only single-digit rates y-o-y.
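The two growth rates quoted above (29% with Alphabet’s real estate purchases, 15% without) together with the $4 billion one-off are enough to back out a rough group total. The sketch below is an inference from those rounded figures, not a number published by Light Counting, and the function name is illustrative only; because the inputs are rounded, the result is sensitive to small changes.

```python
def implied_group_capex(growth_with, growth_without, one_off):
    """Solve for prior-year and current capex given growth with and
    without a one-off item.

    current           = prior * (1 + growth_with)
    current - one_off = prior * (1 + growth_without)
    =>  prior = one_off / (growth_with - growth_without)
    """
    prior = one_off / (growth_with - growth_without)
    current = prior * (1 + growth_with)
    return prior, current


# Figures in US$ billions, taken from the paragraph above.
prior, current = implied_group_capex(0.29, 0.15, 4.0)
print(f"Implied Q1 2021 capex for the four: about ${prior:.1f} billion")
print(f"Implied Q1 2022 capex for the four: about ${current:.1f} billion")

# Alphabet alone: reported capex of more than $9.5 billion less the real estate.
alphabet_ex_real_estate = 9.5 - 4.0
print(f"Alphabet capex excluding real estate: about ${alphabet_ex_real_estate:.1f} billion")
```

The Alphabet figure excluding real estate lands close to the "$5 billion range" the text expects for Q2.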

The Cloud services revenues of Alphabet, Amazon, and Microsoft continued to grow faster than overall company sales, increasing 44%, 37%, and 17% respectively.

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Network equipment makers’ sales in Q1 2022 declined by 1% y-o-y in aggregate among the companies that have reported, but this figure belies the fact that individual company growth rates ranged from strong double digits (Adtran, ADVA) through middling single digits (Ericsson Networks, Infinera, ZTE) to sales declines (Nokia Networks, Ribbon Communications).

Five Chinese optical transceiver vendors have reported Q1 results, and four of them showed strong growth: HG Tech, Innolight, Accelink, and Eoptolink. CIG was negatively impacted by shutdowns in both Shanghai and Shenzhen, which affected its ability to fulfill orders.

Among U.S.-based optical component makers, NeoPhotonics reported Q1 2022 revenue of $89 million, up 47% year-over-year, with 400G and above products growing 70% y-o-y to $54 million. The company is now shipping production volumes of 400ZR modules to cloud and data center customers.

Two years after the start of the COVID-19 pandemic, the effects of the COVID mitigation measures continue to disrupt manufacturing, shipping, and sales in the optical industry. Several companies warned that shortages and higher component and shipping costs would persist or even worsen as 2022 progresses. Finally, costs from Russia’s invasion of Ukraine and subsequent withdrawals from the Russian telecoms market are starting to become known, ranging from $5 million (Infinera) to 900 million euros (Ericsson).

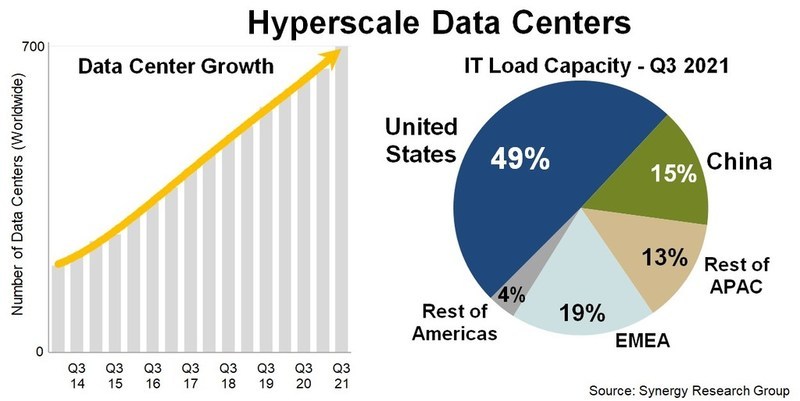

U.S. 4 big cloud companies lead world in hyperscale data centers and capacity

The U.S. is expected to keep its lead in total hyperscale data centers and worldwide capacity through at least 2026, according to Synergy Research Group. “The United States currently accounts for almost 40% of operational hyperscale data centers and half of all worldwide capacity,” John Dinsdale, chief analyst at Synergy Research Group, wrote in a new report. Synergy’s new hyperscale forecasts show continued rapid growth in the number of large data centers to be used by hyperscale operators in order to support their ever-expanding business operations.

With a current known pipeline of 314 future new hyperscale data centers, the installed base of operational data centers will pass the 1,000 mark in three years’ time and continue growing rapidly thereafter.

By a wide margin, the United States is also the country with the most data centers in the future pipeline, followed by China, Ireland, India, Spain, Israel, Canada, Italy, Australia and the UK.

As the installed base of operational data centers continues to grow each year at double-digit percentage rates, the capacity of those data centers will grow even more rapidly as the average size increases and older facilities are expanded.

By data center capacity the leading companies are the usual suspects: Amazon, Microsoft, Google and Facebook, though it is the Chinese hyperscalers that are growing the fastest, most notably ByteDance, Alibaba and Tencent.

The companies that feature most heavily in the future new data center pipeline are Amazon, Microsoft, Facebook and Google. Each has 60 or more data center locations with at least three in each of the four regions – North America, APAC, EMEA and Latin America. Oracle, Alibaba and Tencent also have a notably broad data center presence.

“The future looks bright for hyperscale operators, with double-digit annual growth in total revenues supported in large part by cloud revenues that will be growing in the 20-30% per year range. This in turn will drive strong growth in capex generally and in data center spending specifically,” said John Dinsdale, a Chief Analyst at Synergy Research Group.

“While we see the geographic distribution, build-versus-lease distribution, average data center size and spending mix by data center component all continuing to evolve, we predict continued rapid growth throughout the hyperscale data center ecosystem. Companies who can successfully target that ecosystem with their product offerings have plenty of reasons for optimism.”

Synergy’s Hyperscale Market Tracker research service provides key data and metrics on 19 companies that meet Synergy’s hyperscale definition criteria. The data includes information on the hyperscale data center footprint, a full data center listing, analysis of critical IT load, future data center pipeline, hyperscale operator capex, hyperscale data center spending, company revenues, and five-year forecasts for the key metrics.

Synergy Research Group tracks the data center footprints and expansion plans of 19 of the world’s largest cloud and internet service companies, including the largest providers of infrastructure, platforms, software, search, social media, e-commerce, and gaming.

About Synergy Research Group:

Synergy Research Group helps marketing and strategic decision makers around the world via its syndicated market research programs and custom consulting projects. For nearly two decades, Synergy has been a trusted source for quantitative research and market intelligence.

References:

Synergy Research: Hyperscale Operator Capex at New Record in Q3-2020

Synergy Research: Strong demand for Colocation with Equinix, Digital Realty and NTT top providers

Synergy Research: Ethernet Switch & Router revenues drop to 7 year low in Q1-2020

Synergy Research: Cloud Service Provider Rankings (See Comments for Details)

Gartner: AWS, Azure, and Google Cloud top rankings for Cloud Infrastructure and Platform Services

Gartner’s latest Magic Quadrant report for cloud infrastructure and platform services (CIPS) ranks Amazon Web Services (AWS), Microsoft Azure, and Google Cloud as the top cloud service providers.

Beyond the top three players, Gartner placed Alibaba Cloud in the “visionaries” box, and ranked Oracle, Tencent Cloud, and IBM as “niche players,” in that order.

The scope of Gartner’s Magic Quadrant for CIPS includes infrastructure as a service (IaaS) and integrated platform as a service (PaaS) offerings. These include application PaaS (aPaaS), functions as a service (FaaS), database PaaS (dbPaaS), application developer PaaS (adPaaS) and industrialized distributed cloud offerings that are often deployed in enterprise data centers (i.e. private clouds).

Figure 1: Magic Quadrant for Cloud Infrastructure and Platform Services

……………………………………………………………………………………………..

1. Gartner analysts praise Amazon AWS for its broad support of IT services, including cloud native, edge compute, and processing mission-critical workloads. Also noteworthy is Amazon’s “engineering prowess” in designing CPUs and silicon. This focus on owning increasingly larger portions of the supply chain for cloud infrastructure bolsters the No. 1 cloud provider’s long-term outlook and earns it advantages against competitors, according to the Gartner report.

“AWS often sets the pace in the market for innovation, which guides the roadmaps of other CIPS providers. As the innovation leader, AWS has materially more mind share across a broad range of personas and customer types than all other providers,” the analysts wrote.

AWS, which recently achieved $59 billion in annual revenues, contributed 13% of Amazon’s total revenue and almost 54% of its profit during second-quarter 2021.

AWS’s future focus is on owning increasingly larger portions of the supply chain used to deliver cloud services to customers. Its operations are geographically diversified, and its clients range from early-stage startups to large enterprises.

……………………………………………………………………………………

2. Microsoft Azure, which remains the #2 Cloud Services Provider, sports a 51% annual growth rate. It earned praise from Gartner for its strength “in all use cases, which include the extended cloud and edge computing,” particularly among Microsoft-centric organizations.

The No. 2 public cloud provider also enjoys broad appeal. “Microsoft has the broadest set of capabilities, covering a full range of enterprise IT needs from SaaS to PaaS and IaaS, compared to any provider in this market,” the analysts wrote.

From the perspective of IaaS and PaaS, Microsoft has compelling capabilities ranging from developer tooling such as Visual Studio and GitHub to public cloud services.

Enterprises often choose Azure because of the trust in Microsoft built over many years.

“Strategic alignment with Microsoft gives Azure advantages across nearly every vertical market,” Gartner said. However, Gartner criticized Microsoft for very complex licensing and contracting. Also, Microsoft sales pressures to grow overall account revenue prevent it from effectively deploying Azure to bring down a customer’s total Microsoft costs.

Microsoft Azure’s forays in operational databases and big data solutions have been markedly successful over the past year. Azure’s Cosmos DB and its joint offering with Databricks stand out in terms of customer adoption.

………………………………………………………………………………………

3. Google Cloud Platform (GCP) is strong in nearly all use cases and is slowly improving its edge compute capabilities. Google continues to invest in being a broad-based provider of IaaS and PaaS by expanding its capabilities as well as the size and reach of its go-to-market operations. Its operations are geographically diversified, and its clients range from startups to large enterprises.

The company is making gains in mindshare among enterprises and “lands at the top of survey results when infrastructure leaders are asked about strategic cloud provider selection in the next few years,” Gartner analysts wrote. Google is also closing “meaningful gaps with AWS and Microsoft Azure in CIPS capabilities,” and outpacing its larger competitors in some cases, according to the report.

The analysts also noted that Google Cloud “is the only CIPS provider with significant market share that currently operates at a financial loss.” The No. 3 public cloud provider reported a 54% year-over-year revenue increase and a 59% decrease in operating losses during Q2.

………………………………………………………………………………..

Separately, Dell’Oro Group Research Director Baron Fung recently said that hyperscalers make up a big portion of the overall IT market, with the 10 largest cloud-service providers, including AWS, Google, and Alibaba, accounting for up to 40% of global data center spending, and “some of these companies can have really tremendous weight on the ecosystem.”

The Dell’Oro report noted that some providers have deployed accelerated servers using internally developed artificial intelligence (AI) chips, while other cloud providers and enterprises have commonly deployed solutions based on graphics processing units (GPUs) and FPGAs.

Fung explained that this model has also led those cloud providers to build their own servers and networking equipment to better fit their needs while “moving away from the traditional model in which users are buying equipment from companies like Dell and [Hewlett Packard Enterprise]. … It’s really disrupting the vendor landscape.”

Certain applications—such as cloud gaming, autonomous driving, and industrial automation—are latency-sensitive, requiring Multi-Access Edge Compute, or MEC, nodes to be situated at the network edge, where sensors are located. Unlike cloud computing, which has been replacing enterprise data centers, edge computing creates new market opportunities for novel use cases.

…………………………………………………………………………………

References:

https://www.gartner.com/doc/reprints?id=1-26YXE86I&ct=210729&st=sb

AWS deployed in Digital Realty Data Centers at 100Gbps & for Bell Canada’s 5G Edge Computing

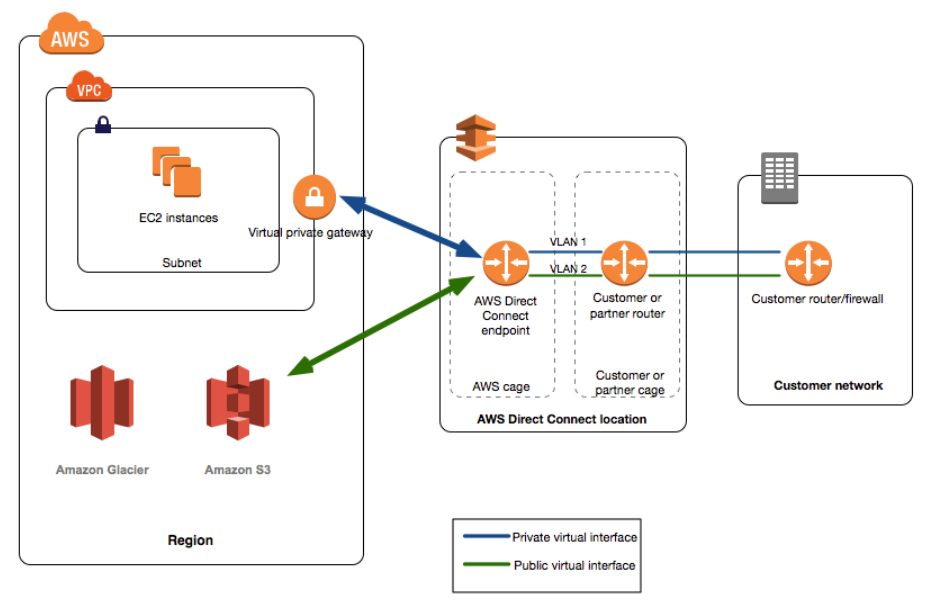

I. Digital Realty, the largest global provider of cloud- and carrier-neutral data center, colocation and interconnection solutions, announced today the deployment of Amazon Web Services (AWS) Direct Connect 100Gbps capability at the company’s Westin Building Exchange in Seattle, Washington and on its Interxion Dublin Campus in Ireland, bringing one of the fastest AWS Direct Connect [1.] capabilities to PlatformDIGITAL®. Digital Realty’s platform connects 290 centers of data exchange with over 4,000 participants around the world, enabling enterprise customers to scale digital business and interconnect distributed workflows on a first of its kind global data center platform.

Note 1. AWS Direct Connect is a cloud service solution that makes it easy to establish a dedicated network connection from your premises to AWS. This can increase bandwidth throughput and provide a more consistent network experience than internet-based connections.

……………………………………………………………………………………………………………………………….

As organizations bring on new technologies and solutions such as artificial intelligence (AI) and IoT at scale, the explosive growth of digital business is posing new challenges, as data takes on its own gravity, becoming heavier, denser, and more expensive to move.

The new AWS Direct Connect 100Gbps is tailored to providing easy access to larger data sets, enabling high availability, reliability and lower latency. As a result, customers will be able to move bandwidth-heavy workloads seamlessly – and break through the barriers posed by data gravity. Customers gain access to strategic IT infrastructure that can aggregate and maintain data with less design time and spend, enabling access to AWS with one of the fastest and highest quality AWS network connections available.
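To put the "data gravity" point in concrete terms, the time needed to move a large data set scales inversely with link speed. The sketch below is a back-of-the-envelope calculation only: the 1 petabyte data set size and the `efficiency` parameter are hypothetical illustrations, and the model ignores protocol overhead, congestion and storage throughput limits.

```python
def transfer_time_hours(dataset_bytes, link_gbps, efficiency=1.0):
    """Idealized time to move a data set over a dedicated link.

    dataset_bytes: size of the data set in bytes
    link_gbps:     nominal link speed in gigabits per second
    efficiency:    fraction of nominal speed actually achieved
                   (hypothetical knob; 1.0 means a perfectly used link)
    """
    bits = dataset_bytes * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


PETABYTE = 10**15  # hypothetical data set size, chosen for illustration

for gbps in (1, 10, 100):
    hours = transfer_time_hours(PETABYTE, gbps)
    print(f"{gbps:>3} Gbps: about {hours:,.1f} hours ({hours / 24:,.1f} days)")
```

Under these idealized assumptions, a petabyte that would occupy a 10 Gbps link for more than nine days moves in under a day at 100 Gbps, which is the kind of difference that makes bandwidth-heavy hybrid workloads practical.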

As an AWS Outposts Ready Partner, Digital Realty’s global platform is optimized to support the needs of data-intensive, secure hybrid IT deployments. Digital Realty supports AWS Outposts deployments by enabling access to more than 40 AWS Direct Connect locations globally to address local processing, compliance, and storage requirements, while optimizing cost and performance. When coupled with the availability of AWS Direct Connect 100Gbps connections, the Westin Building Exchange and Interxion Dublin campuses become ideal meeting places for customers to tackle data gravity challenges and unlock new opportunities with their AWS Outposts deployments.

“As emerging technologies such as AI, VR and blockchain move from the margins to the mainstream, enterprises need new levels of performance from their hybrid solutions,” said Tom Sly, General Manager, AWS Direct Connect. “Deploying AWS Direct Connect at 100Gbps at Digital Realty facilities in Seattle and Dublin is critical to our strategy of helping customers build more sophisticated applications with increased flexibility, scalability and reliability. We’re excited to see the value Digital Realty’s PlatformDIGITAL® delivers for our mutual customers.”

The Westin Building Exchange serves as a primary interconnection hub for the Pacific Northwest, linking Canada, Alaska and Asia along the Pacific Rim. The building is one of the most densely interconnected facilities in North America, and is home to leading global cloud, content and interconnection providers, housing over 150 carriers and more than 10,000 cross-connects, giving Amazon customers low-latency access to the largest companies and services representing the digital economy. The 34-story tower is adjacent to Amazon’s existing 4.1 million square foot campus in Seattle.

Digital Realty offers six colocation data centers in the Irish capital, which forms a strategic bridge between Europe and the U.S. Ireland has particular significance as a global trading hub and provides the headquarters location for several global multinationals within the software, finance and life science industries. Multiple transatlantic cables also land in Ireland before continuing to the UK or continental Europe, making Interxion Dublin a prime location for the new AWS Direct Connect 100Gbps at the heart of a vibrant connected data community.

“Today’s announcement of the opening of AWS Direct Connect 100Gbps on-ramps significantly expands opportunities for customers to scale their digital transformation through our global PlatformDIGITAL®,” added Digital Realty Chief Technology Officer Chris Sharp. “AWS serves some of the world’s most innovative and demanding customers, from start-up to enterprise, that are looking to drive the digital economy forward. Our platform expands the coverage, capacity, and next-generation connectivity that AWS customers need to extend workloads to the cloud rapidly. We are honored to open up next-generation access in collaboration with AWS and specifically at the heart of the rich digital communities at the Westin Building Exchange and on our Interxion Dublin campus.”

The new deployments create centers of data exchange in Network Hubs deployed on PlatformDIGITAL®, enabling distributed workflows to be rapidly scaled and securely interconnected – reducing operating costs, enhancing visibility, saving time and improving compliance. The new capability also gives AWS customers instant access to a growing list of powerful AWS services such as Blockchain, Machine Learning, IoT and countless others – all over a direct, private connection optimized for high performance and security.

AIB, Inc., a leading data exchange and management firm with a software as a service platform deployed at over 1,600 automotive industry customers, recognized the value of deploying a physical Network Hub on PlatformDIGITAL® coupled with a virtual direct interconnection to AWS to enable flexibility in its hybrid IT environment.

“Our Texas-based operations required new cloud zone diversity solutions for our cloud native national vision. Digital Realty provided an innovative and comprehensive solution for AWS cloud access through PlatformDIGITAL®,” said Kellen Dunham, CTO, AIB, Inc.

Digital Realty’s global platform enables low-latency access to both the nearest AWS Region as well as a wide array of options to connect edge deployments or devices. Customers can securely connect to their desired AWS Region using both physical and virtual connectivity options. Globally, PlatformDIGITAL® offers access to more than 40 AWS Direct Connect locations, including 11 in EMEA, providing secure, high-performance access to numerous AWS Outposts-Ready data centers around the world. In addition, the Digital Realty Internet Exchange (DRIX) supports AWS Direct Peering capabilities and dedicated access to multiple third-party Internet Exchanges on PlatformDIGITAL®, providing a direct path from on-premise networks to AWS. The solution is part of PlatformDIGITAL®’s robust and expanding partner community that solves hybrid IT challenges for the enterprise.

About Digital Realty:

Digital Realty supports the world’s leading enterprises and service providers by delivering the full spectrum of data center, colocation and interconnection solutions. PlatformDIGITAL®, the company’s global data center platform, provides customers a trusted foundation and proven Pervasive Datacenter Architecture (PDx™) solution methodology for scaling digital business and efficiently managing data gravity challenges. Digital Realty’s global data center footprint gives customers access to the connected communities that matter to them with 290 facilities in 47 metros across 24 countries on six continents. To learn more about Digital Realty, please visit digitalrealty.com or follow us on LinkedIn and Twitter.

Additional Resources:

- For more information on locations and availability please visit www.digitalrealty.com/cloud/aws-direct-connect

- Learn about Digital Realty’s Data Hub featuring AWS Outposts solution for data localization and compliance on PlatformDIGITAL

- Explore global coverage options on PlatformDIGITAL®

- Read the AIB case study on deploying hybrid IT flexibly with Digital Realty and AWS

………………………………………………………………………………………………………………………………………

II. Bell Canada today announced it has entered into an agreement with Amazon Web Services, Inc. (AWS) to modernize the digital experience for Bell customers and support 5G innovation across Canada. Bell will use the breadth and depth of AWS technologies to create and scale new consumer and business applications faster, as well as enhance how its voice, wireless, television and internet subscribers engage with Bell services and content such as streaming video. In addition, AWS and Bell are teaming up to bring AWS Wavelength to Canada, deploying it at the edge of Bell’s 5G network to allow developers to build ultra-low-latency applications for mobile devices and users. With this rollout, Bell will become the first Canadian communications company to offer AWS-powered multi-access edge computing (MEC) to business and government users.

“Bell’s partnership with AWS further heightens both our 5G network leadership and the Bell customer experience with greater automation, enhanced agility and streamlined service options. Together, we’ll provide the next-generation service innovations for consumers and business customers that will support Canada’s growth and prosperity in the years ahead,” said Mirko Bibic, President and CEO of BCE and Bell Canada. “With this first in Canada partnership to deploy AWS Wavelength at the network edge, where 5G’s high capacity, unprecedented speed and ultra low latency are crucial for next-generation applications, Bell and AWS are opening up all-new opportunities for developers to enhance our customers’ digital experiences. As Canada recovers from COVID-19 and looks forward to the economic, social and sustainability advantages of 5G, Bell is moving rapidly to expand the country’s next-generation network infrastructure capabilities. Bell’s accelerated capital investment plan, supported by government and regulatory policies that encourage significant investment and innovation in network facilities, will double our 5G coverage this year while growing the high-capacity fibre connections linking our national network footprint.”

The speed and increased bandwidth capacity of the Bell 5G network support applications that can respond much more quickly and handle greater volumes of data than previous generations of wireless technology. Through its relationship with AWS, Bell will leverage AWS Wavelength to embed AWS compute and storage services at the edge of its 5G telco networks so that application developers can serve edge computing workloads like machine learning, IoT, and content streaming. Bell and AWS will move 5G data processing to the network edge to minimize latency and power customer-led 5G use cases such as immersive gaming, ultra-high-definition video streaming, self-driving vehicles, smart manufacturing, augmented reality, machine learning inference and distance learning throughout Canada. Developers will also have direct access to AWS’s full portfolio of cloud services to enhance and scale their 5G applications.

Optimized for MEC applications, AWS Wavelength minimizes the latency involved in sending data to and from a mobile device. AWS delivers the service through Wavelength Zones, which are AWS infrastructure deployments that embed AWS compute and storage services within a telecommunications provider’s datacenters at the edge of the 5G network so that data traffic can reach application servers within the zones without leaving the mobile provider’s network. Application data need only travel from the device to a cell tower to an AWS Wavelength Zone running in a metro aggregation site. This results in increased performance by avoiding the multiple hops between regional aggregation sites and across the internet that traditional mobile architectures require.

Outside of the AWS Wavelength deployment, Bell is also continuing to evolve its offerings to enhance its customers’ digital experiences. From streaming media to network performance to customer service, Bell will leverage AWS’s extensive portfolio of cloud capabilities to better serve its tens of millions of customers coast to coast. This work will allow Bell’s product innovation teams to streamline and automate processes as well as adapt more quickly to changing market conditions and customer preferences.

“As the first telecommunications company in Canada to provide access to AWS Wavelength, Bell is opening the door for businesses and organizations throughout the country to combine the speed of its 5G network with the power and versatility of the world’s leading cloud. Together, Bell and AWS are bringing the transformative power of cloud and 5G to users all across Canada,” said Andy Jassy, CEO of Amazon Web Services, Inc. “Cloud and 5G are changing the business models for telecommunications companies worldwide, and AWS’s unmatched infrastructure capabilities in areas like machine learning and IoT will enable leaders like Bell to deliver new digital experiences that will enhance their customers’ lives.”

Launched in June 2020, Bell’s 5G network is now available to approximately 35% of the Canadian population. On February 4, Bell announced it was accelerating its typical annual capital investment of $4 billion by an additional $1 billion to $1.2 billion over the next 2 years to rapidly expand its fibre, rural Wireless Home Internet and 5G networks. On May 31, it announced a further increase in capital spending of up to $500 million. With this accelerated capital investment plan, Bell’s 5G network is on track to reach approximately 70% of the Canadian population by year end.

5G will support a wide range of new consumer and business applications in coming years, including virtual and augmented reality, artificial intelligence and machine learning, connected vehicles, remote workforces, telehealth and Smart Cities, with unprecedented IoT opportunities for business and government. 5G is also accelerating the positive environmental impact of Bell’s networks. The Canadian Wireless Telecommunications Association estimates 5G technology can support 1000x the traffic at half of current energy consumption over the next decade, enhancing the potential of IoT and other next-generation technologies to support sustainable economic growth, and supporting Bell’s own objective to be carbon neutral across its operations in 2025.

About Bell Canada:

The Bell team builds world-leading broadband wireless and fiber networks, provides innovative mobile, TV, Internet and business communications services and delivers the most compelling content with premier television, radio, out of home and digital media brands. With a goal to advance how Canadians connect with each other and the world, Bell serves more than 22 million consumer and business customer connections across every province and territory. Founded in Montréal in 1880, Bell is wholly owned by BCE Inc. (TSX, NYSE: BCE). To learn more, please visit Bell.ca or BCE.ca.

Bell supports the social and economic prosperity of our communities with a commitment to the highest environmental, social and governance (ESG) standards. We measure our progress in increasing environmental sustainability, achieving a diverse and inclusive workplace, leading data governance and protection, and building stronger and healthier communities. This includes confronting the challenge of mental illness with the Bell Let’s Talk initiative, which drives mental health awareness and action with programs like the annual Bell Let’s Talk Day and Bell funding for community care, research and workplace programs nationwide all year round.

……………………………………………………………………………………………………………………………………..

Comment and Analysis:

AWS already has an edge compute footprint that covers parts of Asia, Europe and North America. AWS, Google Cloud and Microsoft Azure increasingly (and unsurprisingly) look like the real power brokers and empire builders in multi-access/mobile edge computing. Rogers and Telus, Bell’s two main rivals, will likely contract with one of the three big cloud service providers for their 5G edge computing needs.

References: