OpenAI

OpenAI raises $8.3B and is valued at $300B; AI speculative mania rivals Dot-com bubble

According to the Financial Times (FT), OpenAI (the maker of ChatGPT) has raised another $8.3 billion in a massively oversubscribed funding round, including $2.8 billion from Dragoneer Investment Group, a San Francisco-based technology-focused fund. Leading VCs that also participated in the round included Founders Fund, Sequoia Capital, Andreessen Horowitz, Coatue Management, Altimeter Capital, D1 Capital Partners, Tiger Global and Thrive Capital, according to people with knowledge of the deal.

The oversubscribed funding round came months ahead of schedule. OpenAI initially raised $2.5 billion from VC firms in March when it announced its intention to raise $40 billion in a round spearheaded by SoftBank. The ChatGPT maker is now valued at $300 billion.

OpenAI’s annual recurring revenue has surged to $12bn, according to a person with knowledge of OpenAI’s finances, and the group is set to release its latest model, GPT-5, this month.

OpenAI is in the midst of complex negotiations with Microsoft that will determine its corporate structure. Rewriting the terms of the pair’s current contract, which runs until 2030, is seen as a prerequisite to OpenAI simplifying its structure and eventually going public. The two companies have yet to agree on key issues such as how long Microsoft will have access to OpenAI’s intellectual property. Another sticking point is the future of an “AGI clause”, which allows OpenAI’s board to declare that the company has achieved a breakthrough in capability called “artificial general intelligence,” which would then end Microsoft’s access to new models.

OpenAI also faces increasing competition from rivals such as Anthropic, which is itself in talks for a multibillion-dollar fundraising, and it remains in a continuing legal battle with Elon Musk. The FT also reported that Amazon is set to increase its already massive investment in Anthropic.

OpenAI CEO Sam Altman. The funding forms part of a round announced in March that values the ChatGPT maker at $300bn © Yuichi Yamazaki/AFP via Getty Images

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

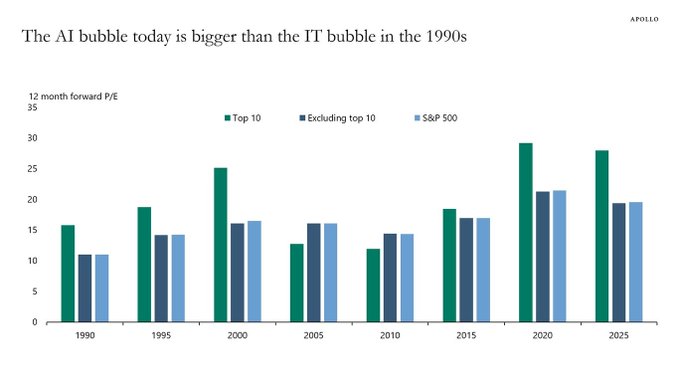

While AI is the transformative technology of this generation, comparisons are increasingly being made with the Dot-com bubble. 1999 saw such a speculative frenzy for anything with a ‘.com’ at the end that valuations and stock markets reached unrealistic and clearly unsustainable levels. When that speculative bubble burst, the global economy fell into an extended recession in 2001-2002. As a result, analysts are now questioning the wisdom of the current AI speculative bubble and fearing dire consequences when it eventually bursts. Just as with the Dot-com bubble, AI revenues are nowhere near justifying AI company valuations, especially for private AI companies that are losing large sums of money (see OpenAI losses detailed below).

Torsten Slok, Partner and Chief Economist at Apollo Global Management via ZERO HEDGE on X: “The difference between the IT bubble in the 1990s and the AI bubble today is that the top 10 companies in the S&P 500 today (including Nvidia, Microsoft, Amazon, Google, and Meta) are more overvalued than the IT companies were in the 1990s.”

AI private companies may take a lot longer to reach the lofty profit projections institutional investors have assumed. Their reliance on projected future profits over current fundamentals is a dire warning sign to this author. OpenAI, for example, faces significant losses and aggressive revenue targets to become profitable. OpenAI reported an estimated loss of $5 billion in 2024, despite generating $3.7 billion in revenue. The company is projected to lose $14 billion in 2026 while total projected losses from 2023 to 2028 are expected to reach $44 billion.

Other AI bubble data points (publicly traded stocks):

- The 10 largest companies now represent almost 40% of the S&P 500, compared with 25% in 1999. This indicates a more concentrated market driven by a few large technology companies deeply involved in AI development and adoption.

- Investment in AI infrastructure has reportedly exceeded the spending on telecom and internet infrastructure during the dot-com boom and continues to grow, suggesting a potentially larger scale of investment in AI relative to the prior period.

- Some indices tracking AI stocks have demonstrated exceptionally high gains in a short period, potentially surpassing the rates of the dot-com era, suggesting a faster build-up in valuations.

- The leading hyperscalers, such as Amazon, Microsoft, Google, and Meta, are investing vast sums in AI infrastructure to capitalize on the burgeoning AI market. Forecasts suggest these companies will collectively spend $381 billion in 2025 on AI-ready infrastructure, a significant increase from an estimated $270 billion in 2024.
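To put the last data point in proportion, the year-over-year change in hyperscaler spending can be worked out directly from the two figures quoted above. This is a minimal sketch: the variable and function names are illustrative, and the inputs are the forecast and estimate as reported, not audited figures.

```python
# Figures quoted in the text above, in billions of US dollars.
capex_2024 = 270.0  # estimated 2024 spend on AI-ready infrastructure
capex_2025 = 381.0  # forecast 2025 spend


def yoy_growth(previous: float, current: float) -> float:
    """Return year-over-year growth as a fraction of the previous value."""
    return (current - previous) / previous


increase = capex_2025 - capex_2024
growth = yoy_growth(capex_2024, capex_2025)

print(f"Absolute increase: ${increase:.0f}B")
print(f"Year-over-year growth: {growth:.1%}")
```

On these inputs the forecast implies an increase of $111 billion, or roughly 41% in a single year.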

Check out this YouTube video: “How AI Became the New Dot-Com Bubble”

References:

https://www.ft.com/content/76dd6aed-f60e-487b-be1b-e3ec92168c11

https://www.telecoms.com/ai/openai-funding-frenzy-inflates-the-ai-bubble-even-further

https://x.com/zerohedge/status/1945450061334216905

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

Huawei launches CloudMatrix 384 AI System to rival Nvidia’s most advanced AI system

Gen AI eroding critical thinking skills; AI threatens more telecom job losses

Dell’Oro: AI RAN to account for 1/3 of RAN market by 2029; AI RAN Alliance membership increases but few telcos have joined

OpenAI partners with G42 to build giant data center for Stargate UAE project

OpenAI, the maker of ChatGPT, said it was partnering with United Arab Emirates firm G42 and others to build a huge artificial-intelligence data center in Abu Dhabi, UAE. It will be the company’s first large-scale project outside the U.S. OpenAI and G42 said Thursday the data center would have a capacity of 1 gigawatt (1 GW) [1], putting it among the most powerful in the world. OpenAI and G42 didn’t disclose a cost for the huge data center, although similar projects planned in the U.S. run well over $10 billion.

………………………………………………………………………………………………………………………………………………………………………..

Note 1. 1 GW of continuous power is enough to run roughly one million top‑end Nvidia GPUs once cooling and power‑conversion overheads are included. Drawn continuously for a year, that is roughly the annual electricity used by a city the size of San Francisco or Washington.

“Think of 1MW as the backbone for a mid‑sized national‑language model serving an entire country,” Mohammed Soliman, director of the strategic technologies and cybersecurity programme at the Washington-based Middle East Institute think tank, told The National.
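The arithmetic behind the note can be sketched as follows. It assumes the full 1 GW is drawn continuously for a 365-day year and takes "roughly one million" GPUs at face value; the variable names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

capacity_watts = 1e9   # 1 GW, the stated capacity of the data center
gpu_count = 1_000_000  # "roughly one million" GPUs, per the note

# Implied all-in power budget per GPU, including cooling and
# power-conversion overheads.
watts_per_gpu = capacity_watts / gpu_count

# Energy drawn if the full 1 GW ran continuously for a year,
# expressed in terawatt-hours (1 TWh = 1e12 Wh).
annual_twh = capacity_watts * HOURS_PER_YEAR / 1e12

print(f"Power budget per GPU: {watts_per_gpu:.0f} W")
print(f"Annual energy at full load: {annual_twh:.2f} TWh")
```

Under these assumptions each GPU has a budget of about 1 kW, and the site would draw about 8.76 TWh per year at full load.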

………………………………………………………………………………………………………………………………………………………………………..

The project, called Stargate UAE, is part of a broader push by the U.A.E. to become one of the world’s biggest funders of AI companies and infrastructure—and a hub for AI jobs. The Stargate project is led by G42, an AI firm controlled by Sheikh Tahnoon bin Zayed al Nahyan, the U.A.E. national-security adviser and brother of the president. As part of the deal, an enhanced version of ChatGPT would be available for free nationwide, OpenAI said.

The first 200-megawatt chunk of the data center is due to be completed by the end of 2026, while the remainder of the project hasn’t been finalized. The buildings’ construction will be funded by G42, and the data center will be operated by OpenAI and tech company Oracle, G42 said. Other partners include global tech investor SoftBank, AI/GPU chip maker Nvidia and network-equipment company Cisco.

Data centers are grouped into three sizes: small, measuring up to about 1,000 square feet (93 square metres), medium, around 10,000 sqft to 50,000 sqft, and large, which are more than 50,000 sqft, according to Data Centre World. On a monthly basis, they are estimated to consume as much as 36,000kWh, 2,000MW and 10MW, respectively.

The UAE has at least 17 data centers, according to data compiled by industry tracker DataCentres.com. Photo credit: AFP

The data-center project is the fruit of months of negotiations between the Gulf petrostate and the Trump administration that culminated in a deal last week to allow the U.A.E. to import up to 500,000 AI chips a year, people familiar with the deal have said.

That accord overturned Biden administration restrictions that limited access to cutting-edge AI chips to only the closest of U.S. allies, given concerns that the technology could fall into the hands of adversaries, particularly China.

To convince the Trump administration it was a reliable partner, the U.A.E. embarked on a multipronged charm offensive. Officials from the country publicly committed to investing more than $1.4 trillion in the U.S., used $2 billion of cryptocurrency from Trump’s World Liberty Financial to invest in a crypto company, and hosted the CEOs of the top U.S. tech companies for chats in a royal palace in Abu Dhabi.

As part of the U.S.-U.A.E. agreement, the Gulf state “will fund the build-out of AI infrastructure in the U.S. at least as large and powerful as that in the UAE,” David Sacks, the Trump administration’s AI czar, said earlier this week on social media.

U.A.E. fund MGX is already an investor in Stargate, the planned $100 billion network of U.S. data centers being pushed by OpenAI and SoftBank.

Similar accords with other U.S. tech companies are expected in the future, as U.A.E. leaders seek to find other tenants for their planned 5-gigawatt data-center cluster. The project was revealed last week during Trump’s visit to the region, where local leaders showed the U.S. president a large model of the project.

The U.A.E. is betting U.S. tech giants will want servers running near users in Africa and India, slightly shaving off the time it takes to transmit data there.

Stargate U.A.E. comes amid a busy week for OpenAI. On Wednesday, a developer said it secured $11.6 billion in funding to push ahead with an expansion of a data center planned for OpenAI in Texas. OpenAI also announced it was purchasing former Apple designer Jony Ive’s startup for $6.5 billion.

References:

https://www.wsj.com/tech/open-ai-abu-dhabi-data-center-1c3e384d?mod=ai_lead_pos6

https://www.thenationalnews.com/future/technology/2025/05/24/stargate-uae-ai-g42/

Wedbush: Middle East (Saudi Arabia and UAE) to be next center of AI infrastructure boom

Cisco to join Stargate UAE consortium as a preferred tech partner

Sources: AI is Getting Smarter, but Hallucinations Are Getting Worse

Recent reports suggest that AI hallucinations—instances where AI generates false or misleading information—are becoming more frequent and present growing challenges for businesses and consumers alike who rely on these technologies. More than two years after the arrival of ChatGPT, tech companies, office workers and everyday consumers are using A.I. bots for an increasingly wide array of tasks. But there is still no way of ensuring that these systems produce accurate information.

A groundbreaking study featured in the PHARE (Pervasive Hallucination Assessment in Robust Evaluation) dataset has revealed that AI hallucinations are not only persistent but potentially increasing in frequency across leading language models. The research, published on Hugging Face, evaluated multiple large language models (LLMs) including GPT-4, Claude, and Llama models across various knowledge domains.

“We’re seeing a concerning trend where even as these models advance in capability, their propensity to hallucinate remains stubbornly present,” notes the PHARE analysis. The comprehensive benchmark tested models across 37 knowledge categories, revealing that hallucination rates varied significantly by domain, with some models demonstrating hallucination rates exceeding 30% in specialized fields.

Hallucinations are when AI bots produce fabricated information and present it as fact. Photo Credit: More SOPA Images/LightRocket via Getty Images

Today’s A.I. bots are based on complex mathematical systems that learn their skills by analyzing enormous amounts of digital data. These systems use mathematical probabilities to guess the best response, not a strict set of rules defined by human engineers. So they make a certain number of mistakes. “Despite our best efforts, they will always hallucinate,” said Amr Awadallah, the chief executive of Vectara, a start-up that builds A.I. tools for businesses, and a former Google executive.

“That will never go away,” he said. These AI bots do not — and cannot — decide what is true and what is false. Sometimes, they just make stuff up, a phenomenon some A.I. researchers call hallucinations. On one test, the hallucination rates of newer A.I. systems were as high as 79%.

Amr Awadallah, the chief executive of Vectara, which builds A.I. tools for businesses, believes A.I. “hallucinations” will persist. Photo credit: Cayce Clifford for The New York Times

AI companies like OpenAI, Google, and DeepSeek have introduced reasoning models designed to improve logical thinking, but these models have shown higher hallucination rates compared to previous versions.

For example, OpenAI’s latest models (o3 and o4-mini) have hallucination rates ranging from 33% to 79%, depending on the type of question asked. This is significantly higher than earlier models, which had lower error rates. Experts are still investigating why this is happening. Some believe that the complex reasoning processes in newer AI models may introduce more opportunities for errors.

Others suggest that the way these models are trained might be amplifying inaccuracies. For several years, this phenomenon has raised concerns about the reliability of these systems. Though they are useful in some situations — like writing term papers, summarizing office documents and generating computer code — their mistakes can cause problems. Despite efforts to reduce hallucinations, AI researchers acknowledge that hallucinations may never fully disappear. This raises concerns for applications where accuracy is critical, such as legal, medical, and customer service AI systems.

The A.I. bots tied to search engines like Google and Bing sometimes generate search results that are laughably wrong. If you ask them for a good marathon on the West Coast, they might suggest a race in Philadelphia. If they tell you the number of households in Illinois, they might cite a source that does not include that information. Those hallucinations may not be a big problem for many people, but they are a serious issue for anyone using the technology with court documents, medical information or sensitive business data.

“You spend a lot of time trying to figure out which responses are factual and which aren’t,” said Pratik Verma, co-founder and chief executive of Okahu, a company that helps businesses navigate the hallucination problem. “Not dealing with these errors properly basically eliminates the value of A.I. systems, which are supposed to automate tasks for you.”

For more than two years, companies like OpenAI and Google steadily improved their A.I. systems and reduced the frequency of these errors. But with the use of new reasoning systems, errors are rising. The latest OpenAI systems hallucinate at a higher rate than the company’s previous system, according to the company’s own tests.

The company found that o3 — its most powerful system — hallucinated 33% of the time when running its PersonQA benchmark test, which involves answering questions about public figures. That is more than twice the hallucination rate of OpenAI’s previous reasoning system, called o1. The new o4-mini hallucinated at an even higher rate: 48%.

When running another test called SimpleQA, which asks more general questions, the hallucination rates for o3 and o4-mini were 51% and 79%. The previous system, o1, hallucinated 44% of the time.
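The SimpleQA figures above can be read in two ways: as a change in percentage points, or as a change relative to the o1 baseline. The sketch below computes both from the three rates quoted in the text. The dictionary layout and names are illustrative and are not part of OpenAI's benchmark tooling.

```python
# SimpleQA hallucination rates quoted in the text above.
simpleqa_rates = {"o1": 0.44, "o3": 0.51, "o4-mini": 0.79}

baseline = simpleqa_rates["o1"]  # the previous reasoning system

changes = {}
for model, rate in simpleqa_rates.items():
    if model == "o1":
        continue
    point_change = (rate - baseline) * 100        # percentage points
    relative_change = (rate - baseline) / baseline  # fraction of the baseline
    changes[model] = (point_change, relative_change)

for model, (points, relative) in changes.items():
    print(f"{model}: +{points:.0f} points, {relative:.1%} above o1")
```

On these figures o3 is 7 points (about 16%) above o1, while o4-mini is 35 points (about 80%) above it.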

In a paper detailing the tests, OpenAI said more research was needed to understand the cause of these results. Because A.I. systems learn from more data than people can wrap their heads around, technologists struggle to determine why they behave in the ways they do.

“Hallucinations are not inherently more prevalent in reasoning models, though we are actively working to reduce the higher rates of hallucination we saw in o3 and o4-mini,” a company spokeswoman, Gaby Raila, said. “We’ll continue our research on hallucinations across all models to improve accuracy and reliability.”

Tests by independent companies and researchers indicate that hallucination rates are also rising for reasoning models from companies such as Google and DeepSeek.

Since late 2023, Mr. Awadallah’s company, Vectara, has tracked how often chatbots veer from the truth. The company asks these systems to perform a straightforward task that is readily verified: Summarize specific news articles. Even then, chatbots persistently invent information. Vectara’s original research estimated that in this situation chatbots made up information at least 3% of the time and sometimes as much as 27%.

In the year and a half since, companies such as OpenAI and Google pushed those numbers down into the 1 or 2% range. Others, such as the San Francisco start-up Anthropic, hovered around 4%. But hallucination rates on this test have risen with reasoning systems. DeepSeek’s reasoning system, R1, hallucinated 14.3% of the time. OpenAI’s o3 climbed to 6.8%.

Sarah Schwettmann, co-founder of Transluce, said that o3’s hallucination rate may make it less useful than it otherwise would be. Kian Katanforoosh, a Stanford adjunct professor and CEO of the upskilling startup Workera, told TechCrunch that his team is already testing o3 in their coding workflows, and that they’ve found it to be a step above the competition. However, Katanforoosh says that o3 tends to hallucinate broken website links. The model will supply a link that, when clicked, doesn’t work.

AI companies are now leaning more heavily on a technique that scientists call reinforcement learning. With this process, a system can learn behavior through trial and error. It is working well in certain areas, like math and computer programming. But it is falling short in other areas.

“The way these systems are trained, they will start focusing on one task — and start forgetting about others,” said Laura Perez-Beltrachini, a researcher at the University of Edinburgh who is among a team closely examining the hallucination problem.

Another issue is that reasoning models are designed to spend time “thinking” through complex problems before settling on an answer. As they try to tackle a problem step by step, they run the risk of hallucinating at each step. The errors can compound as they spend more time thinking.
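A simple way to see how step-by-step errors can compound is to assume, purely for illustration, that each reasoning step has the same small, independent chance of introducing a hallucination. Real models do not behave this cleanly, and the 2% per-step figure below is a hypothetical value, not a measured one.

```python
def prob_any_error(per_step_error: float, steps: int) -> float:
    """Chance that at least one of `steps` independent steps goes wrong."""
    return 1.0 - (1.0 - per_step_error) ** steps


# Hypothetical per-step error rate, chosen only to illustrate the effect.
PER_STEP_ERROR = 0.02

for steps in (1, 10, 50):
    risk = prob_any_error(PER_STEP_ERROR, steps)
    print(f"{steps:>2} steps: {risk:.1%} chance of at least one error")
```

Under this simplified model, a 2% per-step error rate grows to roughly an 18% chance of at least one error after 10 steps and about 64% after 50, which is one way to picture why longer chains of "thinking" can hallucinate more.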

“What the system says it is thinking is not necessarily what it is thinking,” said Aryo Pradipta Gema, an A.I. researcher at the University of Edinburgh and a fellow at Anthropic.

New research highlighted by TechCrunch indicates that user behavior may exacerbate the problem. When users request shorter answers from AI chatbots, hallucination rates actually increase rather than decrease. “The pressure to be concise seems to force these models to cut corners on accuracy,” the TechCrunch article explains, challenging the common assumption that brevity leads to greater precision.

References:

https://www.nytimes.com/2025/05/05/technology/ai-hallucinations-chatgpt-google.html

The Confidence Paradox: Why AI Hallucinations Are Getting Worse, Not Better

https://www.forbes.com/sites/conormurray/2025/05/06/why-ai-hallucinations-are-worse-than-ever/

https://techcrunch.com/2025/04/18/openais-new-reasoning-ai-models-hallucinate-more/

Goldman Sachs: Big 3 China telecom operators are the biggest beneficiaries of China’s AI boom via DeepSeek models; China Mobile’s ‘AI+NETWORK’ strategy

Telecom sessions at Nvidia’s 2025 AI developers GTC: March 17–21 in San Jose, CA

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

Does AI change the business case for cloud networking?

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Ericsson’s sales rose for the first time in 8 quarters; mobile networks need an AI boost

Reuters & Bloomberg: OpenAI to design “inference AI” chip with Broadcom and TSMC

Bloomberg reports that OpenAI, the fast-growing company behind ChatGPT, is working with Broadcom Inc. to develop a new artificial intelligence chip specifically focused on running AI models after they’ve been trained, according to two people familiar with the matter. The two companies are also consulting with Taiwan Semiconductor Manufacturing Company (TSMC), the world’s largest contract chip manufacturer. OpenAI has been planning a custom chip and working on its uses for the technology for around a year, the people said, but the discussions are still at an early stage. The company has assembled a chip design team of about 20 people, led by top engineers who have previously built Tensor Processing Units (TPUs) at Google, including Thomas Norrie and Richard Ho (head of hardware engineering).

Reuters reported on OpenAI’s ongoing talks with Broadcom and TSMC on Tuesday. OpenAI has been working for months with Broadcom to build its first AI chip focused on inference (responding to user requests), according to sources. Demand right now is greater for training chips, but analysts have predicted the need for inference chips could surpass them as more AI applications are deployed.

OpenAI has examined a range of options to diversify chip supply and reduce costs. OpenAI considered building everything in-house and raising capital for an expensive plan to build a network of chip manufacturing factories known as “foundries.”

REUTERS/Dado Ruvic/Illustration/File Photo

OpenAI may continue to research setting up its own network of foundries, or chip factories, one of the people said, but the startup has realized that working with partners on custom chips is a quicker, attainable path for now. Reuters earlier reported that OpenAI was pulling back from the effort of establishing its own chip manufacturing capacity. The company has dropped the ambitious foundry plans for now due to the costs and time needed to build a network, and plans instead to focus on in-house chip design efforts, according to sources.

OpenAI, which helped commercialize generative AI that produces human-like responses to queries, relies on substantial computing power to train and run its systems. As one of the largest purchasers of Nvidia’s graphics processing units (GPUs), OpenAI uses AI chips both to train models where the AI learns from data and for inference, applying AI to make predictions or decisions based on new information. Reuters previously reported on OpenAI’s chip design endeavors. The Information reported on talks with Broadcom and others.

The Information reported in June that Broadcom had discussed making an AI chip for OpenAI. Because OpenAI is one of the largest buyers of chips, its decision to source from a diverse array of chipmakers while developing its customized chip could have broader tech sector implications.

Broadcom is the largest designer of application-specific integrated circuits (ASICs) — chips designed to fit a single purpose specified by the customer. The company’s biggest customer in this area is Alphabet Inc.’s Google. Broadcom also works with Meta Platforms Inc. and TikTok owner ByteDance Ltd.

When asked last month whether he has new customers for the business, given the huge demand for AI training, Broadcom Chief Executive Officer Hock Tan said that he will only add to his short list of customers when projects hit volume shipments. “It’s not an easy product to deploy for any customer, and so we do not consider proof of concepts as production volume,” he said during an earnings conference call.

OpenAI’s services require massive amounts of computing power to develop and run, with much of that coming from Nvidia chips. To meet the demand, the industry has been scrambling to find alternatives to Nvidia. That has included embracing processors from Advanced Micro Devices Inc. and developing in-house versions.

OpenAI is also actively planning investments and partnerships in data centers, the eventual home for such AI chips. The startup’s leadership has pitched the U.S. government on the need for more massive data centers and CEO Sam Altman has sounded out global investors, including some in the Middle East, to finance the effort.

“It’s definitely a stretch,” OpenAI Chief Financial Officer Sarah Friar told Bloomberg Television on Monday. “Stretch from a capital perspective but also my own learning. Frankly we are all learning in this space: Infrastructure is destiny.”

Currently, Nvidia’s GPUs hold over 80% of the AI chip market. But shortages and rising costs have led major customers like Microsoft, Meta, and now OpenAI, to explore in-house or external alternatives.

References:

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight