AI Hallucinations

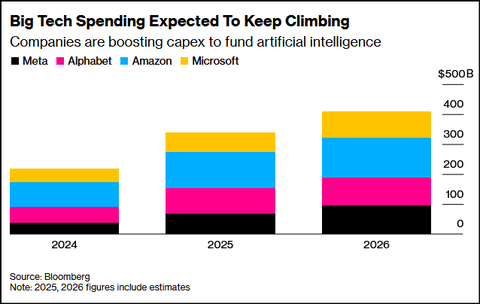

AI spending boom accelerates: Big tech to invest an aggregate of $400 billion in 2025; much more in 2026!

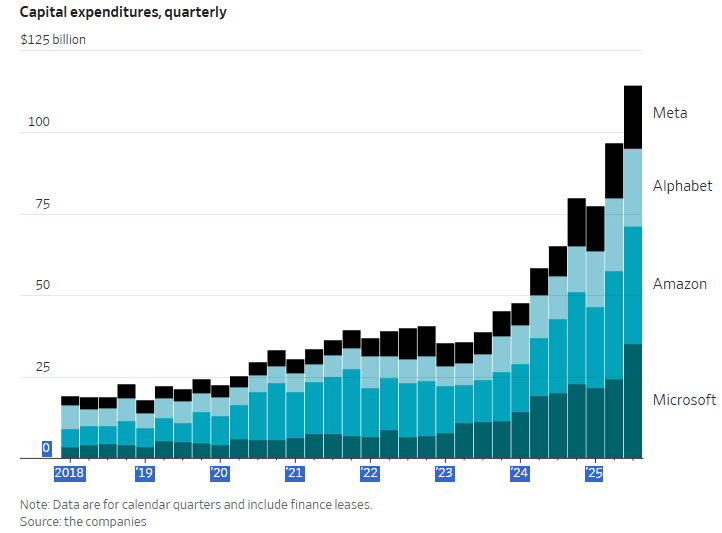

- Meta Platforms says it continues to experience capacity constraints as it simultaneously trains new AI models and supports existing product infrastructure. Meta CEO Mark Zuckerberg described an unsatiated appetite for more computing resources that Meta must work to fulfill to ensure it’s a leader in a fast-moving AI race. “We want to make sure we’re not underinvesting,” he said on an earnings call with analysts Wednesday after posting third-quarter results. Meta signaled in the earnings report that capital expenditures would be “notably larger” next year than in 2025, during which Meta expects to spend as much as $72 billion. He indicated that the company’s existing advertising business and platforms are operating in a “compute-starved state.” This condition persists because Meta is allocating more resources toward AI research and development efforts rather than bolstering existing operations.

- Microsoft reported substantial customer demand for its data-center-driven services, prompting plans to double its data center footprint over the next two years. Concurrently, Amazon is working aggressively to deploy additional cloud capacity to meet demand. Amy Hood, Microsoft’s chief financial officer, said: “We’ve been short [on computing power] now for many quarters. I thought we were going to catch up. We are not. Demand is increasing.” She further elaborated, “When you see these kinds of demand signals and we know we’re behind, we do need to spend.”

- Alphabet (Google’s parent company) reported that capital expenditures will jump from $85 billion to between $91 billion and $93 billion. Google CFO Anat Ashkenazi said the investments are already yielding returns: “We already are generating billions of dollars from AI in the quarter. But then across the board, we have a rigorous framework and approach by which we evaluate these long-term investments.”

- Amazon has not provided a specific total dollar figure for its planned AI investment in 2026. However, the company has announced it expects its total capital expenditures (capex) in 2026 to be even higher than its 2025 projection of $125 billion, with the vast majority of this spending dedicated to AI and related infrastructure for Amazon Web Services (AWS).

- Apple: Announced it is also increasing its AI investments, though its overall spending remains smaller in comparison to the other tech giants.

As big as the spending projections were this week, they look pedestrian compared with OpenAI, which has announced roughly $1 trillion worth of AI infrastructure deals of late with partners including Nvidia, Oracle and Broadcom.

Despite the big capex tax write-offs (due to the 2025 GOP tax act) there is a large degree of uncertainty regarding the eventual outcomes of this substantial AI infrastructure spending. The companies themselves, along with numerous AI proponents, assert that these investments are essential for machine-learning systems to achieve artificial general intelligence (AGI), a state where they surpass human intelligence.

Yet skeptics question whether investing billions in large-language models (LLMs), the most prevalent AI system, will ultimately achieve that objective. They also highlight the limited number of paying users for existing technology and the prolonged training period required before a global workforce can effectively utilize it.

During investor calls following the earnings announcements, analysts directed incisive questions at company executives. On Microsoft’s call, one analyst voiced a central market concern, asking: “Are we in a bubble?” Similarly, on the call for Google’s parent company, Alphabet, another analyst questioned: “What early signs are you seeing that gives you confidence that the spending is really driving better returns longer term?”

Bank of America (BofA) credit strategists Yuri Seliger and Sohyun Marie Lee write in a client note that capital spending by five megacap tech companies (Amazon.com, Alphabet, and Microsoft, along with Meta and Oracle) has been growing even faster than their prodigious cash flows. “These companies collectively may be reaching a limit to how much AI capex they are willing to fund purely from cash flows,” they write. Consensus estimates suggest AI capex will climb to 94% of operating cash flows, minus dividends and share repurchases, in 2025 and 2026, up from 76% in 2024. That’s still less than 100% of cash flows, so they don’t need to borrow to fund spending, “but it’s getting close,” they add.
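The ratio described in the BofA note is simple arithmetic: capex divided by operating cash flow after dividends and share repurchases. The sketch below is illustrative only. The function name and the dollar figures are hypothetical placeholders chosen to land on 94%, not numbers taken from the note.

```python
def capex_share_of_cash_flow(capex, operating_cash_flow, dividends, buybacks):
    """Capex as a fraction of operating cash flow left after shareholder payouts."""
    available = operating_cash_flow - dividends - buybacks
    if available <= 0:
        raise ValueError("no cash flow left after dividends and buybacks")
    return capex / available


# Hypothetical figures in billions of dollars (not from the BofA note).
ratio = capex_share_of_cash_flow(
    capex=94.0, operating_cash_flow=150.0, dividends=20.0, buybacks=30.0
)
# Above 100%, the shortfall has to be financed externally.
needs_external_funding = ratio > 1.0

print(f"capex share: {ratio:.0%}, needs external funding: {needs_external_funding}")
```

A ratio below 1.0 means spending can still be covered internally; the closer it gets to 1.0, the less room remains before borrowing becomes necessary.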

………………………………………………………………………………………………………………………………………………………………….

Google, which projected a rise in its full-year capital expenditures from $85 billion to a range of $91 billion to $93 billion, indicated that these investments were already proving profitable. Google’s Ashkenazi stated: “We already are generating billions of dollars from AI in the quarter. But then across the board, we have a rigorous framework and approach by which we evaluate these long-term investments.”

Microsoft reported that it expects to face capacity shortages that will affect its ability to power both its current businesses and AI research needs until at least the first half of the next year. The company noted that its cloud computing division, Azure, is absorbing “most of the revenue impact.”

Amazon informed investors of its expedited efforts to bring new capacity online, citing its ability to immediately monetize these investments.

“You’re going to see us continue to be very aggressive in investing capacity because we see the demand,” said Amazon Chief Executive Andy Jassy. “As fast as we’re adding capacity right now, we’re monetizing it.”

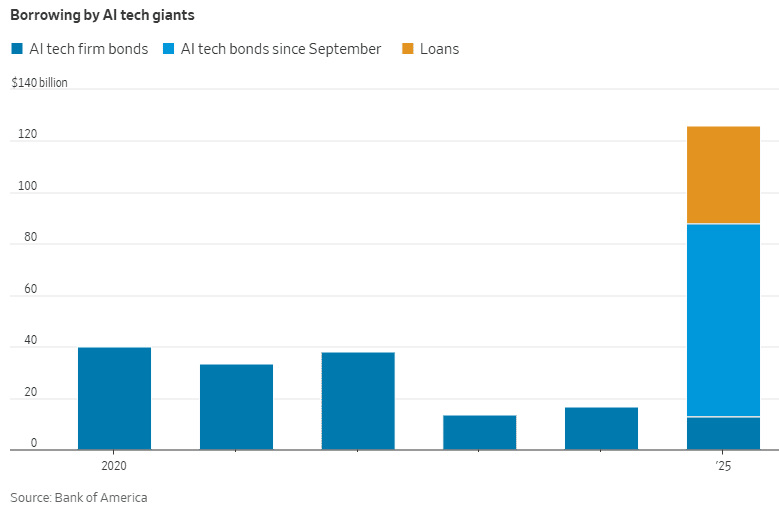

Meta’s chief financial officer, Susan Li, stated that the company’s capital expenditures—which have already nearly doubled from last year to $72 billion this year—will grow “notably larger” in 2026, though specific figures were not provided. Meta brought this year’s biggest investment-grade corporate bond deal to market, totaling some $30 billion, the latest in a parade of recent data-center borrowing.

Apple confirmed during its earnings call that it is also increasing investments in AI. However, its total spending levels remain significantly lower compared to the outlays planned by the other major technology firms.

Skepticism and Risk:

While proponents argue the investments are necessary for AGI and offer a competitive advantage, skeptics question if huge spending (capex) on AI infrastructure and large-language models will achieve this goal and point to limited paying users for current AI technology. Meta CEO Zuckerberg addressed this by telling investors the company would “simply pivot” if its AGI spending strategy proves incorrect.

The mad scramble by mega tech companies and OpenAI to build AI data centers relies largely on debt markets, with a slew of public and private mega deals since September. Hyperscalers would have to spend 94% of operating cash flow to pay for their AI buildouts, so they are turning to debt financing to help defray some of that cost, according to Bank of America. Unlike earnings per share, cash flow can’t be manipulated by companies. If they spend more on AI than they generate internally, they have to finance the difference.

Hyperscaler debt offerings so far this year have raised almost as much money as all debt financings done between 2020 and 2024, the BofA research said. BofA calculates $75 billion of AI-related public debt offerings in just the past two months!

In bubbles, everyone gets caught up in the idea that spending on the hot theme will deliver vast profits, eventually. When the bubble is big enough, it shifts the focus of the market as a whole from disliking capital expenditure, and hating speculative capital spending in particular, to loving it. That certainly seems to be the case today with surging AI spending. For much more, please check out the References below.

Postscript: November 23, 2025:

In this new AI era, consumers and workers are not what drives the economy anymore. Instead, it’s spending on all things AI, mostly with borrowed money or circular financing deals.

BofA Research noted that Meta and Oracle issued $75 billion in bonds and loans in September and October 2025 alone to fund AI data center buildouts, an amount more than double the annual average over the past decade. They warned that “The AI boom is hitting a money wall” as capital expenditures consume a large portion of free cash flow. Separately, a recent Bank of America Global Fund Manager Survey found that 53% of participating fund managers felt that AI stocks had reached bubble proportions. This marked a slight decrease from a record 54% in the prior month’s survey, but the concern has grown over time, with the “AI bubble” cited as the top “tail risk” by 45% of respondents in the November 2025 poll.

JPMorgan Chase estimates up to $7 trillion of AI spending will be with borrowed money. “The question is not ‘which market will finance the AI-boom?’ Rather, the question is ‘how will financings be structured to access every capital market?’” according to strategists at the bank led by Tarek Hamid.

As an example of AI debt financing, Meta did a $27 billion bond offering. It wasn’t on their balance sheet. They paid 100 basis points over what it would cost to put it on their balance sheet. Special purpose vehicles happen at the tail end of the cycle, not the early part of the cycle, notes Rajiv Jain of GQG Partners.

References:

Softbank developing autonomous AI agents; an AI model that can predict and capture human cognition

Speaking at a customer event Wednesday in Tokyo, Softbank Chairman and CEO Masayoshi Son said his company is developing “the world’s first” artificial intelligence (AI) agent system that can autonomously perform complex tasks. Human programmers will no longer be needed. “The AI agents will think for themselves and improve on their own…so the era of humans doing the programming is coming to an end,” he said.

Softbank estimated it needed to create around 1000 agents per person – a large number because “employees have complex thought processes. The agents will be active 24 hours a day, 365 days a year and will interact with each other.” Son estimates the agents will be at least four times as productive and four times as efficient as humans, and would cost around 40 Japanese yen (US$0.27) per agent per month. At that rate, the billion-agent plan would cost SoftBank $3.2 billion annually.
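The annual cost figure follows directly from the per-agent price. A quick check, using only the numbers quoted above (one billion agents at about US$0.27 per agent per month); the constant and function names are illustrative:

```python
AGENTS = 1_000_000_000            # the "billion-agent" target
COST_PER_AGENT_MONTH_USD = 0.27   # roughly 40 Japanese yen, as quoted
MONTHS_PER_YEAR = 12


def annual_cost_usd(agents, cost_per_agent_month):
    """Total yearly cost of running `agents` agents at a flat monthly price."""
    return agents * cost_per_agent_month * MONTHS_PER_YEAR


total = annual_cost_usd(AGENTS, COST_PER_AGENT_MONTH_USD)
print(f"${total / 1e9:.2f} billion per year")
```

The result is about $3.24 billion, consistent with the rounded $3.2 billion figure in the text.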

“For 40 yen per agent per month, the agent will independently memorize, negotiate and conduct learning. So with these actions being taken, it’s incredibly cheap,” Son said. “I’m excited to see how the AI agents will interact with one another and advance given tasks.” Son added that the AI agents, to achieve the goals, will “self-evolve and self-replicate” to execute subtasks.

Unlike generative AI, which needs human commands to carry out tasks, an AI agent performs tasks on its own by designing workflows with data available to it. It is expected to enhance productivity at companies by helping their decision-making and problem-solving.

While the CEO’s intent is clear, details of just how and when SoftBank will build this giant AI workforce are scarce. Son admitted the 1 billion target would be “challenging” and that the company had not yet developed the necessary software to support the huge numbers of agents. He said his team needed to build a toolkit for creating more agents and an operating system to orchestrate and coordinate them. Son, one of the world’s most ardent AI evangelists, is betting the company’s future on the technology.

According to Son, the capabilities of AI agents had already surpassed PhD-holders in advanced fields including physics, mathematics and chemistry. “There are no questions it can’t comprehend. We’re almost at a stage where there are hardly any limitations,” he enthused. Son acknowledged the problem of AI hallucinations, but dismissed it as “a temporary and minor issue.” Son said the development of huge AI data centers, such as the $500 billion Stargate project, would enable exponential growth in computing power and AI capabilities.

Softbank Group Corp. Chairman and CEO Masayoshi Son (L) and OpenAI CEO Sam Altman at an event on July 16, 2025. (Kyodo)

The project comes as SoftBank Group and OpenAI, the developer of chatbot ChatGPT, said in February they had agreed to establish a joint venture to promote AI services for corporations. Wednesday’s event included a cameo appearance from Sam Altman, CEO of SoftBank partner OpenAI, who said he was confident about the future of AI because the scaling law would exist “for a long time” and that cost was continually going down. “I think the first era of AI, the…ChatGPT initial era was about an AI that you could ask anything and it could tell you all these things,” Altman said.

“Now as these (AI) agents roll out, AI can do things for you…You can ask the computer to do something in natural language, a sort of vaguely defined complex task, and it can understand you and execute it for you,” Altman said. “The productivity and potential that it unlocks for the world is quite huge.”

……………………………………………………………………………………………………………………………………………..

According to the NY Times, an international team of scientists believes that A.I. systems can help them understand how the human mind works. They have created a ChatGPT-like system that can play the part of a human in a psychological experiment and behave as if it has a human mind. Details about the system, known as Centaur, were published on Wednesday in the journal Nature. Dr. Marcel Binz, a cognitive scientist at Helmholtz Munich, a German research center, is the author of the new AI study.

References:

https://english.kyodonews.net/articles/-/57396#google_vignette

https://www.lightreading.com/ai-machine-learning/softbank-aims-for-1-billion-ai-agents-this-year

https://www.nytimes.com/2025/07/02/science/ai-psychology-mind.html

https://www.nature.com/articles/s41586-025-09215-4

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Big Tech and VCs invest hundreds of billions in AI while salaries of AI experts reach the stratosphere

Ericsson reports ~flat 2Q-2025 results; sees potential for 5G SA and AI to drive growth

Agentic AI and the Future of Communications for Autonomous Vehicle (V2X)

Dell’Oro: AI RAN to account for 1/3 of RAN market by 2029; AI RAN Alliance membership increases but few telcos have joined

Indosat Ooredoo Hutchison and Nokia use AI to reduce energy demand and emissions

Deloitte and TM Forum : How AI could revitalize the ailing telecom industry?

McKinsey: AI infrastructure opportunity for telcos? AI developments in the telecom sector

ZTE’s AI infrastructure and AI-powered terminals revealed at MWC Shanghai

Ericsson revamps its OSS/BSS with AI using Amazon Bedrock as a foundation

Big tech firms target data infrastructure software companies to increase AI competitiveness

SK Group and AWS to build Korea’s largest AI data center in Ulsan

OpenAI partners with G42 to build giant data center for Stargate UAE project

Nile launches a Generative AI engine (NXI) to proactively detect and resolve enterprise network issues

AI infrastructure investments drive demand for Ciena’s products including 800G coherent optics

McKinsey: AI infrastructure opportunity for telcos? AI developments in the telecom sector

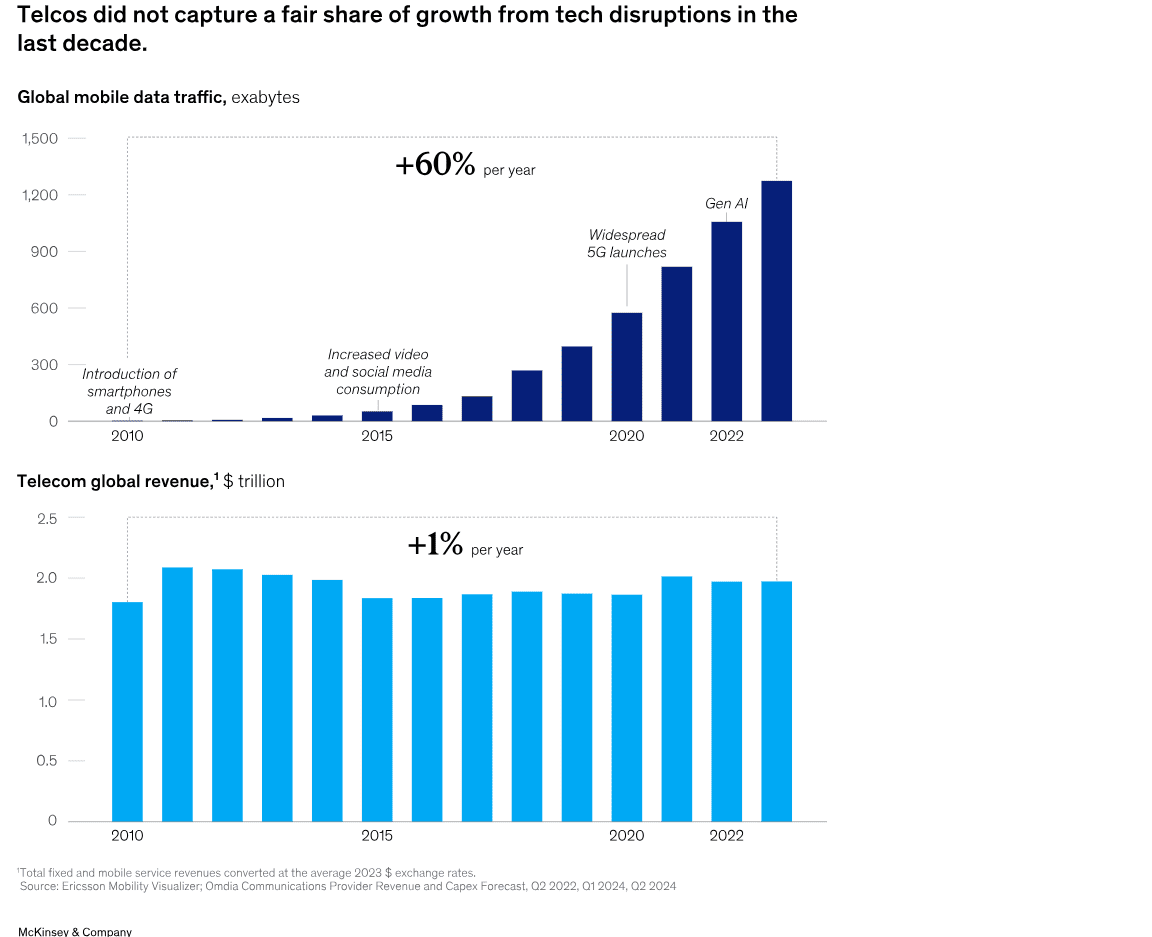

A new report from McKinsey & Company offers a wide range of options for telecom network operators looking to enter the market for AI services. One high-level conclusion is that strategy inertia and decision paralysis might be the most dangerous threats. That’s largely based on telcos’ failure to monetize past emerging technologies like smartphones and mobile apps, cloud networking, 5G-SA (the true 5G), etc. For example, global mobile data traffic rose 60% per year from 2010 to 2023, while the global telecom industry’s revenues rose just 1% during that same time period.

“Operators could provide the backbone for today’s AI economy to reignite growth. But success will hinge on effectively navigating complex market dynamics, uncertain demand, and rising competition….Not every path will suit every telco; some may be too risky for certain operators right now. However, the most significant risk may come from inaction, as telcos face the possibility of missing out on their fair share of growth from this latest technological disruption.”

McKinsey predicts that global data center demand could rise as high as 298 gigawatts by 2030, from just 55 gigawatts in 2023. Fiber connections to AI-infused data centers could generate up to $50 billion globally in sales to fiber facilities-based carriers.
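To put the data center projection in perspective, the implied compound annual growth rate can be computed from the two endpoints quoted above. This is a back-of-the-envelope sketch: the `cagr` helper is illustrative, and the result reflects McKinsey’s upper-bound scenario rather than a forecast of actual growth.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# 55 GW in 2023 rising to as much as 298 GW by 2030 (seven years).
growth = cagr(start_value=55, end_value=298, years=2030 - 2023)
print(f"implied growth: {growth:.1%} per year")
```

Under these assumptions the demand would have to grow by roughly 27% per year, compounding, to reach the high end of the range.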

Pathways to growth - Exploring four strategic options:

- Connecting new data centers with fiber

- Enabling high-performance cloud access with intelligent network services

- Turning unused space and power into revenue

- Building a new GPU as a Service business.

“Our research suggests that the addressable GPUaaS [GPU-as-a-service] market addressed by telcos could range from $35 billion to $70 billion by 2030 globally.” Verizon (with its AI Connect service, described below) and, in Asia, Indosat Ooredoo Hutchison (IOH), Singtel and Softbank have launched their own GPUaaS offerings.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Recent AI developments in the telecom sector include:

- The AI-RAN Alliance, which promises to allow wireless network operators to add AI to their radio access networks (RANs) and then sell AI computing capabilities to enterprises and other customers at the network edge. Nvidia is leading this industrial initiative. Telecom operators in the alliance include T-Mobile and SoftBank, as well as Boost Mobile, Globe, Indosat Ooredoo Hutchison, Korea Telecom, LG UPlus, SK Telecom and Turkcell.

- Verizon’s new AI Connect product, which includes Vultr’s GPU-as-a-service (GPUaaS) offering. GPU-as-a-service is a cloud computing model that allows businesses to rent access to powerful graphics processing units (GPUs) for AI and machine learning workloads without having to purchase and maintain that expensive hardware themselves. Verizon also has agreements with Google Cloud and Meta to provide network infrastructure for their AI workloads, demonstrating a focus on supporting the broader AI economy.

- Orange views AI as a critical growth driver. They are developing “AI factories” (data centers optimized for AI workloads) and providing an “AI platform layer” called Live Intelligence to help enterprises build generative AI systems. They also offer a generative AI assistant for contact centers in partnership with Microsoft.

- Lumen Technologies continues to build fiber connections intended to carry AI traffic.

- British Telecom (BT) has launched intelligent network services and is working with partners like Fortinet to integrate AI for enhanced security and network management.

- Telus (Canada) has built its own AI platform called “Fuel iX” to boost employee productivity and generate new revenue. They are also commercializing Fuel iX and building sovereign AI infrastructure.

- Telefónica: Their “Next Best Action AI Brain” uses an in-house Kernel platform to revolutionize customer interactions with precise, contextually relevant recommendations.

- Bharti Airtel (India): Launched India’s first anti-spam network, an AI-powered system that processes billions of calls and messages daily to identify and block spammers.

- e& (formerly Etisalat in UAE): Has launched the “Autonomous Store Experience (EASE),” which uses smart gates, AI-powered cameras, robotics, and smart shelves for a frictionless shopping experience.

- SK Telecom (Korea): Unveiled a strategy to implement an “AI Infrastructure Superhighway” and is actively involved in AI-RAN (AI in Radio Access Networks) development, including their AITRAS solution.

- Vodafone: Sees AI as a transformative force, with initiatives in network optimization, customer experience (e.g., their TOBi chatbot handling over 45 million interactions per month), and even supporting neurodiverse staff.

- Deutsche Telekom: Deploys AI across various facets of its operations.

……………………………………………………………………………………………………………………………………………………………………..

A recent report from DCD indicates that new AI models that can reason may require massive, expensive data centers, and such data centers may be out of reach for even the largest telecom operators. Across optical data center interconnects, data centers are already communicating with each other for multi-cluster training runs. “What we see is that, in the largest data centers in the world, there’s actually a data center and another data center and another data center,” he says. “Then the interesting discussion becomes – do I need 100 meters? Do I need 500 meters? Do I need a kilometer interconnect between data centers?”

……………………………………………………………………………………………………………………………………………………………………..

References:

https://www.datacenterdynamics.com/en/analysis/nvidias-networking-vision-for-training-and-inference/

https://opentools.ai/news/inaction-on-ai-a-critical-misstep-for-telecos-says-mckinsey

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Telecom and AI Status in the EU

Major technology companies form AI-Enabled Information and Communication Technology (ICT) Workforce Consortium

AI RAN Alliance selects Alex Choi as Chairman

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

NEC’s new AI technology for robotics & RAN optimization designed to improve performance

MTN Consulting: Generative AI hype grips telecom industry; telco CAPEX decreases while vendor revenue plummets

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

SK Telecom and Deutsche Telekom to Jointly Develop Telco-specific Large Language Models (LLMs)

Sources: AI is Getting Smarter, but Hallucinations Are Getting Worse

Recent reports suggest that AI hallucinations—instances where AI generates false or misleading information—are becoming more frequent and present growing challenges for businesses and consumers alike who rely on these technologies. More than two years after the arrival of ChatGPT, tech companies, office workers and everyday consumers are using A.I. bots for an increasingly wide array of tasks. But there is still no way of ensuring that these systems produce accurate information.

A groundbreaking study featured in the PHARE (Pervasive Hallucination Assessment in Robust Evaluation) dataset has revealed that AI hallucinations are not only persistent but potentially increasing in frequency across leading language models. The research, published on Hugging Face, evaluated multiple large language models (LLMs) including GPT-4, Claude, and Llama models across various knowledge domains.

“We’re seeing a concerning trend where even as these models advance in capability, their propensity to hallucinate remains stubbornly present,” notes the PHARE analysis. The comprehensive benchmark tested models across 37 knowledge categories, revealing that hallucination rates varied significantly by domain, with some models demonstrating hallucination rates exceeding 30% in specialized fields.

Hallucinations occur when AI bots produce fabricated information and present it as fact. Photo credit: SOPA Images/LightRocket via Getty Images

Today’s A.I. bots are based on complex mathematical systems that learn their skills by analyzing enormous amounts of digital data. These systems use mathematical probabilities to guess the best response, not a strict set of rules defined by human engineers. So they make a certain number of mistakes. “Despite our best efforts, they will always hallucinate,” said Amr Awadallah, the chief executive of Vectara, a start-up that builds A.I. tools for businesses, and a former Google executive.
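The idea of guessing a response from probabilities, rather than following fixed rules, can be illustrated with a toy example. The sketch below is not how any particular chatbot is implemented. The candidate answers and their scores are made up, and a softmax followed by weighted random sampling stands in for the far larger computation a real model performs.

```python
import math
import random
from collections import Counter


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidates and scores; only the first answer is correct.
candidates = ["correct answer", "plausible but wrong", "clearly wrong"]
scores = [3.0, 1.0, 0.5]
probs = softmax(scores)

# Sample many responses: the best guess dominates, but wrong ones still appear.
rng = random.Random(0)
draws = rng.choices(candidates, weights=probs, k=10_000)
counts = Counter(draws)

for answer, p in zip(candidates, probs):
    print(f"{answer}: probability {p:.3f}, sampled {counts[answer]} times")
```

Because every candidate keeps a nonzero probability, a sampled response is occasionally a wrong one, which is the intuition behind the claim that such systems make a certain number of mistakes.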

“That will never go away,” he said. These AI bots do not — and cannot — decide what is true and what is false. Sometimes, they just make stuff up, a phenomenon some A.I. researchers call hallucinations. On one test, the hallucination rates of newer A.I. systems were as high as 79%.

Amr Awadallah, the chief executive of Vectara, which builds A.I. tools for businesses, believes A.I. “hallucinations” will persist. Photo credit: Cayce Clifford for The New York Times

AI companies like OpenAI, Google, and DeepSeek have introduced reasoning models designed to improve logical thinking, but these models have shown higher hallucination rates compared to previous versions. For more than two years, those companies steadily improved their A.I. systems and reduced the frequency of these errors. But with the use of new reasoning systems, errors are rising. The latest OpenAI systems hallucinate at a higher rate than the company’s previous system, according to the company’s own tests.

For example, OpenAI’s latest models (o3 and o4-mini) have hallucination rates ranging from 33% to 79%, depending on the type of question asked. This is significantly higher than earlier models, which had lower error rates. Experts are still investigating why this is happening. Some believe that the complex reasoning processes in newer AI models may introduce more opportunities for errors.

Others suggest that the way these models are trained might be amplifying inaccuracies. For several years, this phenomenon has raised concerns about the reliability of these systems. Though they are useful in some situations — like writing term papers, summarizing office documents and generating computer code — their mistakes can cause problems. Despite efforts to reduce hallucinations, AI researchers acknowledge that hallucinations may never fully disappear. This raises concerns for applications where accuracy is critical, such as legal, medical, and customer service AI systems.

The A.I. bots tied to search engines like Google and Bing sometimes generate search results that are laughably wrong. If you ask them for a good marathon on the West Coast, they might suggest a race in Philadelphia. If they tell you the number of households in Illinois, they might cite a source that does not include that information. Those hallucinations may not be a big problem for many people, but they are a serious issue for anyone using the technology with court documents, medical information or sensitive business data.

“You spend a lot of time trying to figure out which responses are factual and which aren’t,” said Pratik Verma, co-founder and chief executive of Okahu, a company that helps businesses navigate the hallucination problem. “Not dealing with these errors properly basically eliminates the value of A.I. systems, which are supposed to automate tasks for you.”

OpenAI found that o3, its most powerful system, hallucinated 33% of the time when running its PersonQA benchmark test, which involves answering questions about public figures. That is more than twice the hallucination rate of OpenAI’s previous reasoning system, called o1. The new o4-mini hallucinated at an even higher rate: 48%.

When running another test called SimpleQA, which asks more general questions, the hallucination rates for o3 and o4-mini were 51% and 79%. The previous system, o1, hallucinated 44% of the time.

In a paper detailing the tests, OpenAI said more research was needed to understand the cause of these results. Because A.I. systems learn from more data than people can wrap their heads around, technologists struggle to determine why they behave in the ways they do.

“Hallucinations are not inherently more prevalent in reasoning models, though we are actively working to reduce the higher rates of hallucination we saw in o3 and o4-mini,” a company spokeswoman, Gaby Raila, said. “We’ll continue our research on hallucinations across all models to improve accuracy and reliability.”

Tests by independent companies and researchers indicate that hallucination rates are also rising for reasoning models from companies such as Google and DeepSeek.

Since late 2023, Mr. Awadallah’s company, Vectara, has tracked how often chatbots veer from the truth. The company asks these systems to perform a straightforward task that is readily verified: Summarize specific news articles. Even then, chatbots persistently invent information. Vectara’s original research estimated that in this situation chatbots made up information at least 3% of the time and sometimes as much as 27%.

In the year and a half since, companies such as OpenAI and Google pushed those numbers down into the 1 or 2% range. Others, such as the San Francisco start-up Anthropic, hovered around 4%. But hallucination rates on this test have risen with reasoning systems. DeepSeek’s reasoning system, R1, hallucinated 14.3% of the time. OpenAI’s o3 climbed to 6.8%.
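The percentages in a summarization test of this kind reduce to a simple proportion: the share of generated summaries that contain information not supported by the source article. The sketch below shows only that final calculation. The function name and the example labels are hypothetical, and how each summary gets judged (by people or by another model) is outside its scope.

```python
def hallucination_rate(judgments):
    """Fraction of summaries flagged as containing unsupported information.

    `judgments` holds one boolean per summary: True means the summary
    included something that was not in the source article.
    """
    if not judgments:
        raise ValueError("at least one judged summary is required")
    return sum(judgments) / len(judgments)


# Hypothetical labels for eight summaries, one of them flagged.
labels = [False, False, True, False, False, False, False, False]
print(f"hallucination rate: {hallucination_rate(labels):.1%}")
```

A rate such as 14.3% would therefore mean that roughly one summary in seven contained invented information.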

Sarah Schwettmann, co-founder of Transluce, said that o3’s hallucination rate may make it less useful than it otherwise would be. Kian Katanforoosh, a Stanford adjunct professor and CEO of the upskilling startup Workera, told TechCrunch that his team is already testing o3 in their coding workflows, and that they’ve found it to be a step above the competition. However, Katanforoosh says that o3 tends to hallucinate broken website links. The model will supply a link that, when clicked, doesn’t work.

AI companies are now leaning more heavily on a technique that scientists call reinforcement learning. With this process, a system can learn behavior through trial and error. It is working well in certain areas, like math and computer programming. But it is falling short in other areas.

“The way these systems are trained, they will start focusing on one task — and start forgetting about others,” said Laura Perez-Beltrachini, a researcher at the University of Edinburgh who is among a team closely examining the hallucination problem.

Another issue is that reasoning models are designed to spend time “thinking” through complex problems before settling on an answer. As they try to tackle a problem step by step, they run the risk of hallucinating at each step. The errors can compound as they spend more time thinking.
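The compounding effect can be made concrete with a simplified model. Assume, purely for illustration, that each reasoning step goes wrong independently with the same probability p. The chance that at least one of n steps contains an error is then 1 - (1 - p)^n. Real models do not behave this neatly, so the numbers below are a sketch of the trend, not a measurement.

```python
def prob_any_error(per_step_error, steps):
    """Probability of at least one error across `steps` independent steps."""
    if not 0.0 <= per_step_error <= 1.0:
        raise ValueError("per_step_error must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return 1.0 - (1.0 - per_step_error) ** steps


# Hypothetical 2% error chance per step, for chains of increasing length.
for n in (1, 5, 10, 25, 50):
    print(f"{n:>2} steps: {prob_any_error(0.02, n):.1%} chance of at least one error")
```

Even a small per-step error rate grows quickly as the chain lengthens, which matches the observation that longer "thinking" gives errors more chances to accumulate.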

“What the system says it is thinking is not necessarily what it is thinking,” said Aryo Pradipta Gema, an A.I. researcher at the University of Edinburgh and a fellow at Anthropic.

New research highlighted by TechCrunch indicates that user behavior may exacerbate the problem. When users request shorter answers from AI chatbots, hallucination rates actually increase rather than decrease. “The pressure to be concise seems to force these models to cut corners on accuracy,” the TechCrunch article explains, challenging the common assumption that brevity leads to greater precision.

References:

https://www.nytimes.com/2025/05/05/technology/ai-hallucinations-chatgpt-google.html

The Confidence Paradox: Why AI Hallucinations Are Getting Worse, Not Better

https://www.forbes.com/sites/conormurray/2025/05/06/why-ai-hallucinations-are-worse-than-ever/

https://techcrunch.com/2025/04/18/openais-new-reasoning-ai-models-hallucinate-more/