FCC updates subsea cable regulations; repeals 98 “outdated” broadcast rules and regulations

The U.S. Federal Communications Commission (FCC) is updating its regulations for subsea cables to enhance security and streamline the licensing process. The updates, adopted at an FCC open meeting on August 7th, aim to address national security concerns related to foreign adversaries (such as Russia and China) and to accelerate the deployment of these critical communications networks. Developed by the Office of International Affairs in collaboration with the Public Safety and Homeland Security Bureau and the Enforcement Bureau, the new rules address potential vulnerabilities of subsea cables to foreign adversaries, recognizing the cables’ critical role in carrying global internet traffic and financial transactions.

FCC Chairman Brendan Carr said that while the FCC often focuses on airwaves as vital but unseen infrastructure, submarine cables are just as essential. “They are the real unseen heroes of global communications. [The Commission] must facilitate, not frustrate the buildout of submarine cable industries.” Indeed, the vast global network of subsea cables carries roughly 99% of the world’s internet traffic and supports more than $10 trillion in daily financial transactions.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

The risk of Russia- and China-backed attacks on undersea cables carrying international internet traffic is likely to rise amid a spate of incidents in the Baltic Sea and around Taiwan, according to a report by Recorded Future, a U.S. cybersecurity company. The report singled out nine incidents in the Baltic Sea and off the coast of Taiwan in 2024 and 2025 as a harbinger of further disruptive activity. It said that while genuine accidents remain likely to cause most undersea cable disruptions, the Baltic and Taiwanese incidents point to increased malicious activity from Russia and China. “[Sabotage] campaigns attributed to Russia in the North Atlantic-Baltic region and China in the western Pacific are likely to increase in frequency as tensions rise,” the company said.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

The FCC also repealed 98 “outdated” broadcast rules and regulations as part of a deregulation effort aimed at streamlining and modernizing the agency’s rules. These rules, deemed obsolete or unnecessary, included outdated requirements for testing equipment and authorization procedures, as well as provisions related to technologies like analog broadcasting that are no longer in use. The move is part of the FCC’s “Delete, Delete, Delete” docket, which seeks to identify and remove rules that no longer serve the public interest.

FCC Chairman Brendan Carr (middle) and Commissioners Olivia Trusty (right) and Anna Gomez (left) at the Open Meeting on August 7, 2025 in Washington, D.C. Photo credit: Broadband Breakfast/Patricia Blume.

Many of the repealed rules relate to analog-era technologies and practices that are no longer relevant in today’s digital broadcasting landscape. These rules cover various areas, such as obsolete subscription television rules, outdated equipment requirements from the 1970s, unnecessary authorization rules for standard practices, obsolete international broadcast provisions, and sections that were for reference only or were duplicative or reserved. In particular:

- The repealed rules covered equipment requirements for AM, FM, and TV stations that are now obsolete, as well as rules related to subscription television systems that operated on now-defunct analog technology.

- The FCC eliminated rules regarding international broadcasting that used outdated terms and procedures.

- Several sections that merely listed citations to outdated FCC orders, court decisions, and policies were also removed.

- The FCC eliminated a rule requiring FM stations to obtain authorization for stereophonic sound programs, which is now standard practice.

References:

https://www.fcc.gov/August2025

https://www.fcc.gov/document/fcc-deletes-outdated-broadcast-rules-and-requirements/carr-statement

https://docs.fcc.gov/public/attachments/DOC-413057A1.pdf

https://broadbandbreakfast.com/fcc-unanimously-approves-buildout-of-secure-submarine-cables/

New FCC Chairman Carr Seen Clarifying Space Rules and Streamlining Approvals Process

U.S. federal appeals court says FCC’s net neutrality/open internet rules are “unlawful”

Intentional or Accident: Russian fiber optic cable cut (1 of 3) by Chinese container ship under Baltic Sea

Sabotage or Accident: Was Russia or a fishing trawler responsible for Shetland Island cable cut?

Geopolitical tensions arise in Asia over subsea fiber optic cable projects; U.S. intervened to flip SeaMeWe-6 contractor

China seeks to control Asian subsea cable systems; SJC2 delayed, Apricot and Echo avoid South China Sea

OpenAI announces new open weight, open source GPT models which Orange will deploy

Overview:

OpenAI today introduced two new open-weight, open-source GPT models (gpt-oss-120b and gpt-oss-20b) designed to deliver top-tier performance at a lower cost. Available under the flexible Apache 2.0 license, these models outperform similarly sized open models on reasoning tasks, demonstrate strong tool use capabilities, and are optimized for efficient deployment on consumer hardware. They were trained using a mix of reinforcement learning and techniques informed by OpenAI’s most advanced internal models, including o3 and other frontier systems.

These two new AI models require much less compute power to run, with gpt-oss-20b able to run on just 16 GB of memory. The smaller memory footprint and lower compute requirements enable OpenAI’s models to run in a wider variety of environments, including at the network edge. Because the weights are open, users can fine-tune the models and customize them for specific tasks.
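Why 16 GB can be enough comes down largely to how many bits each weight occupies. The sketch below is a back-of-the-envelope estimate that assumes a round 20 billion parameters stored at a single uniform precision. Both are simplifications (the real model mixes precisions, and the KV cache and activations also need memory), so treat the numbers as illustrative only.

```python
# Back-of-the-envelope weight memory for a ~20B-parameter model.
# Assumptions (illustrative, not OpenAI's published layout): a round 20e9
# parameters, one uniform precision, and no KV cache or activation memory.

def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Memory needed to store the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 20e9    # assumed parameter count, rounded from the "20b" in the name
BUDGET_GB = 16   # memory figure cited in the text

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    needed = weight_memory_gb(PARAMS, bits)
    verdict = "fits" if needed < BUDGET_GB else "does not fit"
    print(f"{label}: {needed:.0f} GB of weights, {verdict} in {BUDGET_GB} GB")
```

At 16 bits per weight the weights alone would need about 40 GB; only at around 4 bits per weight do they drop to roughly 10 GB, leaving some headroom within 16 GB for the rest of the runtime.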

OpenAI has been working with early partner companies, including AI Sweden, Orange, and Snowflake, to learn about real-world applications of its open models, from hosting them on-premises for data security to fine-tuning them on specialized datasets. OpenAI said it is excited to provide these best-in-class open models to empower everyone, from individual developers to large enterprises to governments, to run and customize AI on their own infrastructure. Combined with the models available in OpenAI’s API, developers can choose the performance, cost, and latency they need to power AI workflows.

In lockstep with OpenAI, France’s Orange today announced plans to deploy the new OpenAI models in its regional cloud data centers as well as on small on-premises servers and edge sites to meet demand for sovereign AI solutions. Orange’s deep AI engineering talent enables it to customize and distill the OpenAI models for specific tasks, effectively creating smaller sub-models for particular use cases, while protecting all sensitive data used in these customized models. This process facilitates innovative use cases in network operations and will enable Orange to build on its existing suite of ‘Live Intelligence’ AI solutions for enterprises, as well as to apply the models to its own operations to improve efficiency and drive cost savings.

One example is using AI to improve the quality and resilience of Orange’s networks, such as by making it easier to explore and diagnose complex network issues. This can be achieved with trusted AI models that operate entirely within Orange’s sovereign data centers, where Orange has complete control over the use of sensitive network data. The ability to create customized, secure, and sovereign AI models for network use cases is a key enabler of Orange’s mission to achieve higher levels of automation across all of its networks.

Steve Jarrett, Orange’s Chief AI Officer, noted that the decision to use state-of-the-art open-weight models will allow Orange to drive “new use cases to address sensitive enterprise needs, help manage our networks, enable innovating customer care solutions including African regional languages, and much more.”

Performance of the new OpenAI models:

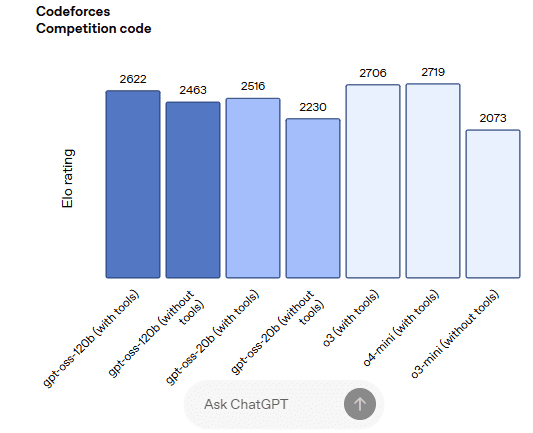

gpt-oss-120b outperforms OpenAI o3‑mini and matches or exceeds OpenAI o4-mini on competition coding (Codeforces), general problem solving (MMLU and HLE) and tool calling (TauBench). It furthermore does even better than o4-mini on health-related queries (HealthBench) and competition mathematics (AIME 2024 & 2025). gpt-oss-20b matches or exceeds OpenAI o3‑mini on these same evals, despite its small size, even outperforming it on competition mathematics and health.

Sovereign AI Market Forecasts:

Open-weight and open-source AI models play a significant role in enabling and shaping the development of Sovereign AI, which refers to a nation’s or organization’s ability to control its own AI technologies, data, and infrastructure to meet its specific needs and regulations.

At the national level, Sovereign AI means a country’s ability to control and manage its own AI development and deployment, including data, infrastructure, and talent. It is about ensuring strategic autonomy in artificial intelligence, so that a country can leverage AI for its own economic, social, and security interests while adhering to its own values and regulations.

Bank of America’s financial analysts recently forecast the sovereign AI market segment could become a “$50 billion a year opportunity, accounting for 10%–15% of the global $450–$500 billion AI infrastructure market.”
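The quoted figures can be cross-checked with a few lines of arithmetic. The sketch below only combines the numbers in the BofA quote, treating the share and market-size ranges as independent bounds.

```python
# Cross-check of the BofA sovereign AI figures quoted above (USD).
MARKET_LOW, MARKET_HIGH = 450e9, 500e9   # global AI infrastructure market per year
SHARE_LOW, SHARE_HIGH = 0.10, 0.15       # sovereign AI share of that market
HEADLINE = 50e9                          # "$50 billion a year opportunity"

# Dollar range implied by combining the quoted share and market-size ranges
implied_low = SHARE_LOW * MARKET_LOW     # smallest share of smallest market
implied_high = SHARE_HIGH * MARKET_HIGH  # largest share of largest market

# Market share represented by the $50 billion headline figure
headline_share_low = HEADLINE / MARKET_HIGH
headline_share_high = HEADLINE / MARKET_LOW

print(f"implied range: ${implied_low / 1e9:.0f}B to ${implied_high / 1e9:.0f}B")
print(f"$50B share: {headline_share_low:.1%} to {headline_share_high:.1%}")
```

The $50 billion headline sits at the low end of the quoted band (about 10%–11% of the market); the full 10%–15% range would correspond to roughly $45–$75 billion a year.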

BofA analysts said, “Sovereign AI nicely complements commercial cloud investments with a focus on training and inference of LLMs in local culture, language and needs,” and could mitigate challenges such as “limited power availability for data centers in US” and trade restrictions with China.

References:

https://openai.com/index/introducing-gpt-oss/

https://newsroom.orange.com/orange-and-openai-collaborate-on-trusted-responsible-and-inclusive-ai/

https://finance.yahoo.com/news/nvidia-amd-targets-raised-bofa-162314196.html

Open AI raises $8.3B and is valued at $300B; AI speculative mania rivals Dot-com bubble

OpenAI partners with G42 to build giant data center for Stargate UAE project

Reuters & Bloomberg: OpenAI to design “inference AI” chip with Broadcom and TSMC

Muon Space in deal with Hubble Network to deploy world’s first satellite-powered Bluetooth network

Muon Space, a provider of end-to-end space systems specializing in mission-optimized satellite constellations, today announced its most capable satellite platform, MuSat XL, a high-performance 500 kg-class spacecraft designed for the most demanding next-generation low Earth orbit (LEO) missions. Muon also announced its first customer for the XL Platform: Hubble Network, a Seattle-based space-tech pioneer building the world’s first satellite-powered Bluetooth network. IEEE Techblog reported Hubble Network’s first Bluetooth-to-space satellite connection in an earlier post.

The XL Platform adds a dramatically expanded capability tier to the flight-proven Halo™ stack, delivering more power, agility, and integration flexibility while preserving the speed, scalability and cost-effectiveness needed for constellation deployment. Optimized for Earth observation (EO) and telecommunications missions supporting commercial and national security customers that require multi-payload operations, extreme data throughput, high-performance inter-satellite networking, and cutting-edge attitude control and pointing, the XL Platform sets a new industry benchmark for mission performance and value. “XL is more than a bigger bus – it’s a true enabler for customers pushing the boundaries of what’s possible in orbit, like Hubble,” said Jonny Dyer, CEO of Muon Space. “Their transformative BLE technology represents the future of space-based services and we are ecstatic to enable their mission with the XL Platform and our Halo stack.”

The Muon Space XL platform combines exceptional payload power, precise pointing, and high-bandwidth networking to enable advanced space capabilities across defense, disaster response, and commercial missions.

Enhancing Global BLE Coverage:

In 2024, Hubble became the first company to establish a Bluetooth connection directly to a satellite, fueling global IoT growth. Using MuSat XL, it will deploy a next-generation BLE payload featuring a phased-array antenna and a receiver 20 times more powerful than its CubeSat predecessor, enabling BLE detection at 30 times lower power and direct connectivity for ultra-low-cost, energy-efficient devices worldwide. MuSat XL’s large payload accommodation, multi-kW power system, and cutting-edge networking and communications capabilities are key enablers for advanced services like Hubble’s.
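Radio link improvements are usually compared in decibels. The sketch below converts the two ratios from the announcement, assuming (the announcement does not say how they were measured) that both are linear power ratios; under that reading they correspond to about 13.0 dB and 14.8 dB respectively.

```python
import math

def power_ratio_to_db(ratio: float) -> float:
    """Convert a linear power ratio to decibels: 10 * log10(ratio)."""
    return 10 * math.log10(ratio)

# Ratios from the Hubble announcement; treating them as power ratios is an assumption.
RATIOS = {
    "receiver vs. CubeSat predecessor": 20,
    "lower device power for BLE detection": 30,
}

for label, ratio in RATIOS.items():
    print(f"{label}: {ratio}x is about {power_ratio_to_db(ratio):.1f} dB")
```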

“Muon’s platform gives us the scale and power to build a true Bluetooth layer around the Earth,” said Alex Haro, Co-Founder and CEO of Hubble Network.

The first two MuSat XL satellites will provide a 12-hour global revisit time, with a scalable design for faster coverage. Hubble’s BLE Finding Network supports critical applications in logistics, infrastructure, defense, and consumer technology.

A Next Generation Multi-Mission Satellite Platform:

MuSat XL is built for operators who need real capability – more power, larger apertures, more flexibility, and more agility – and with the speed to orbit and reliability that Muon has already demonstrated with its other platforms in orbit since 2023. Built on the foundation of Muon’s heritage 200 kg MuSat architecture, MuSat XL is a 500 kg-class bus that extends the Halo technology stack’s performance envelope to enable high-impact, real-time missions.

Key capabilities include:

- 1 kW+ orbit average payload power – Supporting advanced sensors, phased arrays, and edge computing applications.

- Seamless, internet-standards based, high bandwidth, low latency communications, and optical crosslink networking – Extremely high volume downlink (>5 TB / day) and near real-time communications for time-sensitive operations critical for defense, disaster response, and dynamic tasking.

- Flexible onboard interface, network, compute – Muon’s PayloadCore architecture enables rapid hardware/software integration of payloads and deployment of cloud-like workflows to onboard network, storage, and compute.

- Precise, stable, and agile pointing – Attitude control architected for the rigorous needs of next-generation EO and RF payloads.
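To put the downlink figure in perspective, the sketch below converts a daily volume into an average data rate. It assumes decimal units (1 TB = 10^12 bytes) and spreads the volume evenly over 24 hours; if data is downlinked only during ground-station contacts rather than continuously, the peak link rate would have to be higher than this average.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s

def avg_rate_mbps(terabytes_per_day: float) -> float:
    """Average rate in Mbit/s for a daily volume, using decimal units (1 TB = 1e12 bytes)."""
    bits_per_day = terabytes_per_day * 1e12 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e6

# ">5 TB / day" from the MuSat XL capability list
print(f"5 TB/day averages about {avg_rate_mbps(5):.0f} Mbit/s")
```

Sustaining more than 5 TB per day therefore means averaging over roughly 460 Mbit/s around the clock.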

In the competitive small satellite market, MuSat XL offers standout advantages in payload volume, power availability, and integration flexibility – making it a versatile backbone for advanced sensors, communications systems, and compute-intensive applications. The platform is built for scale: modular, manufacturable, and fully integrated with Muon’s vertically developed stack, from custom instrument design to full mission operations via the Halo technology stack.

Muon designed MuSat XL to deliver exceptional performance without added complexity. Early adopters like Hubble signal a broader trend in the industry: embracing platforms that offer operational autonomy, speed, and mission longevity at commercial scale.

About Muon Space:

Founded in 2021, Muon Space is an end-to-end space systems company that designs, builds, and operates mission-optimized satellite constellations to deliver critical data and enable real-time compute and decision-making in space. Its proprietary technology stack, Halo™, integrates advanced spacecraft platforms, robust payload integration and management, and a powerful software-defined orchestration layer to enable high-performance capabilities at unprecedented speed – from concept to orbit. With state-of-the-art production facilities in Silicon Valley and a growing track record of commercial and national security customers, Muon Space is redefining how critical Earth intelligence is delivered from space. Muon Space employs a team of more than 150 engineers and scientists, including industry experts from Skybox, NASA, SpaceX, and others. SOURCE: Muon Space

About Hubble Network:

Founded in 2021, Hubble is creating the world’s first satellite-powered Bluetooth network, enabling global connectivity without reliance on cellular infrastructure. The Hubble platform makes it easy to transmit low-bandwidth data from any Bluetooth-enabled device, with no infrastructure required. Their global BLE network is live and expanding rapidly, delivering real-time visibility across supply chains, fleets, and facilities. Visit www.hubble.com for more information.

References:

Hubble Network Makes Earth-to-Space Bluetooth Satellite Connection; Life360 Global Location Tracking Network

WiFi 7: Backgrounder and CES 2025 Announcements

Nvidia’s networking solutions give it an edge over competitive AI chip makers

Nvidia’s networking equipment and module sales accounted for $12.9 billion of its $115.1 billion in data center revenue in its prior fiscal year. Nvidia’s networking products, comprising its NVLink, InfiniBand, and Ethernet solutions (the latter two largely from its Mellanox acquisition), are what allow its GPU chips to communicate with each other, let servers talk to each other inside massive data centers, and ultimately ensure end users can connect to it all to run AI applications.

“The most important part in building a supercomputer is the infrastructure. The most important part is how you connect those computing engines together to form that larger unit of computing,” explained Gilad Shainer, senior vice president of networking at Nvidia.

In Q1-2025, networking made up $4.9 billion of Nvidia’s $39.1 billion in data center revenue. And it’ll continue to grow as customers continue to build out their AI capacity, whether that’s at research universities or massive data centers.
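The networking share can be computed directly from the figures above. The sketch uses only the numbers quoted in this article and shows the share rising from about 11.2% over the prior fiscal year to about 12.5% in Q1-2025; a single quarter is a noisy comparison point, so this is indicative rather than a measured trend.

```python
# Networking share of Nvidia data center revenue, using the figures quoted above (USD billions).
PERIODS = {
    "prior fiscal year": (12.9, 115.1),  # (networking, data center)
    "Q1-2025": (4.9, 39.1),
}

def networking_share(networking: float, data_center: float) -> float:
    """Fraction of data center revenue attributable to networking."""
    return networking / data_center

for period, (net, dc) in PERIODS.items():
    print(f"{period}: {networking_share(net, dc):.1%} of data center revenue")
```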

“It is the most underappreciated part of Nvidia’s business, by orders of magnitude,” Deepwater Asset Management managing partner Gene Munster told Yahoo Finance. “Basically, networking doesn’t get the attention because it’s 11% of revenue. But it’s growing like a rocket ship.” “[Nvidia is a] very different business without networking,” Munster explained. “The output that the people who are buying all the Nvidia chips [are] desiring wouldn’t happen if it wasn’t for their networking.”

Nvidia senior vice president of networking Kevin Deierling says the company has to work across three different types of networks:

- NVLink technology connects GPUs to each other within a server or multiple servers inside of a tall, cabinet-like server rack, allowing them to communicate and boost overall performance.

- InfiniBand connects multiple server nodes across data centers to form what is essentially a massive AI computer.

- Ethernet provides front-end network connectivity for storage and system management.

Note: Industry groups also have their own competing networking technologies including UALink, which is meant to go head-to-head with NVLink, explained Forrester analyst Alvin Nguyen.

“Those three networks are all required to build a giant AI-scale, or even a moderately sized enterprise-scale, AI computer,” Deierling explained. Low latency is key, because longer transit times for data going to and from GPUs slow the entire operation, delaying other processes and reducing the overall efficiency of an entire data center.

Nvidia CEO Jensen Huang presents a Grace Blackwell NVLink72 as he delivers a keynote address at the Consumer Electronics Show (CES) in Las Vegas, Nevada on January 6, 2025. Photo by PATRICK T. FALLON/AFP via Getty Images

As companies continue to develop larger AI models and autonomous and semi-autonomous agentic AI capabilities that can perform tasks for users, making sure those GPUs work in lockstep with each other becomes increasingly important.

The AI industry is in the midst of a broad reordering around the idea of inferencing, which requires more powerful data center systems to run AI models. “I think there’s still a misperception that inferencing is trivial and easy,” Deierling said.

“It turns out that it’s starting to look more and more like training as we get to [an] agentic workflow. So all of these networks are important. Having them together, tightly coupled to the CPU, the GPU, and the DPU [data processing unit], all of that is vitally important to make inferencing a good experience.”

Competing AI chip makers such as AMD are looking to grab more market share from Nvidia, and cloud giants like Amazon, Google, and Microsoft continue to design and develop their own AI chips. However, none of them have the low-latency, high-speed connectivity solutions provided by Nvidia (again, think Mellanox).

References:

https://www.nvidia.com/en-us/networking/

Networking chips and modules for AI data centers: Infiniband, Ultra Ethernet, Optical Connections

Nvidia enters Data Center Ethernet market with its Spectrum-X networking platform

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Does AI change the business case for cloud networking?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Telecom sessions at Nvidia’s 2025 AI developers GTC: March 17–21 in San Jose, CA

NBN selects Amazon Project Kuiper over Starlink for LEO satellite internet service in Australia

Government-owned wholesale broadband operator NBN Co will become the first major customer in Australia of Amazon’s Project Kuiper Low-Earth Orbit (LEO) satellite internet technology, beginning in mid-2026. At that time, NBN Co plans to offer wholesale residential-grade fixed LEO satellite broadband services to more than 300,000 premises within its existing satellite footprint via participating Retail Service Providers (RSPs).

The agreement will enable NBN Co to transition from its existing geostationary Sky Muster satellite service over the coming years and will complement NBN Co’s investments in fiber and fixed wireless upgrades for regional Australia.

NBN Co will shortly start consultations with RSPs, regional communities and stakeholders to help determine the speed tiers offered, wholesale pricing and the upgrade path for customers. The consultation will consider offering equipment, along with standard professional installation and assurance, at no cost to existing eligible NBN satellite customers via participating RSPs.

…………………………………………………………………………………………………………………………………………………………………………………………………………

Project Kuiper’s low-latency, high-bandwidth satellite network will significantly improve the quality and reliability of broadband for eligible regional, rural and remote communities. To achieve its goals, Project Kuiper is deploying thousands of satellites in low Earth orbit. The satellites will be connected to each other by high-speed optical links, creating a mesh network in space, and linked to a global network of antennas, fiber, and internet connection points on the ground.
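The latency advantage of LEO over geostationary service follows largely from distance. The sketch below estimates the minimum propagation delay for a user-to-satellite-to-ground-station hop with the satellite directly overhead. The 600 km LEO altitude is an illustrative assumption rather than a Kuiper specification quoted here, and real latency adds processing, routing, and terrestrial delays on top of these best-case figures.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum

def bent_pipe_delay_ms(altitude_km: float) -> float:
    """Best-case one-way delay (user -> satellite -> ground station), in ms.

    Assumes the satellite is directly overhead of both ends, so the path is 2 x altitude.
    """
    return 2 * altitude_km / SPEED_OF_LIGHT_KM_S * 1000

GEO_ALTITUDE_KM = 35_786  # geostationary orbit altitude above the equator
LEO_ALTITUDE_KM = 600     # illustrative LEO altitude (assumption)

for name, altitude in [("GEO", GEO_ALTITUDE_KM), ("LEO", LEO_ALTITUDE_KM)]:
    one_way = bent_pipe_delay_ms(altitude)
    print(f"{name}: ~{one_way:.0f} ms one way, ~{2 * one_way:.0f} ms round trip")
```

Even in this best case, a geostationary hop costs roughly 240 ms each way from physics alone, versus a few milliseconds for a LEO satellite at a few hundred kilometers.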

The initial satellite constellation will include more than 3,200 satellites, which began deploying on April 28, 2025 with its first operational launch. That initial launch consisted of 27 production satellites and was carried out by a United Launch Alliance Atlas V rocket, according to the United Launch Alliance. The launch took place from Cape Canaveral Space Force Station in Florida.

There are currently 78 Kuiper satellites in orbit, after three successful launches in less than three months. Amazon is continuing to increase its production, processing and launch rates ahead of an initial service rollout.
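Simple arithmetic on the figures above gives a sense of the scale of the remaining deployment. The sketch is a naive extrapolation that assumes every future launch carries the average of the first three (26 satellites); larger launch vehicles can carry more satellites per launch, so the real number of launches may well be lower than the 121 it produces.

```python
import math

TARGET = 3_200        # "more than 3,200 satellites" in the initial constellation
IN_ORBIT = 78         # Kuiper satellites in orbit so far
LAUNCHES_SO_FAR = 3   # successful launches to date

avg_per_launch = IN_ORBIT / LAUNCHES_SO_FAR   # average satellites per launch so far
remaining = TARGET - IN_ORBIT                 # satellites still to be launched
# Naive assumption: every future launch carries the same average number of satellites
launches_needed = math.ceil(remaining / avg_per_launch)

print(f"deployed so far: {IN_ORBIT / TARGET:.1%} of {TARGET:,}")
print(f"launches still needed at {avg_per_launch:.0f} per launch: {launches_needed}")
```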

…………………………………………………………………………………………………………………………………………………………………………………………………………

In the coming years, LEO satellite services powered by Project Kuiper will replace NBN’s current geostationary Sky Muster satellite service. The company plans to maintain and operate its two geostationary Sky Muster satellites until the transition to the Project Kuiper satellite network is complete, ensuring continuity for customers in regional, rural, and remote parts of Australia who rely on satellite telecommunications. The two Sky Muster satellites are expected to remain operational until approximately 2032.

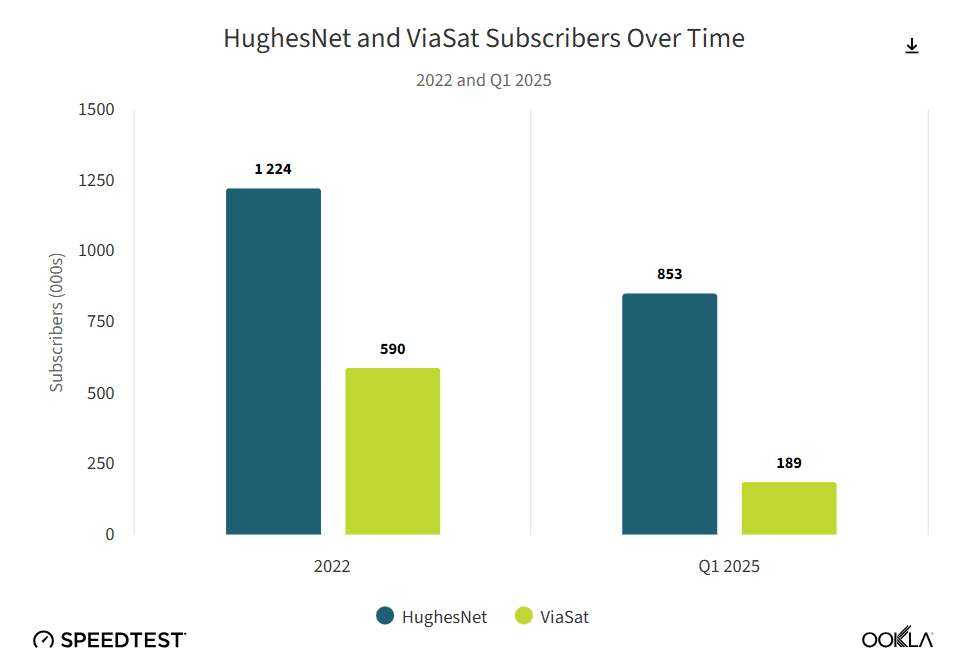

The agreement between NBN and Amazon is expected to introduce competition to the LEO-based satellite internet services market, particularly in regional Australia. Currently, Starlink dominates this market as the only LEO satellite operator. As of April 2025, Starlink claimed to have more than 350,000 customers in Australia.

Telecom analyst Paul Budde told Reuters that NBN’s decision to partner with Amazon was probably influenced by the need to limit the sovereign risk of giving control of essential Australian infrastructure to a company aligned with “a very unpredictable America.” “I am sure total dependence on Starlink would not be seen as a favorable situation,” he added.

Ellie Sweeney, Chief Executive Officer at NBN Co, said:

“LEO satellite broadband, supplied by NBN Co and powered by Amazon’s Project Kuiper, will be a major leap forward for customers in parts of regional, rural and remote Australia.

“This important agreement will complement our other major network upgrades that have involved the rollout of full fibre services across much of our fixed line network and the deployment of the latest 5G millimeter wave technology to improve the speed and capacity of our fixed wireless network.

“Australians deserve to have access to fast, effective broadband regardless of whether they live in a major city, on the outskirts of a country town or miles from their nearest neighbor. That’s what NBN was set up to deliver. By upgrading to next generation LEO satellite broadband powered by Project Kuiper, we are working to bring the best available technology to Aussies in the bush.

“Transitioning from two geostationary satellites to a constellation of Low Earth Orbit satellites will help to ensure the nbn network is future-ready and delivers the best possible broadband experience to customers living and working in parts of regional, rural and remote Australia.

“We plan to bring faster, lower latency broadband to Australians living and working in regional, rural and remote areas, enabling their ongoing participation in the economy for work, study, telehealth, streaming entertainment and connecting with family and friends.

“This new LEO service will eventually replace our geostationary satellites, and we are committed to working with regional communities to ensure we provide continuity of service and make the transition as smooth and seamless as possible.”

Rajeev Badyal, Vice President, Technology at Amazon’s Project Kuiper, said:

“We’ve designed Project Kuiper to be the most advanced satellite system ever built, and we’re combining that innovation with Amazon’s long track record of making everyday life better for customers. We’re proud to be working with NBN to bring Kuiper to even more customers and communities across Australia and look forward to creating new opportunities for hundreds of thousands of people in rural and remote parts of the country.”

References:

https://www.lightreading.com/satellite/nbn-amazon-deal-to-bring-project-kuiper-to-australia-by-2026

https://www.aboutamazon.com/news/innovation-at-amazon/project-kuiper-satellite-internet-first-launch

Amazon launches first Project Kuiper satellites in direct competition with SpaceX/Starlink

Vodafone and Amazon’s Project Kuiper to extend 4G/5G in Africa and Europe

Amazon to Spend Billions on 38 Space Launches for Project Kuiper

Telstra selects SpaceX’s Starlink to bring Satellite-to-Mobile text messaging to its customers in Australia

GEO satellite internet from HughesNet and Viasat can’t compete with LEO Starlink in speed or latency

FCC: More competition for Starlink; freeing up spectrum for satellite broadband service

Open AI raises $8.3B and is valued at $300B; AI speculative mania rivals Dot-com bubble

According to the Financial Times (FT), OpenAI (the creator of ChatGPT) has raised another $8.3 billion in a massively oversubscribed funding round, including $2.8 billion from Dragoneer Investment Group, a San Francisco-based technology-focused fund. Leading VCs that also participated in the funding round included Founders Fund, Sequoia Capital, Andreessen Horowitz, Coatue Management, Altimeter Capital, D1 Capital Partners, Tiger Global and Thrive Capital, according to people with knowledge of the deal.

The oversubscribed funding round came months ahead of schedule. OpenAI initially raised $2.5 billion from VC firms in March when it announced its intention to raise $40 billion in a round spearheaded by SoftBank. The ChatGPT maker is now valued at $300 billion.

OpenAI’s annual recurring revenue has surged to $12bn, according to a person with knowledge of OpenAI’s finances, and the group is set to release its latest model, GPT-5, this month.

OpenAI is in the midst of complex negotiations with Microsoft that will determine its corporate structure. Rewriting the terms of the pair’s current contract, which runs until 2030, is seen as a prerequisite to OpenAI simplifying its structure and eventually going public. The two companies have yet to agree on key issues such as how long Microsoft will have access to OpenAI’s intellectual property. Another sticking point is the future of an “AGI clause”, which allows OpenAI’s board to declare that the company has achieved a breakthrough in capability called “artificial general intelligence,” which would then end Microsoft’s access to new models.

Additional risks include increasing competition from rivals such as Anthropic, which is itself in talks for a multibillion-dollar fundraising, and OpenAI’s continuing legal battle with Elon Musk. The FT also reported that Amazon is set to increase its already massive investment in Anthropic.

OpenAI CEO Sam Altman. The funding forms part of a round announced in March that values the ChatGPT maker at $300bn © Yuichi Yamazaki/AFP via Getty Images

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

While AI is the transformative technology of this generation, comparisons are increasingly being made with the Dot-com bubble. In 1999, the speculative frenzy for anything with a ‘.com’ at the end drove valuations and stock markets to unrealistic and clearly unsustainable levels. When that speculative bubble burst, the global economy fell into an extended recession in 2001-2002. As a result, analysts are now questioning the wisdom of the current AI speculative bubble and fearing dire consequences when it eventually bursts. Just as with the Dot-com bubble, AI revenues are nowhere near justifying AI company valuations, especially for private AI companies that are losing large amounts of money (see OpenAI’s losses detailed below).

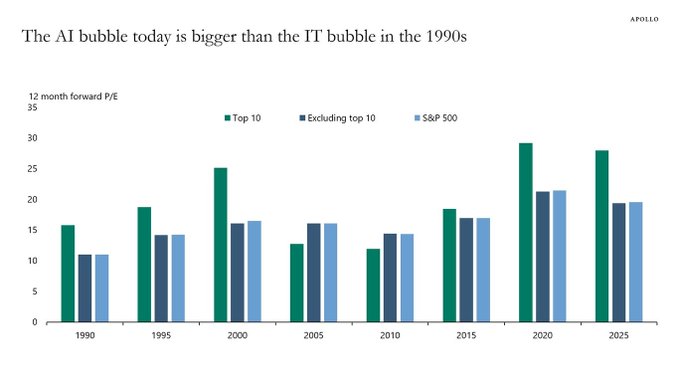

Torsten Slok, Partner and Chief Economist at Apollo Global Management via ZERO HEDGE on X: “The difference between the IT bubble in the 1990s and the AI bubble today is that the top 10 companies in the S&P 500 today (including Nvidia, Microsoft, Amazon, Google, and Meta) are more overvalued than the IT companies were in the 1990s.”

Private AI companies may take much longer to reach the lofty profit projections that institutional investors have assumed. Their reliance on projected future profits rather than current fundamentals is a dire warning sign to this author. OpenAI, for example, faces significant losses and aggressive revenue targets on its path to profitability. OpenAI reported an estimated loss of $5 billion in 2024, despite generating $3.7 billion in revenue. The company is projected to lose $14 billion in 2026, and its total losses from 2023 to 2028 are expected to reach $44 billion.
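The 2024 figures imply how much OpenAI spent relative to what it earned. The sketch treats the reported loss as simply total costs minus revenue, a simplification that ignores non-operating items and accounting details.

```python
REVENUE_2024 = 3.7  # USD billions
LOSS_2024 = 5.0     # USD billions, estimated

# Simplification: loss = total costs - revenue, so costs = revenue + loss
costs_2024 = REVENUE_2024 + LOSS_2024
cost_per_revenue_dollar = costs_2024 / REVENUE_2024

print(f"implied 2024 costs: ${costs_2024:.1f}B")
print(f"spent per $1 of revenue: ${cost_per_revenue_dollar:.2f}")
```

On that simplified reading, OpenAI spent about $8.7 billion in 2024, or roughly $2.35 for every dollar of revenue.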

Other AI bubble data points (publicly traded stocks):

- The proportion of the S&P 500 represented by the 10 largest companies is significantly higher now (almost 40%) compared to 25% in 1999. This indicates a more concentrated market driven by a few large technology companies deeply involved in AI development and adoption.

- Investment in AI infrastructure has reportedly exceeded the spending on telecom and internet infrastructure during the dot-com boom and continues to grow, suggesting a potentially larger scale of investment in AI relative to the prior period.

- Some indices tracking AI stocks have demonstrated exceptionally high gains in a short period, potentially surpassing the rates of the dot-com era, suggesting a faster build-up in valuations.

- The leading hyperscalers, such as Amazon, Microsoft, Google, and Meta, are investing vast sums in AI infrastructure to capitalize on the burgeoning AI market. Forecasts suggest these companies will collectively spend $381 billion in 2025 on AI-ready infrastructure, a significant increase from an estimated $270 billion in 2024.

Check out this YouTube video: “How AI Became the New Dot-Com Bubble”

References:

https://www.ft.com/content/76dd6aed-f60e-487b-be1b-e3ec92168c11

https://www.telecoms.com/ai/openai-funding-frenzy-inflates-the-ai-bubble-even-further

https://x.com/zerohedge/status/1945450061334216905

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

Huawei launches CloudMatrix 384 AI System to rival Nvidia’s most advanced AI system

Gen AI eroding critical thinking skills; AI threatens more telecom job losses

Dell’Oro: AI RAN to account for 1/3 of RAN market by 2029; AI RAN Alliance membership increases but few telcos have joined

Emerging Cybersecurity Risks in Modern Manufacturing Factory Networks

By Omkar Ashok Bhalekar with Ajay Lotan Thakur

Introduction

With the advent of new Industry 5.0 standards and ongoing advancements in Industry 4.0, the manufacturing landscape faces a revolutionary challenge. It demands sustainable use of environmental resources and also requires constant changes to industrial security postures to tackle modern threats. Technologies such as the Internet of Things (IoT) in manufacturing, private 4G/5G, cloud-hosted applications, edge computing and real-time streaming telemetry are fueling smart factories and making them more productive.

Although this evolution facilitates industrial automation, innovation and high productivity, it also greatly enlarges the attack surface exposed to cyberattacks. Industrial cybersecurity is essential for mission-critical manufacturing operations. It is a cornerstone for safeguarding factories and avoiding major downtime.

With the rapid convergence of IT and OT (Operational Technology), a hack or data breach can cause operational disruptions. These include production line stoppages, theft or loss of critical data, and heavy financial damage to an organization.

Industrial Networking

Why does modern manufacturing demand cybersecurity? Below are a few reasons why cybersecurity is essential in modern manufacturing:

- Convergence of IT and OT: Industrial control systems (ICS) which used to be isolated or air-gapped are now all inter-connected and hence vulnerable to breaches.

- Enlarged Attack Surface: Every device or component in the factory which is on the network is susceptible to threats and attacks.

- Financial Loss: Cyberattacks such as WannaCry, or attacks that crash systems into a Blue Screen of Death (BSOD), can cost millions of dollars and result in a complete shutdown of operations.

- Disruptions in Logistics Network: Hacks or cyberattacks can severely disrupt supply chains, causing shortages of essential parts.

- Legislative Compliance: Strict requirements from laws, agencies and standards such as CISA, NIST and ISA/IEC 62443 are proving crucial. They mandate frameworks to safeguard industries.

It is important to understand and adapt to changing trends in the cybersecurity domain, especially when several significant factors are at risk. History shows that humanity learns from its past mistakes. Technology advances at a fast pace, but external threats can stall that progress if they are not taken into account.

This attitude of adaptability needs to become an integral part of the mindset and practices in cybersecurity, and it should not be limited to industrial security. Such practices can scale across other technological fields. Securing industries also means more than physical security. It opens avenues for cybersecurity experts to learn and innovate in applications and software, such as Manufacturing Execution Systems (MES), that are crucial for critical operations.

Greatest Cyberattacks in Manufacturing of All Time:

It is pivotal to become familiar with the categories and scale of attacks that have historically hampered the manufacturing domain. This section highlights some real-world cybersecurity incidents.

Ransomware (Colonial Pipeline, y.2021):

This attack brought parts of the U.S. East Coast to a standstill through severe fuel and gasoline shortages after attackers obtained an employee’s credentials.

Cause: The root cause was a compromised VPN account password. The VPN account had not been used for a long time and lacked multi-factor authentication (MFA), and its password had appeared in a leaked password dump on the dark web. The ransomware group DarkSide exploited this entry point to gain access to Colonial Pipeline’s IT systems. The attackers did not initially penetrate operational technology (OT) systems, but the interdependence of IT and OT systems still caused operational impacts. Once inside, they escalated privileges and exfiltrated 100 GB of data within 2 hours. Ransomware was then deployed to encrypt critical business systems. Colonial Pipeline proactively shut down the pipeline, fearing lateral movement into OT networks.

Effect: The pipeline, which supplies nearly 45% of the fuel consumed on the U.S. East Coast, was shut down for 6 days. Mass fuel shortages occurred across several U.S. states, leading to public panic and fuel hoarding. Colonial Pipeline paid a $4.4 million ransom, of which approximately $2.3 million was later recovered by the FBI. The attack led to a Presidential Executive Order on Cybersecurity and heightened regulation of critical infrastructure cybersecurity. It exposed how vulnerabilities in business IT networks can have real-world impacts on critical infrastructure, even when OT is not directly targeted.

Industrial Sabotage (Stuxnet, y.2009):

This unprecedented software worm hijacked the control systems of a critical nuclear facility and physically sabotaged its centrifuges.

Cause: Nation-state-developed malware specifically targeted Industrial Control Systems (ICS) with an unprecedented level of sophistication. Stuxnet was reportedly developed jointly by the U.S. (NSA) and Israel (Unit 8200) under an operation code-named “Olympic Games”. The target was Iran’s uranium enrichment program at the Natanz Nuclear Facility. Because the target network was air-gapped, the worm was introduced via USB drives. It exploited four zero-day vulnerabilities in Windows, an unprecedented number at the time. It specifically targeted Siemens Step7 software running on Windows, which controls Siemens S7-300 PLCs. Stuxnet identified the systems controlling the centrifuges used for uranium enrichment and reprogrammed the PLCs to intermittently change the centrifuges’ rotational speed. This caused mechanical stress and failure while operators saw normal readings. It used rootkits at both the Windows and PLC levels to remain stealthy.

Effect: Stuxnet destroyed approximately 1,000 IR-1 centrifuges, roughly 10% of those installed at Natanz. It set back Iran’s nuclear program by an estimated 1-2 years. It introduced a new era of cyberwarfare in which malware caused physical destruction, and it raised global awareness of vulnerabilities in industrial control systems (ICS). Iran responded by accelerating the development of its own cyber capabilities. ICS/SCADA security became a top global priority, especially in the energy and defense sectors.

Upgrade spoofing (SolarWinds Orion Supply chain Attack, y.2020):

Attackers injected malicious code into legitimate software updates, which were then installed by thousands of customers.

Cause: The SolarWinds build environment was compromised, leading to a supply chain attack. Attackers known as Cozy Bear, linked to Russia’s foreign intelligence service, gained access to SolarWinds’ development pipeline and build infrastructure. They inserted malicious code into Orion Platform updates released between March and June 2020. Customers who downloaded these updates installed malware known as SUNBURST, which created a backdoor in Orion’s digitally signed DLLs. Over 18,000 customers were potentially affected, including around 100 high-value targets. After the initial compromise, attackers used manual lateral movement, privilege escalation and custom command-and-control (C2) infrastructure to exfiltrate data.

Effect: The breach reached major U.S. government agencies, including DHS, DoE, DoJ, Treasury, the State Department and more. It also affected top corporations such as Cisco, Intel, Microsoft and FireEye. FireEye discovered the breach after noticing unusual two-factor authentication activity. The attack exposed critical supply chain vulnerabilities and demonstrated how a single point of compromise could enable nationwide espionage. It prompted Executive Order 14028 on Improving the Nation’s Cybersecurity, zero trust mandates, and widespread adoption of Software Bill of Materials (SBOM) practices.
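An SBOM is a machine-readable inventory of the components inside a software product (SPDX and CycloneDX are common formats). Its security value comes from matching that inventory against vulnerability advisories. The Python sketch below is a minimal illustration under stated assumptions. The SBOM is reduced to a plain dictionary of component names and versions, and versions follow a dotted numeric scheme. The component names and advisory IDs are hypothetical placeholders, not real CVEs, and the code does not parse any real SBOM format.

```python
from typing import NamedTuple


class Advisory(NamedTuple):
    advisory_id: str  # hypothetical identifier; a real feed would carry CVE IDs
    product: str      # component name as it appears in the (simplified) SBOM
    fixed_in: str     # first version that contains the fix


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '2.10.3' into (2, 10, 3) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in version.split("."))


def missing_patches(sbom: dict[str, str], advisories: list[Advisory]) -> list[tuple[str, str]]:
    """Return (component, advisory_id) pairs where the installed version predates the fix."""
    findings = []
    for adv in advisories:
        installed = sbom.get(adv.product)
        if installed is not None and parse_version(installed) < parse_version(adv.fixed_in):
            findings.append((adv.product, adv.advisory_id))
    return findings


# Hypothetical SBOM extract and advisory feed.
sbom = {"hmi-server": "2.9.1", "historian": "5.2.0", "switch-os": "17.3.4"}
advisories = [
    Advisory("ADV-001", "hmi-server", "2.10.0"),
    Advisory("ADV-002", "historian", "5.1.7"),
    Advisory("ADV-003", "switch-os", "17.3.5"),
]
print(missing_patches(sbom, advisories))
```

Here the hmi-server and switch-os components are flagged. A naive string comparison would wrongly treat "2.9.1" as newer than "2.10.0", which is why versions are parsed into integer tuples first.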

Spyware (Pegasus, y.2016-2021):

Cause: NSO Group’s Pegasus spyware, sold to governments, leveraged zero-click and zero-day exploits. Pegasus can infect phones without any user interaction, which is why these are called zero-click exploits. It exploits vulnerabilities in WhatsApp, iMessage and browsers such as Safari on iOS, as well as zero-day vulnerabilities on Android devices. It is delivered via SMS, WhatsApp messages or silent push notifications. Once installed, it provides complete surveillance capability, including access to the microphone, camera, GPS, calls, photos, texts and encrypted apps. The zero-click iOS exploit ForcedEntry allowed complete compromise of an iPhone and bypassed Apple’s BlastDoor sandbox. Pegasus has also bypassed Android’s hardened security modules. The malware is extremely stealthy and often removes itself after execution.

Effect: Multiple governments used Pegasus to surveil activists, journalists, lawyers, opposition leaders and even heads of state. The 2021 Pegasus Project, led by Amnesty International and Forbidden Stories, revealed a leaked list of 50,000 phone numbers of potential targets. High-profile individuals were allegedly targeted, including international journalists and their associates, French President Emmanuel Macron and Indian opposition figures, triggering legal and political fallout. The U.S. Department of Commerce blacklisted NSO Group, and Apple filed a lawsuit against NSO Group in 2021. The scandal renewed debates over the ethics and regulation of commercial spyware.

Other common types of attacks:

Phishing and Smishing: These attacks send links via email (phishing) or SMS text messages (smishing). The messages appear legitimate but are crafted by bad actors for financial fraud or identity theft.

Social Engineering: Shoulder surfing may sound trivial, but it is a time-tested trick that has outsmarted even expert security personnel and led to data or credential leaks. Rather than exploiting technical vulnerabilities, social engineering targets human psychology to gain access to systems. The attacker manipulates people into revealing confidential information using techniques such as reconnaissance, engagement, baiting or quid pro quo offers.
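One simple technical control against phishing and smishing links is to compare each link’s hostname with the domains an organization actually uses. The sketch below is illustrative only. The allow-list `TRUSTED_DOMAINS` and its example domains are hypothetical. The heuristics (raw IP addresses, punycode labels, and near-miss spellings scored with Python’s `difflib`) are assumptions, and a real mail or SMS filter would combine many more signals.

```python
from difflib import SequenceMatcher
from ipaddress import ip_address
from urllib.parse import urlsplit

# Hypothetical allow-list of domains the organization really uses.
TRUSTED_DOMAINS = {"example-factory.com", "example-supplier.com"}


def _is_ip(host: str) -> bool:
    """True if the hostname is a literal IPv4/IPv6 address."""
    try:
        ip_address(host)
        return True
    except ValueError:
        return False


def classify_link(url: str, threshold: float = 0.8) -> str:
    """Return 'trusted', 'suspicious' or 'unknown' for a link found in a message."""
    host = (urlsplit(url).hostname or "").lower()
    if not host:
        return "suspicious"  # no parsable hostname at all
    # Exact match, or a subdomain of a trusted domain.
    for domain in TRUSTED_DOMAINS:
        if host == domain or host.endswith("." + domain):
            return "trusted"
    if _is_ip(host):
        return "suspicious"  # raw IP addresses rarely appear in legitimate links
    if any(label.startswith("xn--") for label in host.split(".")):
        return "suspicious"  # punycode labels can hide look-alike characters
    # Near-miss spellings such as 'examp1e-factory.com' are a classic phishing trick.
    for domain in TRUSTED_DOMAINS:
        if SequenceMatcher(None, host, domain).ratio() >= threshold:
            return "suspicious"
    return "unknown"


for link in ("https://portal.example-factory.com/login",
             "https://examp1e-factory.com/login",
             "http://192.168.0.10/update.exe"):
    print(link, "->", classify_link(link))
```

The similarity threshold trades false positives against missed look-alikes. Links classified as "unknown" are neither blocked nor trusted, and are a reasonable place to apply extra warnings or sandboxing.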

Security Runbook for Manufacturing Industries:

To continuously improve industrial security postures and preserve critical manufacturing operations, the following 11 security procedures and tactics are based on established frameworks and aim to provide 360-degree protection:

A. Incident Handling Tactics (First Line of Defense): Teams should continuously improve incident response with the help of documentation and response apps. Coordination between teams, clear communication, root cause analysis and reference documentation are key to successful incident response.

B. Zero Trust Principles (Never trust, always verify): Use strong device management tools to ensure all end devices are compliant, for example through trusted certificates, NAC and enforcement policies. Perform regular and random checks on users’ data access patterns, and assign role-based policies that limit access to critical resources.

C. Secure Communication and Data Protection: Use endpoint- or cloud-based security sessions with IPsec VPN tunnels so that all traffic can be controlled and monitored. All user data must be encrypted using data protection and recovery software such as BitLocker.

D. Secure IT Infrastructure: Harden network equipment such as switches, routers and WAPs with 802.1X (dot1x), port security and EAP-TLS or PEAP. Implement edge-based monitoring solutions to detect anomalies, and build redundant network infrastructure to minimize MTTR (mean time to repair).

E. Physical Security: Locks, badge readers or biometric systems are a must for all critical rooms and network cabinets. A security operations center (SOC) can help monitor for internal theft or sabotage incidents.

F. North-South and East-West Traffic Isolation: Safety traffic and external traffic can be rate limited using firewalls or edge compute devices. Complete isolation is an appealing goal but rarely achievable in practice, so traffic must be monitored constantly for security gaps (“punch-holes”).

G. Industrial Hardware for Industrial Applications: Use industrial-grade, IP67- or IP68-rated network equipment to avoid breakdowns caused by environmental factors. Localized industrial firewalls can provide the desired granularity at the edge, reducing the need to follow the Purdue model strictly.

H. Next-Generation Firewalls with Application-Level Visibility: Incorporate stateful, application-aware firewalls, which provide more control over zones and policies and can distinguish applications by their behavioral characteristics. Deploy tools that perform deep packet inspection and serve as platforms for intrusion detection and prevention (IDS/IPS).

I. Threat and Traffic Analyzers: Tools such as network traffic analyzers can provide Layer 1 to Layer 7 security monitoring by detecting and responding to malicious traffic patterns. Self-healing networks combine automation and monitoring tools to detect traffic anomalies and correct non-compliant network configurations.

J. Information Security and Software Management: Companies must maintain a repository of trusted certificates, software and releases, and regularly push patches for critical bugs. Keep constant track of release notes and CVEs (Common Vulnerabilities and Exposures) for all vendor software.

K. Idiot-Proofing (How NOT to Get Hacked): Regular employee training helps build awareness by familiarizing staff with cyberattacks and terms such as cryptojacking or honeynets. Encourage employees and workers to voice their concerns and resolve their questions about security threats, and give them a platform to do so.
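The rate limiting mentioned in item F is commonly implemented with a token bucket. Tokens refill at a fixed rate up to a burst limit, and each packet spends one token. The Python sketch below is a generic illustration of that technique, not the algorithm of any particular firewall or edge device. The rate and burst values in the usage note are arbitrary. Timestamps are passed in explicitly so that the behavior is deterministic.

```python
class TokenBucket:
    """Allow on average `rate` packets per second, with bursts of up to `capacity`."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate          # tokens refilled per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # start with a full bucket
        self.last = 0.0           # timestamp (seconds) of the previous decision

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Return True if a packet arriving at time `now` may pass, False to drop it."""
        elapsed = max(0.0, now - self.last)
        # Refill in proportion to elapsed time, never beyond the burst limit.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # over the limit: drop (or queue) the packet


bucket = TokenBucket(rate=10, capacity=5)
print([bucket.allow(0.0) for _ in range(8)])  # burst of eight packets at t = 0
print(bucket.allow(0.5))                      # half a second later
```

With these values the first five packets of the burst pass and the last three are dropped. Half a second later the bucket has refilled, so traffic flows again. In a live system, `now` would come from a monotonic clock such as `time.monotonic()`.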

Current Industry Perspective and Software Response

In response to the escalating tide of cyberattacks in manufacturing, from the Triton malware striking industrial safety controls to LockerGoga shutting down production at Norsk Hydro, the software industry has changed profoundly in how it supports operational resilience. Security companies are combining cutting-edge threat detection with ICS/SCADA systems. They deliver purpose-built solutions such as zero-trust network access, behavior-based anomaly detection and encrypted machine-to-machine communications. Companies such as Siemens and Claroty are leading the way by building security in by design rather than treating it as an afterthought. A prime example is Dragos, whose OT-specific threat intelligence and incident response solutions have become a focal point in the fight against nation-state attacks and ransomware operations targeting critical infrastructure.
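Behavior-based anomaly detection can be as simple as flagging intervals whose traffic volume deviates strongly from a learned baseline. For scale, the 100 GB exfiltrated from Colonial Pipeline in about two hours averages roughly 830 MB per minute. The z-score sketch below is only an illustration. The per-minute volumes (in MB) and the threshold of 3 standard deviations are assumptions, and commercial OT monitoring products use far richer behavioral models.

```python
from statistics import mean, stdev


def find_anomalies(baseline: list[float], observed: list[float], z_threshold: float = 3.0) -> list[int]:
    """Return indices of observed samples more than `z_threshold` std devs from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two baseline points
    if sigma == 0:
        # Perfectly flat baseline: any deviation at all is unusual.
        return [i for i, x in enumerate(observed) if x != mu]
    return [i for i, x in enumerate(observed) if abs(x - mu) / sigma > z_threshold]


# Hypothetical outbound volume from a plant network, in MB per minute.
baseline = [120, 130, 125, 118, 127, 122, 131, 124]
observed = [126, 119, 840, 128]  # third minute looks like bulk exfiltration
print(find_anomalies(baseline, observed))
```

A fixed baseline keeps the example short. A deployed detector would typically update its baseline over time and account for shift patterns, maintenance windows and backups.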

Bridging the Divide between IT and OT: A Two-Way Street

With the intensification of OT and IT convergence, perimeter-based defense is no longer sufficient. Manufacturers are embracing emerging strategies such as Cybersecurity Mesh Architecture (CSMA). They are also applying IT-centric philosophies such as DevSecOps within the OT environment to foster secure-by-default deployment habits. The trend also draws attention to IEC 62443 conformity and to NIST-based risk assessment frameworks tailored to manufacturing. With legacy PLCs now networked and exposed to internet-borne threats, companies are embracing micro-segmentation, secure remote access and real-time monitoring solutions that unify security across both environments. Learn how Schneider Electric is empowering manufacturers to securely link IT/OT systems with scalable cybersecurity programs.
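Micro-segmentation is often described in IEC 62443 terms as zones connected by explicitly defined conduits. The sketch below models that idea as a default-deny check on inter-zone flows. The zone names and conduits are hypothetical. The ports are the well-known defaults for HTTPS (443), OPC UA (4840) and Modbus/TCP (502). Intra-zone traffic is allowed only to keep the example short; finer micro-segmentation would filter within zones as well.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src_zone: str
    dst_zone: str
    protocol: str
    port: int


# Hypothetical conduits: the only zone-to-zone flows that are permitted (directional).
CONDUITS = {
    Flow("enterprise", "dmz", "tcp", 443),       # HTTPS to a jump host or data mirror
    Flow("dmz", "supervisory", "tcp", 4840),     # OPC UA towards SCADA/HMI
    Flow("supervisory", "control", "tcp", 502),  # Modbus/TCP from HMI to PLCs
}


def permit(flow: Flow) -> bool:
    """Default deny: inter-zone traffic passes only if it matches a defined conduit."""
    if flow.src_zone == flow.dst_zone:
        return True  # simplification: intra-zone traffic is not filtered here
    return flow in CONDUITS


print(permit(Flow("supervisory", "control", "tcp", 502)))  # defined conduit
print(permit(Flow("enterprise", "control", "tcp", 502)))   # skips the DMZ: denied
```

Modelling conduits as explicit, directional tuples makes the policy auditable. Anything not written down is denied, which mirrors the zero-trust stance described above.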

Conclusion

In a nutshell, modern manufacturing is no longer just about fast input-output systems that scale and stay productive. It is an ecosystem in which cybersecurity and production must work in harmony. Just as healthcare systems are critical to people, modern factories are critical to manufacturing. Repeated cyberattacks on critical infrastructure such as pipelines, nuclear plants and power grids over the past 30 years demand the world’s attention. They also call for regulatory standards that every entity in manufacturing must follow.

As humanity sprints towards the next industrial revolution, it is imperative to make industrial cybersecurity a keystone of upcoming critical manufacturing facilities and a strong foundation for operational excellence. Now is the right time to invest in industrial security. The leaders who choose to be “Cyberfacturers” will survive to tell the tale, and the rest may serve as stark reminders of what happens when pace outruns security.

References

- https://www.cisa.gov/topics/industrial-control-systems

- https://www.nist.gov/cyberframework

- https://www.isa.org/standards-and-publications/isa-standards/isa-standards-committees/isa99

- https://www.cisa.gov/news-events/news/attack-colonial-pipeline-what-weve-learned-what-weve-done-over-past-two-years

- https://www.law.georgetown.edu/environmental-law-review/blog/cybersecurity-policy-responses-to-the-colonial-pipeline-ransomware-attack/

- https://www.hbs.edu/faculty/Pages/item.aspx?num=63756

- https://spectrum.ieee.org/the-real-story-of-stuxnet

- https://www.washingtonpost.com/world/national-security/stuxnet-was-work-of-us-and-israeli-experts-officials-say/2012/06/01/gJQAlnEy6U_story.html

- https://www.kaspersky.com/resource-center/definitions/what-is-stuxnet

- https://www.malwarebytes.com/stuxnet

- https://www.zscaler.com/resources/security-terms-glossary/what-is-the-solarwinds-cyberattack

- https://www.gao.gov/blog/solarwinds-cyberattack-demands-significant-federal-and-private-sector-response-infographic

- https://www.rapid7.com/blog/post/2020/12/14/solarwinds-sunburst-backdoor-supply-chain-attack-what-you-need-to-know/

- https://www.fortinet.com/resources/cyberglossary/solarwinds-cyber-attack

- https://www.amnesty.org/en/latest/research/2021/07/forensic-methodology-report-how-to-catch-nso-groups-pegasus/

- https://citizenlab.ca/2023/04/nso-groups-pegasus-spyware-returns-in-2022/

- https://thehackernews.com/2023/04/nso-group-used-3-zero-click-iphone.html

- https://www.securityweek.com/google-says-nso-pegasus-zero-click-most-technically-sophisticated-exploit-ever-seen/

- https://www.nist.gov/itl/smallbusinesscyber/guidance-topic/phishing

- https://www.blumira.com/blog/social-engineering-the-human-element-in-cybersecurity

- https://www.dragos.com/cyber-threat-intelligence/

- https://mesh.security/security/what-is-csma/

- https://download.schneider-electric.com/files?p_Doc_Ref=IT_OT&p_enDocType=User+guide&p_File_Name=998-20244304_Schneider+Electric+Cybersecurity+White+Paper.pdf

- https://insanecyber.com/understanding-the-differences-in-ot-cybersecurity-standards-nist-csf-vs-62443/

- https://blog.se.com/sustainability/2021/04/13/it-ot-convergence-in-the-new-world-of-digital-industries/

About the Author:

Omkar Bhalekar is a senior network engineer and technology enthusiast specializing in data center architecture, manufacturing infrastructure and sustainable solutions. He has extensive experience in designing resilient industrial networks and building smart factories and AI data centers with scalable networks. He is also the author of the book Autonomous and Predictive Networks: The future of Networking in the Age of AI and co-author of Quantum Ops – Bridging Quantum Computing & IT Operations. Omkar writes to simplify complex technical topics for engineers, researchers and industry leaders.

China gaining on U.S. in AI technology arms race: silicon, models and research

Introduction:

According to the Wall Street Journal, the U.S. maintains its early lead in AI technology, with Silicon Valley home to the most popular AI models and the most powerful AI chips (from Santa Clara-based Nvidia and AMD). However, China has shown a willingness to spend whatever it takes to take the lead in AI models and silicon.

The rising popularity of DeepSeek, the Chinese AI startup, has buoyed Beijing’s hopes that it can become more self-sufficient. Huawei has published several papers this year detailing how its researchers used its homegrown AI chips to build large language models without relying on American technology.

“China is obviously making progress in hardening its AI and computing ecosystem,” said Michael Frank, founder of think tank Seldon Strategies.

AI Silicon:

Morgan Stanley analysts forecast that domestic makers will supply 82% of China’s AI chips by 2027, up from 34% in 2024. China’s government has played an important role, funding new chip initiatives and other projects. In July, the local government in Shenzhen, where Huawei is based, said it was raising around $700 million to invest in strengthening an “independent and controllable” semiconductor supply chain.

During a meeting with President Xi Jinping in February, Huawei Chief Executive Officer Ren Zhengfei told Xi about “Project Spare Tire,” an effort by Huawei and 2,000 other enterprises to help China’s semiconductor sector achieve a self-sufficiency rate of 70% by 2028, according to people familiar with the meeting.

……………………………………………………………………………………………………………………………………………

AI Models:

Prodded by Beijing, Chinese financial institutions, state-owned companies and government agencies have rushed to deploy Chinese-made AI models, including DeepSeek [1.] and Alibaba’s Qwen. That has fueled demand for homegrown AI technologies and fostered domestic supply chains.

Note 1. DeepSeek’s V3 large language model matched many performance benchmarks of rival AI programs developed in the U.S. at a fraction of the cost. DeepSeek’s open-weight models have been integrated into many hospitals in China for various medical applications.

In recent weeks, a flurry of Chinese companies has flooded the market with open-source AI models, many of which claim to surpass DeepSeek’s performance in certain use cases. Open-source models are freely accessible for modification and deployment.

The Chinese government is actively supporting AI development through funding and policy initiatives, including promoting the use of Chinese-made AI models in various sectors.

Meanwhile, OpenAI’s CEO Sam Altman said his company had pushed back the release of its open-source AI model indefinitely for further safety testing.

AI Research:

China has taken a commanding lead in the exploding field of artificial intelligence (AI) research, despite U.S. restrictions on exporting key computing chips to its rival, finds a new report.

The analysis of the proprietary Dimensions database, released yesterday, finds that the number of AI-related research papers grew from fewer than 8,500 published in 2000 to more than 57,000 in 2024. In 2000, China-based scholars produced just 671 AI papers. In 2024, however, their 23,695 AI-related publications topped the combined output of the United States (6,378), the United Kingdom (2,747) and the European Union (10,055), which together totaled 19,180.

“U.S. influence in AI research is declining, with China now dominating,” Daniel Hook, CEO of Digital Science, which owns the Dimensions database, writes in the report DeepSeek and the New Geopolitics of AI: China’s ascent to research pre-eminence in AI.

In 2024, China’s researchers filed 35,423 AI-related patent applications, more than 13 times the 2,678 patents filed in total by the U.S., the U.K., Canada, Japan and South Korea.

References:

https://www.wsj.com/tech/ai/how-china-is-girding-for-an-ai-battle-with-the-u-s-5b23af51

Huawei launches CloudMatrix 384 AI System to rival Nvidia’s most advanced AI system

U.S. export controls on Nvidia H20 AI chips enables Huawei’s 910C GPU to be favored by AI tech giants in China

Goldman Sachs: Big 3 China telecom operators are the biggest beneficiaries of China’s AI boom via DeepSeek models; China Mobile’s ‘AI+NETWORK’ strategy

Gen AI eroding critical thinking skills; AI threatens more telecom job losses

Softbank developing autonomous AI agents; an AI model that can predict and capture human cognition

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Big Tech and VCs invest hundreds of billions in AI while salaries of AI experts reach the stratosphere

ZTE’s AI infrastructure and AI-powered terminals revealed at MWC Shanghai

Deloitte and TM Forum: How AI could revitalize the ailing telecom industry?

Ericsson completes Aduna joint venture with 12 telcos to drive network API adoption

Today, Ericsson announced the completion of the equity investments by twelve global communication service providers (CSPs) into its subsidiary Aduna, formally establishing Aduna as a 50:50 joint venture. Aduna was created to combine and sell aggregated network Application Programming Interfaces (APIs) globally. It has been operational since the deal was signed on 11 September 2024. Aduna is now owned by AT&T, Bharti Airtel, Deutsche Telekom, Ericsson, KDDI, Orange, Reliance Jio, Singtel, Telefonica, Telstra, T-Mobile, Verizon and Vodafone.

The network APIs are based on the CAMARA open source project of the GSMA and Linux Foundation. They are designed to let network operators offer services such as service level assurance, fraud prevention and authentication programmatically to application developers. This mirrors how those same developers can easily spin up compute instances on cloud providers like Google Cloud and Microsoft Azure. For telcos and other network operators, network APIs promise a way to leverage 5G networks for new business models and revenue streams.
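To make "programmatic" access concrete, here is a sketch of a fraud-prevention style request modeled on the CAMARA SIM Swap API. That API lets an application ask whether the SIM behind a phone number changed within a recent time window. The base URL, API version path and token below are placeholders, not a real endpoint. The request fields (`phoneNumber`, `maxAge` in hours) should be checked against the current CAMARA specification. The code only builds the request with Python’s standard library and does not send it.

```python
import json
import urllib.request

# Hypothetical operator or aggregator endpoint; the real base URL and API version
# come from the provider's developer portal.
BASE_URL = "https://api.example-operator.com/sim-swap/v0"


def build_sim_swap_check(phone_number: str, max_age_hours: int, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a SIM-swap check request modeled on the CAMARA SIM Swap API."""
    body = json.dumps({"phoneNumber": phone_number, "maxAge": max_age_hours}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/check",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",  # token obtained via the provider's OAuth flow
        },
    )


req = build_sim_swap_check("+34600000000", 240, "TOKEN")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

A bank, for example, could run such a check before approving a high-value transfer and add friction if the SIM was swapped recently. Sending the request (e.g. with `urllib.request.urlopen(req)`) requires real credentials from an operator or aggregator.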

The Aduna ecosystem includes additional CSPs worldwide, as well as major developer platform companies, global system integration (GSI) companies, communication platform-as-a-service (CPaaS) companies and independent software vendor (ISV) companies. To date these include: e& (UAE, formerly Etisalat), Bouygues Telecom, Free, CelcomDigi, Softbank, NTT DOCOMO, Google Cloud, Vonage, Sinch, Infobip, Enstream, Bridge Alliance, Syniverse, JT Global, Microsoft, Wipro and Tech Mahindra. Each plays a vital role in advancing the reach and impact of network APIs worldwide.

Former Vonage COO Anthony Bartolo became Aduna CEO this past January. He said in a statement:

“The closing of the transaction is another important step for Aduna. In just ten months we have built an impressive ecosystem comprising the biggest names in telecoms and the wider ICT industry. The closing provides renewed motivation for Aduna to accelerate the adoption of network APIs by developers on a global scale. This includes encouraging more telecom operators to join the new company, further driving the industry and developer experience.”

………………………………………………………………………………………………………………………………………………………………………………………………………….

A February 2024 McKinsey article stated:

Network APIs are the interlocking puzzle pieces that connect applications to one another and to telecom networks. As such, they are critical to companies seamlessly tapping into 5G’s powerful capabilities for hundreds of potential use cases, such as credit card fraud prevention, glitch-free videoconferencing, metaverse interactions, and entertainment. If developers have access to the right network APIs, enterprises can create 5G-driven applications that leverage features like speed on demand, low-latency connections, speed tiering, and edge compute discovery.

In addition to enhancing today’s use cases, network APIs can lay the foundation for entirely new ones. Remotely operated equipment, semi-autonomous vehicles in production environments, augmented reality gaming, and other use cases could create substantial value in a broad range of industries. By enabling these innovations, telecom operators can position themselves as essential partners to enterprises seeking to accelerate their digital transformations.

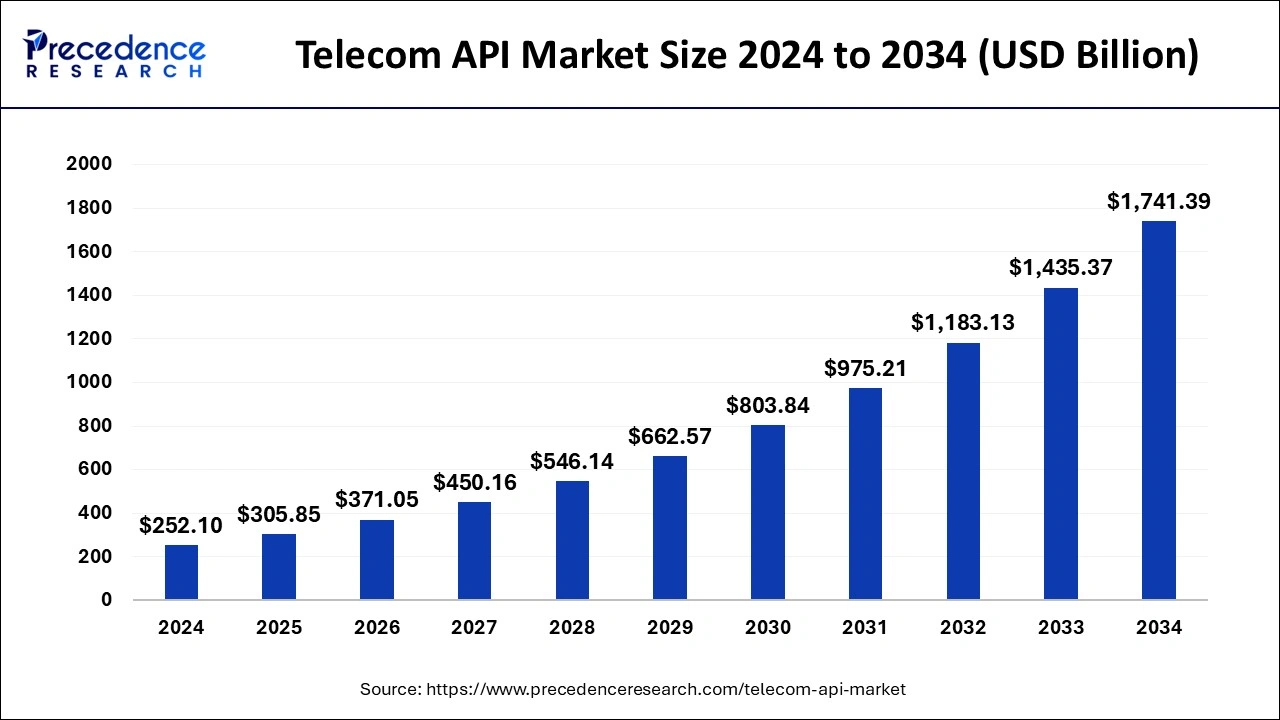

Over the next five to seven years, we estimate the network API market could unlock around $100 billion to $300 billion in connectivity- and edge-computing-related revenue for operators while generating an additional $10 billion to $30 billion from APIs themselves. But telcos won’t be the only ones vying for this lucrative pool. In fact, with the market structure currently in place, they would cede as much as two-thirds of the value creation to other players in the ecosystem, such as cloud providers and API aggregators—repeating the industry’s frustrating experience of the past two decades.

……………………………………………………………………………………………………………………………………………………………………………………………………………..

Telecom API Market Size and Forecast 2025 to 2034 by Precedence Research:

The global telecom API market size was estimated at USD 252.10 billion in 2024 and is anticipated to reach around USD 1,741.39 billion by 2034, expanding at a CAGR of 21.32% from 2025 to 2034.
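The forecast’s three figures are mutually consistent. Compounding USD 252.10 billion at 21.32% a year over the ten annual steps from the 2024 base to 2034 gives roughly USD 1,741 billion. A quick check in Python follows; the helper names are ours.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


# Figures from the Precedence Research forecast (USD billions).
print(f"implied CAGR: {cagr(252.10, 1741.39, 10):.2%}")
print(f"projected 2034 size: {project(252.10, 0.2132, 10):,.1f}")
```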

Aduna Global can potentially scale network APIs across regions, and maybe even globally, Leonard Lee, executive analyst at neXt Curve, told Fierce Network. Aduna can potentially help deliver the trust and regulatory clients that network APIs need for widespread adoption. Aduna itself won’t directly offer services, Lee said. Aduna partners such as JT Global, Vonage and AWS already offer fraud prevention services that leverage relevant security- and authentication-related network APIs.

“You won’t likely see Aduna Global offer a fraud prevention service,” he added. “You need to look at Vonage, Infobip, Tech Mahindra and the like to offer these solutions that will likely be accessible to developers through APIs.”

……………………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://www.ericsson.com/en/press-releases/2025/7/ericsson-announces-completion-of-aduna-transaction

New venture to sell Network Application Programming Interfaces (APIs) on a global scale

https://www.precedenceresearch.com/telecom-api-market

Telefónica and Nokia partner to boost use of 5G SA network APIs

Countdown to Q-day: How modern-day Quantum and AI collusion could lead to The Death of Encryption

By Omkar Ashok Bhalekar with Ajay Lotan Thakur

Behind the quiet corridors of research laboratories and the whir of supercomputer data centers, a stealth revolution is gathering force, one with the potential to reshape the very foundations of cybersecurity. At its heart are qubits, the building blocks of quantum computing, and the accelerant force of generative AI. Combined, they form a double-edged sword capable of breaking today’s encryption and opening the door to an era of both vast opportunity and unprecedented danger.

Modern Cryptography is Fragile

Modern-day computer security relies on the intractability of certain mathematical problems. RSA encryption, introduced in 1977 by Rivest, Shamir, and Adleman, rests on the premise that factoring a 2048-bit modulus (the product of two large primes) is computationally infeasible for classical computers (RSA paper, 1978). Diffie-Hellman key exchange, described by Whitfield Diffie and Martin Hellman in 1976, lets two parties agree on a shared key over an insecure channel, with its security resting on the difficulty of the discrete logarithm problem (Diffie-Hellman paper, 1976). Elliptic-Curve Cryptography (ECC), proposed independently by Victor Miller and Neal Koblitz in 1985, is based on the hardness of the elliptic-curve discrete logarithm problem and offers comparable resistance to brute-force attacks with much smaller key sizes for the same level of security (Koblitz ECC paper, 1987).
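To make the factoring assumption concrete, here is a minimal textbook-RSA sketch with deliberately tiny primes (61 and 53, a common teaching example). It is not real RSA: there is no padding and the key is trivially small. The trial-division helper is a hypothetical stand-in for any factoring method. The point is that whoever can factor the public modulus can rebuild the private key.

```python
from math import gcd

# Textbook-sized RSA parameters (never use in practice): n = 61 * 53.
p, q = 61, 53
n = p * q                      # public modulus, 3233
phi = (p - 1) * (q - 1)        # Euler's totient, 3120
e = 17                         # public exponent, must be coprime to phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)            # private exponent via modular inverse (Python 3.8+)

message = 65
ciphertext = pow(message, e, n)        # encrypt with the public key (n, e)
recovered = pow(ciphertext, d, n)      # decrypt with the private key d


def factor_by_trial_division(modulus: int) -> tuple[int, int]:
    """Brute-force factoring: instant for 3233, hopeless for a 2048-bit modulus."""
    f = 2
    while f * f <= modulus:
        if modulus % f == 0:
            return f, modulus // f
        f += 1
    raise ValueError("modulus is prime")


# An attacker who can factor n rebuilds phi and therefore the private key.
p_found, q_found = factor_by_trial_division(n)
d_attacker = pow(e, -1, (p_found - 1) * (q_found - 1))
print(ciphertext, recovered, d_attacker == d)
```

Trial division needs roughly √n steps, which is why a 2048-bit modulus is out of reach classically; a large quantum computer running Shor’s algorithm would remove that barrier.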

But quantum computing flips the script. Shor’s algorithm would let a sufficiently powerful quantum computer factor large numbers and compute discrete logarithms in polynomial time, dramatically faster than the best known classical methods, rendering RSA, Diffie-Hellman, and ECC insecure. Grover’s algorithm, meanwhile, gives attackers only a quadratic speedup against symmetric-key systems such as AES, effectively halving their key strength.
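Shor’s algorithm reduces factoring to finding the period (order) r of a^x mod N; only that period-finding step needs a quantum computer. The sketch below replaces the quantum step with a brute-force loop, which is exponentially slow classically, so it only runs for toy values such as N = 15. The function names are illustrative, not taken from any quantum SDK.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (a must be coprime to n).

    This brute-force loop stands in for the quantum period-finding step of
    Shor's algorithm; classically it takes exponential time in the bit length.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_postprocessing(n: int, a: int):
    """Turn the order of a mod n into two factors of n, or None if a is unlucky."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None               # a**(r/2) == -1 mod n: retry with a different a
    return gcd(y - 1, n), gcd(y + 1, n)


print(find_order(7, 15), shor_classical_postprocessing(15, 7))
```

For N = 15 and a = 7, the order is 4, so 7² mod 15 = 4 and gcd(3, 15) and gcd(5, 15) give the factors 3 and 5.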

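Problems that would take classical computers centuries or millennia could, with enough scale, be reduced to days or even hours on a quantum computer. A widely cited 2019 estimate put the cost of cracking RSA-2048 with Shor’s algorithm at about 20 million noisy physical qubits running for roughly eight hours, and that figure keeps falling: a 2025 estimate by a Google researcher lowered it to fewer than one million qubits, at the cost of about a week of runtime.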

Generative AI adds fuel to the fire

While quantum computing threatens to undermine encryption itself, generative AI is playing an equally insidious role. By automating and scaling tasks such as malware development, phishing-email creation, and synthetic-identity generation, generative AI tools such as large language models and diffusion-based image generators are lowering the bar for sophisticated cyberattacks.

Even worse, generative AI can be applied to model and experiment with vulnerabilities in cryptographic implementations, including post-quantum cryptography. It can also help train reinforcement learning agents that optimize side-channel attacks, or profile quantum circuits to uncover new behaviors.

With quantum computing on the horizon, generative AI is both a sophisticated research tool and a potential weapon. On the one hand, security researchers use generative AI to produce, examine, and predict vulnerabilities in cryptographic systems to inform the development of quantum-resistant algorithms. On the other, malicious actors exploit its ability to automate the production of complex attack vectors such as advanced malware, phishing campaigns, and synthetic identities, sharply reducing the barrier to high-impact cyberattacks. This dual-use nature radically shortens the time adversaries need to exploit broken or transitional cryptographic infrastructure, narrowing the window defenders have to deploy effective quantum-safe security solutions.

Real-World Implications

The impact of broken cryptography would be real, and it would put the foundations of everyday life at risk:

1. Online Banking (TLS/HTTPS)

When you use your bank’s website, the “https” in the address bar signifies communication encrypted with TLS (Transport Layer Security). Most TLS deployments rely on RSA or ECC to authenticate the server and securely establish session keys. A quantum attack on those key exchanges would let an attacker recover the session keys and decrypt the protected traffic, including sensitive banking data.
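The toy sketch below illustrates why recorded key exchanges are at risk. It assumes a textbook Diffie-Hellman group (p = 23, g = 5) and a hypothetical SHA-256 key derivation in place of TLS 1.3’s HKDF. An eavesdropper sees only the public values, but solving the discrete logarithm yields the session key. Brute force does that here; Shor’s algorithm would make it feasible at real key sizes.

```python
import hashlib

# Toy Diffie-Hellman group (textbook values; real TLS uses large groups or ECDHE).
P, G = 23, 5

client_secret, server_secret = 6, 15           # ephemeral private keys
client_public = pow(G, client_secret, P)       # sent in the clear
server_public = pow(G, server_secret, P)       # sent in the clear

# Both sides compute the same shared secret without ever transmitting it.
client_shared = pow(server_public, client_secret, P)
server_shared = pow(client_public, server_secret, P)


def session_key(shared_secret: int) -> bytes:
    """Hypothetical key derivation; real TLS 1.3 uses HKDF over the handshake."""
    return hashlib.sha256(shared_secret.to_bytes(32, "big")).digest()


def discrete_log(base: int, target: int, modulus: int) -> int:
    """Brute-force discrete log, standing in for what Shor's algorithm does efficiently."""
    value = 1
    for exponent in range(modulus):
        if value == target:
            return exponent
        value = (value * base) % modulus
    raise ValueError("no solution")


# A passive eavesdropper who recorded only the public values recovers the key.
recovered_secret = discrete_log(G, client_public, P)
attacker_key = session_key(pow(server_public, recovered_secret, P))
print(client_shared, attacker_key == session_key(client_shared))
```

Because the attacker only needs the recorded handshake, traffic captured today can be decrypted whenever the discrete logarithm becomes tractable.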

2. Cryptocurrencies

Bitcoin, Ethereum, and other cryptocurrencies use ECDSA (Elliptic Curve Digital Signature Algorithm) to sign transactions. If quantum computers can break ECDSA, an attacker could derive private keys from public keys and forge signatures to steal digital assets. Researchers have already modeled scenarios in which a quantum computer could extract private keys from public blockchain data, enabling theft and forged transactions.
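The sketch below uses a small textbook curve (y² = x³ + 2x + 2 over GF(17), generator (5, 1) of order 19) to illustrate the elliptic-curve discrete logarithm problem that ECDSA’s security rests on. It is not ECDSA itself. It only derives a public key from a private key and then recovers the private key by brute force, which works here only because the group has 19 elements.

```python
# Toy curve y^2 = x^3 + 2x + 2 over GF(17), a common textbook example.
P_MOD, A = 17, 2
G = (5, 1)          # generator; its order on this curve is 19
INFINITY = None     # point at infinity (the group identity)


def ec_add(p1, p2):
    """Add two points with the affine chord-and-tangent rule."""
    if p1 is INFINITY:
        return p2
    if p2 is INFINITY:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P_MOD == 0:
        return INFINITY                     # P + (-P) = O
    if p1 == p2:
        slope = (3 * x1 * x1 + A) * pow(2 * y1, -1, P_MOD) % P_MOD
    else:
        slope = (y2 - y1) * pow((x2 - x1) % P_MOD, -1, P_MOD) % P_MOD
    x3 = (slope * slope - x1 - x2) % P_MOD
    y3 = (slope * (x1 - x3) - y1) % P_MOD
    return (x3, y3)


def ec_mul(k, point):
    """Scalar multiplication k * point by double-and-add."""
    result, addend = INFINITY, point
    while k:
        if k & 1:
            result = ec_add(result, addend)
        addend = ec_add(addend, addend)
        k >>= 1
    return result


def brute_force_private_key(public_point, max_k=10_000):
    """Find k with k * G == public_point by walking G, 2G, 3G, ...

    Feasible only for a tiny group; on a 256-bit curve this is the problem
    Shor's algorithm would solve on a large quantum computer.
    """
    current = INFINITY
    for k in range(1, max_k + 1):
        current = ec_add(current, G)
        if current == public_point:
            return k
    return None


private_key = 7
public_key = ec_mul(private_key, G)     # the kind of value visible on a public chain
print(public_key, brute_force_private_key(public_key))
```

Double-and-add keeps key generation fast, while recovering the key by walking the group takes time proportional to the group size. That asymmetry is exactly what a quantum attack would erase.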

3. Government Secrets and Intelligence Archives

National security agencies all over the world rely heavily on encryption algorithms such as RSA and AES to protect sensitive information, including secret messages, intelligence briefs, and critical infrastructure data. Of these, AES-256 is expected to remain secure even against quantum computers: as a symmetric-key cipher, it faces only Grover’s quadratic speedup, which cuts its effective strength to about 128 bits, so brute-force attacks remain enormously expensive in time and resources. Conversely, asymmetric algorithms such as RSA and ECC, which underpin most public key infrastructures, are fundamentally vulnerable to quantum attacks that can solve the hard mathematical problems their security relies on.
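The AES-256 argument is simple arithmetic. Assume an ideal Grover search needs about (π/4)·√(2^k) iterations to find a k-bit key, ignoring error-correction overhead and the fact that Grover parallelizes poorly. Under that assumption, the sketch below shows why AES-256 keeps roughly 128-bit strength while AES-128 drops to roughly 64 bits.

```python
import math


def grover_iterations_log2(key_bits: int) -> float:
    """log2 of the ~(pi/4) * sqrt(2**key_bits) Grover iterations for key search."""
    return math.log2(math.pi / 4) + key_bits / 2


for bits in (128, 256):
    print(f"AES-{bits}: classical ~2^{bits} trials, "
          f"Grover ~2^{grover_iterations_log2(bits):.1f} iterations")
```

Working in log2 avoids computing astronomically large numbers, and it makes the "halving" of key strength directly visible.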

This disparity creates a huge security gap. Data that is well protected today may not stay that way once sufficiently powerful quantum computers become available, a scenario often called the “harvest now, decrypt later” threat. Intelligence agencies and adversaries alike could be quietly collecting and storing encrypted communications, confident that quantum technology will soon be able to decrypt the stockpile. The Snowden disclosures put this threat in the spotlight by revealing that the NSA collects and retains vast amounts of global internet traffic, such as diplomatic cables, military orders, and personal communications. These repositories of encrypted data, unreadable today, are a hidden vulnerability: when Q-Day (the arrival of practical quantum computers capable of defeating RSA and ECC) comes, the confidentiality of decades’ worth of sensitive communications could be irretrievably lost.
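One common way to reason about “harvest now, decrypt later” is the inequality usually attributed to Michele Mosca. If the time data must stay secret (x) plus the time needed to migrate (y) exceeds the time until Q-Day (z), that data is already at risk. The function below is a minimal sketch of that check; the inputs in the example are hypothetical and are not forecasts.

```python
def at_risk_today(shelf_life_years: float, migration_years: float,
                  years_until_q_day: float) -> bool:
    """Mosca-style check: data is exposed if it must stay secret past Q-Day.

    shelf_life_years   -- how long the data must remain confidential (x)
    migration_years    -- how long moving to quantum-safe crypto will take (y)
    years_until_q_day  -- estimated time until a cryptographically relevant
                          quantum computer exists (z)
    """
    return shelf_life_years + migration_years > years_until_q_day


# Hypothetical inputs for illustration only.
print(at_risk_today(shelf_life_years=25, migration_years=5, years_until_q_day=15))
print(at_risk_today(shelf_life_years=1, migration_years=2, years_until_q_day=15))
```

Long-lived secrets such as intelligence archives fail the check even under optimistic Q-Day estimates, because their shelf life alone can exceed z.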

Such a compromise would have apocalyptic consequences for national security and geopolitical stability, exposing classified negotiations, intelligence operations, and war plans to adversaries. This prospect has compelled governments and security agencies to accelerate the transition to post-quantum cryptography standards and to explore quantum-resistant encryption schemes that safeguard the confidentiality and integrity of information in the quantum era.

Arms Race Toward Post-Quantum Cryptography

In response, organizations like NIST are leading the development of post-quantum cryptographic standards, selecting algorithms believed to be quantum resistant. In August 2024, NIST published its first three finalized standards: FIPS 203 (ML-KEM), FIPS 204 (ML-DSA), and FIPS 205 (SLH-DSA). But migration is glacial. Retrofitting billions of devices and services with new cryptographic foundations is a logistical nightmare. It requires not just software updates but hardware upgrades, re-certification, interoperability testing, and compatibility testing with worldwide networks and critical infrastructure systems, all while minimizing downtime and security exposure.

Building a quantum computer large enough to factor RSA-2048 is an enormous task. By current estimates, it would require thousands of error-corrected logical qubits, built from hundreds of thousands to millions of physical qubits with very low error rates. Today’s leading quantum processors have on the order of a hundred to a thousand physical qubits, and their error rates are too high to sustain complex computations for long. However, with continued advances in quantum error correction, materials research, and qubit coherence times, specialists warn that practical quantum decryption capability may arrive sooner than most organizations are prepared for.

This transition period, when legacy and quantum-safe environments coexist, is where danger is greatest. Attackers can use generative AI to seek out hybrid environments that still run legacy encryption, automating the identification of old cryptographic implementations, producing targeted exploits en masse, and orchestrating multi-step attacks that overwhelm conventional security monitoring and patching.

Preparing for the Convergence

To defend against this coming storm, security strategy must evolve:

- Inventory Cryptographic Assets: Firms must take stock of where and how encryption is being used across their environments.

- Adopt Crypto-Agility: Systems need to be designed so they can switch between encryption algorithms without a full redesign.

- Test Against Quantum Threats: Use AI tools to stress-test encryption schemes against simulated quantum-era threats.

- Adopt PQC and Zero-Trust Models: Shift toward quantum-resistant cryptography and architectures that treat breach as the default assumption.
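The first two recommendations can be sketched in a few lines. For the inventory step, the example below assumes a plain-text configuration and a hypothetical, deliberately incomplete list of quantum-vulnerable algorithm names. For crypto-agility, it uses HMAC with standard-library hash functions purely as a stand-in; these are not post-quantum algorithms. Callers ask a policy table for an algorithm, and each tag records which algorithm produced it, so the policy can change without touching callers or invalidating older data.

```python
import hmac
import re

# Step 1, inventory: flag config lines naming public-key algorithms that Shor's
# algorithm would break. This pattern is a hypothetical, incomplete starting point.
QUANTUM_VULNERABLE = re.compile(
    r"\b(RSA|DSA|ECDSA|ECDHE?|DHE?|Ed25519|X25519)\b", re.IGNORECASE
)


def inventory(config_lines):
    """Return (line_number, text) for each line mentioning a vulnerable algorithm."""
    return [
        (number, line.strip())
        for number, line in enumerate(config_lines, start=1)
        if QUANTUM_VULNERABLE.search(line)
    ]


# Step 2, crypto-agility: callers name a purpose; a policy table (hypothetical)
# maps it to an algorithm, so switching algorithms is a configuration change.
POLICY = {"integrity": "sha256"}
ALLOWED = {"sha256", "sha3_256"}   # algorithms still accepted during migration


def tag(key: bytes, data: bytes) -> str:
    """MAC data with the currently configured digest, prefixed by its name."""
    algorithm = POLICY["integrity"]
    return f"{algorithm}:{hmac.new(key, data, algorithm).hexdigest()}"


def verify(key: bytes, data: bytes, tagged: str) -> bool:
    """Check a tag using the algorithm it records, if that algorithm is allowed."""
    algorithm, _, mac = tagged.partition(":")
    if algorithm not in ALLOWED:
        return False
    expected = hmac.new(key, data, algorithm).hexdigest()
    return hmac.compare_digest(expected, mac)


config = [
    "ssl_ciphers ECDHE-RSA-AES128-GCM-SHA256;",
    "data_cipher AES-256-GCM",
    "signing_algorithm ECDSA-P256",
]
print(inventory(config))               # lines 1 and 3 are flagged

key = b"demo-key"
old_tag = tag(key, b"payload")         # issued under the sha256 policy
POLICY["integrity"] = "sha3_256"       # rotate algorithms without touching callers
new_tag = tag(key, b"payload")
print(verify(key, b"payload", old_tag), verify(key, b"payload", new_tag))
```

Verifying against an allow-list, rather than trusting whatever name appears in the tag, keeps an attacker from downgrading to a weaker algorithm. Once migration finishes, retiring an algorithm is a one-line change to that list.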

In Summary

Quantum computing is not only a looming threat; it is a countdown to a new cryptographic arms race. Generative AI has already reshaped the cyber threat landscape, and combined with quantum power, it becomes a force multiplier. This two-front challenge requires more than incremental adjustment; it requires a shift in the cybersecurity paradigm.

Panic will not help us. Preparation will.

Abbreviations

RSA – Rivest, Shamir, and Adleman

ECC – Elliptic-Curve Cryptography

AES – Advanced Encryption Standard

TLS – Transport Layer Security

HTTPS – Hypertext Transfer Protocol Secure

ECDSA – Elliptic Curve Digital Signature Algorithm

NSA – National Security Agency

NIST – National Institute of Standards and Technology

PQC – Post-Quantum Cryptography

References

- https://arxiv.org/abs/2104.07603

- https://www.researchgate.net/publication/382853518_CryptoGenSec_A_Hybrid_Generative_AI_Algorithm_for_Dynamic_Cryptographic_Cyber_Defence

- https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5185525

- https://www.classiq.io/insights/shors-algorithm-explained

- https://www.theguardian.com/world/interactive/2013/nov/01/snowden-nsa-files-surveillance-revelations-decoded

- https://arxiv.org/abs/2307.00691

- https://secureframe.com/blog/generative-ai-cybersecurity

- https://www.techtarget.com/searchsecurity/news/365531559/How-hackers-can-abuse-ChatGPT-to-create-malware

- https://arxiv.org/abs/1802.07228

- https://www.technologyreview.com/2019/05/30/65724/how-a-quantum-computer-could-break-2048-bit-rsa-encryption-in-8-hours/

- https://arxiv.org/pdf/1710.10377

- https://thequantuminsider.com/2025/05/24/google-researcher-lowers-quantum-bar-to-crack-rsa-encryption/

- https://csrc.nist.gov/projects/post-quantum-cryptography

***Google’s Gemini was used in this post to paraphrase some sentences and add more context.***

About the Author:

Omkar Bhalekar is a senior network engineer and technology enthusiast specializing in data center architecture, manufacturing infrastructure, and sustainable solutions, with extensive experience designing resilient industrial networks and building smart factories and AI data centers with scalable networks. He is also the author of the book Autonomous and Predictive Networks: The future of Networking in the Age of AI and co-author of Quantum Ops – Bridging Quantum Computing & IT Operations. Omkar writes to simplify complex technical topics for engineers, researchers, and industry leaders.