Intel

Analysis: Rakuten Mobile and Intel partnership to embed AI directly into vRAN

Today, Rakuten Mobile and Intel announced a partnership to embed Artificial Intelligence (AI) directly into the virtualized Radio Access Network (vRAN) stack. While vRAN currently represents a small percentage of the total RAN market (Dell’Oro Group recently forecast that vRAN will account for 5% to 10% of the total RAN market by 2026), this partnership could increase that percentage because it addresses key adoption hurdles: performance, power, and AI integration. Key areas of innovation include:

- Enhanced Wireless Spectral Efficiency: Optimizing spectrum utilization for superior network performance and capacity.

- Automated RAN Operations: Streamlining network management and reducing operational complexities through intelligent automation.

- Optimized Resource Allocation: Dynamically allocating network resources for maximum efficiency and subscriber experience.

- Increased Energy Efficiency: Significantly reducing power consumption in the RAN, contributing to sustainable network operations.

The partnership essentially aims to make vRAN superior in performance and total cost of ownership (TCO) compared to traditional, proprietary, purpose-built RAN hardware.

“We are incredibly excited to expand our collaboration with Intel to pioneer truly AI-native RAN architectures,” said Sharad Sriwastawa, co-CEO and CTO, Rakuten Mobile. “Together, we are validating transformative AI-driven innovations that will not only shape but define the future of mobile networks. This partnership showcases how intelligent RAN can be achieved through the seamless and efficient integration of AI workloads directly within existing vRAN software stacks, delivering unparalleled performance and efficiency.”

Rakuten Mobile and Intel are engaged in rigorous testing and validation of cutting-edge RAN AI use cases across Layer 1, Layer 2, and comprehensive RAN operation and network platform management. A core objective is the seamless integration of AI directly into the RAN stack, meticulously addressing integration challenges while upholding carrier-grade reliability and stringent latency requirements.

Utilizing Intel FlexRAN reference software, the Intel vRAN AI Development Kit, and a robust suite of AI tools and libraries, Rakuten Mobile is collaboratively training, optimizing, and deploying sophisticated AI models specifically tailored for demanding RAN workloads. This collaborative effort is designed to realize ultra-low, real-time AI latency on Intel Xeon 6 SoC, capitalizing on its built-in AI acceleration capabilities, including AVX512/VNNI and AMX.

“AI is transforming how networks are built and operated,” said Kevork Kechichian, Executive Vice President and General Manager of the Data Center Group, Intel Corporation. “Together with Rakuten, we are demonstrating how AI benefits can be achieved in vRAN. Intel Xeon processors power the majority of commercial vRAN deployments worldwide, and this transformation momentum continues to accelerate. Intel is providing AI-ready Xeon platforms that allow operators like Rakuten to design AI-ready infrastructure from the ground up, with built-in acceleration capabilities.”

Rakuten says it is “poised to unlock new levels of RAN performance, efficiency, and automation by embedding AI directly into the RAN software stack. This AI-native evolution represents the future of cloud-native, AI-powered RAN – inherently software-upgradable and built on open, general-purpose computing platforms. Additionally, the extended collaboration between Rakuten Mobile and Intel marks a significant step toward realizing the vision of autonomous, self-optimizing networks and powerfully reinforces both companies’ commitment to open, programmable, and intelligent RAN infrastructure worldwide.”

……………………………………………………………………………………………………………………………………………………………………..

- AI-Native Efficiency & Performance: The collaboration focuses on integrating AI to improve network performance and energy efficiency, which is a major pain point for operators. By embedding AI directly into the vRAN stack, they are enhancing wireless spectral efficiency, reducing power consumption, and automating RAN operations.

- Leveraging High-Performance Hardware: The initiative utilizes Intel® Xeon® 6 processors with built-in vRAN Boost. This eliminates the need for external, power-hungry accelerator cards, offering up to 2.4x more capacity and 70% better performance-per-watt.

- Validation of Large-Scale Commercial Viability: Rakuten Mobile operates the world’s first fully virtualized, cloud-native network. Its continued collaboration with Intel to make the vRAN AI-native provides a proven blueprint for other operators, reducing the perceived risk of adopting vRAN, particularly in brownfield (existing) networks.

- Acceleration of Open RAN Ecosystem: The collaboration supports the broader push towards Open RAN, which is expected to see a significant rise in market share, doubling between 2022 and 2026.

………………………………………………………………………………………………………………………………………………………………

- Market Share Shift: Omdia forecasts that vRAN’s share of the RAN baseband subsector will reach 20% by 2028. That’s a significant jump from its current low single-digit percentage.

- Explosive CAGR: The global vRAN market is projected to grow from approximately $16.6 billion in 2024 to nearly $80 billion by 2033, representing a 19.5% CAGR.

- Small Cell Dominance: By the end of 2026, it is estimated that 77% of all vRAN implementations will be on small cell architectures, a key area where Rakuten and Intel have demonstrated success.
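The growth figures in the list above can be sanity-checked with the standard compound annual growth rate formula. The sketch below is only an illustration: the function names `cagr` and `project` are hypothetical, and it assumes the quoted forecast compounds over the nine years from 2024 to 2033 (market reports sometimes use a different base year, which would shift the result).

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.19 means 19%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted above, in billions of US dollars.
START_2024 = 16.6   # "approximately $16.6 billion in 2024"
END_2033 = 80.0     # "nearly $80 billion by 2033"
QUOTED_CAGR = 0.195
YEARS = 2033 - 2024  # assumption: nine compounding periods

implied_rate = cagr(START_2024, END_2033, YEARS)
projected_2033 = project(START_2024, QUOTED_CAGR, YEARS)

print(f"CAGR implied by the endpoints: {implied_rate:.1%}")
print(f"$16.6B compounded at 19.5% for {YEARS} years: ${projected_2033:.1f}B")
```

Under these assumptions the endpoints imply a rate a little over 19%, and compounding $16.6 billion at the quoted 19.5% lands slightly above $80 billion, so the quoted numbers are mutually consistent to within rounding.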

References:

https://corp.mobile.rakuten.co.jp/english/news/press/2026/0210_01/

Virtual RAN gets a boost from Samsung demo using Intel’s Granite Rapids/Xeon Series 6 SoC

RAN silicon rethink – from purpose built products & ASICs to general purpose processors or GPUs for vRAN & AI RAN

vRAN market disappoints – just like OpenRAN and mobile 5G

LightCounting: Open RAN/vRAN market is pausing and regrouping

Dell’Oro: Private 5G ecosystem is evolving; vRAN gaining momentum; skepticism increasing

https://www.mordorintelligence.com/industry-reports/virtualized-ran-vran-market

https://www.grandviewresearch.com/industry-analysis/virtualized-radio-access-network-market-report

Virtual RAN gets a boost from Samsung demo using Intel’s Granite Rapids/Xeon Series 6 SoC

Samsung is the fifth-largest worldwide RAN equipment vendor, behind Huawei, Ericsson, Nokia and ZTE. This week, the South Korean conglomerate claimed to have reached a virtual RAN (vRAN) milestone with the completion of a commercial phone call using Granite Rapids – Intel’s Xeon 6700P-B SoC processor series. The call took place on the network of a large, undisclosed U.S. network operator, apparently Verizon. Samsung said, “this builds upon the company’s previous achievement in 2024, when it completed the industry-first end-to-end call in a lab environment with Intel Xeon 6 SoC.”

Samsung’s cloud-native vRAN with Intel’s latest Xeon SoC ran on a single commercial off-the-shelf (COTS) server from Hewlett Packard Enterprise with a cloud platform from Wind River. This milestone, coming only a few months after the first wave of Intel Xeon 6 SoC was made commercially available, presents an innovative pathway for single-server vRAN deployments for next-generation networks.

The commercial readiness of vRAN technology promises to give network operators the ability to run RAN and AI workloads on fewer, more powerful servers.

Samsung wrote: “As operators accelerate their transition to software-driven, flexible architectures while seeking more sustainable infrastructure, the ability to run RAN and AI workloads on fewer, more powerful servers becomes critical. On a single server of Samsung’s AI-powered vRAN with enhanced processors, operators can consolidate software-driven network elements such as mobile core, radio access, transport and security, which traditionally required multiple servers, significantly simplifying the management of complex site configuration.”


“This breakthrough represents a major leap forward in network virtualization and efficiency. It confirms the real-world readiness of this latest technology under live network conditions, demonstrating that single-server vRAN deployments can meet the stringent performance and reliability standards required by leading carriers,” said June Moon, Executive Vice President, Head of R&D, Networks Business at Samsung Electronics. “We are not only deploying more sustainable, cost-effective networks, but also laying the foundation to fully utilize AI capabilities more easily and prepare for 6G with our end-to-end software-driven network solutions.”

Samsung’s vRAN leverages the latest Intel Xeon 6 SoC with Intel Advanced Matrix Extensions (Intel AMX), Intel vRAN Boost and up to 72 cores, delivering significant improvements in AI processing, memory bandwidth and energy efficiency compared to the previous generation.

“With Intel Xeon 6 SoC, featuring higher core counts and built-in acceleration for AI and vRAN, operators get the compute foundation for AI native, future ready networks,” said Cristina Rodriguez, VP and GM, Network & Edge, Intel. “This collaborative achievement with Samsung, HPE and Wind River enables greater consolidation of RAN and AI workloads, lowering power and total cost while speeding innovation.”

Samsung has been leading the deployment of vRAN solutions with major network operators worldwide and has achieved many industry breakthroughs, including the industry’s first call on a commercial network and large-scale deployments utilizing Intel Xeon processors with Intel vRAN Boost. The company continues to push the boundaries of network virtualization, working closely with ecosystem partners like Intel to deliver solutions that help operators build networks that are more efficient and sustainable.

“This successful first call is an important milestone for the industry,” said Daryl Schoolar, Analyst and Director at Recon Analytics. “By demonstrating multiple network functions running on next-generation processing technology, Samsung is showing what future networks look like — more cloud-native, more scalable and significantly more efficient. This achievement moves the industry beyond theoretical performance gains and into practical, deployable innovation that operators around the world can leverage to modernize their networks, accelerate automation and better support AI-driven use cases.”

“With Samsung’s vRAN and Intel’s Xeon 6 SoC running on a single server, Samsung expects enhanced cost savings for operators,” said a Samsung spokesperson via email to Light Reading, when asked what cost impact Granite Rapids would have. “The ability to consolidate multiple network functions including RAN, core, transport and security onto a single, high-performance COTS server reduces hardware footprint, simplifies site design and lowers power consumption.”

Vodafone is one Samsung customer that now expects to benefit from the availability of Granite Rapids. In November, Paco Pignatelli, Vodafone’s head of open RAN, told Light Reading that the new Intel platform offers “much better capacity and efficiency” than its predecessors. That was several weeks after the telco had announced plans to deploy Samsung’s virtual RAN technology in Germany and other European markets, starting in 2026.

…………………………………………………………………………………………………………………………………….

vRAN Market Assessment:

Virtual RAN still accounts for a very small share of the entire market. In 2023, data from Omdia put its market share at just 3% of the total RAN market. If vRAN is instead considered as part of the baseband RAN subsector, its share was about 10% that year, implying that baseband represents about 30% of total expenditure on RAN products.
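The 30% figure follows from dividing one share by the other. A minimal sketch of that arithmetic is below; the function name `implied_segment_share` is hypothetical, and the dollar amounts assume the roughly $40 billion total RAN revenue for 2023 that is cited elsewhere on this page.

```python
def implied_segment_share(share_of_total: float, share_of_segment: float) -> float:
    """Size of a segment relative to the total market.

    If a product holds `share_of_total` of the whole market and
    `share_of_segment` of one segment, the segment itself must be
    share_of_total / share_of_segment of the whole market.
    """
    if not 0 < share_of_segment <= 1:
        raise ValueError("share_of_segment must be in (0, 1]")
    return share_of_total / share_of_segment


VRAN_SHARE_OF_TOTAL_RAN = 0.03   # Omdia, 2023
VRAN_SHARE_OF_BASEBAND = 0.10    # Omdia, 2023
TOTAL_RAN_REVENUE_BN = 40.0      # assumption: ~$40B total RAN revenue in 2023

baseband_share = implied_segment_share(VRAN_SHARE_OF_TOTAL_RAN, VRAN_SHARE_OF_BASEBAND)
baseband_revenue_bn = TOTAL_RAN_REVENUE_BN * baseband_share
vran_revenue_bn = TOTAL_RAN_REVENUE_BN * VRAN_SHARE_OF_TOTAL_RAN

print(f"Baseband share of total RAN spending: {baseband_share:.0%}")
print(f"Implied baseband revenue: ${baseband_revenue_bn:.1f}B")
print(f"Implied vRAN revenue: ${vran_revenue_bn:.1f}B")
```

These are back-of-the-envelope values derived from rounded percentages, not figures published by Omdia.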

Hardware still dominates the RAN equipment business, but there is a rapid shift toward Commercial Off-The-Shelf (COTS) servers, particularly those using Intel’s Xeon 6 processors.

Regional Dominance: North America and Asia-Pacific are expected to remain the largest markets in 2026, together accounting for over 70% of global vRAN revenue.

- Dell’Oro Group:

  - 2026 Stability: Predicts overall RAN revenues will remain “mostly stable” in 2026, but identifies AI-RAN, Cloud RAN, and Open RAN as favorable growth segments within that flat topline.

  - Market Share: Expects vRAN to account for 5% to 10% of the total RAN market by 2026.

  - Private Wireless: Forecasts that private wireless campus network RAN revenue will surpass USD 1 billion in 2026.

- Omdia:

  - Growth Surge: Anticipates a doubling of vRAN’s market share by 2028. Specifically, it expects Open vRAN to reach a 16% share of the total RAN market in 2026, up from 7% in 2022.

  - Automation Focus: Forecasts the Service Management and Orchestration (SMO) category to grow at a massive 99% CAGR through 2030 as operators align with O-RAN architectures.

- Research and Markets:

  - Estimates the global Open RAN market size will reach between USD 5.0 billion and USD 10.0 billion by 2026, driven by aggressive greenfield deployments.

…………………………………………………………………………………………………………………………

References:

https://www.lightreading.com/5g/intel-and-samsung-add-to-pressure-on-purpose-built-5g

vRAN market disappoints – just like OpenRAN and mobile 5G

RAN silicon rethink – from purpose built products & ASICs to general purpose processors or GPUs for vRAN & AI RAN

LightCounting: Open RAN/vRAN market is pausing and regrouping

Dell’Oro: Private 5G ecosystem is evolving; vRAN gaining momentum; skepticism increasing

Heavy Reading: How network operators will deploy Open RAN and cloud native vRAN

Intel to spin off its Networking & Edge Group into a stand-alone business

As originally reported by CRN, Intel revealed its plan to spin off its Network and Edge Group in a memo addressed to customers and said it will seek outside investment for the business unit. Intel will be an anchor investor in the stand-alone business. The memo, seen by CRN, was authored by Sachin Katti, who has led the Network and Edge Group, abbreviated as NEX, since early 2023. He was given the extra role of chief technology and AI officer by Intel CEO Lip-Bu Tan in April to lead the chipmaker’s AI strategy. An Intel spokesperson confirmed the contents of the memo to CRN.

“We plan to establish key elements of our Networking and Communications business as a stand-alone company and we have begun the process of identifying strategic investors,” the representative said in a statement. “Like Altera, we will remain an anchor investor enabling us to benefit from future upside as we position the business for future growth,” the spokesperson added.

The NEX spin-off plan was announced to Intel customers and employees the same day the semiconductor giant revealed more changes under Tan’s leadership, including a 15% workforce reduction and a more conservative approach to its foundry business. “We are laser-focused on strengthening our core product portfolio and our AI roadmap to better serve customers,” Tan said in a statement.

In Katti’s memo, he said Intel “internally announced” on Thursday its plan to “establish its NEX business as a stand-alone company.” This will result in a “new, independent entity focused exclusively on delivering leading silicon solutions for critical communications, enterprise networking and ethernet connectivity infrastructure,” he added.

Katti did not give a timeline for when Intel could spin off NEX, which has been mainly focused on networking and communications products after the company moved its edge computing business to the Client Computing Group last September. Intel also shifted its integrated photonics solutions to the Data Center Group that same month. Similar to other businesses Intel has spun off, the company plans to maintain a stake in the stand-alone NEX company as it seeks out other investors, according to Katti. “While Intel will remain an anchor investor in the new company, we have begun the process of identifying additional strategic and capital partners to support the growth and development of the new company,” he wrote.

Katti said the move is “rooted in our commitment to serving” Intel’s customers better and promised that there will be “no change in service or the support” they rely on. He added that it will also help NEX “expand into new segments more effectively. Backed by Intel, this new, independent company will be positioned to accelerate its customer-facing strategy and product road map by innovating faster and investing in new offerings.”

Katti said he expects the transition to be “seamless” for Intel’s customers. “What we expect to change is our ability to operate with greater focus, speed and flexibility—all to better meet your needs,” he wrote.

…………………………………………………………………………………………………………………………………………………..

Intel was rumored to be looking for a buyer for its Network and Edge Group in May. This business produced $5.8 billion in revenue in 2024.

This strategy seems similar to the company’s decision to spin off RealSense, its former stereoscopic imaging technology business, earlier this month. Intel decided to spin RealSense out during former CEO Pat Gelsinger’s tenure and the company struck out on its own with $50 million in venture funding.

…………………………………………………………………………………………………………………………………………………..

Shortly after Tan became Intel’s CEO in March, the leader made clear that he would seek to spin off businesses he considers not core to its strategy. Then in May, Reuters reported that the company was exploring a sale of the NEX business unit as part of that plan.

While Tan didn’t reference Intel’s decision to spin off NEX in the company’s Thursday earnings call, he discussed other actions it has taken to monetize “non-core assets,” including the sale of a portion of Intel’s stake in Mobileye earlier this month.

The company is also expected to complete its majority stake sale of the Altera programmable chip business to private equity firm Silver Lake by late September, according to Tan. Silver Lake will gain a 51% stake in the business while Intel will own the remaining 49%. “I will evaluate other opportunities as we continue to sharpen our focus around our core business and strategy,” Tan said on the earnings call.

………………………………………………………………………………………………………………………………………………………….

In 2019, Apple acquired the majority of Intel’s smartphone modem business for $1 billion. This deal included approximately 2,200 Intel employees, intellectual property, equipment, leases, and over 17,000 wireless technology patents. The acquisition allowed Apple to accelerate its development of 5G modem technology for its iPhones, reducing reliance on third-party suppliers like Qualcomm. Intel, in turn, refocused its 5G efforts on areas like network infrastructure, PCs, and IoT devices.

References:

https://www.crn.com/news/networking/2025/intel-reveals-plan-to-spin-off-networking-business-in-memo

https://techcrunch.com/2025/07/25/intel-is-spinning-off-its-network-and-edge-group/

https://www.lightreading.com/semiconductors/intel-sale-of-networks-sounds-like-an-ericsson-problem

CES 2025: Intel announces edge compute processors with AI inferencing capabilities

Massive layoffs and cost cutting will decimate Intel’s already tiny 5G network business

Nokia utilizes Intel technology to drive greater 5G SA core network energy savings

Nokia selects Intel’s Justin Hotard as new CEO to increase growth in IP networking and data center connections

Nokia selects Intel’s Justin Hotard as new CEO to increase growth in IP networking and data center connections

Nokia today announced that its President and Chief Executive Officer, Pekka Lundmark, will be replaced on April 1st by 50-year-old Justin Hotard, who currently leads Intel’s Data Center & AI Group. Hotard joins Nokia with more than 25 years’ experience with global technology companies, driving innovation, technology leadership and delivering revenue growth. Prior to Intel, he held several leadership roles at large technology companies, including Hewlett Packard Enterprise (more below) and NCR Corporation. He will be based at Nokia’s headquarters in Espoo, Finland.

“Leading Nokia has been a privilege. When I returned to Nokia in 2020, I called it a homecoming, and it really has felt like one. I am proud of the work our brilliant team has done in re-establishing our technology leadership and competitiveness, and positioning the company for growth in data centers, private wireless and industrial edge, and defense. This is the right time for me to move on. I have led listed companies for more than two decades and although I do not plan to stop working, I want to move on from executive roles to work in a different capacity, such as a board professional. Justin is a great choice for Nokia and I look forward to working with him on a smooth transition,” said Nokia’s President and CEO Pekka Lundmark.

“I am delighted to welcome Justin to Nokia. He has a strong track record of accelerating growth in technology companies along with vast expertise in AI and data center markets, which are critical areas for Nokia’s future growth. In his previous positions, and throughout the selection process, he has demonstrated the strategic insight, vision, leadership and value creation mindset required for a CEO of Nokia,” said Sari Baldauf, Chair of Nokia’s Board of Directors.

“I am honored by the opportunity to lead Nokia, a global leader in connectivity with a unique heritage in technology. Networks are the backbone that power society and businesses, and enable generational technology shifts like the one we are currently experiencing in AI. I am excited to get started and look forward to continuing Nokia’s transformation journey to maximize its potential for growth and value creation,” said Justin Hotard.

Rumors started to swirl in September, after a report in the Financial Times newspaper, that Nokia was seeking a replacement for Lundmark, who by then had been its CEO for about four years. Nokia said in a statement: “The Board fully supports President and CEO Pekka Lundmark and is not undergoing a process to replace him.”

–>How seriously should the FT and all other media now take the company’s public statements?

Lundmark had told Nokia’s board months earlier, in the spring of 2024, that he would consider stepping down once “the repositioning of the business was in a more advanced stage.” This author certainly does not think that “advanced stage” has been reached yet. “The current CEO has not got to grips with the growth problem. The top line has not increased since the Alcatel-Lucent takeover,” said a shareholder.

Nokia’s share price is now only 10% of its 2000 peak, when the company’s valuation reached $260 billion (that’s a 90% decline in price over almost 25 years; buy and hold?). However, the company has gained almost 40% in the last year after operational improvements and signs that construction of AI data centers could be a significant growth opportunity for Nokia’s network infrastructure business group, its second-biggest unit. In his leaving video, Lundmark drew attention to the sales growth rate of 9% for the final quarter of 2024 and the operating margin of 19.1%, Nokia’s best in a decade.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

“We’re at the start of a super cycle with AI,” said Hotard. “One that I see [as] very similar to the one we saw a couple of decades ago with the internet. In these major market transitions new winners are created and incumbents either reinvent themselves or fail… My focus will be to accelerate the transformation journey.”

During the Q&A on Nokia’s conference call, Hotard was defensive when asked about his plans for the company. He did say that networking comes second only to compute hardware in share of AI datacenter investment, and that he looks forward to the completion of the $2.1 billion Infinera acquisition. “The hundreds of billions of dollars being invested in data centers today from a technology standpoint of course start with compute accelerators and GPUs [graphics processing units], but the second thing is the network and the connectivity and further it is not just the connectivity inside the data center but the connectivity across data centers,” said Hotard on today’s call. That implies Nokia will place increased emphasis on optical networking within and between data centers.

Indeed, IP networking and data center connectivity are becoming a fast-growing part of Nokia’s network infrastructure unit, which provides the connectivity inside those data centers and recently landed deals with Microsoft and UK-headquartered Nscale. The hoped-for return is an additional €1 billion ($1.03 billion) in revenues by 2028. The Infinera acquisition, announced in June 2024 and expected to be finalized in the next few weeks, is also partly a data center play, bolstering Nokia’s portfolio of optical networking assets.

On Nokia’s Q3 2024 earnings call in October, Lundmark said, “Across Nokia, we are investing to create new growth opportunities outside of our traditional communications service provider market. We see a significant opportunity to expand our presence in the data center market and are investing to broaden our product portfolio in IP Networks to better address this. There will be others as well, but that will be the number one. This is obviously in the very core of our strategy.” At that time, Lundmark said Nokia’s telco total addressable market (TAM) is €84 billion, while its data center total addressable market is currently at €20 billion. “I mean, telco TAM will never be a significant growth market,” he added to no one’s surprise.

On today’s call, Lundmark drew attention to Nokia’s revival and strength when asked to compare the company with Ericsson. “Of course, we respect them as a competitor in radio networks. We are slightly behind them in terms of market share, but we have had great deal momentum recently – you’ll have seen some of the deal announcements – and, very importantly, the feedback we are receiving from our customers is that we are now fully competitive in terms of our portfolio.”

Justin Hotard, slated to be Nokia’s new boss on April 1, 2025

During the past year Hotard has headed up Intel’s Datacenter & AI Group. Prior to that he was at HPE for nine years heading up the High Performance Computing, AI & Labs group.


What will a Hotard-led Nokia’s future commitment be to a shrinking market for mobile networks? Revenues generated by the global mobile market are estimated to have fallen about $5 billion last year, to $35 billion, after a $5 billion drop in 2023, according to Omdia (an Informa-owned market research firm), as network operators cut spending. But an exit would rid Nokia of a business still responsible for 40% of total sales just as smaller rivals appear to be struggling.

Nokia seems to value Hotard’s U.S. background and experience in the data center and AI market. “If you look at the market and look at the world, the U.S. is an important market for us and so that is one element we consider – experience from that technology business there,” said Sari Baldauf, Nokia’s chair, when asked on today’s call why an external candidate was preferred to an internal appointment.

……………………………………………………………………………………………………………………………………………………………………………………………………………..

Telecoms.com’s Scott Bicheno offered his opinion: “Hotard reckons Nokia’s telco customer base gives it an advantage when it comes to AI datacenters, which are increasingly built near to sources of power, often in remote locations. So, while this does feel like a promising strategic pivot for Nokia, those telco customers might be worried about mobile being deprioritized as a consequence. The appointment of someone from a company with an appalling track record in that sector is unlikely to ease that concern.”

……………………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://www.telecoms.com/ai/nokia-signals-a-move-away-from-mobile-and-europe-with-new-ceo

https://www.ft.com/content/5f086aee-91b9-421a-9f32-c33e67b1af7f

Initiatives and Analysis: Nokia focuses on data centers as its top growth market

Nokia to acquire Infinera for $2.3 billion, boosting optical network division size by 75%

vRAN market disappoints – just like OpenRAN and mobile 5G

Most wireless network operators are not convinced virtual RAN (vRAN) [1.] is worth the effort to deploy. Omdia, an analyst company owned by Informa, put vRAN’s share of the total market for RAN baseband products at just 10% in 2023. It is growing slowly, with 20% market share forecast by 2028, but it is far from being the default RAN architectural choice.

Among the highly touted benefits of virtualization is the ability for RAN developers to exploit the much bigger economies of scale found in the mainstream IT market. “General-purpose technology will eventually have so much investment in it that it will outpace custom silicon,” said Sachin Katti, the general manager of Intel’s network and edge group, during a previous Light Reading interview.

Note 1. The key feature of vRAN is the virtualization of RAN functions, allowing operators to perform baseband operations on standard servers instead of dedicated hardware. The Asia Pacific region is currently leading in vRAN adoption due to rapid 5G deployment in countries like China, South Korea, and Japan. Samsung has established a strong presence as a supplier of vRAN equipment and software.

The whole market for RAN products generated revenues of just $40 billion in 2023. Intel alone made $54.2 billion in sales that same year. Yet Huawei, Ericsson and Nokia, the big players in RAN base station technology, have continued to miniaturize and advance their custom chips. Nokia boasts 5-nanometer chips in its latest products and last year lured Derek Urbaniak, a highly regarded semiconductor expert, from Ericsson in a sign it wants to play an even bigger role in custom chip development.

Ericsson collaborates closely with Intel on virtual RAN, and yet it has repeatedly insisted its application-specific integrated circuits (ASICs) perform better than Intel’s CPUs in 5G. One year ago, Michael Begley, Ericsson’s head of RAN compute, told Light Reading that “purpose-built hardware will continue to be the most energy-efficient and compact hardware for radio site deployments going forward.”

Intel previously suffered delays when moving to smaller designs, and there is gloominess about its prospects, as noted in several IEEE Techblog posts. Intel suffered a $17 billion loss for the quarter ending in September, after reporting a small $300 million profit a year before. Sales fell 6% year-over-year, to $13.3 billion, over this same period.

Unfortunately, for telcos eyeing virtualization, Intel is all they really have. Its dominance of the small market for virtual RAN has not been weakened in the last couple of years, leaving operators with no viable alternatives. This was made apparent in a recent blog post by Ericsson, which listed Intel as the only commercial-grade chip solution for virtual RAN. AMD was at the “active engagement” stage, said Ericsson last November. Processors based on the blueprints of ARM, a UK-based chip designer that licenses its designs, were not even mentioned.

The same economies-of-scale case for virtual RAN is now being made about Nvidia and its graphics processing units (GPUs), which Nvidia boss Jensen Huang seems eager to pitch as a kind of general-purpose AI successor to more humdrum CPUs. If the RAN market is too small, and its developers must ride in the slipstream of a much bigger market, Nvidia and its burgeoning ecosystem may seem a safer bet than Intel. And the GPU maker already has a RAN pitch, including a lineup of Arm-based CPUs to host some of the RAN software.

Semiconductor-related economies of scale should not be the sole benefit of a virtual RAN. “With a lot of the work that’s been done around orchestration, you can deploy new software to hundreds of sites in a couple of hours in a way that was not feasible before,” said Alok Shah of Samsung Electronics. Architecturally, virtualization should allow an operator to host its RAN on the same cloud-computing infrastructure used for other telco and IT workloads. With a purpose-built RAN, an operator would be using multiple infrastructure platforms.

In telecom markets without much fiber or fronthaul infrastructure there is unlikely to be much centralization of RAN compute. This necessitates the deployment of servers at mast sites, where it is hard to see them being used for anything but the RAN. Even if a company wanted to host other applications at a mobile site, the processing power of Sapphire Rapids, the latest Intel generation, is fully consumed by the functions of the virtual distributed unit (vDU), according to Shah. “I would say the vDU function is kind of swallowing up the whole server,” he said.

Indeed, for all the talk of total cost of ownership (TCO) savings, some deployments of Sapphire Rapids have even had to feature two servers at a site to support a full 5G service, according to Paul Miller, the chief technology officer of Wind River, which provides the cloud-computing platform for Samsung’s virtual RAN in Verizon’s network. Miller expects that to change with Granite Rapids, the forthcoming successor technology to Sapphire Rapids. “It’s going to be a bit of a sea change for the network from a TCO perspective – that you may be able to get things that took two servers previously, like low-band and mid-band 5G, onto a single server,” he said.

Samsung’s Shah is hopeful Granite Rapids will even free up compute capacity for other types of applications. “We’ll have to see how that plays out, but the opportunity is there, I think, in the future, as we get to that next generation of compute.” In the absence of many alternative processor platforms, especially for telcos rejecting the inline virtual RAN approach, Intel will be under pressure to make sure the journey for Granite Rapids is less turbulent than it sounds.

Another challenge is mobile backhaul, which is expected to limit the growth of the vRAN industry. Backhaul connectivity is widely used in wireless networks to transfer a signal from a remote cell site to the core network (typically the edge of the Internet). The two main methods of mobile backhaul implementation are fiber-based and wireless point-to-point backhaul.

The pace of data delivery suffers in small cell networks with poor mobile network connectivity. Data management is becoming more and more important as small cells are employed for network connectivity. Increased data traffic across small cells, which also raises questions about data security, is mostly to blame for poor data management. vRAN solutions promise improved network resiliency and utilization, faster network routing, and better-optimized network architecture to meet the diverse 5G requirements of enterprise customers.

References:

https://www.lightreading.com/5g/virtual-ran-still-seems-to-be-not-worth-the-effort

https://www.ericsson.com/en/blog/north-america/2024/open-ran-progress-report

https://www.sdxcentral.com/5g/ran/definitions/vran/

LightCounting: Open RAN/vRAN market is pausing and regrouping

Dell’Oro: Private 5G ecosystem is evolving; vRAN gaining momentum; skepticism increasing

Huawei CTO Says No to Open RAN and Virtualized RAN

Heavy Reading: How network operators will deploy Open RAN and cloud native vRAN

CES 2025: Intel announces edge compute processors with AI inferencing capabilities

At CES 2025 today, Intel unveiled the new Intel® Core™ Ultra (Series 2) processors, designed to revolutionize mobile computing for businesses, creators and enthusiast gamers. Intel said “the new processors feature cutting-edge AI enhancements, increased efficiency and performance improvements.”

“Intel Core Ultra processors are setting new benchmarks for mobile AI and graphics, once again demonstrating the superior performance and efficiency of the x86 architecture as we shape the future of personal computing,” said Michelle Johnston Holthaus, interim co-CEO of Intel and CEO of Intel Products. “The strength of our AI PC product innovation, combined with the breadth and scale of our hardware and software ecosystem across all segments of the market, is empowering users with a better experience in the traditional ways we use PCs for productivity, creation and communication, while opening up completely new capabilities with over 400 AI features. And Intel is only going to continue bolstering its AI PC product portfolio in 2025 and beyond as we sample our lead Intel 18A product to customers now ahead of volume production in the second half of 2025.”

Intel also announced new edge computing processors, designed to provide scalability and superior performance across diverse use cases. Intel Core Ultra processors were said to deliver remarkable power efficiency, making them ideal for AI workloads at the edge, with performance gains that surpass competing products in critical metrics like media processing and AI analytics. Those edge processors are targeted at compute servers running in hospitals, retail stores, factory floors and other “edge” locations that sit between big data centers and end-user devices. Such locations are becoming increasingly important to telecom network operators hoping to sell AI capabilities, private wireless networks, security offerings and other services to those enterprise locations.

Intel edge products launching today at CES include:

- Intel® Core™ Ultra 200S/H/U series processors (code-named Arrow Lake).

- Intel® Core™ 200S/H series processors (code-named Bartlett Lake S and Raptor Lake H Refresh).

- Intel® Core™ 100U series processors (code-named Raptor Lake U Refresh).

- Intel® Core™ 3 processor and Intel® Processor (code-named Twin Lake).

“Intel has been powering the edge for decades,” said Michael Masci, VP of product management in Intel’s edge computing group, during a media presentation last week. According to Masci, AI is beginning to expand the edge opportunity through inferencing [1.]. “Companies want more local compute. AI inference at the edge is the next major hotbed for AI innovation and implementation,” he added.

Note 1. Inferencing in AI refers to the process in which a trained AI model makes predictions or decisions based on new data, rather than on the data used to train it. It’s essentially AI’s ability to apply learned knowledge to fresh inputs in real time. Edge computing plays a critical role in inferencing because it brings the computation closer to users. That lowers latency (much faster AI responses) and can also reduce bandwidth costs and improve privacy and security.
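As a rough illustration of the distinction drawn in Note 1, the sketch below fits a tiny linear model once (training) and then applies the frozen parameters to an input it has never seen (inference). The function names `train` and `infer` and the sample data are hypothetical, chosen only for illustration; real edge inference would run a far larger pre-trained model on local hardware.

```python
def train(xs, ys):
    """Training phase: fit y = w*x + b by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def infer(model, x):
    """Inference phase: apply the already-learned parameters to a new input."""
    w, b = model
    return w * x + b


# Hypothetical training data (done once, typically in a data center).
model = train([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])

# Inference on a fresh input (the step that would run at the edge).
prediction = infer(model, 10.0)
print(prediction)
```

Only `infer` needs to run close to the user, which is why the latency and bandwidth benefits described in the note apply to inference rather than to training.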

Editor’s Note: Intel’s edge compute business – the one pursuing AI inferencing – is in its Client Computing Group (CCG) business unit. Intel’s chips for telecom operators reside inside its NEX business unit.

Intel’s Masci specifically called out Nvidia’s GPU chips, claiming Intel’s new silicon lineup supports up to 5.8x faster performance and better usage per watt. Indeed, Intel claims its “Core™ Ultra 7 processor uses about one-third fewer TOPS (Trillions of Operations Per Second) than Nvidia’s Jetson AGX Orin, but beats its competitor with media performance that is up to 5.6 times faster, video analytics performance that is up to 3.4x faster and performance per watt per dollar up to 8.2x better.”
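For readers unfamiliar with the compound metric, “performance per watt per dollar” can be read as throughput divided by both power draw and price. The sketch below shows that arithmetic under this assumed definition. The helper name and all input figures are hypothetical and are not Intel or Nvidia data; Intel’s own benchmark methodology may differ.

```python
def perf_per_watt_per_dollar(throughput, watts, price_usd):
    """Throughput normalized by power draw and purchase price (assumed definition)."""
    if watts <= 0 or price_usd <= 0:
        raise ValueError("watts and price_usd must be positive")
    return throughput / (watts * price_usd)


# Hypothetical figures for two unnamed chips (frames/s, watts, US dollars).
chip_a = perf_per_watt_per_dollar(throughput=120.0, watts=30.0, price_usd=500.0)
chip_b = perf_per_watt_per_dollar(throughput=100.0, watts=50.0, price_usd=1000.0)

# Ratio of the two scores: how many times better chip A is on this metric.
advantage = chip_a / chip_b
print(f"chip A is about {advantage:.1f}x better on this metric")
```

Because the metric divides by two quantities, a chip with only modestly higher throughput can still show a large multiple if it is both cheaper and less power-hungry.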

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

However, Nvidia’s AI chips have been used for inference for quite some time. Company officials last month confirmed that 40% of Nvidia’s revenues come from AI inference, rather than AI training efforts in big data centers. Colette Kress, Nvidia Executive Vice President and Chief Financial Officer, said, “Our architecture allows an end-to-end scaling approach for them to do whatever they need to in the world of accelerated computing and AI. And we’re a very strong candidate to help them, not only with that infrastructure, but also with the software.”

“Inference is super hard. And the reason why inference is super hard is because you need the accuracy to be high on the one hand. You need the throughput to be high so that the cost could be as low as possible, but you also need the latency to be low,” explained Nvidia CEO Jensen Huang during his company’s recent quarterly conference call.

“Our hopes and dreams is that someday, the world does a ton of inference. And that’s when AI has really succeeded, right? It’s when every single company is doing inference inside their companies for the marketing department and forecasting department and supply chain group and their legal department and engineering, and coding, of course. And so we hope that every company is doing inference 24/7.”

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

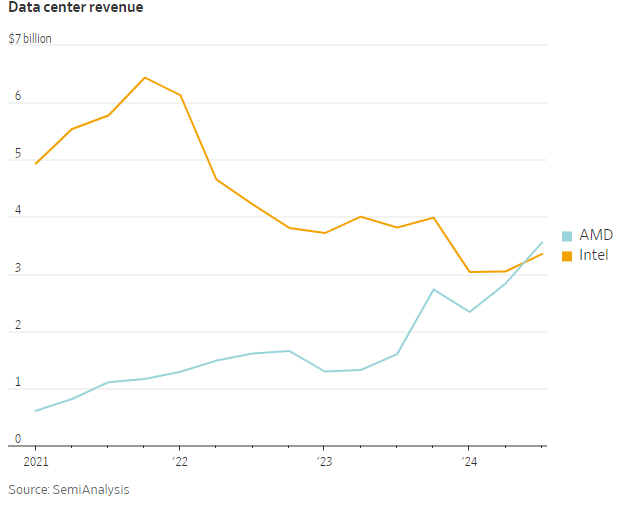

Sadly for its many fans (including this author), Intel continues to struggle in both data center processors and AI/GPU chips. The Wall Street Journal recently reported that “Intel’s perennial also-ran, AMD, actually eclipsed Intel’s revenue for chips that go into data centers. This is a stunning reversal: In 2022, Intel’s data-center revenue was three times that of AMD.”

Even worse for Intel, more and more of the chips that go into data centers are GPUs, and Intel has a minuscule market share of these high-end chips. GPUs are used for training and delivering AI. The WSJ notes that many of the companies spending the most on building out new data centers are switching to chips that have nothing to do with Intel’s proprietary architecture, known as x86, and are instead using a combination of a competing architecture from ARM and their own custom chip designs. For example, more than half of the CPUs Amazon has installed in its data centers over the past two years were its own custom chips based on ARM’s architecture, Dave Brown, Amazon vice president of compute and networking services, said recently.

This displacement of Intel is being repeated all across the big providers and users of cloud computing services. Microsoft and Google have also built their own custom, ARM-based CPUs for their respective clouds. In every case, companies are moving in this direction because of the kind of customization, speed and efficiency that custom silicon supports.

References:

https://www.intel.com/content/www/us/en/newsroom/news/2025-ces-client-computing-news.html#gs.j0qbu4

https://www.wsj.com/tech/intel-microchip-competitors-challenges-562a42e3

Massive layoffs and cost cutting will decimate Intel’s already tiny 5G network business

WSJ: China’s Telecom Carriers to Phase Out Foreign Chips; Intel & AMD will lose out

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

FT: Nvidia invested $1bn in AI start-ups in 2024

AI winner Nvidia faces competition with new super chip delayed

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions