Analysis: Ethernet gains on InfiniBand in data center connectivity market; White Box/ODM vendors top choice for AI hyperscalers

Disclaimer: The author used Perplexity.ai for the research in this article.

……………………………………………………………………………………………………………..

Introduction:

Ethernet is now the leader in “scale-out” AI networking. In 2023, InfiniBand held an ~80% share of the AI back-end network switch market. A little over two years later, Ethernet has overtaken it in data center switch and server port counts. Demand for Ethernet-based interconnect technologies continues to strengthen, reflecting the market’s broader shift toward scalable, open, and cost-efficient data center fabrics. According to Dell’Oro Group research published in July 2025, Ethernet was on track to overtake InfiniBand and establish itself as the primary fabric technology for large-scale data centers. The report projects cumulative data center switch revenue approaching $80 billion over the next five years, driven largely by AI infrastructure investments. Other analysts say Ethernet now accounts for a majority of AI back-end switch ports, likely well above 50% and trending toward 70–80% as fabrics based on Ultra Ethernet and RoCE (RDMA over Converged Ethernet, where RDMA is Remote Direct Memory Access) scale.

With Nvidia’s expanding influence across the data center ecosystem (via its Mellanox acquisition), Ethernet-based switching platforms are expected to maintain strong growth momentum through 2026 and the next investment cycle.

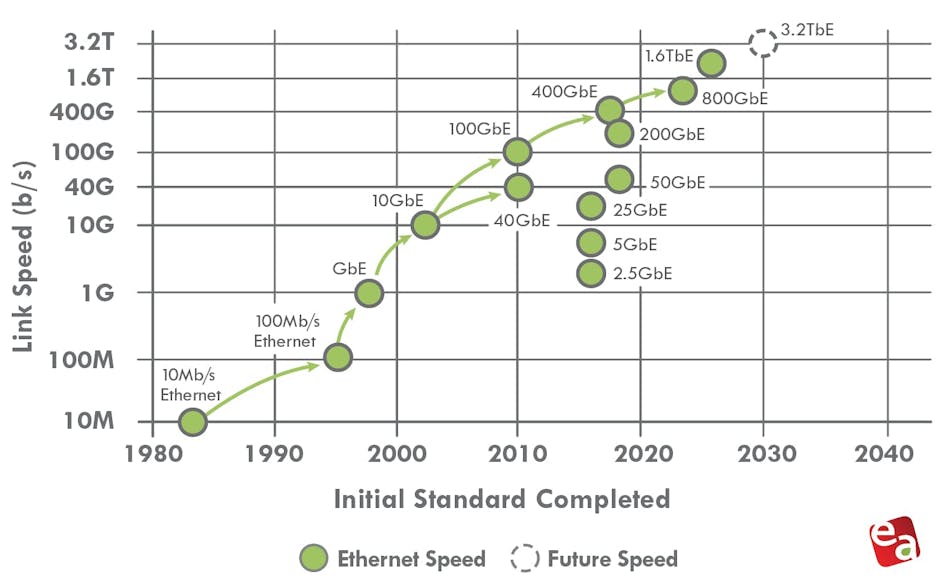

The past, present, and future of Ethernet speeds depicted in the Ethernet Alliance’s 2026 Ethernet Roadmap:

- IEEE 802.3 expects to complete IEEE 802.3dj, which supports 200 Gb/s, 400 Gb/s, 800 Gb/s, and 1.6 Tb/s, by late 2026.

- A 400-Gb/s/lane Signaling Call For Interest (CFI) is already scheduled for March.

- PAM-6 is an emerging, high-order modulation format for short-reach, high-speed optical fiber links (e.g., 100G/400G+ data center interconnects). Its 6 distinct amplitude levels can carry up to log2(6) ≈ 2.585 bits per symbol (practical mappings are usually described as carrying 2.5), for roughly 25% higher bitrate than PAM-4 at the same symbol rate.
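As a quick check on the PAM-6 figures, the sketch below computes the theoretical bits per symbol of an N-level PAM format as log2(N) and compares PAM-6 with PAM-4 at the same symbol rate. The 2.5 bits/symbol practical mapping (5 bits carried on 2 symbols) is an assumption based on common descriptions of PAM-6, not on the roadmap itself, and the function names are illustrative.

```python
import math


def bits_per_symbol(levels: int) -> float:
    """Theoretical information carried by one symbol of an N-level PAM signal."""
    return math.log2(levels)


def gain_over(bits_a: float, bits_b: float) -> float:
    """Relative bitrate gain of format A over format B at the same symbol rate."""
    return bits_a / bits_b - 1.0


pam4 = bits_per_symbol(4)        # 2 bits per symbol
pam6_ideal = bits_per_symbol(6)  # log2(6), the theoretical ceiling
pam6_practical = 5 / 2           # ASSUMPTION: 5 bits mapped onto 2 symbols

print(f"PAM-4: {pam4:.3f} bits/symbol")
print(f"PAM-6 ideal: {pam6_ideal:.3f} bits/symbol, gain {gain_over(pam6_ideal, pam4):.1%}")
print(f"PAM-6 at 2.5 bits/symbol: gain {gain_over(pam6_practical, pam4):.1%}")
```

Under these assumptions, the 25% figure corresponds to the 2.5 bits/symbol mapping, while the 2.585 figure is the theoretical ceiling, which would imply a somewhat larger gain.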

………………………………………………………………………………………………………………………………………………………………………………………………………..

Dominant Ethernet speeds and PHY/PMD trends:

In 2026, the Ethernet portfolio spans multiple tiers of performance, with 100G, 200G, 400G, and 800G serving as the dominant server‑ and fabric‑facing speeds, while 1.6T begins to appear in early AI‑scale spine and inter‑cluster links.

- Server‑to‑leaf topology:

  - 100G and 200G remain prevalent for general‑purpose and mid‑tier AI inference workloads, often implemented over 100GBASE‑CR4 / 100GBASE‑FR / 100GBASE‑LR and their 200G counterparts (e.g., 200GBASE‑CR4 / 200GBASE‑FR4 / 200GBASE‑LR4), the latter using four 50 Gb/s PAM4 lanes.

  - Many AI‑optimized racks are migrating to 400G server interfaces, typically using 400GBASE‑CR8 / 400GBASE‑FR8 / 400GBASE‑LR8 with eight 50 Gb/s PAM4 lanes, often via QSFP‑DD or OSFP form factors.

- Leaf‑to‑spine and spine‑to‑spine topology:

  - 400G continues as the workhorse for many brownfield and cost‑sensitive fabrics, while 800G is increasingly targeted for new AI and high‑growth pods, typically deployed as 800GBASE‑DR8 / 800GBASE‑FR8 / 800GBASE‑LR8 over eight 100 Gb/s PAM4 lanes.

  - IEEE 802.3dj is progressing toward completion in 2026, standardizing 200 Gb/s per lane operation a
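The lane arithmetic behind these interface speeds can be sketched as follows: aggregate speed is simply lane count times per-lane rate. The entries are a small illustrative subset built from the lane counts and rates quoted in this section; the 8 x 200 Gb/s configuration for 1.6T is an assumption based on the 200 Gb/s per lane target of IEEE 802.3dj, and the class and variable names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneConfig:
    """One Ethernet interface described by its lane count and per-lane rate."""
    name: str
    lanes: int
    gbps_per_lane: int

    @property
    def aggregate_gbps(self) -> int:
        # Aggregate interface speed is lanes multiplied by the per-lane rate.
        return self.lanes * self.gbps_per_lane


CONFIGS = [
    LaneConfig("200G (4 x 50G PAM4)", 4, 50),
    LaneConfig("400G (8 x 50G PAM4)", 8, 50),
    LaneConfig("800G (8 x 100G PAM4)", 8, 100),
    LaneConfig("1.6T (8 x 200G, assumed)", 8, 200),  # ASSUMPTION: lane count
]

for cfg in CONFIGS:
    print(f"{cfg.name}: {cfg.aggregate_gbps} Gb/s")
```

Seen this way, each generational step either doubles the per-lane rate or doubles the lane count, which is why the per-lane signaling work in IEEE 802.3 tends to set the pace for the whole speed roadmap.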

For cloud‑resident (hyperscale) data centers, the Ethernet‑switch leadership is concentrated among a handful of vendors that supply high‑speed, high‑density leaf‑spine fabrics and AI‑optimized fabrics.

Core Ethernet‑switch leaders:

- NVIDIA (Spectrum‑X / Spectrum‑4): NVIDIA has become a dominant force in cloud‑resident Ethernet, largely by bundling its Spectrum‑4 and Spectrum‑X Ethernet switches with H100/H200/Blackwell‑class GPU clusters. Spectrum‑X is specifically tuned for AI workloads, integrating with BlueField DPUs and offering congestion‑aware transport and in‑network collectives, which has helped NVIDIA surpass both Cisco and Arista in data‑center Ethernet revenue in 2025.

- Arista Networks: Arista remains a leading supplier of cloud‑native, high‑speed Ethernet to hyperscalers, with strong positions in 100G–800G leaf‑spine fabrics and its EOS‑based software stack. Arista has overtaken Cisco in high‑speed data‑center‑switch market share and continues to grow via AI‑cluster‑oriented features such as cluster‑load‑balancing and observability suites.

- Cisco Systems: Cisco maintains broad presence in cloud‑scale environments via Nexus 9000 / 7000 platforms and Silicon One‑based designs, particularly where customers want deep integration with routing, security, and multi‑cloud tooling. While its share in pure high‑speed data‑center switching has eroded versus Arista and NVIDIA, Cisco remains a major supplier to many large cloud providers and hybrid‑cloud operators.

Other notable players:

- HPE (including Aruba and Juniper post‑acquisition): HPE and its Aruba‑branded switches are widely deployed in cloud‑adjacent and hybrid‑cloud environments, while the HPE‑Juniper combination (via the 2025 acquisition) strengthens its cloud‑native switching and security‑fabric portfolio.

- Huawei: Huawei supplies CloudEngine Ethernet switches into large‑scale cloud and telecom‑owned data centers, especially in regions where its end‑to‑end ecosystem (switching, optics, and management) is preferred.

- White‑box / ODM‑based vendors: Most hyperscalers also source Ethernet switches from ODMs (e.g., Quanta, Celestica, Inspur) running open‑source or custom NOSes (SONiC, Cumulus‑style stacks), which can collectively represent a large share of cloud‑resident ports even if they are not branded like Cisco or Arista. White‑box / ODM‑based Ethernet switches hold a meaningful and growing share of the data‑center Ethernet market, though they still trail branded vendors in overall revenue. Estimates vary by source and definition:

  - ODM / white‑box share of the global data‑center Ethernet switch market is commonly estimated in the low‑ to mid‑20% range by revenue in 2024–2025, with some market trackers putting it around 20–25% of the data‑center Ethernet segment. Within hyperscale cloud‑provider data centers specifically, the share of white‑box / ODM‑sourced Ethernet switches is higher, often cited in the 30–40% range by port volume or deployment count, because large cloud operators heavily disaggregate hardware and run open‑source NOSes (e.g., SONiC‑style stacks).

  - ODM‑direct sales into data centers grew over 150% year‑on‑year in 3Q25, according to IDC, signaling that white‑box share is expanding faster than the overall data‑center Ethernet switch market.

  - Separate white‑box‑switch market studies project the global data‑center white‑box Ethernet switch market to reach roughly $3.2–3.5 billion in 2025, growing at a ~12–13% CAGR through 2030, which implies an increasing percentage of the broader Ethernet‑switch pie over time.
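To make the white-box growth projection concrete, here is a minimal sketch of the compound-growth arithmetic. It assumes 2025 is the base year and that the quoted CAGR compounds annually for five years through 2030; the input figures are the ranges quoted above, and the function name is illustrative.

```python
def project(value_billion: float, cagr: float, years: int) -> float:
    """Compound a starting market size forward at a constant annual growth rate."""
    return value_billion * (1.0 + cagr) ** years


YEARS = 2030 - 2025  # ASSUMPTION: 2025 base year, five annual compounding steps

low = project(3.2, 0.12, YEARS)   # low end of both quoted ranges
high = project(3.5, 0.13, YEARS)  # high end of both quoted ranges

print(f"Implied 2030 white-box switch market: ${low:.1f}B to ${high:.1f}B")
```

Whether that translates into a rising share of the overall market depends on how fast the rest of the data center Ethernet switch market grows over the same period, which this sketch does not model.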

Ethernet vendor positioning table:

| Vendor | Key Ethernet positioning in cloud‑resident DCs | Typical speed range (cloud‑scale) |
|---|---|---|
| NVIDIA | AI‑optimized Spectrum‑X fabrics tightly coupled to GPU clusters | 200G/400G/800G, moving toward 1.6T |
| Arista | Cloud‑native, high‑density leaf‑spine with EOS | 100G–800G, strong 400G/800G share |
| Cisco | Broad Nexus/Silicon One portfolio, multi‑cloud integration | 100G–400G, some 800G |
| HPE / Juniper | Cloud‑native switching and security fabrics | 100G–400G, growing 800G |
| Huawei | Cost‑effective high‑throughput CloudEngine switches | 100G–400G, some 800G |
| White‑box ODMs | Disaggregated switches running SONiC‑style NOSes | 100G–400G, increasingly 800G |

Supercomputers and modern HPC clusters increasingly use high‑speed, low‑latency Ethernet as the primary interconnect, often replacing or coexisting with InfiniBand. The “type” of Ethernet used is defined by three layers: speed/lane rate, PHY/PMD/optics, and protocol enhancements tuned for HPC and AI. Slingshot, the proprietary Ethernet-based interconnect from HPE, accounted for 48.1% of aggregate performance on the Top500 list in June 2025 and 46.3% in November 2025. On both lists, it interconnected six of the top 10 systems, including the top three: El Capitan, Frontier, and Aurora.

HPC Speed and lane‑rate tiers:

- Mid‑tier HPC / legacy supercomputers:

  - 100G Ethernet (e.g., 100GBASE‑CR4/FR4/LR4) remains common for mid‑tier clusters and some scientific workloads, especially where cost and power are constrained.

- AI‑scale and next‑gen HPC:

  - 400G and 800G Ethernet (400GBASE‑DR4/FR4/LR4, 800GBASE‑DR8/FR8/LR8) are now the workhorses for GPU‑based supercomputers and large‑scale HPC fabrics.

  - 1.6T Ethernet (IEEE 802.3dj, 200 Gb/s per lane) is entering early deployment for spine‑to‑spine and inter‑cluster links in the largest AI‑scale “super‑factories.”

In summary, NVIDIA and Arista are the most prominent Ethernet‑switch leaders specifically for AI‑driven, cloud‑resident data centers, with Cisco, HPE/Juniper, Huawei, and white‑box ODMs rounding out the ecosystem depending on region, workload, and procurement model. In hyperscale cloud‑provider data centers, ODM‑sourced switches are often cited at a 30–40% share by port volume or deployment count.

Analysis: Cisco, HPE/Juniper, and Nvidia network equipment for AI data centers

Both telecom and enterprise networks are being reshaped by the bandwidth and latency demands of AI. Network operators that fail to modernize their architectures risk falling behind. Why? AI workloads are network killers: they demand massive east-west traffic, ultra-low latency, and predictable throughput.

- Real-time observability is becoming non-negotiable, as enterprises need to detect and fix issues before they impact AI model training or inference.

- Self-driving networks are moving from concept to reality, with AI not just monitoring but actively remediating problems.

- The competitive race is now about who can integrate AI into networking most seamlessly — and HPE/Juniper’s Mist AI, Cisco’s assurance stack, and Nvidia’s AI fabrics are three different but converging approaches.

Cisco, HPE/Juniper, and Nvidia are designing AI-optimized networking equipment, with a focus on real-time observability, lower latency and increased data center performance for AI workloads. Here’s a capsule summary:

Cisco: AI-Ready Infrastructure:

- Cisco is embedding AI telemetry and analytics into its Silicon One chips, Nexus 9000 switches, and Catalyst campus gear.

- The focus is on real-time observability via its ThousandEyes platform and AI-driven assurance in DNA Center, aiming to optimize both enterprise and AI/ML workloads.

- Cisco is also pushing AI-native data center fabrics to handle GPU-heavy clusters for training and inference.

- Cisco claims “exceptional momentum” and leadership in AI: >$800M in AI infrastructure orders taken from web-scale customers in Q4, bringing the FY25 total to over $2B.

- Cisco Nexus switches are now integrated with NVIDIA’s Spectrum-X architecture to deliver high-speed networking for AI clusters.

HPE + Juniper: AI-Native Networking Push:

- Following its $13.4B acquisition of Juniper Networks, HPE has merged Juniper’s Mist AI platform with its own Aruba portfolio to create AI-native, “self-driving” networks.

- Key upgrades include:

  - Agentic AI troubleshooting that uses generative AI workflows to pinpoint and fix issues across wired, wireless, WAN, and data center domains.

  - Marvis AI Assistant with enhanced conversational capabilities: IT teams can now ask open-ended questions like “Why is the Orlando site slow?” and get contextual, actionable answers.

  - Large Experience Model (LEM) with Marvis Minis, digital twins that simulate user experiences to predict and prevent performance issues before they occur.

  - Apstra integration for data center automation, enabling autonomous service provisioning and cross-domain observability.

Nvidia: AI Networking at Compute Scale:

- Nvidia’s Spectrum-X Ethernet platform and Quantum-2 InfiniBand (both stemming from its Mellanox acquisition) are designed for AI supercomputing fabrics, delivering ultra-low latency and congestion control for GPU clusters.

- In partnership with HPE, Nvidia is integrating NVIDIA AI Enterprise and Blackwell architecture GPUs into HPE Private Cloud AI, enabling enterprises to deploy AI workloads with optimized networking and compute together.

- Nvidia’s BlueField DPUs offload networking, storage, and security tasks from CPUs, freeing resources for AI processing.

………………………………………………………………………………………………………………………………………………………..

Here’s a side-by-side comparison of how Cisco, HPE/Juniper, and Nvidia are approaching AI‑optimized enterprise networking — so you can see where they align and where they differentiate:

| Feature / Focus Area | Cisco | HPE / Juniper | Nvidia |
|---|---|---|---|
| Core AI Networking Vision | AI‑ready infrastructure with embedded analytics and assurance for enterprise + AI workloads | AI‑native, “self‑driving” networks across campus, WAN, and data center | High‑performance fabrics purpose‑built for AI supercomputing |
| Key Platforms | Silicon One chips, Nexus 9000 switches, Catalyst campus gear, ThousandEyes, DNA Center | Mist AI platform, Marvis AI Assistant, Marvis Minis, Apstra automation | Spectrum‑X Ethernet, Quantum‑2 InfiniBand, BlueField DPUs |
| AI Integration | AI‑driven assurance, predictive analytics, real‑time telemetry | Generative AI for troubleshooting, conversational AI for IT ops, digital twin simulations | AI‑optimized networking stack tightly coupled with GPU compute |
| Observability | End‑to‑end visibility via ThousandEyes + DNA Center | Cross‑domain observability (wired, wireless, WAN, DC) with proactive issue detection | Telemetry and congestion control for GPU clusters |
| Automation | Policy‑driven automation in campus and data center fabrics | Autonomous provisioning, AI‑driven remediation, intent‑based networking | Offloading networking/storage/security tasks to DPUs for automation |
| Target Workloads | Enterprise IT, hybrid cloud, AI/ML inference & training | Enterprise IT, edge, hybrid cloud, AI/ML workloads | AI training & inference at hyperscale, HPC, large‑scale data centers |
| Differentiator | Strong enterprise install base + integrated assurance stack | Deep AI‑native operations with user experience simulation | Ultra‑low latency, high‑throughput fabrics for GPU‑dense environments |

Key Takeaways:

- Cisco is strongest in enterprise observability and broad infrastructure integration.

- HPE/Juniper is leaning into AI‑native operations with a heavy focus on automation and user experience simulation.

- Nvidia is laser‑focused on AI supercomputing performance, building the networking layer to match its GPU dominance.

- Cisco leverages its market leadership, customer base and strategic partnerships to integrate AI with existing enterprise networks.

- HPE/Juniper challenges rivals with an AI-native, experience-first network management platform.

- Nvidia aims to dominate the full-stack AI infrastructure, including networking.

Telco and IT vendors pursue AI integrated cloud native solutions, while Nokia sells point products

The move to AI and cloud native is accelerating amongst network equipment and IT vendors which have announced highly integrated smart cloud solutions designed to migrate their telco customers into a new and profitable cloud future. The Cloud Native Computing Foundation (CNCF), as the name suggests, is a vendor-neutral consortium dedicated to making cloud native ubiquitous. The group defines cloud native as a collection of “technologies [that] empower organizations to build and run scalable applications in modern, dynamic environments such as public, private and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure and declarative APIs exemplify this approach.”

CNCF writes that the cloud native approach “enable[s] loosely coupled systems that are resilient, manageable and observable. Combined with robust automation, they allow engineers to make high-impact changes frequently and predictably with minimal toil.”

In particular, Ericsson, HPE/Juniper, Cisco, Huawei, ZTE, IBM, and Dell have all announced end-to-end telco solutions that provide a platform for new services and applications by integrating AI, automation, orchestration and APIs over cloud-native infrastructure. Let’s look at each of those capabilities:

- AI (Artificial Intelligence): Leveraging AI capabilities allows telcos to automate processes, optimize network performance, and enhance customer experiences. By analyzing vast amounts of data, AI-driven insights enable better decision-making and predictive maintenance.

- Automation: Automation streamlines operations, reduces manual intervention, and accelerates service delivery. Whether it’s provisioning new network resources, managing security protocols, or handling routine tasks, automation plays a pivotal role in modern telco infrastructure.

- Orchestration: Orchestration refers to coordinating and managing various network functions and services. It ensures seamless interactions between different components, such as virtualized network functions (VNFs) and physical infrastructure. By orchestrating these elements, telcos achieve agility and flexibility.

- APIs (Application Programming Interfaces): APIs facilitate communication between different software components. In the telco context, APIs enable interoperability, allowing third-party applications to interact with telco services. This openness encourages innovation and the development of new applications.

- Cloud-Native Infrastructure: Moving away from traditional monolithic architectures, cloud-native infrastructure embraces microservices, containerization, and scalability. Telcos are adopting cloud-native principles to build resilient, efficient, and adaptable networks.
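To illustrate how declarative APIs, automation, and orchestration fit together, here is a minimal sketch of a reconciliation step: the operator declares a desired state, and the automation works out the actions needed to get there. This is a generic illustration rather than any vendor's implementation; the function name, the action strings, and the network function names (`amf`, `smf`, `upf`, `legacy-vnf`) are hypothetical.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, int], observed: Dict[str, int]) -> List[str]:
    """Return the actions needed to move observed instance counts to the desired ones."""
    actions: List[str] = []
    for name, want in sorted(desired.items()):
        have = observed.get(name, 0)  # a missing function counts as zero instances
        if have < want:
            actions.append(f"scale-up {name} {have}->{want}")
        elif have > want:
            actions.append(f"scale-down {name} {have}->{want}")
    # Anything running that is no longer declared gets removed.
    for name in sorted(set(observed) - set(desired)):
        actions.append(f"remove {name}")
    return actions


# Hypothetical declared state for a small cloud-native core, and what is actually running.
desired = {"amf": 3, "smf": 2, "upf": 4}
observed = {"amf": 3, "smf": 1, "legacy-vnf": 1}

for action in reconcile(desired, observed):
    print(action)
```

The design point is that the operator states what should exist instead of scripting how to get there, which is what lets an orchestrator run the same comparison repeatedly and correct drift without manual intervention.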

While each company has its unique approach, the overarching goal is to empower telcos to deliver cutting-edge services, enhance network performance, and stay competitive in an ever-evolving industry. These advancements pave the way for exciting possibilities in the telecommunications landscape. When fully integrated, these technologies will enable the creation of smart cloud networks that can run themselves without human involvement and do so less expensively — but also more efficiently, responsively and securely than anything that exists today.

Our esteemed UK colleague Stephen M Saunders, MBE (Member of the Order of the British Empire; more below), notes that Nokia is not embracing smart cloud telco solutions but is instead focusing on individual products. Last October, the company announced strategic and operational changes to its business model and divided the company into four business units. At that time, Nokia’s President and CEO Pekka Lundmark said:

“We continue to believe in the mid to long term attractiveness of our markets. Cloud Computing and AI revolutions will not materialize without significant investments in networks that have vastly improved capabilities. However, while the timing of the market recovery is uncertain, we are not standing still but taking decisive action on three levels: strategic, operational and cost. First, we are accelerating our strategy execution by giving business groups more operational autonomy. Second, we are streamlining our operating model by embedding sales teams into the business groups and third, we are resetting our cost-base to protect profitability. I believe these actions will make us stronger and deliver significant value for our shareholders.”

Steve says Nokia’s new divide-and-conquer strategy is being reinforced at its sales meetings, according to an attendee at one such gathering this year, with sales reps being urged to laser-focus on selling point products.

“The telco capex situation at the moment means Nokia — and others — have no choice but to examine every aspect of their business to work out how to adjust for a future CSP market that is itself going through dramatic change,” said Jeremiah Caron, global head of research and analysis at market research firm GlobalData Technology.

Most telcos are increasingly adopting cloud-native technologies to meet the demands of 5G SA core networks and to better automate their services. However, some telcos are hesitant to fully embrace cloud native due to concerns about complexity, cost, and reliability. Other challenges of cloud native include changing the software development life cycle, privacy and security, guaranteeing end-to-end latency, and cloud vendor lock-in due to a lack of standards (every cloud vendor has its own proprietary APIs and network access configurations).

References:

https://www.silverliningsinfo.com/multi-cloud/report-smart-cloud-and-coming-paradigm-shift

https://www.fiercewireless.com/5g/op-ed-whither-nokia

Building and Operating a Cloud Native 5G SA Core Network

Omdia and Ericsson on telco transitioning to cloud native network functions (CNFs) and 5G SA core networks

https://www.ericsson.com/en/ran/intelligent-ran-automation/intelligent-automation-platform

https://www.huaweicloud.com/intl/en-us/solution/telecom/cloud-native-development-platform.html

https://sdnfv.zte.com.cn/en/solutions/VNF/5G-core-network/cloud-native

https://www.ibm.com/products/cloud-pak-for-network-automation

https://www.dell.com/en-us/dt/industry/telecom/index.htm#tab0=0

Steve Saunders (a.k.a. Silverlinings’ Sky Captain) is a British-born communications analyst, investor, and digital media entrepreneur. In 2018 he was awarded an MBE in the Queen’s Birthday Honours List for services to the telecommunications industry and business.