Edge Computing

Verizon CEO: 5G will require fiber optic expansion and mobile edge computing, and will continue to use mmWave spectrum

Verizon 5G Overview:

Verizon’s 5G network strategy is centered on three deliverables, with fiber-optic backhaul playing a major role in all of them:

- 5G mobile for businesses and consumers,

- 5G home broadband (see Note 1 below), delivering home internet over the air, and

- Mobile edge computing, which is essentially miniature data centers distributed throughout the network so they’re closer to the 5G endpoints.

The company’s CEO, Hans Vestberg, said Verizon will launch 5G mobile service in a total of 30 cities this year. He also plans to restart Verizon’s fixed wireless 5G Home service [1] later this year. 5G Home is currently available in four U.S. markets.

Note 1. There is no standard for 5G fixed wireless, and none is even being worked on. It is not an IMT-2020 use case within the ITU.

………………………………………………………………………………………………………………………………………………………………………..

Fiber and Mobile Edge Computing:

The U.S.’s #1 wireless carrier by subscribers will continue installing fiber at a rate of 1,400 miles per month for the next two to three years to support its 5G network builds. Verizon will begin to provide mobile edge computing (also known as multi-access edge computing, or MEC) during the upcoming quarter, Vestberg said at the Goldman Sachs Communacopia investor conference on Thursday, September 19th. Verizon’s fiber deployments are critical to supporting a mix of services, he said.

As part of its Fiber One project, Verizon signed a three-year, $1.1 billion fiber and hardware purchase agreement with Corning two years ago to build a next-generation fiber platform supporting 4G LTE, 5G, gigabit backhaul for 5G networks, and fiber-to-the-premises deployments for residential and business customers. Also in 2017, Verizon announced a $300 million fiber deal with Prysmian Group to provide additional fiber for its wireline and wireless services.

“One part of the whole intelligent edge network was that . . . all the way from the data center to the access point you have one unique network with a lot of redundancy and, in between, a lot of fiber to the access point and then you decide if it’s 3G, 5G, 4G or fiber to the home or fiber to the curb or fiber to the enterprise,” Vestberg said of edge computing, which has become a priority for many wireless and wireline network operators. “In that, the fiber deployment for us was extremely important.”

………………………………………………………………………………………………………………………………………………………….

mmWave for 5G:

Verizon will continue to deploy millimeter wave (mmWave) spectrum for its 5G network for the foreseeable future, Vestberg told the investor conference audience. High-frequency mmWave delivers very high download speeds, but its range is very limited, so many more small cells are required.

“Maybe you have 50 to 70 megabits per second on a 4G network today, when you get 1 gig [on 5G] it’s a totally different experience and what you can do with it,” Vestberg said. “What we saw in the 4G era was enormous innovation coming with that [greater] coverage and that speed [over 3G]. It’s going to be the same with 5G for sure,” he added.

“Now we have 2 gigs [gigabits per second] on the phones,” Vestberg said. The range, however, can vary from 2,000 feet down to 500 feet, and the network cannot deliver streaming video, or in fact any kind of service, indoors. For now, Verizon is the only U.S. carrier dedicated solely to the high-band (28 GHz) approach to 5G. AT&T and T-Mobile plan to launch low-band and mid-band 5G networks next year, along with limited mmWave deployments. So far, Sprint has launched mid-band 5G.
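Because a cell's coverage area grows with the square of its radius, the range figures above translate into a steep rise in site count. A rough sketch, assuming idealized hexagonal cells with no overlap and no terrain, foliage or building loss (simplifications chosen for illustration, not Verizon's planning method), compares the two quoted ranges over one square mile:

```python
import math

SQ_FT_PER_SQ_MI = 5280 ** 2

def hex_cell_area_sq_ft(radius_ft: float) -> float:
    """Area of a regular hexagonal cell whose circumradius equals the cell range."""
    return 3 * math.sqrt(3) / 2 * radius_ft ** 2

def cells_needed(coverage_sq_mi: float, radius_ft: float) -> int:
    """Idealized count of small cells to tile an area (no overlap, no obstructions)."""
    return math.ceil(coverage_sq_mi * SQ_FT_PER_SQ_MI / hex_cell_area_sq_ft(radius_ft))

# One hypothetical square mile of coverage at the two quoted ranges.
for radius in (2000, 500):
    print(f"{radius} ft range: {cells_needed(1.0, radius)} cells per square mile")
```

In this idealized model, cutting the range from 2,000 to 500 feet raises the count from 3 to 43 cells per square mile: a 4x shorter range means roughly 16x as many sites before rounding.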

“We can launch nationwide with millimeter wave,” the Verizon CEO insisted. “Any spectrum will have 5G in the future,” Vestberg noted. Verizon will also offer dynamic spectrum sharing (DSS) in the future. DSS allows an operator to share the same spectrum dynamically and simultaneously between 4G and 5G. It will not be used for mmWave, however, since that spectrum is not shared with any 4G network.
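DSS divides one carrier between LTE and NR from one scheduling interval to the next. The toy sketch below only illustrates that idea by splitting a shared pool of resource blocks (RBs) in proportion to instantaneous 4G and 5G demand; the function name, the 100-RB carrier and the proportional rule are assumptions, and the actual 3GPP mechanism (which must also work around LTE reference signals) is considerably more involved.

```python
def split_resource_blocks(total_rbs: int, lte_demand: int, nr_demand: int) -> tuple[int, int]:
    """Toy DSS split: divide one carrier's resource blocks between LTE and NR
    for a single scheduling interval, in proportion to each side's demand."""
    if lte_demand + nr_demand <= total_rbs:
        # Enough capacity for both: grant exactly what each asks for.
        return lte_demand, nr_demand
    # Congested: share proportionally; NR receives the rounding remainder.
    lte_share = total_rbs * lte_demand // (lte_demand + nr_demand)
    return lte_share, total_rbs - lte_share

# Demand shifting from 4G to 5G over successive intervals on a hypothetical 100-RB carrier.
for lte, nr in [(90, 10), (60, 60), (20, 120)]:
    print(split_resource_blocks(100, lte, nr))
```

The point of the illustration is that no spectrum is permanently carved out for either generation: as 5G demand grows, the same carrier shifts toward NR without re-farming.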

Vestberg said Verizon now has all the spectrum it needs to build a nationwide mmWave network, and that adding more antennas in a given area or making software adjustments are also options for increasing capacity on existing spectrum bands.

Vestberg insisted that the mmWave-based 5G Home service will be “self-install.” This would be more economical than the “white glove” (professional) installation model that 5G Home started with in October 2018.

……………………………………………………………………………………………………………………………………………………………………………………

Verizon’s mobile network:

A growing share of Verizon’s mobile subscribers, about half today, are on unlimited data plans. “This is a way for us to continue to see that our customers have a great journey from metered plan to Unlimited (data) plans and then they can move up…to 5G,” Vestberg said.

“We think that we are best equipped to leverage the best network and continue to partner with [media companies] rather than us managing it. Others might have better qualities for doing that but we don’t,” Vestberg said.

………………………………………………………………………………………………………………………………………………………………………………….

References:

Verizon to speak at Goldman Sachs Communacopia Conference September 19

https://www.verizon.com/about/investors/goldman-sachs-28th-annual-communacopia-conference

https://event.webcasts.com/starthere.jsp?ei=1260712&tp_key=eae790b458

https://www.barrons.com/articles/verizon-ceo-hans-vestberg-stock-5g-wireless-competition-51568906382

https://www.lightreading.com/mobile/5g/verizons-vestberg-sticks-with-mmwave-for-5g-/d/d-id/754248

https://www.telecompetitor.com/verizon-ceo-ongoing-fiber-investments-paying-dividends-including-mec/

CenturyLink CTO on Network Virtualization; Major Investment in Edge Compute Services

Andrew Dugan, senior vice president and chief technology officer, CenturyLink, Inc., presented his company’s views on network virtualization and related topics at the Cowen and Company 5th Annual Communications Infrastructure Summit in Boulder, CO, on Aug. 13th.

Dugan said he doesn’t know what AT&T means when the mega carrier says it’s virtualizing 75% of its core network by the end of 2020. “I’d like to figure out what AT&T means by 75% virtualization,” said Dugan. “I don’t get it. The concept of virtualizing the core router or an optical platform, that’s a lot of cost of your network to provide services. We’re not working on virtualizing that stuff.”

Dugan said CenturyLink is focused on virtualizing systems that enable its customers to turn up and turn down services on demand, and it’s also focused on virtualization at the edge of its network. He said the company likes the benefits of putting a white box device on the customer premises and “letting a customer turn up a firewall or an SD-WAN appliance or a WAN accelerator whenever they want.”

Earlier this week, CenturyLink announced the rollout of its edge compute strategy, beginning with an investment of several hundred million dollars to build out and support edge compute services. The effort, which includes creating more than 100 initial edge compute locations across the U.S. and providing a range of hybrid cloud solutions and managed services, enables customers to advance their next-gen digital initiatives with technology that integrates high-performance, low-latency networking with leading cloud service provider platforms in customized configurations.

“Customers are increasingly coming to us for help with applications where latency, bandwidth and geography are critical considerations,” said Paul Savill, senior vice president, product management, CenturyLink. “This investment creates the platform for CenturyLink to enable enterprises, hyperscalers, wireless carriers, and system integrators with the technology elements to drive years of innovation where workloads get placed closer to customers’ digital interactions.”

This expansion allows businesses and government agencies to leverage a highly diverse, global fiber network, with edge facilities designed to serve their local sites within 5 milliseconds of latency. With this infrastructure, companies will be able to link office locations to market edge compute aggregation points, public cloud and data centers over a redundant network that can be consumed dynamically.
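The 5 ms figure bounds how far an edge facility can sit from the sites it serves. A back-of-the-envelope sketch, assuming light travels in fiber at about c/1.468 (a typical group index for standard single-mode fiber) and ignoring switching, queuing and processing delay (so real distances will be shorter), converts a latency budget into a maximum fiber path length. The announcement does not say whether 5 ms is one-way or round-trip, so both readings are computed:

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
FIBER_GROUP_INDEX = 1.468  # assumed typical value for standard single-mode fiber

def max_fiber_km(latency_ms: float, round_trip: bool = True) -> float:
    """Upper bound on fiber path length for a latency budget, counting only
    propagation delay (no switching, queuing, or processing time)."""
    km_per_ms = SPEED_OF_LIGHT_KM_S / FIBER_GROUP_INDEX / 1000
    one_way_ms = latency_ms / 2 if round_trip else latency_ms
    return one_way_ms * km_per_ms

print(f"5 ms one-way:    {max_fiber_km(5, round_trip=False):.0f} km of fiber")
print(f"5 ms round trip: {max_fiber_km(5):.0f} km of fiber")
```

Light covers roughly 200 km of fiber per millisecond, so a 5 ms budget allows about 1,000 km of fiber one-way, or about 500 km if it is a round-trip figure, before any equipment delay is subtracted.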

“Digital transformation is gaining momentum as enterprises across all verticals look to technology to improve operational efficiency and enhance the customer experience,” said Melanie Posey, Research Vice President and General Manager at 451 Research. “As business processes become increasingly distributed, data-intensive, and transaction-based, the IT systems they depend on must be equally distributed to provide the necessary compute, storage and network resources to far-flung business value chains.”

Dugan said the edge compute platform plays into the company’s virtualization efforts, giving customers the ability to turn up and turn down Ethernet services, increase capacity, change VLANs, and configure their services on demand.

“That, to me, is where NFV and SDN comes in. We haven’t put a number on the percent of the network. We’re more focused on that customer enablement,” he said.

“When you build out an NFV platform, you’ve got the cost of the white box, you have the cost of the management or virtualization software that runs within the white box, and you have the cost of the virtual functions themselves. If you’re running one or two applications on premise, it’s not cheaper. The real value from NFV comes in the flexibility that it provides you to be able to put a box out there and be able to turn up and turn down services. It’s not a capex reduction…It’s a reduction in operating costs because you’re not having to roll trucks and put boxes out,” Dugan added.
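Dugan's argument is that uCPE/NFV trades a higher upfront bill for avoided truck rolls. The sketch below models that trade-off over a series of service changes at one customer site. Every dollar figure is a hypothetical placeholder, not a CenturyLink number, and the cost model (one white box plus virtualization software, versus a new appliance and a truck roll per change) is a deliberate simplification that lumps capex and opex together.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class NfvCosts:
    white_box: float          # one-time uCPE hardware
    virtualization_sw: float  # one-time management/virtualization software
    per_vnf: float            # cost to turn up each virtual function (license, config)

@dataclass
class ApplianceCosts:
    per_appliance: float  # dedicated physical box for each new service
    truck_roll: float     # field visit to install that box

def nfv_total(c: NfvCosts, changes: int) -> float:
    """Total cost after `changes` service turn-ups on one white box."""
    return c.white_box + c.virtualization_sw + changes * c.per_vnf

def appliance_total(c: ApplianceCosts, changes: int) -> float:
    """Total cost after `changes` service turn-ups, each needing a box and a truck roll."""
    return changes * (c.per_appliance + c.truck_roll)

def break_even(nfv: NfvCosts, appl: ApplianceCosts, max_changes: int = 100) -> Optional[int]:
    """Smallest number of service changes at which NFV costs no more than appliances."""
    for n in range(1, max_changes + 1):
        if nfv_total(nfv, n) <= appliance_total(appl, n):
            return n
    return None

# Placeholder costs in USD, for illustration only.
nfv = NfvCosts(white_box=3000, virtualization_sw=1500, per_vnf=800)
appl = ApplianceCosts(per_appliance=1200, truck_roll=600)
print(break_even(nfv, appl))
```

With these placeholders, NFV is more expensive for one or two services, consistent with Dugan's remark, and only breaks even at the fifth service change, once the avoided truck rolls have added up.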

CenturyLink says its “thousands of secure technical facilities combined with its network of 450,000 global route miles of fiber, expertise in high-performance cloud networking, and extensive cloud management expertise make this investment in the rapidly emerging edge compute market a natural evolution for the company.”

Key Facts (source: CenturyLink):

- CenturyLink today connects to over 2,200 public and private data centers and over 150,000 on-net, fiber-fed enterprise buildings.

- CenturyLink’s robust fiber network is one of the most deeply peered and well-connected in the world, with over 450,000 route-miles of coverage.

- CenturyLink is broadening access to its services by expanding network colocation services in many key markets, enabling customers and partners to run distributed IT workloads close to the edge of the network.

……………………………………………………………………………………………………………..


GSA Silicon Summit: Focus on Edge Computing, AI/ML and Vehicle to Everything (V2X) Communications

Introduction:

Many “big picture” technology trends and future requirements were detailed at GSA’s Silicon Summit, held June 18, 2019 in Santa Clara, CA. The conference was a “high level” executive briefing for the entire semiconductor ecosystem, including software, middleware and hardware. Insights on trends, key issues, opportunities and technology challenges (especially related to IoT security) were described and debated in panel sessions. Partnerships and collaboration were deemed necessary, especially for start-ups and small companies, to advance the technology, products and services to be offered in this new age of AI, ML/DL, cloud, IoT, autonomous vehicles, (fake) 5G, etc. Companies involved in the development of next-generation Mobility and Edge Intelligence system architectures and solutions discussed the opportunities, advancements and challenges in those key areas.

With the rapid proliferation of smart edge computing devices and applications, the volume of data produced is growing exponentially. Connected and “intelligent” devices are predicted to grow to 200 billion by 2020, generating enormous amounts of data every day. The business potential created by this data comes with huge expectations. Edge devices, edge intelligence, high-bandwidth connectivity, high-performance computing, machine learning and other technologies are essential to enabling opportunities in markets such as Mobility and Industrial IoT.

This article will focus on Edge Computing, AI moving closer to the endpoint device (at the network edge or actually embedded in the end point device/thing), and vehicle to vehicle/everything communications.

While there were many presentations and panels on security, that is beyond the scope of the IEEE ComSoc Techblog. However, we share Intel’s opinion, expressed during a lunch panel session, that standards for Over The Air (OTA) security software/firmware updates are necessary for almost all smart/intelligent devices that are part of the IoT.

Architectural Implications of Edge Computing, Yogesh Bhatt, VP of Products (ML, DL and Cognitive Tech), Ericsson Silicon Valley:

Several emerging application (data flow) patterns are moving intelligence from the cloud to local/metro sites, then on premises, and ultimately to endpoint devices. These applications include cloud-native apps like content delivery; AI-enabled apps like sensing, thinking and acting; and immersive apps like media processing/augmentation/distribution.

AI-enabled industrial apps are increasing. They were defined as the ability to collect and deliver the right data/video/images, at the right velocity and in the right quantities, to a wide set of well-orchestrated ML models and provide insights at all levels of the operation. Connectivity and compute are being packaged together and offered “as a service.” One example given was 4K video over (pre-standard) “5G” wireless access at the 2018 U.S. Open, intended as a case study of whether 5G could replace miles of fiber for broadcasting live, high-definition sports events.


……………………………………………………………………………………………………………………………………………………….

Required architecture for emerging app patterns: application cloud; management and monetization; network slices; mobile/fixed cloud infrastructure; distributed cloud; and transport. The flow of emerging apps requires computing capability to be distributed according to the application pattern and flow. That in turn mandates cross-domain orchestration and automation of services.

Key take-aways:

- Emerging Application patterns will require significant compute capabilities close to the data sources and sinks (end points)

- Current device-to-cloud architecture needs to expand to encompass hosting points that provide such processing capabilities

- The processing capabilities at these edge locations will look nothing like those of centralized cloud data centers (DCs)

……………………………………………………………………………………………………………………………………………………….

Heterogeneous Integration for the Edge, Yin Chang, Sr. VP, Sales & Marketing, ASE Group:

ASE sees the “Empowered Edge” as a key 2019 strategic trend. Edge computing drivers include: latency/determinism, cost of bandwidth, better privacy and security, and higher reliability/availability (connections go down, limited autonomy).

- At the edge (where that is was not defined; see my comment below), we might see the following: data collection/processing, imaging devices, image processing, biometric sensors, microphones, sensors with embedded MCUs, and environmental sensors.

- At the core (assumed to be somewhere in the cloud/Internet): Compute/Intelligent processing, AI & Machine Learning, Networks/Server Processors, High Bandwidth Memory (HBM), Neuro-engine (future), Quantum computing (future).

Compute capabilities are moving to the edge and endpoints:

- Edge Infrastructure and IoT/Endpoint Systems are growing in compute power per system.

- As the number of IoT/endpoint systems outgrows other categories, the bulk of TOTAL compute will reside at the endpoint.

Challenges at the edge will require a cost-effective integration solution that deals with:

- Cloud connectivity – latency and bandwidth limitations

- Mixed device functionality – sense, compute, connect, power

- Multiple communication protocols

- Form factor constraints

- Battery life

- Security

- Cost

- High density

ASE advocates Heterogeneous Integration at the Edge by material, component type, circuit type (IP), node and bonding/interconnect method. The company has partnered with Cadence to realize System in Package (SiP) intelligent design with “advanced functional integration.” That partnership addresses the design/verification challenges of complex layout of advanced packages, including ultra-complex SiP, Fan-Out and 2.5D packages.

One such SiP design for wireless communications is antenna integration:

- Antenna on/in Package for SiP module integration

- Selective EMI Shielding for non-limited module level FCC certification

- Selective EMI Shielding – partial metal coating process by sputter for FCC EMI certification

- Small Size Antenna Integration – Chip antenna, Printed circuit antenna (under development)

…………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Democratizing AI at the Endpoint, Brian Faith, CEO of QuickLogic:

QuickLogic was described as “a platform company that enables our customers to quickly and easily create intelligent ultra-low power endpoints to build a smarter, more connected world.” The company was founded in 1989, went public in 1999, and now has a worldwide presence. Brian said QuickLogic is focused on AI for growth markets, including:

▪ Hearable/Wearable

▪ Consumer & Industrial IoT

▪ Smartphone/Tablet

▪ Consumer Electronics

AI and edge computing are coming together such that data analytics is moving from the cloud to the edge and, eventually, to the IoT endpoint. However, where computing should be located involves trade-offs that depend on the application type. Some considerations include:

▪ Application latency & power consumption (battery life) requirements

▪ Data security can be a factor

▪ Local insights are trivial and non-actionable

▪ Smart sensors => rich data => actionable if real-time

▪ Network sends insightful data (less bandwidth needed)

▪ Cloud focuses on aggregate data insights and actions
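The considerations above amount to a placement decision for each workload. The sketch below encodes one possible rule set; the tier latencies, the example workloads and the rules themselves are illustrative assumptions, not QuickLogic's method.

```python
from dataclasses import dataclass

# Assumed round-trip latency to reach each remote tier, in ms (illustrative values only).
EDGE_RTT_MS = 10.0
CLOUD_RTT_MS = 80.0

@dataclass
class Workload:
    name: str
    latency_budget_ms: float  # how quickly a result must be acted on
    raw_data_kbps: float      # sensor data rate if streamed off the device
    uplink_kbps: float        # available uplink bandwidth
    needs_fleet_view: bool    # needs insight aggregated across many devices

def place(w: Workload) -> str:
    """Choose endpoint, edge, or cloud for a workload using simple rules."""
    # Act locally if no remote tier is fast enough or the raw data won't fit the link.
    if w.latency_budget_ms < EDGE_RTT_MS or w.raw_data_kbps > w.uplink_kbps:
        return "endpoint"
    # Aggregate analytics belong in the cloud when the budget tolerates the trip.
    if w.needs_fleet_view and w.latency_budget_ms >= CLOUD_RTT_MS:
        return "cloud"
    # Otherwise use the edge whenever the cloud is too far away for the budget.
    return "edge" if w.latency_budget_ms < CLOUD_RTT_MS else "cloud"

examples = [
    Workload("wake-word detection", 5, 256, 64, False),
    Workload("motor vibration anomaly", 50, 2000, 100, False),
    Workload("store video analytics", 30, 500, 1000, False),
    Workload("fleet-wide trend report", 3_600_000, 1, 100, True),
]
for w in examples:
    print(f"{w.name}: {place(w)}")
```

The rule order mirrors the slide: tight latency or a thin uplink forces processing at the endpoint, only summarized data crosses the network, and aggregate insight lands in the cloud.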

AI Adoption Challenges:

1. Resource-Constrained Hardware:

▪ Can’t just run TensorFlow

▪ Limited SRAM, MIPS, FPU / GPU

▪ Mobile or wireless battery/power requirements

2. Resource-Constrained Development Teams:

▪ Embedded coding more complex & fragmented than cloud PaaS

▪ Scarcity of data scientists, DSP, FPGA and firmware engineers

▪ Limited bandwidth to explore new tools / methods

3. Lack of AI Automated Tools:

• Typical process: MATLAB modeling followed by hand coded C/C++

• Available AI tools focus on algorithms, not end-to-end workflows

• Per product algorithm cost: $500k, 6-9 months; often far greater

For machine learning (ML), good training data is vital. A good training data set:

• Addresses anticipated sources of variance

• Leverages application domain expertise

• Includes all potentially relevant metadata

• Seeks optimal size for the problem at hand

ML Algorithms should fit within Embedded Computing Constraints:

Endpoint Inference Models:

• Starts with model appropriate to the problem

• Fits within available computing resources with headroom

• Utilizes least expensive features that deliver desired accuracy

SensiML Toolkit:

• Provides numerous different ML and AI algorithms and automates the selection process

• Leverages target hardware capabilities and builds models within its memory and computing limits

• Traverses a library of over 80 features to select those best suited to the problem
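The endpoint inference guidelines above (fit within the available resources with headroom, and use the least expensive option that delivers the target accuracy) can be expressed as a constrained selection. The sketch below is a generic illustration, not the SensiML Toolkit's algorithm; the candidate models, their footprints and accuracies, the microcontroller budget and the 25% headroom are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Candidate:
    name: str
    sram_kb: float             # working memory needed at inference time
    flash_kb: float            # model plus feature-extraction code size
    cycles_per_inference: int  # compute cost per classification
    accuracy: float            # validation accuracy, 0..1

def select_model(cands, sram_kb, flash_kb, target_acc, headroom=0.25) -> Optional[Candidate]:
    """Return the cheapest candidate (fewest cycles) that meets the accuracy
    target and fits in memory while leaving a `headroom` fraction unused."""
    usable_sram = sram_kb * (1 - headroom)
    usable_flash = flash_kb * (1 - headroom)
    feasible = [c for c in cands
                if c.accuracy >= target_acc
                and c.sram_kb <= usable_sram
                and c.flash_kb <= usable_flash]
    return min(feasible, key=lambda c: c.cycles_per_inference, default=None)

# Hypothetical candidates for a small microcontroller (64 KB SRAM, 256 KB flash).
candidates = [
    Candidate("decision_tree_8_features", 4, 12, 20_000, 0.91),
    Candidate("knn_16_features", 40, 90, 150_000, 0.94),
    Candidate("small_cnn", 60, 180, 2_000_000, 0.97),
]
best = select_model(candidates, sram_kb=64, flash_kb=256, target_acc=0.93)
print(best.name if best else "no model fits")
```

Here the CNN is the most accurate but breaks the SRAM headroom, so the k-NN model is chosen; relaxing the accuracy target to 0.90 would select the much cheaper decision tree instead.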

A predictive maintenance use case for a motor was cited as an example of AI/ML:

Challenges:

▪ Unique model doesn’t scale across similar motors (due to concrete, rubber, loading)

▪ Endpoint AI decreases system bandwidth, latency, power

Monitoring States:

▪ Bearing / shaft faults

▪ Pump cavitation / flow inefficiency

▪ Rotating machinery faults

▪ Seismic / structural health monitoring

▪ Factory predictive maintenance
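Endpoint AI decreases system bandwidth because the device can send a handful of computed features instead of the raw waveform. A minimal sketch, assuming a hypothetical 1 kHz vibration sensor with 16-bit samples and a one-second window, and using common condition-monitoring features (RMS, peak and crest factor); these choices are illustrative, not QuickLogic's:

```python
import math
import random
import struct

SAMPLE_RATE_HZ = 1000  # hypothetical accelerometer sample rate
BYTES_PER_SAMPLE = 2   # 16-bit samples

def vibration_features(window: list[float]) -> dict[str, float]:
    """Summarize one window of vibration samples with common health indicators."""
    rms = math.sqrt(sum(x * x for x in window) / len(window))
    peak = max(abs(x) for x in window)
    return {"rms": rms, "peak": peak, "crest_factor": peak / rms if rms else 0.0}

def payload_sizes(window: list[float]) -> tuple[int, int]:
    """Bytes needed to ship the raw window vs. three features packed as 32-bit floats."""
    raw = len(window) * BYTES_PER_SAMPLE
    packed = struct.pack("<3f", *vibration_features(window).values())
    return raw, len(packed)

# Synthetic 1-second window: 50 Hz shaft vibration plus Gaussian noise.
random.seed(0)
window = [math.sin(2 * math.pi * 50 * n / SAMPLE_RATE_HZ) + random.gauss(0, 0.1)
          for n in range(SAMPLE_RATE_HZ)]
raw_bytes, feature_bytes = payload_sizes(window)
print(raw_bytes, feature_bytes)
```

Sending 12 bytes of features instead of 2,000 bytes of samples per second is the kind of reduction that makes endpoint inference attractive for battery-powered, low-bandwidth sensors; a rising RMS or crest factor over time is then what a maintenance model would watch.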

QuickLogic aims to democratize AI-enabled SoC Design using SiFi templates and a cloud based SoC platform with a goal of a custom SoC in 12 weeks! In 2020 the company plans to have: an AI Software Platform, SoC Architecture, and eFPGA IP Cores. Very impressive indeed, if all that can be realized.

……………………………………………………………………………………………………………………………………………………………………………………………………………………

Empowering the Edge Panel Session:

Mike Noonen of Mixed-Com chaired a panel discussion on Empowering the Edge. Two key points were made: edge computing is MORE SECURE than cloud computing (it has a smaller attack surface), and as intelligence (AI/ML/data processing) moves to the edge, connections will become richer and richer. However, no speaker, panelist or moderator defined where the edge is actually located. Is it on premises, the first network element in the access network, the mobile packet core (for a cellular connection), the LPWAN, or the ISP point of presence? Or any of the above?


……………………………………………………………………………………………………………………………………………………………………………………………………………………

After the conference, Mike emailed this to me:

“One of the many aspects of the GSA Silicon Summit that I appreciate is the topic/theme (such as edge computing). The speakers and panelists addressing the chosen theme offer a 360 degree perspective ranging from technical, commercial and even social aspects of a technology. I always learn something and gain new insights when this broad perspective is presented.”

I couldn’t agree more with Mike!

…………………………………………………………………………………………………………………………………………………………………………………………………………………………..

V2X (Vehicle to Everything) Connectivity, Paul Sakamoto, COO of Savari:

V2X connectivity technology today is based on two competing standards: DSRC, Dedicated Short Range Communications (based on IEEE 802.11p WiFi), and C-V2X, Cellular Vehicle to Everything (based on LTE). Software can run on either, but the V2X connectivity hardware is specific to one of the two standards.

DSRC: Dedicated Short Range Communications:

- Legacy tech: 20 years of work; low-latency performance, range and reliability

- No carrier fees; minimize fixed cost

- Infrastructure needs; how to pay?

- EU Delegated Act win, but 5GAA is contesting it

C-V2X: Cellular Vehicle to Everything:

- Developed from LTE; big-money backing

- Cellular communications history; good range and reliability

- Carrier fees required; subsidy for fixed costs

- Mix in with base stations to amortize costs

- China has chosen it as part of the government’s 5G plan

V2X Challenge: Navigate the Next 10 Years:

For mobile use, the main purpose is safety and awareness:

• Tight message security

• Low latency (<1ms)

• Needs client saturation

• Short range

For infrastructure, the main purpose is efficiency and planning:

• Tight message security

• Moderate latency (~100ms)

• Needed where needed

• Longer range

In closing, Paul said V2X is going to be a long race with many twists and turns. Savari’s strategy is to be “radio agnostic,” use scalable computing and scalable security elements, have a 7-10 year business plan with a 2-3 year product development cycle, and be ready to pounce at any inflection point (which may mean parallel developments).

May 20, 2020 Update:

ITU-R WP 5D will produce a draft new Report ITU-R M.[IMT.C-V2X] on “Application of the Terrestrial Component of IMT for Cellular-V2X.”

3GPP intends to contribute to the draft new Report and plans to submit relevant material at WP 5D meeting #36. 3GPP looks forward to the continuous collaboration with ITU-R WP 5D for the finalization of Report ITU-R M.[IMT.C-V2X].

…………………………………………………………………………………………………………………………………………..

IHS Markit: Cloud and Mobility Driving Enterprise Edge Connectivity in North America

IHS Markit Survey: Cloud and mobility driving new requirements for enterprise edge connectivity in North America

By Matthias Machowinski, senior research director, IHS Markit, and Joshua Bancroft, senior analyst, IHS Markit

Highlights

- By 2019, 51 percent of network professionals surveyed by IHS Markit will use hybrid cloud and 37 percent will adopt multi-cloud for application delivery.

- Bandwidth consumption continues to rise. Companies are expecting to increase provisioned wide-area network (WAN) bandwidth by more than 30 percent annually across all site types.

- Data backup and storage is the leading reason for traffic growth, followed by cloud services.

- Software-defined WAN (SD-WAN) is maturing: 66 percent of surveyed companies anticipate deploying it by the end of 2020.

- Companies deploying SD-WAN use over 50 percent more bandwidth than those that have not deployed it. Their bandwidth needs are also growing at twice the rate of companies using traditional WANs.
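A 30 percent annual increase in provisioned WAN bandwidth compounds quickly. A short calculation, treating the survey's "more than 30 percent" as exactly 30 percent (so the results are lower bounds), gives the doubling time and the five-year multiple:

```python
import math

growth = 0.30  # "more than 30 percent annually" per the survey; treated as exactly 30%

doubling_years = math.log(2) / math.log(1 + growth)
five_year_multiple = (1 + growth) ** 5

print(f"Doubling time: {doubling_years:.1f} years")
print(f"Bandwidth after 5 years: {five_year_multiple:.1f}x today's")
```

At that rate, provisioned bandwidth roughly doubles every 2.6 years and grows about 3.7x over five years.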

Analysis

Based on a survey of 292 network professionals at North American enterprises, IHS Markit explored the evolving requirements for enterprise edge connectivity, including WAN and SD-WAN. The study revealed that enterprise IT architectures and consumption models are currently undergoing a major transformation, from servers and applications placed at individual enterprise sites, to a hybrid-cloud model where centralized infrastructure-as-a-service (IaaS) complements highly utilized servers in enterprise-operated data centers. This process allows organizations to bring the benefits of cloud architectures to their own data centers – including simplified management, agility and scalability – and leverage the on-demand aspect of cloud services during peak periods. Respondents also reinforced the viewpoint that the hybrid cloud is a stepping stone to the emerging multi-cloud.

Changing business demographics are sparking the trend toward more centralized applications: enterprises are moving closer to their customers, partners and suppliers. They are adding more physical locations, making mobility a key part of their processes, and taking on remote employees to leverage talent and expertise.

Following the current wave of application centralization, certain functions requiring low latency will migrate back to the enterprise edge, residing on universal customer premises equipment (uCPE) and other shared compute platforms. This development is still in its infancy, but it is already on the radar of some companies.

Hybrid cloud is an ideal architecture for distributed enterprises, but it is also contributing to traffic growth at the enterprise edge. Extra attention must be paid to edge connectivity, to ensure users don’t suffer from slow or intermittent access to applications. Performance is a top concern, and enterprises are not only adding more WAN capacity and redundancy, but also adopting SD-WAN.

The primary motivation for deploying SD-WAN is to improve application performance and simplify WAN management. The first wave of SD-WAN deployments focused on cost reduction, and this is still clearly a benefit: survey respondents indicate that their annual cost per megabit per second (Mbps) is approximately 30 percent lower, with costs declining at a faster rate than in traditional WAN deployments. These results show that SD-WAN can be a crucial way to balance runaway traffic growth with budget constraints.
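One way to read the 30 percent cost figure against the bandwidth growth reported in the highlights: for the same budget, a 30 percent lower cost per Mbps buys about 1/0.7, or roughly 1.43 times, the bandwidth. A quick check of how much growth that absorbs, treating both percentages as exact and assuming unit prices stay constant (an assumption; the survey says SD-WAN costs are also declining):

```python
import math

cost_reduction = 0.30  # SD-WAN cost per Mbps vs. traditional WAN
annual_growth = 0.30   # provisioned bandwidth growth per year

bandwidth_multiple = 1 / (1 - cost_reduction)  # same spend at the cheaper unit price
years_absorbed = math.log(bandwidth_multiple) / math.log(1 + annual_growth)

print(f"{bandwidth_multiple:.2f}x bandwidth for the same budget")
print(f"~{years_absorbed:.1f} years of growth absorbed")
```

On these assumptions, the SD-WAN saving covers roughly 1.4 years of 30 percent traffic growth at flat spend, which is the sense in which it helps balance growth against budget.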

SD-WAN solutions not only solve the transport and WAN cost reduction issue, but also help enterprises create a fabric for the multi-cloud. Features like analytics to understand end-user behaviour, enhanced branch security and a centralized management portal all make SD-WAN an enticing proposition for enterprises looking to adopt a multi-cloud approach.

Enterprise Edge Connectivity Strategies North American Enterprise Survey

This IHS Markit study explores how companies are advancing connectivity at the enterprise edge in light of new requirements. It includes traditional WAN and SD-WAN growth expectations, growth drivers, plans for new types of connectivity and technologies, equipment used, feature requirements, preferred suppliers and spending plans.

SK Telecom partners with MobiledgeX for Edge Computing; Ericsson video call over SK Telecom 5G test network

- SK Telecom partners with MobiledgeX for Edge Computing:

SK Telecom, the largest mobile operator in South Korea, announces an agreement to partner with MobiledgeX to enable a new generation of connected devices, content and experiences, creating new business models and revenue opportunities leading into 5G (?).

“In the 5G era, Mobile Edge Computing will be a key technology for next-generation industries including realistic media and autonomous driving,” said Jong-kwan Park, Senior Vice President and Head of Network Technology R&D Center of SK Telecom. “Based on this partnership, SK Telecom will continue to drive technology innovations to provide customers with differentiated 5G services.”

The relationship between SK Telecom and MobiledgeX reflects an aligned vision for the future of mobile operators as key players in future mobile application development, performance, security, and reliability. This vision is shared by leading cloud providers and device makers working with MobiledgeX to seamlessly pair the power and distribution of mobile operator infrastructure with the convenience and depth of developer tooling of hyperscale public cloud and the scale, mobility and distribution of billions of end user devices.

“Deutsche Telekom created MobiledgeX as an independent company to drive strategic collaboration across the world’s leading telecoms, public cloud providers, device makers and the surrounding ecosystem – enabling a new era of business models, operating efficiencies and mobile experiences. We are particularly excited to announce SK Telecom’s participation in this collaborative ecosystem where everybody wins. SK Telecom is a global leader in 5G which follows their rich tradition of innovation within their network, strategic partnerships and developer engagement. This new era is underway,” says Eric Braun, Chief Commercial Officer of MobiledgeX.

MobiledgeX and SK Telecom executives hold up their respective copies of a memorandum of understanding between the two companies to jointly develop mobile edge computing applications. (SK Telecom)

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

MobiledgeX is focused on delivering developer-facing edge cloud services and bringing mobility to those services, dynamically placing application back ends as close to mobile devices as possible and removing them when they are not needed. MobiledgeX, Deutsche Telekom and Intel have partnered with the Telecom Infra Project (TIP) to form an Edge Application Developer project group to ensure that the insights gained and the supporting source code are available to all. This new opportunity for everyone is a consequence of $2 trillion of capex invested in network infrastructure over the past 10 years and of the virtualization of the network from the central offices to the towers. MobiledgeX is building a marketplace of edge resources and services that will connect developers with the world’s largest mobile networks to power the next generation of applications and devices. MobiledgeX is an independent edge computing company founded by Deutsche Telekom and headquartered in Menlo Park, California.
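The idea of placing an application back end as close to the device as possible, then removing it when idle, can be illustrated with a nearest-site lookup plus an idle timer. This is a conceptual sketch only: the class and method names, the site list and coordinates, and the 10-minute idle timeout are hypothetical and are not the MobiledgeX API.

```python
import math
from dataclasses import dataclass, field

@dataclass
class EdgeSite:
    name: str
    lat: float
    lon: float

def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))

@dataclass
class EdgePlacer:
    sites: list[EdgeSite]
    idle_timeout_s: float = 600.0
    last_used: dict[str, float] = field(default_factory=dict)  # site -> last request time

    def route(self, lat: float, lon: float, now: float) -> str:
        """Send a device to its nearest site, starting a back end there if needed."""
        site = min(self.sites, key=lambda s: haversine_km(lat, lon, s.lat, s.lon))
        self.last_used[site.name] = now  # deploy, or keep alive, the back end
        return site.name

    def reap_idle(self, now: float) -> list[str]:
        """Tear down back ends that have not served a request within the timeout."""
        idle = [n for n, t in self.last_used.items() if now - t > self.idle_timeout_s]
        for n in idle:
            del self.last_used[n]
        return idle

# Hypothetical edge sites in Seoul and Busan.
placer = EdgePlacer([EdgeSite("seoul", 37.57, 126.98), EdgeSite("busan", 35.18, 129.08)])
print(placer.route(37.50, 127.03, now=0))    # device near Seoul
print(placer.route(35.10, 129.04, now=500))  # device near Busan
print(placer.reap_idle(now=1000))            # Seoul back end idle for more than 600 s
```

A production system would also weigh measured latency, site load and application state migration rather than straight-line distance alone.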

https://mobiledgex.com/press-releases/2018/12/12/sk-telecom-partners-with-mobiledgex

https://www.fiercetelecom.com/telecom/sk-telecom-and-mobiledgex-join-hands-mobile-edge-computing

Separately, SK Telecom said it will comply with the IoT Security Guidelines proposed by GSMA for the safe usage and expansion of IoT networks.

……………………………………………………………………………………………………………………………………………………………………………………………………………………..

- Ericsson video call over SK Telecom’s 5G test network:

Ericsson and SK Telecom have conducted a video call over the operator’s live 5G test network, the latest milestone in a long-running partnership.

On this occasion, the companies used 100 MHz of 3.5 GHz spectrum, marking the first time that so much spectrum in this band has been used in a field test. SK Telecom used Ericsson’s commercial radio equipment, based on the 5G New Radio (NR) standard. Ericsson also supplied a test device equipped with Qualcomm’s Snapdragon X50 5G modem. In addition to the video call, SK Telecom demonstrated streaming over a 5G data session.

As has been well documented, South Korea is pushing hard to be a frontrunner on 5G, which might explain Ericsson’s keen involvement with SK Telecom’s research and trials. The two companies have been at the forefront of testing network slicing, in which an access network is subdivided into virtual partitions, each with parameters tailored to the requirements of a specific service, from low-bandwidth massive IoT connectivity to low-latency, high-throughput AR/VR services. Ericsson and SK Telecom have also conducted multi-vehicle 5G trials in partnership with BMW.

Another explanation for Ericsson’s close involvement with SK Telecom might have something to do with Samsung. The Korean vendor missed the boat on 4G when it backed WiMAX instead of LTE, but it is making a concerted effort to not be left out of 5G. Last year, it unveiled its end-to-end 5G portfolio, and it has struck important partnerships and supply deals, including with SK Telecom, Telefonica, and Verizon.

With South Korea expected to be among the first movers when it comes to 5G commercialisation, Ericsson will want to make sure SK Telecom’s network is adorned with as much of its equipment as possible.

Alex Jinsung Choi, CTO and Head of Corporate R&D Center, SK Telecom, said:

“5G will offer much more than just faster data speeds. It will serve as a true enabler for a whole new variety of powerful services that deliver unprecedented value to customers. Today’s demonstration of 5G-based connected car technologies marks the very first step towards achieving fully autonomous driving in the upcoming era of 5G.”

The test environment used an Ericsson 5G field trial network consisting of multiple radio transmission points in the 28 GHz band covering the entire track, with one user equipment (UE) unit installed in each car. The trials showed consistent Gbps-level throughput with latency of a few milliseconds. Uninterrupted connectivity was also achieved at speeds exceeding 100 kilometers per hour, using beam tracking and beam transfer across the different transmission points. The performance shown enables multiple connected car use cases such as augmented and virtual reality, obstacle control and vehicle-to-vehicle communication, based on a system solution including radio and core network infrastructure from Ericsson.

Thomas Norén, Head of Product Area Network Products, Ericsson, said:

“Ericsson is working with leading operators and ecosystem players to drive the realization of 5G – both with today’s pre-standard field trials, and through standardization activities along with global standards bodies and industry groups. The trial takes a step closer to 5G technology and commercialization, especially for connected vehicle applications.”

The trial simultaneously exercised several key new 5G capabilities: multi-site and multi-transmission-point operation, MU-MIMO, and multiple devices operating in the millimeter wave frequency band. It demonstrated beam tracking and beam mobility between different 5G access points at high mobility.

https://www.ericsson.com/en/news/2018/12/sk-telecom-qualcomm-and-ericsson-collaborate-on-5g

https://www.mobileworldlive.com/asia/asia-news/skt-makes-5g-video-call-on-3-5ghz-band/

IHS Markit: Video to Drive Demand for Edge Computing Services

Edge computing will get its primary propulsion from demand for video services, IHS Markit found in a survey. The Linux Foundation commissioned IHS Markit to identify the top apps and revenue opportunities for edge compute services. Video content delivery was cited by 92% of respondents as the top driver of edge computing, while augmented/virtual reality, autonomous vehicles and the industrial internet of things (IIoT) all tied for second place.

During a keynote address at this week’s Layer123 SDN NFV World Congress in The Hague, IHS Markit’s Michael Howard, executive director of research and analysis for carrier networks (and a longtime colleague of this author), presented some of the results from the market research firm’s survey of edge compute applications.

“The edge ‘is in’ these days in conversations, conferences and considerations—and there are many definitions,” Howard wrote in an email to FierceTelecom. “Our conclusion is that there are many edges, but as an industry, I believe we can coalesce around a time-related distance to the end user, device or machine, which indicates a short latency, on which many edge applications rely. The other major driver for edge compute is big bandwidth, principally video, where caching and content delivery networks save enormous amounts of video traffic on access, metro, and core networks.”
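Howard's point about caching can be made concrete with simple arithmetic: if an edge cache serves a fraction of the requested video bytes locally, only the remaining fraction of the demand still has to cross the access, metro and core networks. The Python sketch below encodes that relationship; the function names are hypothetical, and the 40 Gbps demand and 75% hit ratio used in the example are illustrative figures, not survey results.

```python
def backhaul_load_gbps(edge_demand_gbps: float, cache_hit_ratio: float) -> float:
    """Traffic (Gbps) that still crosses metro/core links when an edge cache
    serves `cache_hit_ratio` of the requested bytes locally."""
    if edge_demand_gbps < 0:
        raise ValueError("edge_demand_gbps must be non-negative")
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    # Only cache misses have to be fetched from upstream.
    return edge_demand_gbps * (1.0 - cache_hit_ratio)


def backhaul_savings_gbps(edge_demand_gbps: float, cache_hit_ratio: float) -> float:
    """Traffic (Gbps) kept off the metro/core by the edge cache."""
    return edge_demand_gbps - backhaul_load_gbps(edge_demand_gbps, cache_hit_ratio)
```

With 40 Gbps of video demand at an edge site and a 75% hit ratio, `backhaul_load_gbps(40.0, 0.75)` gives 10.0 Gbps upstream and `backhaul_savings_gbps(40.0, 0.75)` gives 30.0 Gbps kept off the network.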

IHS Markit defined edge compute as being within 20 milliseconds of the end user, device or machine. When compared to Internet Exchanges, telcos have an advantage at the edge because they are much closer to the users via their central offices, cell sites, cell backhaul aggregation, fixed backhaul and street cabinets.

Integrated communications providers and over-the-top providers have partial coverage for edge compute with distributed data centers that are within the 20 milliseconds to 50 milliseconds range, while telcos can hit 5 milliseconds to 20 milliseconds.
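The latency ranges quoted above can be turned into a rough classifier, together with a back-of-the-envelope bound on how far away an edge site can be. This sketch assumes the 20 ms figure is a round-trip budget (the survey summary does not say) and uses the common approximation that light in optical fiber covers about 200 km per millisecond; it ignores queuing, routing detours and radio access delay, so real distances are shorter. Function names and tier labels are hypothetical.

```python
# Approximation: light in silica fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def classify_edge_latency(rtt_ms: float) -> str:
    """Bucket a measured round-trip time using the ranges quoted by IHS Markit."""
    if rtt_ms < 0:
        raise ValueError("rtt_ms must be non-negative")
    if rtt_ms <= 20.0:
        return "edge"            # within 20 ms; telcos cited at ~5-20 ms
    if rtt_ms <= 50.0:
        return "distributed-dc"  # ~20-50 ms range cited for ICPs/OTT providers
    return "beyond-edge"


def max_fiber_radius_km(rtt_budget_ms: float, processing_ms: float = 0.0) -> float:
    """Upper bound on one-way fiber distance to a site that fits the round-trip
    budget after subtracting non-propagation delay. Ignores queuing and detours."""
    propagation_ms = rtt_budget_ms - processing_ms
    if propagation_ms <= 0:
        return 0.0
    # A round trip covers the distance twice, so halve the propagation time.
    return (propagation_ms / 2.0) * FIBER_KM_PER_MS
```

Propagation alone would allow a site about 2,000 km away within 20 ms (`max_fiber_radius_km(20.0)`), but reserving a hypothetical 15 ms for radio access and processing (`processing_ms=15.0`) shrinks that to about 500 km, illustrating how quickly non-propagation delays consume the budget.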

Among the top services driving edge compute, video content delivery (including 360 video and venues) was first at 92%, followed by a three-way tie at 83% among autonomous vehicles, augmented reality/virtual reality and industrial internet of things/automated factory. Gaming was next at 75%, followed by distributed virtualized mobile core and fixed access, tied with private LTE at 58%.

Other findings from the survey:

- Surveillance and supply chain management each garnered 33%, while smart cities was last at 25%.

- When it comes to which edge services will garner the most revenue, distributed virtualized mobile core and fixed access, private LTE, gaming, video content delivery and industrial IoT all tied at the top of the survey results.

- Supply chain management, autonomous vehicles and AR/VR tied in the next grouping while surveillance and smart cities tied for last.

- Consumer-driven revenue at the edge includes gaming and video content delivery networks while enterprise-driven revenues will include private LTE, industrial IoT and supply chain.

- Overall, many edge deployments will initially be justified by cost savings, followed later by revenue-bearing applications.

- Edge compute apps will start out in limited or contained rollouts with full deployment taking years and investments across several areas, according to the survey.

Although edge compute brings services closer to end users and alleviates bandwidth constraints, it is complex. Even a single edge compute location combines elements of network functions virtualization, mobile edge computing and fixed mobile convergence technologies, and deployments can spread across hundreds of thousands of locations.

There are also authorization, billing and reconciliation issues that need to be addressed across various domains, which could be resolved using blockchain to create virtual ledgers.

Further, there’s a long investment road ahead to fully deploy edge compute. Areas that comprise the top tier of investments for edge compute include multi-access edge compute, integration, edge connectivity (two-way data flows, SD-WAN services, low latency and bandwidth), 5G spectrum and engineering.

…………………………………………………………………………………………………………………………………………………………………………..

Earlier this year, AT&T Foundry launched an edge computing test zone in Palo Alto, California, to kick the tires on AR, VR and cloud-driven gaming. As part of the second phase, AT&T Foundry is expanding its edge test zone footprint to cover all of the San Francisco Bay Area, allowing for increased application mobility and broader collaboration potential.

IDC Directions 2018 Insight: Intelligent Network Edge, SD-WANs & SD-Branch

Introduction:

IDC Directions is the market research firm’s annual conference, which always delivers an informative and actionable overview of the issues shaping the information technology, telecommunications, and consumer technology markets. IDC speakers look at the current state of various markets, cutting-edge trends and future IT developments that are likely to result in transformation and change.

This year’s event had only one session on networking, which we cover in detail in this article. A full event summary is beyond the scope of the IEEE ComSoc techblog.

Abstract:

As the edge plays host to a growing array of new applications, the focus ultimately turns to edge networking, which must deliver the requisite connectivity, bandwidth, low-latency, and network services for both enterprise and service provider deployments. Indeed, as IoT and other edge services proliferate, a one-size-fits-all approach to edge networking and network security will not suffice. In this session, Brad Casemore of IDC examined the diversity of network requirements and solutions at the edge, covering physical, virtual, and network-as-a-service (NaaS) use cases and application scenarios.

Presentation Highlights:

According to IDC, the “Intelligent Edge” includes both the IT Edge (IT activities performed outside the data center, but within purview of IT) and the OT/Operations Technology Edge (embedded technologies that do not directly generate data for enterprise use, and are outside the direct purview of IT).

That’s in contrast to the “Core,” which is the “IT Data Center” — an information aggregation facility that is located on the firm’s own physical premises, off-premises in a colocation facility, or off-premises at a virtual location such as a public cloud.

Networking at the Intelligent Edge involves three types of sub-networks:

▪ Enterprise Cloud IT Edge (branch networking for the cloud)

▪ Enterprise Branch IT Edge (the evolution of networking at branch offices/remote sites)

▪ IoT Edge (networking to, from, and at the IoT/OT Edge)

Networking provides essential connectivity and bandwidth, but it also provides valuable network and security services that accelerate and optimize application and service performance at the edge. Brad said that significant innovation is occurring in edge networking that is enabling better business outcomes at the intelligent edge. Examples of this innovation include:

• Software Defined Networks (SDN)/Intent-based

• Overlay networks (such as SD-WANs)

• Network Virtualization (NV)/Network Function Virtualization (NFV)

• Network security (software-defined perimeter)

As a result, the intelligent edge network is significantly contributing to automated network intelligence, in addition to providing wireless and wireline connectivity services.

……………………………………………………………………………………………………………

Enterprise IT is being challenged to provide access to public and private clouds while also maintaining secure and effective communications with regional offices and headquarters (usually through an IP-MPLS VPN).

Enterprise WANs are not effective for Cloud access, because they lack agility, flexibility, and efficiency.

These two issues are depicted in the following two IDC figures:

As a result, a different, application-centric WAN architecture is needed. Brad proposed SD-WANs for this purpose, even though there is no standard definition or functionality for SD-WAN and no standards for multi-vendor interoperability or inter-SD-WAN connections (e.g. UNI or NNI, respectively). SD-WAN is an overlay network that provides user control via the application layer, rather than via a “Northbound” API to/from the control plane (as in conventional SDN).

The use cases for SD-WAN have been well established, including improving application performance by enabling use of multiple WAN links, simplifying WAN architecture, reducing reliance on MPLS, and improving SaaS performance by automatically steering traffic based on application policy instead of backhauling all traffic to the data center.
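Steering traffic by application policy can be sketched as choosing, per application, the cheapest WAN link whose measured latency and loss meet that application's thresholds. This is a minimal Python illustration, not any vendor's SD-WAN algorithm (as noted above, there is no standard one); the link names, metrics, costs and the fall-back-to-lowest-latency rule are hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WanLink:
    name: str            # hypothetical underlay, e.g. "mpls", "broadband", "lte"
    latency_ms: float    # assumed to come from the SD-WAN edge's path probes
    loss_pct: float
    cost_per_gb: float


@dataclass(frozen=True)
class AppPolicy:
    app: str
    max_latency_ms: float
    max_loss_pct: float


def steer(policy: AppPolicy, links: list[WanLink]) -> WanLink:
    """Return the cheapest link that meets the app's latency and loss limits;
    if no link qualifies, fall back to the lowest-latency link."""
    eligible = [
        link for link in links
        if link.latency_ms <= policy.max_latency_ms
        and link.loss_pct <= policy.max_loss_pct
    ]
    if eligible:
        return min(eligible, key=lambda link: link.cost_per_gb)
    return min(links, key=lambda link: link.latency_ms)
```

With hypothetical links MPLS (20 ms, 0% loss, cost 8.0), broadband (25 ms, 0.2%, cost 1.0) and LTE (60 ms, 1%, cost 5.0), a voice policy of 30 ms and 1% loss lands on broadband, because both MPLS and broadband qualify and broadband is cheaper, while a stricter 22 ms policy stays on MPLS. Choosing the cheapest qualifying link, rather than always the fastest one, is what lets policy-driven steering reduce reliance on MPLS.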

IDC believes the Internet of Things (IoT) will have a huge impact on networking infrastructure, especially at the edge, where low-latency, real-time control of IoT devices will be needed.

Casemore said that SD-WANs will help companies overcome issues associated with a traditional enterprise WAN, which wasn’t built for the cloud and lacks operational efficiency.

In a real-world example of an SD-WAN deployment at a medical device supplier, Brad noted the goals were:

• Dynamic access to all available bandwidth (underlays)

• Move away from using relatively expensive MPLS circuits for voice traffic

• Prioritize business-critical cloud apps ahead of nonproduction apps/traffic

• Need for greater visibility, to quickly remediate issues and respond to evolving application/service needs

Benefits cited were the following:

• Improved resilience

• Better application performance and availability

• Cost-effective bandwidth utilization

• Better visibility (faster troubleshooting/remediation and proactive planning)

• IT department and network team now contributing to the business of making and shipping products quickly

IDC sees SD-WAN evolving to incorporate more intent based networking and intelligent automation, with business intent consistently applied to application delivery and performance, he said.

…………………………………………………………………………………………………………………….

Editor’s Note: Intent-based networking is a hot buzzword in the industry right now, generally describing technology that uses automation and machine learning to implement business policy with little or no human intervention. Many believe that intelligent automation will be how business intent is applied to application delivery and network performance across the WAN.

…………………………………………………………………………………………………………………….

Brad also suggested the following additional attributes for future SD-WANs:

▪ Machine Learning and AI – SD-WAN must become cognitive, proactive, and ultimately self-driving, continuously adapting to changing conditions

▪ Pervasive Security – Applications automatically steered over appropriate links and to appropriate security devices. Secure segmentation provided on a per-application basis.

▪ Stepping stone toward SD-branch

“This is all moving us toward the software-defined (SD)-branch. SD-WAN serves as the precursor and serves as the essential conduit to SD-branch and network as a service (NaaS) at the edge,” Casemore said.

In the SD-branch, routing, firewall, and WAN optimization are provided as virtual functions in a cloud-like NaaS model, replacing expensive hardware. Management is automated and services can be easily adjusted as business needs change, Casemore said.
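The SD-branch idea of providing routing, firewall and similar functions as software that can be added or removed as business needs change can be pictured as an ordered chain of virtual functions applied to each packet. The Python sketch below is a toy model under that assumption: packets are plain dictionaries, port 5060 is assumed to mean voice, and `firewall`, `classify_traffic` and `BranchServiceChain` are hypothetical names, not part of any SD-branch product.

```python
from typing import Callable, Optional

Packet = dict  # toy packet, e.g. {"port": 5060}
VirtualFunction = Callable[[Packet], Optional[Packet]]  # return None to drop


def firewall(blocked_ports: set[int]) -> VirtualFunction:
    """Build a virtual firewall that drops packets to any blocked port."""
    def fn(pkt: Packet) -> Optional[Packet]:
        return None if pkt["port"] in blocked_ports else pkt
    return fn


def classify_traffic(pkt: Packet) -> Packet:
    """Tag traffic as voice or data by port (5060 assumed to mean voice)."""
    tagged = dict(pkt)  # copy so the caller's packet is not mutated
    tagged["class"] = "voice" if pkt["port"] == 5060 else "data"
    return tagged


class BranchServiceChain:
    """Ordered chain of software network functions on one branch box."""

    def __init__(self) -> None:
        self.functions: list[tuple[str, VirtualFunction]] = []

    def add(self, name: str, fn: VirtualFunction) -> None:
        # Insert a new function at the end of the chain, no new appliance needed.
        self.functions.append((name, fn))

    def remove(self, name: str) -> None:
        # Drop a function from the chain when it is no longer required.
        self.functions = [(n, f) for n, f in self.functions if n != name]

    def process(self, pkt: Packet) -> Optional[Packet]:
        for _, fn in self.functions:
            pkt = fn(pkt)
            if pkt is None:  # a function (e.g. the firewall) dropped it
                return None
        return pkt
```

Adding a firewall that blocks port 23 ahead of the classifier drops Telnet traffic while SIP traffic on port 5060 comes out tagged as voice; removing the firewall from the chain later is a software change rather than a hardware swap.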

IDC believes telcos will use SD-branch to provide virtual CPE and universal CPE services, as shown in this slide from IDC:

……………………………………………………………………………………………………………………………………

Sidebar on SD-branch from a recent Network World article:

The branch network is a critical piece of the IT infrastructure for most distributed organizations. The branch network is responsible for providing reliable, high quality communications to and from remote locations. It must be secure, easy to deploy, able to be managed centrally and cost effective. Requirements for branch networks continue to evolve with needs for increased bandwidth, quality of service, security and support for IoT.

SDN and network virtualization technologies have matured to the point where they can deliver significant benefits for branch networks. For example, SD-WAN technology is rapidly being deployed to improve the quality of application delivery and reduce operational complexity. SD-WAN suppliers are rapidly consolidating branch network functions and have reduced (or eliminated) the need for branch routers and WAN optimization.

The most compelling argument for SD-Branch is operational agility. IT organizations can rapidly deploy and provision a network branch-in-a-box solution for new locations. Via a centralized management console, they can control and adjust all branch network and security functions.

Reducing or eliminating the need for trained IT personnel to visit remote branch locations results in significant cost and time savings. SD-Branch also promises to reduce hardware costs by deploying software on consolidated hardware as compared to many separate appliances.

Other SD-Branch benefits include:

- Decreased cost of support and maintenance contracts because fewer vendors will be involved.

- The ability to right-size hardware requirements for each branch thanks to software virtualization.

- A smaller hardware footprint, which is ideal for space-constrained branches.

- Network performance scalability. As network requirements change, the performance of any function can be tuned up or down by changing processor allocation or adding hardware resources.

- Lower power consumption because one power-efficient platform replaces many appliances.

Over time the SD-Branch will be easier to deploy, less complex to manage, and more responsive to changing requirements at the branch. The cost benefits in CAPEX and OPEX could be significant as the technology matures.

The broader concept of SD-Branch is still in its early stages. During 2018, we will see a number of suppliers introduce their SD-Branch solutions. These initial SD-Branch implementations will primarily be single-vendor and may lack state-of-the-art technology in some applications.

IT leaders should carefully evaluate the benefits of the SD-Branch architecture. Migration to SD-Branch will likely require significant changes to the existing branch network and may require a forklift upgrade. SD-Branch suppliers should be evaluated on their current and near-future technology, technology partnerships (e.g. security), and deployment options (do it yourself, channel partners, and managed solutions).

……………………………………………………………………………………………………………………………………

Brad believes that SD-branch is inevitable. He provided the following rationale for that:

▪ SD-Branch will be enabled by SD-WAN overlays

▪ SD-WAN will be an integral component of SD-Branch, but the latter will include other virtualized (perhaps containerized) network/security services

▪ Automated provisioning, management, and orchestration result in an SD-Branch that yields a dynamic network as a service (NaaS)

• Network and security services added or modified as needed

• Results in CapEx savings (separate hardware appliances are no longer needed for each network function)

• Network operating costs are lower, with a minimized need for branch IT

• Provisioning is far more agile, resulting in faster time to revenue/business outcome

▪ It’s not enough to have virtual network appliances

• Virtual appliances are still appliances architecturally

• SD-Branch gets us to cloud-like NaaS at the branch/remote office

…………………………………………………………………………………………………………..

IDC Prediction: Edge IT goes mainstream in 2022, displacing 80% of existing edge appliances.

…………………………………………………………………………………………………………..

IDC’s Essential Guidance for Users:

- Consider the role and value of the network not just in terms of connectivity, but in how it can contribute to intelligence at the edge.

- Look for edge-networking solutions that abstract management complexity, provide application-centric automation, speed provisioning, and ensure application availability and security.

- Ensure your intelligent-edge network evolves to a NaaS model, in which virtualized network and security services are dynamically provisioned as needed. The edge network must be as agile as the apps/services it supports.

IDC’s Essential Guidance for Suppliers:

- Continually enhance intelligent network automation and orchestration to reduce operational complexity and provide network agility.

- Leverage ML/AI as means to the end goal of providing increasingly actionable visibility that loops back to feed intent/policy and allows for proactive remediation.

- Provide for true NaaS at the intelligent edge, incorporating a full range of virtualized network and network-security services (through ecosystem partnerships).

Highlights of the 2017 Telecom Infra Project (TIP) Summit

Executive Summary:

The Telecom Infra Project (TIP) is gaining a lot of awareness and market traction, judging by last week’s very well attended TIP Summit at the Santa Clara Convention Center. The number of telecom network operators that presented was very impressive, especially considering that none were from the U.S. except AT&T, which presented on behalf of the Open Compute Project (OCP) Networking Group. It was announced at the summit that the OCP Networking Group had formed an alliance with TIP.

The network operators that presented or were panelists included representatives from: Deutsche Telekom AG, Telefonica, BT, MTN Group (Africa), Bharti Airtel LTD (India), Reliance Jio (India), Vodafone, Turkcell (Turkey), Orange, SK Telecom, TIM Brasil, etc. Telecom Italia, NTT, and others were present too. Cable Labs – the R&D arm of the MSOs/cablecos – was represented in a panel where they announced a new TIP Community Lab (details below).

Facebook co-founded TIP along with Intel, Nokia, Deutsche Telekom, and SK Telecom at the 2016 Mobile World Congress event. Like the OCP (also started by Facebook), its mission is to disaggregate network hardware into modules and define open source software building blocks. As its name implies, TIP focuses specifically on telecom infrastructure in its work to develop and deploy new networking technologies. TIP has more than 500 member companies, including telcos, Internet companies, vendors, consulting firms and system integrators. Membership appears to have grown rapidly in the last year.

During his opening keynote speech, Axel Clauberg, VP of technology and innovation at Deutsche Telekom and chairman of the TIP Board of Directors, announced that three more operators had joined the TIP Board: BT, Telefonica, and Vodafone.

“TIP is truly operator-focused,” Clauberg said. “It’s called Telecom Infrastructure Project, and I really count on the operators to continue contributing to TIP and to take us to new heights.” That includes testing and deploying the new software and hardware contributed to TIP, he added.

“My big goal for next year is to get into the deployment stage,” Clauberg said. “We are working on deployable technology. [In 2018] I want to be measured on whether we are successfully entering that stage.”

Jay Parikh, head of engineering and infrastructure at Facebook, echoed that TIP’s end goal is deployments, whether it is developing new technologies, or supporting the ecosystem that will allow them to scale.

“It is still very early. Those of you who have been in the telco industry for a long time know that it does not move lightning fast. But we’re going to try and change that,” Parikh said.

…………………………………………………………………………………………………………………….

TIP divides its work into three areas — access, backhaul, and core & management — and each of the project groups falls under one of those three areas. Several new project groups were announced at the summit:

- Artificial Intelligence and applied Machine Learning (AI/ML): will focus on using machine learning and automation to help carriers keep pace with the growth in network size, traffic volume, and service complexity. It will also work to accelerate deployment of new over-the-top services, autonomous vehicles, drones, and augmented reality/virtual reality.

- End-to-End Network Slicing (E2E-NS): aims to create multiple networks that share the same physical infrastructure. That would allow operators to dedicate a portion of their network to a certain functionality and should make it easier for them to deploy 5G-enabled applications.

- openRAN: will develop RAN technologies based on General Purpose Processing Platforms (GPPP) and disaggregated software.
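One way to picture end-to-end network slicing is as several logical networks sharing one physical link, each with a guaranteed minimum rate and a weight for sharing spare capacity. The Python sketch below first honors each slice's guarantee (up to its current demand) and then water-fills the remaining capacity by weight. The slice names, figures and the allocation rule itself are illustrative assumptions, not the E2E-NS group's design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    name: str
    guaranteed_mbps: float  # minimum rate reserved for this slice
    demand_mbps: float      # rate the slice currently wants
    weight: float           # share of spare capacity (must be > 0)


def allocate(capacity_mbps: float, slices: list[Slice]) -> dict[str, float]:
    """Give each slice min(guarantee, demand), then split leftover capacity
    among still-unsatisfied slices in proportion to weight (water-filling)."""
    if any(s.weight <= 0 for s in slices):
        raise ValueError("weights must be positive")
    alloc = {s.name: min(s.guaranteed_mbps, s.demand_mbps) for s in slices}
    remaining = capacity_mbps - sum(alloc.values())
    if remaining < 0:
        raise ValueError("guarantees exceed physical capacity")
    active = [s for s in slices if alloc[s.name] < s.demand_mbps]
    while remaining > 1e-9 and active:
        total_weight = sum(s.weight for s in active)
        handed_out = 0.0
        still_active = []
        for s in active:
            # Weighted share of what is left, capped at the slice's unmet demand.
            share = remaining * s.weight / total_weight
            take = min(share, s.demand_mbps - alloc[s.name])
            alloc[s.name] += take
            handed_out += take
            if alloc[s.name] < s.demand_mbps - 1e-9:
                still_active.append(s)
        # Capacity a capped slice could not use is redistributed next pass.
        remaining -= handed_out
        active = still_active
    return alloc
```

For a hypothetical 1,000 Mbps link shared by a massive IoT slice (50 guaranteed, 50 demanded, weight 1), a low-latency slice (200, 300, weight 2) and a broadband slice (100, 900, weight 1), the result is roughly 50, 300 and 650 Mbps: the low-latency slice is capped at its demand and its unused share flows to the broadband slice.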

The other projects/working groups are the following:

- Edge Computing: This group is addressing system integration requirements with innovative, cost-effective and efficient end-to-end solutions that serve rural and urban regions in optimal and profitable ways.

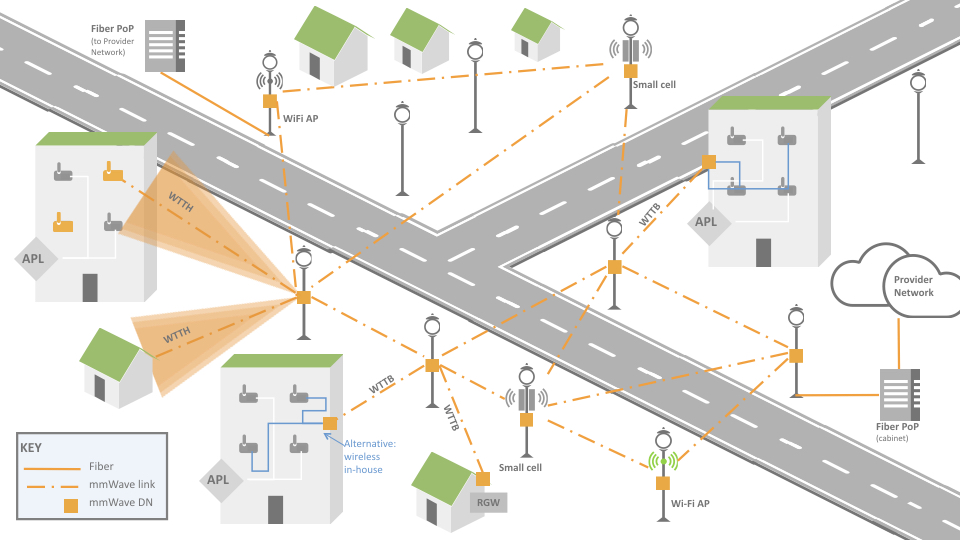

- mmWave Networks: This group is pioneering a 60 GHz wireless networking system to deliver gigabits of capacity in dense urban environments more quickly, easily and at lower cost than deploying fiber. A contribution on use cases for mmW backhaul was made to the IEEE 802.11ay task group this year.

Above illustration courtesy of TIP mmW Networks Group

- Open Optical Packet Transport: This project group will define Dense Wavelength Division Multiplexing (DWDM) open packet transport architecture that triggers new innovation and avoids implementation lock-ins. Open DWDM systems include open line system & control, transponder & network management and packet-switch and router technologies.

- The Working Group is focused on enabling carriers to more efficiently deliver new services and applications by using mobile edge computing (MEC) to turn the RAN network edge (mobile, fixed, licensed and unlicensed spectrum) into an open media and service hub.

- The project is pioneering a virtualized RAN (vRAN) solution consisting of low-cost remote radio units that can be managed and dynamically reconfigured by centralized infrastructure over non-ideal transport.

- This project group will develop an open RAN architecture by defining open interfaces between internal components and by focusing lab activity with various companies on multi-vendor interoperability. The goal is to broaden the mobile ecosystem of related technology companies to drive a faster pace of innovation.

A complete description, with pointers/hyperlinks to the respective project/work group charters, is in the TIP Company Member Application.

TEACs – Innovation Centers for TIP:

Also of note was the announcement of several new TEACs – TIP Ecosystem Acceleration Centers, where start-ups and investors can work together with incumbent network operators to progress their respective agendas for telecom infrastructure.

The TIP website comments on the mission of the TEACs:

“By bringing together the key actors – established operators, cutting-edge startups, and global & local investors – TEACs establish the necessary foundation to foster collaboration, accelerate trials, and bring deployable infrastructure solutions to the telecom industry.”

TEACs are located in London (BT), Paris (Orange), and Seoul (SK Telecom).

TIP Community Labs:

TIP Community Labs are physical spaces that enable collaboration between member companies in a TIP project group to develop telecom infrastructure solutions. While the labs are dedicated to TIP projects and host TIP project teams, the space and basic equipment are sponsored by the individual TIP member companies hosting them. The labs are located in Seoul, South Korea (sponsored by SK Telecom); Bonn, Germany (sponsored by Deutsche Telekom); and Menlo Park, California, USA (sponsored by Facebook). A lab in Rio de Janeiro, Brazil, to be sponsored by TIM Brasil, is coming soon. At this summit, Cable Labs announced it will soon open a TIP Community Lab in Louisville, CO.

…………………………………………………………………………………………………………………………..

Selected Quotes:

AT&T’s Tom Anschutz (a very respected colleague) said during his November 9th – 1pm keynote presentation:

“Network functions need to be disaggregated and ‘cloudified.’ We need to decompose monolithic, vertically integrated systems into building blocks; create abstraction layers that hide complexity. Design code and hardware as independent modules that don’t bring down the entire IT system/telecom network if they fail.”

Other noteworthy quotes:

“We’re going to build these use-case demonstrations,” said Mansoor Hanif, director of converged networks and innovation at BT. “If you’re going to do something as difficult and complex as network slicing, you might as well do it right.”

“This is the opening of a system that runs radio as a software on top of general purpose processes and interworks with independent radio,” said Santiago Tenorio, head of networks at Vodafone Group. The project will work to reduce the costs associated with building mobile networks and make it easier for smaller vendors to enter the market. “By opening the system, will we get a lower cost base? Definitely yes, absolutely yes,” Tenorio added.

“Opening up closed, black-box systems enables innovation at every level, so that customers can meet the challenges facing their networks faster and more efficiently,” said Josh Leslie, CEO of Cumulus Networks. “We’re excited to work with the TIP community to bring open systems to networks beyond the data center.” [See reference press release from Cumulus below].

“Open approaches are key to achieving TIP’s mission of disaggregating the traditional network deployment approach,” said Hans-Juergen Schmidtke, Co-Chair of the TIP Open Optical Packet Transport project group. “Our collaboration with Cumulus Networks to enable Cumulus Linux on Voyager (open packet DWDM architecture framework and white box transponder design) is an important contribution that will help accelerate the ecosystem’s adoption of Voyager.”

……………………………………………………………………………………………………………………………

Closing Comments: Request for Reader Inputs!

- What’s really interesting is that there are no U.S. telco members of TIP. Bell Canada is the only North American telecom carrier among its 500 members. Equinix and Cable Labs are the only quasi-network operator members in the U.S.

- Rather than write a voluminous report which few would read, we invite readers to contact the author or post a comment on areas of interest after reviewing the 2017 TIP Summit agenda.

References:

https://www.devex.com/news/telecom-industry-tries-new-tactics-to-connect-the-unconnected-91492

AT&T’s Perspective on Edge Computing from Fog World Congress

Introduction:

In her October 31st keynote at the Fog World Congress, Alicia Abella, PhD and Vice President – Advanced Technology Realization at AT&T, discussed the implications of edge computing (EC) for network service providers, emphasizing that it will make the business case for 5G realizable when low latency is essential for real time applications (see illustration below).

The important trends and key drivers for edge computing were described along with AT&T’s perspective of its “open network” edge computing architecture emphasizing open source software modules.

Author’s Note: Ms. Abella did not distinguish between edge and fog computing nor did she even mention the latter term during her talk. We tried to address definitions and fog network architecture in this post. An earlier blog post quoted AT&T as being “all in” for edge computing to address low latency next generation applications.

………………………………………………………………………………………………………………..

AT&T Presentation Highlights:

- Ms. Abella defined EC as the placement of processing and storage resources at the perimeter of a service provider’s network in order to deliver low latency applications to customers. That’s consistent with the accepted definition.

“Edge compute is the next step in getting more out of our network, and we are busy putting together an edge computing (network) architecture,” she said.

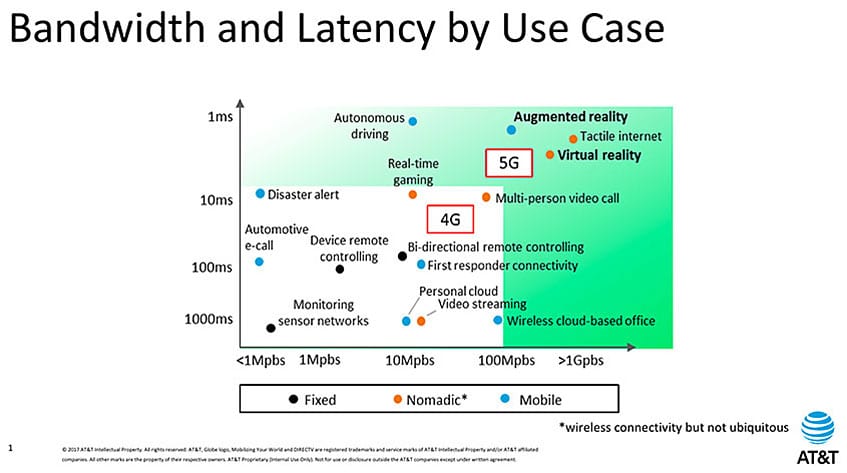

- “5G-like” applications will be the anchor tenant for network providers’ EC strategies. Augmented reality/virtual reality, multi-person real-time video conferencing, and autonomous vehicles were a few of the applications cited in the illustration above.

Above illustration courtesy of AT&T.

“Size, location, configuration of EC resources will vary, depending on capacity demand and use cases,” said Ms. Abella.

…………………………………………………………………………………………………………..

- Benefits of EC to network service providers include:

- Reduce backhaul traffic

- Maintain quality of experience for customers

- Reduce cost by decomposing and disaggregating access functions

- Optimize current central office infrastructure

- Improve reliability of the network by distributing content between the edge and centralized data centers

- Deliver innovative services not possible without edge compute, e.g., Industrial IoT, autonomous vehicles, smart cities, etc.
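To make the first benefit (reduced backhaul traffic) concrete, the sketch below estimates how much backhaul bandwidth an edge node saves when it processes raw streams locally and forwards only a reduced result upstream. The helper names, the camera count and the bitrates are hypothetical values chosen for illustration; they are not AT&T figures.

```python
def backhaul_mbps(num_sources: int, raw_mbps: float, upstream_mbps: float,
                  process_at_edge: bool) -> float:
    """Aggregate backhaul load (Mbps) toward the central data center.

    Without edge compute every raw stream is hauled back; with edge compute
    only the reduced output (e.g. event metadata) is sent upstream.
    """
    per_source = upstream_mbps if process_at_edge else raw_mbps
    return num_sources * per_source


def backhaul_savings(num_sources: int, raw_mbps: float, upstream_mbps: float) -> float:
    """Fraction of backhaul traffic removed by processing at the edge."""
    central = backhaul_mbps(num_sources, raw_mbps, upstream_mbps, False)
    edge = backhaul_mbps(num_sources, raw_mbps, upstream_mbps, True)
    return 1.0 - edge / central


if __name__ == "__main__":
    # Hypothetical: 200 surveillance cameras streaming 4 Mbps each; the edge
    # node runs video analytics and forwards ~0.05 Mbps of metadata per camera.
    cams, raw, meta = 200, 4.0, 0.05
    print("backhaul without EC:", backhaul_mbps(cams, raw, meta, False), "Mbps")
    print("backhaul with EC:   ", backhaul_mbps(cams, raw, meta, True), "Mbps")
    print(f"savings: {backhaul_savings(cams, raw, meta):.2%}")
```

The savings depend entirely on the ratio of upstream output to raw input, which is why analytics workloads that reduce video to events are such natural edge candidates.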

“In order to achieve some of the latency requirements of these [5G applications?] services a service provider needs to place IT resources at the edge of the network. Especially, when looking at autonomous vehicles where you have mission critical safety requirements. When we think about the edge, we’re looking at being able to serve these low latency requirements for those [real time] applications.”
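The latency argument rests on simple physics: light in optical fiber travels at roughly two-thirds of its vacuum speed, about 5 microseconds per kilometer. The sketch below assumes a refractive index of 1.47 and ignores queuing, processing and radio-access delay, so it gives a lower bound; it shows how much of a hypothetical 10 ms budget is consumed by distance alone.

```python
# Speed of light in vacuum and a typical fiber refractive index (assumed ~1.47).
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47


def fiber_rtt_ms(distance_km: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Round-trip propagation delay over a fiber path, in milliseconds.

    Counts only time-of-flight in glass: no queuing, processing,
    radio-access delay or routing detours, so real latency is higher.
    """
    one_way_s = distance_km * n / SPEED_OF_LIGHT_KM_PER_S
    return 2.0 * one_way_s * 1000.0


def remaining_budget_ms(budget_ms: float, distance_km: float) -> float:
    """Latency budget left for computation after fiber propagation."""
    return budget_ms - fiber_rtt_ms(distance_km)


if __name__ == "__main__":
    # Hypothetical 10 ms end-to-end budget for a real-time control loop.
    for label, km in [("metro edge site", 10), ("regional data center", 300),
                      ("distant cloud region", 1500)]:
        print(f"{label:>22}: RTT {fiber_rtt_ms(km):6.2f} ms, "
              f"left for compute {remaining_budget_ms(10.0, km):6.2f} ms")
```

A negative remainder means no amount of server horsepower can meet the budget from that distance, which is the core reason for placing IT resources at the edge.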

- AT&T has “opened our network” to enable new services and reduce operational costs. The key attributes are the following:

- Modular architecture

- Robust network APIs

- Policy management

- Shared infrastructure for simplification and scaling

- Network Automation platform achieved using INDIGO on top of ONAP

- AT&T will offer increased network value and adaptability as traffic volumes change:

- Cost/performance leadership

- Improved speed to innovation

- Industry leading security, performance, reliability

“We are busy thinking about and putting together what that edge compute architecture would look like. It’s being driven by the need for low latency.”

In terms of where, physically, edge computing and storage is located:

“It depends on the use case. We have to be flexible when defining this edge compute architecture. There’s a lot of variables and a lot of constraints. We’re actually looking at optimization methods. We want to deploy edge compute nodes in mobile data centers, in buildings, at customers’ locations and in our central offices. Where it will be depends on where there is demand, where we have spectrum, we are developing methods for optimizing the locations. We want to be able to place those nodes in a place that will minimize cost to us (AT&T), while maintaining quality of experience. Size, location and configuration is going to depend on capacity demand and the use cases,” Ms. Abella said.
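AT&T did not describe its optimization methods. As one illustration of the problem Ms. Abella outlined (minimize cost while every demand cluster still gets acceptable latency), the sketch below uses a standard greedy heuristic for weighted set cover. The site names, costs, latencies and demand figures are all hypothetical, and this is not presented as AT&T’s algorithm.

```python
from typing import Dict, List, Optional, Set


def greedy_placement(site_cost: Dict[str, float],
                     latency_ms: Dict[str, Dict[str, float]],
                     demand: Dict[str, float],
                     max_latency_ms: float) -> List[str]:
    """Pick edge sites so every demand cluster is served within max_latency_ms.

    Greedy weighted set cover: repeatedly choose the site with the lowest
    cost per unit of not-yet-covered demand it can serve.
    """
    # Demand clusters each candidate site can serve within the latency budget.
    coverage: Dict[str, Set[str]] = {
        site: {d for d, lat in latency_ms.get(site, {}).items()
               if d in demand and lat <= max_latency_ms}
        for site in site_cost
    }
    uncovered = set(demand)
    chosen: List[str] = []
    while uncovered:
        best_site: Optional[str] = None
        best_ratio = float("inf")
        for site, served in coverage.items():
            if site in chosen:
                continue
            gain = sum(demand[d] for d in served & uncovered)
            if gain > 0 and site_cost[site] / gain < best_ratio:
                best_site, best_ratio = site, site_cost[site] / gain
        if best_site is None:
            raise ValueError(f"No candidate site can serve: {sorted(uncovered)}")
        chosen.append(best_site)
        uncovered -= coverage[best_site]
    return chosen


if __name__ == "__main__":
    # Hypothetical candidate sites (central office, customer premises, mobile DC).
    costs = {"central_office_A": 10.0, "customer_premises_B": 4.0, "mobile_dc_C": 7.0}
    lat = {
        "central_office_A": {"downtown": 3.0, "campus": 6.0, "suburb": 4.0},
        "customer_premises_B": {"campus": 1.0, "downtown": 12.0},
        "mobile_dc_C": {"suburb": 2.0, "downtown": 9.0},
    }
    load = {"downtown": 50.0, "campus": 20.0, "suburb": 30.0}
    print(greedy_placement(costs, lat, load, max_latency_ms=5.0))
```

Greedy set cover is fast and has a known approximation bound, but a production planner would more likely use integer programming and add capacity limits per site, which this sketch omits.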

- Optimization of EC processing to meet latency constraints may require GPUs and FPGAs in addition to conventional microprocessors. One such application cited was running video analytics for surveillance cameras.

- Real-time control of autonomous vehicles would require a significant investment in roadside IT infrastructure but has an uncertain return on investment. AT&T now has 12 million smart cars on its network, a number growing by a million per quarter.

- Edge sites need to support different types of connectivity to the core network and use “SDN” within the site.

- Device empowerment at the edge must consider that while mobile devices (e.g. smart phones and tablets) are capable of executing complex tasks, they have been held back by limited battery life and low-power requirements.

- Device complexity means higher cost to manufacturers and consumers.

- The future of EC may include “crowd sourcing computing power in your pocket.” The concept here is to distribute the computation needed over many people’s mobile devices and compensate them via Bitcoin, another cryptocurrency or asset class. Blockchain may play a role here.
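The battery-life point above is usually framed as a computation offloading decision: run a task on the device, or ship its input to an edge node. The sketch below uses a common simplified model from the offloading literature (local energy = compute power × local run time; offload energy = transmit power × upload time). All class names, parameters and example values are hypothetical, and the model ignores idle power while waiting, result download and edge queuing.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float       # CPU cycles the task needs
    input_bits: float   # data uploaded if the task is offloaded
    deadline_s: float   # latency budget


@dataclass
class Device:
    cpu_hz: float           # device CPU speed
    compute_power_w: float  # power drawn while computing locally
    tx_power_w: float       # power drawn while transmitting
    uplink_bps: float       # uplink rate to the edge node


def should_offload(task: Task, dev: Device, edge_cpu_hz: float, rtt_s: float) -> bool:
    """Return True if running the task at the edge is the better choice.

    Offload only if the edge path meets the deadline; if both paths meet it,
    pick the one that costs the device less battery energy.
    """
    t_local = task.cycles / dev.cpu_hz
    e_local = dev.compute_power_w * t_local
    t_upload = task.input_bits / dev.uplink_bps
    t_edge = t_upload + task.cycles / edge_cpu_hz + rtt_s
    e_edge = dev.tx_power_w * t_upload
    if t_edge > task.deadline_s:
        return False          # edge path too slow: stay local
    if t_local > task.deadline_s:
        return True           # only the edge path meets the deadline
    return e_edge < e_local   # both feasible: save battery


if __name__ == "__main__":
    phone = Device(cpu_hz=2e9, compute_power_w=2.0, tx_power_w=1.0, uplink_bps=100e6)
    heavy = Task(cycles=5e8, input_bits=4e6, deadline_s=0.1)  # e.g. frame analytics
    light = Task(cycles=1e6, input_bits=8e6, deadline_s=0.1)  # tiny compute, big upload
    print("offload heavy task:", should_offload(heavy, phone, edge_cpu_hz=2e10, rtt_s=0.005))
    print("offload light task:", should_offload(light, phone, edge_cpu_hz=2e10, rtt_s=0.005))
```

The heavy task is offloaded because the phone alone cannot meet the deadline; the light task stays local because uploading its input would cost more energy than computing it.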

Fog Computing Definition, Architecture, Market and Use Cases

Introduction to Fog Computing, Architecture and Networks:

Fog computing is an extension of cloud computing that deploys data storage, computing and communications resources, as well as control, management and data analytics, closer to the endpoints. It is especially important along the Internet of Things (IoT) continuum, where low latency and low cost are needed.

Fog computing architecture is the arrangement of physical and logical network elements, hardware, and software to implement a useful IoT network. Key architectural decisions involve the physical and geographical positioning of fog nodes; their arrangement in a hierarchy; the numbers, types, topology, protocols, and data bandwidth capacities of the links between fog nodes, things, and the cloud; the hardware and software design of individual fog nodes; and how a complete IoT network is orchestrated and managed. In order to optimize the architecture of a fog network, one must first understand the critical requirements of the general use cases that will take advantage of fog and the specific software application(s) that will run on them. Then these requirements must be mapped onto a partitioned network of appropriately designed fog nodes. Certain clusters of requirements are difficult to implement on networks built with heavy reliance on the cloud (intelligence at the top) or on intelligent things (intelligence at the bottom), and they are particularly influential in the decision to move to fog-based architectures.

From a systematic perspective, fog networks provide a distributed computing system with a hierarchical topology. Fog networks aim at meeting stringent latency requirements, reducing power consumption of end devices, providing real-time data processing and control with localized computing resources, and decreasing the burden of backhaul traffic to centralized data centers. And of course, excellent network security, reliability and availability must be inherent in fog networks.
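One way to picture "mapping requirements onto a partitioned network" is a placement rule over the fog hierarchy: run each workload at the cheapest tier that still meets its latency requirement, since centralized resources are typically cheaper per unit of compute. The sketch below illustrates that rule under stated assumptions; the tier names, round-trip times and relative costs are hypothetical, and processing time is assumed identical at every tier for simplicity.

```python
from typing import List, NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    rtt_ms: float          # typical round trip from the endpoint (hypothetical)
    cost_per_unit: float   # relative compute cost (hypothetical)


# Ordered from the endpoints ("things") toward the cloud.
HIERARCHY: List[Tier] = [
    Tier("edge fog node", 2.0, 5.0),
    Tier("neighborhood fog node", 10.0, 2.0),
    Tier("regional fog node", 30.0, 1.5),
    Tier("cloud data center", 80.0, 1.0),
]


def place(latency_budget_ms: float, processing_ms: float,
          tiers: List[Tier] = HIERARCHY) -> Optional[Tier]:
    """Cheapest tier whose round trip plus processing fits the budget, or None."""
    feasible = [t for t in tiers if t.rtt_ms + processing_ms <= latency_budget_ms]
    return min(feasible, key=lambda t: t.cost_per_unit) if feasible else None


if __name__ == "__main__":
    # Hypothetical workloads: (description, latency budget ms, processing ms).
    for app, budget, proc in [("monthly energy report", 1000.0, 50.0),
                              ("video analytics alert", 15.0, 3.0),
                              ("motor control loop", 1.0, 0.5)]:
        tier = place(budget, proc)
        print(f"{app}: {tier.name if tier else 'must run on the device itself'}")
```

A workload that fits no tier (the control loop here) has to stay on the "thing" itself, which is the bottom of the hierarchy the article describes.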

Fog computing network architecture

Illustration courtesy of August 2017 IEEE Communications Magazine article: “Architectural Imperatives for Fog Computing: Use Cases, Requirements, and Architectural Techniques for Fog-Enabled IoT Networks” (IEEE Xplore or IEEE Communications Magazine subscription required to view online)

………………………………………………………………………………………………………………………..

Fog Computing Market:

The fog computing market opportunity will exceed $18 billion worldwide by the year 2022, according to a new report by 451 Research. Commissioned by the OpenFog Consortium, the report, titled Size and Impact of Fog Computing Market, projects that the largest markets for fog computing will be, in order, energy/utilities, transportation, healthcare and the industrial sectors.

“Through our extensive research, it’s clear that fog computing is on a growth trajectory to play a crucial role in IoT, 5G and other advanced distributed and connected systems,” said Christian Renaud, research director, Internet of Things, 451 Research, and lead author of the report. “It’s not only a technology path to ensure the optimal performance of the cloud-to-things continuum, but it’s also the fuel that will drive new business value.”

Key findings from the report were presented during an opening keynote on October 30th at the Fog World Congress conference. In addition to projecting an $18 billion fog market and identifying the top industry-specific market opportunities, the report also identified:

- Key market transitions fueling the growth include investments in energy infrastructure modernization, demographic shifts and regulatory mandates in transportation and healthcare.

- Hardware will have the largest percentage of overall fog revenue (51.6%), followed by fog applications (19.9%) and then services (15.7%). By 2022, spend will shift to apps and services, as fog functionality is incorporated into existing hardware.

- Cloud spend is expected to increase 147% to $6.4 billion by 2022.

“This is a seminal moment that not only validates the magnitude of fog, but also provides us with a first-row seat to the opportunities ahead,” said Helder Antunes, chairman of the OpenFog Consortium and Senior Director, Cisco. “Within the OpenFog community, we’ve understood the significance of fog—but with its growth rate of nearly 500 percent over the next five years—consider it a secret no more.”
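The figures above support a few quick derived numbers. The sketch below computes them; it is our own arithmetic, not output from the 451 Research report. Two assumptions are flagged in the comments: that "increase 147% to $6.4 billion" means the final value is 2.47 times the starting value, and that "growth rate of nearly 500 percent" can be read either as a 6x multiple (a 500% increase) or as a 5x multiple.

```python
def implied_base(final_value: float, pct_increase: float) -> float:
    """Starting value implied by 'increase pct_increase% to final_value'."""
    return final_value / (1.0 + pct_increase / 100.0)


def cagr(growth_multiple: float, years: float) -> float:
    """Compound annual growth rate for a total growth multiple over `years`."""
    return growth_multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Revenue shares quoted in the report findings; the remainder is not
    # attributed to a category in the excerpt above.
    shares = {"hardware": 51.6, "fog applications": 19.9, "services": 15.7}
    remainder = 100.0 - sum(shares.values())
    print(f"share not attributed above: {remainder:.1f}%")

    # Assumption: cloud spend in 2022 = base * (1 + 1.47).
    print(f"implied starting cloud spend: ${implied_base(6.4, 147):.2f} billion")

    # Assumption: 'nearly 500 percent over five years' read two ways.
    print(f"CAGR if 6x over 5 years: {cagr(6, 5):.1%}")
    print(f"CAGR if 5x over 5 years: {cagr(5, 5):.1%}")
```

Either reading implies annual growth of roughly 38% to 43%, which helps put the "secret no more" remark in perspective.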

The fog market report includes the sizing and impact of fog in the following verticals: agriculture, datacenters, energy and utilities, health, industrial, military, retail, smart buildings, smart cities, smart homes, transportation, and wearables.

Fog computing is the system-level architecture that brings computing, storage, control, and networking functions closer to the data-producing sources along the cloud-to-thing continuum. Applicable across industry sectors, fog computing effectively addresses issues related to security, cognition, agility, latency and efficiency.

Download the full report at www.openfogconsortium.org/growth.

………………………………………………………………………………………………………………

Fog Use Cases:

According to the OpenFog Consortium, fog architectures offer several unique advantages over other approaches, which include, but are not limited to:

Security: additional security to ensure safe, trusted transactions

Cognition: awareness of client-centric objectives to enable autonomy

Agility: rapid innovation and affordable scaling under a common infrastructure

Latency: real-time processing and cyber-physical system control

Efficiency: dynamic pooling of local unused resources from participating end-user devices
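The efficiency point (pooling unused resources from participating end-user devices) can be illustrated with a simple scheduler. The sketch below assigns tasks largest-first, each to the device with the most remaining spare capacity, and reports tasks that fit nowhere. The device names, capacities and task sizes are hypothetical, capacity is an abstract unit, and the OpenFog Consortium does not prescribe this particular heuristic.

```python
import heapq
from typing import Dict, List, Tuple


def pool_tasks(spare_capacity: Dict[str, float],
               tasks: Dict[str, float]) -> Dict[str, List[str]]:
    """Assign tasks to participating devices using only their spare capacity.

    Largest task first, each placed on the device with the most remaining
    capacity; tasks that fit on no device are listed under "unassigned".
    """
    # heapq is a min-heap, so store negated capacity to pop the largest first.
    heap: List[Tuple[float, str]] = [(-cap, dev) for dev, cap in spare_capacity.items()]
    heapq.heapify(heap)
    plan: Dict[str, List[str]] = {dev: [] for dev in spare_capacity}
    plan["unassigned"] = []
    for task, need in sorted(tasks.items(), key=lambda kv: kv[1], reverse=True):
        if not heap or -heap[0][0] < need:
            plan["unassigned"].append(task)
            continue
        neg_cap, dev = heapq.heappop(heap)
        plan[dev].append(task)
        heapq.heappush(heap, (neg_cap + need, dev))  # capacity shrinks by `need`
    return plan


if __name__ == "__main__":
    # Hypothetical idle capacity reported by nearby devices.
    devices = {"phone_a": 4.0, "tablet_b": 6.0, "phone_c": 2.0}
    work = {"t1": 5.0, "t2": 3.0, "t3": 2.0, "t4": 4.0}
    print(pool_tasks(devices, work))
```

Because the pool here offers 12 units against 14 units of work, at least one task is left unassigned; in a fog deployment that task would fall back to a fog node or the cloud.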

New use cases created by the OpenFog Consortium were also released that showcase how fog works in industry. These use cases provide fog technologists with detailed views of how fog is deployed in autonomous driving, energy, healthcare and smart buildings.

The August 2017 IEEE Communications Magazine article lists various IoT vertical markets and example fog use cases for each one.

It also delineates several application examples and the allowable latency for each one.

The relationship between SK Telecom and MobiledgeX reflects an aligned vision for the future of mobile operators as key players in future mobile application development, performance, security, and reliability. This vision is shared by leading cloud providers and device makers working with MobiledgeX to seamlessly pair the power and distribution of mobile operator infrastructure with the convenience and depth of developer tooling of hyperscale public cloud and the scale, mobility and distribution of billions of end user devices.
