Granite Telecommunications expands its service offerings with Juniper Networks

Juniper Networks today announced that its customer and partner, Granite Telecommunications, a $1.8 billion provider of communications and technology solutions, has expanded its service offerings to include Juniper Networks’ full stack of campus and branch services, including Wired Access, Wireless Access and SD-WAN, all driven by Mist AI™. The move will strengthen Granite’s ability to support customers in specific verticals such as healthcare, retail, education, manufacturing, hospitality and financial services.

Granite has worked closely with Juniper for several years, and with this expanded AI-driven enterprise portfolio it now offers Juniper’s full suite of campus and branch networking solutions. By using Mist AI and a single cloud across the wired, wireless and SD-WAN domains, Granite saves time and money through client-to-cloud automation and assurance, while accelerating deployments with Zero Touch Provisioning and automated configuration. Granite also delivers more value to its customers through a broader service portfolio that includes new, highly differentiated services.

“Granite stands as Juniper’s largest AI-Driven SD-WAN partner in Managed Services within the Americas, underscoring the strength of our relationship and confidence in Juniper’s cutting-edge networking technology,” said Rob Hale, President and CEO at Granite. “As we expand our partnership, we are poised to elevate the customer experience to new heights by offering a full suite of Juniper solutions, imbued with the defining qualities of reliability, performance and security that characterize Juniper.”

Granite has been expanding its nationwide support to address the changing and growing needs of its customers. The company is committed to delivering specialized services for the unique requirements of its customers’ verticals. The addition of Juniper’s software-defined branch and wireless services is expected to be a significant benefit to many of its customer sectors. These services are designed to improve the performance and security of networks in various industries and make it easier for businesses to manage their network infrastructure.

“We are very excited to take our relationship with Granite Telecommunications to the next level,” said Sujai Hajela, Executive Vice President, AI-Driven Enterprise at Juniper Networks. “They have proven to be an exceptional partner and leader in the communications industry, who is especially adept at leveraging AI and the cloud to deliver high value managed services to their customers. With the full AI-driven enterprise portfolio, Granite can truly differentiate from their competition with exceptional client-to-cloud user experiences.”

With this expansion, Granite continues to demonstrate its commitment to providing customers with the best possible network experience. The addition of Juniper’s full-stack solutions will enable Granite to enhance its capabilities and better serve its customers, while also providing the company with a competitive edge in the market.

About Juniper Networks:

Juniper Networks is dedicated to dramatically simplifying network operations and driving superior experiences for end users. Our solutions deliver industry-leading insight, automation, security and AI to drive real business results. We believe that powering connections will bring us closer together while empowering us all to solve the world’s greatest challenges of well-being, sustainability and equality. Additional information can be found at Juniper Networks (www.juniper.net) or connect with Juniper on Twitter, LinkedIn and Facebook.

TPG, Ericsson launch AI-powered analytics, troubleshooting service for 4G/5G Mobile, FWA, and IoT subscribers

Australian telco TPG Telecom and Ericsson today announced a new multi-year agreement to deliver an Australian-first cloud-native and AI-powered analytics tool to pinpoint and improve mobile network performance for customers. Based on Ericsson Expert Analytics and EXFO Adaptive Service Assurance, the solution gives TPG Telecom’s Technology, Network and Care teams an in-depth, end-to-end understanding of each subscriber’s experience. Through the new agreement, TPG Telecom will gain insights into its 4G and 5G Mobile, Fixed Wireless Access and IoT subscribers, using smart data collection with embedded intelligence to predict, prioritise and resolve performance issues in real time as they arise.

These insights will enable TPG Telecom to react more quickly to network issues, improve performance and reduce the need for infrastructure-based diagnoses, allowing the telco to enhance its service experience for customers.

The solution integrates Ericsson Expert Analytics, EXFO Adaptive Service Assurance and Ericsson software probes, provided as part of Ericsson’s dual-mode 5G Core, to deliver end-to-end network visibility at a lower total cost.

TPG Telecom is the first communication service provider (CSP) in Australia, and one of the first globally, to deploy Ericsson Expert Analytics in a commercial network using cloud-native technologies. Because the software is cloud native, its built-in scalability, agility and resilience mean it is designed to flexibly handle TPG Telecom’s requirements as its network and use cases evolve, and to adapt to unexpected challenges.

TPG Telecom General Manager Cloud/Infrastructure NW Services, Chris Tsigros, said: “TPG Telecom is committed to investing in cutting edge technology designed to provide superior network experience to every single one of our customers. The analytics and troubleshooting solution we’re implementing with Ericsson will help ensure we deliver a great experience for our customers. This new technology will change the way in which the TPG Telecom customer care team interacts with our customers, leading to greater effectiveness and increased customer satisfaction. It’s just another way we’re putting our customers first.”

Emilio Romeo, Head of Ericsson, Australia and New Zealand, said: “Embarking on this multi-year deployment of advanced analytics and troubleshooting capabilities with TPG Telecom further demonstrates our commitment to bringing the best mobile telecommunications experience to all Australians. It is the latest in a long history of working side by side with TPG Telecom to bring groundbreaking technology to Australia and a new level of service experience to the Australian people. With the Ericsson Expert Analytics solution implementation, and the real-time access to the data from the dual-mode 5G Core thanks to its built-in software probes, TPG Telecom can gain greater network visibility at a lower cost, passing on the benefits to its customers as they enjoy its services across the country.”

The initial deployment phase focused on gaining deep insights and troubleshooting capabilities through the software probes built into Ericsson’s cloud-native dual-mode 5G Core. The solution delivered to TPG combines probing with event-based monitoring to enable rapid and effective issue resolution.

One of the standout features of this deployed solution is its capacity to provide comprehensive troubleshooting across the entire network, all within a unified application. TPG benefits from a range of advanced functionalities, including On-Demand Troubleshooting and robust filtering capabilities, significantly expediting the identification and resolution of network challenges.

With this implementation, TPG Telecom gains the ability to trace and monitor subscriber sessions handled by the core network, which forms the foundation of TPG Telecom’s mobile network. The solution currently monitors approximately 5 million subscribers, and its coverage continues to expand.

The full solution will continue to be rolled out in phases of further enhancements, and will give TPG Telecom the ability to automatically detect issues from captured network and subscriber insights. TPG Telecom will also benefit from AI-powered recommendations for correction of any network or customer issues found.

Ericsson Expert Analytics is an open system designed for ease of integration in multi-vendor hybrid environments. The software solution is powered by anomaly detection techniques based on machine learning and artificial intelligence, transforming data at scale into actionable insights for better business outcomes. It is designed and used to analyze the real-time network data of more than 250 million subscribers of mobile telecommunications operators all over the world.

The solution follows the 2021 completion of the virtualisation of TPG Telecom’s core network and a new partnership to deploy Ericsson’s dual-mode 5G Core for standalone 5G networks.

About TPG TELECOM:

TPG Telecom Limited, formerly named Vodafone Hutchison Australia Limited, was listed on the Australian Securities Exchange on 30 June 2020. On 13 July 2020, this newly listed company merged with TPG Corporation Limited, formerly named TPG Telecom, to bring together the resources of two of Australia’s largest telecommunications companies, creating the leading challenger full-service telecommunications provider. TPG Telecom is home to some of Australia’s most-loved brands including Vodafone, TPG, iiNet, AAPT, Internode, Lebara and felix. https://www.tpgtelecom.com.au/

References:

https://www.ericsson.com/en/press-releases/7/2023/tpg-telecom-and-ericsson-launch-australian-first-analytics-and-troubleshooting-solution-to-boost-network-performance-for-customers

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Everyone agrees that Generative AI has great promise and potential. Martin Casado of Andreessen Horowitz recently wrote in the Wall Street Journal that the technology has “finally become transformative:”

“Generative AI can bring real economic benefits to large industries with established and expensive workloads. Large language models could save costs by performing tasks such as summarizing discovery documents without replacing attorneys, to take one example. And there are plenty of similar jobs spread across fields like medicine, computer programming, design and entertainment….. This all means opportunity for the new class of generative AI startups to evolve along with users, while incumbents focus on applying the technology to their existing cash-cow business lines.”

Generative AI is starting a new investment wave among cloud service providers, raising questions about whether Big Tech’s spending cutbacks and layoffs will prove short-lived. Pressed to say when they would see a revenue lift from AI, the big U.S. cloud companies (Microsoft, Alphabet/Google, Meta/FB and Amazon) all pointed to existing services that rely heavily on past investments. These range from AWS’s machine learning services for cloud customers to the AI-enhanced tools that Google and Meta offer their advertising customers.

Microsoft offered only a cautious prediction of when AI would lift revenue. Amy Hood, chief financial officer, told investors during an earnings call last week that the revenue impact would be “gradual,” as the features launch and start to catch on with customers. That caution fell short of the high expectations going into the company’s earnings, and Microsoft’s stock (ticker: MSFT) lost 7% over the following week.

When it comes to the newer generative AI wave, predictions were few and far between. Amazon CEO Andy Jassy said on Thursday that the technology was in its “very early stages” and that the industry was only “a few steps into a marathon.” Although many customers of Amazon’s cloud arm, AWS, see the technology as transformative, Jassy noted that “most companies are still figuring out how they want to approach it, they are figuring out how to train models.” He insisted that every part of Amazon’s business was working on generative AI initiatives and that the technology was “going to be at the heart of what we do.”

There are a number of large language models that power generative AI, and many of the AI companies that make them have forged partnerships with big cloud service providers. As business technology leaders make their picks among them, they are weighing the risks and benefits of using one cloud provider’s AI ecosystem. They say it is an important decision that could have long-term consequences, including how much they spend and whether they are willing to sink deeper into one cloud provider’s set of software, tools, and services.

To date, large language model makers such as OpenAI, Anthropic, and Cohere have led the charge in developing proprietary models that companies use to boost efficiency in areas like accounting and writing code, or to add features such as custom chatbots to their own products. Partnerships between model makers and major cloud companies include OpenAI and Microsoft Azure, Anthropic and Cohere with Google Cloud, and the machine-learning startup Hugging Face with Amazon Web Services. Databricks, a data storage and management company, agreed to buy the generative AI startup MosaicML in June.

If a company chooses a single AI ecosystem, it could risk “vendor lock-in” within that provider’s platform and set of services, said Ram Chakravarti, chief technology officer of Houston-based BMC Software. Lock-in is a recurring pattern in which a business’s IT systems, software and data all sit within one digital platform, and it could become more pronounced as companies look for help in using generative AI. Companies say the problem with vendor lock-in, especially among cloud providers, is that they have difficulty moving their data to other platforms, lose negotiating power with other vendors, and must rely on one provider to keep its services online and secure.

Cloud providers, partly in response to complaints of lock-in, now offer tools to help customers move data between their own and competitors’ platforms. Businesses have increasingly signed up with more than one cloud provider to reduce their reliance on any single vendor. That is the strategy companies could end up taking with generative AI, where by using a “multiple generative AI approach,” they can avoid getting too entrenched in a particular platform. To be sure, many chief information officers have said they willingly accept such risks for the convenience, and potentially lower cost, of working with a single technology vendor or cloud provider.

A significant challenge in incorporating generative AI is that the technology is changing so quickly, analysts have said, forcing CIOs to not only keep up with the pace of innovation, but also sift through potential data privacy and cybersecurity risks.

A company using its cloud provider’s premade tools and services, plus guardrails for protecting company data and reducing inaccurate outputs, can more quickly implement generative AI off-the-shelf, said Adnan Masood, chief AI architect at digital technology and IT services firm UST. “It has privacy, it has security, it has all the compliance elements in there. At that point, people don’t really have to worry so much about the logistics of things, but rather are focused on utilizing the model.”

For other companies, it is a conservative approach to use generative AI with a large cloud platform they already trust to hold sensitive company data, said Jon Turow, a partner at Madrona Venture Group. “It’s a very natural start to a conversation to say, ‘Hey, would you also like to apply AI inside my four walls?’”

End Quotes:

“Right now, the evidence is a little bit scarce about what the effect on revenue will be across the tech industry,” said James Tierney of AllianceBernstein.

Brent Thill, an analyst at Jefferies, summed up the mood among investors: “The hype is here, the revenue is not. Behind the scenes, the whole industry is scrambling to figure out the business model [for generative AI]: how are we going to price it? How are we going to sell it?”

………………………………………………………………………………………………………………

References:

https://www.ft.com/content/56706c31-e760-44e1-a507-2c8175a170e8

https://www.wsj.com/articles/companies-weigh-growing-power-of-cloud-providers-amid-ai-boom-478c454a

https://www.techtarget.com/searchenterpriseai/definition/generative-AI?Offer=abt_pubpro_AI-Insider

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Uncertainty around generative artificial intelligence (AI) is especially challenging for the telecommunications industry, which has historically been slow to adapt to change and now faces considerable pressure to adopt generative AI in its services and infrastructure. Indeed, a Deutsche Telekom executive stated in an IEEE Techblog post that AI poses massive challenges for the telecom industry.

Consulting firm Bain & Co. highlighted that inertia in a recent report titled “Telcos, Stop Debating Generative AI and Just Get Going.” Its three authors, all Bain partners, said network operators need to act fast to seize this opportunity. “Speedy action trumps perfect planning here,” Herbert Blum, Jeff Katzin and Velu Sinha wrote in the brief. “It’s more important for telcos to quickly launch an initial set of generative AI applications that fit the company’s strategy, and do so in a responsible way – or risk missing a window of opportunity in this fast-evolving sector.”

Generative AI use cases can be divided into phases based on ease of implementation, inherent risk, and value:

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Telcos can pursue generative AI applications across business functions, starting with knowledge management:

Separately, a McKinsey & Co. report argued that AI has brought business leaders’ priorities into focus. The firm pointed to organizations whose top executives champion AI initiatives, including funding those programs, in contrast to organizations that lack a clear directive on AI, where the result is wasted spending and stalled development. “Reaching this state of AI maturity is no easy task, but it is certainly within the reach of telcos,” the firm noted. “Indeed, with all the pressures they face, embracing large-scale deployment of AI and transitioning to being AI-native organizations could be key to driving growth and renewal. Telcos that are starting to recognize this is non-negotiable are scaling AI investments as the business impact generated by the technology materializes.”

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Ishwar Parulkar, chief technologist for the telco industry at AWS, highlighted several areas where generative AI should interest telecom operators. The first few were common ones tied to improving the customer experience, including building on machine learning (ML) to improve customer interactions and potentially reduce churn.

“We have worked with some leading customers and implemented this in production where they can take customer voice calls, translate that to text, do sentiment analysis on it … and then feed that into reducing customer churn,” Parulkar said. “That goes up another notch with generative AI, where you can have chat bots and more interactive types of interfaces for customers as well as for customer care agent systems in a call. So that just goes up another notch of generative AI.”

The next step is using generative AI to help operators bolster their business operations and systems. This is for things like revenue assurance and finding revenue leakage, items that Parulkar noted were in a “more established space in terms of what machine learning can do.”

However, Parulkar said the bigger opportunity is in helping operators better design and manage their networks. This area remains the least mature, but it is the one Parulkar is “most excited about.” It can start in the planning and installation phase, for example by helping technicians install physical equipment.

“In installation of network equipment today, you have technicians who go through manuals and have procedures to install routers and base stations and connect links and fibers,” Parulkar said. “That all can be now made interactive [using] chat bot, natural language kind of framework. You can have a lot of this documentation, training data that can train foundational models that can create that type of an interface, improves productivity, makes it easier to target specific problems very quickly in terms of what you want to deploy.”

This can also help with network configuration by using large datasets to help automatically generate configurations. This could include the ability to help configure routers, VPNs and MPLS circuits to support network performance.

The final area of support could be in the running of those networks once they are deployed. Parulkar cited functions like troubleshooting failures that can be supported by a generative AI model.

“There are recipes that operators go through to troubleshoot and triage failure,” Parulkar said. “A lot of times it’s trial-and-error method that can be significantly improved in a more interactive, natural language, prompt-based system that guides you through troubleshooting and operating the network.”

This model could be especially compelling for operators as they integrate more routers to support disaggregated 5G network models for mobile edge computing (MEC), private networks and the use of millimeter-wave (mmWave) spectrum bands.

Federal Communications Commission (FCC) Chairwoman Jessica Rosenworcel this week also hinted at the ability for AI to help manage spectrum resources.

“For decades we have licensed large slices of our airwaves and come up with unlicensed policies for joint use in others,” Rosenworcel said during a speech at this week’s FCC and National Science Foundation Joint Workshop. “But this scheme is not truly dynamic. And as demands on our airwaves grow – as we move from a world of mobile phones to billions of devices in the internet of things (IoT) – we can take newfound cognitive abilities and teach our wireless devices to manage transmissions on their own. Smarter radios using AI can work with each other without a central authority dictating the best use of spectrum in every environment. If that sounds far off, it’s not. Consider that a large wireless provider’s network can generate several million performance measurements every minute. And consider the insights that machine learning can provide to better understand network usage and support greater spectrum efficiency.”

While generative AI does have potential, Parulkar also left open the door for what he termed “traditional AI” and which he described as “supervised and unsupervised learning.”

“Those techniques still work for a lot of the parts in the network and we see a combination of these two,” Parulkar said. “For example, you might use anomaly detection for getting some insights into the things to look at and then followed by a generative AI system that will then give an output in a very interactive format and we see that in some of the use cases as well. I think this is a big area for telcos to explore and we’re having active conversations with multiple telcos and network vendors.”
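The two-stage pattern Parulkar describes (anomaly detection first, then a generative AI system that presents the findings interactively) can be sketched roughly as follows. This is a minimal illustration under loud assumptions: a simple rolling z-score test stands in for the "traditional AI" stage, the function names `detect_anomalies` and `build_triage_prompt`, the window size, the threshold and the latency readings are all hypothetical, and the generative stage is represented only by the prompt text that might be handed to a model, not by any real AWS or model API.

```python
from statistics import mean, stdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Return indices whose value sits more than `threshold` standard
    deviations away from the mean of the preceding `window` samples
    (a simple rolling z-score test standing in for "traditional AI")."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
        elif abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


def build_triage_prompt(kpi_name, samples, anomalies):
    """Turn the detector's findings into natural-language text that a
    (hypothetical) generative model could expand into guided troubleshooting."""
    lines = [f"KPI '{kpi_name}' has {len(anomalies)} anomalous sample(s):"]
    for i in anomalies:
        lines.append(f"- sample {i}: value {samples[i]}")
    lines.append("Suggest likely causes and the next troubleshooting steps.")
    return "\n".join(lines)


# Hypothetical per-minute latency readings (ms) with one obvious spike.
latency_ms = [20, 21, 19, 20, 22, 21, 20, 95, 21, 20]
flagged = detect_anomalies(latency_ms)
prompt = build_triage_prompt("latency_ms", latency_ms, flagged)
print(flagged)  # [7]
print(prompt)
```

A fixed threshold over a short trailing window is the simplest possible detector; a real deployment would likely learn baselines per KPI, cell and time of day before handing anything to a generative model.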

Parulkar’s comments come as AWS has been busy updating its generative AI platforms. One of the most recent moves was the launch of its $100 million Generative AI Innovation Center, which aims to guide businesses through developing, building and deploying generative AI tools.

“Generative AI is one of those technological shifts that we are in the early stages of that will impact all organizations across the globe in some form of fashion,” Sri Elaprolu, senior leader of generative AI at AWS, told SDxCentral. “We have the goal of helping as many customers as we can, and as we need to, in accelerating their journey with generative AI.”

References:

https://www.bain.com/insights/telcos-stop-debating-generative-ai-and-just-get-going/

Deutsche Telekom exec: AI poses massive challenges for telecom industry

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Forbes: Cloud is a huge challenge for enterprise networks; AI adds complexity

Qualcomm CEO: AI will become pervasive, at the edge, and run on Snapdragon SoC devices

Bloomberg: China Lures Billionaires Into Race to Catch U.S. in AI

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Introduction:

Despite mounting pressure on venture capital in a difficult economic environment, money is still flowing into generative Artificial Intelligence (AI) startups. Indeed, AI startups have emerged as a bright spot for VC investments this year amid a wider slowdown in funding caused by rising interest rates, a slowing economy and high inflation.

VCs have already poured $10.7 billion into Generative AI [1.] start-ups within the first three months of this year, a thirteen-fold increase from a year earlier, according to PitchBook, which tracks start-ups.

Note 1. Generative AI is a type of artificial intelligence that can create new content, such as text, synthetic data, images, and audio. The recent buzz around Generative AI has been driven by the simplicity of new user interfaces for creating high-quality content in a matter of seconds.

….………………………………………………………………………………….

Tech giants have poured effort and billions of dollars into what they say is a transformative technology, even amid rising concerns about A.I.’s role in spreading misinformation, killing jobs and one day matching human intelligence. What they don’t publicize is that the results (especially from ChatGPT) may be incorrect or inconclusive.

We take a close look at Generative AI Unicorns with an emphasis on OpenAI (the creator of ChatGPT) and the competition it will face from Google DeepMind.

Generative AI Unicorns and OpenAI:

AI startups make up half of all new unicorns (startups valued at more than $1B) in 2023, says CBInsights.

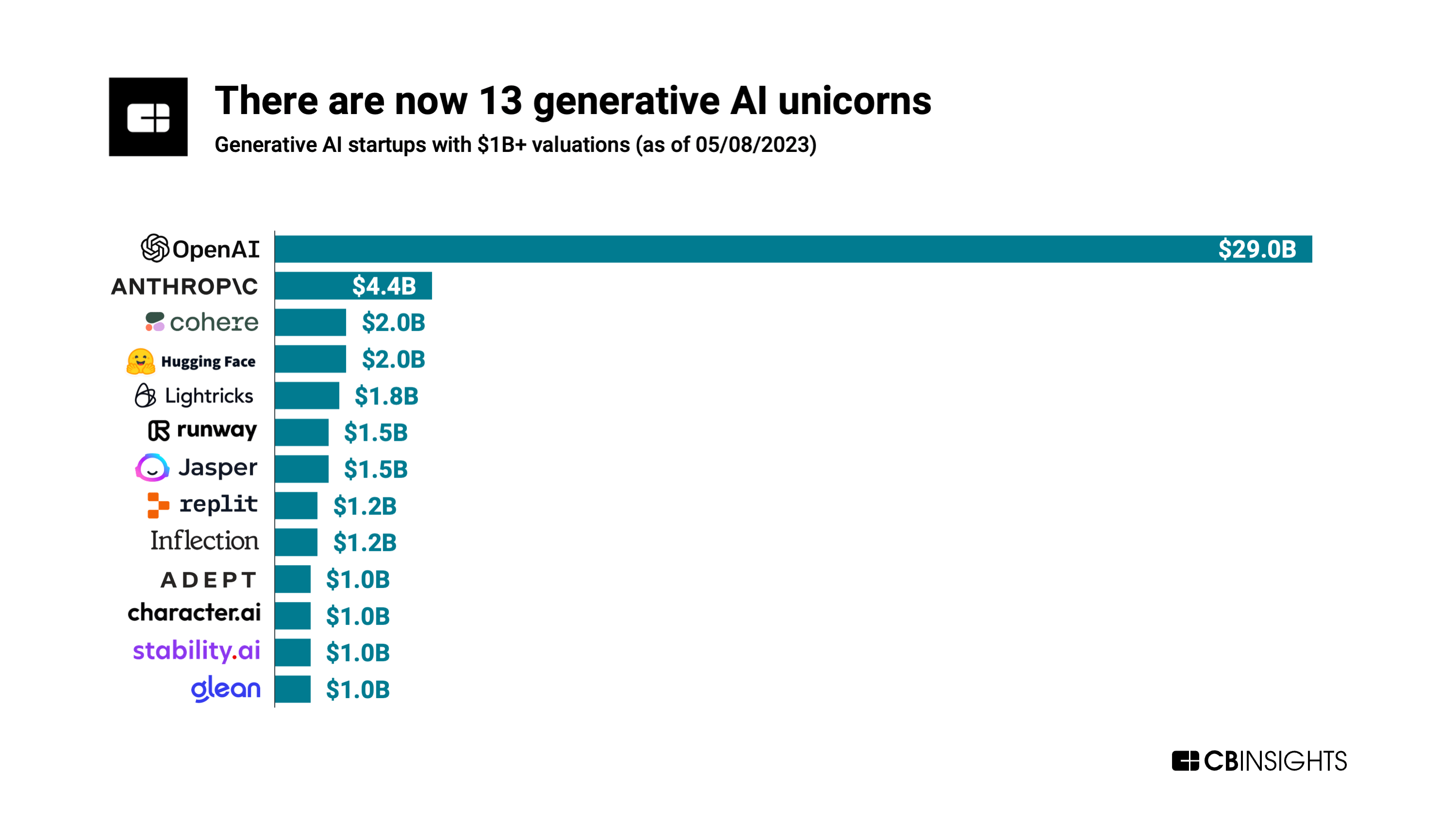

Generative AI startups are reaching $1 billion valuations at lightning speed. There are currently 13 Generative AI unicorns (see chart below), according to CB Insights, which said they reached unicorn status nearly twice as fast as the average $1 billion startup.

Across the 13 Generative AI unicorns, the average time to reach unicorn status was 3.6 years, compared with 7 years for the unicorn club as a whole, almost twice as long.

OpenAI, the poster child for Generative AI with its ChatGPT app, tops the list with a valuation of almost $30 billion. Microsoft is its largest investor, having provided $1 billion in 2019 and $10 billion in 2023. Bloomberg reported that OpenAI recently closed an investment fund of more than $175 million, exceeding expectations.

However, OpenAI may have a formidable competitor in Google DeepMind (more details in DeepMind section below).

….……………………………………………………………………………………………………………………………………………………….

Anthropic is #2 with a valuation of $4.4B. It’s an AI safety and research company based in San Francisco, CA. The company says they “develop large-scale AI systems so that we can study their safety properties at the technological frontier, where new problems are most likely to arise. We use these insights to create safer, steerable, and more reliable models, and to generate systems that we deploy externally, like Claude (to be used with Slack).”

In Q1-2023, Generative AI companies accounted for three of the entrants to the unicorn club with Anthropic, Adept, and Character.AI all gaining valuations of $1B or above.

New Generative AI Unicorns in May:

Ten companies joined the Crunchbase Unicorn Board in May 2023 — double the count for April 2023. Among them were several AI startups:

- Toronto-based Cohere, a generative AI large language model developer for enterprises, raised $270 million in a Series C round led by Inovia Capital that valued the 4-year-old company at $2.2 billion.

- Generative video AI company Runway, based out of New York, raised a $100 million Series D led by Google. The funding valued the 5-year-old company at $1.5 billion.

- Synthesia, a UK-based artificial intelligence (AI) startup, has raised about $90 million at a valuation of $1 billion from a funding round led by venture capital firms Accel and Nvidia-owned NVentures. “While we weren’t actively looking for new investment, Accel and NVIDIA share our vision for transforming traditional video production into a digital workflow,” said Victor Riparbelli, co-founder and CEO of Synthesia.

….…………………………………………………………………………………………………………………………………………………..

Google DeepMind:

Alphabet CEO Sundar Pichai said in a blog post, “we’ve been an AI-first company since 2016, because we see AI as the most significant way to deliver on our mission.”

In April, Alphabet Inc. created “Google DeepMind,” in order to bring together two leading research groups in the AI field: the Brain team from Google Research, and DeepMind (the AI startup Google acquired in 2014). Their collective accomplishments in AI over the last decade span AlphaGo, Transformers, word2vec, WaveNet, AlphaFold, sequence to sequence models, distillation, deep reinforcement learning, and distributed systems and software frameworks like TensorFlow and JAX for expressing, training and deploying large scale Machine Learning (ML) models.

With Google DeepMind positioned as Google’s generative AI effort, new competitive fronts could open in quantum computing, machine learning perception, gaming and mobile systems, natural language processing (NLP), and human-computer interaction and visualization.

A recent DeepMind paper reports that the Alphabet unit has used AI to discover faster algorithms for sorting items into ordered lists. According to the paper, this shows “how artificial intelligence can go beyond the current state of the art,” because AlphaDev’s sorting routines use fewer lines of code for sequences of three to eight elements, for every length except four. And these shorter algorithms “do indeed lead to lower latency,” the paper points out, “as the algorithm length and latency are correlated.”
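AlphaDev's results concern routines that sort fixed, very short sequences. Such routines are classically expressed as sorting networks: a fixed series of compare-exchange steps whose length does not depend on the data, which is why removing even one step pays off every time the routine runs. The sketch below shows the conventional three-comparator network for three elements in Python, purely for illustration; it is not the instruction sequence AlphaDev discovered (AlphaDev works at the assembly-instruction level), and the names `compare_exchange`, `SORT3_NETWORK` and `sort3` are hypothetical.

```python
from itertools import permutations


def compare_exchange(values, i, j):
    """Place the smaller of values[i], values[j] at i and the larger at j.
    Using min/max instead of an if-statement mirrors how such steps are
    typically written branch-free in low-level code."""
    a, b = values[i], values[j]
    values[i], values[j] = min(a, b), max(a, b)


# Textbook 3-input sorting network: the same three comparisons run in the
# same order for every input, so the routine has a fixed length.
SORT3_NETWORK = [(0, 1), (1, 2), (0, 1)]


def sort3(values):
    """Sort exactly three items with the fixed comparator sequence above."""
    out = list(values)
    for i, j in SORT3_NETWORK:
        compare_exchange(out, i, j)
    return out


# Exhaustively check every ordering of three distinct values.
for p in permutations([1, 2, 3]):
    assert sort3(p) == [1, 2, 3]
print(sort3([3, 1, 2]))  # [1, 2, 3]
```

Because the comparator sequence is fixed, the only way to make such a routine faster is to find an equivalent sequence with fewer steps, which is the kind of saving the AlphaDev paper reports at the instruction level.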

The researchers built their program on DeepMind’s AlphaZero, which beat the world’s best players at chess and Go. AlphaZero trained solely by playing games against itself, getting better and better through a kind of massively automated trial and error that eventually finds the optimal approach.

DeepMind’s researchers modified into a new coding-oriented program called AlphaDev, calling this an important next step. “With AlphaDev, we show how this model can transfer from games to scientific challenges, and from simulations to real-world applications,” they wrote on the DeepMind blog. The newly-discovered sorting algorithms “contain new sequences of instructions that save a single instruction each time they’re applied. AlphaDev skips over a step to connect items in a way that looks like a mistake, but is actually a shortcut.”
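Here is a minimal Python sketch of a short, fixed-length sorting routine. It is a conventional three-element sorting network built from min/max compare-exchange steps. It illustrates the idea under our own assumptions and is not AlphaDev's discovered code. AlphaDev's gains came from removing individual machine instructions from routines like this, which a Python version cannot show. The function name `sort3` is hypothetical.

```python
def sort3(a: int, b: int, c: int) -> list[int]:
    """Sort exactly three values using a fixed sequence of three compare-exchange steps.

    The same steps run for every input, which is what makes such small
    routines natural targets for instruction-level optimization.
    """
    # Comparator 1: order the first pair.
    low, high = min(a, b), max(a, b)
    # Comparator 2: the larger of `high` and `c` is the overall maximum.
    middle, top = min(high, c), max(high, c)
    # Comparator 3: order the two remaining values.
    first, second = min(low, middle), max(low, middle)
    return [first, second, top]


print(sort3(3, 1, 2))  # prints [1, 2, 3]
```

Because the comparator sequence never changes, the routine can be checked exhaustively on small inputs, which is one reason fixed-size sorts suit automated search.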

….………………………………………………………………………………………………………………………………………………………..

Conclusions:

While many luminaries, such as Henry Kissinger, Eric Schmidt and Daniel Huttenlocher, have lauded Generative AI as the greatest invention since the printing press, the technology has yet to prove itself worthy of the enormous praise. Their central thesis, that a computer program could “transform the human cognitive process” in a way tantamount to the Enlightenment, is a huge stretch.

Gary Marcus, a well-known professor and frequent critic of A.I. technology, said that OpenAI hasn’t been transparent about the data it uses to develop its systems. He expressed doubt about OpenAI CEO Sam Altman’s prediction that new jobs will replace those killed off by A.I.

“We have unprecedented opportunities here but we are also facing a perfect storm of corporate irresponsibility, widespread deployment, lack of adequate regulation and inherent unreliability,” Dr. Marcus said.

The promise and potential of Generative AI will not be realized for many years. Think of it as a “research work in progress” with many twists and turns along the way.

….………………………………………………………………………………………………………………………………..

References:

https://www.cbinsights.com/research/generative-ai-unicorns-valuations-revenues-headcount/

https://pitchbook.com/news/articles/Amazon-Bedrock-generative-ai-q1-2023-vc-deals

Curmudgeon/Sperandeo: Impact of Generative AI on Jobs and Workers

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI is probably the most hyped technology of the last 60 years [1.]. While the potential and power of microprocessors, Ethernet, WiFi, the Internet, 4G, and cloud computing all lived up to or exceeded expectations, generative AI has yet to prove itself worthy of its enormous praise. Simply put, Generative AI is a type of artificial intelligence that can create new content, such as text, images, and audio.

Note 1. This author has been observing computer and communications technologies for 57 years. His first paid tech job was in the summer of 1966 in Dallas, TX. There he did mathematical simulations of 1.) worst-case data load on three Large Screen Displays (LSDs), each 7 ft x 7 ft, and 2.) the efficiency of manual rate-aided radar tracking. In the summer of 1967, he helped install and test electronic modules for the central command and control system for the Atlantic Fleet Weapons Range at Roosevelt Roads Naval Air Station in Puerto Rico. While there, he also did a computer simulation of a real-time naval air exercise (battleships, aircraft carriers, jets, helicopters, drones, etc.) and displayed the results on the three LSDs. Skipping over his career in academia, industry and as a volunteer officer/chairman at IEEE ComSoc and IEEE SV Tech History, Alan has overseen the IEEE Techblog for over 14 years (since he was asked to do so in March 2009 by the IEEE ComSoc NA Chairman at that time).

………………………………………………………………………………………………………………………………………………………………………………..

Interest in Generative A.I. has exploded. Tech giants have poured effort and billions of dollars into what they say is a transformative technology, even amid rising concerns about A.I.’s role in spreading misinformation, killing jobs and one day matching human intelligence.

It’s been claimed that Generative AI can be used to optimize telecom networks and make them more efficient. This can lead to faster speeds, better reliability, and lower costs. Another way that generative AI is changing telecommunications is by improving customer service. Generative AI can be used to create virtual assistants that can answer customer questions and provide support. This can free up human customer service representatives to focus on more complex issues.

Generative AI is also being used to improve network security. Generative AI can be used to detect and prevent fraud and other security threats. This can help to protect customers and their data.

Here are some specific examples of how generative AI is expected to be used in the telecommunications industry:

- Network optimization: Generative AI can be used to analyze network traffic and identify patterns. This information can then be used to optimize the network and improve performance. For example, generative AI can be used to route traffic more efficiently or to identify congested areas of the network that need added capacity.

- Predictive maintenance: Generative AI can be used to analyze data from network equipment to identify potential problems before they occur. This information can then be used to schedule preventive maintenance, which can help to prevent outages and improve reliability. For example, generative AI can be used to monitor the temperature of network equipment and identify components that are at risk of overheating.

- Fraud detection: Generative AI can be used to analyze customer behavior and identify patterns that may indicate fraud. This information can then be used to prevent fraud and protect customers. For example, generative AI can be used to identify customers who are making suspicious calls or sending large amounts of text messages.

- Customer service: Generative AI can be used to create virtual assistants that can answer customer questions and provide support. This can free up human customer service representatives to focus on more complex issues. For example, generative AI can be used to create a virtual assistant that can answer questions about billing or troubleshoot technical issues.
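The fraud-detection example above involves flagging customers who send unusually large numbers of text messages. A simple statistical baseline can illustrate this. The Python sketch below is a hypothetical illustration and does not describe any operator's system. It uses a plain z-score test rather than a generative model. It compares today's SMS count with the subscriber's own history and flags counts far above the mean. The function name, the 3.0 threshold and the 1.0 floor on the standard deviation are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def flag_sms_spike(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Return True if today's SMS count is more than `threshold` standard
    deviations above this subscriber's historical daily mean."""
    if len(history) < 2:
        return False  # too little history to estimate normal behavior
    mu = mean(history)
    # Assumed floor of 1.0 avoids dividing by zero for perfectly flat histories.
    sigma = max(stdev(history), 1.0)
    return (today - mu) / sigma > threshold


daily_counts = [20, 25, 22, 18, 24, 21, 23]
print(flag_sms_spike(daily_counts, 26))   # typical day -> False
print(flag_sms_spike(daily_counts, 400))  # sudden burst -> True
```

Comparing each subscriber with their own history, rather than with a global limit, keeps heavy but legitimate texters from being flagged every day.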

Postscript: Gary Marcus, a well-known professor and frequent critic of A.I. technology, said that OpenAI hasn’t been transparent about the data it uses to develop its systems. He expressed doubt about OpenAI CEO Sam Altman’s prediction that new jobs will replace those killed off by A.I.

“We have unprecedented opportunities here but we are also facing a perfect storm of corporate irresponsibility, widespread deployment, lack of adequate regulation and inherent unreliability,” Dr. Marcus said.

References:

The AI-native telco: Radical transformation to thrive in turbulent times; https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/the-ai-native-telco-radical-transformation-to-thrive-in-turbulent-times#/

Generative AI in Telecom Industry | The Ultimate Guide; https://www.xenonstack.com/blog/generative-ai-telecom-industry#:~:text=Generative%20AI%20can%20predict%20equipment,equipment%20failures%20before%20they%20occur.

Microsoft dangles generative AI for telcos and slams ‘DIY’ clouds; https://www.lightreading.com/aiautomation/microsoft-dangles-generative-ai-for-telcos-and-slams-diy-clouds/d/d-id/783438

Deutsche Telekom exec: AI poses massive challenges for telecom industry

Arista Networks unveils cloud-delivered, AI-driven network identity service

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

ChatGPT (from OpenAI) is the poster child for Generative AI. Here is a study that showed the many ways in which Generative AI cannot properly replace a manager. JobSage wanted to see how ChatGPT performed in sensitive management scenarios and had its responses ranked by experts.

Key takeaways:

- Sensitive management scenarios: 60% of responses were found to be acceptable, while 40% failed.

- ChatGPT was better at addressing diversity and worse at addressing compensation and underperforming employees.

- ChatGPT earned its strongest marks addressing an employee being investigated for sexual harassment and a company switching healthcare providers to cut costs.

- ChatGPT performed weakest when asked to respond to an employee concerned about pay equity, a company that needs people to work harder than ever, and a company’s freeze of raises despite a record payout to the CEO.

ChatGPT showed inconsistent performance in management situations:

Using the same scoring scale, ChatGPT revealed that while it could provide balance and empathy in some employee-specific and company-wide communications, at other times that empathy and balance were missing, making it appear tone-deaf.

ChatGPT even gave responses that many would deem inappropriate. Other responses highlighted a broader limitation of ChatGPT: its inability to provide detailed, tailored information about company policies and the specific scenarios that occur.

This section details where the chatbot failed to deliver, with responses scored from negative to very negative.

Negative: Notifying an employee they were being terminated for not working hard enough

Our experts had issues with ChatGPT’s response in this scenario. It emphasized the employee’s performance as compared to peers and offered an overall negative tone that would potentially make its recipient feel quite terrible about themself.

Negative: Notifying an employee that a complaint had been filed against them for being intoxicated on the job

For this response, ChatGPT employs a severe tone, which may discourage the employee from sharing the underlying issue that is motivating them to drink on the job. Management did deem this to be an outstanding response, though one wonders if this would be a conversation better conducted in person than over email.

Negative: Notifying an employee that they’ve worn clothing that’s revealing and inappropriate

ChatGPT failed to understand how language can be judgmental, and its response was less than informative. Its use of the word “revealing” to describe the clothing is subjective and the human resources expert provided the feedback that it “screams sexism and provides no meaningful detail about what the policy is and what part they violated.”

Very negative: Notifying the company to let them know they need to work harder

ChatGPT again came up short on necessary detail. The email neglects to include examples or benchmarks of what working harder actually looks like, which sets an unrealistic bar to measure employees against. And according to the human resources expert, “it assumes individuals aren’t already striving for excellence.”

Very negative: Notifying a company about a freeze on raises despite a record payout to the CEO

Legal found ChatGPT’s response “too direct in summarizing the record compensation received by the CEO.” With a very personal issue like compensation at play, an employee would hope it would be handled with more empathy than is displayed here.

Very negative: Addressing an employee’s concerns about pay equity.

ChatGPT’s response focuses primarily on the company’s situation while failing to show empathy for the employee’s situation. It also discloses details about another employee’s compensation and how it was determined, which many would consider inappropriate. Since compensation was found to be the top factor of work-related stress in a 2023 survey, trusting this to ChatGPT would not be wise.

Conclusions:

Based on the Jobsage.com study, one might find ways to use ChatGPT to draft emails that serve as announcements or notifications. However, those emails still need a human touch. If you trust ChatGPT to draft correspondence, make sure the drafts are reviewed by the company’s internal team to ensure they accurately reflect the company’s protocols and policies.

While ChatGPT may be useful in some ways, it simply can’t replace the empathy and human touch of a good manager, especially when dealing with sensitive situations. Employees want to feel respected, and receiving a blanket response full of corporate jargon likely won’t make them feel that way. Employees want to know a human is communicating with them, not a computer.

If you want to find a workplace that treats you with empathy and provides a safe, inclusive workspace that addresses your unique needs, JobSage provides an open forum for employees to leave honest, authentic company reviews. You can really get to know a company’s culture and see if its style of management and communication carves a meaningful place for you.

Addendum – ChatGPT vs Google Search Engine:

I asked ChatGPT: “What is the IEEE Techblog?” Here is the result I got:

“As of my last knowledge update in September 2021, the IEEE (Institute of Electrical and Electronics Engineers) Techblog was not a specific entity or platform associated with IEEE. However, IEEE is a renowned professional association dedicated to advancing technology and innovation across various fields, including electrical engineering, computer science, and telecommunications.”

When I did a Google search on the same keywords, I got the CORRECT answer as the 1st search result:

Technology Blog – ComSoc Technology Blog – IEEE … (IEEE Communications Society)

- About the Blog – NOTE: IEEE Techblog posts may not be reposted without …

- About the Techblog Editorial … – Alan J Weissberger, ScD. Content Manager and principal …

- IEEE Techblog recognized by … – The IEEE ComSoc Techblog was voted #2 best broadband blog …

- Contact Us – IEEE websites place cookies on your device to give you the best …

ChatGPT also answered: “The IEEE ComSoc Techblog features contributions from various authors who are experts, researchers, and professionals in the field of communications engineering. The blog does not have a single author, but rather includes posts from multiple individuals who are associated with the IEEE Communications Society (ComSoc) and its technical community.” No mention of Alan J Weissberger!

When I asked Google the same thing, I got:

Content Manager and principal contributing author to IEEE ComSoc blog sites since March 2009. IEEE volunteer and technical conference session organizer since March 1974.

About the Techblog Editorial Team – Technology Blog

References:

Curmudgeon/Sperandeo: Impact of Generative AI on Jobs and Workers

Deutsche Telekom exec: AI poses massive challenges for telecom industry

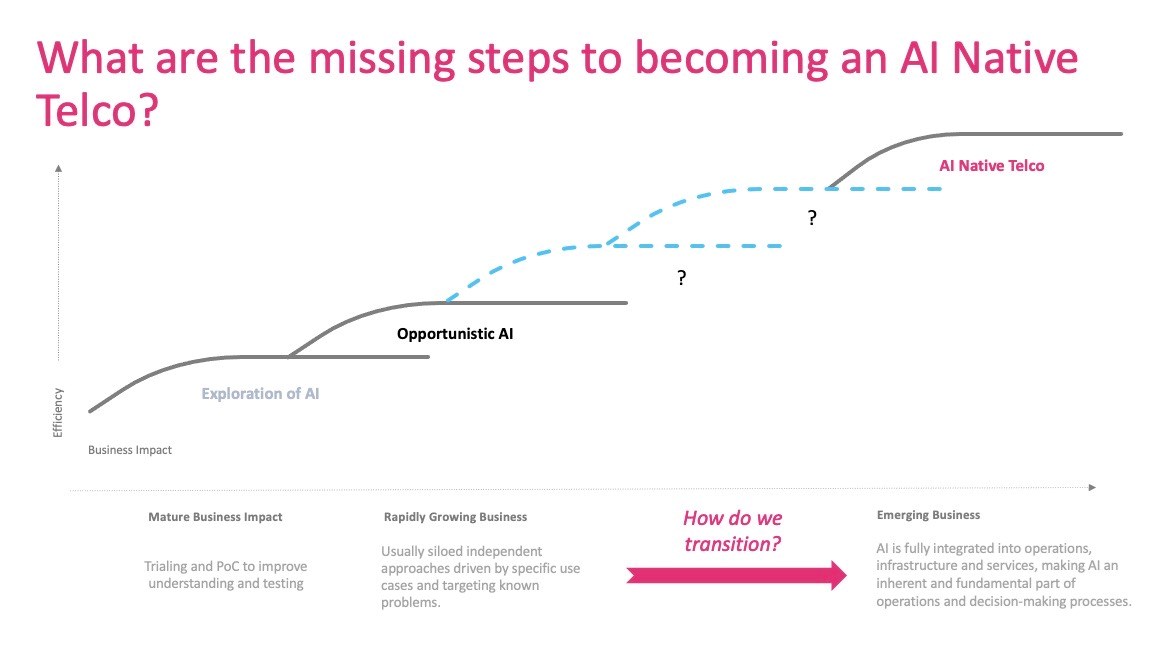

Deutsche Telekom’s VP of technology strategy, Ahmed Hafez, co-hosted the DSP Leaders World Forum 2023 session entitled “Creating a framework for the AI-native telco” this week in the UK. He said that AI will deliver the telecom sector its biggest ever challenges and opportunities. To take advantage of the benefits AI will bring, he added, the industry needs to figure out a way to evolve from being opportunistic to becoming AI-native.

To date, the telecom sector has been exploring the potential of AI without looking at the bigger picture, and that holistic view needs to be taken in order to figure out the best way to go, Hafez believes.

Like so many other pundits and cheerleaders, Hafez regards the impact of AI as “the biggest transformation we will ever encounter.” This is not only about the magnitude of what AI will do, but also about the pace: it will outpace our understanding so quickly that we need to be ready.

“Previous transformations have [happened at an] accommodating pace – they were not changing so fast that we couldn’t comprehend or adapt to them. In order for us to adapt to AI, we need to transform as individuals, not [just as] companies. On an individual level you need to be able to comprehend what’s going on and pick the right information.”

To illustrate the magnitude of the challenges that AI will deliver to the telecom sector, Hafez presented a few supporting statistics:

- The AI market was worth $136bn in 2022 and is set to be worth $1.8tn by 2030

- The telecom AI market alone was worth $2.2bn in 2022

- Global private investment in AI reached $91.9bn in 2022

- AI delivers a 40% increase in business productivity, according to a study by Accenture (Hafez thinks that number is too low, that productivity gains will be much higher)

- There are already thousands of AI-focused companies – by 2018 there were already nearly 3,500

- AI will drive the need for 500x compute power between now and 2030 (“What does that mean for telcos? How can we deal with that?” asked Hafez)

- In terms of human resources, 63% of executives believe their biggest skills shortage is in AI expertise

- Three in every four CEOs believe they don’t have enough transparency when it comes to AI and are concerned about skewed bias in the AI sector
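The first statistic implies a steep growth rate. As a rough check, assume the $136bn (2022) and $1.8tn (2030) figures are directly comparable. The implied compound annual growth rate (CAGR) over those eight years then follows from the standard formula (end / start)^(1/years) - 1. This is our own arithmetic on the quoted figures, not a number Hafez presented.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


rate = implied_cagr(start_value=136e9, end_value=1.8e12, years=2030 - 2022)
print(f"Implied AI market CAGR, 2022-2030: {rate:.1%}")  # 38.1%
```

That works out to roughly 38% growth per year, sustained for eight consecutive years.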

These are eye-opening trends that should give the telecom industry food for thought, especially when it comes to attracting employees with AI skills. “How will we get the people we need if there are thousands of AI companies” attracting the experts, he asked.

Hafez also related how he encountered what he described as some “depressing” information about how unattractive telecom operators are to potential employees, especially those of a younger generation. Of the top-50 most attractive companies in advanced economies for employees, none of them are telcos: “This is a worrying trend… we need to become more attractive to the younger generations,” he noted.

The telecom industry began exploring the use of AI in earnest less than 10 years ago, noted the DT executive, when it started looking into its potential with proofs of concept and trials. “Then we took the opportunistic approach to AI – use case-based, where you find a good use case, you implement it and it’s concrete. There’s nothing bad about that, as it’s the right thing to do… and we’ve been doing that for a while and it’s delivering value. That’s fine as long as you are doing a few tens of use cases.”

But using AI at scale raises many new questions about how the sector progresses from being opportunistic to becoming AI-native. Scale is what the industry needs in order to become AI-native, with AI fully integrated into everything and part of all operations and decision-making processes. What, Hafez asked, are the missing steps?

Source: Deutsche Telekom

“Once we start to ask, what would the future be with AI in everything we do, in every appliance, in every application, in every network component, it would be over the top. You would have data that is being worked on by five or six AI engines, creating different things…. You would have not just tens of use cases, but hundreds, or thousands. Are we prepared for that? Are we ready to embrace such scale? Are we building AI for scale? I don’t think so.

“We are building AI trying to get things done – which is okay. But in order for us to get through this journey, through this transformation, what stages do we need to pass through? What are the steps that we need to take to… make sure that the problem is clear. If we have a huge amount of AI, do we run the risk of conflicting AI? So if I have AI for energy efficiency and I have another one that actually improves network quality, could they create conflicts? Can they be a problem? If I have AI that is on the optical layer and AI on the IP layer, can they make different decisions because they consume data differently?

“If we look at things from this perspective, do we need, within our organisations, another stream of hiring people and the need to upskill leadership? Do we need to upskill ourselves to help our teams? What do we need to do? If you look at technologies, do we need to change the perspective of how, for example, the 3GPP is building the standards in order to make sure the standards are AI friendly? Do we need separate standard bodies to look at AI? What would be their functions? What would be their scope?” asked Hafez.

And does the industry need a framework that can provide guidance so that the telecom sector can develop in the same direction with its use of AI?

“This is the discussion we want to have, and I hope the message is clear – we have a great opportunity, but opportunities do not come without challenges,” he cautioned.

Hafez set the scene for a great discussion with his fellow speakers, Juniper’s chief network strategist Neil McRae, Rakuten Symphony CMO Geoff Hollingworth, Nokia’s CTO for Europe Azfar Aslam, and Digital Catapult’s CTO Joe Butler – and it’s fair to say there were differences of opinion! You can view the full session on demand on TelecomTV.

…………………………………………………………………………………………………………………………………………………………………….

Here are some specific examples of how AI is being used in the telecom industry in 2023:

Network optimization:

AI is being used to analyze data from network sensors to identify potential problems before they occur. This allows telecom providers to take proactive steps to fix problems and prevent outages. For example, companies are using AI to predict network congestion and proactively reroute traffic to avoid outages. 5G networks began rolling out in 2019 and are predicted to have more than 1.7 billion subscribers worldwide (20% of global connections) by 2025. AI is essential for helping communications service providers (CSPs) build self-optimizing networks (SONs) to support this growth. SONs allow operators to automatically optimize network quality based on traffic information by region and time zone. AI in telecom uses advanced algorithms to look for patterns in the data, enabling telecoms to both detect and predict network anomalies. As a result, CSPs can proactively fix problems before customers are negatively impacted.
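The congestion example (predict congestion, then reroute around it) can be sketched in a few lines. The Python below is a simplified, hypothetical illustration, not any operator's SON implementation. It works in three steps:

- It forecasts each link's utilization with a moving average of recent samples.
- It removes links forecast to exceed an assumed 80% threshold.
- It runs Dijkstra's shortest-path algorithm on what remains.

Production systems would use far richer traffic models. The topology, latency costs and threshold here are made up for illustration.

```python
import heapq
from typing import Optional


def forecast_utilization(samples: list[float], window: int = 3) -> float:
    """Naive forecast: average of the most recent `window` utilization samples (0.0-1.0)."""
    recent = samples[-window:]
    return sum(recent) / len(recent)


def route_avoiding_congestion(
    links: dict[tuple[str, str], float],
    history: dict[tuple[str, str], list[float]],
    source: str,
    target: str,
    threshold: float = 0.8,
) -> Optional[list[str]]:
    """Lowest-cost path from source to target over undirected links,
    skipping any link whose forecast utilization exceeds `threshold`."""
    graph: dict[str, list[tuple[str, float]]] = {}
    for (u, v), cost in links.items():
        if forecast_utilization(history[(u, v)]) > threshold:
            continue  # predicted to congest: leave it out of the routing graph
        graph.setdefault(u, []).append((v, cost))
        graph.setdefault(v, []).append((u, cost))

    # Dijkstra's algorithm with a binary heap of (cost so far, node, path).
    queue: list[tuple[float, str, list[str]]] = [(0.0, source, [source])]
    visited: set[str] = set()
    while queue:
        cost_so_far, node, path = heapq.heappop(queue)
        if node == target:
            return path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, cost in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (cost_so_far + cost, neighbor, path + [neighbor]))
    return None  # target unreachable without using a congested link


links = {("A", "B"): 1.0, ("B", "D"): 1.0, ("A", "C"): 2.0, ("C", "D"): 2.0}
history = {
    ("A", "B"): [0.70, 0.90, 0.95],  # trending toward saturation
    ("B", "D"): [0.30, 0.35, 0.30],
    ("A", "C"): [0.20, 0.25, 0.20],
    ("C", "D"): [0.40, 0.40, 0.45],
}
print(route_avoiding_congestion(links, history, "A", "D"))  # ['A', 'C', 'D']
```

The cheaper A-B-D path is skipped because link A-B is forecast at 85% utilization, so traffic takes the longer but uncongested A-C-D path.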

Customer service automation and Virtual Assistants:

AI-powered chatbots can answer customer questions and resolve issues without the need for human intervention. This can free up customer service representatives to focus on more complex issues. For example, Verizon is using AI to power its Virtual Assistant, which can answer customer questions about billing, service plans, and technical support.

Predictive Maintenance:

AI-driven predictive analytics are helping telecoms provide better services by utilizing data, sophisticated algorithms, and machine learning techniques to predict future results based on historical data. This means operators can use data-driven insights to monitor the state of equipment and anticipate failure based on patterns. Implementing AI in telecoms also allows CSPs to proactively fix problems with communications hardware, such as cell towers, power lines, data center servers, and even set-top boxes in customers’ homes. In the short term, network automation and intelligence will enable better root cause analysis and prediction of issues. Long term, these technologies will underpin more strategic goals, such as creating new customer experiences and dealing efficiently with emerging business needs.
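One of the simplest ways to anticipate failure from patterns is to extrapolate a trend. The Python sketch below is a hypothetical illustration, not a vendor algorithm. It fits an ordinary least-squares line to hourly equipment temperature readings. It then estimates how many hours remain before the trend crosses a critical temperature. The hourly sampling, the 80 °C limit and the function name are assumptions. Real predictive-maintenance models typically combine many signals with learned failure patterns.

```python
from typing import Optional


def hours_until_threshold(readings: list[float], threshold: float) -> Optional[float]:
    """Fit a least-squares line to hourly readings and estimate the hours after
    the last reading at which the trend reaches `threshold`.

    Returns None when there is too little data or the trend is flat or cooling,
    and 0.0 when the trend line is already at or above the threshold.
    """
    n = len(readings)
    if n < 2:
        return None
    x_mean = (n - 1) / 2  # mean of sample indices 0..n-1
    y_mean = sum(readings) / n
    covariance = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(readings))
    variance = sum((x - x_mean) ** 2 for x in range(n))
    slope = covariance / variance
    if slope <= 0:
        return None  # not heating up, so no projected crossing
    intercept = y_mean - slope * x_mean
    crossing_index = (threshold - intercept) / slope
    return max(0.0, crossing_index - (n - 1))


# Hypothetical line-card temperatures (deg C), one reading per hour.
temps = [60.0, 62.0, 64.0, 66.0]
print(hours_until_threshold(temps, threshold=80.0))  # 7.0
```

A maintenance scheduler could then prioritize the components with the smallest projected time to threshold.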

Robotic Process Automation (RPA) for Telecoms:

CSPs have vast numbers of customers engaged in millions of daily transactions, each susceptible to human error. Robotic Process Automation (RPA) is a form of business process automation that uses software bots to carry out rules-based tasks, and it is increasingly combined with AI. RPA can bring greater efficiency to telecom functions by allowing telcos to more easily manage their back-office operations and large volumes of repetitive and rules-based actions. RPA frees up CSP staff for higher value-add work by streamlining the execution of complex, labor-intensive, and time-consuming processes, such as billing, data entry, workforce management, and order fulfillment. According to Statista, the RPA market is forecast to grow to 13 billion USD by 2030, with RPA achieving almost universal adoption within the next five years. Telecom, media, and tech companies expect cognitive computing to “substantially transform” their companies within the next few years.

Fraud Prevention:

Telecoms are harnessing AI’s powerful analytical capabilities to combat instances of fraud. AI and machine learning algorithms can detect anomalies in real-time, effectively reducing telecom-related fraudulent activities, such as unauthorized network access and fake profiles. The system can automatically block access to the fraudster as soon as suspicious activity is detected, minimizing the damage. With industry estimates indicating that 90% of operators are targeted by scammers on a daily basis – amounting to billions in losses every year – this AI application is especially timely for CSPs.
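The idea of blocking access as soon as suspicious activity is detected can be illustrated with a simple sliding-window rule. The Python class below is a hypothetical sketch, not any vendor's fraud engine. It counts failed access attempts per account over a time window and blocks the account once the count exceeds a limit. The limits are assumptions. An ML-based system would learn which patterns are anomalous rather than rely on a fixed threshold, but the detect-then-block flow is the same.

```python
from collections import defaultdict, deque


class FailedAccessMonitor:
    """Block an account when more than `max_failures` failed access attempts
    occur within a sliding window of `window_seconds`."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0) -> None:
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self.attempts: defaultdict[str, deque[float]] = defaultdict(deque)
        self.blocked: set[str] = set()

    def record_failure(self, account: str, timestamp: float) -> bool:
        """Record one failed attempt and return True if the account is blocked."""
        window = self.attempts[account]
        window.append(timestamp)
        # Discard attempts that are older than the sliding window.
        while timestamp - window[0] > self.window_seconds:
            window.popleft()
        if len(window) > self.max_failures:
            self.blocked.add(account)  # block immediately to limit damage
        return account in self.blocked


monitor = FailedAccessMonitor(max_failures=3, window_seconds=10)
results = [monitor.record_failure("sim-001", t) for t in (0, 1, 2, 3)]
print(results)  # [False, False, False, True]
```

Attempts spread out over time never accumulate in the window, so only bursts trigger a block.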

Revenue Growth:

AI in telecommunications has a powerful ability to unify and make sense of a wide range of data, such as device and network data, mobile application data, geolocation data, detailed customer profiles, service usage, and billing data. Using AI-driven data analysis, telecoms can increase their rate of subscriber growth and average revenue per user (ARPU) through smart upselling and cross-selling of their services. By anticipating customer needs using real-time context, telecoms can make the right offer at the right time over the right channel.

………………………………………………………………………………………………………………………………………………………

References:

https://www.telecomtv.com/content/network-automation/towards-the-ai-native-telco-47596/

https://www.telecomtv.com/content/dsp-leaders-forum/

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Allied Market Research: Global AI in telecom market forecast to reach $38.8 by 2031 with CAGR of 41.4% (from 2022 to 2031)

SK Telecom inspects cell towers for safety using drones and AI

The case for and against AI in telecommunications; record quarter for AI venture funding and M&A deals

Global AI in Telecommunication Market at CAGR ~ 40% through 2026 – 2027

Cybersecurity threats in telecoms require protection of network infrastructure and availability

China to launch world’s first 5G cruise ship via China Telecom Corp Ltd Shanghai Branch

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Forecasts of job losses due to AI are very grim. IBM’s CEO Arvind Krishna this week announced a hiring freeze while speculating that 7,800 jobs could be replaced by AI in the next few years. A new report from the World Economic Forum (WEF) projects a net loss of 14 million jobs by 2027. The organization’s Future of Jobs Report 2023 shows that 590 million jobs will not change, while 69 million will be created and 83 million positions will be lost.
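The WEF figures are easier to read as net arithmetic. Assume, as the numbers suggest, that the 590 million unchanged jobs and the 83 million eliminated jobs together make up the jobs covered by the report. On that assumption, the 14 million figure is the net of jobs created and lost, not the gross number eliminated:

```latex
\begin{aligned}
\text{net change} &= 69\text{M created} - 83\text{M eliminated} = -14\text{M} \\
\text{jobs covered} &= 590\text{M unchanged} + 83\text{M eliminated} = 673\text{M} \\
\text{net change as a share} &= \frac{-14\text{M}}{673\text{M}} \approx -2.1\%
\end{aligned}
```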

Even more alarming, Goldman Sachs issued a report in March predicting AI would “replace” 300 million jobs, citing the recent impact of generative AI. Generative AI, able to create content indistinguishable from human work, is “a major advancement,” the report says. However, such predictions rarely mention, let alone forecast, the new jobs that will be created in an AI-prevalent world.

According to Light Reading’s Iain Morris, new types of AI like Hawk-Eye, ChatGPT, GitHub Copilot and other permutations threaten a jobs apocalypse. The telecom sector looks extraordinarily exposed. For one thing, it’s stocked with people in sales, marketing and customer services, including high-street stores increasingly denuded of workers, like those coffee chains where you select your beverage on a giant touchscreen instead of telling somebody what you want. Chatbots have already replaced some roles. One very big (unnamed) network operator is known to be exploring the use of ChatGPT in customer services for added efficiency – a move that could turn thinned ranks anorexic.

The scenario is that telco networks could feasibly become a self-operating, self-healing entity, stripped clean of people and run by an AI that has probably been developed by Google or Microsoft. That AI would still live in facilities owned by the telco, to keep GDPR watchdogs and other regulatory authorities on side. All those fault-monitoring, trouble-ticketing and other routine technical jobs would be gone. If staff have been “freed up,” it’s not to do other jobs at the telco.

Opinion: This author strongly disagrees, because these new versions of AI have not proven themselves effective at telecom network tasks. Meanwhile, chatbots are somewhere between ineffective and totally dysfunctional, so they won’t replace live (real person) chat or call centers until they improve.

……………………………………………………………………………………………………………………………………………………………….

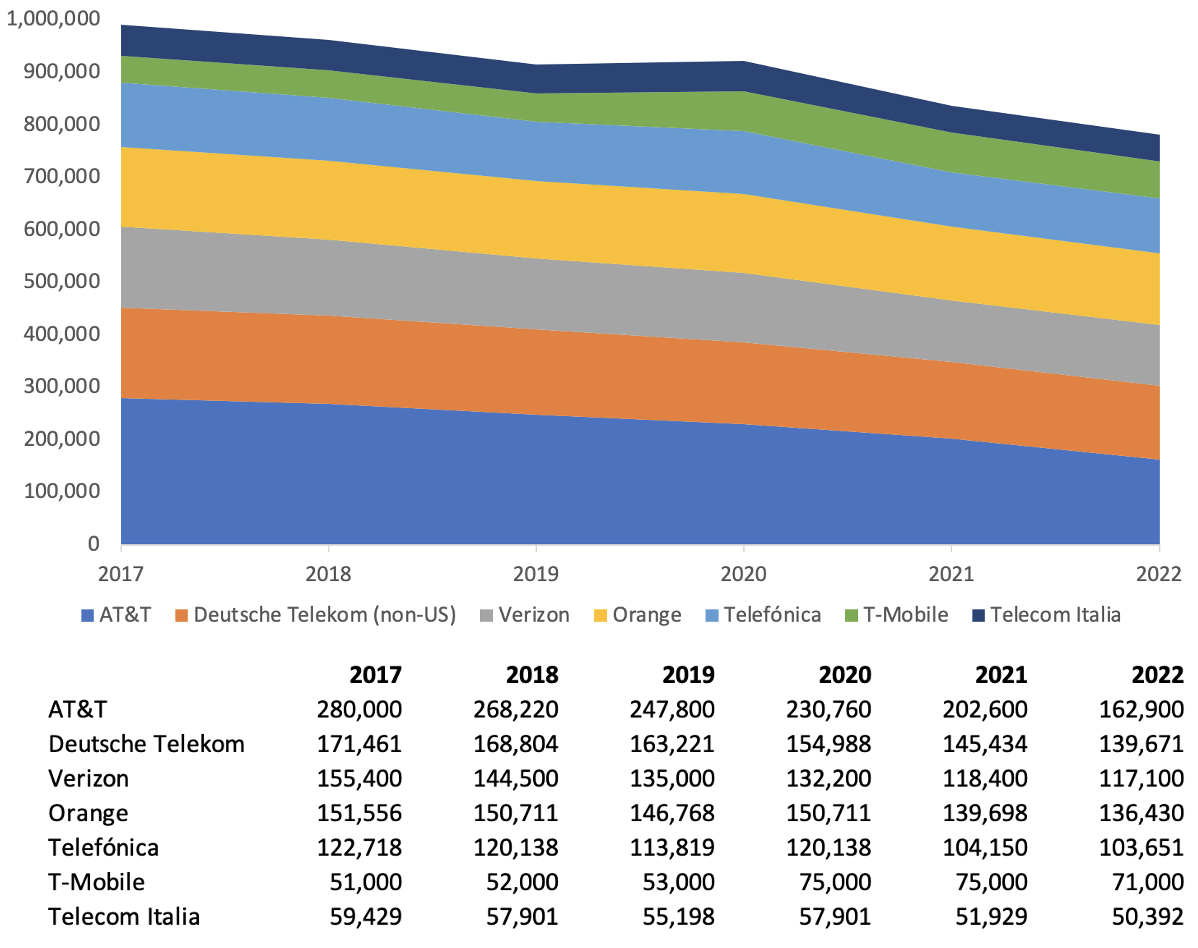

At big telcos tracked by Light Reading, collective headcount fell nearly 58,000 last year. Across AT&T, T-Mobile and Verizon, the big three of the U.S. mobile telecom market, around 45,000 jobs disappeared in 2022, more than 11% of the end-2021 total.

Source: Companies tracked by Light Reading

Outside the U.S., around 11,000 jobs were cut at Deutsche Telekom, Orange, Telecom Italia and Telefónica. That was a much smaller 2.5% of the earlier total. Even so, more than 67,000 non-US jobs have been cut from the payrolls of these companies since 2018, a figure equal to 13.5% of headcount at the end of 2017.

Much of this attrition has very little, if anything, to do with technology. Instead, it’s the result of more routine efficiency measures and the disposal of assets. These include geographical units, infrastructure (such as towers) once deemed strategically important but no longer, and IT resources farmed out to the public cloud. This is a frightening thought for employees.

Morris asks, “If jobs were disappearing this fast before the arrival of ChatGPT, what does the future hold?”

AI Can Improve the Telecom Industry without causing major job losses:

We think AI has the potential to improve various aspects of the telecommunication industry without causing major job losses. For example, Ericsson has reported that the implementation of AI-powered solutions in networks can lead to a 35 percent decrease in critical incidents and a 60 percent decrease in network performance problems. Additionally, automation can reduce energy costs by 15 percent, making the network more environmentally sustainable.

AI can help telcos optimize their networks by automatically adjusting network settings and configurations to improve performance and reduce costs. AI algorithms can further be used to analyze vast amounts of data generated by telecommunication networks, providing valuable insights into network performance, and helping to identify and resolve issues in real-time. This can significantly improve network reliability and reduce downtime, ultimately leading to enhanced customer satisfaction.

Some of the other compelling AI use cases in telecom are:

- Fraud detection and prevention: AI algorithms can play a crucial role by analyzing massive amounts of data to detect and prevent various forms of fraudulent activities in real time, such as SIM-swapping, unauthorized network access, fake profiles, and bill fraud.

- Predictive maintenance: AI can analyze data from telecom equipment to predict when it will require maintenance—reducing downtime and costs associated with maintenance.

- Personalized marketing: AI can analyze customer data to create targeted marketing campaigns, improving customer engagement and reducing marketing costs. For example, machine learning models can recommend products or services to customers based on their usage patterns and preferences.

- Automated decision making: Deep learning models can automate decisions such as network routing, dynamic pricing, and more.
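The recommendation idea in the personalized-marketing item can be sketched with a basic similarity measure. The Python below is a hypothetical illustration, not a production recommender. It describes each plan, and the customer, by the share of usage spent on data, voice and SMS. It then recommends the plan whose profile has the highest cosine similarity with the customer's. The plan names and profiles are invented for the example.

```python
from math import sqrt


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two usage-profile vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend_plan(usage: list[float], plans: dict[str, list[float]]) -> str:
    """Return the plan whose usage profile is most similar to the customer's."""
    return max(plans, key=lambda name: cosine_similarity(usage, plans[name]))


# Hypothetical profiles: share of usage on [data, voice, SMS].
plans = {
    "data_heavy": [0.80, 0.10, 0.10],
    "voice_heavy": [0.10, 0.80, 0.10],
    "balanced": [0.34, 0.33, 0.33],
}
print(recommend_plan([0.70, 0.20, 0.10], plans))  # data_heavy
```

Using usage shares rather than raw gigabytes and minutes keeps one large-valued feature from dominating the similarity score.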

References:

Allied Market Research: Global AI in telecom market forecast to reach $38.8 by 2031 with CAGR of 41.4% (from 2022 to 2031)

The case for and against AI in telecommunications; record quarter for AI venture funding and M&A deals

Global AI in Telecommunication Market at CAGR ~ 40% through 2026 – 2027

SK Telecom inspects cell towers for safety using drones and AI

Cybersecurity threats in telecoms require protection of network infrastructure and availability

Arista Networks unveils cloud-delivered, AI-driven network identity service

At the RSA Conference today, Arista Networks announced a cloud-delivered, AI-driven network identity service for enterprise security and IT operations. Based on Arista’s flagship CloudVision® platform, Arista Guardian for Network Identity (CV AGNI™) expands Arista’s zero trust networking approach to enterprise security. CV AGNI helps to secure IT operations with simplified deployment and cloud scale for all enterprise network users, their associated endpoints, and Internet of Things (IoT) devices.

“Proliferation of IoT devices in the healthcare network creates a huge management and security challenge for our IT and security operations. The ease of securely onboarding devices on the network by CV AGNI and its integration with Medigate by Claroty for device profiling greatly simplifies this problem for a healthcare network,” said Aaron Miri, CIO of Baptist Healthcare.

AI-Driven Network Identity brings Simplicity and Security at Scale

While enterprise networks have seen massive transformation in recent years with the adoption of cloud and the acceleration of a post-pandemic, perimeter-less enterprise, Network Access Control (NAC) solutions have changed little for decades. Traditional NAC solutions continue to suffer from the complexity of on-premises deployment and administration and have been unable to adapt to the explosion of SaaS-based identity stores, users, devices and their associated profiles across the enterprise.

CloudVision AGNI takes a novel approach to enterprise network identity management. Built on a modern, cloud-native microservices architecture, the CV AGNI solution leverages AI/ML to greatly simplify the secure onboarding and troubleshooting for users and devices and the management of ever-expanding security policies.

CV AGNI is based on Arista’s foundational network data lake (NetDL) architecture and leverages AVA™ (Autonomous Virtual Assist) for a conversational interface that removes the complexity inherent in managing network identity with a traditional NAC solution. AVA codifies real-world network and security operations expertise and combines supervised and unsupervised ML models in an ‘Ask AVA’ service, a chat-like interface for configuring, troubleshooting and analyzing enterprise security policies and device onboarding. CV AGNI also adds user context to NetDL, greatly simplifying the integration of device and user information across Arista’s products and third-party systems.

CloudVision AGNI delivers key attributes from client to cloud across the cognitive enterprise:

- Simplicity: CV AGNI is a cloud service that eliminates the complexity of planning and scaling the compute resources for an on-premises solution. Administrative actions take a fraction of the time compared to a traditional NAC solution. It also natively integrates with industry-leading identity stores.

- Security: CV AGNI leapfrogs legacy NAC solutions by redefining and greatly simplifying how enterprise networks can be secured and segmented by leveraging user and device context in the security policies.

- Scale: With a modern microservices-based architecture, the CV AGNI solution scales elastically with the growing needs of any enterprise.

CloudVision Delivers Network Identity as-a-Service

Based on the CloudVision platform, CV AGNI delivers network identity as a service to any standards-based wired or wireless network.

CloudVision AGNI’s key features include the following:

- User self-service onboarding for wireless with per-user unique pre-shared keys (UPSK) and 802.1X digital certificates.

- Certificate management with a cloud-native public key infrastructure (PKI).

- Enterprise-wide visibility of all connected devices. Devices are discovered, profiled and classified into groups for single-pane-of-glass control.

- Security policy enforcement that goes beyond the traditional inter-group macro-segmentation and includes intra-group micro-segmentation capabilities when combined with Arista networking platforms through VLANs, ACLs, Unique-PSK and Arista MSS-Group techniques.

- AI-driven network policy enforcement, based on AVA, that responds to behavioral anomalies. When Arista NDR detects a threat, it works with CV AGNI to quarantine the device or reduce its level of access.
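The distinction between inter-group macro-segmentation, intra-group micro-segmentation and threat-triggered quarantine can be illustrated with a toy policy model. This is a hypothetical sketch, not CV AGNI's data model or API: the classes, group names and the `quarantine` hook are invented for illustration, and real enforcement happens in the network through VLANs, ACLs and similar mechanisms.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    mac: str
    group: str  # group assigned after profiling, e.g. "cameras"
    quarantined: bool = False


@dataclass
class SegmentationPolicy:
    # Macro-segmentation: (source group, destination group) pairs allowed to talk.
    inter_group: set = field(default_factory=set)
    # Micro-segmentation: groups whose members may talk to one another.
    intra_group: set = field(default_factory=set)

    def allows(self, src: Device, dst: Device) -> bool:
        # A quarantined endpoint loses access regardless of its group rules.
        if src.quarantined or dst.quarantined:
            return False
        if src.group == dst.group:
            return src.group in self.intra_group
        return (src.group, dst.group) in self.inter_group


def quarantine(device: Device) -> None:
    """Hypothetical hook a threat detector would call on a behavioral anomaly."""
    device.quarantined = True


policy = SegmentationPolicy(inter_group={("cameras", "recorders")}, intra_group={"recorders"})
cam_a = Device("00:00:5e:00:53:01", "cameras")
cam_b = Device("00:00:5e:00:53:02", "cameras")
nvr = Device("00:00:5e:00:53:10", "recorders")
print(policy.allows(cam_a, nvr))    # True: cameras may reach recorders
print(policy.allows(cam_a, cam_b))  # False: camera-to-camera traffic is micro-segmented
quarantine(cam_a)
print(policy.allows(cam_a, nvr))    # False: quarantine overrides the group rule
```

Checking quarantine first mirrors the idea that a detected threat should cut or reduce access no matter which group the device was profiled into.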

Tailored for Multi-vendor Integration

CloudVision AGNI leverages cognitive context from third-party systems, including solutions for mobile device management, endpoint protection, and security information and event management. This greatly simplifies the identification and onboarding process and application of segmentation policies. Examples include:

- Endpoint Management: Medigate by Claroty, CrowdStrike XDR, Palo Alto Cortex XDR

- Identity Management: Okta, Google Workspace, Microsoft Azure, Ping Identity and OneLogin

- MDM: Microsoft Intune, JAMF

- SIEM: Splunk

- Networking devices: Multi-vendor interoperability in addition to Arista platforms

Availability

CV AGNI is integrated into Arista CloudVision to provide a complete identity solution. CV AGNI is in trials now, with general availability expected in Q2 2023.

Visit us at booth #1443 at RSA. Learn more about AI-driven network identity at Arista’s webinar on May 18. For more insight on this announcement, read Jayshree Ullal’s blog.

About Arista

Arista Networks is an industry leader in data-driven, client-to-cloud networking for large data center, campus and routing environments. Arista’s award-winning platforms deliver availability, agility, automation, analytics and security through an advanced network operating stack. For more information, visit www.arista.com.

Competing Product:

SailPoint’s AI driven Identity Security Platform

References:

https://www.arista.com/en/company/news/press-release/17244-pr-20230424

https://www.sailpoint.com/platform/?campaignid=11773644133

Arista’s WAN Routing System targets routing use cases such as SD-WANs

AT&T realizes huge value from AI; will use full suite of NVIDIA AI offerings

Executive Summary:

AT&T Corp. and NVIDIA today announced a collaboration in which AT&T will continue to transform its operations and enhance sustainability by using NVIDIA-powered AI for processing data, optimizing service-fleet routing and building digital avatars for employee support and training.

AT&T is the first telecommunications provider to explore the use of a full suite of NVIDIA AI offerings. This includes enhancing its data processing using the NVIDIA AI Enterprise software suite, which includes the NVIDIA RAPIDS Accelerator for Apache Spark; enabling real-time vehicle routing and optimization with NVIDIA cuOpt; adopting digital avatars with NVIDIA Omniverse Avatar Cloud Engine and NVIDIA Tokkio; and utilizing conversational AI with NVIDIA Riva.

“We strive each day to deliver the most efficient global network, as we drive towards net zero emissions in our operations,” said Andy Markus, chief data officer at AT&T. “Working with NVIDIA to drive AI solutions across our business will help enhance experiences for both our employees and customers.” He said it’s AT&T’s goal to make AI part of the fabric of the company, to have “all parts of the business leveraging AI and creating AI” rather than limit its use to creation of AI by its specialist data scientists.

“Industries are embracing a new era in which chatbots, recommendation engines and accelerated libraries for data optimization help produce AI-driven innovations,” said Manuvir Das, vice president of Enterprise Computing at NVIDIA. “Our work with AT&T will help the company better mine its data to drive new services and solutions for the AI-powered telco.”

The Data Dilemma:

AT&T, which has pledged to be carbon neutral by 2035, has instituted broad initiatives to make its operations more efficient. A major challenge is optimizing energy consumption while providing network infrastructure that delivers data at high speeds. AT&T processes more than 590 petabytes of data on average a day. That is the equivalent of about 6.5 million 4K movies or more than 8x the content housed in the U.S. Library of Congress if all its collections were digitized.
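As a rough sanity check on these comparisons, assuming decimal units (1 PB = 10^6 GB), the figures imply about 91 GB per 4K movie and a fully digitized Library of Congress of under roughly 74 PB. These are back-of-the-envelope values derived from the numbers above, not figures stated by AT&T or NVIDIA.

```latex
\begin{aligned}
\frac{590\ \text{PB}}{6.5 \times 10^{6}\ \text{movies}}
  &= \frac{590 \times 10^{6}\ \text{GB}}{6.5 \times 10^{6}\ \text{movies}}
  \approx 91\ \text{GB per movie} \\
\frac{590\ \text{PB}}{8} &\approx 74\ \text{PB}
\end{aligned}
```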

Telecoms aiming to reduce energy consumption face challenges across their operations. Within networks, the radio access network (RAN) consumes 73% of energy, while core network services, data centers and operations use 13%, 9% and 5%, respectively, according to the GSMA, a mobile industry trade group.
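The GSMA shares sum to 100%, so they act as weights: cutting the energy of component i by a fraction r_i lowers total network energy by s_i r_i. Using a purely hypothetical 10% efficiency gain, the same improvement is worth about eight times more in the RAN than in data centers, which is why RAN efficiency dominates operators' energy plans.

```latex
\begin{aligned}
E_{\text{total}} &= E_{\text{RAN}} + E_{\text{core}} + E_{\text{DC}} + E_{\text{ops}},
  \qquad s = (0.73,\ 0.13,\ 0.09,\ 0.05),\quad \textstyle\sum_i s_i = 1 \\
\frac{\Delta E_{\text{total}}}{E_{\text{total}}} &= s_i\, r_i \\
r_{\text{RAN}} = 0.10 &\;\Rightarrow\; 0.73 \times 0.10 = 7.3\%\ \text{of total network energy} \\
r_{\text{DC}} = 0.10 &\;\Rightarrow\; 0.09 \times 0.10 = 0.9\%\ \text{of total network energy}
\end{aligned}
```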

AT&T first adopted NVIDIA RAPIDS Accelerator for Apache Spark to capitalize on energy-efficient GPUs across its AI and data science pipelines. This helped boost its operational efficiency across everything from training AI models and maintaining network quality and optimization, to reducing customer churn and improving fraud detection.

On the data and AI pipelines targeted with Spark-RAPIDS, AT&T saves about half of its cloud computing spend and sees faster performance, while also reducing its carbon footprint.
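For readers wondering what "adopting the RAPIDS Accelerator for Apache Spark" involves in practice, the switch is largely a matter of Spark configuration rather than code changes. The sketch below collects the core properties; the property names follow the RAPIDS Accelerator and Spark 3 GPU-scheduling documentation, while the helper function and its resource amounts are hypothetical and would need tuning per cluster. It is not AT&T's configuration. The rapids-4-spark jar must also be on the classpath, and most cluster managers additionally need a GPU discovery script, which is deployment-specific and omitted here.

```python
def rapids_spark_conf(gpus_per_executor=1, tasks_per_gpu=4):
    """Spark properties that enable the RAPIDS Accelerator for Apache Spark.

    Hypothetical helper: the property names are real Spark/RAPIDS settings,
    but the default resource amounts are placeholders to tune per cluster.
    """
    return {
        # Load the RAPIDS SQL plugin so supported SQL/DataFrame operators run on GPUs.
        "spark.plugins": "com.nvidia.spark.SQLPlugin",
        "spark.rapids.sql.enabled": "true",
        # Spark 3 resource scheduling: whole GPUs per executor...
        "spark.executor.resource.gpu.amount": str(gpus_per_executor),
        # ...and the fraction of one GPU each task claims, so tasks_per_gpu tasks share a GPU.
        "spark.task.resource.gpu.amount": str(1 / tasks_per_gpu),
    }


conf = rapids_spark_conf()
for key, value in conf.items():
    # Each pair can be passed to spark-submit as --conf key=value,
    # or to SparkSession.builder.config(key, value) in PySpark.
    print(f"--conf {key}={value}")
```

Because the plugin falls back to the CPU for operators it does not support, existing Spark jobs generally keep running unchanged; the GPU speedup applies to the portions the plugin can accelerate.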

Enhanced Field Dispatch Services:

AT&T, which operates one of the largest field dispatch teams to service its customers, is currently testing NVIDIA cuOpt software to enhance its field dispatch capabilities to handle more complex technician routing and optimization challenges. AT&T has a fleet of roughly 30,000 vehicles with over 700 million options in how they can be dispatched and routed. The operator would run dispatch optimization algorithms overnight to get plans for the next day, but it took too long and couldn’t account for the realities that would crop up the next morning: Workers calling in sick, vehicles breaking down, and so on.

“It wasn’t as good at noon as it was at 8 in the morning,” Markus said. Using NVIDIA GPUs and software, he said, AT&T was able to speed up its processing 60x so that it could run the scenario in near-real time, as often as it needed, and complete more jobs in a day (as well as reduce its cloud-related costs by 40%).

Routing requires trillions of computations to account for a variety of factors, from traffic and weather conditions to a customer’s change of plans or a technician’s skill level, since a complicated job might require an additional truck roll.

In early trials, cuOpt delivered solutions in 10 seconds, while the same computation on x86 CPUs took 1,000 seconds. The results yielded a 40% reduction in cloud costs and allowed technicians to complete more service calls each day. NVIDIA cuOpt allows AT&T to run nearly continuous dispatch optimization by combining NVIDIA RAPIDS with local search heuristics and metaheuristics such as tabu search.
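Tabu search is a classic local-search metaheuristic: at each step it moves to the best neighboring solution, even a worse one, while temporarily forbidding ("tabu") recently used moves so the search does not cycle back into the same local optimum. The sketch below applies it to a toy single-vehicle routing problem using pairwise swaps of the visiting order. It is a generic textbook illustration with hypothetical coordinates and parameters, not cuOpt's API or algorithm, and it runs sequentially on a CPU rather than evaluating neighbors in parallel on GPUs.

```python
from itertools import combinations
from math import dist


def route_length(depot, stops, order):
    """Total travel distance depot -> stops in `order` -> depot (straight-line)."""
    path = [depot] + [stops[i] for i in order] + [depot]
    return sum(dist(a, b) for a, b in zip(path, path[1:]))


def tabu_search(depot, stops, iterations=100, tenure=5):
    """Tabu search over pairwise swaps of the visiting order of a single vehicle."""
    current = list(range(len(stops)))
    best, best_len = current[:], route_length(depot, stops, current)
    tabu_until = {}  # swapped stop pair -> last iteration at which it is forbidden
    for it in range(iterations):
        chosen = None
        for i, j in combinations(range(len(current)), 2):
            neighbor = current[:]
            neighbor[i], neighbor[j] = neighbor[j], neighbor[i]
            length = route_length(depot, stops, neighbor)
            move = tuple(sorted((current[i], current[j])))
            # Aspiration: a tabu move is still allowed if it beats the best route so far.
            if tabu_until.get(move, -1) >= it and length >= best_len:
                continue
            if chosen is None or length < chosen[0]:
                chosen = (length, neighbor, move)
        if chosen is None:
            break  # every move is tabu (or fewer than two stops)
        # Move to the best admissible neighbor even if it is worse than the current route;
        # this is what lets tabu search climb out of local optima.
        length, current, move = chosen
        tabu_until[move] = it + tenure
        if length < best_len:
            best, best_len = current[:], length
    return best, best_len


depot = (0, 0)
stops = [(2, 0), (0, 2), (2, 2), (1, 0)]  # hypothetical job locations
order, length = tabu_search(depot, stops)
print(order, length)  # expected length 8.0, the perimeter of the 2x2 square
```

Real dispatch problems add many vehicles, time windows and technician skills, which multiplies the neighborhood size; that growth is what makes GPU-parallel evaluation of candidate moves attractive.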

Pleasing Customers, Speeding Network Design:

As part of its efforts to improve productivity for its more than 150,000 employees, AT&T is moving to adopt NVIDIA Omniverse ACE and NVIDIA Tokkio, cloud-native AI microservices, workflows and application frameworks for developers to easily build, customize and deploy interactive avatars that see, perceive, intelligently converse and provide recommendations to enhance the customer service experience.

For conversational AI, the carrier also uses the NVIDIA Riva software development kit and is examining other customer service and operations use cases for digital twins and generative AI.

AT&T also is taking advantage of fast 5G and its fiber network to deliver NVIDIA GeForce NOW™ cloud gaming at 120 frames per second on mobile and 240 FPS at home.

Markus added that AI-powered NVIDIA tools are helping AT&T serve its customers better across channels, from sales recommendations to customer care, and that its internal processes also leverage AI to help employees work more efficiently. The company is embracing NVIDIA’s AI solutions as a foundation for developing interactive, intelligent customer service avatars.

In the past 12 months, AI has created more than $2.5 billion in value for AT&T. About half of that came via Markus’ team; the other half came from what he calls “citizen data scientists” across the company, who have been able to leverage AI to solve problems in their respective areas, whether that was marketing, network operations, software development or finance.

“As we mobilize that citizen data-scientist across the company, we’re doing that via a self-service platform that we call AI-as-a-service, where we’re bringing a unified experience together. But behind the experience, we’re allowing those users to leverage AI in a curated way for their use case,” he explained. “So they bring their subject matter expertise to the problem that they’re trying to solve, and we … enable the technology [and processes for them to create] robust AI. But we also govern it with some guardrails, so the AI we’re creating is ethical and responsible.”

In AT&T’s automation development, 92% of its automation is created by employees via self-service to solve a problem. “The goal is that over time, we bake in incredible functionality like Nvidia, so that AI-as-a-service is delivering that self-service functionality so that we do most of our routine AI creation via the platform, where you don’t have to have a professional data scientist, a code warrior, to be your sherpa,” Markus concluded.

References:

https://nvidianews.nvidia.com/news/at-t-supercharges-operations-with-nvidia-ai