AT&T asks FCC to prohibit 6 GHz band for unlicensed use

AT&T is asking the Federal Communications Commission (FCC) to avoid any expansion of the 6 GHz band to unlicensed users, noting that roughly 25% of the links in the band support public safety and critical infrastructure licensees. AT&T said that band contains about 100,000 microwave links, many of which are carrying critical voice and data traffic, including for the nation’s first responders. AT&T added that introducing unlicensed devices would cause interference at the receive antennas of those microwave links, thereby reducing their reliability.

The telco estimates 27% of the links support utilities, making the upper and lower 6 GHz bands key in supporting the nation’s critical infrastructure. It also said that maintaining long-haul and high-reliability microwave links will be critical for 5G and other advanced services.

AT&T officials met last week with FCC staff to discuss the company’s concerns about a proposed expansion of the 5.925-7.125 GHz band to include unlicensed use. AT&T said it will be relying on a significant number of existing and newly developed 6 GHz fixed links in support of FirstNet [1], where it’s contractually obligated to provide high levels of reliability.

Note 1. FirstNet is the first nationwide U.S. wireless network dedicated to public safety. AT&T won the contract to provide the wireless network for FirstNet last year.

AT&T’s letter to the FCC was made public in an ex parte filing.

References:

https://ecfsapi.fcc.gov/file/10319087261781/6GHz-ExParte031618.pdf

https://www.fiercewireless.com/wireless/at-t-says-6-ghz-band-key-for-firstnet-5g

Will the FCC Amend Rules for Small Cells on March 22nd? 2 Cities Express Concern!

The FCC is due to vote on March 22, 2018 on exempting small cell installations from certain federal reviews. A CTIA-commissioned analysis by Accenture Strategy found that when such reviews are required, almost a third of the cost of next-generation wireless deployments goes to federal regulatory reviews that the FCC now proposes to eliminate as unnecessary. These reviews cost industry $36 million in 2017, and that cost is expected to increase more than six-fold in 2018.

Backgrounder: To keep up with increasing demand for wireless data and build out 5G networks, the wireless industry needs to deploy hundreds of thousands of modern wireless antennas – small cells – in the next few years. Small cell deployments will escalate rapidly from roughly 13,000 deployed in 2017 to over 800,000 cumulatively deployed by 2026, according to the analysis.

Small cells are similar in size to a pizza box and can be deployed on streetlights or utility poles in about one hour. However, under rules that were designed decades ago for 200-foot cell towers, getting necessary federal and local permissions can take over a year and require multiple, duplicative reviews including federal environmental and historic preservation reviews.

The FCC’s new rules would modernize the historic and environmental regulatory requirements for wireless deployments, exclude small cells from certain federal regulatory hurdles, and adopt a “shot clock” for FCC review of environmental assessments when required. The analysis found that the U.S. will see a 550% increase in small cells year over year in 2018, underscoring the need for FCC action now to jumpstart more broadband investment.

………………………………………………………………………………………………………………………………………….

From the March 1, 2018 FCC fact sheet:

Background:

As part of the FCC’s efforts in the Wireless Infrastructure docket to streamline the deployment of next-generation wireless facilities, the agency has been attempting to identify instances in which regulatory review imposes needless burdens and slows infrastructure deployment. As part of that effort, the Commission has consulted extensively with Tribal Nations, intertribal organizations, state and local historic preservation officers, wireless carriers, network builders, relevant federal agencies, and many others to determine the steps the Commission needs to take to enable the deployment of 5G networks throughout America.

This Order focuses on the types of deployments that are subject to National Historic Preservation Act (NHPA) and National Environmental Policy Act (NEPA) review, and it reexamines and revises Commission rules and procedures for such deployments.

What the Second Report and Order Would Do:

- Amend Commission rules to clarify that the deployment of small wireless facilities by private parties does not constitute either a “federal undertaking” within the meaning of NHPA or a “major federal action” under NEPA, meaning that neither statute’s review process would be mandated for such deployments. Small wireless facilities deployments would continue to be subject to currently applicable state and local government approval requirements.

- Clarify and make improvements to the process for Tribal participation in Section 106 historic preservation reviews.

- Remove the requirement that applicants file Environmental Assessments (EAs) solely due to the location of a proposed facility in a floodplain, as long as certain conditions are met.

- Establish timeframes for the Commission to act on EAs.

………………………………………………………………………………………………………………………………………

However, San Jose, CA, in the heart of Silicon Valley, and Lincoln, NE, an innovative university and capital city, both could be profoundly affected if the FCC decides to “cut red tape” with modifications to small cell antenna deployment rules. City officials argue the regulatory process serves an important purpose. “By removing the historic and environmental review, and taking away local control, it won’t allow cities to make sure that 5G is deployed in an equitable manner for citizens,” said Shireen Santosham, the chief innovation officer for the city of San Jose. San Jose is the tenth largest city in the U.S. and the largest in Silicon Valley. It has a median income of over $90,000, but approximately 9.5 percent of the city’s 1 million residents live below the poverty line. “Currently there are more than 100,000 people in our city who do not have broadband,” she said. “Small (5G) cells will not solve the digital divide; we need to know that there will be service to rural and low-income areas,” Santosham added.

Service Provider VoIP and IMS -12% YoY in 2017; VoLTE Deployments Slowing

Following are highlights from the first quarter 2018 edition of the IHS Markit Service Provider VoIP and IMS Equipment and Subscribers Market Tracker, which includes data for the quarter ended December 31, 2017. Author is IHS Markit analyst Diane Myers.

Global service provider voice over Internet Protocol (VoIP) and IP multimedia subsystem (IMS) product revenue fell to $4.5 billion in 2017, a decline of 12 percent year over year. Overall worldwide revenue is forecast to decline at a compound annual rate of 3 percent, falling to $4 billion in 2022. This decline is due to the slowing of voice over LTE (VoLTE) network deployments and overall spending falling into a steady pattern. Downward pricing, driven by continued competitive factors and large deal sizes in India, China and Brazil, is also dampening growth.
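As a rough sanity check on the forecast, the compound annual rate implied by the two rounded revenue figures quoted above can be computed directly. The sketch below assumes 2017 as the base year and a five-year horizon to 2022; the function name is illustrative and does not come from the IHS Markit report. Because both endpoints are rounded, the result (about -2.3 percent a year) only approximates the roughly 3 percent annual decline cited.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded figures from the text: $4.5 billion (2017) and $4 billion (2022).
# Assumption: 2017 is the base year, giving a five-year horizon.
implied_rate = cagr(4.5, 4.0, 2022 - 2017)
print(f"Implied CAGR from rounded endpoints: {implied_rate:.1%}")
```

A negative result indicates a shrinking market; the gap between this estimate and the published figure presumably reflects unrounded revenue data in the tracker itself.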

“The big story over the past four years has been mobile operators and the transition to VoLTE, which has been the number-one driver of VoIP and IMS market growth, along with voice over Wi-Fi (VoWiFi) and other mobility services,” said Diane Myers, senior research director, VoIP, UC, and IMS, IHS Markit. “However, after initial VoLTE network builds are completed, sales growth drops off, as operators launch services and fill network capacity,” she added.

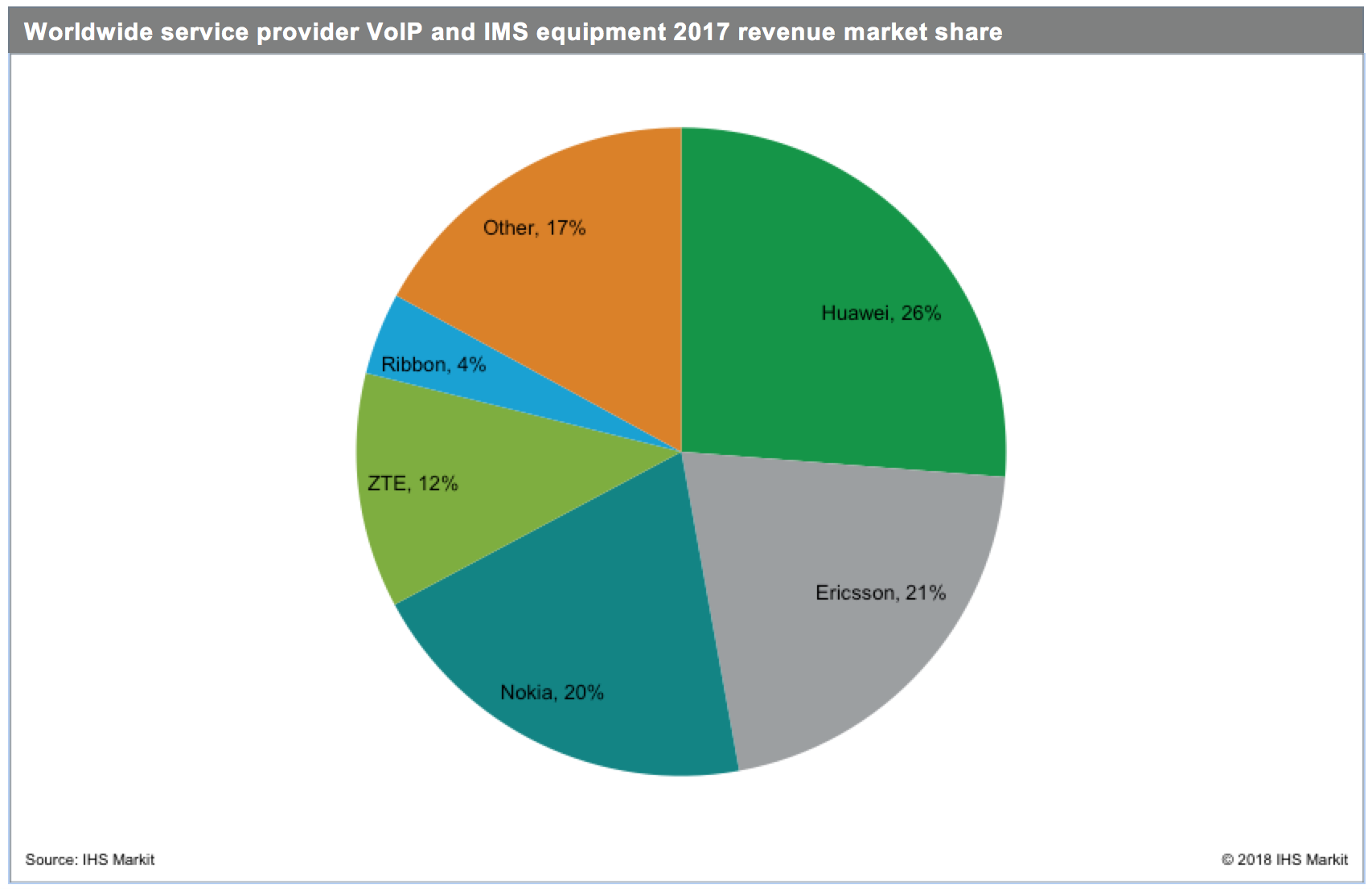

Huawei was the standout vendor in the VoIP and IMS market, with 26 percent of worldwide revenue in 2017. Ericsson ranked second, with 21 percent, followed by Nokia, ZTE and Ribbon Communications.

Service provider VoIP and IMS market highlights

- As large VoLTE projects in India and China continued to expand, the Asia-Pacific region ranked first in global revenue in 2017, accounting for 37 percent of the market. Spending from VoLTE projects in Asia-Pacific and in Caribbean and Latin America continues, but it is not enough to move the worldwide market back into growth territory, primarily due to price compression in both regions.

- As of January 2018, 119 operators had launched commercial VoLTE services, with more coming every year. However, new launches are slowing. Most VoLTE launches in 2017 occurred in Europe. There was also some expansion in Chinese provinces, due to growth from China Mobile; in Brazil, with Vivo; and in India, with Bharti Airtel.

Service Provider VoIP and IMS Equipment and Subscribers report synopsis

The quarterly Service Provider VoIP and IMS Equipment and Subscribers Market Tracker from IHS Markit provides worldwide and regional vendor market share, market size, forecasts through 2022, analysis and trends for trunk media gateways, SBCs, media servers, softswitches, voice application servers, HSS, CSCF and IM/presence servers.

New Southeast Asia-Japan 2 Cable to Link 9 Asian Countries

A consortium of Asia-Pacific network operators has contracted NEC Corp. to build a 10,500km subsea cable which will connect Singapore, Thailand, Cambodia, Vietnam, Hong Kong, Taiwan, mainland China, Korea and Japan. The Southeast Asia-Japan 2 cable (SJC2) will be built and operated by a consortium including China Mobile International, Chunghwa Telecom, Chuan Wei, Facebook, KDDI, Singtel, SK Broadband and VNPT. The eight fiber pair cable will have a total capacity of 144Tbps. Construction of the cable is expected to be completed by the fourth quarter of 2020.

“The construction of SJC2 cable is timely and will provide additional bandwidth between Southeast and North Asia, whose combined population of more than two billion are driving demand for data as their economies undergo digital transformation,” Singtel VP for carrier services Ooi Seng Keat said.

“As a new generation multimedia superhighway, the SJC2 can play a pivotal role in facilitating economic cooperation and digital innovation among the countries in this region. The construction of this cable reinforces Singtel’s position as the leading data services provider in the region and strengthens Singapore as a global business and info-communications hub,” Keat added.

China Mobile said in a statement it would be solely responsible for the landing stations in China and Hong Kong, with SJC2 complementing China’s Belt and Road Initiative, and this cable makes up one of seven investments the company has made into subsea cables.

Singtel has been investing in augmenting its international connectivity, including through the joint construction of the 9,000km INDIGO subsea cable linking Singapore with Perth and Sydney in Australia, and its involvement in the Southeast Asia-Middle East-Western Europe 5 (SEA-ME-WE 5) cable, which was completed in December 2016.

………………………………………………………………………………………………………………………………………….

In May, NEC demonstrated speeds of 50.9Tbps across subsea cables of up to 11,000km on a single optical fibre through the use of C+L-band erbium-doped optical fibre amplifiers (EDFA), amounting to speeds of 570 petabits per second-kilometre.
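The capacity-distance figure is simply throughput multiplied by span length. A minimal sketch of that arithmetic follows, using the rounded values quoted above; the function name is illustrative only. With these rounded inputs the product comes out near 560 petabits per second-kilometre, slightly below the 570 quoted, which suggests the demonstration distance was a little over 11,000km (an inference, not something the source states).

```python
def capacity_distance_product(capacity_tbps: float, distance_km: float) -> float:
    """Return the capacity-distance product in petabits per second-kilometre."""
    tbps_per_pbps = 1000  # 1 Pb/s = 1,000 Tb/s
    return capacity_tbps * distance_km / tbps_per_pbps


# Rounded figures from the text: 50.9 Tb/s over up to 11,000 km.
product = capacity_distance_product(50.9, 11_000)
print(f"Capacity-distance product: {product:.1f} Pb/s-km")
```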

To hit those speeds, NEC researchers developed a multi-level, linear, and non-linear algorithm to obtain an optimised 32 quadrature amplitude modulation (QAM) or opt32 constellation with a higher limit for non-linear capacity specifically for transmission across subsea cables.

NEC announced the completion of the 54Tbps Asia-Pacific Gateway cable in November 2016 between China, Hong Kong, Japan, South Korea, Malaysia, Taiwan, Thailand, Vietnam, and Singapore.

The cable is owned by China Telecom, China Unicom, China Mobile, NTT Communications, KT Corporation, LG Uplus, StarHub, Chunghwa Telecom, CAT, Global Transit Communications, Viettel, and VNPT.

References:

https://www.telecomasia.net/content/southeast-asia-japan-2-cable-link-9-markets

http://www.zdnet.com/article/nec-signed-to-build-southeast-asia-japan-2-cable/

………………………………………………………………………………………………………………………..

Related subsea cable construction:

Construction begins on Japan-Guam-Australia cable

https://www.telecomasia.net/content/construction-begins-japan-guam-australia-cable

New Partnerships Aim to Simplify Global IoT Connectivity & Deployment

Two new partnerships promise to increase global IoT connectivity.

1. Chunghwa Telecom and Tata Communications:

India’s global network operator Tata Communications is partnering with Taiwan’s Chunghwa Telecom to bring global connectivity to consumer electronics and Industrial Internet of Things devices. In a joint press release, the companies said the partnership will enable Chunghwa Telecom to tap into additional revenues by connecting IoT devices through Tata Communications’ MOVE-IoT Connect platform, which enables IoT devices to be deployed quickly, both locally and internationally, by leveraging Tata Communications’ ecosystem of mobile network operators worldwide.

The Taiwanese electronics industry output is expected to be worth $228 billion (NT$6.65 trillion) in 2018, and the wearables market grew at double digits in both volume and value terms in 2017. Chunghwa Telecom looks to capitalize on this growth through the existing IoT roaming services the operator provides.

One of Chunghwa Telecom’s first customers for Tata Communications MOVE – IoT Connect is TaiDoc Technology, which manufactures premium medical devices to improve people’s health and quality of life. TaiDoc will use Tata Communications MOVE – IoT Connect to extend the reach of its devices to countries such as China, Thailand and the U.S.

“Our aim is to serve three million IoT devices in the next three years, and Tata Communications will be one of our key partners to fulfil this ambition,” said Ming-Shih Chen, President of Mobile Business Group, Chunghwa Telecom. “Tata Communications MOVE™ will give our customers’ IoT services borderless network coverage across 200 countries, ensuring a consistently high-quality and reliable user experience. At the same time, we’re able to extend our business easily by being part of the Tata Communications’ MOVE™ ecosystem of mobile network operators. It’s a win-win for us.”

“IoT devices require borderless, secure and scalable connectivity to enable the capture, movement and management of information worldwide. Cellular connectivity is an effective foundation for IoT services, but the problem is that today’s mobile networks are inherently local – there is no such concept as a global mobile network,” said Anthony Bartolo, chief product officer at Tata Communications.

“We want to change that. We’re excited to work with Chunghwa Telecom and other leading mobile network operators around the world to build a truly global ecosystem of connectivity, and spur IoT adoption by businesses worldwide.”

References:

https://www.telecomasia.net/content/chunghwa-telecom-tata-com-ink-deal-global-iot-connectivity

………………………………………………………………………………………………………………………………

2. China Unicom and Telefonica:

China Unicom has signed an agreement with Spain’s Telefonica designed to simplify and accelerate IoT deployments for their respective enterprise customers globally. The partnership will give the two operators access to each other’s networks and allow them to provide IoT products and services across several key markets through a single global SIM card.

The agreement will also consolidate their respective positions in IoT in Europe, Latin America and China, three of the most important IoT markets in the world, the companies said in a joint statement. Enterprise customers of Telefonica and China Unicom will be able to easily and seamlessly deploy IoT products and services in these three regions with a single global IoT SIM card. By using a unified IoT connectivity management platform, they will be able to control connections globally and to localize IoT SIMs once they reach a certain geography (subscription swap), the pair said.

“We are leveraging IoT technologies to accompany our customers on their digital transformation journey, where IoT has a very important role to play. Telefónica IoT is named a Leader in Gartner’s Magic Quadrant for Managed M2M Services worldwide, maintaining that position we have held since inception four years ago…..Simplifying massive IoT deployments is key and therefore we are strengthening our ecosystem of partners. Our partnership with China Unicom will strengthen Telefónica’s capability to meet our global customer needs,” Telefonica chief IoT officer Vicente Muñoz said.

Enterprise customers will be able to enjoy these new IoT capabilities later this year, Muñoz added.

In related news, China Mobile has signed a deal with UK’s Vodafone, under which the pair have agreed to resell each other’s IoT services for the first time. The agreement, announced at the recent Mobile World Congress in Barcelona, will see each company share new IoT project opportunities with their partners.

Vodafone customers will be given access to China Mobile IoT SIMs for deployments in China, while China Mobile customers wanting to offer IoT-enabled products outside of China will do so via Vodafone’s Global IoT SIM and management platform.

Vodafone will manage all elements of the operational model for its customers including on-boarding, SIM and logistics as well as billing and support. The company will act as a single point of contact for its enterprise IoT customers wanting to move into China.

China Mobile will discuss the possibility for IoT sales opportunities with Vodafone for companies wanting to expand outside of China.

Dorothy Lin, China Mobile International’s head of mobile business partnership, said the collaboration with Vodafone will allow the Chinese mobile giant to offer customers the greatest possible reach of IoT services.

“Having reached over 200 million IoT connections last year, China Mobile aims to increase the connections by 60% in 2018,” Lin noted.

………………………………………………………………………………………………………………………………………..

References:

https://www.telefonica.com/en/web/press-office/-/telefonica-and-china-unicom-partner-in-iot

https://www.telecomasia.net/content/china-unicom-telefonica-simplify-iot-deployment-enterprises

TrendForce: Small Cell Deployment to reach 2.838M units in 2018; 4.329M units in 2019 for annual growth of 52.5%

Global mobile operators will use small cells to expand indoor coverage and increase network capacity, improving the quality of telecommunication. Small cells can divert 80 percent of data traffic in crowded areas. Increased hotspot capacity will make up for areas not covered by macro cells (both indoor and outdoor) to improve network performance and service quality.

Kelly Hsieh, research director of TrendForce, said small cells will achieve a higher level of integration, allowing for multi-mode, multi-band deployment and the integration of unlicensed spectrum.

TrendForce estimates that global deployments of small cells, fuelled by 5G, will reach 2.838 million units in 2018 and 4.329 million units in 2019, an annual growth of 52.5 percent. Hsieh said that demand for 5G applications will gradually emerge as consensus forms on 5G standards and application scenarios. In particular, small cells are key to 5G because they can support the increasing demand for data performance.
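The 52.5 percent figure follows directly from the two unit counts. A minimal sketch of the year-over-year calculation is shown below; the function name is illustrative and not taken from the TrendForce report.

```python
def yoy_growth(previous: float, current: float) -> float:
    """Year-over-year growth as a fraction, e.g. 0.25 for 25 percent."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return current / previous - 1


# Unit counts from the text, in millions of small cells.
growth = yoy_growth(2.838, 4.329)
print(f"2018 to 2019 growth: {growth:.1%}")
```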

The TrendForce report predicts that small cells will upgrade network performance and improve efficiency through indoor digital deployments. Small cells can carry the large volume of data traffic generated by the Internet of Things (IoT) and can integrate wireless backhaul, reducing investment costs significantly.

China Mobile, Verizon, AT&T and SK Telecom are currently investing in small cell deployments to improve the customer experience, making mobile operators in China, the United States and South Korea the most active. As 5G technology develops, small cells will become key equipment for mobile operators globally.

…………………………………………………………………………………………………………………………………………

Backgrounder on Small Cells (see IEEE reference below):

Small Cells

Small cells are portable miniature base stations that require minimal power to operate and can be placed every 250 meters or so throughout cities. To prevent signals from being dropped, carriers could blanket a city with thousands of these stations. Together, they would form a dense network that acts like a relay team, handing off signals like a baton and routing data to users at any location.

While traditional cell networks have also come to rely on an increasing number of base stations, achieving 5G performance will require an even greater infrastructure. Luckily, antennas on small cells can be much smaller than traditional antennas if they are transmitting tiny millimeter waves. This size difference makes it even easier to stick cells unobtrusively on light poles and atop buildings.

What’s more, this radically different network structure should provide more targeted and efficient use of spectrum. Having more stations means the frequencies that one station uses to connect with devices in its small broadcast area can be reused by another station in a different area to serve another customer. There is a problem, though: The sheer number of small cells required to build a 5G network may make it impractical to set up in rural areas.

References:

https://press.trendforce.com/press/20180314-3076.html

https://spectrum.ieee.org/video/telecom/wireless/5g-bytes-small-cells-explained

How 5G will Transform Smart Cities: Infrastructure, Public Events, Traffic

The infrastructure for 5G is still only beginning—with wider availability not expected until at least 2020—but cities like New York, Las Vegas, Sacramento, Calif., and Atlanta will soon get a chance to preview the promise of 5G this year when Verizon, T-Mobile and Sprint begin rolling out their faster networks in select areas. Using 5G, a city can sense “all sorts of variables across its many areas of interest, be it parking meters, traffic flow, where people are, security issues,” said Ron Marquardt, vp of technology at Sprint.

Mark Hung, an analyst at Gartner, pointed out that while 3G brought web browsing and data communication to the smartphone, 4G greatly enhanced it. And even though towers today can support hundreds or thousands of devices, 5G could help scale the Internet of Things from “hundreds and thousands to hundreds of thousands.”

Here are some of the ways 5G might transform cities over the next few years:

Infrastructure

Telecoms will increasingly weave their way into the infrastructure through 5G. By gathering data from buildings, 5G can help cities understand patterns in electricity usage, leading to lower power consumption across the grid. Those savings could vary greatly. According to a 2017 report by Accenture, smart technology and 5G in a small city with a population of around 30,000 could have a $10 million impact on the power grid and transportation systems. A slightly larger city of 118,000 could see $70 million. Meanwhile, a major metro area—say, Chicago—could see an economic impact of $5 billion.

Private-public partnerships are still in the early stages of being developed. For example, Nokia last month announced a partnership with the Port of Hamburg in Germany and Deutsche Telekom to monitor real-time data to measure water gates, environmental metrics or construction sites.

“I think last year the buzzword was fourth industrial revolution, but for me it still rings true,” Jane Rygaard, head of 5G marketing at Nokia, said about the endless possibilities of a 5G network.

As infrastructure becomes digitized through 5G, some agencies are already investing in understanding a 5G-infused infrastructure to help clients adapt to smarter cities. R/GA’s new venture studio with Macquarie Capital will include tackling how 5G will be tied to emerging technologies like AI and blockchain. R/GA chief technology officer Nick Coronges said the connectivity of 5G goes hand in hand with emerging technologies such as AI and blockchain.

Public events

Verizon, which will test 5G in nearly a dozen cities in 2018, is making its first broadband debut in Sacramento with a 5G wireless network later this year. As part of the rollout, Verizon is placing 5G in stadiums. Lani Ingram, Verizon’s vp for smart communities, sports and IoT platforms, said 5G could also be put to good use in airports, convention centers and other venues.

“The amount of usage of data during sporting events and concerts is only growing,” she said. “We see that every year during the Super Bowl, for example.”

Traffic

Many tout the need for 5G to power self-driving cars. For an autonomous vehicle to smoothly travel through a city, it will need to have low latency that allows it to continuously “see” its surroundings. 5G will allow for smart traffic lights, which connect with cars on the road to improve traffic flow. Carnegie Mellon University and Pittsburgh tested the use of smart traffic lights. The result? A 40 percent reduction in vehicle wait time, a 26 percent faster commute and a 21 percent decrease in vehicle emissions.

A 5G network will also make the roads safer. For example, as a car enters an intersection, a smart traffic light will notify it that a pedestrian has just hit the “walk” button, which will provide drivers more warning. Ambulances will be able to change traffic lights faster to accommodate their route and clear intersections.

“We see more and more of our customers linking smart city and safe city,” Rygaard said, adding that 5G isn’t just for consumers. It will improve their daily lives—whether that’s through safety, energy or countless other ways.

4 U.S. Telcos ask FCC to support 3.5 GHz spectrum under Connect America Fund II

The Federal Communications Commission (FCC) has received a petition from Windstream, CenturyLink, Frontier and Consolidated urging the agency to continue to support census tract license sizes in rural areas. The providers said this would help them deploy 3.5 GHz broadband wireless access technology under the agency’s Connect America Fund (CAF) II.

In a joint FCC filing, these telecom service providers, which are leveraging CAF-II funding to provide 10/1 Mbps rural broadband services, said they are testing and deploying 3.5 GHz-compatible broadband wireless technology in areas where deploying fiber and related facilities is cost prohibitive. By offering neutral access to the 3.5 GHz band in rural areas, these providers say they would be able to accelerate rural broadband rollouts. A key issue for these providers is that Partial Economic Areas (PEAs) aren’t aligned with how rural CAF areas are structured. A recent WISPA study illustrated that rural CAF areas tend to cluster around the edges of PEAs.

“To make these types of rural broadband deployments possible, the FCC must preserve census tract license sizes in rural areas—partial economic areas and even counties would preclude meaningful participation in the band by companies focused on providing broadband in the most rural areas,” the service providers said in the joint filing. “By licensing the 3.5 GHz band in rural areas on a census tract basis, the FCC can help enable faster broadband to more rural Americans.”

The other issue raised in rural areas is the amount of available spectrum. Although there’s a shortage of spectrum in urban areas like Boston and New York City, the service providers say “there is a relative spectrum abundance in rural areas.”

Proving out the economics for broadband deployments is also a challenge. In an urban area, there could be several providers vying for consumers’ and businesses’ attention, but in a rural area service providers rely on subsidies like the CAF-II program to make investments. Finally, the service provider group said that secondary markets are too costly and slow to allow for rural deployments.

“Rural players have not been able to realistically obtain spectrum in other bands,” the providers said. “At the same time, package bidding coupled with census tract license sizes reduces exposure risk for larger companies while promoting competition.”

The providers added that “there should be no concern that carriers are going to ‘cherry-pick’ licenses in rural areas.”

To support service providers expanding rural broadband access, the group proposed that the FCC make additional spectrum available for rural areas. Specifically, the group said that the FCC should consider allocating 80 MHz of spectrum as part of the CAF program.

“80 MHz in CAF CBs would enable carriers to deploy sustained speeds greater than 25/3 or more to over 200 customers per site,” the service provider group said. “Technology advances will allow for faster speeds in the future.”

Frontier is currently rolling out 25 Mbps speeds in some of its rural markets using the 3.5 GHz spectrum and will consider higher speeds as it procures new equipment and spectrum over time.

While it’s going to take time to see how these providers apply broadband wireless to their rural builds, it’s clear that they are showing some commitment to finding new ways to serve markets that have been traditionally ignored.

Ongoing tests, deployments

Seeing the 3.5 GHz band as another tool to reach rural Americans, these providers are in some stage of either testing or deploying broadband wireless.

Frontier, for one, confirmed in September it was testing broadband wireless with plans to deploy it in more areas if it meets its requirements. The service provider is also exploring 3.5 GHz deployments, including as a member of the CBRS Alliance, which is exploring CBRS specifications and spectrum use rules.

Already, the service provider has been making progress with its CAF-II commitments, providing broadband to over 331,000 homes and small businesses in its CAF-eligible areas, and the company has improved speeds to nearly 875,000 additional homes and businesses. The deployments reflect a combination of Frontier capital investment and resources that the FCC has made available through the CAF program.

Perley McBride, CFO of Frontier, told investors during a conference in September 2017 that broadband wireless could be a “good solution” to the deployment challenge “in very rural America[,] and if it works the way [Frontier is] expecting it to work, . . . [Frontier] will deploy more of that next year.”

Windstream is trialing fixed wireless and modeling 3.5 GHz deployments and is also a member of the CBRS Alliance.

CenturyLink is also advancing its CAF-II commitments, reaching over 600,000 rural homes and businesses with broadband over the past two years. While it has not revealed any specific plans yet, CenturyLink has obtained an experimental license for 3.5 GHz spectrum.

“The testing seeks to understand the viability of new technologies in this band that may be useful in providing fixed broadband services,” CenturyLink said in the filing.

References:

https://techblog.comsoc.org/2017/10/09/centurylink-asks-fccs-for-3-4-ghz-fixed-wireless-test/

https://www.agri-pulse.com/articles/10523-new-bill-would-set-up-rural-broadband-task-force

TBR: “5G” Business Case Remains Elusive; Economics Questionable

by Chris Antlitz, TBR

The mobile industry continues to move toward realizing the vision of a hyper-connected, intelligent world and is at the cusp of a major inflection point. 5G, artificial intelligence (AI), cognitive analytics, virtualization, pervasive automation and other new technologies will be deployed at scale over the next decade, and this will have significant implications not only for end users but also for all stakeholders in the global economy.

The mobile industry has significant hope that the 5G era will address key challenges and will lead to revenue generation, stronger positioning against over-the-top (OTT) providers, as well as help communication service providers (CSPs) better handle data traffic growth. This hope appears to be premature, however, as the economics of 5G still do not make sense, evident in the lack of a viable business case for the technology. Said differently, it remains uncertain whether the revenue generation possibilities from 5G will be attractive enough to justify the infrastructure cost to deploy 5G.

With 5G likely several years away from starting to help CSPs address their revenue and OTT challenges, they are expected to remain vigilant in their quest to drive down costs. Herein lies the challenge for the vendor community.

Vendors, especially incumbent vendors, will face ongoing price pressure and significant disruption from new technologies and architectures in areas such as NFV/SDN (virtualization and the white-box threat), SaaS, automation, AI and analytics.

Despite all the talk and hope of what the 5G era will bring, industry trends are moving against the vendor community, with incumbent vendors, particularly hardware-centric vendors, poised to struggle the most. Leading CSPs are focused on significantly reducing the cost of network operations and capex, underscored by a desire to disaggregate the black box and commoditize the hardware layer.

Vendors also face overall lower spend as CSPs shift investments away from LTE now that those networks are pervasively deployed and focus instead on the service layer, where they aim to digitalize themselves and offer innovative services. Other trends that are moving against incumbent vendors include the open sourcing of software, evidenced by the slew of new open infrastructure initiatives such as the O-RAN Alliance.

Incumbent vendors are susceptible to major disruption by relatively new companies that are not tied to legacy portfolios and are actively looking to align with the desires of CSPs. One example includes vRAN (virtual Radio Access Network) vendors, which were well represented at MWC2018. Incumbents will have to stay vigilant and keep a good pulse on the market to navigate appropriately.

…………………………………………………………………………………………………………………….

“The hyper-connected, ‘intelligent’ world will challenge long-held societal beliefs and will create significant moral and ethical debates, such as how hyperconnectivity will impact people’s privacy and who owns whose data. This world will also upend and challenge tried-and-true business models that have been around for decades.”

The mobile industry is increasingly pinning its hopes on 5G to address key challenges, generate new revenues, manage exploding mobile data traffic volumes and better compete against OTT providers.

“This hope appears to be premature, however, as the economics of 5G still do not make sense, evident in the lack of a viable business case for the technology. Said differently, it remains uncertain whether the revenue generation possibilities from 5G will be attractive enough to justify the infrastructure cost to deploy 5G.”

……………………………………………………………………………………………………………………….

Though use cases for 5G were myriad at MWC2018, particularly as they relate to Internet of Things (IoT), it remains to be seen how CSPs, and consequently vendors, will grow revenue from 5G. According to TBR’s 1Q18 5G Telecom Market Landscape, with the exception of fixed wireless broadband access, most of the operators that have made formal commitments to deploy 5G thus far have justified those investments by the efficiency gains that are realizable versus LTE. Said differently, the main driver of 5G investment will be the cost efficiencies the technology provides operators to remain competitive in their core business, which is offering traditional connectivity services.

In order for the business case to materialize for 5G use cases and drive revenue for CSPs, significant developmental progress must be made in deep fiber, wireless densification, edge computing and regulatory reform, among other areas. It will take years for these areas to be built up to the level required to begin delivering on the vision of some breakthrough use cases, such as hyper-connected cars and other mission-critical IoT use cases that would require 5G technology to become commercially viable.

Low latency is essential to enable commercialization of mission-critical use cases:

A significant reduction in latency is required to realize mission-critical uses for the network. Specifically, TBR notes that the mobile industry will have to make two variables align to see these use cases become commercialized.

First, latency must be lower than 5 milliseconds, and second, that latency must be sustained at 100% reliability. If either of these requirements is not fully met, mission-critical uses of the network will not be implemented due to a variety of considerations, including insurance and risk. For example, remote surgery will require sustained, ultralow latency for the duration of the procedure. Any lapse in connectivity, even for a millisecond, could prove disastrous from a patient outcome perspective. Insurance companies would not insure such a use case unless they are confident in the technology’s ability to perform.
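The two conditions TBR describes can be illustrated with a minimal sketch. It assumes latency is measured as a series of round-trip samples in milliseconds and that a lost sample is recorded as `None`; the function name and the sample format are hypothetical, not something TBR specifies.

```python
from typing import Optional, Sequence

# Latency ceiling cited in the article (milliseconds).
LATENCY_BUDGET_MS = 5.0

def meets_mission_critical_bar(samples_ms: Sequence[Optional[float]]) -> bool:
    """Return True only if every sample arrived and was under the budget.

    Assumption: a dropped measurement is recorded as None and counts
    as a failure, since the article requires 100% reliability.
    """
    if not samples_ms:
        return False  # no evidence at all is not a pass
    return all(s is not None and s < LATENCY_BUDGET_MS for s in samples_ms)
```

A single late or missing sample fails the whole series, which mirrors the article's point that a lapse of even a millisecond is unacceptable for a use case such as remote surgery.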

TBR notes that though mission-critical uses of the network are compelling, the infrastructure cost to achieve these two foundational parameters is nearly prohibitive. Due to the limitations of physics, spectrum and capital, many mission-critical 5G use cases will not be economically viable, at least through the next five years, according to TBR’s projections for infrastructure and ecosystem development. The most likely outcome is it will take until the mid- to late 2020s before networks are at the level of development needed to begin supporting the strict requirements of mission-critical use cases.

Where are the profit margins for vendors if everyone is open?

Vendors were nearly falling over themselves to proclaim how “open” they are and how much they are partnering with other companies. Though being open helps drive innovation, it could backfire from a business standpoint. With proprietary technology under attack from virtualization, white box, and other methods of disaggregating and open sourcing traditionally closed systems, vendors’ ability to differentiate, maintain top-line revenue and earn a profit will be significantly impacted.

TBR believes there is an existential threat to black box hardware pervading the ICT industry and that it will become more and more difficult to retain pricing power and earn a profit on proprietary hardware, which will push vendors increasingly into the software and services spheres to maintain their value-add in overall solutions. These market trends are reinforced by strong words spoken by chief technology officers (CTOs) from some of the largest operators in the world who believe the black box is dead and made that point very clear in their presentations at MWC2018.

In the digital era, value will reside in the software layer of the ICT stack, with hardware increasingly commoditized and services shifting to become more software-related in nature. Vendors, particularly incumbent vendors, that can pivot and align with these fundamental changes in the ICT market will be able to stay successful in the market.

Sophisticated telcos aim for the platform:

The world’s leading telecom operators see the value of the platform in the digital era and are steering their organizations to exploit the value of those platforms. Though connectivity will remain critical to realizing the digital era, the reality is that connectivity is increasingly viewed as a commodity, reflected in falling average revenue per user (ARPU). Conversely, the value and economic profit in the digital era will be obtainable by companies in the ecosystem that either own the platform or derive significant economic value and/or differentiation from being part of a platform.

TBR notes that more and more operators in the global ecosystem are actively looking for ways to reduce their exposure to the connectivity layer, either through business diversification or by engaging in network sharing or infrastructure spin-offs or divestitures, to redeploy capital toward other domains, such as content platforms, advertising platforms and virtual network services platforms. The topic of infrastructure handling in the digital era was discussed by several well-known presenters in various keynotes and panel discussions during MWC2018.

The car is the smartphone of the 5G era:

Many demos on the floor were related to connected transportation, particularly as it pertains to the car. Samsung put it best, stating that the car is the new smartphone. There is a lot riding on connected transportation to take off. Should there be a “killer app” for the car, much like the App Store was for iPhone and smartphones in general, it could become a key driver for 5G investment at scale because connected transportation would require significant investment in 5G infrastructure to support the latency and bandwidth requirements. Some vendors posited that the “killer app” for the car will be voice assistance. Samsung, via its Bixby platform, and other vendors aim to be the central platform residing in the brain of the car of the future. There are also industry organizations established to facilitate the development of the connected car, most notably the 5G Automotive Association (5GAA).

Regulators need to get progressive faster:

Regulatory reform remains slow, and this is stifling industry development. On one hand, regulators are trying to maintain the status quo and continue to deal with CSPs like they are utilities, while on the other hand, this stance is hindering CSPs’ ability to introduce innovation in the market and effectively compete against new competitors, such as OTT providers. Political debates aside, at the very least, regulators need to be progressive and create a more level playing field between CSPs and new entrants that compete against them, particularly OTT companies, which have come to dominate the digital economy.

Conclusion:

Business models for CSPs and other enterprises will fundamentally change during the digital era. Those companies that can navigate the landscape and align themselves with market shifts will be best able to remain profitable as their historical business models are upended by innovation. There is hope in the mobile industry that revenue growth will return, even though the actual use case(s) that will provide that growth remain elusive. In the meantime, while operators continue their search, they will remain in a sustained cost optimization phase to stay cost competitive in their traditional businesses. 5G holds some promise for a return to revenue growth, but the technology will require multiple “killer apps” to drive significant market development and deliver on the vision of a hyperconnected, intelligent world.

AT&T at DB Investor Conference: Strategic Services, Fiber & Wireless to Drive Revenue Growth

At the Deutsche Bank Media, Telecom and Business Services Conference, John Stephens, senior executive vice president and chief financial officer of AT&T, discussed the company’s plans for 2018 and beyond. Mr. Stephens said AT&T remains confident that it is on the right track to get its wireline business services back to positive growth as more customers transition to next-generation strategic services like SD-WAN and Carrier Ethernet. However, the drag from legacy services will continue to be an issue for the near term. He then outlined the company’s priorities for 2018, which include closing its pending acquisition of Time Warner and investing $23 billion in capital to build the best gigabit network in the United States.

On the entertainment side of the business, AT&T plans to launch the next generation of its DIRECTV Now video streaming service in the first half of 2018. The new platform will include features like cloud DVR and a third video stream. Additional features expected to launch later in 2018 include pay-per-view functionality and more video on demand. Note that DIRECTV Now can operate over a wireline or wireless network with sufficient bandwidth to support video streaming. Stephens said during the interview:

“……Giving us this opportunity to come up with a new platform later in this first half of this year, the second-generation plant for giving customers Cloud DVR, additional ability to pay per view and most sporting events and movies, and all kinds of other capabilities is what we’re seeing here, that’s what we want to do with regard to that entertain business and transitioning and we’re confident that we’re on the right track and it’s going quite well.”

The company’s 2018 plans also include improved profitability in its wireless operations in Mexico and, after the Time Warner acquisition closes, deployment of a new advertising and analytics platform that will use the company’s customer data to bring new, data-driven advertising capabilities within premium video. And, as always, AT&T remains laser-focused on maintaining an industry-leading cost structure.

AT&T’s investment plans include deployment of the FirstNet network, America’s first nationwide public safety broadband network specifically designed for our nation’s police, firefighters, EMS and other first responders.

“We were 56 out of 56, 50 states, 5 territories and the districts, probably all choose to put their public safety network, their FirstNet, their first responder network with AT&T, so that’s thrilling for us, that gives us the full funding of the program, it gives us the full authority to be the public service provider for the country, we’re really proud of that, and only because of the business aspects that’s serving our fellow citizens and being able to participate in the honorable job of saving lives and protecting people. So we’re really jazzed up about that.

Secondly, our plans were made last year for how to build out, and we’ve now been given the authority and the official build plans, approved build plans from the FirstNet authority. We spent last year investing in the core network, I think if people followed us in the fourth quarter; they said we actually got a $300 million reimbursement from the FirstNet authority for the expenditures we incurred last year. So the relentless preemption, the prioritized service refers to prices for police and fire and handling some emergency medical personnel; all of that’s been done and now we’re out deploying the network, not only the 700 but also our AWS and WCS, our inventoried network that we now get to put into service on a very economic basis because we can do one tower climb, we have the crane out there once, we have the people out there once and they’ve put all three pieces of spectrum at it once.”

The company will also enhance wireless network quality and capacity and plans to be the first to launch mobile “5G” service in 12 cities by the end of the year. AT&T announced in February that Atlanta, GA, and Dallas and Waco, TX, will be among its first “5G” markets.

“We think about 5G is 5G evolution and I say that because it’s really important to put it all in perspective. So we think FirstNet, put WCS, AWS with 700 band 14 [ph], and use carrier aggregation and you use forward [indiscernible]; we’ve done that kind of test without the 700, we did that in San Francisco, we got 750 meg speeds in the City of San Francisco on this new network, this new 5G evolution; it’s using the LTE technology, it’s using the existing network but all this new technology. So if you think about that evolution now, when you lower that network hub, those 750 theoretical speeds might go down to 150 or 100 or somewhere down but tremendous speed even on a loaded network; so that’s the first step, we’re doing that now extensively and we’re going to do more of that as we build the first step that work out and put the 700 band in. So that’s the first step for us in this evolution.

Second, people might not think about this way but for us absolutely critical is the fiber build. We’re taking a lot of fiber out to the Prime [ph], we’re taking a lot of fiber out to business locations, currently we have about 15 million locations with fiber between business and consumer, and by July next year, we’ll have about 22 million, about 8 million business, about 14 million to the Prime if you will, for consumers. So fiber is the key, and it’s a key not only delivering to the home or to the business but for the backhaul support. So if you’re an integrated carrier like we are and you’re building this fiber to go to the home, you’re going to pass the tower, you’re going to get fiber to that tower, you’re going to pass the business location, shopping mall, strip center, you’re going to build out to those.

So 5G is the second stage, we’ve got to think of AirGig, this is the ability to deliver broadband over electrical power lines, we’re testing that, we’ll see how that goes, that’s another step. If you think about using millimeter wave to do backhaul for small cells in really congested areas, we have high traffic volumes, you want to take a lot of traffic off, we have tested that, we have used millimeter wave to do that, we can do that. If you think about millimeter wave to do fixed wireless; so from the alley to my home, we have tested that we have the capability to do that, the challenge on that is where do you take it from the alley, where do you offload it, give it on to the network at what those costs are, but we can do that.

Lastly, you will see us put 5G into the core network. All of those things that were going to have to be measured by one of the chipsets ready for the handsets, we expect the chipsets might be next year, handset will come after that but we’re looking at the historically slow upgrade timeframe for phones. We had a couple of quarters last year that the upgrade rates were about 4%, that would equate to 25 quarters before your phone base turned over in an extreme example; so suggesting that things are going to be in the core network, it’s going to take a while, we’ll have pucks [ph] out by the end of the year, that will help but you have to have balance with regard to this.

When you think about those business cases, you think about those augmented reality and virtual reality and robotics and autonomous cars and things on the edge, those are going to be really important, that’s where the business cases will take us but we’ve got a long way to go before we get there. As we build FirstNet, we have been fortunate being able to so to speak build the network house and leave the room for our 5G capability so that when it’s ready, we can just plug it in to do it with software defined network design, we had a great advantage for that but we’re going to have to make sure we have all of the equipment, not only switching equipment, the radio, the antenna but also the handset equipment before we start, if you will, over-indexing on the revenue opportunities, they will be there, we will lead in the gigabit network.

We’ll have the best one because what FirstNet provides us and what the technology developments have allowed us and we will use 5G in that network but I want to be careful about how we think about when it’s going to be — you’re going to have a device in your hand and walking around on a normal kind of usage basis using 5G.”
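The handset-turnover arithmetic in Stephens’ remarks (a 4% quarterly upgrade rate equating to 25 quarters) can be reproduced with a short sketch. It assumes, as his “extreme example” implies, that the upgrade rate stays constant and that every upgrade replaces a different phone; the function name is illustrative only.

```python
def quarters_to_full_turnover(upgrade_rate_pct: float) -> float:
    """Quarters needed for the whole phone base to be replaced.

    Assumption: a constant share of the base (upgrade_rate_pct, in
    percent) upgrades each quarter and no phone is upgraded twice.
    """
    if upgrade_rate_pct <= 0:
        raise ValueError("upgrade rate must be positive")
    return 100.0 / upgrade_rate_pct

quarters = quarters_to_full_turnover(4)  # the rate quoted in the article
years = quarters / 4                     # four quarters per year
print(f"{quarters:.0f} quarters, about {years:.2f} years")
```

Under these simplifying assumptions the base takes a little over six years to turn over, which is why Stephens cautions against expecting 5G handsets in everyday use soon after launch.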

Stephens said that AT&T reaches about 15 million customer locations with fiber. This includes more than 7 million consumer customer locations and more than 8 million business customer locations within 1,000 feet of AT&T’s fiber footprint. He expects this to increase to about 22 million locations by mid-2019.

…………………………………………………………………………………………………………………………………….

For 2018, AT&T expects organic adjusted earnings per share growth in the low single digits, driven by improvements in wireless service revenue trends, improving profitability from its international operations, cost structure improvements from its software defined network/network function virtualization efforts and lower depreciation versus 2017.

Like earlier quarters, the challenges in the fourth quarter for AT&T came from declines in legacy services like Frame Relay and ATM. The company noted that fourth-quarter declines in legacy products were partially offset by continued growth in strategic business services. Total business wireline revenues were $7.4 billion, down 3.5% year-over-year but up sequentially.

Stephens said that more AT&T customers are adopting next-gen services, creating a new foundation for wireline business revenue growth.

“What’s happened is our customers have embraced the strategic services,” Stephens said. “Strategic services are over a $12 billion annual business and are over 42% or so of our revenue and are still growing quickly.”

Indeed, AT&T’s fourth-quarter strategic business services revenues grew by nearly 6%, or $176 million, versus the year-earlier quarter. These services represent 42% of total business wireline revenues and more than 70% of wireline data revenues and have an annualized revenue stream of more than $12 billion. This growth helped offset a decline of more than $400 million in legacy service revenues in the quarter.
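As a rough consistency check, the reported figures can be tied together in a few lines. This is only a sketch using the rounded numbers quoted in this article ($7.4 billion total business wireline revenue, a 42% strategic share and $176 million of year-over-year growth); the variable and function names are illustrative, and the results are approximate because the inputs are rounded.

```python
# Figures as reported in the article (USD, fourth quarter).
TOTAL_BUSINESS_WIRELINE_Q = 7.4e9  # total business wireline revenue
STRATEGIC_SHARE = 0.42             # strategic services' share of that total
STRATEGIC_GROWTH_YOY = 176e6       # year-over-year growth in strategic revenue

def strategic_summary(total_q: float, share: float, growth: float):
    """Derive quarterly and annualized strategic revenue and implied growth."""
    strategic_q = total_q * share        # quarterly strategic revenue
    annualized = strategic_q * 4         # simple four-quarter run rate
    prior_year_q = strategic_q - growth  # implied year-earlier quarter
    growth_pct = growth / prior_year_q * 100
    return strategic_q, annualized, growth_pct

strategic_q, annualized, growth_pct = strategic_summary(
    TOTAL_BUSINESS_WIRELINE_Q, STRATEGIC_SHARE, STRATEGIC_GROWTH_YOY
)
print(f"annualized: ${annualized / 1e9:.1f}B, growth: {growth_pct:.1f}%")
```

The run rate comes out above $12 billion and the implied growth is close to 6%, in line with the figures AT&T reported.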

Stopping short of forecasting overall wireline business service revenue growth, Stephens said that AT&T will eventually see a point where strategic services will surpass legacy declines.

“As we get past this inflection point where strategic services are growing at a faster rate than the degradation of legacy, we can get to a point where we are growing revenues,” Stephens said. “We’re not predicting that but we see the opportunity to do that.”

To achieve these business services revenue goals, AT&T’s business sales team is taking a two-pronged approach: retaining legacy services or converting them to strategic services.

While wireline business services continue to be a key focus for AT&T, the service provider is, not surprisingly, looking at ways to leverage its wireless network to help customers solve issues in their business. The wireless network can be used to support a business customer’s employee base while enabling IoT applications like monitoring of a manufacturing plant or a trucking fleet. Stephens expanded on the role of IoT to close out the interview:

“…you (‘ve) got to realize that if you build this FirstNet network out, things like IoT, things like coverage for business customers, things like the ability to connect factories that are automated, the robotics that have to have wireless connectivity to a controlled center for business customers, all improves dramatically and with that comes this opportunity to sell these wireless services. When you’re in — with the CIO and you can solve his security business, you can solve his big pipe of strategic services but you can also solve some wireless issues that his HR guy has for his connectivity for his employees, you can solve some issues that his engineering department has because they want to get real-time information about how their products are working out, whether it’s a car or a jet engine or a tractor, how it’s working in the field in real-time or you can give them new product and services demand for their internal sources like their pipelines or their shipping fleet.

This IoT capability can solve a lot of issues, you can make that CIO as the success factor for all his related peers, that’s a great thing to great solutions approach to business and that’s what we’re trying to do. Our team is trying to provide solutions for the business customers and we think having those two things together are really important.”

References:

https://www.businesswire.com/news/home/20180306006770/en/John-Stephens-ATT-CFO-Discusses-Plans-2018

https://techblog.comsoc.org/2018/01/14/att-mobile-5g-will-use-mm-wave-small-cells/