Cloud Data Centers

Gartner: AWS, Azure, and Google Cloud top rankings for Cloud Infrastructure and Platform Services

Gartner’s latest Magic Quadrant report for cloud infrastructure and platform services (CIPS) ranks Amazon Web Services (AWS), Microsoft Azure, and Google Cloud as the top cloud service providers.

Beyond the top three players, Gartner placed Alibaba Cloud in the “visionaries” box, and ranked Oracle, Tencent Cloud, and IBM as “niche players,” in that order.

The scope of Gartner’s Magic Quadrant for CIPS includes infrastructure as a service (IaaS) and integrated platform as a service (PaaS) offerings. These include application PaaS (aPaaS), functions as a service (FaaS), database PaaS (dbPaaS), application developer PaaS (adPaaS) and industrialized distributed cloud offerings that are often deployed in enterprise data centers (i.e. private clouds).

Figure 1: Magic Quadrant for Cloud Infrastructure and Platform Services

……………………………………………………………………………………………..

……………………………………………………………………………………………..

1. Gartner analysts praise AWS for its broad support of IT services, including cloud-native applications, edge computing, and mission-critical workloads. Also noteworthy is Amazon’s “engineering prowess” in designing its own CPUs and other silicon. According to the Gartner report, this focus on owning increasingly larger portions of the cloud infrastructure supply chain bolsters the No. 1 cloud provider’s long-term outlook and gives it advantages over competitors.

“AWS often sets the pace in the market for innovation, which guides the roadmaps of other CIPS providers. As the innovation leader, AWS has materially more mind share across a broad range of personas and customer types than all other providers,” the analysts wrote.

AWS, which recently reached an annualized revenue run rate of $59 billion, contributed 13% of Amazon’s total revenue and almost 54% of its operating profit in the second quarter of 2021.

Looking ahead, AWS is focused on owning increasingly larger portions of the supply chain used to deliver cloud services to customers. Its operations are geographically diversified, and its clients range from early-stage startups to large enterprises.

……………………………………………………………………………………

2. Microsoft Azure, which remains the No. 2 cloud service provider, posted a 51% annual growth rate. It earned praise from Gartner for its strength “in all use cases, which include the extended cloud and edge computing,” particularly among Microsoft-centric organizations.

The No. 2 public cloud provider also enjoys broad appeal. “Microsoft has the broadest set of capabilities, covering a full range of enterprise IT needs from SaaS to PaaS and IaaS, compared to any provider in this market,” the analysts wrote.

From the perspective of IaaS and PaaS, Microsoft has compelling capabilities ranging from developer tooling such as Visual Studio and GitHub to public cloud services.

Enterprises often choose Azure because of the trust in Microsoft built up over many years.

“Strategic alignment with Microsoft gives Azure advantages across nearly every vertical market,” Gartner said. However, Gartner criticized Microsoft for very complex licensing and contracting. It also noted that Microsoft’s sales pressure to grow overall account revenue prevents it from effectively using Azure to bring down a customer’s total Microsoft costs.

Microsoft Azure’s forays in operational databases and big data solutions have been markedly successful over the past year. Azure’s Cosmos DB and its joint offering with Databricks stand out in terms of customer adoption.

………………………………………………………………………………………

3. Google Cloud Platform (GCP) is strong in nearly all use cases and is slowly improving its edge computing capabilities. Google continues to invest in being a broad-based provider of IaaS and PaaS by expanding its capabilities as well as the size and reach of its go-to-market operations. Its operations are geographically diversified, and its clients range from startups to large enterprises.

The company is making gains in mindshare among enterprises and “lands at the top of survey results when infrastructure leaders are asked about strategic cloud provider selection in the next few years,” Gartner analysts wrote. Google is also closing “meaningful gaps with AWS and Microsoft Azure in CIPS capabilities,” and outpacing its larger competitors in some cases, according to the report.

The analysts also noted that Google Cloud “is the only CIPS provider with significant market share that currently operates at a financial loss.” The No. 3 public cloud provider reported a 54% year-over-year revenue increase and a 59% decrease in operating losses during Q2.

………………………………………………………………………………..

Separately, Dell’Oro Group Research Director Baron Fung recently said that hyperscalers make up a big portion of the overall IT market, with the 10 largest cloud-service providers, including AWS, Google, and Alibaba, accounting for up to 40% of global data center spending, and “some of these companies can have really tremendous weight on the ecosystem.”

The Dell’Oro report noted that some providers have deployed accelerated servers using internally developed artificial intelligence (AI) chips, while other cloud providers and enterprises have commonly deployed solutions based on graphics processing units (GPUs) and field-programmable gate arrays (FPGAs).

Fung explained that this model has also spilled over into cloud providers building their own servers and networking equipment to better fit their needs, “moving away from the traditional model in which users are buying equipment from companies like Dell and [Hewlett Packard Enterprise]. … It’s really disrupting the vendor landscape.”

Certain applications, such as cloud gaming, autonomous driving, and industrial automation, are latency-sensitive and require multi-access edge computing (MEC) nodes situated at the network edge, close to where sensors are located. Unlike cloud computing, which has been replacing enterprise data centers, edge computing creates new market opportunities for novel use cases.

…………………………………………………………………………………

References:

https://www.gartner.com/doc/reprints?id=1-26YXE86I&ct=210729&st=sb

AWS deployed in Digital Realty Data Centers at 100Gbps & for Bell Canada’s 5G Edge Computing

I. Digital Realty, the largest global provider of cloud- and carrier-neutral data center, colocation and interconnection solutions, announced today the deployment of Amazon Web Services (AWS) Direct Connect 100Gbps capability at the company’s Westin Building Exchange in Seattle, Washington, and on its Interxion Dublin Campus in Ireland, bringing one of the fastest AWS Direct Connect [1.] capabilities to PlatformDIGITAL®. Digital Realty’s platform connects 290 centers of data exchange with over 4,000 participants around the world, enabling enterprise customers to scale digital business and interconnect distributed workflows on a first-of-its-kind global data center platform.

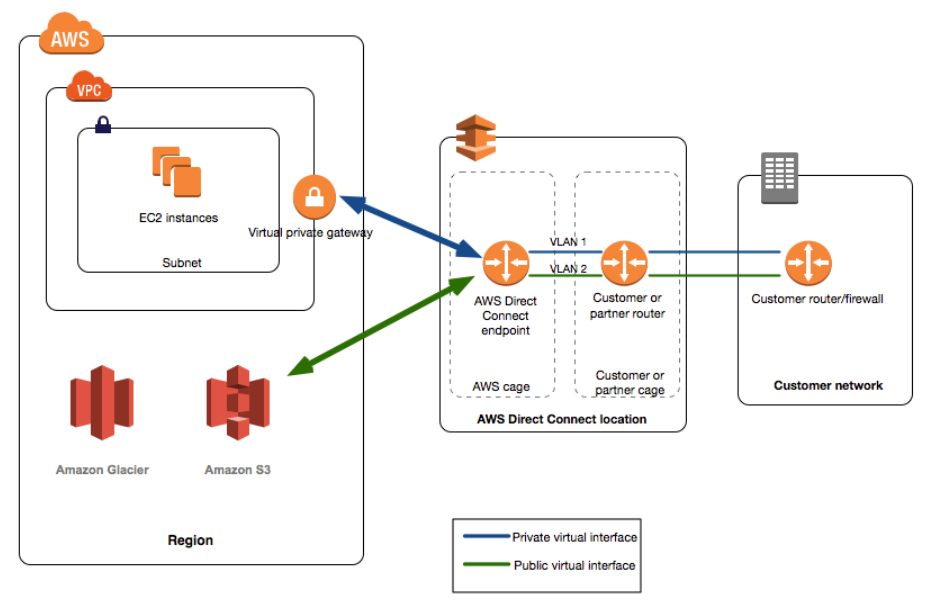

Note 1. AWS Direct Connect is a cloud service solution that makes it easy to establish a dedicated network connection from your premises to AWS. This can increase bandwidth throughput and provide a more consistent network experience than internet-based connections.

……………………………………………………………………………………………………………………………….

As organizations bring on new technologies and solutions such as artificial intelligence (AI) and IoT at scale, the explosive growth of digital business is posing new challenges, as data takes on its own gravity, becoming heavier, denser, and more expensive to move.

The new AWS Direct Connect 100Gbps is tailored to providing easy access to larger data sets, enabling high availability, reliability and lower latency. As a result, customers will be able to move bandwidth-heavy workloads seamlessly – and break through the barriers posed by data gravity. Customers gain access to strategic IT infrastructure that can aggregate and maintain data with less design time and spend, enabling access to AWS with one of the fastest and highest quality AWS network connections available.
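To put the jump to 100Gbps in perspective, the short Python sketch below estimates how long a large data set takes to cross a dedicated link. It assumes decimal units (1 TB = 10^12 bytes), a hypothetical 1 PB data set, a 10 Gbps link for comparison, and an optional efficiency factor standing in for protocol overhead; none of these figures come from Digital Realty or AWS.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Return the hours needed to move data_tb terabytes over a link_gbps link.

    Assumes decimal units; `efficiency` is a hypothetical derating factor in (0, 1].
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    bits = data_tb * 10**12 * 8                  # terabytes -> bits
    usable_bps = link_gbps * 10**9 * efficiency  # usable bits per second
    return bits / usable_bps / 3600              # seconds -> hours


if __name__ == "__main__":
    # Hypothetical 1 PB (1,000 TB) data set at 10 vs. 100 Gbps,
    # at full line rate and at an assumed 80% efficiency.
    for gbps in (10, 100):
        ideal = transfer_hours(1000, gbps)
        derated = transfer_hours(1000, gbps, efficiency=0.8)
        print(f"{gbps:>3} Gbps: {ideal:6.1f} h at line rate, {derated:6.1f} h at 80%")
```

At full line rate, 1 PB takes roughly 22 hours at 100 Gbps versus roughly 222 hours at 10 Gbps; real transfers will be slower once overhead, storage throughput, and contention are taken into account.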

As an AWS Outposts Ready Partner, Digital Realty’s global platform is optimized to support the needs of data-intensive, secure hybrid IT deployments. Digital Realty supports AWS Outposts deployments by enabling access to more than 40 AWS Direct Connect locations globally to address local processing, compliance, and storage requirements, while optimizing cost and performance. When coupled with the availability of AWS Direct Connect 100Gbps connections, the Westin Building Exchange and Interxion Dublin campuses become ideal meeting places for customers to tackle data gravity challenges and unlock new opportunities with their AWS Outposts deployments.

“As emerging technologies such as AI, VR and blockchain move from the margins to the mainstream, enterprises need new levels of performance from their hybrid solutions,” said Tom Sly, General Manager, AWS Direct Connect. “Deploying AWS Direct Connect at 100Gbps at Digital Realty facilities in Seattle and Dublin is critical to our strategy of helping customers build more sophisticated applications with increased flexibility, scalability and reliability. We’re excited to see the value Digital Realty’s PlatformDIGITAL® delivers for our mutual customers.”

The Westin Building Exchange serves as a primary interconnection hub for the Pacific Northwest, linking Canada, Alaska and Asia along the Pacific Rim. The building is one of the most densely interconnected facilities in North America, and is home to leading global cloud, content and interconnection providers, housing over 150 carriers and more than 10,000 cross-connects, giving Amazon customers low-latency access to the largest companies and services representing the digital economy. The 34-story tower is adjacent to Amazon’s existing 4.1 million square foot campus in Seattle.

Digital Realty offers six colocation data centers in the Irish capital, which forms a strategic bridge between Europe and the U.S. Ireland has particular significance as a global trading hub and provides the headquarters location for several global multinationals within the software, finance and life science industries. Multiple transatlantic cables also land in Ireland before continuing to the UK or continental Europe, making Interxion Dublin a prime location for the new AWS Direct Connect 100Gbps at the heart of a vibrant connected data community.

“Today’s announcement of the opening of AWS Direct Connect 100Gbps on-ramps significantly expands opportunities for customers to scale their digital transformation through our global PlatformDIGITAL®,” added Digital Realty Chief Technology Officer Chris Sharp. “AWS serves some of the world’s most innovative and demanding customers, from start-up to enterprise, that are looking to drive the digital economy forward. Our platform expands the coverage, capacity, and next-generation connectivity that AWS customers need to extend workloads to the cloud rapidly. We are honored to open up next-generation access in collaboration with AWS and specifically at the heart of the rich digital communities at the Westin Building Exchange and on our Interxion Dublin campus.”

The new deployments create centers of data exchange in Network Hubs deployed on PlatformDIGITAL®, enabling distributed workflows to be rapidly scaled and securely interconnected – reducing operating costs, enhancing visibility, saving time and improving compliance. The new capability also gives AWS customers instant access to a growing list of powerful AWS services such as Blockchain, Machine Learning, IoT and countless others – all over a direct, private connection optimized for high performance and security.

AIB, Inc., a leading data exchange and management firm with a software as a service platform deployed at over 1,600 automotive industry customers, recognized the value of deploying a physical Network Hub on PlatformDIGITAL® coupled with a virtual direct interconnection to AWS to enable flexibility in its hybrid IT environment.

“Our Texas-based operations required new cloud zone diversity solutions for our cloud native national vision. Digital Realty provided an innovative and comprehensive solution for AWS cloud access through PlatformDIGITAL®,” said Kellen Dunham, CTO, AIB, Inc.

Digital Realty’s global platform enables low-latency access to both the nearest AWS Region and a wide array of options to connect edge deployments or devices. Customers can securely connect to their desired AWS Region using both physical and virtual connectivity options. Globally, PlatformDIGITAL® offers access to more than 40 AWS Direct Connect locations, including 11 in EMEA, providing secure, high-performance access to numerous AWS Outposts-Ready data centers around the world. In addition, the Digital Realty Internet Exchange (DRIX) supports AWS Direct Peering capabilities and dedicated access to multiple third-party Internet Exchanges on PlatformDIGITAL®, providing a direct path from on-premises networks to AWS. The solution is part of PlatformDIGITAL®’s robust and expanding partner community that solves hybrid IT challenges for the enterprise.

About Digital Realty:

Digital Realty supports the world’s leading enterprises and service providers by delivering the full spectrum of data center, colocation and interconnection solutions. PlatformDIGITAL®, the company’s global data center platform, provides customers a trusted foundation and proven Pervasive Datacenter Architecture (PDx™) solution methodology for scaling digital business and efficiently managing data gravity challenges. Digital Realty’s global data center footprint gives customers access to the connected communities that matter to them with 290 facilities in 47 metros across 24 countries on six continents. To learn more about Digital Realty, please visit digitalrealty.com or follow us on LinkedIn and Twitter.

Additional Resources:

- For more information on locations and availability please visit www.digitalrealty.com/cloud/aws-direct-connect

- Learn about Digital Realty’s Data Hub featuring AWS Outposts solution for data localization and compliance on PlatformDIGITAL

- Explore global coverage options on PlatformDIGITAL®

- Read the AIB case study on deploying hybrid IT flexibly with Digital Realty and AWS

………………………………………………………………………………………………………………………………………

II. Bell Canada today announced it has entered into an agreement with Amazon Web Services, Inc. (AWS) to modernize the digital experience for Bell customers and support 5G innovation across Canada. Bell will use the breadth and depth of AWS technologies to create and scale new consumer and business applications faster, as well as enhance how its voice, wireless, television and internet subscribers engage with Bell services and content such as streaming video. In addition, AWS and Bell are teaming up to bring AWS Wavelength to Canada, deploying it at the edge of Bell’s 5G network to allow developers to build ultra-low-latency applications for mobile devices and users. With this rollout, Bell will become the first Canadian communications company to offer AWS-powered multi-access edge computing (MEC) to business and government users.

“Bell’s partnership with AWS further heightens both our 5G network leadership and the Bell customer experience with greater automation, enhanced agility and streamlined service options. Together, we’ll provide the next-generation service innovations for consumers and business customers that will support Canada’s growth and prosperity in the years ahead,” said Mirko Bibic, President and CEO of BCE and Bell Canada. “With this first in Canada partnership to deploy AWS Wavelength at the network edge, where 5G’s high capacity, unprecedented speed and ultra low latency are crucial for next-generation applications, Bell and AWS are opening up all-new opportunities for developers to enhance our customers’ digital experiences. As Canada recovers from COVID-19 and looks forward to the economic, social and sustainability advantages of 5G, Bell is moving rapidly to expand the country’s next-generation network infrastructure capabilities. Bell’s accelerated capital investment plan, supported by government and regulatory policies that encourage significant investment and innovation in network facilities, will double our 5G coverage this year while growing the high-capacity fibre connections linking our national network footprint.”

The speed and increased bandwidth capacity of the Bell 5G network support applications that can respond much more quickly and handle greater volumes of data than previous generations of wireless technology. Through its relationship with AWS, Bell will leverage AWS Wavelength to embed AWS compute and storage services at the edge of its 5G telco networks so that applications developers can serve edge computing workloads like machine learning, IoT, and content streaming. Bell and AWS will move 5G data processing to the network edge to minimize latency and power customer-led 5G use cases such as immersive gaming, ultra-high-definition video streaming, self-driving vehicles, smart manufacturing, augmented reality, machine learning inference and distance learning throughout Canada. Developers will also have direct access to AWS’s full portfolio of cloud services to enhance and scale their 5G applications.

Optimized for MEC applications, AWS Wavelength minimizes the latency involved in sending data to and from a mobile device. AWS delivers the service through Wavelength Zones, which are AWS infrastructure deployments that embed AWS compute and storage services within a telecommunications provider’s datacenters at the edge of the 5G network so that data traffic can reach application servers within the zones without leaving the mobile provider’s network. Application data need only travel from the device to a cell tower to an AWS Wavelength Zone running in a metro aggregation site. This results in increased performance by avoiding the multiple hops between regional aggregation sites and across the internet that traditional mobile architectures require.
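One reason keeping traffic inside the metro helps is simple physics: light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its speed in a vacuum), so every kilometer of fiber adds about 10 microseconds of round-trip delay. The sketch below compares two hypothetical fiber path lengths; the 50 km and 1,500 km distances are illustrative assumptions, not Bell or AWS network data, and the calculation ignores radio, queuing, and routing delays.

```python
# Minimum round-trip propagation delay over optical fiber.
# Light in fiber travels at roughly 200,000 km/s (about two-thirds of c),
# i.e. about 200 km per millisecond. Distances below are hypothetical.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(fiber_km: float) -> float:
    """Round-trip propagation delay in milliseconds for a one-way fiber length."""
    return 2 * fiber_km / FIBER_KM_PER_MS


# Hypothetical one-way fiber distances (not Bell or AWS data).
paths_km = {
    "metro Wavelength Zone": 50,
    "distant cloud region": 1500,
}

for name, km in paths_km.items():
    print(f"{name:>22}: {km:>5} km -> at least {round_trip_ms(km):.1f} ms round trip")
```

With these assumed distances, propagation alone costs about 0.5 ms round trip to the metro site versus about 15 ms to the distant region, before counting the extra routing hops that a Wavelength Zone avoids.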

Outside of the AWS Wavelength deployment, Bell is also continuing to evolve its offerings to enhance its customers’ digital experiences. From streaming media to network performance to customer service, Bell will leverage AWS’s extensive portfolio of cloud capabilities to better serve its tens of millions of customers coast to coast. This work will allow Bell’s product innovation teams to streamline and automate processes as well as adapt more quickly to changing market conditions and customer preferences.

“As the first telecommunications company in Canada to provide access to AWS Wavelength, Bell is opening the door for businesses and organizations throughout the country to combine the speed of its 5G network with the power and versatility of the world’s leading cloud. Together, Bell and AWS are bringing the transformative power of cloud and 5G to users all across Canada,” said Andy Jassy, CEO of Amazon Web Services, Inc. “Cloud and 5G are changing the business models for telecommunications companies worldwide, and AWS’s unmatched infrastructure capabilities in areas like machine learning and IoT will enable leaders like Bell to deliver new digital experiences that will enhance their customers’ lives.”

Launched in June 2020, Bell’s 5G network is now available to approximately 35% of the Canadian population. On February 4, Bell announced it was accelerating its typical annual capital investment of $4 billion by an additional $1 billion to $1.2 billion over the next 2 years to rapidly expand its fibre, rural Wireless Home Internet and 5G networks, followed May 31 by the announcement of a further up to $500 million increase in capital spending. With this accelerated capital investment plan, Bell’s 5G network is on track to reach approximately 70% of the Canadian population by year end.

5G will support a wide range of new consumer and business applications in coming years, including virtual and augmented reality, artificial intelligence and machine learning, connected vehicles, remote workforces, telehealth and Smart Cities, with unprecedented IoT opportunities for business and government. 5G is also accelerating the positive environmental impact of Bell’s networks. The Canadian Wireless Telecommunications Association estimates 5G technology can support 1000x the traffic at half of current energy consumption over the next decade, enhancing the potential of IoT and other next-generation technologies to support sustainable economic growth, and supporting Bell’s own objective to be carbon neutral across its operations in 2025.
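Taken at face value, the CWTA estimate implies a much larger gain in energy per unit of traffic than either number suggests on its own. Writing $E$ for energy consumption and $T$ for traffic, and assuming both figures refer to the same network scope and time frame:

```latex
\frac{E_{\text{new}}/T_{\text{new}}}{E_{\text{now}}/T_{\text{now}}}
  = \frac{0.5\,E_{\text{now}}}{1000\,T_{\text{now}}}\cdot\frac{T_{\text{now}}}{E_{\text{now}}}
  = \frac{0.5}{1000}
  = \frac{1}{2000}
```

That is, roughly a 2,000-fold reduction in energy per unit of traffic carried.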

About Bell Canada:

The Bell team builds world-leading broadband wireless and fiber networks, provides innovative mobile, TV, Internet and business communications services and delivers the most compelling content with premier television, radio, out of home and digital media brands. With a goal to advance how Canadians connect with each other and the world, Bell serves more than 22 million consumer and business customer connections across every province and territory. Founded in Montréal in 1880, Bell is wholly owned by BCE Inc. (TSX, NYSE: BCE). To learn more, please visit Bell.ca or BCE.ca.

Bell supports the social and economic prosperity of our communities with a commitment to the highest environmental, social and governance (ESG) standards. We measure our progress in increasing environmental sustainability, achieving a diverse and inclusive workplace, leading data governance and protection, and building stronger and healthier communities. This includes confronting the challenge of mental illness with the Bell Let’s Talk initiative, which drives mental health awareness and action with programs like the annual Bell Let’s Talk Day and Bell funding for community care, research and workplace programs nationwide all year round.

……………………………………………………………………………………………………………………………………..

Comment and Analysis:

AWS already has an edge compute footprint that covers parts of Asia, Europe and North America. Unsurprisingly, AWS, Google Cloud and Microsoft Azure increasingly look like the real power brokers and empire builders in multi-access/mobile edge computing. Rogers and Telus, Bell’s two main rivals, will likely contract with one of the three big cloud service providers for their 5G edge computing needs.


Vodafone and Google Cloud to Develop Integrated Data Platform

Vodafone and Google Cloud today announced a new, six-year strategic partnership to drive the use of reliable and secure data analytics, insights, and learnings to support the introduction of new digital products and services for Vodafone customers simultaneously worldwide.

In a significant expansion of their existing agreement, Vodafone and Google Cloud will jointly build a powerful new integrated data platform with the added capability of processing and moving huge volumes of data globally from multiple systems into the cloud.

The platform, called ‘Nucleus’, will house a new system – ‘Dynamo’ – which will drive data throughout Vodafone to enable it to more quickly offer its customers new, personalized products and services across multiple markets. Dynamo will allow Vodafone to tailor new connectivity services for homes and businesses through the release of smart network features, such as providing a sudden broadband speed boost.

Nucleus and Dynamo, which are industry firsts, are being built in-house by Vodafone and Google Cloud specialist teams and are capable of processing around 50 terabytes of data per day (and growing), equivalent to 25,000 hours of HD film. Up to 1,000 employees of both companies located in Spain, the UK, and the United States are collaborating on the project.
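The 50 TB-per-day figure can be sanity-checked with a few lines of Python. Assuming decimal units, the comparison to 25,000 hours of HD film implies about 2 GB per hour of video, and spreading 50 TB evenly over 24 hours implies an average rate of roughly 4.6 Gbit/s. Actual peaks would be higher, since the release does not say how the load is distributed over the day.

```python
# Back-of-envelope check of the Nucleus/Dynamo throughput figure.
# Assumes decimal units: 1 TB = 10**12 bytes, 1 GB = 10**9 bytes.
TB = 10**12
GB = 10**9
SECONDS_PER_DAY = 86_400

daily_bytes = 50 * TB   # "around 50 terabytes of data per day"
hd_film_hours = 25_000  # "equivalent to 25,000 hours of HD film"

# Implied size of one hour of HD video, in GB.
gb_per_film_hour = daily_bytes / hd_film_hours / GB

# Average bit rate if the daily volume were spread evenly over 24 hours.
avg_gbit_per_s = daily_bytes * 8 / SECONDS_PER_DAY / 10**9

print(f"Implied HD video size: {gb_per_film_hour:.1f} GB per hour")
print(f"Average sustained rate: {avg_gbit_per_s:.2f} Gbit/s")
```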

Vodafone has already identified more than 700 use-cases to deliver new products and services quickly across Vodafone’s markets, support fact-based decision-making, reduce costs, remove duplication of data sources, and simplify and centralize operations. The speed and ease with which Vodafone’s operating companies in multiple countries can access its data analytics, intelligence, and machine-learning capabilities will also be vastly improved.

By generating more detailed insight and data-driven analysis across the organization and with its partners, Vodafone can give customers around the world a better and richer experience. Some of the key benefits include:

- Enhancing Vodafone’s mobile, fixed, and TV content and connectivity services through the instantaneous availability of highly personalized rewards, content, and applications. For example, a consumer might receive a sudden broadband speed boost based on personalized individual needs.

- Expanding smart network services on Google Cloud from eight markets to Vodafone’s entire footprint. This allows Vodafone to precisely match network roll-out to consumer demand, increase capacity at critical times, and use machine learning to predict, detect, and fix issues before customers are aware of them.

- Empowering data scientists to collaborate on key environmental and health issues in 11 countries using automated machine learning tools. Vodafone is already assisting governments and aid organisations, upon their request, with secure, anonymised, and aggregated movement data to tackle COVID-19. This partnership will further improve Vodafone’s ability to provide deeper insights, in accordance with local laws and regulations, into the spread of disease through intelligent analytics across a wider geographical area.

- Providing a complete digital replica of many of Vodafone’s internal support functions using artificial intelligence and advanced analytics. Called a digital twin, it enables analytic models on Google Cloud to improve response times to enquiries and predict future demand. The system will also support a digital twin of Vodafone’s vast digital infrastructure worldwide.

- In addition, Vodafone will re-platform its entire SAP environment to Google Cloud, including the migration of its core SAP workloads and key corporate SAP modules such as SAP Central Finance.

Johan Wibergh, Chief Technology Officer for Vodafone, said: “Vodafone is building a powerful foundation for a digital future. We have vast amounts of data which, when securely processed and made available across our footprint using the collective power of Vodafone and Google Cloud’s engineering expertise, will transform our services, to our customers and governments, and the societies where they live and serve.”

Thomas Kurian, CEO at Google Cloud, commented: “Telecommunications firms are increasingly differentiating their customer experiences through the use of data and analytics, and this has never been more important than during the current pandemic. We are thrilled to be selected as Vodafone’s global strategic cloud partner for analytics and SAP, and to co-innovate on new products that will accelerate the industry’s digital transformation.”

Revenues at Google’s cloud business grew 46% in the first quarter of 2021. However, Google continues to be a distant third to Amazon and Microsoft in the cloud business.

Technical Notes:

All data generated by Vodafone in the markets in which it operates is stored and processed in the required Google Cloud facilities as per local jurisdiction requirements and in accordance with local laws and regulations. Customer permissions and Vodafone’s own rigorous security and privacy by design processes also apply.

On the back of their collaborative work, Vodafone and Google Cloud will also explore opportunities to provide consultancy services, offered either jointly or independently, to other multi-national organizations and businesses.

The platform is being built using the latest hybrid cloud technologies from Google Cloud to facilitate the rapid standardization and movement of data in both Vodafone’s physical data centers and onto Google Cloud. Dynamo will direct all of Vodafone’s worldwide data, extracting, encrypting, and anonymizing the data from source to cloud and back again, enabling intelligent data analysis and generating efficiencies and insight.
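The release does not describe how Dynamo anonymizes data. As a generic illustration of the pattern it refers to, and of the “anonymised and aggregated” movement data mentioned earlier, the Python sketch below replaces subscriber identifiers with a keyed hash (HMAC-SHA256), counts distinct subscribers per region, and suppresses groups below a minimum size. The key, field names, and threshold are hypothetical. Keyed hashing on its own is pseudonymization rather than anonymization; it is the aggregation and small-group suppression that reduce re-identification risk.

```python
# Generic pseudonymize-then-aggregate sketch. NOT Vodafone's or Google Cloud's
# implementation: the key, field names, and threshold are hypothetical, and
# encryption in transit/at rest is out of scope.
import hashlib
import hmac
from collections import Counter

SECRET_KEY = b"hypothetical-key"  # in practice, held and rotated in a key manager
MIN_GROUP_SIZE = 3                # suppress regions with fewer distinct subscribers


def pseudonymize(subscriber_id: str) -> str:
    """Replace a subscriber ID with a keyed HMAC-SHA256 digest (hex)."""
    return hmac.new(SECRET_KEY, subscriber_id.encode(), hashlib.sha256).hexdigest()


def aggregate_by_region(events: list[dict]) -> dict[str, int]:
    """Count distinct pseudonymized subscribers per region, dropping small groups."""
    pairs = {(pseudonymize(e["subscriber_id"]), e["region"]) for e in events}
    counts = Counter(region for _, region in pairs)
    return {region: n for region, n in counts.items() if n >= MIN_GROUP_SIZE}


events = [
    {"subscriber_id": "A1", "region": "north"},
    {"subscriber_id": "B2", "region": "north"},
    {"subscriber_id": "C3", "region": "north"},
    {"subscriber_id": "A1", "region": "north"},  # repeat sighting, counted once
    {"subscriber_id": "D4", "region": "south"},  # group too small, suppressed
]
print(aggregate_by_region(events))
```

Running it prints `{'north': 3}`: the repeat sighting of one subscriber is counted once, and the single subscriber in the “south” region is suppressed.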

References:

https://cloud.google.com/press-releases/2021/0503/vodafone-google-cloud (video)

MTN Consulting: Network operator capex forecast at $520B in 2025

Executive Summary:

Telco, webscale, and carrier-neutral capex will total $520 billion by 2025, according to a report from MTN Consulting, up from $420 billion in 2019.

- Telecom operators (telcos) will account for 53% of industry capex by 2025, down from 69% in 2019;

- Webscale operators will grow from 25% to 39%;

- Carrier-neutral [1.] providers will account for 8% of total capex in 2025, up from 6% in 2019.

Note 1. A carrier-neutral data center is a data center (or carrier hotel) that allows interconnection between multiple telecommunication carriers and/or colocation providers. It is not owned and operated by a single ISP, but instead offers a wide variety of connection options to its colocation customers.

Adequate power density, efficient use of server space, physical and digital security, and cooling systems are some of the key attributes organizations look for in a colocation center. Some facilities distinguish themselves by offering additional benefits like smart monitoring, scalability, and extra on-site security.

……………………………………………………………………………………………………………………………………………………….

The number of telco employees will decrease from 5.1 million in 2019 to 4.5 million in 2025 as telcos deploy automation more widely and spin off parts of their network to the carrier-neutral sector.

KEY FINDINGS from the report:

Revenue growth for the telco, webscale, and carrier-neutral sectors will average 1%, 10%, and 7%, respectively, through 2025

Telecom network operator (TNO, or telco) revenues are on track for a significant decline in 2020, with the industry hit by COVID-19 even as webscale operators (WNOs) experienced yet another growth surge as much of the world was forced to work and study from home. For 2020, telco, webscale, and carrier-neutral revenues are likely to reach $1.75 trillion (T), $1.63T, and $71 billion (B), amounting to YoY growth of -3.7%, +12.2%, and +5.0%, respectively. Telcos will recover and webscale will slow down, but this range of growth rates will persist for several years. By 2025, the webscale sector will dominate with revenues of approximately $2.51 trillion, followed by $1.88 trillion for the telco sector and $108 billion for carrier-neutral operators (CNNOs).
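The stated averages can be cross-checked against the 2020 and 2025 revenue figures in the paragraph above using the compound annual growth rate, (end / start)^(1/n) - 1. This is only a consistency check; the excerpt does not say which base year or averaging method MTN Consulting used.

```python
# Implied compound annual growth rate (CAGR) from the 2020 and 2025 revenue
# figures quoted in the report excerpt, in billions of US dollars.


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


revenue_b = {  # sector: (2020 revenue, 2025 revenue)
    "telco": (1750, 1880),
    "webscale": (1630, 2510),
    "carrier-neutral": (71, 108),
}

for sector, (rev_2020, rev_2025) in revenue_b.items():
    print(f"{sector:>15}: {cagr(rev_2020, rev_2025, 5):.1%} per year, 2020-2025")
```

From 2020 to 2025 this gives about 1.4% for telco, 9.0% for webscale, and 8.8% for carrier-neutral revenue. The first two sit close to the headline 1% and 10%; the carrier-neutral result lands above the stated 7%, a gap that may reflect a base year or method this excerpt does not show.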

Network operator capex will grow to $520B by 2025

In 2019, telco, webscale, and carrier-neutral capex totaled $420 billion, a total which is set to grow to $520 billion by 2025. The composition will change starkly, though: telcos will account for 53% of industry capex by 2025, down from 69% in 2019; webscale operators will grow from 25% to 39% in the same timeframe; and carrier-neutral providers will account for 8% of total capex in 2025, up from their 2019 level of 6%.
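Because the telco, webscale, and carrier-neutral segments together make up total capex, each year’s shares must sum to 100%. With 2019 shares of 25% (webscale) and 6% (carrier-neutral), the 2019 telco share works out to 69%. The Python sketch below derives that remainder and converts each share into dollars using the $420 billion and $520 billion totals.

```python
# Derive the 2019 telco capex share as the remainder and convert shares to dollars.
# Totals and shares are the MTN Consulting figures quoted in the text.
TOTAL_CAPEX_B = {2019: 420, 2025: 520}  # billions of US dollars

shares = {
    2019: {"webscale": 0.25, "carrier-neutral": 0.06},
    2025: {"telco": 0.53, "webscale": 0.39, "carrier-neutral": 0.08},
}
# The three segments make up the whole, so telco is what is left over in 2019.
shares[2019]["telco"] = 1 - sum(shares[2019].values())

capex_b = {
    year: {seg: frac * TOTAL_CAPEX_B[year] for seg, frac in segs.items()}
    for year, segs in shares.items()
}

for year, segs in capex_b.items():
    # Sanity check: segment dollars must add back up to the stated total.
    assert abs(sum(segs.values()) - TOTAL_CAPEX_B[year]) < 1e-6
    for seg in ("telco", "webscale", "carrier-neutral"):
        print(f"{year} {seg:>15}: {shares[year][seg]:4.0%} = ${segs[seg]:6.1f}B")
```

In absolute terms, telco capex edges down from about $290 billion to about $276 billion, while webscale capex nearly doubles from $105 billion to about $203 billion.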

By 2025, the webscale sector will employ more than the telecom industry

As telcos deploy automation more widely and cast off parts of their network to the carrier-neutral sector, their employee base should decline from 5.1 million in 2019 to 4.5 million in 2025. The cost of the average telco employee will rise significantly in the same timeframe, as they will require many of the same software and IT skills currently prevalent in the webscale workforce. For their part, webscale operators have already grown from 1.3 million staff in 2011 to 2.8 million in 2019, but continued rapid growth in the sector (especially its ecommerce arms) will spur further growth in employment to reach roughly 4.8 million by 2025. The carrier-neutral sector’s headcount will grow far more modestly, rising from about 90,000 in 2019 to about 119,000 in 2025, because managing physical assets like towers tends to involve a far lighter human touch than managing network equipment and software.

Example of a Carrier Neutral Colo Data Center

RECOMMENDATIONS:

Telcos: embrace collaboration with the webscale sector

Telcos remain constrained at the top line and will remain in the “running to stand still” mode that has characterized their last decade. They will continue to shift towards more software-centric operations and automation of networks and customer touch points. What will become far more important is for telcos to actively collaborate with webscale operators and the carrier-neutral sector in order to operate profitable businesses. The webscale sector is now targeting the telecom sector actively as a vertical market. Successful telcos will embrace the new webscale offerings to lower their network costs, digitally transform their internal operations, and develop new services more rapidly. Using the carrier-neutral sector to minimize the money and time spent on building and operating physical assets not viewed as strategic will be another key to success through 2025.

Vendors: to survive you must improve your partnership and integration capabilities

Collaboration across the telco/webscale/carrier-neutral segments has implications for how vendors serve their customers. Some of the biggest telcos will source much of their physical infrastructure from carrier-neutral providers and lean heavily on webscale partners to manage their clouds and support new enterprise and 5G services. Yet telcos spend next to nothing on R&D, especially when compared to the 10% or more of revenues spent on R&D by their vendors and the webscale sector. Vendors who develop customized offerings for telcos in partnership with either their internal cloud divisions (e.g. Oracle, HPE, IBM) or AWS/GCP/Azure/Alibaba will have a leg up. This is not just good for growing telco business, but also for helping webscale operators pursue 5G-based opportunities. One of the earliest examples of a traditional telco vendor aligning with a cloud player for the telco market is NEC’s 2019 development of a mobile core solution for the cloud that can be operated on the AWS network; there will be many more such partnerships going forward.

All sectors: M&A is often not the answer, despite what the bankers urge

M&A will be an important part of the network infrastructure sector’s evolution over the next 5 years. However, the difficulty of successfully executing and integrating a large transaction is almost always underappreciated. There is incredible pressure from bankers to choose M&A, and the best ones are persuasive in arguing that M&A is the best way to improve your competitiveness, enter a new market, or lower your cost base. Many chief executives love to make the big announcements and take credit for bringing the parties together. But making the deal actually work in practice falls to staff way down the chain of command, and to customers’ willingness to cope with the inevitable hiccups and delays brought about by the transaction. And the bankers are long gone by then, busy spending their bonuses and working on their next deal pitch. Be extremely skeptical about M&A. Few big tech companies have a history of doing it well.

Webscale: stop abusing privacy rights and trampling on rules and norms of fair competition

The big tech companies that make up the webscale sector tracked by MTN Consulting have been rightly abused in the press recently for their disregard for consumer privacy rights, and overly aggressive, anti-competitive practices. After years of avoiding increased regulatory oversight through aggressive lobbying and careful brand management, the chickens are coming home to roost in 2021. Public concerns about abuses of privacy, facilitation of fake news, and monopolistic or (at the least) oligopolistic behavior will make it nearly impossible for these companies to stem the increased oversight likely to come soon from policymakers.

Australia’s pending law, the “News Media and Digital Platforms Bargaining Code,” could foreshadow things to come for the webscale sector, as do recent antitrust lawsuits against Facebook and Alphabet. Given that webscale companies are supposed to be fast moving and innovative, they should get out ahead of these problems. They need to implement wholesale, transparent changes to how they treat consumer privacy and commit to (and actually follow) a code of conduct that is conducive to innovation and competition. The billionaires leading the companies may even consider encouraging fairer tax codes so that some of their excessive wealth can be spread across the countries that actually fostered their growth.

ABOUT THIS REPORT:

This report presents MTN Consulting's first annual forecast of network operator capex. The scope includes telecommunications, webscale and carrier-neutral network operators. The forecast presents revenue, capex and employee figures for each market, both historical and projected, and discusses the likely evolution of the three sectors through 2025. In the discussion of the individual sectors, some additional data series are projected and analyzed; for example, network operations opex in the telco sector. The forecast report presents a baseline, most likely case of industry growth, taking into account the significant upheaval in communication markets experienced during 2020. Based on our analysis, we project that total network operator capex will grow from $420 billion in 2019 to $520 billion in 2025, driven by substantial gains in the webscale and (much less so) carrier-neutral segments. The primary audience for the report is technology vendors, with telcos and webscale/cloud operators a secondary audience.

………………………………………………………………………………………………………………………………………………….

January 8, 2021 Update:

Analysys Mason: Cloud technology will pervade the 5G mobile network, from the core to the RAN and edge

“Communications Service Providers (CSPs) spending on multi-cloud network infrastructure software, hardware and professional services will grow from USD4.3 billion in 2019 to USD32 billion by 2025, at a CAGR of 40%.”

5G and edge computing are spurring CSPs to build multi-cloud, cloud-native mobile network infrastructure

Many CSPs acknowledge the need to use cloud-native technology to transform their networks into multi-cloud platforms in order to maximise the benefits of rolling out 5G. Traditional network function virtualisation (NFV) has only partly enabled the software-isation and disaggregation of the network, and as such, limited progress has been made on cloudifying the network to date. Indeed, Analysys Mason estimates that network virtualisation reached only 6% of its total addressable market for mobile networks in 2019.

The telecoms industry is now entering a new phase of network cloudification because 5G calls for ‘true’ clouds that are defined by cloud-native technologies. This will require radical changes to the way in which networks are designed, deployed and operated, and we expect that investments will shift to support this new paradigm. The digital infrastructure used for 5G will be increasingly built as horizontal, open network platforms comprising multiple cloud domains such as mobile core cloud, vRAN cloud and network and enterprise edge clouds. As a result, we have split the spending on network cloud into spending on multiple cloud domains (Figure 1) for the first time in our new network cloud infrastructure report. We forecast that CSP spending on multi-cloud network infrastructure software, hardware and professional services will grow from USD4.3 billion in 2019 to USD32 billion by 2025, at a CAGR of 40%.
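The quoted growth rate can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes six compounding years between 2019 and 2025 and uses Analysys Mason's rounded figures as inputs.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# Analysys Mason figures, USD billions: 4.3 in 2019 and 32 in 2025 (six years).
rate = cagr(4.3, 32.0, 2025 - 2019)
print(f"Implied CAGR: {rate:.1%}")
```

The result comes out at roughly 39.7% per year, consistent with the rounded 40% in the forecast.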

https://www.analysysmason.com/research/content/comments/network-cloud-forecast-comment-rma16/

Synergy Research: Hyperscale Operator Capex at New Record in Q3-2020

Hyperscale cloud operator capex topped $37 billion in Q3-2020, which easily set a new quarterly record for spending, according to Synergy Research Group (SRG). Total spending for the first three quarters of 2020 reached $99 billion, which was a 16% increase over the same period last year.

The top-four hyperscale spenders in the first three quarters of this year were Amazon, Google, Microsoft and Facebook. Those four easily exceeded the spending by the rest of the hyperscale operators. The next biggest cloud spenders were Apple, Alibaba, Tencent, IBM, JD.com, Baidu, Oracle, and NTT.

SRG’s data found that capex growth was particularly strong across Amazon, Microsoft, Tencent and Alibaba while Apple’s spend dropped off sharply and Google’s also declined.

Much of the hyperscale capex goes towards building, expanding and equipping huge data centers, which grew in number to 573 at the end of Q3. The hyperscale data is based on analysis of the capex and data center footprint of 20 of the world’s major cloud and internet service firms, including the largest operators in IaaS, PaaS, SaaS, search, social networking and e-commerce. In aggregate these twenty companies generated revenues of over $1.1 trillion in the first three quarters of the year, up 15% from 2019.

“As expected the hyperscale operators are having little difficulty weathering the pandemic storm. Their revenues and capex have both grown by strong double-digit amounts this year and this has flowed down to strong growth in spending on data centers, up 18% from 2019,” said John Dinsdale, a Chief Analyst at Synergy Research Group. “They generate well over 80% of their revenues from cloud, digital services and online activities, all of which have seen COVID-19 related boosts. As these companies go from strength to strength they need an ever-larger footprint of data centers to support their rapidly expanding digital activities. This is good news for companies in the data center ecosystem who can ride along in the slipstream of the hyperscale operators.”

Separately, Google Cloud announced it is set to add three new ‘regions,’ which provide faster and more reliable services in targeted locations, to its global footprint. The new regions in Chile, Germany and Saudi Arabia will take the total to 27 for Google Cloud.

About Synergy Research Group:

Synergy provides quarterly market tracking and segmentation data on IT and Cloud related markets, including vendor revenues by segment and by region. Market shares and forecasts are provided via Synergy’s uniquely designed online database tool, which enables easy access to complex data sets. Synergy’s CustomView ™ takes this research capability one step further, enabling our clients to receive on-going quantitative market research that matches their internal, executive view of the market segments they compete in.

Synergy Research Group helps marketing and strategic decision makers around the world via its syndicated market research programs and custom consulting projects. For nearly two decades, Synergy has been a trusted source for quantitative research and market intelligence. Synergy is a strategic partner of TeleGeography.

To speak to an analyst or to find out how to receive a copy of a Synergy report, please contact [email protected] or 775-852-3330 extension 101.

Internet traffic spikes under “stay at home”; Move to cloud accelerates

With worldwide coronavirus-induced "stay at home/shelter in place" orders, almost everyone who has high-speed internet at home is using a lot more bandwidth for video conferences and streaming. How is the Internet holding up against the huge increase in data/video traffic? We focus this article on U.S. Internet traffic since the stay at home orders went into effect in late March.

………………………………………………………………………………………..

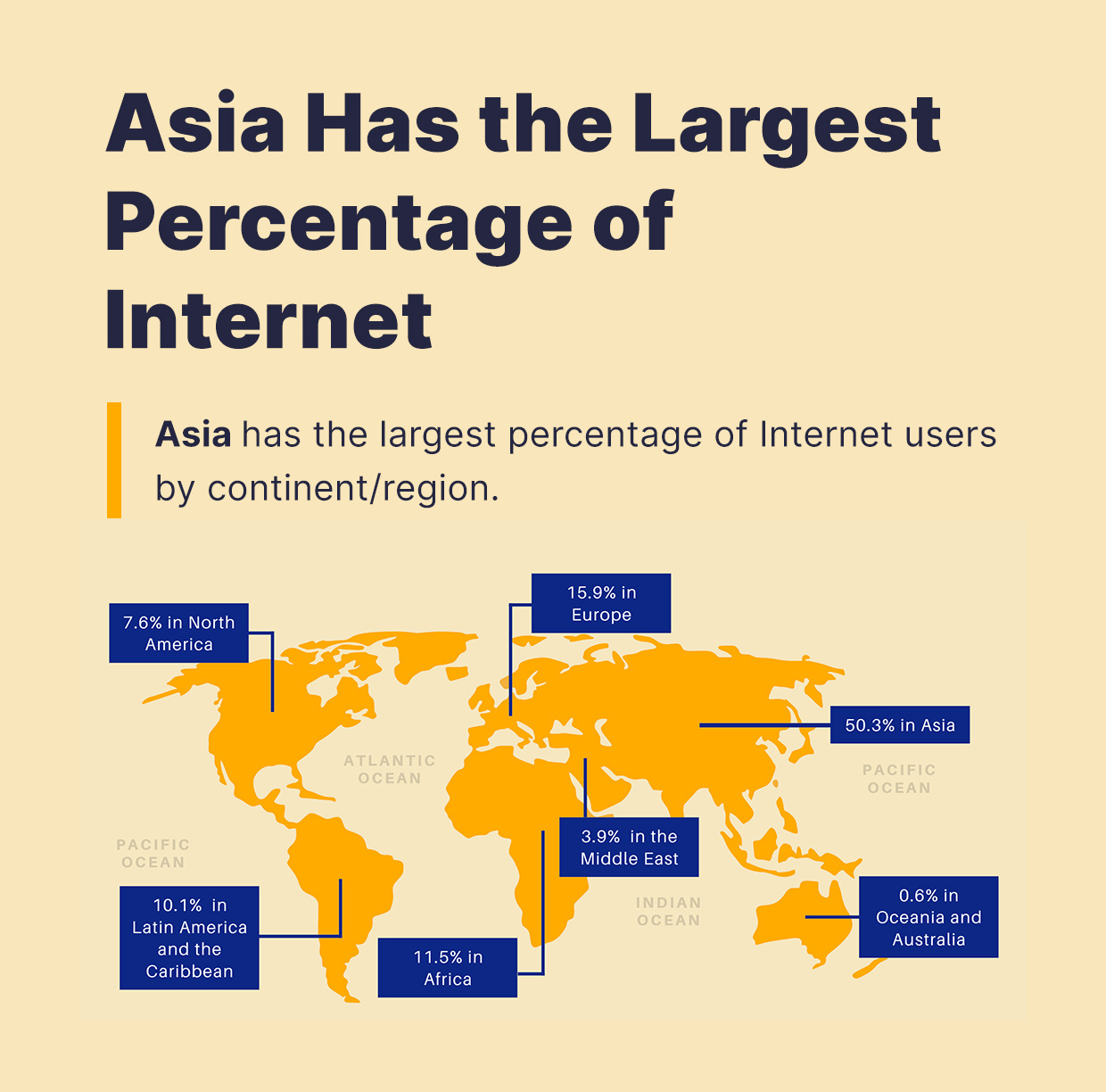

Sidebar: North America has only 7.6% of the world's Internet users:

………………………………………………………………………………………..

According to Eric Savitz of Barron’s, the U.S. networks are handling the traffic spikes without any major hiccups. In a call this past week with reporters, Comcast, the largest U.S. internet service provider, said that its network is working well, with tests done 700,000 times a day through customer modems showing average speeds running 110% to 115% of contracted rates. Overall peak traffic is up 32% on the network, with some areas up 60%, in particular around Seattle and the San Francisco Bay area, where lockdowns were put in place before they were in most of the rest of the country. In both Seattle and San Francisco, peak traffic volumes are plateauing, suggesting a new normal.

While Comcast said its peak internet traffic has increased 32 percent since the start of March, total traffic remains within the overall capacity of its network. The increase in people working at home has shifted the downstream peak to earlier in the evening, while upload traffic is growing during the day in most cities. Tony Werner, head of technology at Comcast Cable, says it has a long-term strategy of adding network capacity 12 to 18 months ahead of expected peaks. He says that approach has given Comcast the ability to smoothly absorb the added traffic. The company hasn't requested that video providers or anyone else limit their traffic.

AT&T, the second largest U.S. internet service provider, likewise asserts that its network is performing “very well” during the pandemic. This past Wednesday, it said, core traffic, including business, home broadband, and wireless, was up 18% from the same day last month. Wireless voice minutes were up 41%, versus the average Wednesday; consumer home voice minutes rose 57%, and WiFi calling was up 105%.

Over the past three weeks, the company has seen new usage patterns on its mobile network, with voice calls up 33% and instant messaging up 63%, while web browsing is down 5% and email is off 18%.

Verizon also says its network is handling the traffic well. One telling stat: the carrier says that mobile handoffs, the shifting of sessions from one cell site to another as users move around, are down 53% in the New York metro area and 29% nationally; no one is going anywhere.

In the United States prior to coronavirus, total home internet traffic averaged about 15% on weekdays. But it started growing in mid March, and by late March it had reached about 35%, clearly connected to all the working and learning from home due to stay-at-home orders.

"The data suggests remote working will remain elevated in the U.S. for a prolonged period of time," wrote analysts at Cowen.

Craig Moffett of MoffettNathanson said "The cable companies are simply digital infrastructure providers. They are agnostic about how you can get your video content. And the broadband business is going to be just fine."

“Our broadband connections are becoming our lifelines – figuratively and literally: we are using them to get news, connect to our work environments (now all virtual), and for entertainment too,” wrote Craig Labovitz, CTO for Nokia’s Deepfield portfolio, in a blog post.

………………………………………………………………………………………..

Enterprise IT Accelerates Move to Cloud:

One takeaway from this extended, forced stay at home period is that, more than ever, corporate IT (think enterprise computing and storage) is moving to the cloud. We’ve previously reported on this mega-trend in an IEEE techblog post noting the delay in 5G roll-outs. In particular:

Now the new (5G) technology faces an unprecedented slowdown to launch and expand pilot deployments. Why? It's because of the stay at home/shelter in place orders all over the world. Non-essential businesses are closed and manufacturing plants have been idled. Also, why do you need a mobile network if you're at home 95% of the time?

One reason to deploy 5G is to off load data (especially video) traffic on congested 4G-LTE networks. But just like the physical roads and highways, those 4G networks have experienced less traffic since the virus took hold. People confined to their homes need wired broadband and Wi-Fi, NOT 4G and 5G mobile access.

David Readerman of Endurance Capital Partners, a San Francisco, CA-based tech hedge fund, told Barron's: "What's certainly being reinforced right now is that cloud-based information-technology architecture is providing agility and resiliency for companies to operate dispersed workforces."

Readerman says the jury is out on whether there’s a lasting impact on how we work, but he adds that contingency planning now requires the ability to work remotely for extended periods.

On March 27th, the Wall Street Journal reported:

Cloud-computing providers are emerging as among the few corporate winners in the coronavirus pandemic as office and store closures across the U.S. have pushed more activity online.

The remote data storage and processing services provided by Amazon.com Inc., Microsoft Corp., Google and others have become the essential link for many people to remain connected with work and families, or just to unwind.

The hardware and software infrastructure those tech giants and others provide, commonly referred to as the cloud, underpins the operation of businesses that have become particularly popular during the virus outbreak, such as workplace collaboration software provider Slack, streaming video service company Netflix Inc. and online video game maker Epic Games Inc.

Demand has been so strong that Microsoft has told some customers its Azure cloud is running up against limits in parts of Australia.

“Due to increased usage of Azure, some regions have limited capacity,” the software giant said, adding it had, in some instances, placed restrictions on new cloud-based resources, according to a customer notice seen by The Wall Street Journal.

A Microsoft spokesman said the company was “actively monitoring performance and usage trends” to support customers and growth demands. “At the same time,” he said, “these are unprecedented times and we’re also taking proactive steps to plan for these high-usage periods.”

“If we think of the cloud as utility, it’s hard to imagine any other public utility that could sustain a 50% increase in utilization—whether that’s electric or water or sewage system—and not fall over,” Matthew Prince, chief executive of cloud-services provider Cloudflare Inc. said in an interview. “The fact that the cloud is holding up as well as it has is one of the real bright spots of this crisis.”

The migration to the cloud has been happening for about a decade as companies have opted to forgo costly investments into in-house IT infrastructure and instead rent processing hardware and software from the likes of Amazon or Microsoft, paying as they go for storage and data processing features. The trends have made cloud-computing one of the most contested battlefields among business IT providers.

"If you look at Amazon or Azure and how much infrastructure usage increased over the past two weeks, it would probably blow your mind how much capacity they've had to spin up to keep the world operating," said Dave McJannet, chief executive of HashiCorp Inc., which provides tools for both cloud and traditional servers. "Moments like this accelerate the move to the cloud."

In a message to rally employees, Andy Jassy, head of Amazon's Amazon Web Services (AWS) cloud division, urged them to "think about all of the AWS customers carrying extra load right now because of all of the people at home."

Brad Schick, chief executive of Seattle-based Skytap Inc., which works with companies to move existing IT systems to the cloud, has seen a 20% jump in use of its services in the past month. “A lot of the growth is driven by increased usage of the cloud to deal with the coronavirus.”

For many companies, one of the attractions of cloud services is they can quickly rent more processing horsepower and storage when it is needed, but can scale back during less busy periods. That flexibility also is helping drive cloud-uptake during the coronavirus outbreak, said Nikesh Parekh, CEO and cofounder of Seattle-based Suplari Inc., which helps companies manage their spending with outside vendors such as cloud services.

“We are starting to see CFOs worry about their cash positions and looking for ways to reduce spending in a world where revenue is going to decline dramatically over the next quarter or two,” he said. “That will accelerate the move from traditional suppliers to the cloud.”

Dan Ives of Wedbush opines that the coronavirus pandemic is a “key turning point” around deploying cloud-driven and remote-learning environments. As a majority of Americans are working or learning from home amid federal social distancing measures, Ives’ projections of moving 55% of workloads to the cloud by 2022 from 33% “now look conservative as these targets could be reached a full year ahead of expectations given this pace,” he said. He also expects that $1 trillion will be spent on cloud services over the next decade, benefiting companies such as Microsoft and Amazon.

…………………………………………………………………………………………………………..

Synergy Research Group: Hyperscale Data Center Count > 500 as of 3Q-2019

New data from Synergy Research Group shows that the total number of large data centers operated by hyperscale providers increased to 504 at the end of the third quarter, having tripled since the beginning of 2013. The EMEA and Asia-Pacific regions continue to have the highest growth rates, though the US still accounts for almost 40% of the major cloud and internet data center sites.

The next most popular locations are China, Japan, the UK, Germany and Australia, which collectively account for another 32% of the total. Over the last four quarters new data centers were opened in 15 different countries with the U.S., Hong Kong, Switzerland and China having the largest number of additions. Among the hyperscale operators, Amazon and Microsoft opened the most new data centers in the last twelve months, accounting for over half of the total, with Google and Alibaba being the next most active companies. Synergy research indicates that over 70% of all hyperscale data centers are located in facilities that are leased from data center operators or are owned by partners of the hyperscale operators.

……………………………………………………………………………………………………………………………………………………………………………………………………

Backgrounder:

One vendor in the data center equipment space recently called hyperscale “too big for most minds to envision.” Scalability has always been about creating opportunities to do small things using resources that happen to encompass a very large scale.

IDC, which provides research and advisory services to the tech industry, classifies any data center with at least 5,000 servers and 10,000 square feet of available space as hyperscale, but Synergy Research Group focuses less on physical characteristics and more on “scale-of-business criteria” that assess a company’s cloud, e-commerce, and social media operations.
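IDC's size-based definition reduces to a simple two-part threshold test. The sketch below encodes only the two criteria quoted above (at least 5,000 servers and at least 10,000 square feet); the `Facility` type and the example sites are hypothetical, and Synergy's scale-of-business criteria are not modeled because they are not specified here.

```python
from dataclasses import dataclass

# Thresholds from the IDC definition quoted in the text.
MIN_SERVERS = 5_000
MIN_SQUARE_FEET = 10_000

@dataclass
class Facility:
    """Hypothetical record describing a single data center."""
    name: str
    servers: int
    square_feet: int

def is_hyperscale_by_idc(facility: Facility) -> bool:
    """True only if the facility meets both of IDC's size thresholds."""
    return facility.servers >= MIN_SERVERS and facility.square_feet >= MIN_SQUARE_FEET

sites = [
    Facility("campus-a", servers=12_000, square_feet=250_000),
    Facility("edge-b", servers=800, square_feet=4_000),
    Facility("dense-c", servers=6_000, square_feet=9_500),
]
for site in sites:
    print(site.name, is_hyperscale_by_idc(site))
```

The third example shows that both conditions must hold under this reading: a densely packed site with enough servers but less than 10,000 square feet would not qualify.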

A hyperscale data center is to be distinguished from a multi-tenant data center: the former is owned and operated by a mega cloud provider (Amazon, Microsoft, Google, Alibaba, etc.), while the latter is owned and operated by a real estate company that leases cages to tenants who supply their own IT equipment.

A hyperscale data center accomplishes the following functions:

- Maximizes cooling efficiency. The largest operational expense in most data centers worldwide — more so than powering the servers — is powering the climate control systems. A hyperscale structure may be partitioned to compartmentalize high-intensity computing workloads, and concentrate cooling power on the servers hosting those workloads. For general-purpose workloads, a hyperscale architecture optimizes airflow throughout the structure, ensuring that hot air flows in one direction (even if it’s a serpentine one) and often reclaiming the heat from that exhaust flow for recycling purposes.

- Allocates electrical power in discrete packages. In facilities designed to be occupied by multiple tenants, “blocks” are allocated like lots in a housing development. Here, the racks that occupy those blocks are allocated a set number of kilowatts — or, more recently, fractions of megawatts — from the main power supply. When a tenant leases space from a colocation provider, that space is often phrased not in terms of numbers of racks or square footage, but kilowatts. A design that’s more influenced by hyperscale helps ensure that kilowatts are available when a customer needs them.

- Ensures electricity availability. Many enterprise data centers are equipped with redundant power sources (engineers call this configuration 2N), often backed up by a secondary source or generator (2N + 1). A hyperscale facility may utilize one of these configurations as well, although in recent years, workload management systems have made it feasible to replicate workloads across servers, making the workloads redundant rather than the power, reducing electrical costs. As a result, newer data centers don’t require all that power redundancy. They can get away with just N + 1, saving not just equipment costs but building costs as well.

- Balances workloads across servers. Because heat tends to spread, one overheated server can easily become a nuisance for the other servers and network gear in its vicinity. When workloads and processor utilization are properly monitored, the virtual machines and/or containers housing high-intensity workloads may be relocated to, or distributed among, processors that are better suited to their functions, or that are simply not being utilized nearly as much at the moment. Even distribution of workloads directly correlates to temperature reduction, so how a data center manages its software is just as important as how it maintains its support systems.
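The power redundancy schemes named in the list can be compared by counting power units. The sketch below assumes the facility load is served by identical units (for example UPS modules) of fixed capacity, where N is the minimum number of units that can carry the load; the 2N + 1 scheme is interpreted as written in the text, and the 4 MW load and 500 kW unit size are hypothetical.

```python
import math

def units_required(load_kw: float, unit_kw: float, scheme: str) -> int:
    """Number of identical power units to install under a redundancy scheme.

    N is the minimum count that carries the load; each scheme adds
    spare capacity on top of that.
    """
    if load_kw <= 0 or unit_kw <= 0:
        raise ValueError("load_kw and unit_kw must be positive")
    n = math.ceil(load_kw / unit_kw)
    schemes = {
        "N": n,             # no redundancy
        "N+1": n + 1,       # one spare unit
        "2N": 2 * n,        # a complete duplicate power path
        "2N+1": 2 * n + 1,  # duplicate path plus one more spare
    }
    if scheme not in schemes:
        raise ValueError(f"unknown scheme: {scheme}")
    return schemes[scheme]

# Hypothetical 4 MW hall served by 500 kW units.
for scheme in ("N", "N+1", "2N", "2N+1"):
    print(scheme, units_required(4_000, 500, scheme))
```

In this example, moving from 2N to N + 1 cuts the installed units from 16 to 9, which illustrates the equipment saving described above when redundancy shifts from the power plant to replicated workloads.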
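The workload-balancing idea can be illustrated with a simple greedy heuristic: place each workload, largest first, on whichever server currently has the lowest utilization. This is a textbook list-scheduling sketch, not how any particular operator's orchestrator works; the server names and utilization figures are hypothetical, and real schedulers also weigh thermal telemetry, memory, and placement constraints.

```python
import heapq

def place_workloads(servers: dict[str, float], workloads: dict[str, float]) -> dict[str, str]:
    """Greedily assign each workload to the currently least-utilized server.

    `servers` maps server name -> current utilization (0.0 to 1.0);
    `workloads` maps workload name -> expected utilization it adds.
    Returns a mapping of workload name -> server name.
    """
    # Min-heap keyed on utilization, so the least-loaded server is on top.
    heap = [(load, name) for name, load in servers.items()]
    heapq.heapify(heap)
    placement = {}
    # Placing the largest workloads first tends to give a more even spread.
    for wl_name, wl_load in sorted(workloads.items(), key=lambda kv: kv[1], reverse=True):
        load, server = heapq.heappop(heap)
        placement[wl_name] = server
        heapq.heappush(heap, (load + wl_load, server))
    return placement

servers = {"rack1-s1": 0.70, "rack1-s2": 0.20, "rack2-s1": 0.35}
workloads = {"analytics": 0.30, "web": 0.10, "batch": 0.25}
print(place_workloads(servers, workloads))
```

Here the already hot `rack1-s1` receives nothing, and the new load is spread across the two cooler servers.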

References:

https://www.zdnet.com/article/how-hyperscale-data-centers-are-reshaping-all-of-it/

https://www.vxchnge.com/blog/rise-of-hyperscale-data-centers

………………………………………………………………………………………………………………………………………………………………………………………………………..

Synergy’s research is based on an analysis of the data center footprint of 20 of the world’s major cloud and internet service firms, including the largest operators in SaaS, IaaS, PaaS, search, social networking, e-commerce and gaming. The companies with the broadest data center footprint are the leading cloud providers – Amazon, Microsoft, Google and IBM. Each has 60 or more data center locations with at least three in each of the four regions – North America, APAC, EMEA and Latin America. Oracle also has a notably broad data center presence. The remaining firms tend to have their data centers focused primarily in either the US (Apple, Facebook, Twitter, eBay, Yahoo) or China (Alibaba, Baidu, Tencent).

“There were more new hyperscale data centers opened in the last four quarters than in the preceding four quarters, with activity being driven in particular by continued strong growth in cloud services and social networking,” said John Dinsdale, a Chief Analyst and Research Director at Synergy Research Group.

“This is good news for wholesale data center operators and for vendors supplying the hardware that goes into those data centers. In addition to the 504 current hyperscale data centers we have visibility of a further 151 that are at various stages of planning or building, showing that there is no end in sight to the data center building boom.”

Reference:

https://www.srgresearch.com/articles/hyperscale-data-center-count-passed-500-milestone-q3

…………………………………………………………………………………………………………………………………………………………………………………………………………

Verizon Software-Defined Interconnect: Private IP network connectivity to Equinix global DCs

Verizon today announced the launch of Software-Defined Interconnect (SDI), a solution that works with Equinix Cloud Exchange Fabric™ (ECX Fabric™), offering organizations with a Private IP network direct connectivity to 115 Equinix International Business Exchange™ (IBX®) data centers (DCs) around the globe within minutes.

Verizon claims that SDI, which builds on its Private IP service [1], provides a faster, more flexible alternative to traditional interconnectivity, which requires costly buildouts, long lead times, complex provisioning and often truck rolls. According to Verizon, APIs are used to automate connections and, often, reduce costs. The telco said in a press release:

SDI addresses the longstanding challenges associated with connecting premises networks to colocation data centers. To do this over traditional infrastructure requires costly build-outs, long lead times and complex provisioning. The SDI solution leverages an automated Application Program Interface (API) to quickly and simply integrate pre-provisioned Verizon Private IP bandwidth via ECX Fabric, while eliminating the need for dedicated physical connectivity. The result is to make secure colocation and interconnection faster and easier for customers to implement, often at a significantly lower cost.

Note 1. Private IP is an MPLS-based VPN service that provides a simple network designed to grow with your business and help you consolidate your applications into a single network infrastructure. It gives you dedicated, secure connectivity that helps you adapt to changing demands, so you can deliver a better experience for customers, employees and partners.

Private IP uses Layer 3 networking to connect locations virtually rather than physically. That means you can exchange data among many different sites using Permanent Virtual Connections through a single physical port. Our MPLS-based VPN solution combines the flexibility of IP with the security and reliability of proven network technologies.
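The description in Note 1 (many logically separate connections over one physical port) matches the general MPLS Layer 3 VPN model, in which provider edge routers keep a separate routing table per customer VPN, commonly called a VRF. The toy model below is a conceptual sketch of that idea only, not Verizon's implementation: the VPN names, prefixes, and site labels are hypothetical, and label switching itself is not modeled. It shows why two customers can reuse the same private address space on shared infrastructure: each lookup is scoped to one customer's table and uses longest-prefix match.

```python
import ipaddress
from typing import Optional

class ToyVrfRouter:
    """Per-VPN routing tables with longest-prefix-match lookup (conceptual only)."""

    def __init__(self) -> None:
        # VPN name -> list of (network, next-hop site)
        self.tables: dict[str, list[tuple[ipaddress.IPv4Network, str]]] = {}

    def add_route(self, vpn: str, prefix: str, site: str) -> None:
        self.tables.setdefault(vpn, []).append((ipaddress.ip_network(prefix), site))

    def lookup(self, vpn: str, address: str) -> Optional[str]:
        """Return the site for `address` within one VPN's table, or None."""
        addr = ipaddress.ip_address(address)
        matches = [(net, site) for net, site in self.tables.get(vpn, []) if addr in net]
        if not matches:
            return None
        # The longest prefix (most specific route) wins.
        return max(matches, key=lambda m: m[0].prefixlen)[1]

router = ToyVrfRouter()
# Two customers reuse the same 10.0.0.0/8 space without conflict.
router.add_route("customer-a", "10.0.0.0/8", "a-hq")
router.add_route("customer-a", "10.20.0.0/16", "a-colo")
router.add_route("customer-b", "10.0.0.0/8", "b-dc")

print(router.lookup("customer-a", "10.20.1.5"))
print(router.lookup("customer-a", "10.9.9.9"))
print(router.lookup("customer-b", "10.20.1.5"))
```

The same destination address, 10.20.1.5, resolves to different sites depending on which customer's table is consulted, which is the isolation property an MPLS VPN provides.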

……………………………………………………………………………………………………………

“SDI is an addition to our best-in-class software-defined suite of services that can deliver performance ‘at the edge’ and support real-time interactions for our customers,” said Vickie Lonker, vice president of product management and development for Verizon. “Think about how many devices are connected to data centers, the amount of data generated, and then multiply that when 5G becomes ubiquitous. Enabling enterprises to virtually connect to Verizon’s private IP services by coupling our technology with the proven ECX Fabric makes it easy to provision and manage data-intensive network traffic in real time, lifting a key barrier to digital transformation.”

Verizon's Private IP MPLS network is seeing high double-digit year-over-year traffic growth, and colocation services continue to proliferate as more businesses grapple with complex cloud deployments to achieve greater efficiency, flexibility and additional functionality in data management.

“Verizon’s new Software Defined Interconnect addresses one of the leading issues for organizations by improving colocation access. This offer facilitates a reduction in network and connectivity costs for accessing colocation data centers, while promoting agility and innovation for enterprises. This represents a competitive advantage for Verizon as it applies SDN technology to improve interconnecting its Private IP MPLS network globally,” said Courtney Munroe, group vice president at IDC.

“With Software-Defined Interconnect, a key barrier to digital transformation has been lifted. By allowing enterprises to virtually connect to Verizon’s private IP services using the proven ECX Fabric, SDI makes secure colocation and interconnection easier – and more financially viable – to implement than ever before,” said Bill Long, vice president, interconnection services at Equinix [2].

Note 2. Equinix Internet Exchange™ enables networks, content providers and large enterprises to exchange internet traffic through the largest global peering solution across 52 markets.

………………………………………………………………………………………………………

Expert Opinion:

SDI is an incremental addition to Verizon’s overall strategy of interconnecting with other service providers to meet customer needs, as well as virtualizing its network, says Brian Washburn, an analyst at Ovum (which, like Light Reading and many market research firms, is owned by Informa).

“Everything can be dynamic, everything can be made pay-as-you-go, everything can be controlled as a series of virtual resources to push them around the network as you need it, when you need it,” Washburn says.

For Equinix, the Verizon deal adds to its gravitational pull. “It pulls in assets and just connects as many things to other things as possible. It is a virtuous circle. The more things they get into their data centers, the more resources they have there, that pulls in more companies to connect to the resources,” Washburn says. Equinix is standardizing its APIs to make interconnection easy.

SDI is similar to CenturyLink Dynamic Connections, which connects enterprises directly to public cloud services. And telcos are building interconnects with each other; for example, AT&T with Colt. “I expect we’ll see more of this sort of automation taking advantage of Equinix APIs,” Washburn says.

Microsoft also provides a virtual WAN service to connect enterprises to Azure. “It’s a different story, but it falls into the broader category of automation between network operators and cloud services,” Washburn says.

…………………………………………………………………………………………………………..

Verizon manages 500,000+ network, hosting, and security devices and 4,000+ networks in 150+ countries. To find out more about how Verizon’s global IP network, managed network services and Software-Defined Interconnect work, please visit:

https://enterprise.verizon.com/products/network/connectivity/private-ip/

IHS Markit: Microsoft #1 for total cloud services revenue; AWS remains leader for IaaS; Multi-clouds continue to form

Following is information and insight from the IHS Markit Cloud & Colocation Services for IT Infrastructure and Applications Market Tracker.

Highlights:

· The global off-premises cloud service market is forecast to grow at a five-year compound annual growth rate (CAGR) of 16 percent, reaching $410 billion in 2023.

· We expect cloud as a service (CaaS) and platform as a service (PaaS) to be tied for the largest 2018 to 2023 CAGR of 22 percent. Infrastructure as a service (IaaS) and software as a service (SaaS) will have the second and third largest CAGRs of 14 percent and 13 percent, respectively.
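The headline forecast can be checked with compound-growth arithmetic. The Python sketch below back-calculates the 2018 market size implied by a 16 percent CAGR that ends at $410 billion in 2023. It also shows how much each segment grows over five years at the quoted CAGRs. The 2018 base is derived here, not an IHS Markit figure, and it assumes exactly five compounding periods (2018 to 2023).

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value: start = end / (1 + cagr) ** years."""
    return end_value / (1 + cagr) ** years

def growth_multiple(cagr: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding at `cagr` for `years`."""
    return (1 + cagr) ** years

# Total off-premises cloud market: 16% CAGR, $410B in 2023, five years after 2018.
base_2018 = implied_start_value(410.0, 0.16, 5)
print(f"Implied 2018 market: ${base_2018:.0f}B")  # Implied 2018 market: $195B

# Segment CAGRs quoted above, 2018 to 2023.
for segment, rate in [("CaaS", 0.22), ("PaaS", 0.22), ("IaaS", 0.14), ("SaaS", 0.13)]:
    print(f"{segment}: {growth_multiple(rate, 5):.2f}x over five years")
```

At 22 percent, CaaS and PaaS revenue would grow roughly 2.7x between 2018 and 2023, compared with about 1.9x for IaaS and 1.8x for SaaS.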

IHS Markit analysis:

Microsoft in 2018 became the market share leader for total off-premises cloud service revenue with 13.8 percent share, bumping Amazon to the #2 spot with 13.2 percent; IBM was #3 with 8.8 percent revenue share. Microsoft’s success can be attributed to its comprehensive portfolio and the growth it is experiencing from its more advanced PaaS and CaaS offerings.

Although Amazon relinquished its lead in total off-premises cloud service revenue, it remains the top IaaS provider. In this very segmented market with a small number of large, well-established providers competing for market share:

· Amazon was #1 in IaaS in 2018 with 45 percent of IaaS revenue.

· Microsoft was #1 for CaaS with 22 percent of CaaS revenue and #1 in PaaS with 27 percent of PaaS revenue.

· IBM was #1 for SaaS with 17 percent of SaaS revenue.

…………………………………………………………………………………………………………………………………

“Multi-clouds [1] remain a very popular trend in the market; many enterprises are already using various services from different providers and this is continuing as more cloud service providers (CSPs) offer services that interoperate with services from their partners and their competitors,” said Devan Adams, principal analyst, IHS Markit. Expectations of increased multi-cloud adoption were displayed in our recent Cloud Service Strategies & Leadership North American Enterprise Survey – 2018, where respondents stated that in 2018 they were using 10 different CSPs for SaaS (growing to 14 by 2020) and 10 for IT infrastructure (growing to 13 by 2020).

Note 1. Multi-cloud (also multicloud or multi cloud) is the use of multiple cloud computing and storage services in a single network architecture. This refers to the distribution of cloud assets, software, applications, and more across several cloud environments.
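The survey figures in the quote above imply steady growth in the number of cloud providers each enterprise uses. The short Python calculation below annualizes the 2018-to-2020 increases. These rates are derived here from the quoted counts and are not reported by IHS Markit.

```python
def annualized_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1

# CSP counts reported in the survey: 2018 actual and 2020 expected.
survey = {"SaaS": (10, 14), "IT infrastructure": (10, 13)}

for category, (n_2018, n_2020) in survey.items():
    total = n_2020 / n_2018 - 1
    yearly = annualized_growth(n_2018, n_2020, 2)
    print(f"{category}: +{total:.0%} total, about {yearly:.1%} per year")
```

That works out to roughly 18 percent a year for SaaS providers and 14 percent a year for IT infrastructure providers.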

There have recently been numerous multi-cloud related announcements highlighting its increased availability, including:

· Microsoft: Entered into a partnership with Adobe and SAP to create the Open Data Initiative, designed to provide customers with a complete view of their data across different platforms. The initiative allows customers to use several applications and platforms from the three companies including Adobe Experience Cloud and Experience Platform, Microsoft Dynamics 365 and Azure, and SAP C/4HANA and S/4HANA.

· IBM: Launched Multicloud Manager, designed to help companies manage, move, and integrate apps across several cloud environments. Multicloud Manager is run from IBM’s Cloud Private and enables customers to extend workloads from public to private clouds.

· Cisco: Introduced CloudCenter Suite, a set of software modules created to help businesses design and deploy applications on different cloud provider infrastructures. It is a Kubernetes-based multi-cloud management tool that provides workflow automation, application lifecycle management, cost optimization, governance and policy management across cloud provider data centers.

IHS Markit Cloud & Colocation Intelligence Service:

The bi-annual IHS Markit Cloud & Colocation Services Market Tracker covers worldwide and regional market size, share, five-year forecast analysis, and trends for IaaS, CaaS, PaaS, SaaS, and colocation. This tracker is a component of the IHS Markit Cloud & Colocation Intelligence Service, which also includes the Cloud & Colocation Data Center Building Tracker and Cloud and Colocation Data Center CapEx Market Tracker. Cloud service providers tracked within this service include Amazon, Alibaba, Baidu, IBM, Microsoft, Salesforce, Google, Oracle, SAP, China Telecom, Deutsche Telekom, Tencent, China Unicom and others. Colocation providers tracked include Equinix, Digital Realty, China Telecom, CyrusOne, NTT, Interxion, China Unicom, CoreSite, QTS, Switch, 21Vianet, Internap and others.

DriveNets Network Cloud: Fully disaggregated software solution that runs on white boxes

by Ofer Weill, Director of Product Marketing at DriveNets; edited and augmented by Alan J Weissberger

Introduction:

Networking software startup DriveNets announced in February that it had raised $110 million in its first (Series A) round of venture capital funding. DriveNets, headquartered in Ra’anana, Israel, offers a cloud-based service called Network Cloud that simplifies the deployment of new services for carriers at a time when many telcos are facing declining profit margins. Bessemer Venture Partners and Pitango Growth are the lead VC investors in the round, which also includes money from an undisclosed number of private angel investors.

DriveNets was founded in 2015 by telco experts Ido Susan and Hillel Kobrinsky, who are committed to building the best-performing CSP networks and improving their economics. Network Cloud was designed and built for CSPs (communications service providers), addressing their strict resilience, security and QoS requirements with zero compromise.

“We believe Network Cloud will become the networking model of the future,” said DriveNets co-founder and CEO Ido Susan in a statement. “We’ve challenged many of the assumptions behind traditional routing infrastructures and created a technology that will allow service providers to address their biggest challenges like the exponential capacity growth, 5G deployments and low-latency AI applications.”

The Solution:

Network Cloud does not use open-source code. It is an “unbundled” networking software solution that runs over a cluster of low-cost white-box routers and white-box x86-based compute servers. DriveNets developed its own network operating system (NOS) rather than use an open-source NOS or Cumulus’ NOS, as several other open networking software companies have done.

Because Network Cloud is fully disaggregated, its shared data plane scales out linearly with capacity demand. A single Network Cloud can encompass up to 7,680 100G ports in its largest configuration. Its control plane scales up separately, consolidating any service and routing protocol.

The Network Cloud data plane is built from just two white-box building blocks: NCP for packet forwarding and NCF for fabric. This shrinks operational expenses by reducing the number of hardware devices, software versions and change procedures associated with building and managing the network. Both white boxes (NCP and NCF) are based on Broadcom’s Jericho2 chipset, which has high-speed, high-density 100G and 400G port interfaces. At maximum port count, a single virtual chassis might be configured with 30,720 x 10G/25G ports, 7,680 x 100G ports, or 1,920 x 400G ports.
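The three maximum-port configurations for a single virtual chassis are consistent with one another. A quick Python check, assuming the 10G/25G ports are counted at their 25G rate, shows that each works out to the same aggregate port capacity. That total is derived here and is not a figure quoted by DriveNets.

```python
def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Total port capacity in Tbps for a given port count and per-port rate."""
    return ports * gbps_per_port / 1_000

# Maximum-port configurations of a single virtual chassis, as listed above.
# Assumption: the 10G/25G ports are counted at 25G.
configs = {"25G": (30_720, 25), "100G": (7_680, 100), "400G": (1_920, 400)}

for speed, (ports, gbps) in configs.items():
    print(f"{ports:>6,} x {speed:>4} = {aggregate_tbps(ports, gbps):.0f} Tbps")
```

Each configuration totals 768 Tbps. The options therefore trade port count for port speed over the same underlying capacity.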

Last month, DriveNets added 400G-port routing support to its disaggregated router (via white-box routers using the aforementioned Broadcom chipset). The latest Network Cloud hardware and software is now being tested and certified by an undisclosed tier-1 telco customer.

“Just like hyper-scale cloud providers have disaggregated hardware and software for maximum agility, DriveNets is bringing a similar approach to the service provider router market. It is impressive to see it coming to life, taking full advantage of the strength and scale of our Jericho2 device,” said Ram Velaga, Senior Vice President and General Manager of the Switch Products Division at Broadcom.

The Network Cloud control plane runs on a separate compute server and is based on containerized microservices that run different routing services for different network functions (core, edge, aggregation, etc.). Where these services are co-located, service chaining lets all router services share the same infrastructure.
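Service chaining can be pictured as passing each packet through an ordered list of services that share one runtime. The Python sketch below is illustrative only. DriveNets has not published its microservice interfaces, so the service names, the blocklist and the route table are hypothetical, and each “microservice” is reduced to an in-process function.

```python
import ipaddress
from typing import Callable, Dict, List, Optional

# A packet is modeled as a plain dict of header fields (hypothetical, for illustration).
Packet = Dict[str, object]
# A service returns the (possibly modified) packet, or None to drop it.
Service = Callable[[Packet], Optional[Packet]]

BLOCKLIST = {"203.0.113.66"}  # hypothetical attack source
ROUTES = {
    ipaddress.ip_network("10.1.0.0/16"): "core-a",
    ipaddress.ip_network("10.2.0.0/16"): "core-b",
}

def ddos_filter(pkt: Packet) -> Optional[Packet]:
    """Drop packets from blocklisted sources."""
    return None if pkt["src"] in BLOCKLIST else pkt

def edge_marking(pkt: Packet) -> Optional[Packet]:
    """Mark voice traffic with DSCP 46 (Expedited Forwarding); everything else is best effort."""
    return {**pkt, "dscp": 46 if pkt.get("voice") else 0}

def core_forwarding(pkt: Packet) -> Optional[Packet]:
    """Longest-prefix match on the destination against a toy route table."""
    dst = ipaddress.ip_address(pkt["dst"])
    matches = [net for net in ROUTES if dst in net]
    best = max(matches, key=lambda net: net.prefixlen, default=None)
    return {**pkt, "next_hop": ROUTES[best] if best is not None else "default"}

def run_chain(chain: List[Service], pkt: Packet) -> Optional[Packet]:
    """Pass a packet through each service in order, stopping if one drops it."""
    for service in chain:
        result = service(pkt)
        if result is None:
            return None
        pkt = result
    return pkt

chain: List[Service] = [ddos_filter, edge_marking, core_forwarding]
print(run_chain(chain, {"src": "198.51.100.7", "dst": "10.2.0.9", "voice": True}))
print(run_chain(chain, {"src": "203.0.113.66", "dst": "10.1.0.1"}))  # None: dropped by ddos_filter
```

Because every service uses the same packet-in, packet-out contract, a new service can be inserted into the list without changing the others.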

Multi-layer resiliency, with automatic failure recovery, is a key feature of Network Cloud. Inter-router redundancy and geo-redundancy of the control plane allow a new end-to-end path to be selected by routing around points of failure.
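DriveNets does not describe its path-selection algorithm here. As a generic illustration of routing around a point of failure, the Python sketch below uses Dijkstra’s algorithm to recompute a shortest path after excluding a failed node. Link-state protocols such as OSPF and IS-IS use the same approach. The four-node topology and link costs are hypothetical.

```python
import heapq
from typing import AbstractSet, Dict, List, Optional, Tuple

# Hypothetical topology: node -> {neighbor: link cost}, listed in both directions.
TOPOLOGY: Dict[str, Dict[str, int]] = {
    "PE1": {"P1": 1, "P2": 2},
    "P1": {"PE1": 1, "P2": 1, "PE2": 1},
    "P2": {"PE1": 2, "P1": 1, "PE2": 2},
    "PE2": {"P1": 1, "P2": 2},
}

def shortest_path(graph: Dict[str, Dict[str, int]], src: str, dst: str,
                  failed: AbstractSet[str] = frozenset()) -> Optional[List[str]]:
    """Dijkstra's algorithm that ignores any node listed in `failed`."""
    if src in failed or dst in failed:
        return None
    dist: Dict[str, int] = {src: 0}
    prev: Dict[str, str] = {}
    heap: List[Tuple[int, str]] = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            # Walk predecessors back to the source to rebuild the path.
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist[node]:
            continue  # stale heap entry; a shorter distance was already found
        for nbr, cost in graph[node].items():
            if nbr in failed:
                continue  # route around the failed element
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    return None  # destination unreachable

print(shortest_path(TOPOLOGY, "PE1", "PE2"))                 # ['PE1', 'P1', 'PE2']
print(shortest_path(TOPOLOGY, "PE1", "PE2", failed={"P1"}))  # ['PE1', 'P2', 'PE2']
```

A real network would also flood the topology change to every router before recomputing. The sketch compresses that process into a single function call.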

Network Cloud’s orchestration capabilities include Zero Touch Provisioning, full life cycle management and automation, as well as superior diagnostics with unmatched transparency. These are illustrated in the figures below:

Image Courtesy of DriveNets

Future New Services:

Network Cloud is also a platform for new revenue generation. For example, operators can add third-party services as separate microservices, such as DDoS protection, managed LAN-to-WAN, network analytics, core network and edge network services.

“Unlike existing offerings, Network Cloud has built a disaggregated router from scratch. We adapted the data-center switching model behind the world’s largest clouds to routing, at a carrier-grade level, to build the world’s largest Service Providers’ networks. We are proud to show how DriveNets can rapidly and reliably deploy technological innovations at that scale,” said Ido Susan, CEO and co-founder of DriveNets, in a press release.

………………………………………………………………………………………………

References:

https://www.drivenets.com/about-us

https://www.drivenets.com/uploads/Press/201904_dn_400g.pdf