New VMware Private Mobile Network Service to be delivered by Federated Wireless

Federated Wireless, a shared spectrum and private wireless network operator, today announced it will deliver private 4G and 5G networks-as-a-service for enterprises in the form of the new VMware Private Mobile Network Service. Federated Wireless will build and operate private 4G and 5G radio access network (RAN) infrastructure to be deployed on customers’ premises. VMware will provide its Private Mobile Network Orchestrator to manage the end-to-end network and integrate it with existing IT environments.

The streamlined solution provides the performance, coverage, and security benefits of private cellular networks without the complexity of building and operating standalone infrastructure.

Key features and benefits of the joint solution include:

- Streamlined deployment of private 4G/5G RAN at enterprise locations

- Simplified private mobile core integrated with existing IT management platforms

- Centralized orchestration and automation of the end-to-end networks

- Enhanced security and more optimized connectivity for business- and mission-critical applications

- Carrier-grade performance with SLAs tailored to enterprise requirements

- Ability to leverage CBRS shared spectrum as well as privately licensed spectrum

“Enterprises are looking to private cellular networks to enable business transformation, but need solutions that integrate with their existing infrastructure,” said Kevin McCartney, Vice President of Alliances at Federated Wireless. “Through the strength of our combined solutioning with VMware, we’re giving customers in difficult-to-cover environments an easy on-ramp to private 4G and 5G with the performance and scale they require.”

“VMware is committed to helping customers modernize their networks through innovative software solutions,” said Saadat Malik, Vice President and General Manager, Edge Computing at VMware. “With Federated Wireless and a growing partner ecosystem, we’re making it simpler for enterprises to deploy and run private networks in a model that aligns with their business needs.”

The solution will be delivered by Federated Wireless as part of its private wireless managed service and will be available to both direct customers and channel partners.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

VMware today is also introducing new and enhanced orchestration capabilities for the edge. VMware Edge Cloud Orchestrator (formerly VMware SASE Orchestrator) will provide unified management for VMware SASE and the VMware Edge Compute Stack—an industry-first offering to bridge the gap between edge networking and edge compute. Enhancements to the orchestrator will help customers plan, deploy, run, visualize, and manage their edge environments in a friction-free manner—allowing them to run edge-native applications focused on business outcomes. The VMware Edge Cloud Orchestrator (VECO) will deliver holistic edge management by providing a single console to manage edge compute infrastructure, networking, and security.

VMware defines the software-defined edge as a distributed digital infrastructure that runs workloads across a number of locations, close to endpoints that are producing and consuming data. It extends to where the users and devices are, whether they are in the office, on the road or on the factory floor. Enterprises need solutions to connect these elements more securely and reliably to the larger enterprise network in a scalable manner. VMware Edge Cloud Orchestrator is key to enabling a software-defined edge approach. VMware’s approach to the software-defined edge features right-sized infrastructure (shrinking the stack to the smallest possible footprint); pull-based orchestration (security and administrative updates are “pulled” by the workload); and network programmability (defined by APIs and code).
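The pull-based orchestration idea above can be illustrated with a minimal sketch. This is not VMware's implementation or API: the `Orchestrator` and `EdgeAgent` classes and their methods are hypothetical. The orchestrator only publishes a desired state; each edge agent asks for it and applies it when the version differs, so the central console never needs to reach into every site.

```python
from dataclasses import dataclass, field


@dataclass
class Orchestrator:
    """Hypothetical central orchestrator: it only publishes desired state
    and never opens a connection to an edge site."""
    desired_version: str = "1.0"
    policies: dict = field(default_factory=dict)

    def publish(self, version: str, policies: dict) -> None:
        # An administrator (or pipeline) records a new security/admin update.
        self.desired_version = version
        self.policies = dict(policies)

    def desired_state(self) -> tuple:
        # Served to any agent that asks; a copy, so agents cannot mutate it.
        return self.desired_version, dict(self.policies)


@dataclass
class EdgeAgent:
    """Hypothetical agent co-located with an edge workload."""
    site: str
    applied_version: str = "0.0"
    applied_policies: dict = field(default_factory=dict)

    def poll(self, orchestrator: Orchestrator) -> bool:
        """Pull the desired state and apply it only if it changed.

        Returns True when an update was applied, False when already current.
        """
        version, policies = orchestrator.desired_state()
        if version == self.applied_version:
            return False
        self.applied_version = version
        self.applied_policies = policies
        return True


orch = Orchestrator()
agents = [EdgeAgent("factory-1"), EdgeAgent("store-42")]
orch.publish("1.1", {"firewall": "strict"})
print([agent.poll(orch) for agent in agents])  # [True, True]: both sites pull 1.1
print([agent.poll(orch) for agent in agents])  # [False, False]: nothing new to pull
```

In a real deployment the poll would presumably run on a timer over an outbound connection initiated by the site; that transport is deliberately left out of this sketch.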

“Audi wants to take factory automation to the next level and benefit from a scalable edge infrastructure at its factories worldwide,” said Jörg Spindler, Global Head of Manufacturing Engineering, Audi. “Audi’s Edge Cloud 4 Production will be the key component of this digital transformation, replacing individual PCs and hardware on the shop floor. Ultimately, it will increase factory uptime, agility, and the speed of rolling out new applications and tools across the production line. VMware Edge Compute Stack (ECS) and the VMware Edge Cloud Orchestrator (VECO) will offer a scalable way for Audi to operate a distributed edge infrastructure, manage resources more efficiently, and lower its operations costs.”

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

VMware also announced that the VMware Private Mobile Network, a managed connectivity service to accelerate edge digital transformation, will become initially available in the current quarter (FY24 Q3). VMware partners with wireless service providers to help remove the complexity associated with private mobile networks and enable enterprises to focus on their strategic business outcomes. Built on VMware Edge Compute Stack, VMware Private Mobile Network offers service providers trusted VMware technology, seamlessly integrated into existing IT management platforms. This enables rapid deployment and effortless management and orchestration. VMware is also pleased to announce that it is working with Betacom, Boingo Wireless, and Federated Wireless as the initial beta wireless service provider partners for this new offering.

Supporting Diverse Use Cases at the Edge:

VMware offers enterprises the right edge solution to address diverse use cases at the right price. It is collaborating with customers to successfully address the following edge use cases:

- Manufacturing – Support for autonomous vehicles, digital twin, inventory management, safety, and security;

- Retail – Support for loss prevention, inventory management, safety, security, and computer vision;

- Energy – Support for increased production visibility and efficiency, reduced unplanned downtime, and regulatory compliance; and,

- Healthcare – Support for IoT wearables, smart utilities, and surgical robotics.

End Quote:

“Boingo is collaborating with VMware to enhance our managed private 5G networks that connect mobile and IoT devices at airports, stadiums and large venues. VMware’s Private Mobile Network simplifies network integration and management, helping us accelerate deployments.” – Dr. Derek Peterson, chief technology officer, Boingo

References:

https://www.federatedwireless.com/products/private-wireless/

https://go.federatedwireless.com/l/940493/2023-06-12/3pj9f/940493/1686554112NWvEuSUE/WhyFederatedWireless_SolutionBrief.pdf

Granite Telecommunications expands its service offerings with Juniper Networks

Juniper Networks today announced that its customer and partner, Granite Telecommunications, a $1.8 billion provider of communications and technology solutions, has expanded its service offerings to include Juniper Networks’ full stack of campus and branch services, including Wired Access, Wireless Access and SD-WAN, all driven by Mist AI™. This move will enhance Granite’s ability to support its customers’ unique verticals, such as healthcare, retail, education, manufacturing, hospitality and financial services.

Granite has been working closely with Juniper for several years, and with this expanded AI-driven enterprise portfolio they now offer Juniper’s full suite of campus and branch networking solutions. By leveraging Mist AI and a single cloud across the wired, wireless and SD-WAN domains, Granite saves time and money with client-to-cloud automation and assurance, while accelerating deployments with Zero Touch Provisioning and automated configurations. In addition, Granite delivers more value to its customers with a broadened service portfolio that offers new highly differentiated services.

“Granite stands as Juniper’s largest AI-Driven SD-WAN partner in Managed Services within the Americas, underscoring the strength of our relationship and confidence in Juniper’s cutting-edge networking technology,” said Rob Hale, President and CEO at Granite. “As we expand our partnership, we are poised to elevate the customer experience to new heights by offering a full suite of Juniper solutions, imbued with the defining qualities of reliability, performance and security that characterize Juniper.”

Granite has been expanding its nationwide support to address the changing and growing needs of its customers. The company is committed to delivering specialized services for the unique requirements of its customers’ verticals. The addition of Juniper’s software-defined branch and wireless services is expected to be a significant benefit to many of its customer sectors. These services are designed to improve the performance and security of networks in various industries and make it easier for businesses to manage their network infrastructure.

“We are very excited to take our relationship with Granite Telecommunications to the next level,” said Sujai Hajela, Executive Vice President, AI-Driven Enterprise at Juniper Networks. “They have proven to be an exceptional partner and leader in the communications industry, who is especially adept at leveraging AI and the cloud to deliver high value managed services to their customers. With the full AI-driven enterprise portfolio, Granite can truly differentiate from their competition with exceptional client-to-cloud user experiences.”

With this expansion, Granite continues to demonstrate its commitment to providing customers with the best possible network experience. The addition of Juniper’s full-stack solutions will enable Granite to enhance its capabilities and better serve its customers, while also providing the company with a competitive edge in the market.

About Juniper Networks:

Juniper Networks is dedicated to dramatically simplifying network operations and driving superior experiences for end users. Our solutions deliver industry-leading insight, automation, security and AI to drive real business results. We believe that powering connections will bring us closer together while empowering us all to solve the world’s greatest challenges of well-being, sustainability and equality. Additional information can be found at Juniper Networks (www.juniper.net) or connect with Juniper on Twitter, LinkedIn and Facebook.

AT&T Internet Air FWA home internet service now available in 16 markets

AT&T announced today that its new fixed wireless access (FWA) home internet service, named Internet Air, is now available in more than a dozen markets across the U.S. The emphasis here is on the customer installation process, which AT&T says can be done in five easy steps, with the customer up and running in less than 15 minutes. No tech dispatch or truck rolls are necessary to install this FWA home internet service.

AT&T is now the third nationwide mobile network operator (MNO) to launch a 5G FWA home internet service. T-Mobile is the current FWA leader by subscribers, followed by Verizon. USCellular has also launched a 5G FWA service in the area it serves (not nationwide).

AT&T says it has already deployed Internet Air to existing copper-based (DSL) customers with great success. The company is now hyper-focused on selecting locations with enough wireless coverage and capacity to deliver not only a great in-home experience, but also to maintain top-notch wireless service for its existing mobile users.

Installation: Upon opening the box, customers scan a QR code to access a step-by-step guide with clear instructions. AT&T says its Smart Home Manager app makes set-up fool-proof with a feature that helps customers find the spot in the home with the strongest connection. AT&T also offers add-on Wi-Fi extenders to create whole-home mesh Wi-Fi and eliminate dead zones. The company says that while connectivity will always be its focus, customers also want a product that is visually appealing and easy to use, and that Internet Air sports a sleek, modern look that blends into any design aesthetic.

AT&T Internet Air complements AT&T Fiber, expanding AT&T’s footprint into new locations including areas of Los Angeles, CA; Philadelphia, PA; Cincinnati, OH; Harrisburg-Lancaster-Lebanon, PA; Pittsburgh, PA; Las Vegas, NV; Phoenix (Prescott), AZ; Chicago, IL; Detroit, MI; Flint-Saginaw-Bay City, MI; Hartford-New Haven, CT; Minneapolis-St. Paul, MN; Portland, OR; Salt Lake City, UT; Seattle-Tacoma, WA; and Tampa-St. Petersburg (Sarasota), FL.

Los Angeles is Charter’s largest market and a T-Mobile FWA stronghold. Philadelphia is Comcast’s home market, and Seattle is T-Mobile’s FWA home market.

Gigapower, a joint venture of AT&T and BlackRock, is building out fiber in Mesa, AZ. While Mesa and Prescott are about 100 miles apart, it will be interesting to see how fiber and FWA technologies will be adopted in the same Phoenix-area market.

AT&T Internet Air costs $55 a month plus taxes, with no overage fees, no price increase at 12 months, no equipment fees and no annual contract. AT&T ActiveArmor℠ internet security is included, so customers can stream and surf the web with peace of mind. New and existing AT&T wireless customers with an eligible wireless plan can get Internet Air for $35/month.

AT&T Internet Air is also eligible for the Affordable Connectivity Program (ACP) providing eligible households with a benefit of up to $30 a month (up to $75 a month on qualifying Tribal lands) to reduce the cost of broadband service.
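The pricing above can be combined into a quick monthly-cost estimate. This is a sketch under an explicit assumption that the announcement does not address: that the ACP benefit is subtracted after the wireless-plan discount and cannot take the bill below zero. The function name `monthly_cost` is hypothetical, and taxes are ignored.

```python
LIST_PRICE_USD = 55.0    # standard monthly price, before taxes
BUNDLE_PRICE_USD = 35.0  # monthly price with an eligible AT&T wireless plan


def monthly_cost(has_eligible_wireless_plan: bool, acp_benefit_usd: float = 0.0) -> float:
    """Estimated out-of-pocket monthly price in USD, before taxes.

    Assumption (not stated by AT&T): the ACP benefit is subtracted after the
    wireless-plan discount and cannot push the bill below zero.
    """
    price = BUNDLE_PRICE_USD if has_eligible_wireless_plan else LIST_PRICE_USD
    return max(price - acp_benefit_usd, 0.0)


print(monthly_cost(False))        # 55.0
print(monthly_cost(True))         # 35.0
print(monthly_cost(False, 30.0))  # 25.0 with the standard ACP benefit
print(monthly_cost(True, 75.0))   # 0.0 with the Tribal-lands maximum
```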

References:

https://about.att.com/blogs/2023/internet-air.html

https://www.att.com/internet/internet-air/

https://www.fiercewireless.com/wireless/fwa-and-then-there-were-three-entner

ABI Research joins the chorus: 5G FWA is a competitive alternative to wired broadband access

MoffettNathanson: ROI will be disappointing for new fiber deployments; FWA best for rural markets

IBD – Controversy over 5G FWA: T-Mobile and Verizon are in; AT&T is out

Verizon broadband – a combination of FWA and Fios (but not so much pay TV)

Verizon makes 5G Business Internet (FWA) available in 24 U.S. cities

Kaleido Intelligence: eSIMs for smartphones and IoT at 21% CAGR from 2023-2028

A new report from Kaleido Intelligence predicts that 1.4 billion eSIMs will be shipped in 2028, with shipments growing at a 21% CAGR between now and then. The market research firm also forecasts robust average growth of 77% in smartphone eSIM activations between 2023 and 2028.
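To put the 21% CAGR in perspective, the compounding can be worked out directly. This sketch assumes the rate applies to total eSIM shipments over five annual periods (2023 to 2028); the implied 2023 base it prints is derived arithmetic, not a figure published by Kaleido. The helper names are illustrative.

```python
def growth_multiplier(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` for `years` periods."""
    return (1.0 + cagr) ** years


def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Starting value consistent with `final_value` after `years` at `cagr`."""
    return final_value / growth_multiplier(cagr, years)


# Assumption: 21% CAGR over 2023 -> 2028 (five compounding periods),
# ending at 1.4 billion eSIM shipments in 2028.
multiplier = growth_multiplier(0.21, 5)
base_2023 = implied_base(1.4e9, 0.21, 5)
print(f"growth factor: {multiplier:.2f}x")                      # 2.59x
print(f"implied 2023 shipments: {base_2023 / 1e9:.2f} billion")  # 0.54 billion
```

In other words, under that assumption a 21% CAGR means shipments roughly multiply by 2.6 over the forecast window.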

Market disruption will come in the form of ‘travel eSIM’ as well as increased ability to transfer an eSIM from one device to another, ‘forcing the market to take new shape.’

However, the most profound transformation is anticipated in the IoT space. An IoT specification for eSIMs will see ‘commercialization as pre-compliant solutions’ in 2024, and will lower the technical and investment burden of setting up IoT systems.

The press release also states: “Although many MNOs may not have a concrete strategy for IoT connectivity at retail, support for eSIM via the new specification will offer considerable opportunities at the wholesale level. With well over half of active IoT eSIMs using the new specification by 2028, demand for connectivity profiles will be greater than ever before.”

Kaleido’s research found that the combined migration towards eSIM-only smartphones and the ability to offer a more seamless journey to travel SIM purchasing is likely to help accelerate travel eSIM adoption and erode the existing physical travel SIM market.

Nitin Bhas, Chief of Strategy & Insights at Kaleido commented:

“High roaming costs, growing traveller awareness of eSIM, and the future ratification of GSMA eSIM specifications for smartphones in China will further drive this adoption surge for alternative eSIM solutions. Nevertheless, the market landscape presents mobile operators with the opportunity to reshape their traditional roaming strategies, attract new customers through similar travel eSIM services and to drive usage amongst existing customers with compelling roaming deals.”

500% Growth in Spend:

Travel eSIM retail spend is predicted to rise 500% between 2023 and 2028, as leisure and business travellers embrace cheaper eSIM travel plans outside core RLAH (Roam Like at Home) markets.

$10 billion in Retail Spend:

The global travel eSIM market will be worth close to $10 billion in 2028, accounting for over 80% of total travel SIM spend by then.

$30 billion Travel Market:

Retail spend on travel connectivity services, including roaming packages and travel SIMs, is expected to be worth over $30 billion by 2028.
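A "500% rise" over 2023 to 2028 is easier to compare with the other forecasts when converted to an annual rate. The phrase is ambiguous, so this sketch shows both readings as assumptions: a 500% increase (six times the 2023 level) and a fivefold level. Neither rate is quoted by Kaleido; both are derived here.

```python
def implied_cagr(total_growth_factor: float, years: int) -> float:
    """Annual rate that compounds to `total_growth_factor` over `years`."""
    return total_growth_factor ** (1.0 / years) - 1.0


# Two readings of "rise 500% between 2023 and 2028" (five compounding periods).
for label, factor in [("+500% increase (6x)", 6.0), ("5x the 2023 level", 5.0)]:
    print(f"{label}: {implied_cagr(factor, 5):.1%} per year")
```

Either way, travel eSIM spend would be growing at roughly 38% to 43% a year, well above the 21% CAGR for eSIM shipments overall.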

“The eSIM market has seen several developments recently that smooth the path to adoption, and address many lingering ecosystem challenges,” said Steffen Sorrell, Chief of Research at Kaleido. “The effect of this will mean eSIM or iSIM form factors will gradually become a de facto requirement by 2028 for most cellular devices.”

A recent survey by Omdia appears to back up this projection of growth for the IoT and eSIM sector. Of the hundreds of enterprise professionals polled, over 70% said they are planning to use 5G connectivity for IoT, and nearly 90% have already adopted or will adopt eSIM/iSIM technology over the next two years. Ninety-five percent expected to see measurable benefits from IoT within two years of deployment, and 90% said existing IoT projects have met or exceeded their expectations.

References:

https://telecoms.com/523216/esim-shipments-will-grow-77-by-2028/

Counterpoint Research: eSIM adoption now entering high-growth phase; +11% YoY in 2022

3GPP Release 16 5G NR Enhancements for URLLC in the RAN & URLLC in the 5G Core network

Introduction:

3GPP Release 16 was “frozen” on July 3, 2020. However, two key work items were not completed: enhancements to the 5G RAN and to the 5G Core network to support ultra-reliable low-latency communications (URLLC).

The enhancements, especially in the RAN, are essential for 3GPP New Radio (NR) to meet the ITU-R M.2410 minimum performance requirements for the URLLC use case. URLLC was intended to enable a whole new set of mission-critical applications that require ultra-high reliability, ultra-low latency (≤1 ms in the data plane and ≤10 ms in the control plane), or both.

Yet URLLC in the RAN and the associated URLLC in the RAN Conformance Test specification still have not been completed (more below)!

Overview of URLLC Enhancements:

In the 5G Core, the main functionalities introduced were support for redundant transmission, QoS monitoring, dynamic division of the Packet Delay Budget, and enhancements to the session continuity mechanism.
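The dynamic division of the Packet Delay Budget can be illustrated with a simplified model. In the 5G QoS framework, the budget left for the access network is the PDB minus the share assigned to the core network (the CN PDB); dynamic division lets that core-network share track the actual delay instead of a single static worst-case value. The numbers below are hypothetical, and the sketch omits all of the 3GPP signaling that carries these values.

```python
from dataclasses import dataclass


@dataclass
class DelayBudget:
    """Simplified split of a QoS flow's Packet Delay Budget (PDB)."""
    pdb_ms: float     # end-to-end budget between the UE and the UPF
    cn_pdb_ms: float  # share reserved for the core network (UPF to RAN transport)

    @property
    def an_pdb_ms(self) -> float:
        """Budget left for the radio access network: PDB minus CN PDB."""
        remaining = self.pdb_ms - self.cn_pdb_ms
        if remaining <= 0:
            raise ValueError("core-network delay consumes the whole PDB")
        return remaining


# Static split: the core-network share is a fixed, worst-case value.
static = DelayBudget(pdb_ms=10.0, cn_pdb_ms=5.0)
# Dynamic split: the core-network share reflects the actual UPF location,
# e.g. a hypothetical UPF deployed on premises next to the gNB.
dynamic = DelayBudget(pdb_ms=10.0, cn_pdb_ms=1.0)
print(static.an_pdb_ms, dynamic.an_pdb_ms)  # 5.0 9.0
```

The point of the dynamic split is visible in the output: with a nearby UPF, the RAN scheduler gets almost the whole budget instead of half of it.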

The 3GPP Rel 16 URLLC in the RAN spec, once complete and performance tested, is needed to meet the ITU-R M.2410 URLLC Performance Requirements.

The 5G NR physical layer is improved in several ways to support URLLC in the RAN:

- New DCI formats

- Enhanced PDCCH monitoring capability

- Sub-slot based HARQ-ACK feedback

- Two HARQ-ACK codebooks constructed simultaneously

- PUSCH enhancements

- Enhanced inter-UE Tx prioritization/multiplexing

- Multiple active configured grant configurations for a BWP
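Sub-slot based HARQ-ACK feedback matters for latency because it shortens the time step at which an acknowledgement can be scheduled. A minimal sketch, assuming normal cyclic prefix (14 OFDM symbols per slot), a slot length of 1/2^μ ms for numerology μ, and sub-slots of 2 or 7 symbols. It ignores UE processing time and TDD uplink/downlink patterns, so the figures are scheduling granularities, not end-to-end latencies.

```python
SYMBOLS_PER_SLOT = 14  # normal cyclic prefix


def slot_duration_ms(mu: int) -> float:
    """NR slot length for numerology mu (subcarrier spacing 15 * 2**mu kHz)."""
    return 1.0 / (2 ** mu)


def feedback_granularity_ms(mu: int, symbols: int = SYMBOLS_PER_SLOT) -> float:
    """Time step for placing a HARQ-ACK opportunity when feedback timing
    is counted in units of `symbols` OFDM symbols (a full slot by default)."""
    return slot_duration_ms(mu) * symbols / SYMBOLS_PER_SLOT


for mu in (0, 1, 2):
    scs_khz = 15 * 2 ** mu
    print(f"{scs_khz} kHz: slot {feedback_granularity_ms(mu):.3f} ms, "
          f"7-symbol sub-slot {feedback_granularity_ms(mu, 7):.3f} ms, "
          f"2-symbol sub-slot {feedback_granularity_ms(mu, 2):.3f} ms")
```

At 30 kHz subcarrier spacing, for example, a 2-symbol sub-slot cuts the feedback granularity from 0.5 ms to about 0.071 ms.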

3GPP Rel-17 URLLC work is mostly contained in the feature “Enhanced Industrial IoT and URLLC support for NR.” It mainly covers “Physical Layer feedback enhancements for HARQ-ACK and CSI reporting” and “Intra-UE multiplexing and prioritization of traffic with different priority.”

Current Status:

The most recent URLLC in the RAN spec dated December 2022 is 96% complete as per:

| 830074 | NR_L1enh_URLLC | Physical Layer Enhancements for NR Ultra-Reliable and Low Latency Communication (URLLC) | Rel-16 | R1 | 22/12/2022 | RP-191584 | history | 2019/03/26 | 26/06/2019 | 26/6/19: WID:RP-190726->RP-191584 |

The URLLC in the RAN Conformance Test spec is only 90% complete as per:

| 900054 | NR_L1enh_URLLC-UEConTest | … UE Conformance Test Aspects – Physical Layer Enhancements for NR URLLC | Rel-16 | R5 | 22/12/2022 | RP-202566 | history | 2021/01/06 | 20/06/2022 | 22/3/22: Compl:16 ; 20/6/22: Rapporteur: Huawei->Chunying GU, Huawei; Rap eMail: ->guchunying@huawei. |

……………………………………………………………………………………………………..

Here are the key 3GPP Rel-16 URLLC work items from https://www.3gpp.org/dynareport?code=WI-List.htm

- 830074 NR_L1enh_URLLC Physical Layer Enhancements for NR Ultra-Reliable and Low Latency Communication (URLLC)

- 800095 FS_NR_L1enh_URLLC… Study on physical layer enhancements for NR UR Low Latency Cases

- 830174 NR_L1enh_URLLC-Core… Core part: Physical Layer Enhancements for NR URLLC

- 830274 NR_L1enh_URLLC-Perf… Perf. part: Physical Layer Enhancements for NR URLLC (R4)

- 900054 NR_L1enh_URLLC-UEConTest… UE Conformance Test Aspects – Physical Layer Enhancements for NR URLLC (R5)

References:

https://portal.3gpp.org/desktopmodules/Specifications/SpecificationDetails.aspx?specificationId=3498

https://www.3gpp.org/dynareport?code=WI-List.htm

https://www.3gpp.org/dynareport?code=status-report.htm

https://www.3gpp.org/dynareport?code=FeatureOrStudyItemFile-830074.htm

https://www.3gpp.org/technologies/urlcc-2022

https://techblog.comsoc.org/category/3gpp-release-16

Executive Summary: IMT-2020.SPECS defined, submission status, and 3GPP’s RIT submissions

5G Specifications (3GPP), 5G Radio Standard (IMT 2020) and Standard Essential Patents

Another Opinion: 5G Fails to Deliver on Promises and Potential

Ericsson and Vodafone enable Irish rugby team to use Private 5G SA network for 2023 Rugby World Cup

Ericsson and Vodafone Ireland have partnered to install a cutting-edge 5G Standalone Mobile Private Network (MPN) solution for the Irish rugby team to supply fast and reliable in-play data analysis ahead of the 2023 Rugby World Cup in September.

Previously the team relied on standard WiFi across stadiums and training facilities both at home and away. The 5G Standalone MPN solution, combined with artificial intelligence technology, now gives instant feedback on team plays and tactics, with faster download and upload speeds and lower latency that can be used for real-time performance analysis and decisions on the pitch.

Using this reliable connectivity, up to eight high-resolution video streams are captured by multiple cameras and a 5G connected drone and then analysed in real time to collate data on team performance. The technology helps to improve communication between management, coaches and players and maximises the time on the pitch, where the smallest tweak to a running line or defensive position can have a significant impact on the weekend’s game.

Vodafone Ireland and Ericsson have worked closely with the IRFU and its Head of Analytics and Innovation, Vinny Hammond, and his analysis team of John Buckley, Alan Walsh and Jack Hannon. This collaboration has led to a clear understanding of the specific performance outcomes sought by an elite sports team and has supported the design and installation of the Ericsson Private 5G solution, which now lets the management team, coaches and players benefit from instant feedback and make decisions more quickly.

The new solution has been tested at the Irish team’s High Performance Centre and will be brought to France in a bespoke 5G connected van for the World Cup in September.

Vodafone Ireland Network Director, Sheila Kavanagh says: “At Vodafone, we are so proud of our support for the Irish Rugby team, so we’re delighted to bring further value through the delivery of this cutting-edge technology solution. Performance analysis has experienced massive changes in the past couple of decades. What started with pen and paper-based methods for collecting notational data has evolved to using cutting-edge computer-based technologies and artificial intelligence to collect ever increasing amounts of real-time information. Distilling and delivering this data back to the team at top speed requires a reliable, secure and scalable connectivity solution.”

“This 5G MPN, drone and additional technology will support Vinny Hammond and his analytics team to quickly breakdown and organise unstructured data and present it back in a clear manner to other coaching staff and management – helping them understand the performance of the plays and overall team, without delay. It’s fantastic to see it in use in the HPC, but we’re also really excited to support the team with 5G connectivity throughout their time at the World Cup in France with our fully kitted Connected Van. Our 5G MPN technology is a demonstration of how technology and connectivity innovation can enhance the business of sport and the performance of teams, bringing added layers of data and analysis to coaches, management, and their players.”

IRFU Head of Analytics and Innovation, Vinny Hammond says: “So much of our roles revolve around moving large quantities of data so we can analyse performance to understand what is working and what is not. Vodafone’s 5G MPN stretches the boundaries of what we can do in terms of how quickly we can analyse multiple high-resolution cameras and drone footage, which ultimately informs our strategic decision making. The work John and Alan have done on this project in conjunction with Vodafone and Ericsson has enabled us to push new boundaries at this year’s RWC. Being on our own 5G network also gives us that level of security and reliability that we really need, and we’ll have the added benefit of that connectivity with our 5G Connected Van, linking back to our High Performance Centre, to reduce reliance on third-party connectivity.”

John Griffin, Head of Ericsson Ireland, says: “5G is the ultimate platform of future innovation and our successful partnership with Vodafone continues to ensure new organisations like the IRFU can benefit from the low latency, high bandwidth, and secure connectivity of a 5G standalone private network. Our global leadership in 5G technology and accelerated software availability mean the IRFU will be one step ahead of their competitors on and off the field, giving them the best chance of success at an elite level of performance and revolutionizing the future of a key function within the sports industry.”

References:

https://www.ericsson.com/en/news/3/2023/ericsson-and-vodafone-help-irish-rugby-team-adopt-5g-technology-to-get-fast-in-play-data-analysis

South Korea government fines mobile carriers $25M for exaggerating 5G speeds; SKT says 5G vision not met

South Korea’s antitrust regulator said it had imposed a total of 33.6 billion won ($25.06 million) in fines on three domestic mobile carriers for exaggerating their 5G network speeds. The Korea Fair Trade Commission (KFTC) said the three South Korean firms – SK Telecom Co Ltd, KT Corp, and LG Uplus Corp – had also unfairly advertised that they were the fastest relative to their competitors.

“The three telecom companies advertised that consumers could use target 5G network speeds, which cannot be achieved in real-life environment … companies advertised that their 5G network speed was faster than competitors without evidence,” the KFTC said in a statement.

The regulator has also presented the three carriers’ advertisements to a local court in support of ongoing civil lawsuits filed by consumers.

SK Telecom and KT Corp declined to comment. A spokesperson at LG Uplus said the company is reviewing the sanctions.

The KFTC imposed a fine of 16.8 billion won on SK Telecom, 13.9 billion won on KT and 2.8 billion won on LG Uplus.

There were 30.76 million 5G network users in South Korea in June, accounting for about 38% of the total 80.23 million mobile subscriptions in the country, according to data from the Ministry of Science and ICT.

Source: Reuters

In a white paper issued last week, SK Telecom states, correctly, that the industry is far from achieving its 5G goals even four years after commercialization. There were “misunderstandings” about network performance and problems such as device form factors and lack of market demand, it said. “A variety of visionary services were expected, but there was no killer service,” the paper stated. “We should have taken a more objective perspective,” it added. In particular:

A variety of visionary services were expected, but there was no killer service. Even at the time when preparing for 5G, services such as autonomous driving, UAM, XR, hologram, and digital twin had appeared and were expected, but most of them did not live up to expectations. We should have taken a more objective perspective. For example, whether 5G technology alone could change the future, or whether the overall environment constituting the service was prepared together. If so, the gap between the public’s expectations for 5G and the reality would not have been large. 3D video, UHD streaming, AR/VR, autonomous driving, remote surgery, etc. are representative services presented by the 5G Vision Recommendation that are still not successful. Most of them are the result of a combination of factors such as form factor constraints, immaturity of device and service technology, low or absent market demand, and policy/regulation issues, rather than a single factor of the lack of 5G performance.

The authors concluded that instead of expecting that the new technology alone could create successful services, it would have been more effective to have collaborated with partners to build a broader 5G ecosystem.

Gap between 5G Vision Recommendations and customer expectations:

Although the usage scenarios and capability goals presented in the 5G Vision Recommendation are future goals to be achieved in the long term, misunderstandings have been created that can lead to excessive expectations of 5G performance and innovative services based on it from the beginning of commercialization. To prevent this misunderstanding from recurring in 6G, it is necessary to consider various usage scenarios of 6G, set achievable goals, and communicate accurately with the public.

In particular, there were issues raised about the maximum transmission speed of 20Gbps, which was considered an icon of 5G key performance indicators. As 3G evolved into LTE, the radio access technology also evolved from WCDMA to OFDMA, and with the introduction of CA and multi-antenna technology, it became possible to use a much wider bandwidth than 3G. This can be seen as a ‘revolutionary’ improvement. On the other hand, 5G is considered an ‘evolutionary’ improvement that supplements the performance of LTE based on the same radio access technology, CA, and multi-antenna system technology. Due to this, it was difficult to achieve in 5G, at once, the kind of increase in transmission speed seen with LTE. Moreover, the difference in technology perception was further revealed in the initial stage of 5G commercialization. Early commercialization was promoted for 5G; however, 5G required more base stations than LTE to build a nationwide network due to its frequency characteristics, requiring more effort in terms of cost and time.

SK Telecom has made significant efforts to expedite 5G nationwide rollout, but customers wanted the same level of coverage as LTE in a brief period.

References:

https://newsroom-prd-data.s3.ap-northeast-2.amazonaws.com/wp-content/uploads/2023/08/SKT6G-White-PaperEng_v1.0_web.pdf

Barriers for telcos deploying AI in order to improve network operations

Communications service providers (CSPs) face a host of barriers, such as accessing high-quality data, that impede their ability to effectively deploy AI that could improve network and service operations, according to new research commissioned by Nokia and conducted by Analysys Mason.

“CSPs are unable to access high-quality data sets (which will enable them to make more accurate decisions) because they are using legacy systems with proprietary interfaces. This will restrict how quickly they can integrate AI into their networks,” according to the research, which is based on responses from 84 CSPs surveyed globally.

Almost 50 percent of Tier-1 CSPs ranked data collection as the most challenging stage of the telco AI use case development cycle.

Further, the research found that only six percent of CSPs surveyed believe they are at the most-advanced level of automation, or zero-touch automation, which relies on AI and machine learning (ML) algorithms to manage and improve network operations. The high-quality data issue is also impacting CSPs’ ability to retain AI talent.

Still, 87 percent of CSPs have started to implement AI in their network operations, either as proofs of concept or in production, with 57 percent saying they have deployed telco AI use cases to the point of production.

CSP respondents said they believe AI will help improve network service quality, top-line growth, customer experience, and energy optimisation to meet their sustainability goals.

The research said CSPs should evaluate their telco AI strategies and develop a clear implementation roadmap to overcome their data challenge and other impediments, such as an inability to scale AI use case deployments.

Adaora Okeleke, Principal Analyst at Analysys Mason, said: “CSPs must transition to more-autonomous operations if they are to manage networks more efficiently and deliver on their main business priorities. But as this research demonstrates, accessing high-quality data remains a critical obstacle to deploying telco AI within their networks. They need to really examine their AI implementation strategies to work around this data quality issue.”

Andrew Burrell, Head of Business Applications Marketing, Cloud and Network Services at Nokia, said: “AI has a crucial role in driving step changes in network performance, including cutting carbon footprints. CSPs are aware of the challenges of more deeply embedding AI into their operations and, as this research points out, the steps they can take to positively alter that situation, including building the right ecosystem of vendor partners with the right skillsets that can better cater to their network needs.”

Resources and additional information:

Webpage: https://www.nokia.com/networks/ai-ops/


ETSI NFV evolution, containers, Kubernetes, and cloud-native virtualization initiatives

Backgrounder:

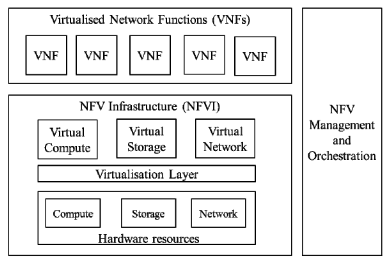

NFV, as conceived by ETSI in November 2012, has changed radically. While the virtualization and automation concepts remain intact, the envisioned implementation is completely different. Neither Virtual Network Functions (VNFs) [1.] nor Management, Automation, and Network Orchestration (MANO) [2.] was commercially successful, owing to telcos' move to a cloud-native architecture. Moving beyond virtualization to a fully cloud-native design brings a new level of efficiency and agility for rapidly deploying the innovative, differentiated offers that markets and customers demand. An important distinguishing feature of the cloud-native approach is that it uses containers [3.] rather than VNFs implemented as VMs.

Note 1. Virtual network functions (VNFs) are software applications that deliver network functions such as directory services, routers, firewalls, load balancers, and more. They are deployed as virtual machines (VMs). VNFs are built on top of NFV infrastructure (NFVI), including a virtual infrastructure manager (VIM) like OpenStack® to allocate resources like compute, storage, and networking efficiently among the VNFs.

Note 2. Management, Automation, and Network Orchestration (MANO) is a framework for provisioning and configuring VNFs and for deploying the infrastructure they run on. MANO has been superseded by Kubernetes, as described below.

Note 3. Containers are units of a software application that package code and all its dependencies so that it can run individually and reliably from one environment to another. Advantages of containers include faster deployment and a much smaller footprint, both of which can help improve resource utilization and lower resource consumption.
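Note 1 describes a VIM such as OpenStack allocating compute, storage, and networking among VNFs. As a rough illustration of that allocation role, here is a minimal Python sketch assuming a simple first-fit policy over vCPU and RAM only; real VIMs use much richer scheduling logic, and the `Host`, `VnfRequest`, and `place_first_fit` names and all capacities are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A compute host in a hypothetical NFVI resource pool."""
    name: str
    vcpus: int
    ram_gb: int
    placed: list = field(default_factory=list)

    def fits(self, req: "VnfRequest") -> bool:
        # True if the host still has enough free vCPU and RAM for the request.
        return req.vcpus <= self.vcpus and req.ram_gb <= self.ram_gb


@dataclass
class VnfRequest:
    """Resources requested by one VNF instance deployed as a VM (hypothetical)."""
    name: str
    vcpus: int
    ram_gb: int


def place_first_fit(requests, hosts):
    """Assign each VNF VM to the first host with enough free capacity.

    Returns a dict mapping VNF name -> host name, or None if nothing fits.
    """
    placement = {}
    for req in requests:
        target = next((h for h in hosts if h.fits(req)), None)
        if target is not None:
            # Deduct the allocated capacity from the chosen host.
            target.vcpus -= req.vcpus
            target.ram_gb -= req.ram_gb
            target.placed.append(req.name)
        placement[req.name] = target.name if target else None
    return placement


hosts = [Host("host-a", vcpus=16, ram_gb=64), Host("host-b", vcpus=8, ram_gb=32)]
vnfs = [
    VnfRequest("firewall", vcpus=8, ram_gb=16),
    VnfRequest("router", vcpus=8, ram_gb=32),
    VnfRequest("load-balancer", vcpus=4, ram_gb=8),
    VnfRequest("dns", vcpus=16, ram_gb=8),
]
result = place_first_fit(vnfs, hosts)
print(result)
# {'firewall': 'host-a', 'router': 'host-a', 'load-balancer': 'host-b', 'dns': None}
```

First-fit is chosen only because it is short and easy to follow; it can leave capacity fragmented, which is one reason production schedulers weigh many more factors.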


High Level NFV Framework:

Kubernetes Defined:

Each application consisted of many of these containers, which Kubernetes groups into Pods (a Pod holds one or more tightly coupled containers), so a way to manage them was needed. Many different container orchestration systems were developed, but the one that became most popular was an open source project called Kubernetes, which assumed the role of MANO. Kubernetes provides declarative interfaces at each level and defines a set of building blocks/intents (“primitives”) as API objects. These objects represent various resources such as Pods, Secrets, Deployments, and Services. Kubernetes was designed to be loosely coupled, which makes it easy to extend to support the varying needs of different workloads while still following an intent-based framework.
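The declarative, intent-based model can be illustrated with a toy reconciliation loop. This is a minimal Python sketch, not Kubernetes code: a controller compares a desired state (a hypothetical replica count for a Deployment-like object) with the observed Pods and computes the actions needed to converge. The `DesiredState` and `reconcile` names and the "upf" app name are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesiredState:
    """Hypothetical, simplified stand-in for a Deployment-like spec."""
    app: str
    replicas: int


def reconcile(desired: DesiredState, running_pods: list[str]) -> list[tuple[str, str]]:
    """Return the actions that move the observed Pods toward the desired state.

    The operator declares only *what* it wants (a replica count); the
    controller works out *how* to get there by diffing desired vs. observed.
    """
    actions: list[tuple[str, str]] = []
    diff = desired.replicas - len(running_pods)
    if diff > 0:
        # Too few Pods: create new ones, skipping names already in use.
        existing = set(running_pods)
        i = 0
        while diff > 0:
            name = f"{desired.app}-{i}"
            if name not in existing:
                actions.append(("create", name))
                existing.add(name)
                diff -= 1
            i += 1
    elif diff < 0:
        # Too many Pods: delete the surplus at the end of the list.
        actions.extend(("delete", name) for name in running_pods[diff:])
    # diff == 0: observed state already matches the intent, nothing to do.
    return actions


spec = DesiredState(app="upf", replicas=3)
print(reconcile(spec, ["upf-0"]))                             # [('create', 'upf-1'), ('create', 'upf-2')]
print(reconcile(spec, ["upf-0", "upf-1", "upf-2", "upf-3"]))  # [('delete', 'upf-3')]
print(reconcile(spec, ["upf-0", "upf-1", "upf-2"]))           # []
```

Calling `reconcile` repeatedly is safe: once the observed Pods match the declared count it returns an empty list. Kubernetes controllers apply the same desired-versus-observed idea, with far more detail (generated Pod names, health checks, rollout strategies).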

The traditional ETSI MANO framework as defined in the context of virtual machines along with 3GPP management functions.

ETSI MANO Framework and Kubernetes and associated constructs

Source of both diagrams: Amazon Web Services

…………………………………………………………………………………………………………………………………………………………………………………………………………………………….

ETSI NFV at 2023 MWC-Shanghai Conference:

During the 2023 MWC-Shanghai conference, ETSI hosted a roundtable discussion of its NFV and cloud-native virtualization initiatives. There were presentations from China Telecom, China Mobile, China Unicom, SKT, AIS, and NTT DOCOMO. Apparently, telcos want to leverage opportunities in cloud-based microservices and network resource management, but it has also become clear that there are “challenges.”

Three recurring themes during the roundtable were the following:

1) the best approach to implementing containerization (i.e., Virtual Machine (VM)-based containers versus bare-metal containers), which has replaced the Virtual Network Function (VNF) concept;

2) the lack of End-to-End (E2E) automation;

3) the friction and cost that is incurred from the presence of various incompatible fragmented solutions and products.

Considering the best approach to implementing containerization, most attendees suggested that a single unified, backward-compatible platform for managing both bare-metal and virtualized resource pools would be advantageous. Their top three concerns when selecting between VM-based containers and bare-metal containers were performance, resource consumption, and security.

…………………………………………………………………………………………………………………………………………………………………………………………………………….

ETSI NFV Evolution:

The achievements and real benefits of NFV may not be equal among all service providers worldwide, partly because of the particular use cases and contexts in which they operate. Nevertheless, based on the ETSI NFV architecture, service providers have been able to build ultra-large-scale telco cloud infrastructures with cross-layer and multi-vendor interoperability. For example, one of the world's largest telco clouds based on the ETSI NFV standard architecture includes a distributed infrastructure of multiple centralized regions and hundreds of edge data centers, with a total of more than 100,000 servers. Some network operators have also achieved very high ratios of virtualization (i.e., the amount of virtualized network functions compared to legacy ATCA-based network elements) in their targeted network systems, e.g., above 70% for 4G and 5G core network systems. Moreover, ETSI NFV standards continue to provide essential value for wider-scale multivendor interoperability, extending into the hyperscaler ecosystem, as exemplified by recent announcements of support for ETSI NFV specifications in telco network management services offered there.
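The virtualization ratio mentioned above can be made concrete with a small calculation. This Python sketch assumes the ratio is the number of virtualized network functions divided by the total (virtualized plus legacy ATCA-based elements); the article does not give an exact formula, and the inventory counts are invented for illustration, not figures from any operator.

```python
def virtualization_ratio(virtualized: int, legacy: int) -> float:
    """Share of network functions running virtualized, out of all functions.

    Assumption: ratio = virtualized / (virtualized + legacy).
    """
    total = virtualized + legacy
    if total == 0:
        raise ValueError("no network functions counted")
    return virtualized / total


# Hypothetical core-network inventory, not data from the article.
ratio = virtualization_ratio(virtualized=36, legacy=14)
print(f"{ratio:.0%}")  # 72%
```

Under this assumption, a core network clears the "above 70%" mark once fewer than three in ten of its network elements remain on legacy hardware.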

ETSI ISG NFV Release 5, initiated in 2021, had “consolidation and ecosystem” as its slogan. It aimed to address further operational issues in areas such as energy efficiency, configuration management, fault management, multi-tenancy, and network connectivity, and to consider new use cases or technologies developed by other organizations in the ecosystem.

Work on ETSI NFV Release 6 has started. It will focus on: 1) new challenges, 2) architecture evolution, and 3) additional infrastructure work items.

Key changes include:

- The broadening of virtualization technologies beyond traditional Virtual Machines (VMs) and containers (e.g., micro VM, Kata Containers, and WebAssembly)

- Creation of declarative intent-driven network operations

- Integration of heterogeneous hardware, Application Programming Interfaces (APIs), and cloud platforms through a unified management framework
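The idea of a unified management framework over several virtualization technologies can be sketched as one interface with pluggable backends. This is a minimal Python sketch under stated assumptions: `VirtualizationBackend`, `UnifiedManager`, the backend classes, and the handle strings are all hypothetical and do not correspond to any ETSI-defined API; each backend merely returns a label instead of deploying anything.

```python
from abc import ABC, abstractmethod


class VirtualizationBackend(ABC):
    """Hypothetical common interface every runtime must implement."""

    kind: str

    @abstractmethod
    def deploy(self, workload: str) -> str:
        """Deploy a workload and return a runtime-specific handle."""


class VmBackend(VirtualizationBackend):
    kind = "vm"

    def deploy(self, workload: str) -> str:
        return f"vm://{workload}"


class ContainerBackend(VirtualizationBackend):
    kind = "container"

    def deploy(self, workload: str) -> str:
        return f"container://{workload}"


class WasmBackend(VirtualizationBackend):
    kind = "wasm"

    def deploy(self, workload: str) -> str:
        return f"wasm://{workload}"


class UnifiedManager:
    """Single entry point that hides which runtime hosts each workload."""

    def __init__(self, backends):
        self._backends = {b.kind: b for b in backends}

    def deploy(self, workload: str, kind: str) -> str:
        try:
            backend = self._backends[kind]
        except KeyError:
            raise ValueError(f"no backend registered for {kind!r}") from None
        return backend.deploy(workload)


manager = UnifiedManager([VmBackend(), ContainerBackend(), WasmBackend()])
print(manager.deploy("amf", kind="container"))       # container://amf
print(manager.deploy("packet-filter", kind="wasm"))  # wasm://packet-filter
```

Adding another technology, such as micro VMs, would mean registering one more backend class without changing callers, which is the simplification the Release 6 items aim for.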

All these changes aim at simplification and automation within the NFV architecture. They follow recent announcements of standards-based applications by hyperscalers: Amazon Web Services (AWS) Telco Network Builder (TNB) and Microsoft Azure Operator Nexus (AON) are two new NFV Management and Orchestration (NFV-MANO)-compliant platforms for automating the deployment of network services (including the core and Radio Access Network (RAN)) across the hybrid cloud.

As more network operators and vendors leverage OS container virtualization (container) technologies for deploying telecom networks, ETSI ISG NFV has also studied how to enhance its specifications to support this trend. During this work, the community found ways to reuse the VNF modeling and the existing NFV management and orchestration (NFV-MANO) interfaces to address both OS container and VM virtualization technologies, ensuring that VNF modeling embraces the cloud-native network function (CNF) concept, a term now commonly used in the industry.
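Reusing one VNF model for both kinds of virtualization can be pictured as a descriptor whose deployable units may be either VM-based or container-based. This Python sketch is a hypothetical data model loosely inspired by that idea, not the ETSI descriptor schema; `VmUnit`, `ContainerUnit`, `VnfDescriptor`, `instantiation_requests`, and the image names are all illustrative.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class VmUnit:
    """A deployable unit backed by a VM image (hypothetical model)."""
    name: str
    image: str
    vcpus: int


@dataclass(frozen=True)
class ContainerUnit:
    """A deployable unit backed by an OS container image (hypothetical model)."""
    name: str
    image: str
    cpu_millicores: int


DeployableUnit = Union[VmUnit, ContainerUnit]


@dataclass(frozen=True)
class VnfDescriptor:
    """One VNF model that can mix VM-based and container-based units."""
    vnf_id: str
    units: tuple[DeployableUnit, ...]


def instantiation_requests(vnfd: VnfDescriptor) -> list[str]:
    """Translate each unit into a request for the matching infrastructure manager.

    The same lifecycle entry point serves both unit types; only the target
    manager (a VIM or a container orchestrator) differs.
    """
    requests = []
    for unit in vnfd.units:
        if isinstance(unit, VmUnit):
            requests.append(f"VIM: boot {unit.name} from {unit.image} ({unit.vcpus} vCPU)")
        else:
            requests.append(
                f"container orchestrator: run {unit.name} from {unit.image} "
                f"({unit.cpu_millicores}m CPU)"
            )
    return requests


vnfd = VnfDescriptor(
    vnf_id="example-vnf",
    units=(
        VmUnit("db", image="db.qcow2", vcpus=4),
        ContainerUnit("ctrl", image="ctrl:1.0", cpu_millicores=500),
    ),
)
for line in instantiation_requests(vnfd):
    print(line)
```

The design choice mirrors the text: the descriptor and the lifecycle call stay the same, while the resource description differs per unit type.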

This has been achieved even though OS container and VM technologies have somewhat different management logic and resource descriptions. However, diverse and quickly changing open source solutions make it hard to define unified, standardized specifications. Nevertheless, because both kinds of virtualization technology can and will continue to play a major role in fulfilling the broad set of telecom network use cases, efforts are needed to evolve them further and to complement them with newer virtualization technologies (e.g., unikernels).

Furthermore, driven by new application scenarios and different workload requirements (e.g., video, Cloud RAN), the need to deploy diverse heterogeneous hardware resources in the NFV system is becoming a reality. For example, to support high-performance VNFs, requirements for heterogeneous acceleration hardware such as DPUs, GPUs, NPUs, FPGAs, and AI ASICs are being brought forward. In another example, to support the ubiquitous deployment of edge devices in the future, other types of heterogeneous hardware resources, such as integrated edge devices and specialized access devices, are also starting to be considered.
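Once nodes expose different accelerators, placement has to match a VNF's hardware requirements against each node's capabilities. This is a minimal Python sketch assuming each requirement is simply a set of accelerator labels; the `Node` class, `eligible_nodes` function, node names, and label strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """An NFVI node and the accelerator types it exposes (hypothetical)."""
    name: str
    accelerators: frozenset[str]


def eligible_nodes(required: set[str], nodes: list[Node]) -> list[str]:
    """Return names of nodes offering every accelerator a VNF requires."""
    return [n.name for n in nodes if required <= n.accelerators]


nodes = [
    Node("edge-1", frozenset({"FPGA"})),
    Node("core-1", frozenset({"GPU", "DPU"})),
    Node("core-2", frozenset({"GPU", "DPU", "NPU"})),
]
print(eligible_nodes({"GPU", "DPU"}, nodes))  # ['core-1', 'core-2']
print(eligible_nodes({"NPU"}, nodes))         # ['core-2']
print(eligible_nodes({"AI-ASIC"}, nodes))     # []
```

A real system would also track accelerator capacity, vendor, and driver versions; the subset check only shows the capability-matching step.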

NFV architectures have evolved and will continue to evolve, especially with the rise of Artificial Intelligence (AI) and Machine Learning (ML) automation. Data centers, whether cloud-based or on-premises, are becoming complex, heterogeneous environments. In addition to Central Processing Units (CPUs), complementary Graphics Processing Units (GPUs) handle parallel processing for accelerated computing tasks of all kinds; AI, Deep Learning (DL), and big data analytics applications are underpinned by GPUs. As data centers have grown in complexity, Data Processing Units (DPUs) have become the third member of this data-centric accelerated computing model. The DPU helps orchestrate and direct data around the data center and to other processing nodes on the network.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

References:

Updates in ETSI NFV for Accelerating the Transition to Cloud (abiresearch.com)

Omdia and Ericsson on telco transitioning to cloud native network functions (CNFs) and 5G SA core networks

Virtual Network Function Orchestration (VNFO) Market Overview: VMs vs Containers

Qualcomm and BT open 5G Lab in Farnborough, UK

BT Group and Qualcomm have together formed a new 5G laboratory located at Qualcomm Technologies’ offices in Farnborough, United Kingdom. The 5G test lab will have BT Group’s live environment installed; BT Group runs the EE mobile network in the U.K.

From the early days of 4G to the development of 5G, this collaboration has gone from strength to strength, the companies say. While they have not disclosed many details regarding which technologies and use cases are being explored at the lab, they did state that enabling faster deployment and commercialization of 5G features and services is a key focus area.

Vikrant Jain, Director, Business Development, Qualcomm Technologies International, Ltd. says: “We are excited to announce our collaboration with BT for testing and validation of new 5G features / next generation 5G services. Our state-of-the-art lab facilities will help facilitate and speed up the time-to-market, which means customers can benefit from the new technology sooner. We value our relationship and that has been running for over a decade, and we would like to thank BT for their continued support on this advancing innovation and we look forward to what else is to come for us in the technology space in the future.”

Naveen Khapali, Senior Manager, Device Technology at BT Group, says: “By working directly with Qualcomm Technologies in an embedded 5G lab, we’ll be able to realize the benefits of closer working, helping to bring the next generation of technology to our customers sooner.”

About Qualcomm:

Qualcomm is enabling a world where everyone and everything can be intelligently connected. Our one technology roadmap allows us to efficiently scale the technologies that launched the mobile revolution – including advanced connectivity, high-performance, low-power compute, on-device intelligence and more – to the next generation of connected smart devices across industries. Innovations from Qualcomm and our family of Snapdragon platforms will help enable cloud-edge convergence, transform industries, accelerate the digital economy, and revolutionize how we experience the world, for the greater good.

Qualcomm Incorporated includes our licensing business, QTL, and the vast majority of our patent portfolio. Qualcomm Technologies, Inc., a subsidiary of Qualcomm Incorporated, operates, along with its subsidiaries, substantially all of our engineering, research and development functions, and substantially all of our products and services businesses, including our QCT semiconductor business. Snapdragon and Qualcomm branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries. Qualcomm patented technologies are licensed by Qualcomm Incorporated.

About BT Group:

BT Group is the UK’s leading provider of fixed and mobile telecommunications and related secure digital products, solutions and services. We also provide managed telecommunications, security and network and IT infrastructure services to customers across 180 countries.

BT Group consists of three customer-facing units: Business covers companies and public services in the UK and internationally; Consumer serves individuals and families in the UK; Openreach is an independently governed, wholly owned subsidiary wholesaling fixed access infrastructure services to its customers – over 650 communications providers across the UK.

British Telecommunications plc is a wholly owned subsidiary of BT Group plc and encompasses virtually all businesses and assets of the BT Group. BT Group plc is listed on the London Stock Exchange.

For more information, visit www.bt.com/about

………………………………………………………………………………………………………………………..

References:

https://www.qualcomm.com/news/releases/2023/08/qualcomm-and-bt-group-announce-5g-lab-r-d-facilities

BT and Ericsson wideband FDD trial over live 5G SA network in the UK

BT tests 4CC Carrier Aggregation over a standalone 5G network using Nokia equipment

BT and Ericsson in partnership to provide commercial 5G private networks in the UK

Qualcomm CEO: AI will become pervasive, at the edge, and run on Snapdragon SoC devices

Qualcomm Introduces the World’s First “5G NR-Light” Modem-RF System for new 5G use cases and apps

Nokia to open 5G and 6G research lab in Amadora, Portugal

AT&T Lab to research 5G use cases, 5G+ available in Houston, TX