
AI Meets Telecom: Automating RF Plumbing Diagrams at Scale

By Chode Balaji with Ajay Lotan Thakur

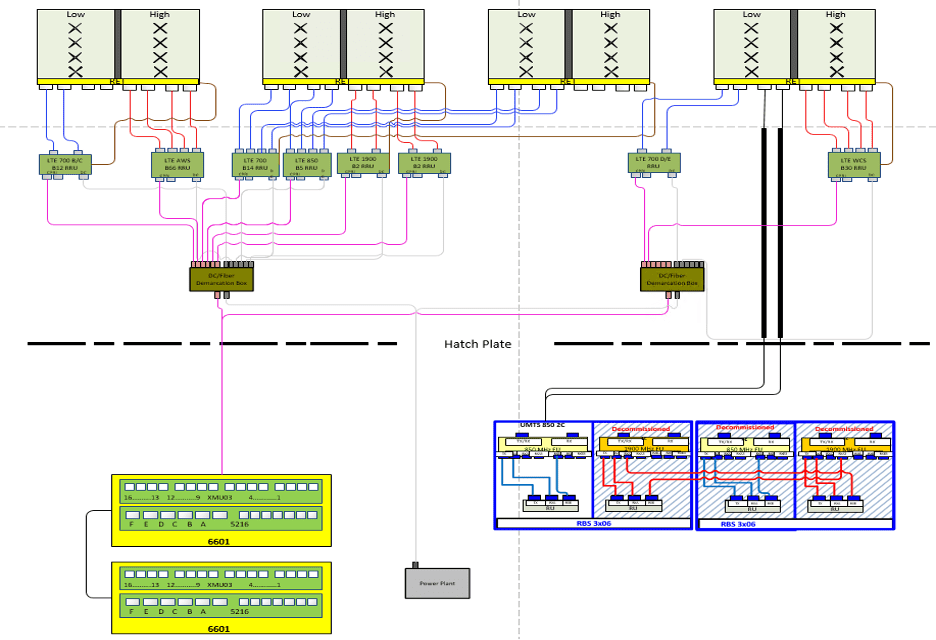

In radio frequency (RF) circuit design, an RF plumbing diagram is a visual representation of how components such as antennas, amplifiers, filters, and cables are physically interconnected to manage RF signal flow across a network node. Unlike logical or schematic diagrams, these diagrams emphasize signal routing, cable paths, and component connectivity, ensuring spectrum compliance and accurate transmission behavior.

This article introduces an AI-powered automation platform that generates RF plumbing diagrams for complex telecom deployments, reducing manual effort and engineering errors. The system has been field-tested within a major telecom provider’s RF design workflow, where it cut design time from hours to minutes, improved compliance, and standardized outputs across markets. We discuss the platform’s architecture, its real-world use cases, and the broader implications for network scalability and compliance in next-generation RF deployments.

Introduction

In RF circuit and system design, an RF plumbing diagram is a critical visual blueprint that shows how physical components—antennas, cables, combiners, duplexers, and power sources—are interconnected to manage signal flow across a network node. Unlike logical network schematics, these diagrams emphasize actual deployment wiring, routing, and interconnection details across multiple frequency bands and sectors.

As networks become increasingly dense and distributed, especially with 5G and Open RAN architectures, RF plumbing diagrams have grown in both complexity and importance. Yet across the industry, they are still predominantly created using manual methods—introducing inconsistency, delay, and high operational cost [1].

Challenges with Manual RF Documentation

Creating RF plumbing diagrams manually demands deep subject matter expertise, detailed knowledge of hardware interconnections, and alignment with region-specific compliance standards. Each diagram can take hours to complete, and even minor errors—such as incorrect port mappings or misaligned frequency bands—can result in service degradation, failed field validations, or regulatory non-compliance. In some cases, incorrect diagrams have delayed spectrum audits or triggered failed E911 checks, which are critical in public safety contexts.

Compliance requirements often vary by country due to differences in spectrum licensing, environmental limits, and emergency services integration. For example, the U.S. mandates specific RF configuration standards for E911 systems [3], while European operators must align with ETSI guidelines [4].

According to industry discussions on automation in telecom operations [1], reducing manual overhead and standardizing documentation workflows are key goals for next-generation network teams.

System Overview – AI-Powered Diagram Generation

To streamline this process, we developed CERTA RFDS—a system that automates RF plumbing diagram generation using input configuration data. CERTA ingests band and sector mappings, node configurations, and passive element definitions, then applies business logic to render a complete, standards-aligned diagram.

The system is built as a cloud-native microservice and can be integrated into OSS workflows or CI/CD pipelines used by RF planning teams. Its modular engine outputs standardized SVG/PDFs and maintains design versioning aligned with audit requirements.
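To make the configuration-to-diagram idea concrete, here is a minimal sketch in Python. It is not the CERTA RFDS implementation: the `Component` and `Link` classes, the `render_svg` function, and the sample node names are all hypothetical, and the layout rule (one row of boxes with labeled cable runs underneath) is a simplifying assumption.

```python
from __future__ import annotations

from dataclasses import dataclass
from xml.sax.saxutils import escape


@dataclass(frozen=True)
class Component:
    """One box in the diagram (hypothetical schema, not CERTA's)."""
    name: str
    kind: str  # e.g. "radio", "diplexer", "antenna"


@dataclass(frozen=True)
class Link:
    """A cable run between two named components."""
    src: str
    dst: str
    label: str  # e.g. a band or port annotation


def render_svg(components: list[Component], links: list[Link]) -> str:
    """Place components in a single row and draw labeled cables below them."""
    box_w, box_h, gap, top = 120, 40, 60, 40
    # Left edge of each box, in input order.
    x_of = {c.name: 20 + i * (box_w + gap) for i, c in enumerate(components)}
    # Reject links that point at components missing from the configuration.
    unknown = [k for k in links if k.src not in x_of or k.dst not in x_of]
    if unknown:
        raise ValueError(f"link references undefined component: {unknown[0]}")
    width = 40 + len(components) * box_w + max(len(components) - 1, 0) * gap
    parts = [f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="140">']
    for c in components:
        x = x_of[c.name]
        parts.append(
            f'<rect x="{x}" y="{top}" width="{box_w}" height="{box_h}" '
            f'fill="none" stroke="black"/>'
        )
        parts.append(
            f'<text x="{x + 6}" y="{top + 24}">{escape(c.name)} ({escape(c.kind)})</text>'
        )
    for link in links:
        x1 = x_of[link.src] + box_w // 2
        x2 = x_of[link.dst] + box_w // 2
        y = top + box_h
        # U-shaped cable: down from the source, across, and up into the target.
        parts.append(
            f'<path d="M{x1},{y} V{y + 30} H{x2} V{y}" fill="none" stroke="black"/>'
        )
        parts.append(
            f'<text x="{(x1 + x2) // 2}" y="{y + 45}" text-anchor="middle">'
            f'{escape(link.label)}</text>'
        )
    parts.append("</svg>")
    return "\n".join(parts)


# Hypothetical single-sector node: radio -> diplexer -> antenna.
node = [
    Component("RRU-B2", "radio"),
    Component("DPX-1", "diplexer"),
    Component("ANT-A", "antenna"),
]
cables = [Link("RRU-B2", "DPX-1", "LTE B2"), Link("DPX-1", "ANT-A", "Port 1")]
svg = render_svg(node, cables)
```

Validating every link before drawing mirrors the article's point that incorrect port mappings are a common source of error: a bad reference fails loudly instead of producing a plausible but wrong diagram.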

This system aligns with automation trends seen in AI-native telecom operations [1] and can scale to support edge-native deployments as part of broader infrastructure-as-code workflows.

Deployment and Public Availability

The CERTA RFDS system has been internally validated within major telecom design teams and is now publicly available for industry adoption. It has demonstrated consistent savings in engineering time, reducing diagram effort from 2–4 hours to under 5 minutes per node, while improving compliance through template consistency. These results and the underlying platform were presented at the IEEE International Conference on Emerging and Advanced Information Systems (EEAIS) [5]. (Note: the paper was presented at EEAIS 2025; publication is pending.)

Output Showcase and Engineering Impact

Below is a sample RF plumbing diagram generated by the CERTA platform for a complex LTE and UMTS multi-sector node. The system automatically determines feed paths, port mappings, and labeling conventions based on configuration metadata.

As 5G networks continue to roll out globally, RF plumbing diagrams are becoming even more complex due to increased densification, the use of small cells, and the incorporation of mmWave technologies. The AI-driven automation framework we developed is fully adaptable to 5G architecture. It supports configuration planning for high-frequency spectrum bands and MIMO antenna arrangements, and it ensures that E911 and regulatory compliance standards are maintained even in ultra-dense urban deployments. This makes the system a valuable asset in accelerating the design and validation processes for next-generation 5G infrastructure.

Figure 1. AI-Generated RF Plumbing Diagram from CERTA RFDS: Illustrating dual-feed, multi-sector layout for LTE and UMTS deployment.


Benefits include:

- 90%+ time savings per node

- Consistency across regions and engineering teams

- Simplified field validation and compliance review
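The "90%+" figure can be checked against the per-node numbers quoted earlier in the article (2–4 hours manually, under 5 minutes automated). The short sketch below does that arithmetic; the helper name `time_saving` is illustrative, and 5 minutes is treated as an upper bound on the automated time, so the results are lower bounds on the saving.

```python
def time_saving(manual_minutes: float, automated_minutes: float) -> float:
    """Fraction of manual effort removed by automation."""
    if manual_minutes <= 0:
        raise ValueError("manual_minutes must be positive")
    return 1.0 - automated_minutes / manual_minutes


# Manual effort of 2 to 4 hours versus an automated run of at most 5 minutes.
low = time_saving(2 * 60, 5)
high = time_saving(4 * 60, 5)
print(f"{low:.1%} to {high:.1%}")  # prints "95.8% to 97.9%"
```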

Future Scope

CERTA RFDS is being extended to support:

- GIS visualization of RF components with geo-tagged layouts

- Integration with planning systems for real-time topology generation

- LLM-based auto-summary of node-level changes for audit documentation

Conclusion

RF plumbing diagrams are fundamental to reliable telecom deployment and compliance. By shifting from manual workflows to intelligent automation, systems like CERTA RFDS enable engineers and operators to scale with confidence, consistency, and speed—meeting the challenges of modern wireless networks.

Abbreviations

- CERTA RFDS – Cognitive Engineering for Rapid RFDS Transformation & Automation

- RFDS – Radio Frequency Data Sheet

- GIS – Geographic Information System

- LLM – Large Language Model

- OSS – Operations Support System

- MIMO – Multiple Input Multiple Output

- RF – Radio Frequency

References

[1] ZTE’s Vision for AI-Native Infrastructure and AI-Powered Operations

[5] IEEE EEAIS 2025 Conference, “CERTA RFDS: Automating RF Plumbing Diagrams at Scale”

About the Author

Balaji Chode is an AI Solutions Architect at UBTUS, where he leads telecom automation initiatives including the design and deployment of CERTA RFDS. He has contributed to large-scale design and automation platforms across telecom and public safety, authored multiple peer-reviewed articles, and filed several patents.

“The author acknowledges the use of AI-assisted tools for language refinement and formatting”

OpenAI announces new open weight, open source GPT models which Orange will deploy

Overview:

OpenAI today introduced two new open-weight, open-source GPT models (gpt-oss-120b and gpt-oss-20b) designed to deliver top-tier performance at a lower cost. Available under the flexible Apache 2.0 license, these models outperform similarly sized open models on reasoning tasks, demonstrate strong tool use capabilities, and are optimized for efficient deployment on consumer hardware. They were trained using a mix of reinforcement learning and techniques informed by OpenAI’s most advanced internal models, including o3 and other frontier systems.

These two new AI models require much less compute power to run, with the gpt-oss-20b version able to run on just 16 GB of memory. The smaller memory footprint and lower compute requirements enable OpenAI’s models to run in a wider variety of environments, including at the network edge. Because the weights are open, users can fine-tune the models’ parameters and customize them for specific tasks.

OpenAI said it has been working with early partner companies, including AI Sweden, Orange, and Snowflake, to learn about real-world applications of its open models, from hosting them on-premises for data security to fine-tuning them on specialized datasets. The company says the models are intended to let everyone, from individual developers to large enterprises to governments, run and customize AI on their own infrastructure. Coupled with the models available in OpenAI’s API, developers can choose the performance, cost, and latency they need to power AI workflows.

In lockstep with OpenAI, France’s Orange today announced plans to deploy the new OpenAI models in its regional cloud data centers as well as small on-premises servers and edge sites to meet demand for sovereign AI solutions. Orange’s deep AI engineering talent enables it to customize and distill the OpenAI models for specific tasks, effectively creating smaller sub-models for particular use cases, while ensuring the protection of all sensitive data used in these customized models. This process facilitates innovative use cases in network operations and will enable Orange to build on its existing suite of ‘Live Intelligence’ AI solutions for enterprises, and to use the models for its own operational needs to improve efficiency and drive cost savings.

Orange also plans to use AI to improve the quality and resilience of its networks, for example by making it easier to explore and diagnose complex network issues. This can be achieved with trusted AI models that operate entirely within Orange sovereign data centers, where Orange has complete control over the use of sensitive network data. This ability to create customized, secure, and sovereign AI models for network use cases is a key enabler in Orange’s mission to achieve higher levels of automation across all of its networks.

Steve Jarrett, Orange’s Chief AI Officer, noted the decision to use state-of-the-art open-weight models will allow it to drive “new use cases to address sensitive enterprise needs, help manage our networks, enable innovating customer care solutions including African regional languages, and much more.”

Performance of the new OpenAI models:

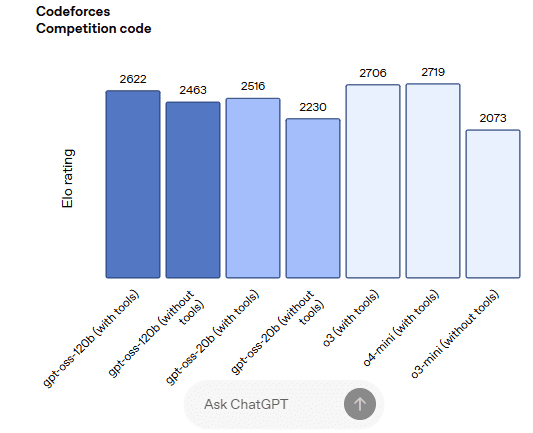

gpt-oss-120b outperforms OpenAI o3‑mini and matches or exceeds OpenAI o4-mini on competition coding (Codeforces), general problem solving (MMLU and HLE) and tool calling (TauBench). It furthermore does even better than o4-mini on health-related queries (HealthBench) and competition mathematics (AIME 2024 & 2025). gpt-oss-20b matches or exceeds OpenAI o3‑mini on these same evals, despite its small size, even outperforming it on competition mathematics and health.

Sovereign AI Market Forecasts:

Open-weight and open-source AI models play a significant role in enabling and shaping the development of Sovereign AI, which refers to a nation’s or organization’s ability to control its own AI technologies, data, infrastructure, and talent to meet its specific needs and regulations. The goal is strategic autonomy in artificial intelligence: a country can leverage AI for its own economic, social, and security interests while adhering to its own values and regulations.

Bank of America’s financial analysts recently forecast the sovereign AI market segment could become a “$50 billion a year opportunity, accounting for 10%–15% of the global $450–$500 billion AI infrastructure market.”

BofA analysts said, “Sovereign AI nicely complements commercial cloud investments with a focus on training and inference of LLMs in local culture, language and needs,” and could mitigate challenges such as “limited power availability for data centers in US” and trade restrictions with China.

References:

https://openai.com/index/introducing-gpt-oss/

https://newsroom.orange.com/orange-and-openai-collaborate-on-trusted-responsible-and-inclusive-ai/

https://finance.yahoo.com/news/nvidia-amd-targets-raised-bofa-162314196.html

Open AI raises $8.3B and is valued at $300B; AI speculative mania rivals Dot-com bubble

OpenAI partners with G42 to build giant data center for Stargate UAE project

Reuters & Bloomberg: OpenAI to design “inference AI” chip with Broadcom and TSMC

Nvidia’s networking solutions give it an edge over competitive AI chip makers

Nvidia’s networking equipment and module sales accounted for $12.9 billion of its $115.1 billion in data center revenue in its prior fiscal year. Composed of its NVLink, InfiniBand, and Ethernet solutions, Nvidia’s networking products (from its Mellanox acquisition) are what allow its GPU chips to communicate with each other, let servers talk to each other inside massive data centers, and ultimately ensure end users can connect to it all to run AI applications.

“The most important part in building a supercomputer is the infrastructure. The most important part is how you connect those computing engines together to form that larger unit of computing,” explained Gilad Shainer, senior vice president of networking at Nvidia.

In Q1-2025, networking made up $4.9 billion of Nvidia’s $39.1 billion in data center revenue. And it’ll continue to grow as customers continue to build out their AI capacity, whether that’s at research universities or massive data centers.

“It is the most underappreciated part of Nvidia’s business, by orders of magnitude,” Deepwater Asset Management managing partner Gene Munster told Yahoo Finance. “Basically, networking doesn’t get the attention because it’s 11% of revenue. But it’s growing like a rocket ship.” “[Nvidia is a] very different business without networking,” Munster explained. “The output that the people who are buying all the Nvidia chips [are] desiring wouldn’t happen if it wasn’t for their networking.”
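As a quick check on the "11% of revenue" remark, the sketch below computes networking's share of data center revenue from the two pairs of figures quoted above. Only the article's numbers are used; the function and variable names are illustrative.

```python
def share(part_billion: float, whole_billion: float) -> float:
    """Return part as a fraction of whole."""
    return part_billion / whole_billion


# Prior fiscal year: $12.9B networking out of $115.1B data center revenue.
full_year = share(12.9, 115.1)
# Most recent quarter cited: $4.9B out of $39.1B.
latest_quarter = share(4.9, 39.1)

print(f"{full_year:.1%} vs {latest_quarter:.1%}")  # prints "11.2% vs 12.5%"
```

On these figures, networking's share of data center revenue rose by a little over one percentage point between the full-year and quarterly numbers, which is consistent with Munster's point that it is growing faster than the rest of the business.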

Nvidia senior vice president of networking Kevin Deierling says the company has to work across three different types of networks:

- NVLink technology connects GPUs to each other within a server or multiple servers inside of a tall, cabinet-like server rack, allowing them to communicate and boost overall performance.

- InfiniBand connects multiple server nodes across data centers to form what is essentially a massive AI computer.

- Ethernet provides front-end network connectivity for storage and system management.

Note: Industry groups also have their own competing networking technologies including UALink, which is meant to go head-to-head with NVLink, explained Forrester analyst Alvin Nguyen.

“Those three networks are all required to build a giant AI-scale, or even a moderately sized enterprise-scale, AI computer,” Deierling explained. Low latency is key, as longer transit times for data going to and from GPUs slow the entire operation, delaying other processes and reducing the overall efficiency of an entire data center.

Nvidia CEO Jensen Huang presents a Grace Blackwell NVLink72 as he delivers a keynote address at the Consumer Electronics Show (CES) in Las Vegas, Nevada on January 6, 2025. Photo by PATRICK T. FALLON/AFP via Getty Images

As companies continue to develop larger AI models and autonomous and semi-autonomous agentic AI capabilities that can perform tasks for users, making sure those GPUs work in lockstep with each other becomes increasingly important.

The AI industry is in the midst of a broad reordering around the idea of inferencing, which requires more powerful data center systems to run AI models. “I think there’s still a misperception that inferencing is trivial and easy,” Deierling said.

“It turns out that it’s starting to look more and more like training as we get to [an] agentic workflow. So all of these networks are important. Having them together, tightly coupled to the CPU, the GPU, and the DPU [data processing unit], all of that is vitally important to make inferencing a good experience.”

Competing AI chip makers like AMD are looking to grab more market share from Nvidia, and cloud giants like Amazon, Google, and Microsoft continue to design and develop their own AI chips. However, none of them have the low-latency, high-speed connectivity solutions provided by Nvidia (again, think Mellanox).

References:

https://www.nvidia.com/en-us/networking/

Networking chips and modules for AI data centers: Infiniband, Ultra Ethernet, Optical Connections

Nvidia enters Data Center Ethernet market with its Spectrum-X networking platform

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Does AI change the business case for cloud networking?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Telecom sessions at Nvidia’s 2025 AI developers GTC: March 17–21 in San Jose, CA

Open AI raises $8.3B and is valued at $300B; AI speculative mania rivals Dot-com bubble

According to the Financial Times (FT), OpenAI (the inventor of ChatGPT) has raised another $8.3 billion in a massively oversubscribed funding round, including $2.8 billion from Dragoneer Investment Group, a San Francisco-based technology-focused fund. Leading VCs that also participated in the funding round included Founders Fund, Sequoia Capital, Andreessen Horowitz, Coatue Management, Altimeter Capital, D1 Capital Partners, Tiger Global and Thrive Capital, according to people with knowledge of the deal.

The oversubscribed funding round came months ahead of schedule. OpenAI initially raised $2.5 billion from VC firms in March when it announced its intention to raise $40 billion in a round spearheaded by SoftBank. The ChatGPT maker is now valued at $300 billion.

OpenAI’s annual recurring revenue has surged to $12bn, according to a person with knowledge of OpenAI’s finances, and the group is set to release its latest model, GPT-5, this month.

OpenAI is in the midst of complex negotiations with Microsoft that will determine its corporate structure. Rewriting the terms of the pair’s current contract, which runs until 2030, is seen as a prerequisite to OpenAI simplifying its structure and eventually going public. The two companies have yet to agree on key issues such as how long Microsoft will have access to OpenAI’s intellectual property. Another sticking point is the future of an “AGI clause”, which allows OpenAI’s board to declare that the company has achieved a breakthrough in capability called “artificial general intelligence,” which would then end Microsoft’s access to new models.

An additional risk is the increasing competition from rivals such as Anthropic, which is itself in talks for a multibillion-dollar fundraising. OpenAI is also in a continuing legal battle with Elon Musk. The FT also reported that Amazon is set to increase its already massive investment in Anthropic.

OpenAI CEO Sam Altman. The funding forms part of a round announced in March that values the ChatGPT maker at $300bn © Yuichi Yamazaki/AFP via Getty Images

………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

While AI is the transformative technology of this generation, comparisons are increasingly being made with the Dot-com bubble. 1999 saw such a speculative frenzy for anything with a ‘.com’ at the end that valuations and stock markets reached unrealistic and clearly unsustainable levels. When that speculative bubble burst, the global economy fell into an extended recession in 2001-2002. As a result, analysts are now questioning the wisdom of the current AI speculative bubble and fearing dire consequences when it eventually bursts. Just as with the Dot-com bubble, AI revenues are nowhere near justifying AI company valuations, especially for private AI companies that are losing tons of money (see OpenAI losses detailed below).

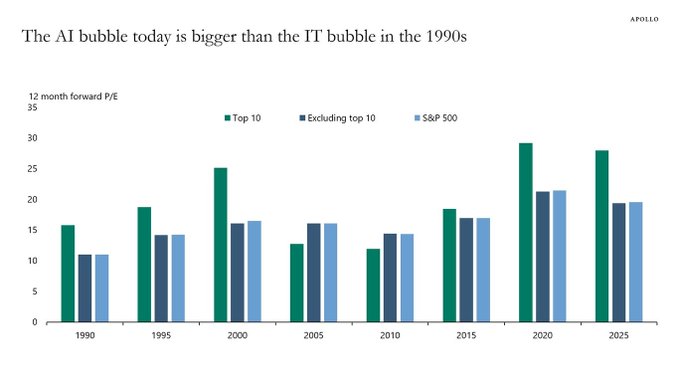

Torsten Slok, Partner and Chief Economist at Apollo Global Management via ZERO HEDGE on X: “The difference between the IT bubble in the 1990s and the AI bubble today is that the top 10 companies in the S&P 500 today (including Nvidia, Microsoft, Amazon, Google, and Meta) are more overvalued than the IT companies were in the 1990s.”

Private AI companies may take a lot longer to reach the lofty profit projections institutional investors have assumed. Investors’ reliance on projected future profits over current fundamentals is a dire warning sign to this author. OpenAI, for example, faces significant losses and aggressive revenue targets to become profitable. OpenAI reported an estimated loss of $5 billion in 2024, despite generating $3.7 billion in revenue. The company is projected to lose $14 billion in 2026, while total projected losses from 2023 to 2028 are expected to reach $44 billion.

Other AI bubble data points (publicly traded stocks):

- The proportion of the S&P 500 represented by the 10 largest companies is significantly higher now (almost 40%) than it was in 1999 (25%). This indicates a more concentrated market driven by a few large technology companies deeply involved in AI development and adoption.

- Investment in AI infrastructure has reportedly exceeded the spending on telecom and internet infrastructure during the dot-com boom and continues to grow, suggesting a potentially larger scale of investment in AI relative to the prior period.

- Some indices tracking AI stocks have demonstrated exceptionally high gains in a short period, potentially surpassing the rates of the dot-com era, suggesting a faster build-up in valuations.

- The leading hyperscalers, such as Amazon, Microsoft, Google, and Meta, are investing vast sums in AI infrastructure to capitalize on the burgeoning AI market. Forecasts suggest these companies will collectively spend $381 billion in 2025 on AI-ready infrastructure, a significant increase from an estimated $270 billion in 2024.

Check out this YouTube video: “How AI Became the New Dot-Com Bubble”

References:

https://www.ft.com/content/76dd6aed-f60e-487b-be1b-e3ec92168c11

https://www.telecoms.com/ai/openai-funding-frenzy-inflates-the-ai-bubble-even-further

https://x.com/zerohedge/status/1945450061334216905

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

Huawei launches CloudMatrix 384 AI System to rival Nvidia’s most advanced AI system

Gen AI eroding critical thinking skills; AI threatens more telecom job losses

Dell’Oro: AI RAN to account for 1/3 of RAN market by 2029; AI RAN Alliance membership increases but few telcos have joined

China gaining on U.S. in AI technology arms race- silicon, models and research

Introduction:

According to the Wall Street Journal, the U.S. maintains its early lead in AI technology, with Silicon Valley home to the most popular AI models and the most powerful AI chips (from Santa Clara-based Nvidia and AMD). However, China has shown a willingness to spend whatever it takes to take the lead in AI models and silicon.

The rising popularity of DeepSeek, the Chinese AI startup, has buoyed Beijing’s hopes that it can become more self-sufficient. Huawei has published several papers this year detailing how its researchers used its homegrown AI chips to build large language models without relying on American technology.

“China is obviously making progress in hardening its AI and computing ecosystem,” said Michael Frank, founder of think tank Seldon Strategies.

AI Silicon:

Morgan Stanley analysts forecast that China will have 82% of AI chips from domestic makers by 2027, up from 34% in 2024. China’s government has played an important role, funding new chip initiatives and other projects. In July, the local government in Shenzhen, where Huawei is based, said it was raising around $700 million to invest in strengthening an “independent and controllable” semiconductor supply chain.

During a meeting with President Xi Jinping in February, Huawei Chief Executive Officer Ren Zhengfei told Xi about “Project Spare Tire,” an effort by Huawei and 2,000 other enterprises to help China’s semiconductor sector achieve a self-sufficiency rate of 70% by 2028, according to people familiar with the meeting.

……………………………………………………………………………………………………………………………………………

AI Models:

Prodded by Beijing, Chinese financial institutions, state-owned companies and government agencies have rushed to deploy Chinese-made AI models, including DeepSeek [1.] and Alibaba’s Qwen. That has fueled demand for homegrown AI technologies and fostered domestic supply chains.

Note 1. DeepSeek’s V3 large language model matched many performance benchmarks of rival AI programs developed in the U.S. at a fraction of the cost. DeepSeek’s open-weight models have been integrated into many hospitals in China for various medical applications.

In recent weeks, a flurry of Chinese companies have flooded the market with open-source AI models, many of which are claiming to surpass DeepSeek’s performance in certain use cases. Open source models are freely accessible for modification and deployment.

The Chinese government is actively supporting AI development through funding and policy initiatives, including promoting the use of Chinese-made AI models in various sectors.

Meanwhile, OpenAI’s CEO Sam Altman said his company had pushed back the release of its open-source AI model indefinitely for further safety testing.

AI Research:

China has taken a commanding lead in the exploding field of artificial intelligence (AI) research, despite U.S. restrictions on exporting key computing chips to its rival, finds a new report.

The analysis of the proprietary Dimensions database, released yesterday, finds that the number of AI-related research papers has grown from fewer than 8,500 published in 2000 to more than 57,000 in 2024. In 2000, China-based scholars produced just 671 AI papers, but in 2024 their 23,695 AI-related publications topped the combined output of the United States (6,378), the United Kingdom (2,747), and the European Union (10,055).

“U.S. influence in AI research is declining, with China now dominating,” Daniel Hook, CEO of Digital Science, which owns the Dimensions database, writes in the report DeepSeek and the New Geopolitics of AI: China’s ascent to research pre-eminence in AI.

In 2024, China’s researchers filed 35,423 AI-related patent applications, more than 13 times the 2,678 patents filed in total by the U.S., the U.K., Canada, Japan, and South Korea.
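The two comparisons in this section can be reproduced directly from the quoted counts. The sketch below uses only the figures given above; the variable names are illustrative.

```python
# 2024 AI-related publication counts as quoted from the Dimensions analysis.
papers_2024 = {
    "China": 23_695,
    "United States": 6_378,
    "United Kingdom": 2_747,
    "European Union": 10_055,
}

# Combined output of the three Western regions, and China's margin over it.
rivals = sum(n for region, n in papers_2024.items() if region != "China")
lead = papers_2024["China"] - rivals

# Patent applications: China versus the five-country total quoted above.
patent_ratio = 35_423 / 2_678

print(rivals, lead, round(patent_ratio, 1))  # prints "19180 4515 13.2"
```

China's paper count exceeds the combined U.S., U.K., and EU total by about 4,500, and the patent ratio of roughly 13.2 matches the "more than 13 times" claim.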

References:

https://www.wsj.com/tech/ai/how-china-is-girding-for-an-ai-battle-with-the-u-s-5b23af51

Huawei launches CloudMatrix 384 AI System to rival Nvidia’s most advanced AI system

U.S. export controls on Nvidia H20 AI chips enables Huawei’s 910C GPU to be favored by AI tech giants in China

Goldman Sachs: Big 3 China telecom operators are the biggest beneficiaries of China’s AI boom via DeepSeek models; China Mobile’s ‘AI+NETWORK’ strategy

Gen AI eroding critical thinking skills; AI threatens more telecom job losses

Softbank developing autonomous AI agents; an AI model that can predict and capture human cognition

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Big Tech and VCs invest hundreds of billions in AI while salaries of AI experts reach the stratosphere

ZTE’s AI infrastructure and AI-powered terminals revealed at MWC Shanghai

Deloitte and TM Forum : How AI could revitalize the ailing telecom industry?

Huawei launches CloudMatrix 384 AI System to rival Nvidia’s most advanced AI system

On Saturday, Huawei Technologies displayed an advanced AI computing system in China, as the Chinese technology giant seeks to capture market share in the country’s growing artificial intelligence sector. Huawei’s CloudMatrix 384 system made its public debut at the World Artificial Intelligence Conference (WAIC), a three-day event in Shanghai where companies showcase their latest AI innovations, drawing a large crowd to the company’s booth.

The Huawei CloudMatrix 384 is a high-density AI computing system featuring 384 Huawei Ascend 910C chips, designed to rival Nvidia’s GB200 NVL72 (more below). The AI system employs a “supernode” architecture with high-speed internal chip interconnects. The system is built with optical links for low-latency, high-bandwidth communication. Huawei has also integrated the CloudMatrix 384 into its cloud platform. The system has drawn close attention from the global AI community since Huawei first announced it in April.

The CloudMatrix 384 resides on the super-node Ascend platform and uses high-speed bus interconnection capability, resulting in low latency linkage between 384 Ascend NPUs. Huawei says that “compared to traditional AI clusters that often stack servers, storage, network technology, and other resources, Huawei CloudMatrix has a super-organized setup. As a result, it also reduces the chance of facing failures at times of large-scale training.”

Huawei staff at its WAIC booth declined to comment when asked to introduce the CloudMatrix 384 system. A spokesperson for Huawei did not respond to questions. However, Huawei says that “early reports revealed that the CloudMatrix 384 can offer 300 PFLOPs of dense BF16 computing. That’s double of Nvidia GB200 NVL72 AI tech system. It also excels in terms of memory capacity (3.6x) and bandwidth (2.1x).” Indeed, industry analysts view the CloudMatrix 384 as a direct competitor to Nvidia’s GB200 NVL72, the U.S. GPU chipmaker’s most advanced system-level product currently available in the market.

One industry expert has said the CloudMatrix 384 system rivals Nvidia’s most advanced offerings. Dylan Patel, founder of semiconductor research group SemiAnalysis, said in an April article that Huawei now had AI system capabilities that could beat Nvidia’s AI system. The CloudMatrix 384 incorporates 384 of Huawei’s latest 910C chips and outperforms Nvidia’s GB200 NVL72, which uses 72 B200 chips, on some metrics, according to SemiAnalysis. The performance stems from Huawei’s system design capabilities, which compensate for weaker individual chip performance through the use of more chips and system-level innovations, SemiAnalysis said.
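The "more chips, weaker chips" trade-off can be illustrated with the figures quoted above. This is a rough sketch, not a benchmark: it takes the 300 PFLOPs system figure at face value and assumes the GB200 NVL72 delivers exactly half of that, based only on the word "double" in the quoted claim. The variable names are illustrative.

```python
# System-level figures as quoted in the article.
cm384_pflops, cm384_chips = 300.0, 384

# Assumption: "double" is read literally, so the NVL72 is taken as 150 PFLOPs.
nvl72_pflops, nvl72_chips = cm384_pflops / 2, 72

# Dense BF16 compute per chip implied by those totals.
huawei_per_chip = cm384_pflops / cm384_chips
nvidia_per_chip = nvl72_pflops / nvl72_chips

# How much more each Nvidia chip delivers, and how many more chips Huawei uses.
per_chip_gap = nvidia_per_chip / huawei_per_chip
chip_count_ratio = cm384_chips / nvl72_chips

print(f"{huawei_per_chip:.2f} vs {nvidia_per_chip:.2f} PFLOPs per chip")
print(f"per-chip gap {per_chip_gap:.2f}x, chip count {chip_count_ratio:.2f}x")
```

Under these assumptions each Nvidia chip delivers roughly 2.7 times the compute of a single Ascend 910C, while Huawei uses about 5.3 times as many chips, which is how the system-level total comes out ahead.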

Huawei has become widely regarded as China’s most promising domestic supplier of chips essential for AI development, even though the company faces U.S. export restrictions. Nvidia CEO Jensen Huang told Bloomberg in May that Huawei had been “moving quite fast” and named the CloudMatrix as an example.

Huawei says the system’s “supernode” architecture allows the chips to interconnect at super-high speeds. In June, Huawei Cloud CEO Zhang Pingan said the CloudMatrix 384 system was operational on Huawei’s cloud platform.

According to Huawei, the Ascend AI chip-based CloudMatrix 384 comes with three important benefits:

- Ultra-large bandwidth

- Ultra-low latency

- Ultra-strong performance

These three benefits can help enterprises achieve better AI training and stable reasoning performance for models, while maintaining long-term reliability.

References:

https://www.huaweicentral.com/huawei-launches-cloudmatrix-384-ai-chip-cluster-against-nvidia-nvl72/

https://semianalysis.com/2025/04/16/huawei-ai-cloudmatrix-384-chinas-answer-to-nvidia-gb200-nvl72/

U.S. export controls on Nvidia H20 AI chips enables Huawei’s 910C GPU to be favored by AI tech giants in China

Huawei’s “FOUR NEW strategy” for carriers to be successful in AI era

FT: Nvidia invested $1bn in AI start-ups in 2024

Gen AI eroding critical thinking skills; AI threatens more telecom job losses

Two alarming research studies this year have drawn attention to the damage that Gen AI agents like ChatGPT are doing to our brains:

The first study, published in February, by Microsoft and Carnegie Mellon University, surveyed 319 knowledge workers and concluded that “while GenAI can improve worker efficiency, it can inhibit critical engagement with work and can potentially lead to long-term overreliance on the tool and diminished skills for independent problem-solving.”

An MIT study divided participants into three essay-writing groups. One group had access to Gen AI and another to Internet search engines while the third group had access to neither. This “brain” group, as MIT’s researchers called it, outperformed the others on measures of cognitive ability. By contrast, participants in the group using a Gen AI large language model (LLM) did the worst. “Brain connectivity systematically scaled down with the amount of external support,” said the report’s authors.

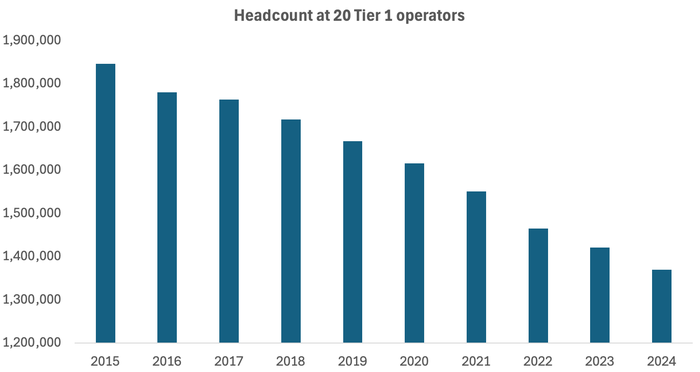

Across the 20 companies regularly tracked by Light Reading, headcount fell by 51,700 last year. Since 2015, it has dropped by more than 476,600, more than a quarter of the previous total.

Source: Light Reading

………………………………………………………………………………………………………………………………………………

Doing More with Less:

- In 2015, Verizon generated sales of $131.6 billion with a workforce of 177,700 employees. Last year, it made $134.8 billion with fewer than 100,000. Revenues per employee, accordingly, have risen from about $741,000 to more than $1.35 million over this period.

- AT&T made nearly $868,000 per employee last year, compared with less than $522,000 in 2015.

- Deutsche Telekom, buoyed by its T-Mobile US business, has grown its revenue per employee from about $356,000 to more than $677,000 over the same time period.

- Orange’s revenue per employee has risen from $298,000 to $368,000.

Significant workforce reductions have happened at all those companies, especially AT&T which finished last year with 141,000 employees – about half the number it had in 2015!

Not to be outdone, headcount at network equipment companies is also shrinking. Ericsson, Europe’s biggest 5G vendor, cut 6,000 jobs or 6% of its workforce last year and has slashed 13,000 jobs since 2023. Nokia’s headcount fell from 86,700 in 2023 to 75,600 at the end of last year. The latest message from Börje Ekholm, Ericsson’s CEO, is that AI will help the company operate with an even smaller workforce in future. “We also see and expect big benefits from the use of AI, and that is one reason why we expect restructuring costs to remain elevated during the year,” he said on this week’s earnings call with analysts.

………………………………………………………………………………………………………………………………………………

Other Voices:

Light Reading’s Iain Morris wrote, “An erosion of brainpower and ceding of tasks to AI would entail a loss of control as people are taken out of the mix. If AI can substitute for a junior coder, as experts say it can, the entry-level job for programming will vanish with inevitable consequences for the entire profession. And as AI assumes responsibility for the jobs once done by humans, a shrinking pool of individuals will understand how networks function.”

“If you can’t understand how the AI is making that decision, and why it is making that decision, we could end up with scenarios where when something goes wrong, we simply just can’t understand it,” said Nik Willetts, the CEO of a standards group called the TM Forum, during a recent conversation with Light Reading. “It is a bit of an extreme to just assume no one understands how it works,” he added. “It is a risk, though.”

………………………………………………………………………………………………………………………………………………

References:

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Verizon and AT&T cut 5,100 more jobs with a combined 214,350 fewer employees than 2015

Big Tech post strong earnings and revenue growth, but cuts jobs along with Telecom Vendors

Nokia (like Ericsson) announces fresh wave of job cuts; Ericsson lays off 240 more in China

Deutsche Telekom exec: AI poses massive challenges for telecom industry

Softbank developing autonomous AI agents; an AI model that can predict and capture human cognition

Speaking at a customer event Wednesday in Tokyo, SoftBank Chairman and CEO Masayoshi Son said his company is developing “the world’s first” artificial intelligence (AI) agent system that can autonomously perform complex tasks. Human programmers will no longer be needed. “The AI agents will think for themselves and improve on their own…so the era of humans doing the programming is coming to an end,” he said.

SoftBank estimated it needed to create around 1,000 agents per person – a large number because “employees have complex thought processes. The agents will be active 24 hours a day, 365 days a year and will interact with each other.” Son estimates the agents will be at least four times as productive and four times as efficient as humans, and would cost around 40 Japanese yen (US$0.27) per agent per month. At that rate, the billion-agent plan would cost SoftBank $3.2 billion annually.
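The annual figure follows directly from the per-agent price. As a quick check, the sketch below multiplies it out, assuming the 1 billion agent target reported for the plan and the article's conversion of 40 yen to US$0.27; the variable names are illustrative only.

```python
# Back-of-the-envelope check of the quoted annual cost.
AGENTS = 1_000_000_000           # reported target of 1 billion agents
USD_PER_AGENT_PER_MONTH = 0.27   # the article's conversion of 40 yen
MONTHS_PER_YEAR = 12

annual_cost_usd = AGENTS * USD_PER_AGENT_PER_MONTH * MONTHS_PER_YEAR
print(f"${annual_cost_usd / 1e9:.2f} billion per year")
```

This gives about $3.24 billion, which matches the $3.2 billion quoted once rounded.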

“For 40 yen per agent per month, the agent will independently memorize, negotiate and conduct learning. So with these actions being taken, it’s incredibly cheap,” Son said. “I’m excited to see how the AI agents will interact with one another and advance given tasks,” he said, adding that the AI agents, to achieve their goals, will “self-evolve and self-replicate” to execute subtasks.

Unlike generative AI, which needs human commands to carry out tasks, an AI agent performs tasks on its own by designing workflows with data available to it. It is expected to enhance productivity at companies by helping their decision-making and problem-solving.

While the CEO’s intent is clear, details of just how and when SoftBank will build this giant AI workforce are scarce. Son admitted the 1 billion target would be “challenging” and that the company had not yet developed the necessary software to support the huge numbers of agents. He said his team needed to build a toolkit for creating more agents and an operating system to orchestrate and coordinate them. Son, one of the world’s most ardent AI evangelists, is betting the company’s future on the technology.

According to Son, the capabilities of AI agents had already surpassed those of PhD holders in advanced fields including physics, mathematics and chemistry. “There are no questions it can’t comprehend. We’re almost at a stage where there are hardly any limitations,” he enthused. Son acknowledged the problem of AI hallucinations, but dismissed it as “a temporary and minor issue.” Son said the development of huge AI data centers, such as the $500 billion Stargate project, would enable exponential growth in computing power and AI capabilities.

Softbank Group Corp. Chairman and CEO Masayoshi Son (L) and OpenAI CEO Sam Altman at an event on July 16, 2025. (Kyodo)

The project comes as SoftBank Group and OpenAI, the developer of chatbot ChatGPT, said in February they had agreed to establish a joint venture to promote AI services for corporations. Wednesday’s event included a cameo appearance from Sam Altman, CEO of SoftBank partner OpenAI, who said he was confident about the future of AI because the scaling law would exist “for a long time” and that cost was continually going down. “I think the first era of AI, the…ChatGPT initial era was about an AI that you could ask anything and it could tell you all these things,” Altman said.

“Now as these (AI) agents roll out, AI can do things for you…You can ask the computer to do something in natural language, a sort of vaguely defined complex task, and it can understand you and execute it for you,” Altman said. “The productivity and potential that it unlocks for the world is quite huge.”

……………………………………………………………………………………………………………………………………………..

According to the NY Times, an international team of scientists believes that A.I. systems can help them understand how the human mind works. They have created a ChatGPT-like system that can play the part of a human in a psychological experiment and behave as if it has a human mind. Details about the system, known as Centaur, were published on Wednesday in the journal Nature. Dr. Marcel Binz, a cognitive scientist at Helmholtz Munich, a German research center, is the author of the new AI study.

References:

https://english.kyodonews.net/articles/-/57396#google_vignette

https://www.lightreading.com/ai-machine-learning/softbank-aims-for-1-billion-ai-agents-this-year

https://www.nytimes.com/2025/07/02/science/ai-psychology-mind.html

https://www.nature.com/articles/s41586-025-09215-4

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Big Tech and VCs invest hundreds of billions in AI while salaries of AI experts reach the stratosphere

Ericsson reports ~flat 2Q-2025 results; sees potential for 5G SA and AI to drive growth

Agentic AI and the Future of Communications for Autonomous Vehicle (V2X)

Dell’Oro: AI RAN to account for 1/3 of RAN market by 2029; AI RAN Alliance membership increases but few telcos have joined

Indosat Ooredoo Hutchison and Nokia use AI to reduce energy demand and emissions

Deloitte and TM Forum : How AI could revitalize the ailing telecom industry?

McKinsey: AI infrastructure opportunity for telcos? AI developments in the telecom sector

ZTE’s AI infrastructure and AI-powered terminals revealed at MWC Shanghai

Ericsson revamps its OSS/BSS with AI using Amazon Bedrock as a foundation

Big tech firms target data infrastructure software companies to increase AI competitiveness

SK Group and AWS to build Korea’s largest AI data center in Ulsan

OpenAI partners with G42 to build giant data center for Stargate UAE project

Nile launches a Generative AI engine (NXI) to proactively detect and resolve enterprise network issues

AI infrastructure investments drive demand for Ciena’s products including 800G coherent optics

Ericsson reports ~flat 2Q-2025 results; sees potential for 5G SA and AI to drive growth

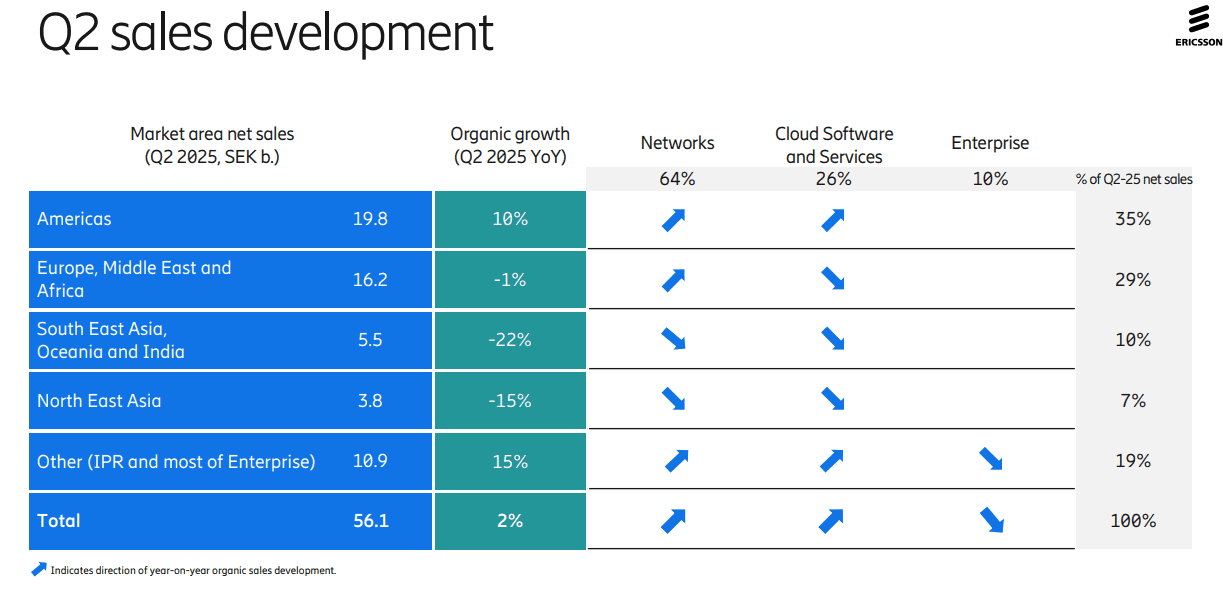

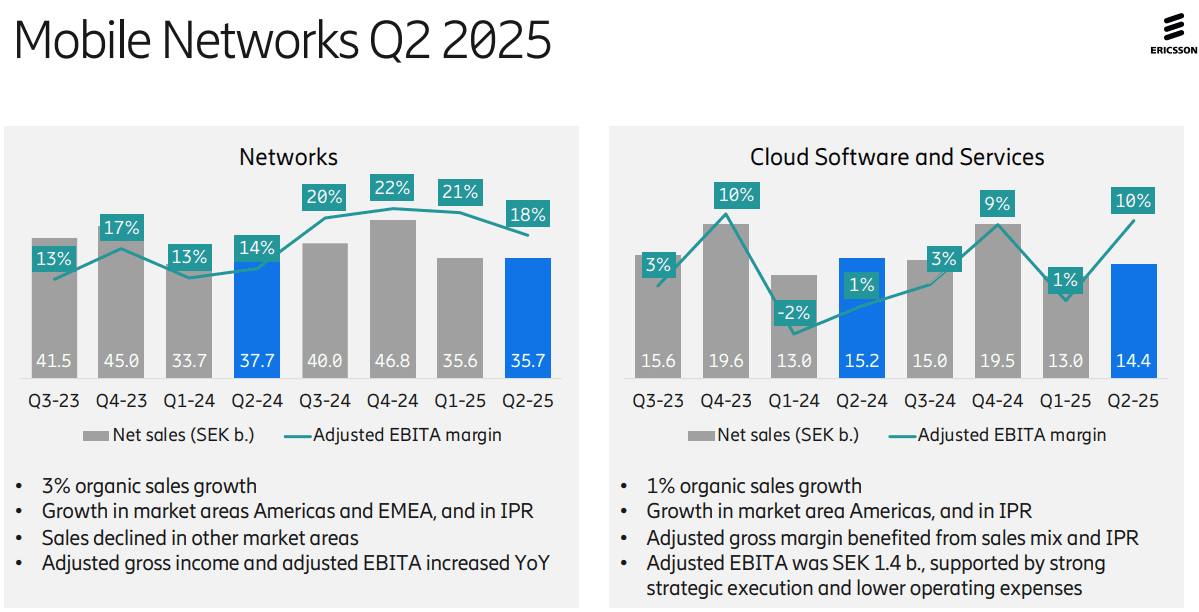

Ericsson’s second-quarter results were not impressive, with YoY organic sales growth of +2% for the company and +3% for its network division (its largest). Its $14 billion AT&T OpenRAN deal, announced in December 2023, helped lift the Swedish vendor’s share of the global RAN market by 1.4 percentage points in 2024 to 25.7%, according to new research from analyst company Omdia (owned by Informa). As a result of its AT&T contract, the U.S. accounted for a stunning 44% of Ericsson’s second-quarter sales, while the North American market posted a 10% YoY increase in organic revenues to SEK19.8bn ($2.05bn). Sales dropped in all other regions of the world! The charts below depict that very well:

Ericsson’s attention is now shifting to a few core markets that Ekholm has identified as strategic priorities, among them the U.S., India, Japan and the UK. All, unsurprisingly, already make up Ericsson’s top five countries by sales, although their contribution minus the US came to just 15% of turnover for the recent second quarter. “We are already very strong in North America, but we can do more in India and Japan,” said Ekholm. “We see those as critically important for the long-term success.”

Opportunities: As telco investment in RAN equipment declined by 12.5% (or $5 billion) last year, the Swedish equipment vendor has had few other obvious growth opportunities. Ericsson’s Enterprise division, which is supposed to be the long-term provider of sales growth for Ericsson, is still very small – its second-quarter revenues stood at just SEK5.5bn ($570m) and even once currency exchange changes are taken into account, its sales shrank by 6% YoY.

On Tuesday’s earnings call, Ericsson CEO Börje Ekholm said that the RAN equipment sector, while stable currently, isn’t offering any prospects of exciting near-term growth. For longer-term growth the industry needs “new monetization opportunities” and those could come from the ongoing modest growth in 5G-enabled fixed wireless access (FWA) deployments, from 5G standalone (SA) deployments that enable mobile network operators to offer “differentiated solutions” and from network APIs (that ultra-hyped market is not generating meaningful revenues for anyone yet).

Cost Cutting Continues: Ericsson also has continued to be aggressive about cost reduction, eliminating thousands of jobs since it completed its Vonage takeover. “Over the last year, we have reduced our total number of employees by about 6% or 6,000,” said Ekholm on his routine call with analysts about financial results. “We also see and expect big benefits from the use of AI and that is one reason why we expect restructuring costs to remain elevated during the year.”

Use of AI: Ericsson sees AI as an opportunity to enable network automation and new industry revenue opportunities. The company is now using AI as an aid in network design – a move that could have negative ramifications for staff involved in research and development. Ericsson is already using AI for coding and “other parts of internal operations to drive efficiency… We see some benefits now. And it’s going to impact how the network is operated – think of fully autonomous, intent-based networks that will require AI as a fundamental component. That’s one of the reasons why we invested in an AI factory,” noted the CEO, referencing the consortium-based investment in a Swedish AI Factory that was announced in late May. At the time, Ericsson noted that it planned to “leverage its data science expertise to develop and deploy state-of-the-art AI models – improving performance and efficiency and enhancing customer experience.”

Ericsson is also building AI capability into the products sold to customers. “I usually use the example of link adaptation,” said Per Narvinger, the head of Ericsson’s mobile networks business group, on a call with Light Reading, referring to what he says is probably one of the most optimized algorithms in telecom. “That’s how much you get out of the spectrum, and when we have rewritten link adaptation, and used AI functionality on an AI model, we see we can get a gain of 10%.”

Ericsson hopes that AI will boost consumer and business demand for 5G connectivity. New form factors such as smart glasses and AR headsets will need lower-latency connections with improved support for the uplink, it has repeatedly argued. But analysts are skeptical, while Ericsson thinks Europe is ill equipped for more advanced 5G services.

“We’re still very early in AI, in [understanding] how applications are going to start running, but I think it’s going to be a key driver of our business going forward, both on traffic, on the way we operate networks, and the way we run Ericsson,” Ekholm said.

Europe Disappoints: In much of Europe, Ericsson and Nokia have been frustrated by some government and telco unwillingness to adopt the European Union’s “5G toolbox” recommendations and evict Chinese vendors. “I think what we have seen in terms of implementation is quite varied, to be honest,” said Narvinger. Rather than banning Huawei outright, Germany’s government has introduced legislation that allows operators to use most of its RAN products if they find a substitute for part of Huawei’s management system by 2029. Opponents have criticized that move, arguing it does not address the security threat posed by Huawei’s RAN software. Nevertheless, Ericsson clearly eyes an opportunity to serve European demand for military communications, an area where the use of Chinese vendors would be unthinkable.

“It is realistic to say that a large part of the increased defense spending in Europe will most likely be allocated to connectivity because that is a critical part of a modern defense force,” said Ekholm. “I think this is a very good opportunity for western vendors because it would be far-fetched to think they will go with high-risk vendors.” Ericsson is also targeting related demand for mission-critical services needed by first responders.

5G SA and Mobile Core Networks: Ekholm noted that 5G SA deployments are still few and far between – only a quarter of mobile operators have any kind of 5G SA deployment in place right now, with the most notable being in the US, India and China. “Two things need to happen,” for greater 5G SA uptake, stated the CEO.

- “One is mid-band [spectrum] coverage… there’s still very low build out coverage in, for example, Europe, where it’s probably less than half the population covered… Europe is clearly behind on that” compared with the U.S., China and India.

- “The second is that [network operators] need to upgrade their mobile core [platforms]... Those two things will have to happen to take full advantage of the capabilities of the [5G] network,” noted Ekholm, who said the arrival of new devices, such as AI glasses, that require ultra low latency connections and “very high uplink performance” is starting to drive interest. “We’re also seeing a lot of network slicing opportunities,” he added, to deliver dedicated network resources to, for example, police forces, sports and entertainment stadiums “to guarantee uplink streams… consumers are willing to pay for these things. So I’m rather encouraged by the service innovation that’s starting to happen on 5G SA and… that’s going to drive the need for more radio coverage [for] mid-band and for core [systems].”

Ericsson’s Summary - Looking Ahead:

- Continue to strengthen competitive position

- Strong customer engagement for differentiated connectivity

- New use cases to monetize network investments taking shape

- Expect RAN market to remain broadly stable

- Structurally improving the business through rigorous cost management

- Continue to invest in technology leadership

………………………………………………………………………………………………………………………………………………………………………………………………

References:

https://www.telecomtv.com/content/5g/ericsson-ceo-waxes-lyrical-on-potential-of-5g-sa-ai-53441/

https://www.lightreading.com/5g/ericsson-targets-big-huawei-free-places-ai-and-nato-as-profits-soar

Ericsson revamps its OSS/BSS with AI using Amazon Bedrock as a foundation

Agentic AI and the Future of Communications for Autonomous Vehicles (V2X)

by Prashant Vajpayee (bio below), edited by Alan J Weissberger

Abstract:

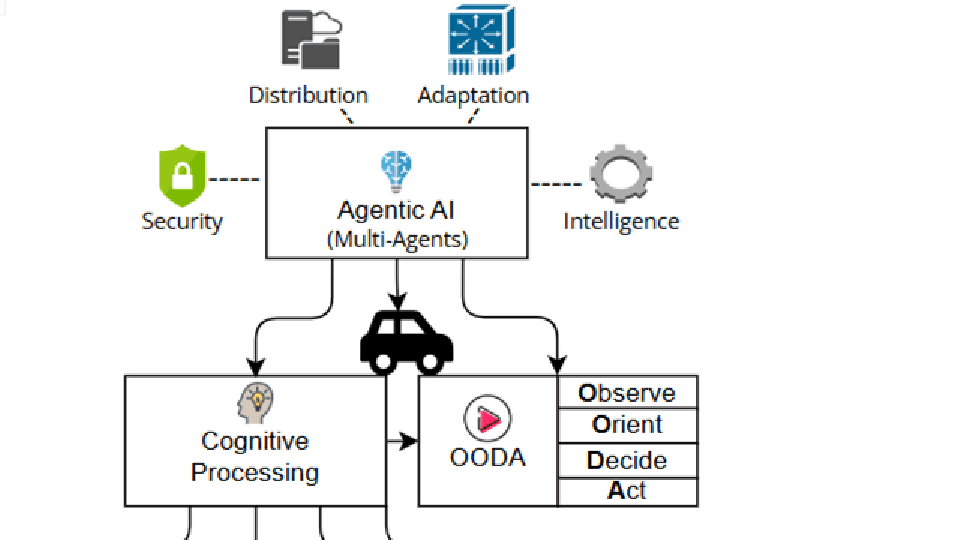

Autonomous vehicles increasingly depend on Vehicle-to-Everything (V2X) communications, but 5G networks face challenges such as latency, coverage gaps, high infrastructure costs, and security risks. To overcome these limitations, this article explores alternative protocols like DSRC, VANETs, ISAC, PLC, and Federated Learning, which offer decentralized, low-latency communication solutions.

Of critical importance for this approach is Agentic AI, a distributed intelligence model based on the Observe, Orient, Decide, and Act (OODA) loop, which enhances adaptability, collaboration, and security across the V2X stack. Together, these technologies lay the groundwork for a resilient, scalable, and secure next-generation Intelligent Transportation System (ITS).

Problems with 5G for V2X Communications:

There are several problems with using 5G for V2X communications, which is why the 5G NR (New Radio) V2X specification, developed by the 3rd Generation Partnership Project (3GPP) in Release 16, hasn’t been widely implemented. Here are a few of them:

- Variable latency: Although 5G promises sub-millisecond latency, real-world deployments often show delays of 10 to 50 milliseconds, particularly when the V2X server is hosted in a cloud environment. Multi-hop routing, network slicing, and handover delays add further latency. As a result, 5G can be unsuitable for ultra-reliable low-latency communication (URLLC) in critical scenarios [1, 2].

- Coverage Gaps & Handover Issues: 5G coverage is limited in rural and remote areas. In addition, a fast-moving vehicle that switches between 5G networks can experience communication delays and connectivity failures [3, 4].

- Infrastructure and Cost Constraint: Full 5G deployment requires dense small-cell infrastructure, which is costly and logistically complex, especially in developing regions and along highways.

- Spectrum Congestion and Interference: Where spectrum is shared, other services can interfere with the 5G network and degrade V2X reliability.

- Security and Trust Issues: The centralized nature of 5G architectures leaves them vulnerable to a single point of failure, which is a cybersecurity risk for autonomous systems.
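To put the latency range from the first item into perspective, the sketch below converts a communication delay into the distance a vehicle covers before a message arrives. The 10 to 50 ms range comes from the text above; the 100 km/h speed and the function name are assumptions chosen purely for illustration.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres a vehicle covers while a message is in flight."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


SPEED_KMH = 100.0  # assumed highway speed, not a figure from the article

for latency_ms in (1, 10, 50):
    d = distance_travelled_m(SPEED_KMH, latency_ms)
    print(f"{latency_ms:>2} ms -> {d:.2f} m")
```

Under these assumptions, a 50 ms delay corresponds to roughly 1.4 m of travel, compared with a few centimetres at 1 ms, which is why the gap between promised and observed latency matters for safety-critical messages.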

Alternative Communications Protocols as a Solution for V2X (when integrated with Agentic AI):

The following list of alternative protocols offers a potential remedy for the above 5G shortcomings when integrated with Agentic AI.

While these alternatives reduce dependency on centralized infrastructure and provide greater fault tolerance, they also introduce complexity. As autonomous vehicles (AVs) become increasingly prevalent, Vehicle-to-Everything (V2X) communication is emerging as the digital nervous system of intelligent transportation systems. Given the deployment and reliability challenges associated with 5G, the industry is shifting toward alternative networking solutions—where Agentic AI is being introduced as a cognitive layer that renders these ecosystems adaptive, secure, and resilient.

The following use cases show how Agentic AI can bring efficiency:

- Cognitive Autonomy: Each vehicle or roadside unit (RSU) operates an AI agent capable of observing, orienting, deciding, and acting (OODA) without continuous reliance on cloud supervision. This autonomy enables real-time decision-making for scenarios such as rerouting, merging, or hazard avoidance, even in disconnected environments [12].

- Multi-Agent Collaboration: AI agents negotiate and coordinate with one another using standardized protocols (e.g., MCP, A2A), enabling guidance on optimal vehicle spacing, intersection management, and dynamic traffic control—without the need for centralized orchestration [13].

- Embedded Security Intelligence: While multiple agents collaborate, dedicated security agents monitor system activities for anomalies, enforce access control policies, and quarantine threats at the edge. As Forbes notes, “Agentic AI demands agentic security,” emphasizing the importance of embedding trust and resilience into every decision node [14].

- Protocol-Agnostic Adaptability: Agentic AI can dynamically switch among various communication protocols—including DSRC, VANETs, ISAC, or PLC—based on real-time evaluations of signal quality, latency, and network congestion. Agents equipped with cognitive capabilities enhance system robustness against 5G performance limitations or outages.

- Federated Learning and Self-Improvement: Vehicles independently train machine learning models locally and transmit only model updates—preserving data privacy, minimizing bandwidth usage, and improving processing efficiency.
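The protocol-agnostic adaptability item above can be sketched as a single pass through an observe, orient, decide, act cycle. This is a minimal illustration, not the author's implementation: the `LinkMetrics` fields, the scoring weights, the switching margin, and the sample measurements are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkMetrics:
    """Observed state of one link (fields are illustrative assumptions)."""
    signal_quality: float  # 0.0 (unusable) to 1.0 (excellent)
    latency_ms: float      # measured delay in milliseconds
    congestion: float      # 0.0 (idle) to 1.0 (saturated)


def score(m: LinkMetrics, max_latency_ms: float = 100.0) -> float:
    """Orient: collapse the metrics into one score (higher is better).

    The 0.4 / 0.4 / 0.2 weights are arbitrary placeholders.
    """
    latency_penalty = min(m.latency_ms / max_latency_ms, 1.0)
    return (0.4 * m.signal_quality
            + 0.4 * (1.0 - latency_penalty)
            + 0.2 * (1.0 - m.congestion))


def choose_protocol(observations: dict[str, LinkMetrics],
                    current: str,
                    switch_margin: float = 0.05) -> str:
    """Decide: take the best-scoring link, but keep the current one unless
    the best beats it by switch_margin, to avoid rapid flip-flopping."""
    best = max(observations, key=lambda name: score(observations[name]))
    if current in observations:
        gain = score(observations[best]) - score(observations[current])
        if gain < switch_margin:
            return current
    return best


# Observe: made-up measurements for three candidate links.
observations = {
    "5G": LinkMetrics(signal_quality=0.3, latency_ms=60.0, congestion=0.8),
    "DSRC": LinkMetrics(signal_quality=0.8, latency_ms=5.0, congestion=0.2),
    "PLC": LinkMetrics(signal_quality=0.6, latency_ms=20.0, congestion=0.1),
}

# Act: the agent would now route its V2X messages over the chosen link.
active = choose_protocol(observations, current="5G")
print(active)
```

With these sample numbers the agent moves from the degraded 5G link to DSRC. The switching margin is a simple form of hysteresis; a real agent would tune it, and the weights, against measured link behavior.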

The figure below illustrates the proposed architectural framework for secure Agentic AI enablement within V2X communications, leveraging alternative communication protocols and the OODA (Observe–Orient–Decide–Act) cognitive model.

Conclusions:

With the integration of an intelligent Agentic AI layer into V2X systems, autonomous, adaptive, and efficient decision-making emerges from seamless collaboration of the distributed intelligent components.

Numerous examples highlight the potential of Agentic AI to deliver significant business value.

- TechCrunch reports that Amazon’s R&D division is actively developing an Agentic AI framework to automate warehouse operations through robotics [15]. A similar architecture can be extended to autonomous vehicles (AVs) to enhance both communication and cybersecurity capabilities.

- Forbes emphasizes that “Agentic AI demands agentic security,” underscoring the need for every action—whether executed by human or machine—to undergo rigorous review and validation from a security perspective [16]. Forbes notes, “Agentic AI represents the next evolution in AI—a major transition from traditional models that simply respond to human prompts.” By combining Agentic AI with alternative networking protocols, robust V2X ecosystems can be developed—capable of maintaining resilience despite connectivity losses or infrastructure gaps, enforcing strong cyber defense, and exhibiting intelligence that learns, adapts, and acts autonomously [19].

- Business Insider highlights the scalability of Agentic AI, referencing how Qualtrics has implemented continuous feedback loops to retrain its AI agents dynamically [17]. This feedback-driven approach is equally applicable in the mobility domain, where it can support real-time coordination, dynamic rerouting, and adaptive decision-making.

- Multi-agent systems are also advancing rapidly. As Amazon outlines its vision for deploying “multi-talented assistants” capable of operating independently in complex environments, the trajectory of Agentic AI becomes even more evident [18].

References:

- [1] Coll-Perales, B., Lucas-Estañ, M. C., Shimizu, T., Gozalvez, J., Higuchi, T., Avedisov, S., … & Sepulcre, M. (2022). End-to-end V2X latency modeling and analysis in 5G networks. IEEE Transactions on Vehicular Technology, 72(4), 5094-5109.

- [2] Horta, J., Siller, M., & Villarreal-Reyes, S. (2025). Cross-layer latency analysis for 5G NR in V2X communications. PloS one, 20(1), e0313772.

- [3] Cellular V2X Communications Towards 5G. Available at “pdf”

- [4] Al Harthi, F. R. A., Touzene, A., Alzidi, N., & Al Salti, F. (2025, July). Intelligent Handover Decision-Making for Vehicle-to-Everything (V2X) 5G Networks. In Telecom (Vol. 6, No. 3, p. 47). MDPI.

- [5] DSRC Safety Modem. Available at “https://www.nxp.com/products/wireless-connectivity/dsrc-safety-modem:DSRC-MODEM”

- [6] VANETs and V2X Communication. Available at “https://www.sanfoundry.com/vanets-and-v2x-communication/#”

- [7] Yu, K., Feng, Z., Li, D., & Yu, J. (2023). Secure-ISAC: Secure V2X communication: An integrated sensing and communication perspective. arXiv preprint arXiv:2312.01720.

- [8] Study on integrated sensing and communication (ISAC) for C-V2X application. Available at “https://5gaa.org/content/uploads/2025/05/wi-isac-i-tr-v.1.0-may-2025.pdf”

- [9] Ramasamy, D. (2023). Possible hardware architectures for power line communication in automotive v2g applications. Journal of The Institution of Engineers (India): Series B, 104(3), 813-819.

- [10] Xu, K., Zhou, S., & Li, G. Y. (2024). Federated reinforcement learning for resource allocation in V2X networks. IEEE Journal of Selected Topics in Signal Processing.

- [11] Asad, M., Shaukat, S., Nakazato, J., Javanmardi, E., & Tsukada, M. (2025). Federated learning for secure and efficient vehicular communications in open RAN. Cluster Computing, 28(3), 1-12.

- [12] Bryant, D. J. (2006). Rethinking OODA: Toward a modern cognitive framework of command decision making. Military Psychology, 18(3), 183-206.

- [13] Agentic AI Communication Protocols: The Backbone of Autonomous Multi-Agent Systems. Available at “https://datasciencedojo.com/blog/agentic-ai-communication-protocols/”

- [14] Agentic AI And The Future Of Communications Networks. Available at “https://www.forbes.com/councils/forbestechcouncil/2025/05/27/agentic-ai-and-the-future-of-communications-networks/”

- [15] Amazon launches new R&D group focused on agentic AI and robotics. Available at “Amazon launches new R&D group focused on agentic AI and robotics”

- [16] Securing Identities For The Agentic AI Landscape. Available at “https://www.forbes.com/councils/forbestechcouncil/2025/07/03/securing-identities-for-the-agentic-ai-landscape/”

- [17] Qualtrics’ president of product has a vision for agentic AI in the workplace: ‘We’re going to operate in a multiagent world’. Available at “https://www.businessinsider.com/agentic-ai-improve-qualtrics-company-customer-communication-data-collection-2025-5”

- [18] Amazon’s R&D lab forms new agentic AI group. Available at “https://www.cnbc.com/2025/06/04/amazons-rd-lab-forms-new-agentic-ai-group.html”

- [19] Agentic AI: The Next Frontier In Autonomous Work. Available at “https://www.forbes.com/councils/forbestechcouncil/2025/06/27/agentic-ai-the-next-frontier-in-autonomous-work/”

About the Author:

Prashant Vajpayee is a Senior Product Manager and researcher in AI and cybersecurity, with expertise in enterprise data integration, cyber risk modeling, and intelligent transportation systems. With a foundation in strategic leadership and innovation, he has led transformative initiatives at Salesforce and advanced research focused on cyber risk quantification and resilience across critical infrastructure, including Transportation 5.0 and global supply chain. His work empowers organizations to implement secure, scalable, and ethically grounded digital ecosystems. Through his writing, Prashant seeks to demystify complex cybersecurity as well as AI challenges and share actionable insights with technologists, researchers, and industry leaders.