Data Center Interconnect

AI infrastructure investments drive demand for Ciena’s products including 800G coherent optics

Artificial Intelligence (AI) infrastructure investments are starting to shift toward networks needed to support the technology, rather than focusing exclusively on computing and power, according to Ciena Chief Executive Gary Smith. The trends helped Ciena swing to a profit and post a 24% jump in sales in its most recent quarter.

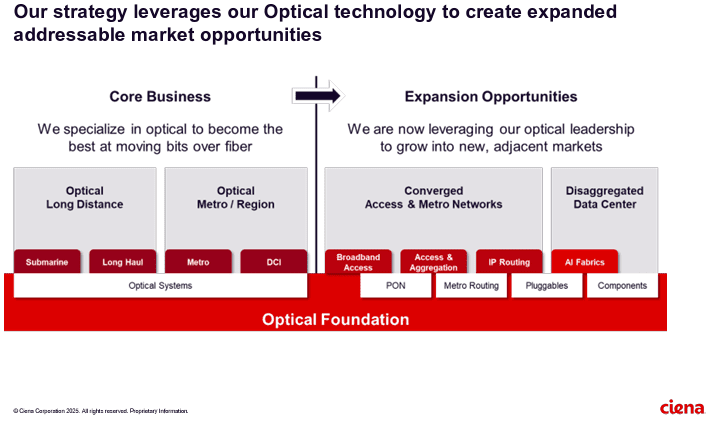

The company enables high-speed fiber optic connectivity for telecommunications and data centers, helping hyperscalers such as Amazon and Microsoft support AI initiatives via data center interconnects and intra-data center networking. Currently, the company is ramping up production to meet surging demand fueled by cloud and AI investments.

“There’s no point in investing in these massive amounts of GPUs if we’re going to strand it because we didn’t invest in the network,” Smith said Thursday.

……………………………………………………………………………………………………………………………………………………..

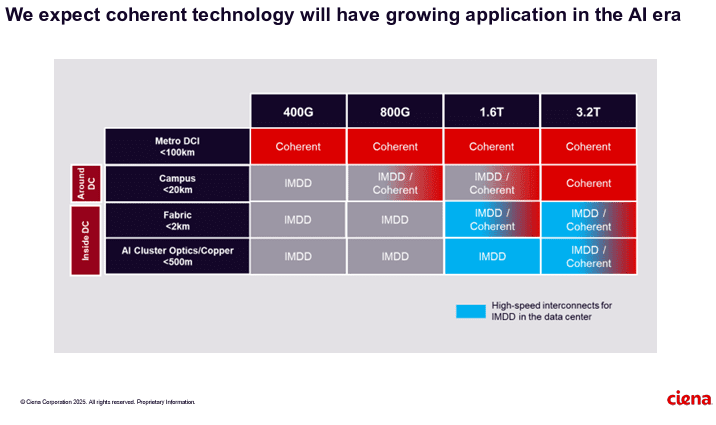

Ciena sees a bright future in 800G coherent optics that can accommodate AI traffic. Smith said a global cloud provider has selected Ciena’s coherent 800-gig pluggable modules and Reconfigurable Line System (RLS) photonics for its investment in geographically distributed, regional GPU clusters. “With our coherent optical technology ideally suited for this type of connectivity, we expect to see more of these opportunities emerge as cloud providers evolve their data center network architectures to support their AI strategies,” he added.

It’s still early innings for 800G adoption, but demand is climbing due to AI and cloud connectivity. Vertical Systems Group expects to see “a measurable increase” in 800G installations this year. Dell’Oro optical networking analyst Jimmy Yu noted on LinkedIn that Ciena’s data center interconnect win is the first he has heard of that involves connecting GPU clusters across spans of more than 100 kilometers. “It was a hot topic of discussion for nearly 2 years. It is now going to start,” Yu said.
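To put 100+ kilometer spans in perspective, the one-way propagation delay over optical fiber can be estimated from the fiber's group index. The sketch below is a back-of-the-envelope calculation, not a Ciena tool: it assumes a group index of about 1.468 (typical of standard single-mode fiber) and ignores transceiver, amplifier and switching delays. The function name `fiber_delay_us` is illustrative.

```python
# Back-of-the-envelope sketch: light propagation delay over optical fiber.
# Assumption: group index ~1.468 (typical single-mode fiber); equipment,
# amplification and processing delays are ignored.

SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s


def fiber_delay_us(distance_km: float, group_index: float = 1.468) -> float:
    """Return the one-way propagation delay in microseconds."""
    speed_in_fiber = SPEED_OF_LIGHT_KM_PER_S / group_index  # km/s
    return distance_km / speed_in_fiber * 1e6


if __name__ == "__main__":
    for km in (1, 10, 100, 500):
        one_way = fiber_delay_us(km)
        print(f"{km:>4} km: one-way {one_way:8.1f} us, round trip {2 * one_way:8.1f} us")
```

Under these assumptions light needs roughly 4.9 µs per kilometer, so a 100 km span adds about 0.49 ms each way before any equipment delay. That is one reason linking GPU clusters across regional sites is a harder networking problem than connecting GPUs inside a single building.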

……………………………………………………………………………………………………………………………………………………

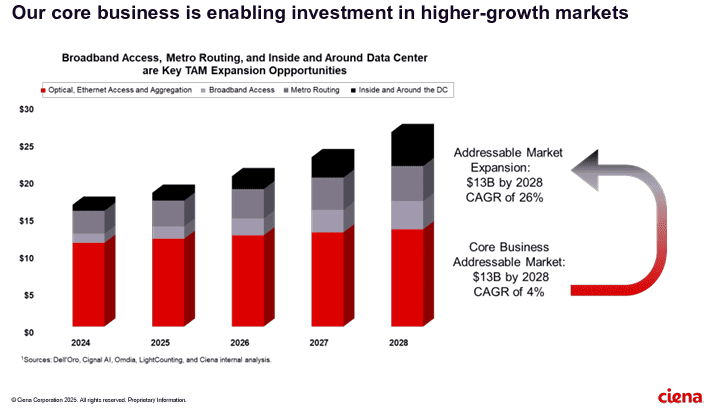

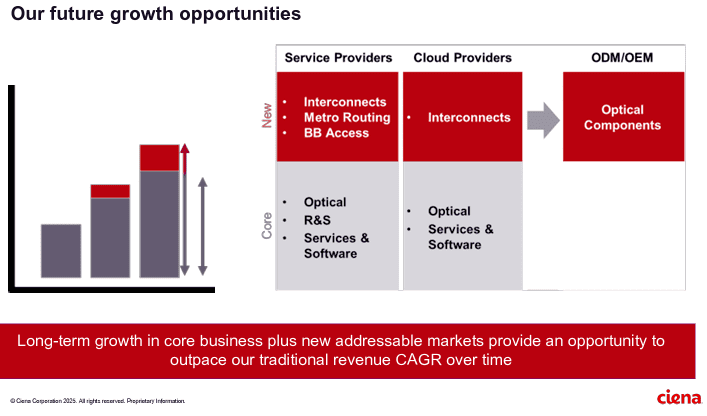

Ciena’s future growth opportunities include network service and cloud service providers as well as ODM/OEM sales of optical components.

References:

https://www.wsj.com/business/earnings/ciena-swings-to-profit-as-ai-investments-drive-demand-0195f30c

https://investor.ciena.com/static-files/d964ccac-74b3-43d9-a73e-ecf67fab6060

https://www.fierce-network.com/broadband/ciena-now-expects-tariff-costs-10m-quarter

Cisco CEO sees great potential in AI data center connectivity, silicon, optics, and optical systems

It’s no surprise to IEEE Techblog readers that Cisco’s networking business – still its biggest unit, generating nearly half its total sales – reported just under $6.9 billion in revenue for the three-month period ending in January (Cisco’s second fiscal quarter). That was down 3% compared with the same quarter a year earlier. For the first half of its fiscal year, networking sales dropped 14% year-over-year, to about $13.6 billion.

However, total second-quarter revenue grew 9% year-over-year, to just under $14 billion, boosted by Cisco’s March 2024 acquisition of security company Splunk. Thanks to that deal, Cisco’s security revenues more than doubled for the first half, to about $4.1 billion. But net income fell 8%, to roughly $2.4 billion, due partly to higher research and development costs as well as higher sales and marketing expenses.
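The approximate figures above can be cross-checked with a few lines of arithmetic. This is a rough sketch: the inputs are the article's rounded values (about $6.9 billion networking, about $14 billion total), so the results are approximate, and the variable names are illustrative.

```python
# Rough cross-check of the Cisco fiscal Q2 figures quoted above (US$ billions).
# Inputs are the article's approximate values, so outputs are approximate too.

networking_q2 = 6.9        # networking revenue, just under $6.9B
networking_decline = 0.03  # down 3% year over year
total_q2 = 14.0            # total revenue, just under $14B
total_growth = 0.09        # up 9% year over year

# Share of total sales coming from networking ("nearly half").
networking_share = networking_q2 / total_q2

# Implied year-earlier values, using current = prior * (1 + change).
networking_prior = networking_q2 / (1 - networking_decline)
total_prior = total_q2 / (1 + total_growth)

print(f"Networking share of Q2 revenue: {networking_share:.0%}")
print(f"Implied year-earlier networking revenue: ${networking_prior:.2f}B")
print(f"Implied year-earlier total revenue: ${total_prior:.2f}B")
```

Networking works out to roughly 49% of the quarter's revenue, consistent with "nearly half", and the implied year-earlier totals (about $7.1 billion for networking and $12.8 billion overall) show that the Splunk-driven growth came from outside the networking unit.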

Cisco groused about an “inventory correction” as networking customers digested stock they had already bought, but that surely is no longer the case, as its customers (ISPs, telcos, enterprise and government end users) have worked off that inventory. Cisco CFO Richard Scott Herren also said current demand is not being driven by longer lead times: “[Is] the demand that we’re seeing today a function of extended lead times like we saw a couple of years ago? That’s not the case. Our lead times are not extending.”

Currently, Cisco firmly believes that Ethernet connectivity sales to owners of AI data centers are an “emerging opportunity.” That refers to Cisco’s data center switching solutions for “web-scale” and enterprise customers’ intra-data center communications. The company’s AI strategy is described on its website (see References).

Image Courtesy of Cisco Systems

………………………………………………………………………………………………………………………………………

AI investments “will lead to our networking equipment being combined with Nvidia GPUs, and that’s how we’ll accomplish that in the future,” CEO Chuck Robbins told industry analysts on a call to discuss second-quarter results, according to a Motley Fool transcript. “There’s so much change going on right now from a technology perspective that there’s both excitement about the opportunity, and candidly, there’s a little bit of fear of slowing down too much and letting your competition get too much ahead of you. So, we saw solid demand,” he said.

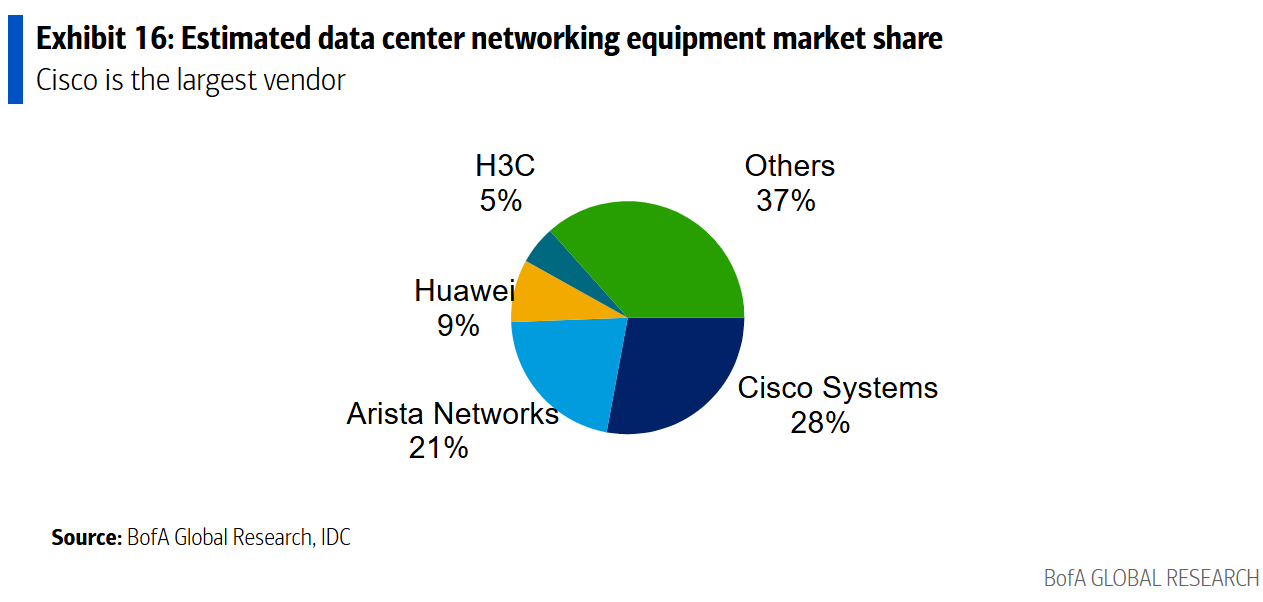

However, Cisco will face mighty competition in that space.

- Nokia is targeting the same opportunity and last month said it would spend an additional €100 million (US$104 million) on its Internet Protocol unit annually with the goal of generating another €1 billion ($1.04 billion) in data center revenues by 2028.

- Arista Networks is another rival in this market, selling high performance Ethernet switches to cloud service providers like Microsoft.

- Nvidia’s $7 billion acquisition of Mellanox in 2019 gave it effective control of InfiniBand, an alternative to Ethernet that had been the main option for connecting GPU clusters when analysts published research on the topic in August 2023. Just as important, Nvidia’s Mellanox division is also a leader in Ethernet connectivity within data centers, as described in an earlier IEEE Techblog post (see References).

- Juniper Networks (being acquired by HPE) is also focusing on networking the AI data center, as described in a Juniper white paper (see References).

During the Q&A, Robbins elaborated: “On the $700 million in AI orders, it’s a combination of systems, silicon, optics, and optical systems. And I think if you break it down, it’s about half is in silicon and systems. And it continues to accelerate. And I’d say the teams have done a great job on the silicon front. We’ve invested heavily in more resources there. The team is running parallel development efforts for multiple chips that are staggered in their time frames. They’ve worked hard. They were increasing the yield, which is a positive thing. And so, we feel good about it, but it’s a combination of all those things that we’re selling to the customers.”

…………………………………………………………………………………………………………………………………………………………………………………………

Enterprise AI:

“What we’re seeing on the enterprise side relative to AI is it’s still — customers are still in the very early days, and they all realize they need to figure out exactly what their use cases are. We’re starting to see some spending though on specific AI-driven infrastructure. And we think as we get AI pods out there — we got Hyperfabric coming. We got AI defense coming.

“We have Hypershield in the market. And we got this new DPU switch, they are all going to be a part of the infrastructure to support these AI applications. So, we’re beginning to see it happen, but I think it’s also really important to understand that as the enterprises leverage their private data, their proprietary data, and they’ll do some training on that and then they’ll run inference obviously against that. We believe that opportunity is an order of magnitude higher than what we’ve seen in training today. We’re going to continue to innovate and build capabilities to put ourselves in a better position to be a real beneficiary as this continues to accelerate. But as of today, we feel like we’re in pretty good shape.”

“If you look at AI defense with the AI Summit that we did recently, there’s — I think there’s about 20-some-odd customers who are interested in going to proof of concept with us right now on it. We had almost half the Fortune 100 there for that event. So, I feel good about where we are. It will turn into greater demand as we just continue to scale these products.”

Telco use of AI Edge Applications:

“We see some of the European network operators are looking at delivering AI as a service,” said Robbins. “We see a lot of them planning for AI edge applications that are sitting at the edge of their networks that they’re managing for customers.”


Cisco raised its guidance and now expects revenues for the full year of between $56 billion and $56.5 billion, up from its earlier range of $55.3 billion to $56.3 billion.
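A quick way to see how much the guidance actually moved is to compare the midpoints of the two ranges. The sketch below uses only the ranges quoted above; the helper name `midpoint` is illustrative.

```python
# Compare Cisco's old and new full-year revenue guidance (US$ billions).


def midpoint(low: float, high: float) -> float:
    """Midpoint of a guidance range."""
    return (low + high) / 2


old_low, old_high = 55.3, 56.3
new_low, new_high = 56.0, 56.5

old_mid = midpoint(old_low, old_high)
new_mid = midpoint(new_low, new_high)

print(f"Old midpoint: ${old_mid:.2f}B, new midpoint: ${new_mid:.2f}B")
print(f"Midpoint raised by ${new_mid - old_mid:.2f}B")
print(f"Range width: ${old_high - old_low:.1f}B -> ${new_high - new_low:.1f}B")
```

The midpoint rises by about $450 million (from $55.8 billion to $56.25 billion), the low end moves up by $700 million, and the range narrows from $1 billion to $0.5 billion wide.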


References:

https://www.cisco.com/site/uk/en/solutions/artificial-intelligence/index.html

https://www.juniper.net/content/dam/www/assets/white-papers/us/en/networking-the-ai-data-center.pdf

Nokia selects Intel’s Justin Hotard as new CEO to increase growth in IP networking and data center connections

Initiatives and Analysis: Nokia focuses on data centers as its top growth market

Nvidia enters Data Center Ethernet market with its Spectrum-X networking platform

Lumen Technologies to connect Prometheus Hyperscale’s energy efficient AI data centers

The need for more cloud computing capacity and AI applications has been driving huge investments in data centers. Those investments have led to a steady demand for fiber capacity between data centers and more optical networking innovation inside data centers. Here’s the latest example of that:

Prometheus Hyperscale has chosen Lumen Technologies to connect its energy-efficient data centers to meet growing AI data demands. Lumen network services will help Prometheus keep pace with the rapid growth in AI, big data, and cloud computing as it addresses the critical environmental challenges faced by the AI industry.

Rendering of Prometheus Hyperscale flagship Data Center in Evanston, Wyoming:

……………………………………………………………………………….

Prometheus Hyperscale, known for pioneering sustainability in the hyperscale data center industry, is deploying a Lumen Private Connectivity Fabric℠ solution, including new network routes built with Lumen next generation wavelength services and Dedicated Internet Access (DIA) [1.] services with Distributed Denial of Service (DDoS) protection layered on top.

Note 1. Dedicated Internet Access (DIA) is a premium internet service that provides a business with a private, high-speed connection to the internet.

This expanded network will enable high-density compute in Prometheus facilities to deliver scalable and efficient data center solutions while maintaining their commitment to renewable energy and carbon neutrality. Lumen networking technology will provide the low-latency, high-performance infrastructure critical to meet the demands of AI workloads, from training to inference, across Prometheus’ flagship facility in Wyoming and four future data centers in the western U.S.

“What Prometheus Hyperscale is doing in the data center industry is unique and innovative, and we want to innovate alongside of them,” said Ashley Haynes-Gaspar, Lumen EVP and chief revenue officer. “We’re proud to partner with Prometheus Hyperscale in supporting the next generation of sustainable AI infrastructure. Our Private Connectivity Fabric solution was designed with scalability and security to drive AI innovation while aligning with Prometheus’ ambitious sustainability goals.”

Prometheus, founded as Wyoming Hyperscale in 2020, turned to Lumen networking solutions prior to the launch of its first development site in Aspen, WY. This facility integrates renewable energy sources, sustainable cooling systems, and AI-driven energy optimization, allowing for minimal environmental impact while delivering the computational power AI-driven enterprises demand. The partnership with Lumen reinforces Prometheus’ dedication to both technological innovation and environmental responsibility.

“AI is reshaping industries, but it must be done responsibly,” said Trevor Neilson, president of Prometheus Hyperscale. “By joining forces with Lumen, we’re able to offer our customers best-in-class connectivity to AI workloads while staying true to our mission of building the most sustainable data centers on the planet. Lumen’s network expertise is the perfect complement to our vision.”

Prometheus’ data center campus in Evanston, Wyoming will be one of the biggest data centers in the world with facilities expected to come online in late 2026. Future data centers in Pueblo, Colorado; Fort Morgan, Colorado; Phoenix, Arizona; and Tucson, Arizona, will follow and be strategically designed to leverage clean energy resources and innovative technology.

About Prometheus Hyperscale:

Prometheus Hyperscale, founded by Trenton Thornock, is revolutionizing data center infrastructure by developing sustainable, energy-efficient hyperscale data centers. Leveraging unique, cutting-edge technology and working alongside strategic partners, Prometheus is building next-generation, liquid-cooled hyperscale data centers powered by cleaner energy. With a focus on innovation, scalability, and environmental stewardship, Prometheus Hyperscale is redefining the data center industry for a sustainable future. This announcement follows recent news of Bernard Looney, former CEO of bp, being appointed Chairman of the Board.

To learn more visit: www.prometheushyperscale.com

About Lumen Technologies:

Lumen uses the scale of its network to help companies realize AI’s full potential. From metro connectivity to long-haul data transport to edge cloud, security, managed service, and digital platform capabilities, Lumen meets its customers’ needs today and is ready for tomorrow’s requirements.

In October, Lumen CTO Dave Ward told Light Reading that a “fundamentally different order of magnitude” of compute power, graphics processing units (GPUs) and bandwidth is required to support AI workloads. “It is the largest expansion of the Internet in our lifetime,” Ward said.

Lumen is constructing 130,000 fiber route miles to support Meta and other customers seeking to interconnect AI-enabled data centers. According to a story by Kelsey Ziser, the fiber conduits in this buildout would contain anywhere from 144 to more than 500 fibers to connect multi-gigawatt data centers.
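Multiplying route miles by fibers per conduit gives a rough sense of the scale of this buildout in fiber miles. This is a simplifying sketch: it assumes every route mile carries the same fiber count, which real builds will not do exactly, and it uses 500 as the lower bound of the "more than 500 fibers" case.

```python
# Rough scale of Lumen's AI buildout in fiber miles (route miles x fibers per route).
# Assumption: uniform fiber count along every route mile.

ROUTE_MILES = 130_000


def fiber_miles(route_miles: int, fibers_per_route: int) -> int:
    """Total fiber miles for a given number of route miles and fibers per route."""
    return route_miles * fibers_per_route


low = fiber_miles(ROUTE_MILES, 144)
high = fiber_miles(ROUTE_MILES, 500)
print(f"{low:,} to more than {high:,} fiber miles")
```

Under these assumptions the buildout spans roughly 18.7 million to more than 65 million fiber miles.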

References:

https://www.lightreading.com/data-centers/2024-in-review-data-center-shifts

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Initiatives and Analysis: Nokia focuses on data centers as its top growth market

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Deutsche Telekom with AWS and VMware demonstrate a global enterprise network for seamless connectivity across geographically distributed data centers

Initiatives and Analysis: Nokia focuses on data centers as its top growth market

Telco is no longer the top growth market for Nokia. Instead, it’s data centers, said Nokia’s CEO Pekka Lundmark on the company’s Q3 2024 earnings call last week. “Across Nokia, we are investing to create new growth opportunities outside of our traditional communications service provider market,” he said. “We see a significant opportunity to expand our presence in the data center market and are investing to broaden our product portfolio in IP Networks to better address this. There will be others as well, but that will be the number one. This is obviously in the very core of our strategy.”

Lundmark said Nokia’s telco total addressable market (TAM) is €84 billion, while its data center total addressable market is currently at €20 billion. “I mean, telco TAM will never be a significant growth market,” he added to no one’s surprise.

Nokia’s recent deal to acquire fiber optics equipment vendor Infinera for $2.3 billion might help. The Finland-based company said the combination with Infinera is expected to accelerate its path to double-digit operating margins in its optical networks business unit (which was inherited from Alcatel-Lucent). The transaction (expected to close in the first half of 2025) and the recent sale of its submarine networks business will reshape Nokia’s Network Infrastructure business around fixed networks, internet protocol (IP) networks and optical networks, the company said. Data centers require not only GPUs but also optical networking to support their AI workloads. Lundmark said the role of optics will increase, not only in connections between data centers, but also inside data centers to connect servers to each other. “Once we get there, that market will be of extremely high volumes,” he said.

Pekka Lundmark, Nokia CEO– Photo: Arno Mikkor

- In September, Nokia announced the availability of its Event-Driven Automation (EDA) platform for the AI era. Nokia EDA raises the bar on data center network operations with a modern approach that builds on Kubernetes to bring highly reliable, simplified, and adaptable lifecycle management to data center networks. Aimed at driving human error in network operations to zero, the platform is designed to reduce network disruptions and application downtime while decreasing operational effort by up to 40%, according to Nokia.

- A highlight of the recent quarter is a September deal with self-proclaimed “AI hyperscaler” CoreWeave [1.], which selected Nokia to deploy its IP routing and optical transport equipment globally as part of its extensive backbone build-out, with immediate roll-out across its data centers in the U.S. and Europe. Raymond James analyst Simon Leopold said the CoreWeave win gave Nokia some exposure to AI, and he wondered if Nokia had a long-term strategy of evolving customers away from its telco base into more enterprise-like opportunities. “The reason why CoreWeave is so important is that they are now the leading GPU-as-a-service company,” said Lundmark. “And they have now taken pretty much our entire portfolio, both on the IP side and optical side. And as we know, AI is driving new business models, and one of the business models is clearly GPU-as-a-service,” he added.

Note 1. CoreWeave rents graphics processing units (GPUs) to artificial intelligence (AI) developers. It describes itself as a modern, Kubernetes-native cloud purpose-built for large-scale, GPU-accelerated workloads and designed with engineers and innovators in mind. CoreWeave claims to offer unparalleled access to a broad range of compute solutions that are up to 35x faster and 80% less expensive than legacy cloud providers.
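The description of Nokia's EDA platform above does not detail its internals. Kubernetes-based automation generally follows a declarative "desired state versus observed state" reconciliation pattern: an event (an edited intent, detected drift) triggers a controller that computes and applies only the differences. The sketch below illustrates that general pattern only. The `Switch` model, the VLAN-based intent and the `reconcile` function are hypothetical and are not Nokia EDA's API.

```python
# Hypothetical sketch of declarative, event-driven reconciliation, the general
# pattern behind Kubernetes controllers. This is NOT Nokia EDA's actual API;
# the Switch model and VLAN-based intent are illustrative assumptions.
from dataclasses import dataclass, field


@dataclass
class Switch:
    name: str
    vlans: set[int] = field(default_factory=set)  # observed (running) config


def reconcile(desired: dict[str, set[int]], fabric: dict[str, Switch]) -> list[str]:
    """Converge each switch's VLANs on the declared intent.

    Meant to be called whenever an event arrives (intent edited, drift detected).
    Returns the list of changes applied so they can be audited.
    """
    actions: list[str] = []
    for name, wanted in desired.items():
        switch = fabric[name]
        for vlan in sorted(wanted - switch.vlans):  # missing on the device: add
            switch.vlans.add(vlan)
            actions.append(f"{name}: add VLAN {vlan}")
        for vlan in sorted(switch.vlans - wanted):  # not in the intent: remove
            switch.vlans.discard(vlan)
            actions.append(f"{name}: remove VLAN {vlan}")
    return actions


if __name__ == "__main__":
    fabric = {"leaf1": Switch("leaf1", {10, 99}), "leaf2": Switch("leaf2")}
    intent = {"leaf1": {10, 20}, "leaf2": {10}}
    print(reconcile(intent, fabric))  # first event: applies the differences
    print(reconcile(intent, fabric))  # next event: nothing left to change
```

The second call applies no changes. Because operators edit the intent rather than typing per-device commands, repeated events converge on the same state; this is the general property such tools rely on to cut manual configuration errors.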

……………………………………………………………………………………………………………………………………………………………

Nokia says its IP Interconnection can provide attractive business benefits to data center customers including:

- Improved security – Applications and services can be accessed via private direct connections to the networks of cloud providers colocated in the same facility without traversing the internet.

- Reduced transport costs – Colocated service providers, alternative network providers and carrier neutral network operators offer a wide choice of connections to remote destinations at a lower price.

- Higher performance and lower latency – As connections are direct and are often located closer to the person or thing they are serving, there is a reduction in latency and an increase in reliability as they bypass multiple hops across the public internet.

- More control – Through network automation and via customer portals, cloud service providers can gain more control of their cloud connectivity.

- Greater flexibility – With a wider range of connectivity options, enterprises can distribute application workloads and access cloud applications and services globally to meet business demands and to gain access to new markets.

……………………………………………………………………………………………………………………………………………………………

Nokia’s Data Center Market Risks:

The uncertainty is whether spending on GPUs and optical network equipment in the data center will produce the traffic growth to justify a decent ROI for Nokia. Also, the major cloud vendors (Amazon, Google, Microsoft and Facebook) design, develop, and install their own fiber optic networks. So it will likely be the new AI Data Center players that Nokia will try to sell to. William Webb, an independent consultant and former executive at Ofcom told Light Reading, “There may be substantially more traffic between data centers as models are trained but this will flow across high-capacity fiber connections which can be expanded easily if needed.” Text-based AI apps like ChatGPT generate “minuscule amounts of traffic,” he said. Video-based AI will merely substitute for the genuinely intelligent form.

References:

https://www.datacenterdynamics.com/en/news/nokia-eyes-data-center-market-growth-as-q3-sales-fall/

https://www.nokia.com/blog/enhance-cloud-services-with-high-capacity-interconnection/

https://www.lightreading.com/5g/telecom-glory-days-are-over-bad-news-for-nokia-worse-for-ericsson

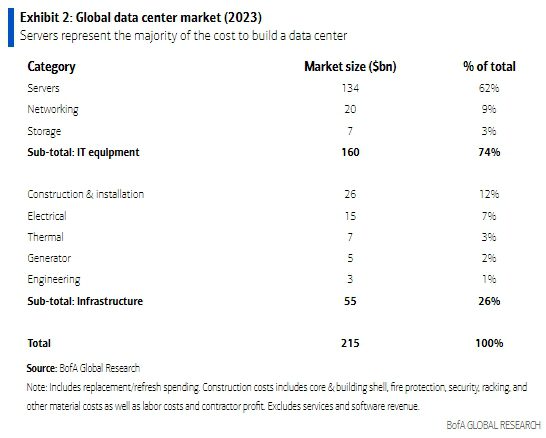

AI adoption to accelerate growth in the $215 billion Data Center market

Market Overview:

Data centers are a $215bn global market that grew 18% annually between 2018 and 2023.

AI adoption is poised to accelerate this growth meaningfully over the coming years. BofA’s US Semis analyst, Vivek Arya, forecasts the AI chip market to reach ~$200bn in 2027, up from $44bn in 2023. This has positive implications for the broader data center industry.
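The growth rates behind these BofA figures can be made explicit with the compound annual growth rate (CAGR) formula, CAGR = (end / start)^(1 / years) − 1. The sketch below assumes four compounding years for 2023 to 2027 and five for 2018 to 2023; the function name `cagr` is illustrative.

```python
# Compound annual growth rate (CAGR) arithmetic for the BofA figures above.


def cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# AI chip market: $44bn (2023) -> ~$200bn (2027), four years of growth.
ai_chip_cagr = cagr(44, 200, 2027 - 2023)

# Data center market: $215bn in 2023 after 18% annual growth since 2018,
# so the implied 2018 size is 215 / 1.18**5.
dc_2018 = 215 / 1.18 ** (2023 - 2018)

print(f"Implied AI chip market CAGR, 2023-2027: {ai_chip_cagr:.0%}")
print(f"Implied 2018 data center market: ${dc_2018:.0f}bn")
```

The forecast implies the AI chip market growing about 46% a year, roughly two and a half times the data center market's historical 18% pace, which grew from an implied ~$94bn in 2018.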

AI workloads are bandwidth-intensive, connecting hundreds of processors with gigabits of throughput. As AI models grow, so does the number of GPUs required to process them, which in turn requires larger networks to interconnect the GPUs. See Networking Equipment Market below.

The electrical and thermal equipment within a data center is sized for maximum load to ensure reliability and uptime. For electrical and thermal equipment manufacturers, AI adoption drives faster growth in data center power loads. AI chips require 3-4x more electrical power versus traditional CPUs (Central Processing Units).

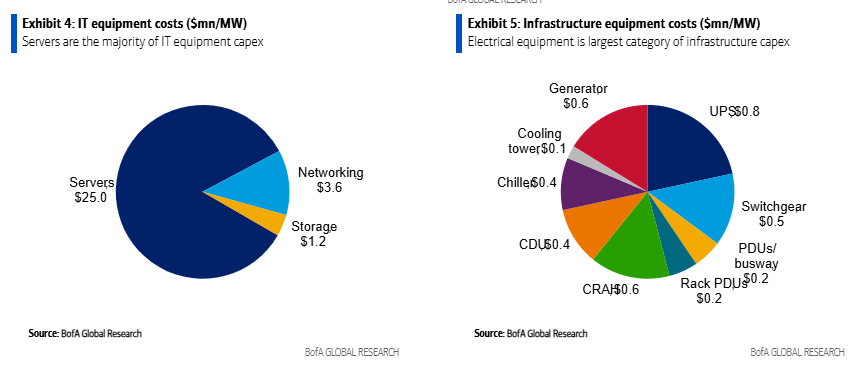

BofA estimates data center capex was $215bn globally in 2023. The majority of this spend is for compute servers, networking and storage ($160bn) with data center infrastructure being an important, but smaller, piece ($55bn). For perspective, data center capex represented ~1% of global fixed capital formation, which includes all private & public sector spending on equipment and structures.
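The capex split above can be checked in a couple of lines: the two components should sum to the $215bn total, and their shares show how IT equipment dominates the spend. The dictionary keys are illustrative labels for the two BofA categories.

```python
# Split of BofA's 2023 global data center capex estimate (US$ billions).
capex_bn = {
    "compute servers, networking and storage": 160,
    "data center infrastructure": 55,
}
total_bn = sum(capex_bn.values())

for segment, spend in capex_bn.items():
    print(f"{segment}: ${spend}bn ({spend / total_bn:.0%} of total)")
print(f"total: ${total_bn}bn")
```

The components add up to $215bn, with IT equipment at about 74% of the total and data center infrastructure at about 26%.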

Networking Equipment Market:

BofA estimates a $20bn market size for data center networking equipment. Cisco is the market share leader, with an estimated 28% market share. Key product categories include:

- Ethernet switches, which connect servers within the data center over local area networks. Typically, each rack has its own networking switch.

- Routers, which handle traffic between buildings, typically using internet protocol (IP). Some cloud service providers use “white box” networking switches (i.e., switches manufactured to their specifications by third parties, such as Taiwanese ODMs).

Data center speeds keep climbing. The industry has moved from 40G to 100G, and 100G is quickly giving way to 400G. Yet even 400G won’t be fast enough to support some emerging applications, which may require 800G and 1.6T data center speeds.

…………………………………………………………………………………………………………………………………….

Data centers are also a bright spot for the construction industry. BofA notes that construction spending for data centers is approaching $30bn (vs $2bn in 2014) and accounts for nearly 21% of data center capex. At 4% of private construction spending (vs 2% five years ago), the data center category has surpassed retail and could partially offset a construction downturn.

Source: BofA Global Research

………………………………………………………………………………………………………………………..

References:

https://www.belden.com/blogs/smart-building/faster-data-center-speeds-depend-on-fiber-innovation#

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Nvidia enters Data Center Ethernet market with its Spectrum-X networking platform

Co-Packaged Optics to play an important role in data center switches

EdgeCore Digital Infrastructure and Zayo bring fiber connectivity to Santa Clara data center

Deutsche Telekom with AWS and VMware demonstrate a global enterprise network for seamless connectivity across geographically distributed data centers

Light Source Communications Secures Deal with Major Global Hyperscaler for Fiber Network in Phoenix Metro Area

Light Source Communications is building a 140-mile fiber middle-mile network in the Phoenix, AZ metro area, covering nine cities: Phoenix, Mesa, Tempe, Chandler, Gilbert, Queen Creek, Avondale, Coronado and Cashion. The company already has a major hyperscaler as the first anchor tenant.

There are currently 70 existing and planned data centers in the area that Light Source will serve. As one might expect, the increase in data centers stems from the boom in artificial intelligence (AI).

The network will include a big ring, which will be divided into three separate rings. In total, Light Source will be deploying 140 miles of fiber. The company has partnered with engineering and construction provider Future Infrastructure LLC, a division of Primoris Services Corp., to make it happen.
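The article does not say why Light Source chose a ring design. A common reason for ring topologies in fiber networks is resilience: after a single fiber cut, traffic can still reach every site by going the other way around the ring. The sketch below checks that property for a hypothetical six-site ring versus a linear route; the site names are placeholders, not Light Source's actual route.

```python
# Illustrative sketch: single-cut resilience of a ring versus a linear route.
# Sites are hypothetical placeholders, not Light Source's actual route.
from collections import deque


def connected(nodes: list[str], links: set[frozenset[str]]) -> bool:
    """Breadth-first search: can every node reach every other node?"""
    adjacency: dict[str, set[str]] = {n: set() for n in nodes}
    for link in links:
        a, b = tuple(link)
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:
        for neighbor in adjacency[queue.popleft()]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(nodes)


def survives_any_single_cut(nodes: list[str], links: set[frozenset[str]]) -> bool:
    """True if the network stays connected after cutting any one link."""
    return all(connected(nodes, links - {cut}) for cut in links)


sites = [f"site{i}" for i in range(6)]
linear = {frozenset((sites[i], sites[i + 1])) for i in range(len(sites) - 1)}
ring = linear | {frozenset((sites[-1], sites[0]))}

print("linear route survives any single cut:", survives_any_single_cut(sites, linear))
print("ring survives any single cut:", survives_any_single_cut(sites, ring))
```

The linear route fails this check, while the ring passes: closing the loop with one extra segment is what provides the alternate path.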

“I would say that AI happens to be blowing up our industry, as you know. It’s really in response to the amount of data that AI is demanding,” said Debra Freitas [1.], CEO of Light Source Communications (LSC).

Note 1. Debra Freitas has led LSC since co-founding it in 2014. LSC owns and operates a network with a global OTT provider as a customer. Freitas developed key customer relationships and secured funding for growth, and she currently sits on the Executive Board of INCOMPAS.

……………………………………………………………………………………………………..

Light Source plans for the entire 140-mile route to be underground. It’s currently working with the city councils and permitting departments of the nine cities as it goes through its engineering and permit approval processes. Freitas said the company expects to receive approvals from all the city councils and to begin construction in the third quarter of this year, concluding by the end of 2025.

Primoris delivers a range of specialty construction services to the utility, energy, and renewables markets throughout the United States and Canada. Its communications business is a leading provider of critical infrastructure solutions, including program management, engineering, fabrication, replacement, and maintenance. With over 12,700 employees, Primoris had revenue of $5.7 billion in 2023.

“We’re proud to partner with Light Source Communications on this impactful project, which will exceed the growing demands for high-capacity, reliable connectivity in the Phoenix area,” said Scott Comley, president of Primoris’ communications business. “Our commitment to innovation and excellence is well-aligned with Light Source’s cutting-edge solutions and we look forward to delivering with quality and safety at the forefront.”

Light Source is a carrier neutral, owner-operator of networks serving enterprises throughout the U.S. In addition to Phoenix, several new dark fiber routes are in development in major markets throughout the Central and Western United States. For more information about Light Source Communications, go to lightsourcecom.net.

The city councils in the Phoenix metro area have been pretty busy with fiber-build applications the past couple of years because the area is also a hotbed for companies building fiber-to-the-premises (FTTP) networks. In 2022 the Mesa City Council approved four different providers to build fiber networks. AT&T and BlackRock have said their joint venture would also start deploying fiber in Mesa.

Light Source is focusing on middle-mile rather than FTTP, because that’s where the demand is, according to Freitas. “Our route is a unique route, meaning there are no other providers where we’re going. We have a demand for the route we’re putting in,” she noted.

The company says it already has “a major, global hyperscaler” anchor tenant, but it won’t divulge who that tenant is. Its network will also touch Arizona State University at Tempe and the University of Arizona.

Light Source doesn’t light any of the fiber it deploys. Rather, it is carrier neutral and sells the dark fiber to customers who light it themselves and who may resell it to their own customers.

Light Source began operations in 2014 and is backed by private equity. It did not receive any federal grants for the new middle-mile network in Arizona.

………………………………………………………………………………………………………..

Bill Long, Zayo’s chief product officer, told Fierce Telecom recently that data centers are preparing for an onslaught of demand for more compute power, which will be needed to handle AI workloads and train new AI models.

…………………………………………………………………………………………………………

About Light Source Communications:

Light Source Communications (LSC) is a carrier-neutral, customer-agnostic provider of secure, scalable, reliable connectivity on a state-of-the-art dark fiber network. The immense amounts of data that businesses need to compete in today’s global market require access to an enhanced fiber infrastructure that allows them to control their data. With over 120 years of telecom experience, LSC offers an owner-operated network for U.S. businesses to succeed here and abroad. LSC is uniquely positioned and highly qualified to build the next generation of dark fiber routes across North America, providing the key connections for business today and tomorrow.

References:

https://www.lightsourcecom.net/services/

https://www.fiercetelecom.com/ai/ai-demand-spurs-light-source-build-middle-mile-network-phoenix

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

CoreSite Enables 50G Multi-cloud Networking with Enhanced Virtual Connections to Oracle Cloud Infrastructure FastConnect

Note 1. CoreSite is a subsidiary of American Tower Corporation and a member of Oracle PartnerNetwork (OPN).

Note 2. Oracle FastConnect enables customers to bypass the public internet and connect directly to Oracle Cloud Infrastructure and other Oracle Cloud services. With connectivity available at CoreSite’s data centers, FastConnect provides a flexible, economical private connection to higher bandwidth options for your hybrid cloud architecture. Oracle FastConnect is accessible at CoreSite’s data center facilities in Northern Virginia and Los Angeles through direct fiber connectivity. FastConnect is also available via the CoreSite Open Cloud Exchange® in seven CoreSite markets, including Los Angeles, Silicon Valley, Denver, Chicago, New York, Boston and Northern Virginia.

The integration of Oracle FastConnect and the CoreSite Open Cloud Exchange offers on-demand, virtual connectivity and access to a best-in-class, end-to-end, fully redundant connection architecture.

Image Credit: CoreSite

…………………………………………………………………………………………………………………………………………………………………………………………………………..

The connectivity of FastConnect and the Open Cloud Exchange (OCX) can give customers deploying artificial intelligence (AI) and data-intensive applications the ability to transfer large datasets securely and rapidly from their network edge to machine learning (ML) models and big data platforms running on OCI. With the launch of the new OCX capabilities for FastConnect, businesses can gain greater flexibility to provision on-demand, secure bandwidth to OCI with virtual connections of up to 50 Gbps.
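To put the 50 Gbps figure in perspective, the sketch below estimates how long an idealized bulk transfer would take over such a virtual connection. The dataset sizes and the 90% efficiency factor (the share of line rate assumed to be available to payload) are illustrative assumptions, not CoreSite or Oracle figures; real throughput also depends on protocol overhead and on the endpoints themselves.

```python
def transfer_time_seconds(dataset_bytes: float, link_gbps: float,
                          efficiency: float = 0.9) -> float:
    """Idealized transfer time: payload bits divided by the usable link rate.

    efficiency is an assumed fraction of line rate available to payload.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link rate must be positive and efficiency in (0, 1]")
    usable_bps = link_gbps * 1e9 * efficiency
    return dataset_bytes * 8 / usable_bps


if __name__ == "__main__":
    TB = 1e12  # decimal terabyte
    for size_tb in (1, 10, 100):  # hypothetical dataset sizes
        t = transfer_time_seconds(size_tb * TB, link_gbps=50)
        print(f"{size_tb:>4} TB over 50 Gbps at 90% efficiency: {t / 60:.1f} min")
```

At full line rate, 1 TB takes 160 seconds over 50 Gbps; with the assumed 90% efficiency it is roughly three minutes per terabyte, which scales linearly with dataset size.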

With OCI, customers benefit from best-in-class security, consistent high performance, simple predictable pricing, and the tools and expertise needed to bring enterprise workloads to the cloud quickly and efficiently. In addition, OCI’s distributed cloud offers multicloud, hybrid cloud, public cloud, and dedicated cloud options to help customers harness the benefits of cloud with greater control over data residency, locality, and authority, even across multiple clouds. As a result, customers can run enterprise workloads in the cloud while meeting the strictest regulatory compliance requirements.

“The digital world requires faster connections to deploy complex, data-intense workloads. The simplified process offered through the Open Cloud Exchange enables businesses to rapidly scale network capacity between the enterprise edge and cloud providers,” said Juan Font, President and CEO of CoreSite, and SVP of U.S. Tower. “These enhanced, faster connections with FastConnect can provide businesses with a competitive advantage by ensuring near-seamless and reliable data transfers at massive scale for real-time analysis and rapid data processing.”

OCI’s extensive network of more than 90 FastConnect global and regional partners offers customers dedicated connectivity to Oracle Cloud Regions and OCI services – providing customers with the best options anywhere in the world. OCI is a deep and broad platform of cloud infrastructure services that enables customers to build and run a wide range of applications in a scalable, secure, highly available, and high-performance environment. From application development and business analytics to data management, integration, security, AI, and infrastructure services including Kubernetes and VMware, OCI delivers unmatched security, performance, and cost savings.

The new Open Cloud Exchange capabilities on FastConnect will be available in Q4 2023.

About CoreSite:

CoreSite, an American Tower company (NYSE: AMT), provides hybrid IT solutions that empower enterprises, cloud, network, and IT service providers to monetize and future-proof their digital business. Our highly interconnected data center campuses offer a native digital supply chain featuring direct cloud onramps to enable our customers to build customized hybrid IT infrastructure and accelerate digital transformation. For more than 20 years, CoreSite’s team of technical experts has partnered with customers to optimize operations, elevate customer experience, dynamically scale, and leverage data to gain competitive edge. For more information, visit CoreSite.com and follow us on LinkedIn and Twitter.

References:

IEEE Santa Clara Valley (SCV) Lecture and Tour of CoreSite Multi-Tenant Data Center

https://www.coresite.com/cloud-networking/oracle-fastconnect

Using a distributed synchronized fabric for parallel computing workloads – Part I

by Run Almog, Head of Product Strategy, DriveNets (edited by Alan J. Weissberger)

Introduction:

Different use cases need different networking attributes. An endpoint can be the source of a service delivered over the internet, or a handheld device streaming live video from anywhere on the planet. Between endpoints sit network nodes that carry this continuous, ever-growing traffic flow to its destination, keep track of the network’s topology and state, apply service level assurance, handle interruptions and failures, and provide a wide range of additional functions that ultimately enable network services to operate.

This two-part article focuses on the use case of running artificial intelligence (AI) and/or high-performance computing (HPC) applications, and on the networking requirements that result. The HPC industry is now integrating AI and HPC, improving support for AI use cases. HPC has been used successfully to run large-scale AI models in fields like cosmic theory, astrophysics, high-energy physics, and data management for unstructured data sets.

In this Part I article, we examine: HPC/AI workloads, disaggregation in data centers, role of the Open Compute Project, telco data center networking, AI clusters and AI networking.

HPC/AI Workloads, High Performance Compute Servers, Networking:

HPC/AI workloads are applications that run over an array of high-performance compute servers. Those servers typically host a dedicated computation engine such as a GPU, FPGA or other accelerator, in addition to a high-performance CPU (which can itself act as a compute engine) and some storage capacity, typically a high-speed SSD. An HPC/AI application does not run on one specific server but on multiple servers simultaneously. The deployment can range from a few servers, or even a single machine, to thousands of machines, all operating in synch and running the same application, which is distributed among them.
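One common way an application is "distributed amongst" servers that stay in synch is synchronous data parallelism: each worker computes a partial result (here, a gradient on its own shard of data), and all workers must exchange and average those results before any of them can take the next step. The sketch below is a single-process simulation under simplifying assumptions: a one-parameter linear model, hypothetical function names, and plain Python lists standing in for GPUs and the network. Real clusters perform the exchange with collective-communication libraries over the interconnect.

```python
from typing import List, Tuple

Shard = List[Tuple[float, float]]  # (x, y) samples held by one worker


def local_gradient(weight: float, shard: Shard) -> float:
    """Gradient of mean squared error for y = w * x on one worker's shard."""
    return sum(2 * (weight * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> List[float]:
    """Simulated all-reduce: every worker ends up with the same average.

    On a real cluster this is the step that crosses the interconnect, and no
    worker can proceed until every other worker's contribution has arrived.
    """
    mean = sum(values) / len(values)
    return [mean] * len(values)


def train(shards: List[Shard], steps: int = 50, lr: float = 0.01) -> float:
    weights = [0.0] * len(shards)  # one model replica per worker
    for _ in range(steps):
        grads = [local_gradient(w, s) for w, s in zip(weights, shards)]
        synced = all_reduce_mean(grads)  # barrier: all workers wait here
        weights = [w - lr * g for w, g in zip(weights, synced)]
    assert len(set(weights)) == 1  # replicas stay identical, i.e. in synch
    return weights[0]


if __name__ == "__main__":
    # Hypothetical data following y = 3x, split across four "servers".
    data = [(float(x), 3.0 * x) for x in range(1, 9)]
    shards = [data[i::4] for i in range(4)]
    print(f"learned weight: {train(shards):.3f}")
```

The design point to notice is the barrier inside the loop: compute is spread across workers, but progress is gated by the exchange, which is why the interconnect shapes overall performance.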

The interconnect (networking) between these computation machines needs to allow any-to-any connectivity between all machines running the same application, and to cater for the different traffic patterns associated with the type of application and with each stage of its run. An interconnect solution for HPC/AI is therefore different from a network built to serve residential households or a mobile network, and also from a network built to serve an array of servers that answer queries from many users, which is what a typical data center is used for.

Disaggregation in Data Centers (DCs):

Disaggregation has been used successfully to solve challenges in cloud-resident data centers. The Open Compute Project (OCP) has generated open source hardware and software for this purpose. The OCP community includes hyperscale data center operators and industry players, telcos, colocation providers and enterprise IT users, working with vendors to develop and commercialize open innovations that, when embedded in products, are deployed from the cloud to the edge.

High-performance computing (HPC) is a term used to describe computer systems capable of performing complex calculations at exceptionally high speeds. HPC systems are often used for scientific research, engineering simulations and modeling, and data analytics. The term high performance refers to both speed and efficiency. HPC systems are designed for tasks that require large amounts of computational power, so that they can perform these tasks more quickly than other types of computers. They are also designed to deliver more computation per unit of energy than general-purpose systems, although in absolute terms a large HPC system draws substantial power.

HPC clusters commonly run batch calculations. At the heart of an HPC cluster is a scheduler that keeps track of available resources, allowing job requests to be allocated efficiently across different compute resources (CPUs and GPUs) connected by high-speed networks. Several HPC clusters have integrated artificial intelligence (AI).
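The scheduler's role can be illustrated with a toy batch scheduler that tracks free CPUs and GPUs per node and places queued jobs first-come, first-served. This is a minimal sketch under stated assumptions: the class and method names are hypothetical, each job fits on a single node, and placement is simple first-fit. Production HPC schedulers add multi-node allocation, priorities, backfilling and topology awareness.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional


@dataclass
class Node:
    name: str
    free_cpus: int
    free_gpus: int


@dataclass
class Job:
    job_id: str
    cpus: int
    gpus: int


@dataclass
class BatchScheduler:
    """Toy FIFO batch scheduler with first-fit placement (illustrative only)."""
    nodes: List[Node]
    queue: Deque[Job] = field(default_factory=deque)
    placements: Dict[str, str] = field(default_factory=dict)

    def submit(self, job: Job) -> None:
        self.queue.append(job)

    def _find_node(self, job: Job) -> Optional[Node]:
        # First fit: the first node with enough free CPUs and GPUs.
        for node in self.nodes:
            if node.free_cpus >= job.cpus and node.free_gpus >= job.gpus:
                return node
        return None

    def schedule(self) -> None:
        # Strict FIFO: stop at the first job that cannot be placed, so later
        # jobs never jump ahead of it.
        while self.queue:
            node = self._find_node(self.queue[0])
            if node is None:
                break
            job = self.queue.popleft()
            node.free_cpus -= job.cpus
            node.free_gpus -= job.gpus
            self.placements[job.job_id] = node.name

    def release(self, job: Job) -> None:
        node_name = self.placements.pop(job.job_id)
        node = next(n for n in self.nodes if n.name == node_name)
        node.free_cpus += job.cpus
        node.free_gpus += job.gpus
        self.schedule()  # freed resources may unblock queued jobs


if __name__ == "__main__":
    sched = BatchScheduler([Node("node-a", 64, 8), Node("node-b", 32, 4)])
    train_job, eval_job = Job("train", 48, 8), Job("eval", 16, 4)
    sweep_job = Job("sweep", 64, 8)
    for job in (train_job, eval_job, sweep_job):
        sched.submit(job)
    sched.schedule()
    print(sched.placements, [j.job_id for j in sched.queue])
    sched.release(train_job)
    print(sched.placements, [j.job_id for j in sched.queue])
```

In the usage example, "sweep" waits in the queue until "train" releases node-a, which is the resource tracking the paragraph describes.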

While hyperscale, cloud-resident data centers and HPC/AI clusters have a lot in common, the solutions used in hyperscale data centers fall short when trying to address the additional complexity imposed by HPC/AI workloads.

Large data center implementations may scale to thousands of connected compute servers. Those servers run an array of different applications, and traffic patterns shift between east/west (inside the data center) and north/south (in and out of the data center). Because of this variety, each application handles its own reliability, so the network does not need to guarantee delivery of packets to and from application endpoints; these issues are solved with standards-based retransmission or with buffering of traffic to prevent traffic loss.

An HPC/AI workload, on the other hand, is measured by how fast a job is completed, and it interfaces with other machines, so latency and accuracy become far more critical factors. A delayed or lost packet, with or without the resulting retransmission, has a huge impact on the application’s measured performance. In the HPC/AI world, it is the responsibility of the interconnect to make sure these mishaps do not happen, while the application simply “assumes” that it is getting all the information “on-time” and “in-synch” with all the other endpoints it shares the workload with.
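A small simulation shows why a single late or lost packet matters so much. In a synchronous step, the step finishes only when the slowest worker’s data arrives, so one retransmission timeout on one link stalls every worker. The 1,024 workers, the 10 to 14 microsecond latencies and the 1 ms retransmission timeout below are hypothetical values chosen for illustration, not measurements of any particular network.

```python
from typing import Dict, List


def step_completion_us(latencies_us: List[float]) -> float:
    """A synchronous step completes only when the slowest message arrives."""
    return max(latencies_us)


def with_loss(latencies_us: List[float], lost: Dict[int, int],
              rto_us: float) -> List[float]:
    """Add one retransmission timeout per dropped copy of a worker's message.

    lost maps worker index -> number of times that worker's message was dropped.
    """
    return [lat + lost.get(i, 0) * rto_us for i, lat in enumerate(latencies_us)]


if __name__ == "__main__":
    # Hypothetical per-worker network latencies for one step, in microseconds.
    latencies = [10.0 + (i % 5) for i in range(1024)]
    clean = step_completion_us(latencies)
    one_drop = step_completion_us(with_loss(latencies, {7: 1}, rto_us=1000.0))
    print(f"no loss: {clean:.0f} us; one dropped packet: {one_drop:.0f} us")
    # Only 1 of 1024 messages (about 0.1%) was dropped, yet the whole step is
    # more than 70x slower because every worker waits for the retransmission.
```

This is the behavior the paragraph describes: retransmission preserves correctness, but in a synchronized workload its delay is paid by the entire job, not just by one flow.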

More about how data centers use disaggregation and how it benefits HPC/AI follows in the second part of this article (Part II).

Telco Data Center Networking:

Telco data centers/central offices have traditionally been less supportive of deploying disaggregated solutions than hyperscale, cloud-resident data centers. They are characterized by large, monolithic, chassis-based and vertically integrated routers. Every such router is well structured and is in fact a scheduled machine, built to carry packets between every group of ports at a constant latency and without losing any packets. A chassis-based router could potentially be a valid solution for HPC/AI workloads if it could be built at a scale of thousands of ports and distributed throughout a warehouse with ~100 racks filled with servers.

However, some tier 1 telcos, like AT&T, use disaggregated core routing via white box switch/routers and DriveNets Network Cloud (DNOS) software. AT&T’s open disaggregated core routing platform was carrying 52% of the network operator’s traffic at the end of 2022, according to Mike Satterlee, VP of AT&T’s Network Core Infrastructure Services. The company says it is now exploring a path to scale the system to 500 Tbps and then expand to 900 Tbps.

“Being entrusted with AT&T’s core network traffic – and delivering on our performance, reliability and service availability commitments to AT&T– demonstrates our solution’s strengths in meeting the needs of the most demanding service providers in the world,” said Ido Susan, DriveNets founder and CEO. “We look forward to continuing our work with AT&T as they continue to scale their next-gen networks.”

Satterlee said AT&T is running a nearly identical architecture in its core and edge environments, though the edge system runs Cisco’s disaggregated software. Cisco and DriveNets have both been active parts of AT&T’s disaggregation process, although DriveNets’ earlier push has given it more maturity than Cisco.

“DriveNets really came in as a disruptor in the space,” Satterlee said. “They don’t sell hardware platforms. They are a software-based company and they were really the first to do this right.”

AT&T began running some of its network backbone on DriveNets core routing software in September 2020. At that time, the vendor said it expected to be supporting all of AT&T’s traffic through its system by the end of 2022.

Attributes of an AI Cluster:

Artificial intelligence is a general term for the ability of computers to run logic that emulates the thinking patterns of a biological brain. The fact is that humanity has yet to understand “how” a biological brain behaves: how memories are stored and accessed, why different people have different capacities and/or memory malfunctions, how conclusions are deduced and why they differ between individuals, and how actions are decided in split-second decisions. All this and more is being observed by science but not yet understood to a level where it can be related to an explicit cause.

As compute capacity evolved, it became possible to create a computing function that can factor in large data sets. The field of AI focuses on identifying such data sets and their resulting outcomes in order to train the compute function with as many conclusion points as possible. The compute function is then required to identify patterns within these data sets to predict the outcome of new data sets it has not encountered before. This is not the most accurate description of AI (it is a lot more than this), but it is sufficient to explain why networks built to run AI workloads differ from the regular data center networks mentioned earlier.

Some example attributes of AI networking are listed here:

- Parallel computing – AI workloads run on a unified infrastructure of multiple machines executing the same application and the same computation task

- Size – a single task can span thousands of compute engines (e.g., GPUs, CPUs, FPGAs)

- Job types – tasks vary in size, run duration, the size and number of data sets they need to consider, the type of answer they need to generate, etc. Together with the different languages used to code applications and the types of hardware they run on, this contributes to a growing variance of traffic patterns within a network built for running AI workloads

- Latency & Jitter – some AI workloads produce a response that a user is waiting for. In such cases, job completion time is a key factor for user experience, which makes latency important. However, since such parallel workloads run over multiple machines, the latency is dictated by the slowest machine to respond. This means that while latency is important, jitter (latency variation) contributes just as much to achieving the required job completion time

- Lossless – following on from the previous point, a response that arrives late delays the entire application. Whereas in a traditional data center a dropped message results in a retransmission (which often goes unnoticed), in an AI workload a dropped message means that the entire computation is either wrong or stuck. For this reason, networks that run AI workloads require lossless behavior. IP networks are lossy by nature, so certain additions must be applied for an IP network to behave as lossless. This will be discussed in a follow-up to this paper.

- Bandwidth – AI data sets are large, so high volumes of traffic need to flow in and out of servers for the application to feed on. In modern deployments, AI and other high-performance computing functions are reaching interface speeds of 400 Gbps per compute engine.
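The Latency & Jitter point can be illustrated with a Monte Carlo sketch. Two hypothetical interconnects have the same average latency, but one has more variation; because a parallel step waits for the slowest of N workers, the higher-jitter network finishes each step noticeably later, and the gap widens as N grows. The uniform latency distribution, the 10 microsecond mean, the jitter values and the worker counts are all assumptions for illustration only.

```python
import random
import statistics


def mean_step_time(n_workers: int, mean_us: float, jitter_us: float,
                   trials: int = 1000, seed: int = 1) -> float:
    """Average completion time of a synchronous step over many trials.

    Each worker's latency is drawn uniformly from mean_us +/- jitter_us, so
    every network in the comparison has the same average latency.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(trials):
        latencies = (rng.uniform(mean_us - jitter_us, mean_us + jitter_us)
                     for _ in range(n_workers))
        samples.append(max(latencies))  # the step waits for the slowest worker
    return statistics.fmean(samples)


if __name__ == "__main__":
    for n in (8, 64, 512):
        low = mean_step_time(n, mean_us=10.0, jitter_us=1.0)
        high = mean_step_time(n, mean_us=10.0, jitter_us=5.0)
        print(f"{n:>4} workers: low jitter {low:.2f} us, "
              f"high jitter {high:.2f} us")
```

With many workers, the expected step time approaches the mean plus the full jitter bound, so reducing variation pays off even when average latency is unchanged.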

The conclusion that follows from these attributes is that a network purposed to run AI workloads differs from a traditional data center network in that it needs to operate “in-synch.”

There are several such “in-synch” solutions available. The main options are chassis-based solutions, standalone Ethernet solutions, and proprietary locked solutions. Their key advantages and deficiencies will be briefly described in Part II of this article.

Conclusions:

There are a few differences between AI and HPC workloads, and these differences translate into different requirements for the interconnect used to build such massive computation machines.

While the HPC market finds proprietary implementations of interconnect solutions acceptable for building secluded supercomputers for specific uses, the AI market requires solutions that allow more flexibility in their deployment and vendor selection.

AI workloads have a greater variety of consumers of the compute cluster’s output, which makes job completion time the primary metric for measuring the efficiency of the interconnect. However, unlike HPC, where faster is always better, some AI consumers will only detect improvements up to a certain level, which gives interconnect jitter a higher impact than latency.

Traditional solutions perform reasonably up to the scale of a single machine (either standalone or chassis) but fail to scale beyond a single interconnect machine while keeping the performance required by the running workloads. Further conclusions and the merits of the possible solutions will be discussed in a follow-up article.

………………………………………………………………………………………………………………………………………………………………………………..

About DriveNets:

DriveNets is a fast-growing software company that builds networks like clouds. It offers communications service providers and cloud providers a radical new way to build networks, detaching network growth from network cost and increasing network profitability.

DriveNets Network Cloud uniquely supports the complete virtualization of network and compute resources, enabling communication service providers and cloud providers to meet increasing service demands much more efficiently than with today’s monolithic routers. DriveNets’ software runs over standard white-box hardware and can easily scale network capacity by adding additional white boxes into physical network clusters. This unique disaggregated network model enables the physical infrastructure to operate as a shared resource that supports multiple networks and services. This network design also allows faster service innovation at the network edge, supporting multiple service payloads, including latency-sensitive ones, over a single physical network edge.

References:

https://drivenets.com/resources/events/nfdsp1-drivenets-network-cloud-and-serviceagility/

https://www.run.ai/guides/hpc-clusters/hpc-and-ai

https://drivenets.com/news-and-events/press-release/drivenets-network-cloud-now-carries-more-than-52-of-atts-core-production-traffic/

https://techblog.comsoc.org/2023/01/27/att-highlights-5g-mid-band-spectrum-att-fiber-gigapower-joint-venture-with-blackrock-disaggregation-traffic-milestone/

AT&T Deploys Dis-Aggregated Core Router White Box with DriveNets Network Cloud software

DriveNets Network Cloud: Fully disaggregated software solution that runs on white boxes

Equinix to deploy Nokia’s IP/MPLS network infrastructure for its global data center interconnection services

Today, Nokia announced that Equinix will deploy a new Nokia IP/MPLS network infrastructure to support its global interconnection services. As one of the largest data center and colocation providers, Equinix currently runs services on multiple networks from multiple vendors. With the new network, Equinix will be able to consolidate into one, efficient web-scale infrastructure to provide FP4-powered connectivity to all data centers – laying the groundwork for customers to deploy 5G networks and services.

Muhammad Durrani, Director of IP Architecture for Equinix, said, “We see tremendous opportunity in providing our customers with 5G services, but this poses special demands for our network, from ultra-low latency to ultra broadband performance, all with business- and mission-critical reliability. Nokia’s end-to-end router portfolio will provide us with the highly dynamic and programmable network fabric we need, and we are pleased to have the support of the Nokia team every step of the way.”

“We’re pleased to see Nokia getting into the data center networking space and applying the same rigor to developing a next-generation open and easily extendible data center network operating system while leveraging its IP routing stack that has been proven in networks globally. It provides a platform that network operations teams can easily adapt and build applications on, giving them the control they need to move fast.”

Sri Reddy, Co-President of IP/Optical Networks, Nokia, said, “We are working closely with Equinix to help advance its network and facilitate the transformation and delivery of 5G services. Our end-to-end portfolio was designed precisely to support this industrial transformation with a highly flexible, scalable and programmable network fabric that will be the ideal platform for 5G in the future. It is exciting to work with Equinix to help deliver this to its customers around the world.”

With an end-to-end portfolio, including the Nokia FP4-powered routing family, Nokia is working in partnership with operators to deliver real 5G. The FP4 chipset is the industry’s leading network processor for high-performance routing, setting the bar for density and scale. Paired with Nokia’s Service Router Operating System (SR OS) software, it will enable Equinix to offer additional capabilities driven by routing technologies such as Ethernet VPNs (EVPNs) and segment routing (SR).

Image Credit: Nokia

……………………………………………………………………………………………………………………………………………………………………………….

This latest deal comes just two weeks after Equinix said it will host Nokia’s Worldwide IoT Network Grid (WING) service in its data centers. WING is an Infrastructure-as-a-Service offering that provides low latency and global reach to businesses, speeding their IoT deployments and their use of solutions offered at the edge and in the cloud.

Equinix operates more than 210 data centers across 55 markets. It is unclear which of these data centers will first offer Nokia’s services and when WING will be available to customers.

“Nokia needed access to multiple markets and ecosystems to connect to NSPs and enterprises who want a play in the IoT space,” said Jim Poole, VP at Equinix. “By directly connecting to Nokia WING, mobile network operators can capture business value across IoT, AI, and security, with a connectivity strategy to support business transformation.”

…………………………………………………………………………………………………………………………………………………………..

About Nokia:

We create the technology to connect the world. Only Nokia offers a comprehensive portfolio of network equipment, software, services and licensing opportunities across the globe. With our commitment to innovation, driven by the award-winning Nokia Bell Labs, we are a leader in the development and deployment of 5G networks.

Our communications service provider customers support more than 6.4 billion subscriptions with our radio networks, and our enterprise customers have deployed over 1,300 industrial networks worldwide. Adhering to the highest ethical standards, we transform how people live, work and communicate. For our latest updates, please visit us online www.nokia.com and follow us on Twitter @nokia.

NeoPhotonics demonstrates 90 km 400ZR transmission in 75 GHz DWDM channels enabling 25.6 Tbps per fiber

NeoPhotonics completed experimental verification of the transmission of 400 Gbps data over a data center interconnect (DCI) link in 75 GHz-spaced Dense Wavelength Division Multiplexing (DWDM) channels.

NeoPhotonics achieved two milestones using its interoperable pluggable 400ZR [1.] coherent modules and its specially designed athermal arrayed waveguide grating (AWG) multiplexers (MUX) and de-multiplexers (DMUX).

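Note 1. ZR stands for extended reach. The designation originated with 10 Gbps optics that reach 80 km over single-mode fiber using 1550 nm lasers; 400ZR is the Optical Internetworking Forum (OIF) implementation agreement for interoperable 400 Gbps coherent DWDM modules aimed at data center interconnect links on the order of 80 km.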

- Data rate per channel increases from today’s non-interoperable 100Gbps direct-detect transceivers to 400Gbps interoperable coherent 400ZR modules.

- The current DWDM infrastructure can be increased from 32 channels of 100 GHz-spaced DWDM signals to 64 channels of 75 GHz-spaced DWDM signals.

- The total DCI fiber capacity can thus be increased from 3.2 Tbps (100 Gbps/ch. x 32 ch.) to 25.6 Tbps (400 Gbps/ch. x 64 ch.), an eightfold increase in total capacity.
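The capacity figures in the list above follow from channel count times per-channel rate. The sketch below recomputes them from the 32-channel, 100 GHz baseline and the 64-channel, 75 GHz plan, and also derives two quantities the source does not state: the optical spectrum occupied by each channel grid and a rough spectral efficiency (per-channel bit rate divided by channel spacing). The `DwdmPlan` class is a hypothetical helper for the arithmetic only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DwdmPlan:
    name: str
    channels: int
    spacing_ghz: float
    gbps_per_channel: float

    @property
    def capacity_tbps(self) -> float:
        return self.channels * self.gbps_per_channel / 1000

    @property
    def spectrum_thz(self) -> float:
        # Total optical bandwidth occupied by the channel grid.
        return self.channels * self.spacing_ghz / 1000

    @property
    def spectral_efficiency(self) -> float:
        # Bits per second carried per hertz of channel spacing.
        return self.gbps_per_channel / self.spacing_ghz


legacy = DwdmPlan("100G direct-detect", channels=32, spacing_ghz=100,
                  gbps_per_channel=100)
zr = DwdmPlan("400ZR coherent", channels=64, spacing_ghz=75,
              gbps_per_channel=400)

for plan in (legacy, zr):
    print(f"{plan.name}: {plan.capacity_tbps:.1f} Tbps over "
          f"{plan.spectrum_thz:.1f} THz, {plan.spectral_efficiency:.2f} b/s/Hz")
print(f"capacity ratio: {zr.capacity_tbps / legacy.capacity_tbps:.0f}x")
```

The arithmetic shows where the eightfold gain comes from: spectral efficiency rises from 1 to about 5.3 b/s/Hz, and the 64-channel grid also occupies 4.8 THz of spectrum versus 3.2 THz for the 32-channel baseline.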

NeoPhotonics said its technology overcomes multiple challenges in transporting 400ZR signals within 75 GHz-spaced DWDM channels.

The filters used in NeoPhotonics MUX and DMUX units are designed to limit ACI [2.] while at the same time having a stable center frequency against extreme temperatures and aging.

Note 2. ACI stands for adjacent channel interference: crosstalk from neighboring DWDM channels leaking into the channel of interest, which becomes harder to suppress as channel spacing narrows.

NeoPhotonics has demonstrated 90km DCI links using three in-house 400ZR pluggable transceivers with their tunable laser frequencies tuned to 75GHz spaced channels, and a pair of passive 75GHz-spaced DWDM MUX and DMUX modules designed specifically for this application. The optical signal-to-noise ratio (OSNR) penalty due to the presence of the MUX and DMUX and the worst-case frequency drifts of the lasers, as well as the MUX and DMUX filters, is less than 1dB. The worst-case component frequency drifts were applied to emulate the operating conditions for aging and extreme temperatures, the company said in a press release.
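Tuning lasers to 75 GHz-spaced channels means choosing center frequencies on a 75 GHz grid. The sketch below assumes the ITU-T G.694.1 flexible DWDM grid convention (nominal central frequency of 193.1 THz + n × 6.25 GHz) and anchors the plan at 193.1 THz; the source does not give NeoPhotonics’ actual channel plan, so the anchor, the starting index and the channel count are hypothetical. Each frequency is also converted to a vacuum wavelength with λ = c/f.

```python
C_M_PER_S = 299_792_458  # speed of light in vacuum, m/s

ANCHOR_THZ = 193.1     # G.694.1 reference frequency
FLEX_STEP_GHZ = 6.25   # G.694.1 flexible-grid central-frequency granularity


def channel_plan(first_n: int, count: int, spacing_ghz: float = 75.0):
    """Return (n, frequency_THz, wavelength_nm) for evenly spaced channels.

    The spacing must be a whole multiple of the 6.25 GHz step so that every
    center frequency lands on the flexible grid.
    """
    step = spacing_ghz / FLEX_STEP_GHZ
    if step != int(step):
        raise ValueError("spacing must be a multiple of 6.25 GHz")
    plan = []
    for k in range(count):
        n = first_n + k * int(step)
        f_thz = ANCHOR_THZ + n * FLEX_STEP_GHZ / 1000
        wavelength_nm = C_M_PER_S / (f_thz * 1e12) * 1e9
        plan.append((n, f_thz, wavelength_nm))
    return plan


if __name__ == "__main__":
    for n, f, wl in channel_plan(first_n=0, count=4):
        print(f"n={n:>3}  {f:.4f} THz  {wl:.3f} nm")
```

On this grid, adjacent 75 GHz channels are 12 grid steps apart; any laser or filter drift away from these centers eats into the margin against adjacent channel interference, which is why the worst-case drift testing above matters.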

“The combination of compact 400ZR silicon photonics-based pluggable coherent transceiver modules with specially designed 75 GHz channel spaced multiplexers and de-multiplexers can greatly increase the bandwidth capacity of optical fibers in a DCI application and consequently greatly decrease the cost per bit,” said Tim Jenks, Chairman and CEO of NeoPhotonics. “These 400ZR coherent techniques pack 400Gbps of data into a 75 GHz wide spectral channel, placing stringent requirements on the multiplexers and de-multiplexers. We are uniquely able to meet these requirements because we do both design and fabrication of planar lightwave circuits and we have 20 years of experience addressing the most challenging MUX/DMUX applications,” concluded Mr. Jenks.

About NeoPhotonics

NeoPhotonics is a leading developer and manufacturer of lasers and optoelectronic solutions that transmit, receive and switch high-speed digital optical signals for Cloud and hyper-scale data center internet content provider and telecom networks. The Company’s products enable cost-effective, high-speed over distance data transmission and efficient allocation of bandwidth in optical networks. NeoPhotonics maintains headquarters in San Jose, California and ISO 9001:2015 certified engineering and manufacturing facilities in Silicon Valley (USA), Japan and China. For additional information visit www.neophotonics.com.

References: