Dell’Oro Group: RAN Market Grows Outside of China in 2Q 2025

Following two years of steep declines, initial estimates by Dell’Oro Group reveal that total RAN revenues—including baseband, radio hardware, and software, excluding services—advanced for a third consecutive quarter outside of China in 2Q 2025.

“Our initial assessment confirms that the narrative we’ve been discussing for some time is now coming to fruition. Market conditions have continued to stabilize, resulting in growth for three consecutive quarters outside of China,” said Stefan Pongratz, Vice President of RAN market research at the Dell’Oro Group. “However, broader market sentiment remains subdued, and a rapid rebound is not anticipated. The industry acknowledges that short-term fluctuations are unlikely to alter the market’s generally flat long-term trajectory,” Pongratz added.

Additional highlights from the 2Q 2025 RAN report:

- Growth in Europe, as well as the Middle East and Africa, nearly offset declines in the Caribbean and Latin America, as well as the Asia Pacific region.

- RAN vendor dynamics are gradually shifting, driven by three major trends: the strong are getting stronger, laggards are not improving, and the market is becoming increasingly divided.

- Ericsson and Huawei together accounted for more than 60 percent of the 1H25 market in North America and China, respectively.

- The top 5 RAN suppliers, based on worldwide revenues for the trailing four quarters, are Huawei, Ericsson, Nokia, ZTE, and Samsung.

- The short-term outlook remains unchanged, with total RAN expected to stabilize in 2025.

For sure, RAN is not a growth market (+1% CAGR between 2000 and 2023). However, underneath that flattish topline over time, RAN revenues fluctuate significantly as new spectrum/technologies become available. After a massive RAN surge between 2017 and 2021, RAN revenues declined sharply in 2023 and the fundamental question now is fairly straightforward – how will the slowdown in mobile data traffic impact the RAN market over the next five years? The constantly changing and increasingly demanding end-user expectations in combination with the search for growth present opportunities and challenges for incumbent RAN suppliers and new entrants.
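To put the "+1% CAGR" figure in perspective, the short sketch below compounds a constant annual growth rate over the period. It is only an illustration: the function names are hypothetical, the rate is the one quoted above, and it assumes 23 annual compounding periods between 2000 and 2023.

```python
def cumulative_growth(cagr: float, years: int) -> float:
    """Return the end/start revenue ratio implied by a constant annual growth rate."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Inverse relationship: the constant annual rate that turns `start` into `end`."""
    return (end / start) ** (1.0 / years) - 1.0


# Figure from the text: +1% CAGR between 2000 and 2023 (23 annual periods assumed).
ratio = cumulative_growth(0.01, 2023 - 2000)
print(f"Cumulative growth over the period: {ratio - 1:.1%}")
```

Under these assumptions, a 1% CAGR compounds to roughly a quarter more revenue over the whole period, which is why the topline reads as flattish even though individual years swing sharply.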

………………………………………………………………………………………………………………………………………………………………………………………………

Huawei’s ability to sustain growth during a period of industry volatility can be attributed to several key factors:

- Strong Presence in China: Huawei maintains a commanding position in its home market, which remains one of the largest and most competitive globally. Despite external pressures and restrictions, its domestic strength provides stability and scale.

- Expanding Global Footprint: Growth in regions such as Europe, the Middle East, and Africa helped Huawei offset weaker performance in Asia Pacific, the Caribbean, and Latin America. These markets have been central to Huawei’s strategy of diversifying its global presence.

- Technological Advancements in 5G: Huawei has continued to invest heavily in 5G RAN innovation, leveraging advanced radio hardware, AI-driven network optimization, and energy-efficient base stations. These capabilities strengthen its competitive edge in delivering cost-effective and high-performance solutions.

- Resilient Business Strategy: Despite global challenges, including regulatory restrictions in certain markets, Huawei has adapted by strengthening local partnerships, investing in regional ecosystems, and optimizing supply chain resilience.

………………………………………………………………………………………………………………………………………………………………………………………………….

According to a recent Omdia report, Ericsson is the top RAN vendor in both business performance and portfolio strength in 2025, thanks in part to its energy-efficient products, comprehensive support across radio technologies, and Open RAN–ready offerings.

Ericsson also continues expanding its enterprise solutions, with integrated strategies that include private 5G, Cradlepoint, and cloud-native cores. In India, Ericsson signed a multi-billion-dollar 4G/5G equipment deal with Bharti Airtel to enhance network coverage using Open RAN-ready solutions.

Nokia is actively replacing Huawei in key European deployments, securing a major Open RAN contract to supply Deutsche Telekom across 3,000 German sites. In the U.S., Nokia signed a multi-year deal with AT&T to provide cloud-based voice core and 5G network automation solutions powered by AI/ML. Together, these modernization and automation contracts are helping Nokia gain ground in both regions. Samsung is leveraging Open RAN partnerships for a comeback, and overall vendor competition is shaped by technology shifts toward cloud-native, AI-enabled, and multi-vendor architectures.

Samsung is stepping up in the Open RAN ecosystem — as illustrated by a successful joint demonstration between Samsung, Vodafone, and AMD showcasing a full Open RAN voice call using AMD processors and Samsung’s O-RAN vRAN software. Despite its RAN equipment revenues falling 25% in 2024, Samsung remains well positioned in Europe and Africa, particularly in Vodafone tenders for replacing Huawei, which may drive recovery through expanded vRAN/Open RAN adoption.

In summary, the global RAN market is stabilizing after a steep downturn in 2024. Huawei holds steady in core markets like China and parts of Europe, while Ericsson leads globally on portfolio strength and new deals — particularly Open RAN and enterprise solutions.

………………………………………………………………………………………………………………………………………………………………………………………………………

References:

RAN Market Grows Outside of China, According to Dell’Oro Group

https://telecomlead.com/telecom-equipment/huawei-achieves-growth-in-global-ran-market-amid-industry-stabilization-122275

Dell’Oro: AI RAN to account for 1/3 of RAN market by 2029; AI RAN Alliance membership increases but few telcos have joined

Omdia: Huawei increases global RAN market share due to China hegemony

Network equipment vendors increase R&D; shift focus as 0% RAN market growth forecast for next 5 years!

vRAN market disappoints – just like OpenRAN and mobile 5G

Mobile Experts: Open RAN market drops 83% in 2024 as legacy carriers prefer single vendor solutions

Dell’Oro: Global RAN Market to Drop 21% between 2021 and 2029

Ericsson CEO’s strong statements on 5G SA, WRC 27, and AI in networks

At the Technology Policy Institute Forum in Aspen, Colorado this week, Ericsson CEO Börje Ekholm made many comments about “The Future of Wireless & Global Connectivity.” To begin with, he said it’s super critical for western nations, including the U.S., to increase their 5G Stand Alone (SA) network deployments. 5G network operators need 5G SA to take full advantage of the platform to support apps and services that are optimized for low latency, higher uplink prioritization and network slicing. “It’s hard to monetize something you don’t have,” Ekholm said. “The network has to be built for 5G SA.”

Ekholm’s 5G SA comments echo those of Magnus Ewerbring, Ericsson’s chief technology officer – Asia Pacific, who strongly asserted that 5G SA is the way for wireless network operators to monetize and differentiate their 5G networks.

Ekholm noted that China has prioritized 5G SA and has more than 4 million base stations deployed, estimating that this represents about ten times what’s been deployed in the US. China has been able to monetize that by supporting advanced robotics and automation in tens of thousands of factories. China is “highly competitive,” has “enormous scale, domestically,” and has made 5G SA a priority, the Ericsson CEO said. Western countries need to take China’s 5G SA efforts “seriously” and invest more in their wireless infrastructure as it’s a competitive imperative.

Status of 5G SA network deployments:

A recent Heavy Reading (now part of Omdia) operator survey found that 35% of respondents said they have deployed 5G SA, with 20% expecting to be live by year-end. Some 41% cited “new or better services” as the primary driver for 5G core investment.

After a very slow start during the past five years, Téral Research says the migration to 5G SA has increased. Of the total 354 commercially available 5G public networks reported at the end of 1Q25, 74 are 5G SA – up from 49 one year ago. This growth is being driven by the success of fixed wireless access (FWA), a wider range of 5G SA-compatible devices, and the rise of voice over new radio (VoNR). Téral is also seeing increased adoption of private cloud for SA core deployments, with data sovereignty concerns shaping CSP strategies. Network slicing, which requires 5G SA, is moving from theory to practice—now extending to critical use cases like military applications.
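As a quick check on the Téral Research numbers above, the following sketch computes the 5G SA share of commercial 5G networks and the year-over-year change in the SA count. The helper names are hypothetical; only the three counts come from the text. The share one year ago is not computed because the total network count for that date is not given.

```python
def share(part: int, total: int) -> float:
    """Fraction of `total` represented by `part`."""
    return part / total


def relative_change(old: int, new: int) -> float:
    """Relative change from `old` to `new` (0.5 means +50%)."""
    return (new - old) / old


# Counts quoted in the text (end of 1Q25 and one year earlier).
total_5g_networks = 354
sa_networks_now = 74
sa_networks_year_ago = 49

sa_share = share(sa_networks_now, total_5g_networks)
sa_growth = relative_change(sa_networks_year_ago, sa_networks_now)

print(f"5G SA share of commercial 5G networks: {sa_share:.1%}")
print(f"Year-over-year growth in 5G SA networks: {sa_growth:.1%}")
```

On these figures, roughly one in five commercial 5G networks is standalone, while the number of SA networks grew by about half in a year.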

3GPP URLLC specifications are still not finalized and approved:

It should be noted that the 3GPP specifications for URLLC (Ultra-Reliable Low-Latency Communication) in the 5G SA core network and 5G NR access network are not considered 100% completed or finalized. URLLC relies on both the 5G NR (Radio Access Network) and the 5G Core network to achieve its goals. URLLC is vital for various industrial applications requiring real-time control and automation, such as the Industrial Internet of Things (IIoT), virtual reality, and autonomous vehicles.

3GPP Release 16 introduced significant enhancements for URLLC in the 5G New Radio (NR) access and 5G Core network. While Release 16 was “frozen” in July 2020, work on URLLC enhancements, particularly in the Radio Access Network (RAN), was not fully completed. These enhancements are crucial for 3GPP NR to meet the ITU-R M.2410 minimum performance requirements for URLLC for ultra-high reliability and ultra-low latency.

3GPP Technical Specifications (TS) and Technical Reports (TR) become “official” standards when transposed into corresponding publications of the 3GPP Organizational Partner (like ETSI) or the standards body (ITU-R) acting as publisher for the Partner (ATIS for ITU-R). Once a Release is frozen (see definition in TR 21.900) and all work items completed, 3GPP specifications are officially transposed and published by the Organizational Partners, as a part of their standards series.

………………………………………………………………………………………………………………………………………………………………………..

Ekholm also said that strong western representation at ITU-R’s WRC-27 “is critically important.” That’s because licensed spectrum is likewise critical for the next generation of automation, self-driving vehicles and AI applications that will require a “truly reliable” and low-latency network, he added without mentioning the incomplete 3GPP URLLC specs.

“AI is the most fundamental technology we’ve seen so far,” he said. Ericsson has already been able to generate a 10% boost in spectrum efficiency using AI tools. While AI will no doubt erase some jobs, he’s also optimistic it will create new ones. Like so many analysts, Ekholm expects Gen AI to drive more traffic and new capabilities. “The criticality of the connectivity layer will become even more important,” he added.

References:

https://www.lightreading.com/5g/ericsson-ceo-calls-for-bigger-push-toward-5g-sa

https://www.tpiaspenforum.tech/agenda

Ericsson reports ~flat 2Q-2025 results; sees potential for 5G SA and AI to drive growth

Ookla: Uneven 5G deployment in Europe, 5G SA remains sluggish; Ofcom: 28% of UK connections on 5G with only 2% 5G SA

Ookla: Europe severely lagging in 5G SA deployments and performance

Téral Research: 5G SA core network deployments accelerate after a very slow start

Vision of 5G SA core on public cloud fails; replaced by private or hybrid cloud?

Latest Ericsson Mobility Report talks up 5G SA networks and FWA

3GPP Release 16 5G NR Enhancements for URLLC in the RAN & URLLC in the 5G Core network

Lumen deploys 400G on a routed optical network to meet AI & cloud bandwidth demands

Lumen is actively expanding its 400G optical network to support growing demands for high-bandwidth services, particularly for AI and cloud applications. This expansion includes deploying 400G connectivity in key markets and enhancing its Ultra-Low Loss (ULL) fiber network, the largest in North America. Lumen has deployed 400G in over a dozen markets, enabling faster speeds for accessing cloud services and third-party applications, according to SDxCentral. The initial rollout includes major markets like Atlanta, Chicago, Dallas, Denver, Kansas City, Las Vegas, Los Angeles, Minneapolis, New York City, Phoenix, and Seattle.

Lumen’s 400G network provides faster speeds for accessing third-party applications, cloud on-ramps, and various on-demand services. 400G connectivity is available through Lumen’s Ethernet On-Demand, Internet On-Demand, E-Line, E-LAN, and E-Access services. Jeff Ary, VP of product management at Lumen, explained that those combinations of services offer their customers flexibility in selecting how they consume the new capabilities, including through Lumen’s network-as-a-service (NaaS) model.

“We have customers that still want a one-year term in a fixed amount that they pay monthly. We have others that are really liking the NaaS, where they can turn it up, turn it down. But this supports all those customers, whether they want fixed bandwidth speed, fixed monthly amount, or if they want fixed and be able to increase and decrease through our NaaS portfolio, it’ll support that also,” Ary said. Ary explained that the move builds on Lumen’s agreement last year with Corning to secure 10% of that vendor’s global fiber production capacity over the next two years. “That helped us optimize how we run our optical network,” Ary said, and helped push Lumen’s 400G network reach to more than 90,000 route miles. “That enabled us to put our layer-two network on top of that, to have up to 400-gig on our Ethernet network, which then, of course, helps our IP network as well,” he added.

Lumen is working with multiple vendors for its 400G deployment, including Cisco for the routed optical network, Juniper for the routers in the data centers, Ciena for long-haul optical transport and Corning for the fiber itself. Lumen is also using pluggable optic modules, and as demand increases the company can change out the optical pluggable modules from 400G to 800G or 1.6T, as needed. Lumen said in February that it would use Ciena’s WaveLogic 6 Extreme (WL6e) 1.6 Tb/s coherent transceiver to support increased demand for running AI workloads, and in May Lumen announced it is working with Corning for a fiber buildout in western North Carolina to expand network capacity in light of the AI boom.

Dave Ward, CTO and product officer for Lumen, told Light Reading that the wireline network service provider is delivering Internet Protocol (IP) and Ethernet services built on a routed optical network, “taking advantage of all the bandwidth and capacity we have in our fiber network,” instead of using a hub and spoke model where a centralized hub acts as the network core. Lumen is providing the network to transport customers’ data to the locations where they want to train AI or develop inference workloads, said Ward. While Lumen’s network already connects to over 2,200 data centers, this launch provides a 400G upgrade and integration with Lumen Digital, Lumen’s on-demand IP and Ethernet services, according to Ward.

“With a routed optical network, we get orders of magnitude improvement on capacity that we can now route across our fiber wherever we have fiber available, and it’s two to three orders of magnitude lower cost to deliver a bit,” Ward said. “We’re really trying to build that cloud core and really make that accessible. It’s really building out those cloud core pieces, and lowering friction and having bandwidth, latency and redundancy engineered paths for our customers,” he added.

“We are partnering not only with the data center operators, but also with the hyperscalers to improve the speeds and access to all of those locations where our customers have their workloads and where they want their workloads and data to be,” said Ward.

Lumen’s overall network provides connectivity to the major cloud providers and 163,000 on-net customer locations. By 2028, Lumen plans to extend its network to 47 million intercity fiber miles.

References:

https://www.sdxcentral.com/news/lumen-lights-400g-connections-to-support-ai-naas-demand/

https://www.lumen.com/en-us/solutions/use-case/artificial-intelligence.html

Lumen and Ciena Transmit 1.2 Tbps Wavelength Service Across 3,050 Kilometers

Analysts weigh in: AT&T in talks to buy Lumen’s consumer fiber unit – Bloomberg

Lumen Technologies to connect Prometheus Hyperscale’s energy efficient AI data centers

Microsoft choses Lumen’s fiber based Private Connectivity Fabric℠ to expand Microsoft Cloud network capacity in the AI era

Lumen, Google and Microsoft create ExaSwitch™ – a new on-demand, optical networking ecosystem

ACSI report: AT&T, Lumen and Google Fiber top ranked in fiber network customer satisfaction

RtBrick survey: Telco leaders warn AI and streaming traffic to “crack networks” by 2030

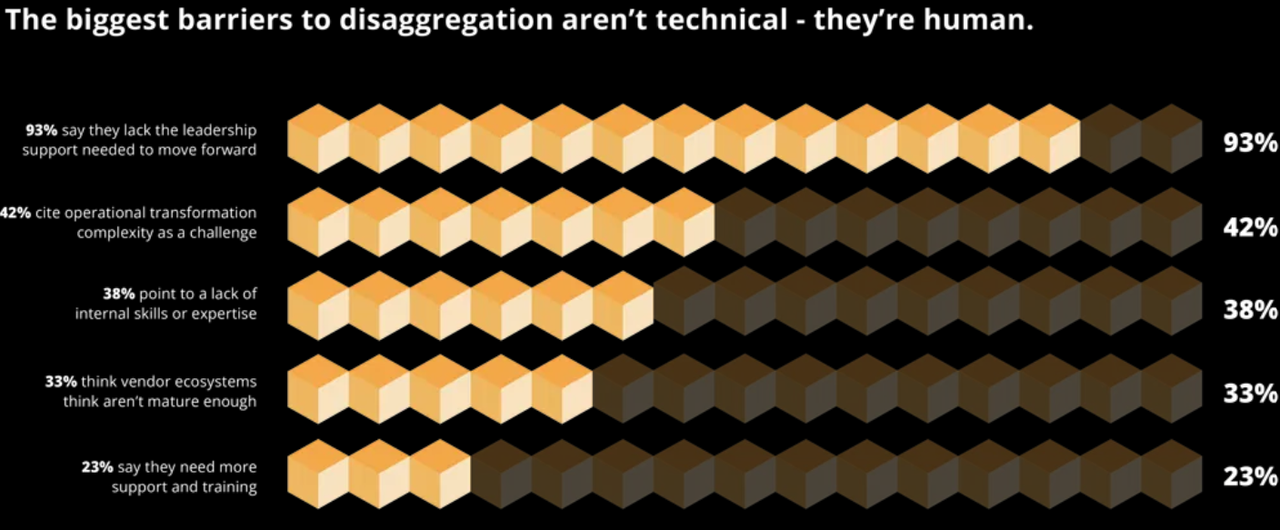

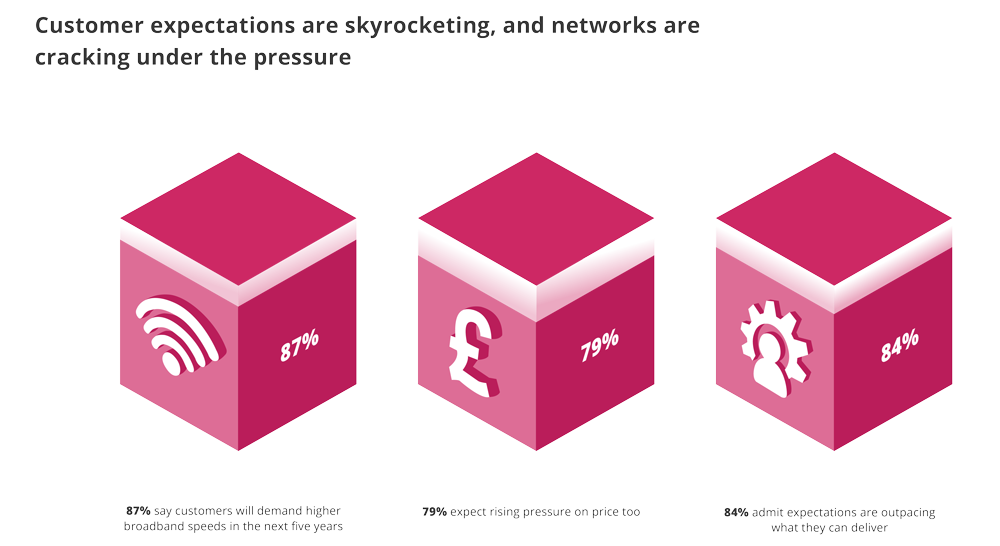

An RtBrick survey of 200 senior telecom decision makers in the U.S., UK, and Australia finds that network operator leaders are failing to make key decisions and lack the motivation to change. The report exposes urgent warnings from telco engineers that their networks are on a five-year collision course with AI and streaming traffic. It finds that 93% of respondents report a lack of support from leadership to deploy disaggregated network equipment. Key findings:

- Risk-averse leadership and a lack of skills are the top factors that are choking progress.

- Majority are stuck in early planning, while AT&T, Deutsche Telekom, and Comcast lead large-scale disaggregation rollouts.

- Operators anticipate higher broadband prices but fear customer backlash if service quality can’t match the price.

- Organizations require more support from leadership to deploy disaggregation (93%).

- Complexity around operational transformation (42%), such as redesigning architectures and workflows.

- Critical shortage of specialist skills/staff (38%) to manage disaggregated systems.

The survey finds that almost nine in ten operators (87%) expect customers to demand higher broadband speeds by 2030, while nearly eight in ten (79%) state their customers expect costs to increase, suggesting they will pay more for it. Yet half of all leaders (49%) admit they lack complete confidence in delivering services at a viable cost. Eighty-four percent say customer expectations for faster, cheaper broadband are already outpacing their networks, while 81% concede their current architectures are not well-suited to handling the future increases in bandwidth demand, suggesting they may struggle with the next wave of AI and streaming traffic.

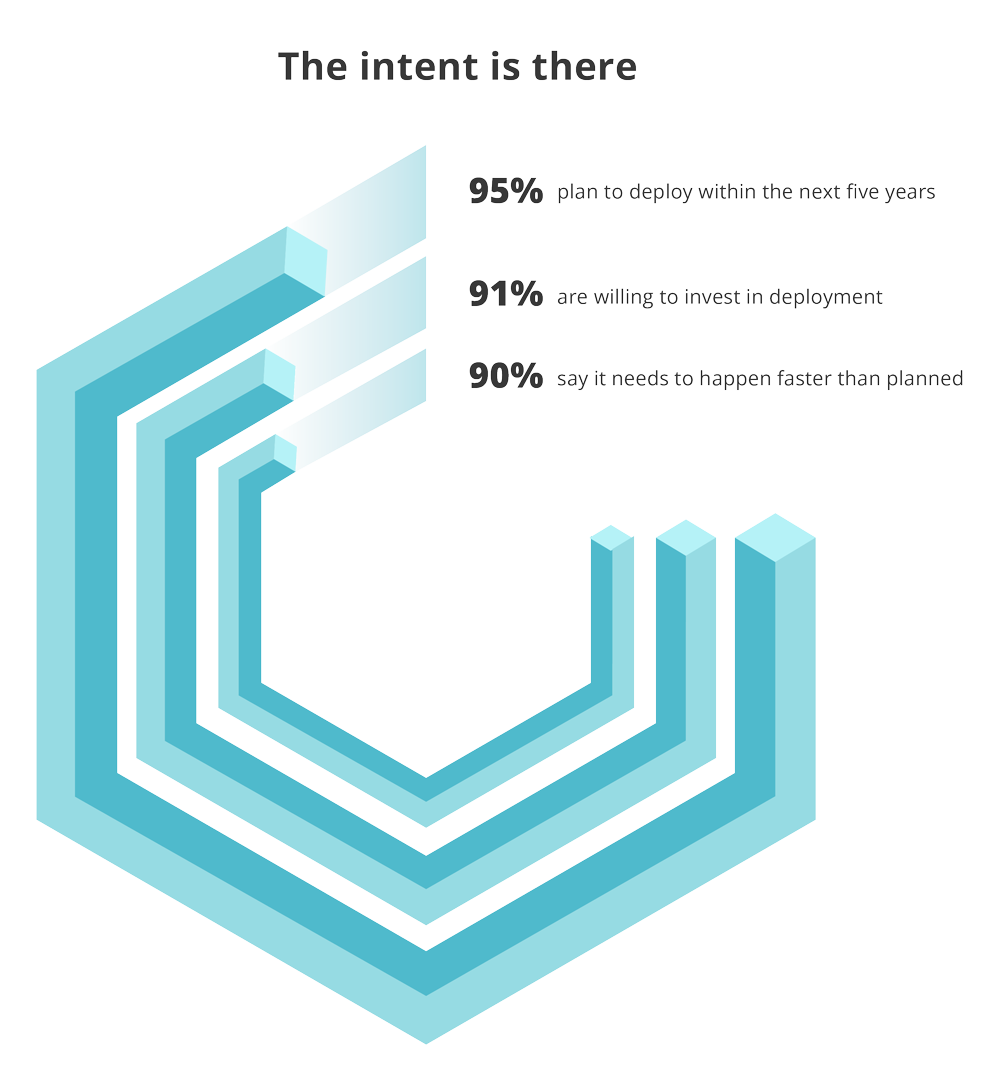

“Senior leaders, engineers, and support staff inside operators have made their feelings clear: the bottleneck isn’t capacity, it’s decision-making,” said Pravin S Bhandarkar, CEO and Founder of RtBrick. “Disaggregated networks are no longer an experiment. They’re the foundation for the agility, scalability, and transparency operators need to thrive in an AI-driven, streaming-heavy future,” he added, noting the intent to deploy disaggregation as per this figure:

However, execution continues to trail ambition. Only one in twenty leaders has confirmed they’re “in deployment” today, while 49% remain stuck in early-stage “exploration”, and 38% are still “in planning”. Meanwhile, big-name operators such as AT&T, Deutsche Telekom, and Comcast are charging ahead and already actively deploying disaggregation at scale, demonstrating faster rollouts, greater operational control, and true vendor flexibility. Here’s a snapshot of those activities:

- AT&T has deployed an open, disaggregated routing network in its core, powered by DriveNets Network Cloud software on white-box bare metal switches and routers from Taiwanese ODMs, according to Israel-based DriveNets. DriveNets utilizes a Distributed Disaggregated Chassis (DDC) architecture, where a cluster of bare metal switches acts as a single routing entity. That architecture has enabled AT&T to accelerate 5G and fiber rollouts and improve network scalability and performance. It has made 1.6Tb/s transport a reality on AT&T’s live network.

- Deutsche Telekom has deployed a disaggregated broadband network using routing software from RtBrick running on bare-metal switch hardware to provide high-speed internet connectivity. They’re also actively promoting Open BNG solutions as part of this initiative.

- Comcast uses network cloud software from DriveNets and white-box hardware to disaggregate their core network, aiming to increase efficiency and enable new services through a self-healing and consumable network. This also includes the use of disaggregated, pluggable optics from multiple vendors.

Nearly every leader surveyed also claims their organization is “using” or “planning to use” AI in network operations, including for planning, optimization, and fault resolution. However, nine in ten (93%) say they cannot unlock AI’s full value without richer, real-time network data. This requires more open, modular, software-driven architecture, enabled by network disaggregation.

“Telco leaders see AI as a powerful asset that can enhance network performance,” said Zara Squarey, Research Manager at Vanson Bourne. “However, the data shows that without support from leadership, specialized expertise, and modern architectures that open up real-time data, disaggregation deployments may risk further delays.”

When asked what benefits they expect disaggregation to deliver, operators pointed to the following outcomes:

- 54% increased operational automation

- 54% enhanced supply chain resilience

- 51% improved energy efficiency

- 48% lower purchase and operational costs

- 33% reduced vendor lock-in

Transformation priorities align with those goals, with automation and agility (57%) ranked first, followed by vendor flexibility (55%), supply chain security (51%), energy usage and sustainability (47%), and cost efficiency (46%).

About the research:

The ‘State of Disaggregation’ research was independently conducted by Vanson Bourne in June 2025 and commissioned by RtBrick to identify the primary drivers and barriers to disaggregated network rollouts. The findings are based on responses from 200 telecom decision makers across the U.S., UK, and Australia, representing operations, engineering, and design/Research and Development at organizations with 100 to 5,000 or more employees.

References:

https://www.rtbrick.com/state-of-disaggregation-report-2

https://drivenets.com/blog/disaggregation-is-driving-the-future-of-atts-ip-transport-today/

Disaggregation of network equipment – advantages and issues to consider

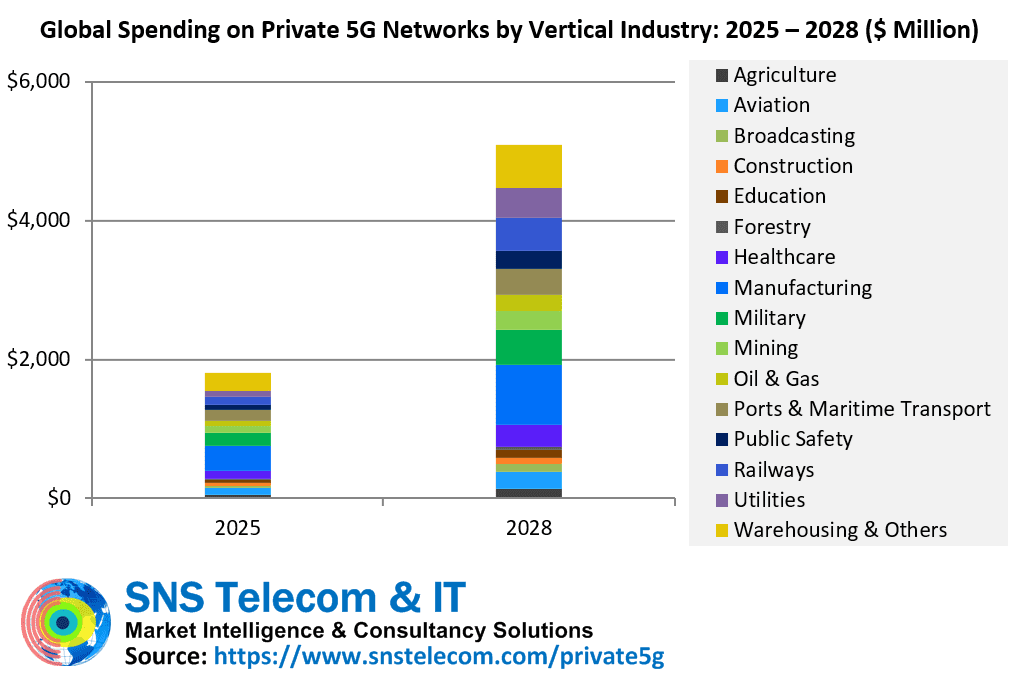

SNS Telecom & IT: Private 5G Market Nears Mainstream With $5 Billion Surge

SNS Telecom & IT’s latest research report indicates that private 5G networks are on the verge of mainstream adoption, with annual spending projected to reach $5 billion by 2028. Their real-world impact is becoming increasingly visible through accelerated investments by industrial giants and other end user organizations to support diverse applications such as autonomous transport systems, mobile robots, remote-controlled machinery, and high-definition video transmission, while reducing reliance on unlicensed wireless and hard-wired connections.

Private LTE networks are a well-established market and have been around for more than a decade, albeit as a niche segment of the wider cellular infrastructure sector. Installations dating back to the early 2010s include iNET’s (Infrastructure Networks) 700 MHz LTE network in the Permian Basin, Tampnet’s offshore 4G infrastructure in the North Sea, and Rio Tinto’s private LTE network for its Western Australia mining operations, among other initial deployments.

However, private cellular networks or NPNs (Non-Public Networks) based on 3GPP-defined 5G specifications (there are no ITU-R standards/recommendations for private cellular networks or “IMT”) are just on the cusp of becoming a mainstream technology, with a market potential exceeding that of private LTE. Over the last 12 months, there has been a noticeable increase in production-grade deployments of private 5G networks by household names and industrial giants such as Airbus, Aker BP, Boliden, CIL (Coal India Limited), Equinor, Etihad, Ford, Hutchison Ports, Hyundai, Jaguar Land Rover, John Deere, LG Electronics, Lufthansa, Newmont, POSCO, Tesla, Toyota, and Walmart, paving the way for Industry 4.0 and advanced application scenarios.

Compared to LTE technology, private 5G networks – also referred to as 5G MPNs (Mobile Private Networks), 5G campus networks, P5G, local 5G, or e-Um 5G systems depending on geography – can address far more demanding performance requirements in terms of throughput, latency, reliability, availability, and connection density. In particular, 5G’s URLLC (Ultra-Reliable, Low-Latency Communications) and mMTC (Massive Machine-Type Communications) capabilities, along with a future-proof transition path to 6G networks in the 2030s, have positioned it as a viable alternative to physically wired connections for industrial-grade communications between machines, robots, and control systems.

Furthermore, despite its relatively higher cost of ownership, 5G’s wider coverage radius per radio node, scalability, determinism, security features, and mobility support have stirred strong interest in its potential as a replacement for interference-prone unlicensed wireless technologies in IIoT (Industrial IoT) environments, where the number of connected sensors and other endpoints is expected to increase significantly over the coming years.

China remains the most mature national market thanks to state-funded directives aimed at accelerating the adoption of 5G connectivity in industrial settings such as factories, warehouses, mines, power plants, substations, oil and gas facilities, and ports. To provide context, the largest private 5G installations in China can comprise hundreds to even thousands of dedicated RAN (Radio Access Network) nodes supported by on-premise or edge cloud-based core network functions depending on specific latency, reliability, and security requirements. Several Chinese private 5G adopters – including State Grid, Midea, and Wanhua Chemical – are also among the front-runners in utilizing cost-efficient 5G RedCap (Reduced Capability) modules, primarily to support video surveillance and IoT sensor use cases.

In addition, some of the most technically advanced features of 5G-Advanced – 5G’s next evolutionary phase – have been implemented over private wireless installations in the country. For example, steel manufacturer Baosteel is leveraging DetNet (Deterministic Networking) enhancements for real-time coordination of multiple automated processes within its factories; China Huaneng Group relies on a tri-band (700 MHz, 2.6 GHz & 4.9 GHz) 5G-Advanced network to connect a fleet of 100 autonomous electric mining trucks at its Yimin open pit coal mine in Inner Mongolia; and automaker Great Wall Motor is using an indoor 5G-Advanced network for time-critical industrial control within a car roof production line to prevent wire abrasion in mobile application scenarios – an issue that had previously resulted in production interruptions averaging 60 hours of downtime per year.

Recently, Chinese mobile operators and vendors have expanded beyond their domestic market in pursuit of private 5G business opportunities abroad, from Thailand’s manufacturing sector to mining in South Africa.

As end user organizations in the United States, Canada, Germany, United Kingdom, France, Japan, South Korea, Taiwan, Australia, Brazil, and other countries ramp up their digitization and automation initiatives, private 5G networks are progressively being implemented to support use cases as diverse as wirelessly connected machinery for the rapid reconfiguration of production lines, distributed PLC (Programmable Logic Controller) environments, AMRs (Autonomous Mobile Robots) and AGVs (Automated Guided Vehicles) for intralogistics, connected workers with mobile and paperless workflows, AR (Augmented Reality)-assisted guidance and troubleshooting, machine vision-based quality control, wireless software flashing of manufactured vehicles, remote-controlled cranes, unmanned mining equipment, digital twin models of complex industrial systems, virtual visits for parents to see their infants in NICUs (Neonatal Intensive Care Units), live broadcast production in locations not easily accessible by traditional solutions, operations-critical communications during major sporting events, precision agriculture and livestock farming, BVLOS (Beyond Visual Line-of-Sight) operation of drones, ATO (Automatic Train Operation), video analytics for railway crossing and station platform safety, remote visual inspections of aircraft engine parts, real-time collaboration for flight line maintenance, XR (Extended Reality)-based training, autonomous and remote operations at military bases, and missile field communications.

With non-smartphone device availability, end user conservatism, and other teething problems continuing to wane, early adopters are affirming their faith in the long-term potential of private 5G by investing in networks built in collaboration with specialist integrators, through traditional mobile operators, or independently via direct procurement from 5G equipment suppliers – made possible by the availability of new shared and local area licensed spectrum options in many national markets. As SNS Telecom & IT highlighted last year, some private 5G installations have progressed to a stage where practical and tangible benefits – particularly efficiency gains, cost savings, and worker safety – are becoming increasingly evident. Notable examples, featuring new additions this year, include but are not limited to:

- Tesla’s deployment of a private 5G network at its Gigafactory Texas facility in Austin has eliminated AGV (Automated Guided Vehicle) stoppages, previously caused by unstable Wi-Fi connections, within the 12 million square foot facility. Another private 5G implementation on the shop floor of its Gigafactory Berlin-Brandenburg plant in Germany has helped in overcoming up to 90% of the overcycle issues for a particular process in the factory’s GA (General Assembly) shop. The electric automaker is integrating private 5G network infrastructure to address high-impact use cases in production, intralogistics, and quality operations across its global manufacturing facilities.

- Rival luxury automaker Jaguar Land Rover’s installation of a private 5G network at its Solihull plant in England, United Kingdom, has established connectivity for sensors and data within the plant’s five-story paint shop, which had previously been left unconnected due to the cost and complexity of wired Ethernet links. The network has also resolved Wi-Fi-related challenges, including limited device connections, poor signal penetration in the metal-heavy environment, and unstable handovers between access points along the production line.

- Lufthansa’s private 5G network at its LAX (Los Angeles International Airport) cargo facility has resulted in a 60% reduction in processing time per item by eliminating latency spikes and dropped connections from Wi-Fi and public cellular networks, which had previously delayed logistics operations and forced an occasional return to manual pen-and-paper processes. Another 5G campus network at the Lufthansa Technik facility in Hamburg, Germany, has removed the need for civil aviation customers to physically attend servicing by providing reliable, high-resolution video access for virtual parts inspections and borescope examinations at both of its engine overhaul workshops. Previous attempts to implement virtual inspections using unlicensed Wi-Fi technology proved ineffective due to the presence of large metal structures.

- At VINCI Airports’ Lyon-Saint Exupéry Airport in the southeast of France, Stanley Robotics is using a standalone private 5G network to provide reliable and low-latency connectivity for autonomous valet parking robots, which have increased parking efficiency by 50%. Efforts are also underway to leverage 5G’s precise positioning capabilities to further enhance the localization accuracy of the robots’ control system.

- Since adopting a private 5G network for public safety and smart city applications, the southern French city of Istres has reduced video surveillance camera installation costs from $34,000 to less than $6,000 per unit by eliminating the need for ducts, civil works, and other infrastructure-related overhead costs typically associated with fiber-based connections in urban environments.

- HavelPort Berlin has increased its annual weighing capacity by up to 60% through automated weighing processes managed via tablets in lorry cabs using an Open RAN-compliant private 5G network. The network also supports drone-based inventory control for bulk goods monitoring and autonomous transportation within the inland port in Wustermark (Brandenburg), Germany.

- John Deere is steadily progressing with its goal of reducing dependency on wired Ethernet connections from 70% to 10% over the next five years by deploying private 5G networks at its industrial facilities in the United States, South America, and Europe. Two of the most recent deployments are at the heavy machinery giant’s 2.2 million square foot Davenport Works manufacturing complex in Iowa and its Horizontina factory in Rio Grande do Sul, Brazil, which is in the midst of continued expansion. In a similar effort, automotive aluminum die-castings supplier IKD has replaced 6 miles of cables connecting 600 pieces of machinery with a private 5G network, thereby reducing cable maintenance costs to near zero and increasing the product yield rate by 10%.

- Newmont’s implementation of one of Australia’s first production-grade private 5G networks at its Cadia gold-copper underground mine in New South Wales has enabled remote-controlled operation of its entire dozer fleet across the full 2.5 kilometer width of the mine’s tailings works construction area. Previously, the mining company was unable to connect more than two machines at distances of no more than 100 meters over Wi-Fi, with unstable connectivity causing up to six hours of downtime per shift. Newmont plans to leverage private 5G connectivity to roll out more teleremote and autonomous machines in its tier-one underground and surface mines worldwide.

- The U.S. Marine Corps’ private 5G network at MCLB (Marine Corps Logistics Base) in Southwest Georgia has significantly improved warehouse management and logistics operations, including 98% accuracy in inventory reordering, a 65% increase in goods velocity, and a 55% reduction in labor costs. Currently under a $6 million sustainment contract for the next three years, the purpose-built 5G network was deployed to enhance automation and overcome the challenges posed by complex fiber optic installations and unreliable Wi-Fi systems in the logistics hub’s demanding physical environment.

- The Liverpool 5G Create network in the inner city area of Kensington has demonstrated substantial cost savings potential for digital health, education and social care services, including an astonishing $10,000 drop in yearly expenditure per care home resident through a 5G-connected fall prevention system and a $2,600 reduction in WAN (Wide Area Network) connectivity charges per GP (General Practitioner) surgery – which represents $220,000 in annual savings for the United Kingdom’s NHS (National Health Service) when applied to 86 surgeries in Liverpool.

- The EWG (East-West Gate) Intermodal Terminal’s private 5G network has increased productivity from 23-25 containers per hour to 32-35 per hour and reduced the facility’s personnel-related operating expenses by 40% while eliminating the possibility of crane operator injury due to remote-controlled operation with a latency of less than 20 milliseconds.

- NEC Corporation has improved production efficiency by 30% through the introduction of a local 5G-enabled autonomous transport system for intralogistics at its new factory in Kakegawa (Shizuoka Prefecture), Japan. The manufacturing facility’s on-premise 5G network has also resulted in an elevated degree of freedom in terms of the factory floor layout, thereby allowing NEC to flexibly respond to changing customer needs, market demand fluctuations, and production adjustments.

- A local 5G installation at Ushino Nakayama’s Osumi farm in Kanoya (Kagoshima Prefecture), Japan, has enabled the Wagyu beef producer to achieve labor cost savings of more than 10% through reductions in accident rates, feed loss, and administrative costs. The 5G network provides wireless connectivity for AI (Artificial Intelligence)-based image analytics and autonomous patrol robots.

- CJ Logistics has achieved a 20% productivity increase at its Ichiri center in Icheon (Gyeonggi), South Korea, following the adoption of a private 5G network to replace the 40,000 square meter warehouse facility’s 300 Wi-Fi access points for Industry 4.0 applications, which experienced repeated outages and coverage issues.

- Delta Electronics – which has installed private 5G networks for industrial wireless communications at its plants in Taiwan and Thailand – estimates that productivity per direct labor and output per square meter have increased by 69% and 75% respectively following the implementation of 5G-connected smart production lines.

- Yawata Electrode has improved the efficiency of its goods transportation processes – involving the movement of raw materials, semi-completed goods, and finished products between production floors – by approximately 24% since adopting a private 5G network for autonomous mobile robots at its electrode manufacturing plant in Nakhon Ratchasima, Thailand.

- An Open RAN-compliant standalone private 5G network in Taiwan’s Pingtung County has facilitated a 30% reduction in pest-related agricultural losses and a 15% boost in the overall revenue of local farms through the use of 5G-equipped UAVs (Unmanned Aerial Vehicles), mobile robots, smart glasses and AI-enabled image recognition.

- JD Logistics – the supply chain and logistics arm of online retailer JD.com – has achieved near-zero packet loss and reduced the likelihood of connection timeouts by an impressive 70% since migrating AGV communications from unlicensed Wi-Fi systems to private 5G networks at its logistics parks in Beijing and Changsha (Hunan), China.

- Risun Group has deployed a private 5G network at its Risun Zhongran Park facility in Hohhot (Inner Mongolia), China, to provide industrial-grade wireless connectivity for both wheeled and rail-mounted transport machinery, typically measuring tens of meters in height and length. Since transitioning from Wi-Fi to private 5G, the coke producer has increased production efficiency by nearly 20% and reduced labor costs by approximately 30%.

- Baosteel – a business unit of the world’s largest steelmaker China Baowu Steel Group – credits its 43-site private 5G deployment at two neighboring factories with reducing manual quality inspections by 50% and achieving a steel defect detection rate of more than 90%, which equates to $7 million in annual cost savings by reducing lost production capacity from 9,000 tons to 700 tons.

- Dongyi Group Coal Gasification Company ascribes a 50% reduction in manpower requirements and a 10% increase in production efficiency – which translates to more than $1 million in annual cost savings – at its Xinyan coal mine in Lvliang (Shanxi), China, to private 5G-enabled digitization and automation of underground mining operations.

- Sinopec’s (China Petroleum & Chemical Corporation) explosion-proof 5G network at its Guangzhou oil refinery in Guangdong, China, has reduced accidents and harmful gas emissions by 20% and 30% respectively, resulting in an annual economic benefit of more than $4 million. The solution is being replicated across more than 30 refineries of the energy giant.

- Since adopting a hybrid public-private 5G network to enhance the safety and efficiency of urban rail transit operations, the Guangzhou Metro rapid transit system has reduced its maintenance costs by approximately 20% using 5G-enabled digital perception applications for the real-time identification of water logging and other hazards along railway tracks.

Although the vast majority of the networks referenced above have been built using 5G equipment supplied by traditional wireless infrastructure players – from incumbents Ericsson, Nokia, Huawei, and ZTE to the likes of Samsung and NEC – alternative suppliers are continuing to gain traction in the private 5G market. Noteworthy examples include:

- Celona – whose 5G LAN solution has been deployed by over 100 customers;

- Globalstar – which has developed a 3GPP Release 16-compliant multipoint terrestrial RAN system optimized for dense private wireless deployments in Industry 4.0 automation environments;

- Airspan Networks – a well-known Open RAN and small cell technology provider;

- Mavenir – an end-to-end provider of Open RAN and converged packet core solutions;

- JMA Wireless – an American RAN equipment vendor;

- GXC – a private cellular technology provider recently acquired by Motive Companies;

- Baicells – a 4G and 5G NR access equipment manufacturer;

- Siemens – which has developed an in-house private 5G network solution for use at its own plants as well as those of industrial customers;

- HFR Mobile – the private 5G business unit of Korean telecommunications equipment maker HFR;

- Ataya – a private 5G startup focused on unifying and simplifying enterprise connectivity;

- Moso Networks – a U.S.-based provider of private 5G radio products backed by Taiwanese small cell pioneer Sercomm;

- Abside Networks – an American manufacturer of military-grade 5G infrastructure;

- Radisys – a RAN software vendor for many private network deployments;

- Druid Software – whose mobile core platform has been deployed for private networks worldwide;

- HPE (Hewlett Packard Enterprise) – which is transitioning from a mobile core specialist to end-to-end private 5G network provider;

- Cisco Systems – a mobile core and transport network technology provider for both public and private 5G networks;

- Pente Networks – a mobile core and orchestration solution provider;

- Highway 9 Networks – which has developed a cloud-based platform to simplify private 5G deployments;

- Neutroon Technologies – another private 5G orchestration specialist;

- Qucell – a Korean manufacturer specializing in 5G small cell equipment;

- Askey Computer – a Taiwanese telecommunications equipment manufacturer;

- QCT (Quanta Cloud Technology) – a Taiwanese data center and 5G solutions provider;

- G REIGNS – a business unit of HTC specializing in portable private 5G network solutions;

- Pegatron – a Taiwanese manufacturer that has recently entered the Open RAN-compliant 5G infrastructure market;

- AsiaInfo Technologies – a Chinese provider of lightweight mobile core software and end-to-end private 5G solutions;

- Firecell – a French startup specializing in industrial-grade private 5G solutions;

- CampusGenius – which has developed a customizable 5G core solution for small and medium-sized enterprises;

- Blackned – a German developer of tactical core middleware for defense communications;

- Cumucore – a provider of mobile core software for private networks;

- Accelleran – a Belgian provider of Open RAN software solutions;

- IS-Wireless – a Polish Open RAN software vendor;

- Benetel – an Irish Open RAN radio developer; and

- Wireless Excellence – a British 5G equipment vendor.

SNS Telecom & IT forecasts that annual investments in private 5G networks for vertical industries will grow at a CAGR of approximately 41% between 2025 and 2028, eventually surpassing $5 billion by the end of 2028. Much of this growth will initially be driven by highly localized 5G networks covering geographically limited areas for Industry 4.0 applications in manufacturing and process industries. Industrial giants experiencing patchy Wi-Fi coverage, cabling-related inflexibility, and network scalability limitations at their facilities are championing the private 5G movement for local area networking. Additionally, sub-1 GHz wide area critical communications networks for public safety, utility, and railway communications are anticipated to accelerate their transition from LTE, GSM-R, and other legacy narrowband technologies to 5G towards the latter half of the forecast period, as 5G-Advanced technology reaches commercial maturity. Among other features for mission-critical networks, 3GPP Release 18 – which defines the first set of 5G-Advanced specifications – adds support for 5G NR equipment operating in dedicated spectrum with less than 5 MHz of bandwidth, paving the way for private 5G networks operating in sub-500 MHz, 700 MHz, 850 MHz, and 900 MHz bands for public safety broadband, smart grid modernization, and FRMCS (Future Railway Mobile Communication System).
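To make the growth arithmetic in the forecast above concrete, here is a minimal sketch of what a 41% CAGR implies over the 2025 to 2028 window. It is illustrative only: it assumes three annual compounding periods and treats the $5 billion figure as being reached exactly in 2028, whereas the report says spending will surpass that level. The function names and the back-calculated starting value are hypothetical and are not figures from the report.

```python
def implied_base(target: float, cagr: float, periods: int) -> float:
    """Back out the starting value implied by an end value and a CAGR."""
    return target / (1.0 + cagr) ** periods


def project(base: float, cagr: float, periods: int) -> list[float]:
    """Compound a starting value forward, returning one value per year."""
    return [base * (1.0 + cagr) ** n for n in range(periods + 1)]


CAGR = 0.41          # approximate CAGR quoted in the forecast
TARGET_2028 = 5.0    # USD billions; assumption: treated as an exact end value
PERIODS = 3          # assumption: 2025 -> 2028 is three annual periods

base_2025 = implied_base(TARGET_2028, CAGR, PERIODS)
path = project(base_2025, CAGR, PERIODS)

for year, value in zip(range(2025, 2029), path):
    print(f"{year}: ~${value:.2f}B")
```

Working backward from the end value, rather than forward from a base year, avoids having to assume a 2025 spending figure that the text does not state.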

The “Private 5G Market: 2025 – 2030 – Opportunities, Challenges, Strategies & Forecasts” report presents an in-depth assessment of the private 5G network market, including the value chain, market drivers, barriers to uptake, enabling technologies, operational and business models, vertical industries, application scenarios, key trends, future roadmap, standardization, spectrum availability and allocation, regulatory landscape, case studies, ecosystem player profiles, and strategies. The report also presents global and regional market size forecasts from 2025 to 2030. The forecasts cover three infrastructure submarkets, 16 vertical industries, and five regional markets.

The report comes with an associated Excel datasheet suite covering quantitative data from all numeric forecasts presented in the report, as well as a database of over 8,300 global private cellular engagements – including more than 3,700 private 5G installations – as of Q3’2025.

Key Findings

The report has the following key findings:

- SNS Telecom & IT projects that annual investments in private 5G networks for vertical industries will grow at a CAGR of approximately 41% between 2025 and 2028, eventually surpassing $5 billion by the end of 2028. Much of this growth will initially be driven by highly localized 5G networks covering geographically limited areas for Industry 4.0 applications in manufacturing and process industries.

- Industrial giants experiencing patchy Wi-Fi coverage, cabling-related inflexibility, and network scalability limitations at their facilities are championing the private 5G movement for local area networking. Additionally, sub-1 GHz wide area critical communications networks for public safety, utility, and railway communications are anticipated to accelerate their transition from LTE, GSM-R, and other legacy narrowband technologies to 5G towards the latter half of the forecast period, as 5G-Advanced – 5G’s next evolutionary phase – reaches commercial maturity.

- Enterprises and industrial customers – depending on their specific connectivity needs – are adopting private 5G networks both as a complement to and as a replacement for Wi-Fi solutions. Kyushu Electric Power, for instance, leverages a local 5G network to provide outdoor coverage and backhaul for an indoor Wi-Fi 6 network at its Matsuura thermal power plant. Similarly, KHNP (Korea Hydro & Nuclear Power), Hyundai Motor, and John Deere are pursuing a multi-technology wireless access strategy that integrates private 5G with Wi-Fi. Others – including Airbus, LG Electronics, Tesla, Toyota, Newmont, Prinzhorn Group, Chevron, BD SENSORS, CJ Logistics, Del Conca, and Wonderful Citrus – have deployed private cellular networks with a relatively small number of radio nodes to replace dozens of Wi-Fi access points, which had previously failed to deliver reliable coverage in large facilities.

- As end user organizations ramp up their digitization and automation initiatives, some private 5G installations have progressed to a stage where practical and tangible benefits are becoming increasingly evident. Notably, private 5G networks have resulted in productivity and efficiency gains for specific manufacturing, quality control, and intralogistics processes in the range of 20 to 90%, labor cost savings of 55% at a warehousing facility, up to 40% lower operating expenditures at an intermodal rail terminal, reductions in worker accidents and harmful gas emissions by 20% and 30% respectively at an oil refinery, and a 50% decrease in manpower requirements for underground mining operations.

- In addition to deployments at existing sites, organizations are increasingly incorporating on-premise 5G connectivity into the building plans of new greenfield projects. For example, Future Technologies Venture and iBwave Solutions used CAD (Computer-Aided Design) files to design the Band n48 (3.5 GHz CBRS) private 5G network for automaker Hyundai Motor’s HMGMA (Hyundai Motor Group Metaplant America) electrified vehicle plant near Savannah, Georgia. Other examples of new facilities with private 5G networks integrated from the outset include but are not limited to the Los Angeles Chargers’ El Segundo training facility; Formula 1’s Las Vegas complex; Cleveland Clinic’s Mentor Hospital; CHI’s (Children’s Health Ireland) New Children’s Hospital; Port of Aberdeen’s South Harbour; NEC’s Kakegawa plant; Pegatron’s Batam smart factory; PATTA’s low-carbon Renwu factory; and Jacto’s Paulópolis production facility.

- Spectrum liberalization initiatives – particularly shared and local spectrum licensing frameworks for mid-band 5G NR frequencies such as n40 (2.3 GHz), n38 (2.6 GHz), n48 (3.5 GHz), n78 (3.3-3.8 GHz), n77 (3.8-4.2 GHz), and n79 (4.6-4.9 GHz) – are playing a pivotal role in accelerating the adoption of private 5G networks. Telecommunications regulators in multiple national markets – including the United States, Canada, Germany, United Kingdom, Ireland, France, Spain, Netherlands, Belgium, Switzerland, Finland, Sweden, Norway, Poland, Slovenia, Lithuania, Moldova, Bahrain, Japan, South Korea, Taiwan, Hong Kong, Australia, and Brazil – have released or are in the process of granting access to shared and local area licensed spectrum.

- By capitalizing on their extensive licensed spectrum holdings, infrastructure assets, and cellular networking expertise, national mobile operators have continued to retain a significant presence in the private 5G network market, even in countries where shared and local area licensed spectrum is available. With an expanded focus on vertical B2B (Business-to-Business) opportunities in the 5G era, mobile operators are actively involved in diverse projects extending from localized 5G networks for secure and reliable wireless connectivity in industrial and enterprise environments to sliced hybrid public-private networks that integrate on-premise 5G infrastructure with a dedicated slice of public mobile network resources for wide area coverage.

- With channel sales accounting for over two-thirds of all private 5G contracts, global system integrators and new classes of private network service providers have also found success in the market. Notable examples include but are not limited to NTT, Fujitsu, Accenture, Capgemini, Kyndryl, Booz Allen Hamilton, Lockheed Martin, Oceus Networks, Future Technologies Venture, STEP CG, Kajeet, TLC Solutions, 4K Solutions, Lociva, Tampnet, iNET (Infrastructure Networks), Ambra Solutions, PMY Group, Vocus, Aqura, Federated Wireless, Betacom, InfiniG, Ballast Networks, Hawk Networks (Althea), Airtower Networks, Fortress Solutions, HALO Networks, Ramen Networks, Meter Cellular, Sigma Wireless, IONX Networks (formerly Dense Air), MUGLER, Opticoms, COCUS, TRIOPT, Xantaro, Alsatis, Axians, Axione, Hub One, SPIE Group, TDF, Weaccess Group, ORAXIO Telecom Solutions, Unitel Group, Numerisat, Telent, Logicalis, Telet Research, Citymesh, RADTONICS, Grape One, NS Solutions, OPTAGE, Wave-In Communication, LG CNS, SEJONG Telecom, CJ OliveNetworks, Megazone Cloud, Nable Communications, Qubicom, NewGens, and Comsol. Also active in this space are the private 5G business units of Boldyn Networks, American Tower, Boingo Wireless, Freshwave, Shared Access, Digita, and other neutral host infrastructure providers; cable operators’ enterprise divisions such as Comcast Business and Cox Private Networks; and global IoT connectivity providers Onomondo, Monogoto, and floLIVE.

- Although the vast majority of existing private 5G networks have been built using equipment supplied by traditional wireless infrastructure players – from incumbents Ericsson, Nokia, Huawei, and ZTE to the likes of Samsung and NEC – alternative suppliers are continuing to gain traction in the market. There is much greater OEM (Original Equipment Manufacturer) and vendor diversity than in the public mobile network segment with other players making their presence known in markets as far afield as the United States, Canada, Germany, France, United Kingdom, Saudi Arabia, Brazil, Japan, South Korea, Taiwan, China, and Australia.

- Examples include Celona, Globalstar, Airspan Networks, Mavenir, GXC, Baicells, Telrad Networks, BLiNQ Networks, JMA Wireless, Ataya, Moso Networks (Sercomm), Abside Networks, SEMPRE, Eridan Communications, Ubiik, Star Solutions, Expeto, Druid Software, HPE (Hewlett Packard Enterprise), Cisco Systems, Pente Networks, A5G Networks, Radisys, Wilson Connectivity, Nextivity, SOLiD, HFR Mobile, Qucell, Askey Computer, QCT (Quanta Cloud Technology), G REIGNS, Pegatron, AsiaInfo Technologies, AI-LINK, FLARE SYSTEMS, Hytec Inter, Siemens, Firecell, Obvios, Eviden, Kontron, BubbleRAN, Amarisoft, CampusGenius, Blackned, Cumucore, Accelleran, IS-Wireless, Effnet, Node-H, SRS (Software Radio Systems), Benetel, AttoCore, cellXica, JET Connectivity, Neutral Wireless, Wireless Excellence, Antevia Networks, ASOCS, ASELSAN, PROTEI, and Trópico.

- There is a growing focus on private 5G security solutions enabling device management, network visibility, traffic segregation, access control, and threat prevention across both IT (Information Technology) and OT (Operational Technology) domains. Some of the key players in this segment include OneLayer, Palo Alto Networks, Fortinet, Trend Micro’s subsidiary CTOne, and Thales. Network orchestration and management is another area garnering considerable interest, with solutions from companies like Highway 9 Networks, Neutroon Technologies, Nearby Computing, NEC’s Netcracker division, and Weaver Labs.

- As the market moves toward mainstream adoption, funding is ramping up for private 5G infrastructure and connectivity specialists. For example, Celona has raised over $135 million in venture funding to date, and Druid Software recently secured $20 million in strategic growth capital to expand into verticals such as defense, shipping, and utilities. Firecell and Highway 9 Networks raised $7.2 million and $25 million, respectively, in seed funding last year, while Monogoto secured $27 million in a Series A round. More recently, SEMPRE closed its $14.3 million Series A funding round to support deployments of secure and resilient 5G infrastructure products across defense and commercial markets. Also worth noting is Boldyn’s completion of a $1.2 billion debt financing deal to expand its private wireless and neutral host infrastructure footprint throughout the United States.

- Although greater vendor diversity is beginning to be reflected in infrastructure sales, an atmosphere of acquisitions persists as highlighted by recent deals such as the divestiture of Corning’s small cell RAN and DAS portfolio to Airspan Networks, Motive Companies’ acquisition of private cellular technology provider GXC, Riedel Communications’ buyout of former Nokia spinoff and 5G campus network specialist MECSware, Rheinmetall’s share purchase agreement for majority ownership of tactical core middleware developer Blackned, and Nokia’s acquisition of tactical communications technology provider Fenix Group to strengthen its position in the defense sector. An earlier example is HPE’s acquisition of Italian mobile core technology provider Athonet two years ago.

- The service provider segment is not immune to consolidation either. For example, Boldyn Networks recently acquired SML (Smart Mobile Labs), a German provider of bespoke private 5G networks and turnkey applications. This follows Boldyn’s 2024 takeover of Cellnex’s private networks business unit, which largely included Edzcom – a private 4G/5G specialist with installations in Finland, France, Germany, Spain, Sweden, and the United Kingdom.

- Another recent development is Day Wireless Systems’ acquisition of Sigma Wireless, a leading private 5G system integrator in Ireland. Consolidation activity has also been underway in other national markets. In Australia, for instance, Vocus acquired Challenge Networks in 2023 and Telstra Purple took over Aqura Technologies in 2022 – both well-known pioneers in industrial private wireless networks.

- Hyperscalers have scaled back their ambitions in the hypercompetitive private 5G market as they pivot towards AI and other high-growth opportunities better served by their cloud infrastructure and service ecosystems. Amazon has recently discontinued its AWS (Amazon Web Services) Private 5G managed service, while Microsoft’s Azure Private 5G Core service is set to be retired by the end of September 2025.


NTT Data and Google Cloud partner to offer industry-specific cloud and AI solutions

NTT DATA and Google Cloud plan to combine their expertise in AI and the cloud to offer customized solutions to accelerate enterprise transformation across sectors including banking, insurance, manufacturing, retail, healthcare, life sciences and the public sector. The partnership will include agentic AI solutions, security, sovereign cloud and developer tools. This collaboration combines NTT DATA’s deep industry expertise in AI, cloud-native modernization and data engineering with Google Cloud’s advanced analytics, AI and cloud technologies to deliver tailored, scalable enterprise solutions.

With a focus on co-innovation, the partnership will drive industry-specific cloud and AI solutions, leveraging NTT DATA’s proven frameworks and best practices along with Google Cloud’s capabilities to deliver customized solutions backed by deep implementation expertise. Significant joint go-to-market investments will support seamless adoption across key markets.

According to Gartner®, worldwide end-user spending on public cloud services is forecast to reach $723 billion in 2025, up from $595.7 billion in 2024. The use of AI deployments in IT and business operations is accelerating the reliance on modern cloud infrastructure, highlighting the critical importance of this strategic global partnership.

“This collaboration with Google Cloud represents a significant milestone in our mission to drive innovation and digital transformation across industries,” said Marv Mouchawar, Head of Global Innovation, NTT DATA. “By combining NTT DATA’s deep expertise in AI, cloud-native modernization and enterprise solutions with Google Cloud’s advanced technologies, we are helping businesses accelerate their AI-powered cloud adoption globally and unlock new opportunities for growth.”

“Our partnership with NTT DATA will help enterprises use agentic AI to enhance business processes and solve complex industry challenges,” said Kevin Ichhpurani, President, Global Partner Ecosystem at Google Cloud. “By combining Google Cloud’s AI with NTT DATA’s implementation expertise, we will enable customers to deploy intelligent agents that modernize operations and deliver significant value for their organizations.”

In financial services, this collaboration will support regulatory compliance and reporting through NTT DATA solutions like Regla, which leverage Google Cloud’s scalable AI infrastructure. In hospitality, NTT DATA’s Virtual Travel Concierge enhances customer experience and drives sales with 24×7 multilingual support, real-time itinerary planning and intelligent travel recommendations. It uses the capabilities of Google’s Gemini models to drive personalization across more than 3 million monthly conversations.

Key focus areas include:

- Industry-specific agentic AI solutions: NTT DATA will build new industry solutions that transform analytics, decision-making and client experiences using Google Agentspace, Google’s Gemini models, secure data clean rooms and modernized data platforms.

- AI-driven cloud modernization: Accelerating enterprise modernization with Google Distributed Cloud for secure, scalable modernization built and managed on NTT DATA’s global infrastructure, from data centers to edge to cloud.

- Next-generation application and security modernization: Strengthening enterprise agility and resilience through mainframe modernization, DevOps, observability, API management, cybersecurity frameworks and SAP on Google Cloud.

- Sovereign cloud innovation: Delivering secure, compliant solutions through Google Distributed Cloud in both air-gapped and connected deployments. Air-gapped environments operate offline for maximum data isolation. Connected deployments enable secure integration with cloud services. These scenarios meet data sovereignty and regulatory demands in sectors such as finance, government and healthcare without compromising innovation.

- Google Distributed Cloud sandbox environment: The Google Distributed Cloud sandbox environment is a digital playground where developers can build, test and deploy industry-specific and sovereign cloud deployments. This sandbox will help teams upskill through hands-on training and accelerate time to market with Google Distributed Cloud technologies through preconfigured, ready-to-deploy templates.

NTT DATA will support these innovations through a full-stack suite of services including advisory, building, implementation and ongoing hosting and managed services.

By combining NTT DATA’s proven blueprints and delivery expertise with Google Cloud’s technology, the partnership will accelerate the development of repeatable, scalable solutions for enterprise transformation. At the heart of this innovation strategy is Takumi, NTT DATA’s GenAI framework that guides clients from ideation to enterprise-wide deployment. Takumi integrates seamlessly with Google Cloud’s AI stack, enabling rapid prototyping and operationalization of GenAI use cases.

This initiative expands NTT DATA’s Smart AI Agent Ecosystem, which unites strategic technology partnerships, specialized assets and an AI-ready talent engine to help clients deploy and manage responsible, business-driven AI at scale.

Accelerating global delivery with a dedicated Google Cloud Business Group:

To achieve excellence, NTT DATA has established a dedicated global Google Cloud Business Group comprising thousands of engineers, architects and advisory consultants. This global team at NTT DATA will work in close collaboration with Google Cloud teams to help clients adopt and scale AI-powered cloud technologies.

NTT DATA is also investing in advanced training and certification programs ensuring teams across sales, pre-sales and delivery are equipped to sell, secure, migrate and implement AI-powered cloud solutions. The company aims to certify 5,000 engineers in Google Cloud technology, further reinforcing its role as a leader in cloud transformation on a global scale.

Additionally, both companies are co-investing in global sales and go-to-market campaigns to accelerate client adoption across priority industries. By aligning technical, sales and marketing expertise, the companies aim to scale transformative solutions efficiently across global markets.

This global partnership builds on NTT DATA and Google Cloud’s 2024 co-innovation agreement in APAC. In addition, it strengthens NTT DATA’s acquisition of Niveus Solutions, a leading Google Cloud specialist recognized with three 2025 Google Cloud Awards – “Google Cloud Country Partner of the Year – India”, “Google Cloud Databases Partner of the Year – APAC” and “Google Cloud Country Partner of the Year – Chile” – further validating NTT DATA’s commitment to cloud excellence and innovation.

“We’re excited to see the strengthened partnership between NTT DATA and Google Cloud, which continues to deliver measurable impact. Their combined expertise has been instrumental in migrating more than 380 workloads to Google Cloud to align with our cloud-first strategy,” said José Luis González Santana, Head of IT Infrastructure, Carrefour. “By running SAP HANA on Google Cloud, we have consolidated 100 legacy applications to create a powerful, modernized e-commerce platform across 200 hypermarkets. This transformation has given us the agility we need during peak times like Black Friday and enabled us to launch new services faster than ever. Together, NTT DATA and Google Cloud are helping us deliver more connected, seamless experiences for our customers.”

About NTT DATA:

NTT DATA is a $30+ billion trusted global innovator of business and technology services. We serve 75% of the Fortune Global 100 and are committed to helping clients innovate, optimize and transform for long-term success. As a Global Top Employer, we have experts in more than 50 countries and a robust partner ecosystem of established and start-up companies. Our services include business and technology consulting, data and artificial intelligence, industry solutions, as well as the development, implementation and management of applications, infrastructure and connectivity. We are also one of the leading providers of digital and AI infrastructure in the world. NTT DATA is part of NTT Group, which invests over $3.6 billion each year in R&D to help organizations and society move confidently and sustainably into the digital future.

Resources:

https://www.nttdata.com/global/en/news/press-release/2025/august/081300

Google Cloud targets telco network functions, while AWS and Azure are in holding patterns

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Ericsson and Google Cloud expand partnership with Cloud RAN solution

NTT & Yomiuri: ‘Social Order Could Collapse’ in AI Era

AI Meets Telecom: Automating RF Plumbing Diagrams at Scale

By Chode Balaji with Ajay Lotan Thakur

In radio frequency (RF) circuit design, an RF plumbing diagram is a visual representation of how components such as antennas, amplifiers, filters, and cables are physically interconnected to manage RF signal flow across a network node. Unlike logical or schematic diagrams, these diagrams emphasize signal routing, cable paths, and component connectivity, ensuring spectrum compliance and accurate transmission behavior.

In this article, we introduce an AI-powered automation platform that generates RF plumbing diagrams for complex telecom deployments, dramatically reducing manual effort and engineering errors. The system has been field-tested within a major telecom provider’s RF design workflow, where it has cut design time from hours to minutes, improved compliance, and standardized outputs across markets. We discuss the architecture of the platform, its real-world use cases, and the broader implications for network scalability and compliance in next-generation RF deployments.

Introduction

In RF circuit and system design, an RF plumbing diagram is a critical visual blueprint that shows how physical components—antennas, cables, combiners, duplexers, and power sources—are interconnected to manage signal flow across a network node. Unlike logical network schematics, these diagrams emphasize actual deployment wiring, routing, and interconnection details across multiple frequency bands and sectors.

As networks become increasingly dense and distributed, especially with 5G and Open RAN architectures, RF plumbing diagrams have grown in both complexity and importance. Yet across the industry, they are still predominantly created using manual methods—introducing inconsistency, delay, and high operational cost [1].

Challenges with Manual RF Documentation

Creating RF plumbing diagrams manually demands deep subject matter expertise, detailed knowledge of hardware interconnections, and alignment with region-specific compliance standards. Each diagram can take hours to complete, and even minor errors—such as incorrect port mappings or misaligned frequency bands—can result in service degradation, failed field validations, or regulatory non-compliance. In some cases, incorrect diagrams have delayed spectrum audits or triggered failed E911 checks, which are critical in public safety contexts.

Compliance requirements often vary by country due to differences in spectrum licensing, environmental limits, and emergency services integration. For example, the U.S. mandates specific RF configuration standards for E911 systems [3], while European operators must align with ETSI guidelines [4].

According to industry discussions on automation in telecom operations [1], reducing manual overhead and standardizing documentation workflows are key goals for next-generation network teams.

System Overview – AI Powered Diagram Generation

To streamline this process, we developed CERTA RFDS—a system that automates RF plumbing diagram generation using input configuration data. CERTA ingests band and sector mappings, node configurations, and passive element definitions, then applies business logic to render a complete, standards-aligned diagram.
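The kind of business logic described above can be illustrated with a small validation pass over the input configuration. This is only a sketch under stated assumptions: the article does not describe CERTA's actual schema or rules, so the `Feed` record, the `validate_feeds` function, and the two checks (one direct feed per antenna port, band supported by the antenna) are hypothetical.

```python
from dataclasses import dataclass


# Hypothetical input record; CERTA's real schema is not described in the article.
@dataclass(frozen=True)
class Feed:
    radio: str         # radio unit name, e.g. "RRU-1"
    radio_port: str    # port on the radio unit
    antenna: str       # antenna name, e.g. "ANT-A"
    antenna_port: str  # port on the antenna
    band_mhz: int      # band carried on this feed
    sector: str        # sector label, e.g. "alpha"


def validate_feeds(feeds, antenna_bands):
    """Return a list of readable problems; an empty list means the plan passed."""
    problems = []
    used_ports = {}  # (antenna, antenna_port) -> radio already connected to it
    for f in feeds:
        key = (f.antenna, f.antenna_port)
        # Assumption: without a combiner, an antenna port takes one direct feed.
        if key in used_ports:
            problems.append(
                f"{f.antenna}:{f.antenna_port} is fed by both "
                f"{used_ports[key]} and {f.radio}"
            )
        else:
            used_ports[key] = f.radio
        supported = antenna_bands.get(f.antenna)
        if supported is None:
            problems.append(f"unknown antenna {f.antenna}")
        elif f.band_mhz not in supported:
            problems.append(f"{f.antenna} does not support {f.band_mhz} MHz")
    return problems


feeds = [
    Feed("RRU-1", "P1", "ANT-A", "1", 700, "alpha"),
    Feed("RRU-2", "P1", "ANT-A", "1", 1900, "alpha"),  # reuses ANT-A port 1
    Feed("RRU-3", "P1", "ANT-B", "1", 2100, "beta"),   # band not supported
]
antenna_bands = {"ANT-A": {700, 1900}, "ANT-B": {700}}
problems = validate_feeds(feeds, antenna_bands)
for p in problems:
    print(p)
```

Running checks like these before rendering is one way to catch incorrect port mappings and misaligned frequency bands before a diagram reaches field validation.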

The system is built as a cloud-native microservice and can be integrated into OSS workflows or CI/CD pipelines used by RF planning teams. Its modular engine outputs standardized SVG/PDFs and maintains design versioning aligned with audit requirements.
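As a rough illustration of SVG output, the sketch below turns a list of radio-to-antenna links into a minimal two-column SVG using only the Python standard library. The `render_svg` function, its layout constants, and the sample link names are assumptions made for illustration; they do not reflect CERTA's actual rendering engine.

```python
from xml.sax.saxutils import escape


def render_svg(links, width=420, row_height=40):
    """Render (radio, antenna, label) links as a minimal two-column SVG string."""
    height = row_height * (len(links) + 1)
    parts = [
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}">'
    ]
    for i, (radio, antenna, label) in enumerate(links):
        y = row_height * (i + 1)
        # Radio name on the left, antenna port on the right, cable in between.
        parts.append(f'<text x="10" y="{y}">{escape(radio)}</text>')
        parts.append(
            f'<line x1="120" y1="{y - 4}" x2="300" y2="{y - 4}" stroke="black"/>'
        )
        parts.append(
            f'<text x="170" y="{y - 10}" font-size="10">{escape(label)}</text>'
        )
        parts.append(f'<text x="310" y="{y}">{escape(antenna)}</text>')
    parts.append("</svg>")
    return "\n".join(parts)


svg = render_svg([
    ("RRU-LTE", "ANT-A:1", "700 MHz"),
    ("RRU-UMTS", "ANT-A:2", "1900 MHz"),
])
print(svg)
```

Because the output is plain text generated deterministically from the configuration, it can be committed alongside that configuration, which is one simple way to keep diagram versions aligned with the data they were generated from.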

This system aligns with automation trends seen in AI-native telecom operations [1] and can scale to support edge-native deployments as part of broader infrastructure-as-code workflows.

Deployment and Public Availability

The CERTA RFDS system has been internally validated within major telecom design teams and is now available publicly for industry adoption. It has demonstrated consistent savings in engineering time, reducing diagram effort from 2–4 hours to under 5 minutes per node, while improving compliance through template consistency. These results and the underlying platform were presented at the IEEE International Conference on Emerging and Advanced Information Systems (EEAIS) [5]. (Note: the paper was presented at EEAIS 2025; publication is pending.)
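The reported figures can be checked with simple arithmetic. The sketch below treats 5 minutes as the automated time, which makes the result a lower bound because the article says "under 5 minutes"; the `time_saving` helper is introduced here only for illustration.

```python
def time_saving(manual_minutes, automated_minutes):
    """Fraction of engineering time saved per node."""
    return 1 - automated_minutes / manual_minutes


low = time_saving(120, 5)   # 2-hour manual baseline
high = time_saving(240, 5)  # 4-hour manual baseline
print(f"{low:.1%} to {high:.1%}")  # prints "95.8% to 97.9%"
```

Under these assumptions the saving is roughly 96% to 98% per node, consistent with a claim of more than 90% time savings.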

Output Showcase and Engineering Impact

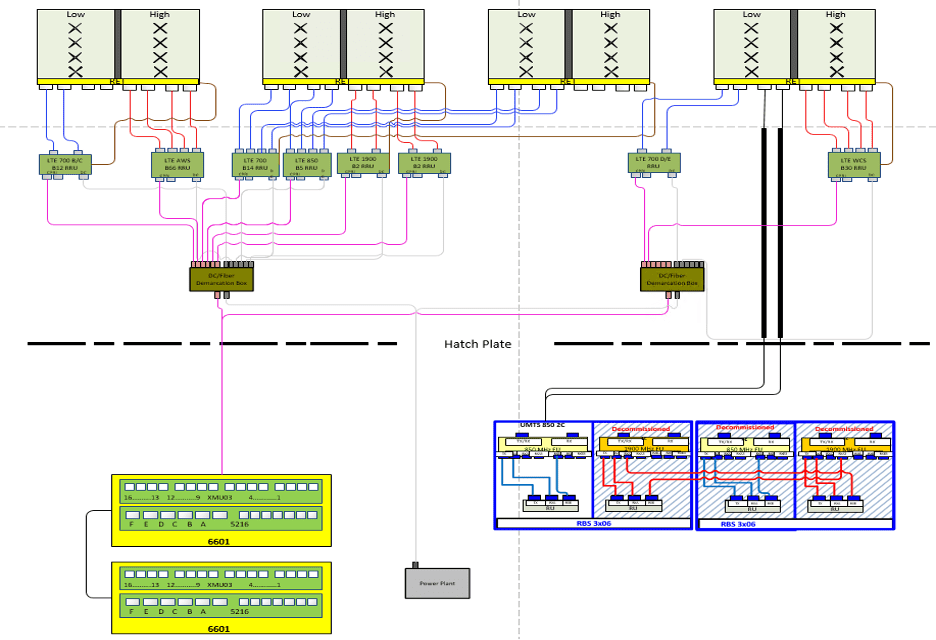

Figure 1 shows a sample RF plumbing diagram generated by the CERTA platform for a complex LTE and UMTS multi-sector node. The system automatically determines feed paths, port mappings, and labeling conventions based on configuration metadata.

As 5G networks continue to roll out globally, RF plumbing diagrams are becoming even more complex due to increased densification, the use of small cells, and the incorporation of mmWave technologies. The AI-driven automation framework we developed is fully adaptable to 5G architecture. It supports configuration planning for high-frequency spectrum bands and MIMO antenna arrangements, and it ensures that E911 and regulatory compliance standards are maintained even in ultra-dense urban deployments. This makes the system a valuable asset in accelerating the design and validation processes for next-generation 5G infrastructure.

Figure 1. AI-Generated RF Plumbing Diagram from CERTA RFDS: Illustrating dual-feed, multi-sector layout for LTE and UMTS deployment.

Benefits include:

- 90%+ time savings per node

- Consistency across regions and engineering teams

- Simplified field validation and compliance review

Future Scope

CERTA RFDS is being extended to support:

- GIS visualization of RF components with geo-tagged layouts

- Integration with planning systems for real-time topology generation

- LLM-based auto-summary of node-level changes for audit documentation

Conclusion

RF plumbing diagrams are fundamental to reliable telecom deployment and compliance. By shifting from manual workflows to intelligent automation, systems like CERTA RFDS enable engineers and operators to scale with confidence, consistency, and speed—meeting the challenges of modern wireless networks.

Abbreviations

- CERTA RFDS – Cognitive Engineering for Rapid RFDS Transformation & Automation

- RFDS – Radio Frequency Data Sheet

- GIS – Geographic Information System

- LLM – Large Language Model

- OSS – Operations Support System

- MIMO – Multiple Input Multiple Output

- RF – Radio Frequency

References

[1] ZTE’s Vision for AI-Native Infrastructure and AI-Powered Operations

[5] IEEE EEAIS 2025 Conference, “CERTA RFDS: Automating RF Plumbing Diagrams at Scale” (publication pending)

About the Author

Balaji Chode is an AI Solutions Architect at UBTUS, where he leads telecom automation initiatives including the design and deployment of CERTA RFDS. He has contributed to large-scale design and automation platforms across telecom and public safety, authored multiple peer-reviewed articles, and filed several patents.

“The author acknowledges the use of AI-assisted tools for language refinement and formatting”

FCC updates subsea cable regulations; repeals 98 “outdated” broadcast rules and regulations

The U.S. Federal Communications Commission (FCC) is updating its regulations for subsea cables to enhance security and streamline the licensing process. The updates, adopted at an FCC open meeting on August 7th, aim to address national security concerns related to foreign adversaries (like Russia and China) and accelerate the deployment of these critical communication networks. This initiative, developed by the Office of International Affairs in collaboration with the Public Safety and Homeland Security Bureau and the Enforcement Bureau, is intended to bolster national security. The new rules address potential vulnerabilities of subsea cables to foreign adversaries, recognizing their critical role in global internet traffic and financial transactions.

FCC Chairman Brendan Carr said that while the FCC often focuses on airwaves as vital but unseen infrastructure, submarine cables are just as essential. “They are the real unseen heroes of global communications. [The Commission] must facilitate, not frustrate the buildout of submarine cable industries.” Indeed, the vast global network of subsea cables carries about 99% of the world’s internet traffic and supports more than $10 trillion in daily financial transactions.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

The risk of Russia- and China-backed attacks on undersea cables carrying international internet traffic is likely to rise amid a spate of incidents in the Baltic Sea and around Taiwan, according to a report by Recorded Future, a U.S. cybersecurity company. It singled out nine incidents in the Baltic Sea and off the coast of Taiwan in 2024 and 2025 as a harbinger for further disruptive activity. The report said that while genuine accidents remained likely to cause most undersea cable disruption, the Baltic and Taiwanese incidents pointed to increased malicious activity from Russia and China. “(Sabotage) Campaigns attributed to Russia in the North Atlantic-Baltic region and China in the western Pacific are likely to increase in frequency as tensions rise,” the company said.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

The FCC also repealed 98 “outdated” broadcast rules and regulations as part of a deregulation effort aimed at streamlining and modernizing the agency’s rules. These rules, deemed obsolete or unnecessary, included outdated requirements for testing equipment and authorization procedures, as well as provisions related to technologies like analog broadcasting that are no longer in use. The move is part of the FCC’s “Delete, Delete, Delete” docket, which seeks to identify and remove rules that no longer serve the public interest.

FCC Chairman Brendan Carr (middle) and Commissioners Olivia Trusty (right) and Anna Gomez (left) at the Open Meeting on August 7, 2025 in Washington, D.C. Photo credit: Broadband Breakfast/Patricia Blume.

Many of the repealed rules relate to analog-era technologies and practices that are no longer relevant in today’s digital broadcasting landscape. These rules cover various areas, such as obsolete subscription television rules, outdated equipment requirements from the 1970s, unnecessary authorization rules for standard practices, obsolete international broadcast provisions, and sections that were for reference only or were duplicative or reserved. In particular:

- The repealed rules covered equipment requirements for AM, FM, and TV stations that are now obsolete, as well as rules related to subscription television systems that operated on now-defunct analog technology.

- The FCC eliminated rules regarding international broadcasting that used outdated terms and procedures.

- Several sections that merely listed citations to outdated FCC orders, court decisions, and policies were also removed.