Generative AI

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

Backgrounder:

Artificial intelligence (AI) continues both to astound and confound. AI finds patterns in data, and large language models (LLMs) are then fine-tuned with a technique called “reinforcement learning from human feedback,” in which humans help train and tune the models. Some humans, like “ethics & compliance” folks, have a heavier hand than others in tuning models to their liking.

Generative Artificial Intelligence (generative AI) is a type of AI that can create new content and ideas, including conversations, stories, images, videos, and music. AI technologies attempt to mimic human intelligence in nontraditional computing tasks like image recognition, natural language processing (NLP), and translation. Generative AI is the next step in artificial intelligence. You can train it to learn human language, programming languages, art, chemistry, biology, or any complex subject matter. It reuses training data to solve new problems. For example, it can learn English vocabulary and create a poem from the words it processes. Your organization can use generative AI for various purposes, like chatbots, media creation, and product development and design.

Review of Leading AI Company Products and Services:

1. AI poster child Nvidia’s (NVDA) market cap is about $2.3 trillion, due mainly to momentum-obsessed investors who have driven up the stock price. Nvidia currently enjoys 75% gross profit margins and has an estimated 80% share of the graphics processing unit (GPU) chip market. Microsoft and Facebook are reportedly Nvidia’s biggest customers, buying its GPUs last year in a frenzy.

Nvidia CEO Jensen Huang talks of computing going from retrieval to generative, which investors believe will require a long-run overhaul of data centers to handle AI. All true, but a similar premise about an overhaul also was true for Cisco in 1999.

During the dot-com explosion in the late 1990s, investors believed a long-run rebuild of telecom infrastructure was imminent. Worldcom executives claimed that internet traffic doubled every 100 days, or roughly every 3.3 months. The thinking at that time was that the whole internet would run on Cisco routers at 50% gross margins.

Cisco’s valuation at the peak of the “Dot.com” mania was 33x sales. CSCO investors lost 85% of their money by the time the stock price troughed in October 2002. Over the next 16 years, as investors waited to break even, the company grew revenues by 172% and earnings per share by a staggering 681%. Over the last 24 years, CSCO buy-and-hold investors earned only 0.67% per year!
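The arithmetic behind those Cisco figures can be checked with a short sketch. The Python below is illustrative only: the function names are hypothetical, and the only inputs are the 85% drawdown and the 0.67% annual return quoted above.

```python
def gain_to_break_even(loss_fraction: float) -> float:
    """Fractional gain needed to get back to even after a fractional loss."""
    remaining = 1.0 - loss_fraction
    return 1.0 / remaining - 1.0


def cumulative_return(annual_rate: float, years: int) -> float:
    """Total fractional return from compounding annual_rate for `years` years."""
    return (1.0 + annual_rate) ** years - 1.0


if __name__ == "__main__":
    # An 85% loss leaves 15 cents on the dollar.
    print(f"Gain needed after an 85% loss: {gain_to_break_even(0.85):.0%}")
    # 0.67% per year, compounded over 24 years.
    print(f"Total return at 0.67%/yr for 24 years: {cumulative_return(0.0067, 24):.1%}")
```

With these inputs, an 85% loss requires a gain of roughly 567% just to break even, and 0.67% per year compounds to a total gain of only about 17% over 24 years.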

2. Microsoft is now a cloud computing/data-center company, more utility than innovator. Microsoft invested $13 billion in OpenAI for just under 50% of the company to help develop and roll out ChatGPT. But much of that was funny money: investment not in cash but in credits for Microsoft’s Azure data centers. Microsoft leveraged those investments into supercharging its own search engine, Bing, with generative AI, now called “Copilot.” Microsoft spends a tremendous amount of money on Nvidia H100 processors to speed up its AI calculations. It has also designed its own AI chips.

3. Amazon masquerades as an online retailer, but is actually the world’s largest cloud computing/data-center company. The company offers several generative AI products and services which include:

- Amazon CodeWhisperer, an AI-powered coding companion.

- Amazon Bedrock, a fully managed service that makes foundation models (FMs) from AI21 Labs, Anthropic, and Stability AI, along with Amazon’s own family of FMs, Amazon Titan, accessible via an API.

- A generative AI tool for sellers to help them generate copy for product titles and listings.

- Generative AI capabilities that simplify how Amazon sellers create more thorough and captivating product descriptions, titles, and listing details.

Amazon CEO Andy Jassy recently said the company’s generative AI services have the potential to generate tens of billions of dollars over the next few years. CFO Brian Olsavsky told analysts that interest in Amazon Web Services’ (AWS) generative AI products, such as Amazon Q, an AI chatbot for businesses, had accelerated during the quarter. In September 2023, Amazon said it plans to invest up to $4 billion in startup chatbot-maker Anthropic to take on its AI-based cloud rivals (i.e., Microsoft and Google). Its security teams are currently using generative AI to increase productivity.

4. Google, with 190,000 employees, controls 90% of search. Google’s recent launch of its new Gemini AI tools was a disaster, producing images of the U.S. Founding Fathers and Nazi soldiers as people of color. When asked if Elon Musk or Adolf Hitler had a more negative effect on society, Gemini responded that it was “difficult to say.” Google pulled the product over “inaccuracies.” Yet Google is still promoting its AI product: “Gemini, a multimodal model from Google DeepMind, is capable of understanding virtually any input, combining different types of information, and generating almost any output.”

5. Facebook/Meta controls social media but has lost $42 billion investing in the still-nascent metaverse. Meta is rolling out three AI features for advertisers: background generation, image cropping and copy variation. Meta also unveiled a generative AI system called Make-A-Scene that allows artists to create scenes from text prompts. Meta’s CTO Andrew Bosworth said the company aims to use generative AI to help companies reach different audiences with tailored ads.

Conclusions:

Voracious demand has outpaced production and spurred competitors to develop rival chips. The ability to secure GPUs governs how quickly companies can develop new artificial-intelligence systems. Tech CEOs are under pressure to invest in AI, or risk investors thinking their company is falling behind the competition.

As we noted in a recent IEEE Techblog post, researchers in South Korea have developed the world’s first AI semiconductor chip that operates at ultra-high speeds with minimal power consumption for processing large language models (LLMs), based on principles that mimic the structure and function of the human brain. The research team was from the Korea Advanced Institute of Science and Technology.

While it’s impossible to predict how fast additional fabrication capacity will come online, there certainly will be many more AI chips from cloud giants and merchant semiconductor companies like AMD and Intel. The fat profit margins Nvidia now enjoys will surely attract many competitors.

……………………………………………………………………………………………………………………………

References:

https://www.zdnet.com/article/how-to-use-the-new-bing-and-how-its-different-from-chatgpt/

https://cloud.google.com/ai/generative-ai

https://aws.amazon.com/what-is/generative-ai/

https://www.wsj.com/articles/amazon-is-going-super-aggressive-on-generative-ai-7681587f

Curmudgeon: 2024 AI Fueled Stock Market Bubble vs 1999 Internet Mania? (03/11)

Korea’s KAIST develops next-gen ultra-low power Gen AI LLM accelerator

Telco and IT vendors pursue AI integrated cloud native solutions, while Nokia sells point products

MTN Consulting: Generative AI hype grips telecom industry; telco CAPEX decreases while vendor revenue plummets

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Global Telco AI Alliance to progress generative AI for telcos

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Impact of Generative AI on Jobs and Workers

“SK Wonderland at CES 2024;” SK Group Chairman: AI-led revolution poses challenges to companies

On Tuesday at CES 2024, SK Group [1.] displayed world-leading Artificial Intelligence (AI) and carbon reduction technologies under an amusement park concept called “SK Wonderland.” It provided CES attendees a view of a world that uses the latest AI and clean technologies from SK companies and their business partners to create a smarter, greener world. Highlights of the booth included:

- Magic Carpet Ride in a flying vehicle embedded with an AI processor that helps it navigate dense, urban areas – reducing pollution, congestion and commuting frustrations

- AI Fortune Teller powered by next-generation memory technologies that can help computers analyze and learn from massive amounts of data to predict the future

- Dancing Car that’s fully electric, able to recharge in 20 minutes or less and built to travel hundreds of miles between charges

- Clean Energy Train that’s capable of being powered by hydrogen, whose only emission is water

- Rainbow Tube that shows how plastics are finding a new life through a technology that turns waste into fuel

Note 1. SK Group is South Korea’s second-largest conglomerate, with Samsung at number one.

SK’s CES 2024 displays include participation from seven SK companies — SK Inc., SK Innovation, SK Hynix, SK Telecom, SK E&S, SK Ecoplant and SKC. While the displays are futuristic, they’re based on technologies that SK companies and their global partners have already developed and are bringing to market.

SK Group Chairman Chey Tae-won said that companies are facing challenges in navigating the transformative era led by artificial intelligence (AI) due to its unpredictable impact and speed. He said AI technology and devices with AI are the talk of the town at this year’s annual trade show and companies are showcasing their AI innovations achieved through early investment.

“We are on the starting line of the new era, and no one can predict the impact and speed of the AI revolution across the industries,” Chey told Korean reporters after touring corporate booths on the opening day of CES 2024 at the Las Vegas Convention Center in Las Vegas. Reflecting on the rapid evolution of AI technologies, he highlighted the breakthrough made by ChatGPT, a language model launched about a year ago, which has significantly influenced how AI is perceived and utilized globally. “Until ChatGPT, no one has thought of how AI would change the world. ChatGPT made a breakthrough, and everybody is trying to ride on the wave.”

SK Group Chairman Chey Tae-won speaks during a brief meeting with Korean media on the sidelines of CES 2024 at the Las Vegas Convention Center in Las Vegas on Jan. 9, 2024

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

SK Hynix Inc., SK Group’s chipmaking unit, is one of the prominent companies at CES 2024, showcasing high-performance memory chips for AI such as high bandwidth memory (HBM). The latest addition is HBM3E, recognized as the world’s best-performing memory product. Mass production of HBM3E is scheduled to begin in the first half of 2024.

SK Telecom Co. is also working on AI, with Sapeon, an AI chip startup, under its wing. Chey stressed the importance of integrating AI services and solutions across SK Group’s diverse business sectors, ranging from energy to telecommunications and semiconductors. “It’s crucial for each company to collaborate and present a unified package or solution rather than developing them separately,” Chey said. “But I don’t think it is necessary to set up a new unit for that. I think we should come up with an integrated channel for customers.”

SK Telecom and Deutsche Telekom are jointly developing Large Language Models for generative AI to be used by telecom network providers.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………

References:

https://en.yna.co.kr/view/AEN20240110001900320#

https://eng.sk.com/news/ces-2024-sk-to-showcase-world-class-carbon-reduction-and-ai-technologies

SK Telecom inspects cell towers for safety using drones and AI

SK Telecom and Deutsche Telekom to Jointly Develop Telco-specific Large Language Models (LLMs)

SK Telecom and Thales Trial Post-quantum Cryptography to Enhance Users’ Protection on 5G SA Network

Google announces Gemini: its most powerful AI model, powered by TPU chips

Google claims it has developed a new Generative Artificial Intelligence (GenAI) system and Large Language Model (LLM) more powerful than any currently on the market, including technology developed by ChatGPT creator OpenAI. Gemini can summarize text, create images and answer questions. Gemini was trained on Google’s Tensor Processing Units v4 and v5e.

Google’s Bard is a generative AI based on the PaLM large language model. Starting today, Gemini will be used to give Bard “more advanced reasoning, planning, understanding and more,” according to a Google blog post.

While global users of Google Bard and the Pixel 8 Pro will be able to run Gemini now, an enterprise product, Gemini Pro, is coming on Dec. 13th. Developers can sign up now for an early preview in Android AICore.

Gemini comes in three model sizes: Ultra, Pro and Nano. Ultra is the most capable, Nano is the smallest and most efficient, and Pro sits in the middle for general tasks. The Nano version is what Google is using on the Pixel, while Bard gets Pro. Google says it plans to run “extensive trust and safety checks” before releasing Gemini Ultra to select groups.

Gemini can code in Python, Java, C++, Go and other popular programming languages. Google used Gemini to upgrade Google’s AI-powered code generation system, AlphaCode. Next, Google plans to bring Gemini to Ads, Chrome and Duet AI. In the future, Gemini will be used in Google Search as well.

……………………………………………………………………………………………………………………………………………………

Market Impact:

Gemini’s release and use will present a litmus test for Google’s technology following a push to move faster in developing and releasing AI products. It coincides with a period of turmoil at OpenAI that has sent tremors through the tight-knit AI community, suggesting the industry’s leadership is far from settled.

The announcement of the new GenAI software is the latest attempt by Google to display its AI portfolio after the launch of ChatGPT about a year ago shook up the tech industry. Google wanted outside customers to perform testing on the most advanced version of Gemini before releasing it more widely, said Demis Hassabis, chief executive officer of Google DeepMind.

“We’ve been pushing forward with a lot of focus and intensity,” Hassabis said, adding that Gemini likely represented the company’s most ambitious combined science and engineering project to date.

Google said Wednesday it would offer a range of AI programs to customers under the Gemini umbrella. It touted the software’s ability to process various media, from audio to video, an important development as users turn to chatbots for a wider range of needs.

The most powerful Gemini Ultra version outperformed OpenAI’s technology, GPT-4, on a range of industry benchmarks, according to Google. That version is expected to become widely available for software developers early next year following testing with a select group of customers.

………………………………………………………………………………………………………………………………………………………………………………………………

Role of TPUs:

While most GenAI software and LLMs run on NVIDIA’s GPUs, Google’s tensor processing units (TPUs) will power Gemini. TPUs are custom-designed AI accelerators optimized for training and inference of large AI models. Cloud TPUs are well suited to training large, complex deep learning models that feature many matrix calculations, such as large language models (LLMs). Cloud TPUs also have SparseCores, which are dataflow processors that accelerate models relying on embeddings found in recommendation models. Other use cases include healthcare, like protein folding modeling and drug discovery.

Google’s custom AI chips, known as tensor processing units, are embedded in compute servers at the company’s data center. Photo Credit: GOOGLE

…………………………………………………………………………………………………………………………………

Competitors:

Gemini and the products built with it, such as chatbots, will compete with OpenAI’s GPT-4, Microsoft’s Copilot (which is based on OpenAI’s GPT-4), Anthropic’s Claude AI, Meta’s Llama 2 and more. Google claims Gemini Ultra outperforms GPT-4 in several benchmarks, including the massive multitask language understanding general knowledge test and in Python code generation.

…………………………………………………………………………………………………………………………………

References:

Everything to know about Gemini, Google’s new AI model (blog.google)

Google Reveals Gemini, Its Much-Anticipated Large Language Model (techrepublic.com)

MTN Consulting: Generative AI hype grips telecom industry; telco CAPEX decreases while vendor revenue plummets

Ever since Generative (Gen) AI burst into the mainstream through public-facing platforms (e.g. ChatGPT) late last year, its promising capabilities have caught the attention of many. Not surprisingly, telecom industry execs are among the curious observers wanting to try Gen AI even as it continues to evolve at a rapid pace.

MTN Consulting says the telecom industry’s bond with AI is not new though. Many telcos have deployed conventional AI tools and applications in the past several years, but Gen AI presents opportunities for telcos to deliver significant incremental value over existing AI. A few large telcos have kickstarted their quest for Gen AI by focusing on “localization.” Through localization of processes using Gen AI, telcos vow to eliminate language barriers and improve customer engagement in their respective operating markets, especially where English as a spoken language is not dominant.

Telcos can harness the power of Gen AI across a wide range of different functions, but the two vital telco domains likely to witness transformative potential of Gen AI are networks and customer service. Both these domains are crucial: network demands are rising at an unprecedented pace with increased complexity, and delivering differentiated customer experiences remains an unrealized ambition for telcos.

Several Gen AI use cases are emerging within these two telco domains to address these challenges. In the network domain, these include topology optimization, network capacity planning, and predictive maintenance, for example. In the customer support domain, they include localized virtual assistants, personalized support, and contact center documentation.

Most of the use cases leveraging Gen AI applications involve dealing with sensitive data, be it network-related or customer-related. This will have major implications from the regulatory point of view, and regulatory concerns will constrain telcos’ Gen AI adoption and deployment strategies. The big challenge is the mosaic of complex and strict regulations prevalent in different markets that telcos will have to understand and adhere to when implementing Gen AI use cases in such markets. This is an area where third-party vendors will try to cash in by offering Gen AI solutions that are compliant with regulations in the respective markets.

Vendors will also play a key role for small- and medium-sized telcos in Gen AI implementation, by easing constraints such as a lack of technical expertise, HW/SW resources, and skilled manpower, along with the opex burden. Key vendors to watch in the Gen AI space are webscale providers, which combine the cloud computing resources required to train large language models (LLMs) with Gen AI expertise offered through pre-trained models.

Other key points from MTN Consulting on Gen AI in the telecom industry:

- Network operations and customer support will be key transformative areas.

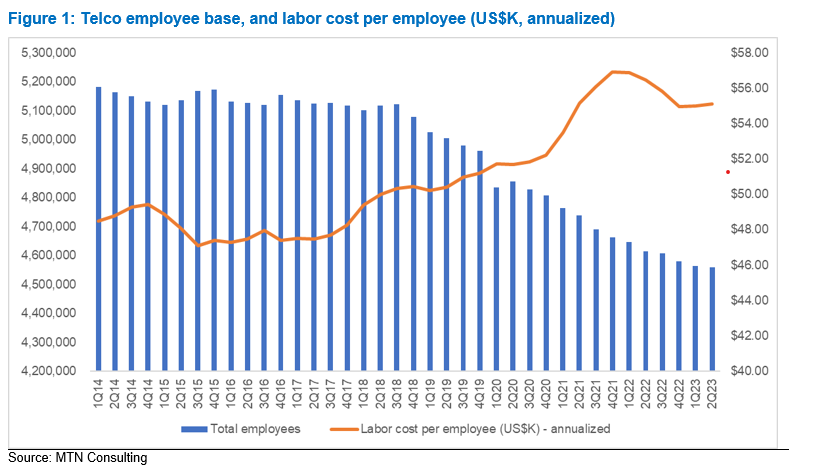

- Telco workforce will become leaner but smarter in the Gen AI era.

- Strict regulations will be a major barrier for telcos.

- Vendors key to Gen AI integration; webscale providers set for more telco gains.

- Lock-in risks and rising software costs are key considerations in choosing vendors.

………………………………………………………………………………………………………………………………

Separately, MTN Consulting’s latest forecast called for $320B of telco capex in 2023, down only slightly from the $328B recorded in 2022. Early 3Q23 revenue reports from vendors selling into the telco market call this forecast into question. The dip in the Americas is worse than expected, and Asia’s expected 2023 growth has not materialized.

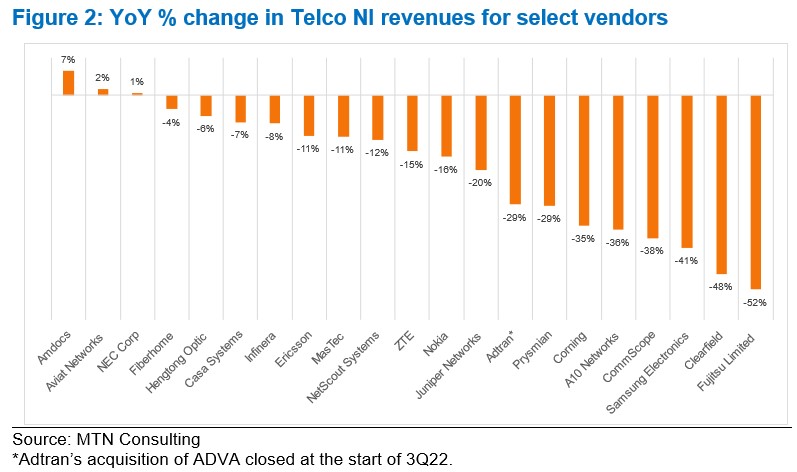

Key vendors are reporting significant YoY drops in revenue, pointing to inventory corrections, macroeconomic uncertainty (interest rates, in particular), and weaker telco spending. Network infrastructure sales to telcos (Telco NI) for key vendors Ericsson and Nokia dropped 11% and 16% YoY in 3Q23, respectively, measured in US dollars. By the same metric, NEC, Fujitsu and Samsung saw YoY changes of +1%, -52%, and -41%; Adtran, Casa, and Juniper declined 29%, 7%, and 20%; fiber-centric vendors Clearfield, Corning, CommScope, and Prysmian all saw double-digit declines.

MTN Consulting will update its operator forecast formally next month. In advance, this comment flags a weaker spending outlook than expected. Telco capex for 2023 is likely to come in around $300-$310B.
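To put the revised outlook in percentage terms, here is a minimal sketch of the year-over-year arithmetic. The helper name `pct_change` is hypothetical; the inputs are the capex figures quoted above ($328B recorded in 2022, the $320B forecast, and the $300-$310B revised range).

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (negative means a decline)."""
    return (new - old) / old * 100.0


CAPEX_2022_B = 328.0          # recorded 2022 telco capex, $B
FORECAST_2023_B = 320.0       # earlier 2023 forecast, $B
REVISED_2023_RANGE_B = (300.0, 310.0)  # likely 2023 outcome, $B

if __name__ == "__main__":
    print(f"Earlier forecast: {pct_change(CAPEX_2022_B, FORECAST_2023_B):+.1f}%")
    for value in REVISED_2023_RANGE_B:
        print(f"Revised ${value:.0f}B: {pct_change(CAPEX_2022_B, value):+.1f}%")
```

Under these inputs, the earlier $320B forecast implies a decline of about 2.4% from 2022, while the $300-$310B range implies a decline of roughly 5.5% to 8.5%.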

MTN Consulting’s Network Operator Forecast Through 2027: “Telecom is essentially a zero-growth industry”

MTN Consulting: Top Telco Network Infrastructure (equipment) vendors + revenue growth changes favor cloud service providers

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Global Telco AI Alliance to progress generative AI for telcos

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

MTN Consulting: Satellite network operators to focus on Direct-to-device (D2D), Internet of Things (IoT), and cloud-based services

MTN Consulting on Telco Network Infrastructure: Cisco, Samsung, and ZTE benefit (but only slightly)

MTN Consulting: 4Q2021 review of Telco & Webscale Network Operators Capex

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Introduction:

Many generative AI tools rely on a type of natural-language processing called large language models (LLMs) to first learn and then make inferences about languages and linguistic structures (like code or legal-case prediction) used throughout the world. Some companies that use LLMs include: Anthropic (now collaborating with Amazon), Microsoft, OpenAI, Google, Amazon/AWS, Meta (FB), SAP, IQVIA. Here are some examples of LLMs: Google’s BERT, Amazon’s Bedrock, Falcon 40B, Meta’s Galactica, OpenAI’s GPT-3 and GPT-4, Google’s LaMDA, Hugging Face’s BLOOM, and Nvidia’s NeMo LLM.

The training process of the Large Language Models (LLMs) used in generative artificial intelligence (AI) is a cause for concern. LLMs can consume many terabytes of data and use over 1,000 megawatt-hours of electricity.

Alex de Vries, a Ph.D. candidate at VU Amsterdam and founder of the digital-sustainability blog Digiconomist, published a report in Joule predicting that current AI technology could be on track to annually consume as much electricity as the entire country of Ireland (29.3 terawatt-hours per year).

“As an already massive cloud market keeps on growing, the year-on-year growth rate almost inevitably declines,” John Dinsdale, chief analyst and managing director at Synergy, told CRN via email. “But we are now starting to see a stabilization of growth rates, as cloud provider investments in generative AI technology help to further boost enterprise spending on cloud services.”

Hardware vs Algorithmic Solutions to Reduce Energy Consumption:

Roberto Verdecchia is an assistant professor at the University of Florence and the first author of a paper published on developing green AI solutions. He says that de Vries’s predictions may even be conservative when it comes to the true cost of AI, especially when considering the non-standardized regulation surrounding this technology. AI’s energy problem has historically been approached through optimizing hardware, says Verdecchia. However, continuing to make microelectronics smaller and more efficient is becoming “physically impossible,” he added.

In his paper, published in the journal WIREs Data Mining and Knowledge Discovery, Verdecchia and colleagues highlight several algorithmic approaches that experts are taking instead. These include improving data-collection and processing techniques, choosing more-efficient libraries, and improving the efficiency of training algorithms. “The solutions report impressive energy savings, often at a negligible or even null deterioration of the AI algorithms’ precision,” Verdecchia says.

……………………………………………………………………………………………………………………………………………………………………………………………………………………

Another Solution – Data Centers Powered by Alternative Energy Sources:

The immense amount of energy needed to power these LLMs, like the one behind ChatGPT, is creating a new market for data centers that run on alternative energy sources like geothermal, nuclear and flared gas, a byproduct of oil production. Supply of electricity, which currently powers the vast majority of data centers, is already strained from existing demands on the country’s electric grids. AI could consume up to 3.5% of the world’s electricity by 2030, according to an estimate from IT research and consulting firm Gartner.

Amazon, Microsoft, and Google were among the first to explore wind and solar-powered data centers for their cloud businesses, and are now among the companies exploring new ways to power the next wave of AI-related computing. But experts warn that given their high risk, cost, and difficulty scaling, many nontraditional sources aren’t capable of solving near-term power shortages.

Exafunction, maker of the Codeium generative AI-based coding assistant, sought out energy startup Crusoe Energy Systems for training its large-language models because it offered better prices and availability of graphics processing units, the advanced AI chips primarily produced by Nvidia, said the startup’s chief executive, Varun Mohan.

AI startups are typically looking for five to 25 megawatts of data center power, or as much as they can get in the near term, according to Pat Lynch, executive managing director for commercial real-estate services firm CBRE’s data center business. Crusoe will have about 200 megawatts by year’s end, said Chase Lochmiller, Crusoe’s chief executive. Training one AI model like OpenAI’s GPT-3 can use up to 10 gigawatt-hours, roughly equivalent to the amount of electricity 1,000 U.S. homes use in a year, University of Washington research estimates.
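The unit conversions behind these energy comparisons are easy to lose track of, so here is a rough sketch. The function names are hypothetical; the inputs are the figures quoted in this post (up to 10 gigawatt-hours per training run, 1,000 U.S. homes, and the 29.3 terawatt-hours per year cited earlier for Ireland).

```python
KWH_PER_GWH = 1_000_000        # 1 GWh = 1,000,000 kWh
KWH_PER_TWH = 1_000_000_000    # 1 TWh = 1,000,000,000 kWh


def kwh_per_home(training_gwh: float, homes: int) -> float:
    """Annual kWh per home implied by equating one training run to `homes` homes."""
    return training_gwh * KWH_PER_GWH / homes


def training_runs_equivalent(annual_twh: float, training_gwh: float) -> float:
    """How many training runs of `training_gwh` fit into `annual_twh` of consumption."""
    return (annual_twh * KWH_PER_TWH) / (training_gwh * KWH_PER_GWH)


if __name__ == "__main__":
    print(f"Implied use per home: {kwh_per_home(10, 1000):,.0f} kWh/year")
    print(f"Ireland-scale consumption in training runs: "
          f"{training_runs_equivalent(29.3, 10):,.0f}")
```

With these inputs, the comparison implies about 10,000 kWh per home per year, and Ireland's annual consumption works out to roughly 2,930 training runs at 10 gigawatt-hours each.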

Major cloud providers capable of providing multiple gigawatts of power are also continuing to invest in renewable and alternative energy sources to power their data centers, and use less water to cool them down. By some estimates, data centers account for 1% to 3% of global electricity use.

An Amazon Web Services spokesperson said the scale of its massive data centers means it can make better use of resources and be more efficient than smaller, privately operated data centers. Amazon says it has been the world’s largest corporate buyer of renewable energy for the past three years.

Jen Bennett, a Google Cloud leader in technology strategy for sustainability, said the cloud giant is exploring “advanced nuclear” energy and has partnered with Fervo Energy, a startup beginning to offer geothermal power for Google’s Nevada data center. Geothermal, which taps heat under the earth’s surface, is available around the clock and not dependent on weather, but comes with high risk and cost.

“Similar to what we did in the early days of wind and solar, where we did these large power purchase agreements to guarantee the tenure and to drive costs down, we think we can do the same with some of the newer energy sources,” Bennett said.

References:

https://aws.amazon.com/what-is/large-language-model/

https://spectrum.ieee.org/ai-energy-consumption

https://www.crn.com/news/cloud/microsoft-aws-google-cloud-market-share-q3-2023-results/6

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

SK Telecom and Deutsche Telekom to Jointly Develop Telco-specific Large Language Models (LLMs)

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

Amdocs and NVIDIA today announced they are collaborating to optimize large language models (LLMs) to speed adoption of generative AI applications and services across the $1.7 trillion telecommunications and media industries.(1)

Amdocs and NVIDIA will customize enterprise-grade LLMs running on NVIDIA accelerated computing as part of the Amdocs amAIz framework. The collaboration will empower communications service providers to efficiently deploy generative AI use cases across their businesses, from customer experiences to network provisioning.

Amdocs will use NVIDIA DGX Cloud AI supercomputing and NVIDIA AI Enterprise software to support flexible adoption strategies and help ensure service providers can simply and safely use generative AI applications.

Aligned with the Amdocs strategy of advancing generative AI use cases across the industry, the collaboration with NVIDIA builds on the previously announced Amdocs-Microsoft partnership. Service providers and media companies can adopt these applications in secure and trusted environments, including on premises and in the cloud.

With these new capabilities — including the NVIDIA NeMo framework for custom LLM development and guardrail features — service providers can benefit from enhanced performance, optimized resource utilization and flexible scalability to support emerging and future needs.

“NVIDIA and Amdocs are partnering to bring a unique platform and unmatched value proposition to customers,” said Shuky Sheffer, Amdocs Management Limited president and CEO. “By combining NVIDIA’s cutting-edge AI infrastructure, software and ecosystem and Amdocs’ industry-first amAIz AI framework, we believe that we have an unmatched offering that is both future-ready and value-additive for our customers.”

“Across a broad range of industries, enterprises are looking for the fastest, safest path to apply generative AI to boost productivity,” said Jensen Huang, founder and CEO of NVIDIA. “Our collaboration with Amdocs will help telco service providers automate personalized assistants, service ticket routing and other use cases for their billions of customers, and help the telcos analyze and optimize their operations.”

Amdocs counts more than 350 of the world’s leading telecom and media companies as customers, including 27 of the world’s top 30 service providers.(2) With more than 1.7 billion daily digital journeys, Amdocs platforms impact more than 3 billion people around the world.

NVIDIA and Amdocs are exploring a number of generative AI use cases to simplify and improve operations by providing secure, cost-effective and high-performance generative AI capabilities.

Initial use cases span customer care, including accelerating customer inquiry resolution by drawing information from across company data. On the network operations side, the companies are exploring how to proactively generate solutions that aid configuration, coverage or performance issues as they arise.

(1) Source: IDC, OMDIA, Factset analyses of Telecom 2022-2023 revenue.

(2) Source: OMDIA 2022 revenue estimates, excludes China.

Editor’s Note:

- Language models: These models, like OpenAI’s GPT-3, generate human-like text. The most popular language-based generative models are large language models (LLMs).

- Large language models are being leveraged for a wide variety of tasks, including essay generation, code development, translation, and even understanding genetic sequences.

- Generative adversarial networks (GANs): These models use two neural networks, a generator, and a discriminator.

- Unimodal models: These models only accept one data input format.

- Multimodal models: These models accept multiple types of inputs and prompts. For example, GPT-4 can accept both text and images as inputs.

- Variational autoencoders (VAEs): These deep learning architectures are frequently used to build generative AI models.

- Foundation models: These models generate output from one or more inputs (prompts) in the form of human language instructions.
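To make the GAN entry above more concrete, here is a minimal sketch of the two competing objectives, assuming the standard binary cross-entropy formulation and the common non-saturating generator loss. The function names and the sample discriminator outputs are hypothetical and are not taken from the NVIDIA glossary. No networks are trained here; the code only shows how each side is scored.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: the discriminator wants d_real near 1 and d_fake near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating loss: the generator wants the discriminator to score fakes near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that a sample is real).
real_scores = [0.9, 0.8]
weak_fakes = [0.1, 0.2]    # fakes the discriminator easily rejects
strong_fakes = [0.7, 0.6]  # fakes that mostly fool the discriminator

loss_d_when_confident = discriminator_loss(real_scores, weak_fakes)
loss_d_when_fooled = discriminator_loss(real_scores, strong_fakes)
loss_g_weak = generator_loss(weak_fakes)
loss_g_strong = generator_loss(strong_fakes)
```

The discriminator's loss rises as the fakes become more convincing, while the generator's loss falls, which is the adversarial tension the two networks are trained against.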

https://www.nvidia.com/en-us/glossary/data-science/generative-ai/

https://blogs.nvidia.com/blog/2023/01/26/what-are-large-language-models-used-for/

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Global Telco AI Alliance to progress generative AI for telcos

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

SK Telecom and Deutsche Telekom to Jointly Develop Telco-specific Large Language Models (LLMs)

SK Telecom and Deutsche Telekom announced that they signed a Letter of Intent (LOI) to jointly develop a telco-specific large language model (LLM) that will enable global telecommunication companies (telcos) to develop generative AI models easily and quickly. The LOI signing ceremony took place at the SK Seorin Building in Seoul and was attended by key executives from both companies, including Ryu Young-sang, CEO of SKT; Chung Suk-geun, Chief AI Global Officer of SKT; Tim Höttges, CEO of Deutsche Telekom; Claudia Nemat, Board Member Technology and Innovation of Deutsche Telekom; and Jonathan Abrahamson, Chief Product and Digital Officer of Deutsche Telekom.


This marks the first fruition of discussions held by the Global Telco AI Alliance, which was launched by SKT, Deutsche Telekom, e&, and Singtel in July 2023, and lays the foundation to enter the global market. SKT and Deutsche Telekom plan to collaborate with AI companies such as Anthropic (Claude 2) and Meta (Llama 2) to co-develop a multilingual (e.g., German, English, and Korean) large language model (LLM) tailored to the needs of telcos. They plan to unveil the first version of the telco-specific LLM in the first quarter of 2024.

The telco-specific LLM will have a better understanding of telecommunication service-related areas and customers’ intentions than general LLMs, making it suitable for customer services such as AI contact centers. The goal is to help telcos across the world, including in Europe, Asia, and the Middle East, develop generative AI services such as AI agents flexibly according to their respective environments. That will enable telcos to save both time and cost in developing large platforms, and to secure new business opportunities and growth engines through AI innovation that shifts the paradigm in the traditional telecommunications industry. To this end, SKT and Deutsche Telekom plan to jointly develop AI platform technologies that telcos can use to create generative AI services while reducing both development time and cost.

For instance, when a telco tries to build an AI contact center based on generative AI, it will be able to build one that suits its environment more quickly and flexibly. In addition, AI can be applied to other areas such as network monitoring and on-site operations to increase efficiency, resulting in cost savings in the mid- to long-term.

Through this collaboration, the two companies will proactively respond to the recent surge in AI demand from telcos, while also promoting the expansion of the global AI ecosystem through the successful introduction of generative AI optimized for specific industries or domains.

“AI shows impressive potential to significantly enhance human problem-solving capabilities. To maximize its use especially in customer service, we need to adapt existing large language models and train them with our unique data. This will elevate our generative AI tools,” says Claudia Nemat, Member of the Board of Management for Technology and Innovation at Deutsche Telekom.

“Through our partnership with Deutsche Telekom, we have secured a strong opportunity and momentum to gain global AI leadership and drive new growth,” said Ryu Young-sang, CEO of SKT. “By combining the strengths and capabilities of the two companies in AI technology, platform and infrastructure, we expect to empower enterprises in many different industries to deliver new and higher value to their customers.”

References:

Global Telco AI Alliance to progress generative AI for telcos

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Google Cloud infrastructure enhancements: AI accelerator, cross-cloud network and distributed cloud

Cloud infrastructure services market grows; AI will be a major driver of future cloud service provider investments

TPG, Ericsson launch AI-powered analytics, troubleshooting service for 4G/5G Mobile, FWA, and IoT subscribers

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Everyone agrees that Generative AI has great promise and potential. Martin Casado of Andreessen Horowitz recently wrote in the Wall Street Journal that the technology has “finally become transformative:”

“Generative AI can bring real economic benefits to large industries with established and expensive workloads. Large language models could save costs by performing tasks such as summarizing discovery documents without replacing attorneys, to take one example. And there are plenty of similar jobs spread across fields like medicine, computer programming, design and entertainment….. This all means opportunity for the new class of generative AI startups to evolve along with users, while incumbents focus on applying the technology to their existing cash-cow business lines.”

A new investment wave caused by generative AI is starting to loom among cloud service providers, raising questions about whether Big Tech’s spending cutbacks and layoffs will prove to be short lived. Pressed to say when they would see a revenue lift from AI, the big U.S. cloud companies (Microsoft, Alphabet/Google, Meta/FB and Amazon) all referred to existing services that rely heavily on investments made in the past. These range from AWS’s machine learning services for cloud customers to AI-enhanced tools that Google and Meta offer to their advertising customers.

Microsoft offered only a cautious prediction of when AI would result in higher revenue. Amy Hood, chief financial officer, told investors during an earnings call last week that the revenue impact would be “gradual,” as the features are launched and start to catch on with customers. The caution failed to match high expectations ahead of the company’s earnings, wiping 7% off its stock price (ticker: MSFT) over the following week.

When it comes to the newer generative AI wave, predictions were few and far between. Amazon CEO Andy Jassy said on Thursday that the technology was in its “very early stages” and that the industry was only “a few steps into a marathon.” Although many customers of Amazon’s cloud arm, AWS, see the technology as transformative, Jassy noted that “most companies are still figuring out how they want to approach it, they are figuring out how to train models.” He insisted that every part of Amazon’s business was working on generative AI initiatives and the technology was “going to be at the heart of what we do.”

There are a number of large language models that power generative AI, and many of the AI companies that make them have forged partnerships with big cloud service providers. As business technology leaders make their picks among them, they are weighing the risks and benefits of using one cloud provider’s AI ecosystem. They say it is an important decision that could have long-term consequences, including how much they spend and whether they are willing to sink deeper into one cloud provider’s set of software, tools, and services.

To date, AI large language model makers like OpenAI, Anthropic, and Cohere have led the charge in developing proprietary large language models that companies are using to boost efficiency in areas like accounting and writing code, or adding to their own products with tools like custom chatbots. Partnerships between model makers and major cloud companies include OpenAI and Microsoft Azure, Anthropic and Cohere with Google Cloud, and the machine-learning startup Hugging Face with Amazon Web Services. Databricks, a data storage and management company, agreed to buy the generative AI startup MosaicML in June.

If a company chooses a single AI ecosystem, it could risk “vendor lock-in” within that provider’s platform and set of services, said Ram Chakravarti, chief technology officer of Houston-based BMC Software. This paradigm is a recurring one, where a business’s IT system, software and data all sit within one digital platform, and it could become more pronounced as companies look for help in using generative AI. Companies say the problem with vendor lock-in, especially among cloud providers, is that they have difficulty moving their data to other platforms, lose negotiating power with other vendors, and must rely on one provider to keep its services online and secure.

Cloud providers, partly in response to complaints of lock-in, now offer tools to help customers move data between their own and competitors’ platforms. Businesses have increasingly signed up with more than one cloud provider to reduce their reliance on any single vendor. Companies could end up taking the same strategy with generative AI: by using a “multiple generative AI approach,” they can avoid getting too entrenched in a particular platform. To be sure, many chief information officers have said they willingly accept such risks for the convenience, and potentially lower cost, of working with a single technology vendor or cloud provider.
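One way to picture a multi-provider approach in software is a thin abstraction layer that application code calls instead of any single vendor's client. The sketch below is illustrative only: `TextGenerator`, `ProviderA`, `ProviderB`, `FailingProvider` and `generate_with_fallback` are hypothetical names, and the provider classes are stand-ins that return canned strings without calling any real cloud API.

```python
from typing import Protocol, Sequence


class TextGenerator(Protocol):
    """Minimal interface the application depends on (hypothetical)."""

    def generate(self, prompt: str) -> str: ...


class ProviderA:
    """Stand-in for one vendor's hosted model; no real API is called."""

    def generate(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class ProviderB:
    """Stand-in for a second vendor's hosted model."""

    def generate(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


class FailingProvider:
    """Simulates a provider outage."""

    def generate(self, prompt: str) -> str:
        raise ConnectionError("provider unavailable")


def generate_with_fallback(providers: Sequence[TextGenerator], prompt: str) -> str:
    """Try each provider in order and return the first successful result."""
    errors = []
    for provider in providers:
        try:
            return provider.generate(prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(exc)
    raise RuntimeError(f"all providers failed: {errors}")


reply = generate_with_fallback([FailingProvider(), ProviderB()], "Summarize this ticket")
```

Because the application only depends on the small interface, swapping or adding a vendor means writing one more adapter class. The trade-off is that vendor-specific features outside the shared interface are harder to use.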

A significant challenge in incorporating generative AI is that the technology is changing so quickly, analysts have said, forcing CIOs to not only keep up with the pace of innovation, but also sift through potential data privacy and cybersecurity risks.

A company using its cloud provider’s premade tools and services, plus guardrails for protecting company data and reducing inaccurate outputs, can more quickly implement generative AI off-the-shelf, said Adnan Masood, chief AI architect at digital technology and IT services firm UST. “It has privacy, it has security, it has all the compliance elements in there. At that point, people don’t really have to worry so much about the logistics of things, but rather are focused on utilizing the model.”

For other companies, it is a conservative approach to use generative AI with a large cloud platform they already trust to hold sensitive company data, said Jon Turow, a partner at Madrona Venture Group. “It’s a very natural start to a conversation to say, ‘Hey, would you also like to apply AI inside my four walls?’”

End Quotes:

“Right now, the evidence is a little bit scarce about what the effect on revenue will be across the tech industry,” said James Tierney of Alliance Bernstein.

Brent Thill, an analyst at Jefferies, summed up the mood among investors: “The hype is here, the revenue is not. Behind the scenes, the whole industry is scrambling to figure out the business model [for generative AI]: how are we going to price it? How are we going to sell it?”

………………………………………………………………………………………………………………

References:

https://www.ft.com/content/56706c31-e760-44e1-a507-2c8175a170e8

https://www.wsj.com/articles/companies-weigh-growing-power-of-cloud-providers-amid-ai-boom-478c454a

https://www.techtarget.com/searchenterpriseai/definition/generative-AI?Offer=abt_pubpro_AI-Insider

Global Telco AI Alliance to progress generative AI for telcos

Curmudgeon/Sperandeo: Impact of Generative AI on Jobs and Workers

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Qualcomm CEO: AI will become pervasive, at the edge, and run on Snapdragon SoC devices

Global Telco AI Alliance to progress generative AI for telcos

- Four major global telcos joined forces to launch the Global Telco AI Alliance to accelerate AI transformation of the existing telco business and create new business opportunities with AI services.

- They signed a Multilateral MOU for cooperation in the AI business, which includes the co-development of the Telco AI Platform.

Deutsche Telekom, e&, Singtel and SK Telecom have established a new industry group that aims to progress generative AI. Called the Global Telco AI Alliance, it represents a coordinated effort by these four operators to accelerate the AI-fuelled transformation of their businesses, and to develop new, AI-powered business models.

The Telco AI Platform will serve as the foundation both for new services – like chatbots and apps – as well as enhancements to existing telco services. The alliance members plan to establish a working group whose task will be to hammer out co-investment opportunities and the co-development of said platform.

Members will also support one another in operating AI services and apps in their respective markets, and cooperate to foster the growth of a telco AI-based ecosystem.

As of today, all the operators have done is sign a memorandum of understanding (MoU), under which they pledge to carry out all this work. A signing ceremony took place in Seoul, Korea, and was attended – either in person or virtually – by the CEOs of e&, Singtel and SK Telecom, and Deutsche Telekom’s board member for technology and innovation, Claudia Nemat. The Global Telco AI Alliance will also have to ensure that any AI-based services they develop are capable of accounting for cultural differences. They won’t get very far if their virtual assistants make culturally insensitive recommendations, for example.

The seniority of these signatories represents a strong statement of intent though, and the group said it will discuss appointing C-level representatives from each member to the Alliance.

“In order to make the most of the possibilities of generative AI for our customers and our industry, we want to develop industry-specific applications in the Telco AI Alliance. I am particularly pleased that this alliance also stands for bridging the gap between Europe and Asia and that we are jointly pursuing an open-vendor approach. Depending on the application, we can use the best technology. The founding of this alliance is an important milestone for our industry,” said Claudia Nemat, Board Member Technology and Innovation at Deutsche Telekom.

“We recognize AI’s immense potential in reshaping the telecommunications landscape and beyond and are excited to embark on this transformative journey with the formation of the Global Telco AI Alliance. The alliance signifies a strategic commitment to driving innovation and fostering collaborative efforts. Our shared goal is to redefine industry paradigms, establish new growth drivers through AI-powered business models, and pave the way for a new era of strategic cooperation, guiding our industry towards an exciting and prosperous future,” said Khalifa Al Shamsi, CEO of e& life.

“This alliance will enable us and our ecosystem of partners to significantly expedite the development of new and innovative AI services that can bring tremendous benefits to both businesses and consumers. With our advanced 5G network, we are well-placed to leverage AI to ideate and co-create and are already using it to enhance our own customer service and employee experience, increase productivity and drive learning,” said Yuen Kuan Moon, Group Chief Executive Officer of Singtel.

It is not clear at this stage of proceedings whether the operators plan to develop their own in-house AI assets, or license them from the likes of OpenAI’s ChatGPT, or Google Bard. On the one hand, going with a third party that has done most of the legwork offers efficiencies, but on the other hand, the Global Telco AI Alliance might prefer an AI that specialises in telecoms, rather than a generalist.

Japanese vendor NEC showed earlier this month – with the launch of its own large language model (LLM) for enterprises in its home market – that generative AI isn’t necessarily the preserve of Silicon Valley big tech. It also highlighted the desire to develop localised AI for different languages.

The announcement also doesn’t attempt to grapple with any potential ethical pitfalls that might befall the Alliance. While it’s a fairly safe bet that responsible AI development will be an important consideration, it’s always better when companies make that clear.

Even big tech has come round to that way of thinking, with the launch earlier this week of the Frontier Model Forum. Established by Google, Microsoft, OpenAI and self-styled ethical AI company Anthropic, the group aims to advance the development of responsible artificial intelligence for the benefit of humanity.

References:

https://telecoms.com/522891/telcos-team-up-for-ai-platform-project/

https://telecoms.com/522865/google-microsoft-anthropic-and-openai-launch-ai-safety-body/

https://telecoms.com/522603/nec-launches-its-own-generative-ai/

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Uncertainty around generative artificial intelligence (AI) is especially challenging for the telecommunications industry, which has a history of adapting very slowly to change and therefore faces heavy pressure to adopt generative AI in its services and infrastructure. Indeed, Deutsche Telekom stated that AI poses massive challenges for the telecom industry in this IEEE Techblog post.

Consulting firm Bain & Co. highlighted that inertia in a recent report titled “Telcos, Stop Debating Generative AI and Just Get Going.” Three partners stated that network operators need to act fast in order to seize this opportunity. “Speedy action trumps perfect planning here,” Herbert Blum, Jeff Katzin and Velu Sinha wrote in the brief. “It’s more important for telcos to quickly launch an initial set of generative AI applications that fit the company’s strategy, and do so in a responsible way – or risk missing a window of opportunity in this fast-evolving sector.”

[Figure: Generative AI use cases can be divided into phases based on ease of implementation, inherent risk, and value.]

[Figure: Telcos can pursue generative AI applications across business functions, starting with knowledge management.]

Separately, a McKinsey & Co. report argued that AI has brought business leaders’ priorities into focus. The consulting firm pointed to organizations whose top executives champion AI initiatives and commit to funding them. This contrasts with organizations that lack a clear directive on their AI plans, which results in wasted spending and stalled development. “Reaching this state of AI maturity is no easy task, but it is certainly within the reach of telcos,” the firm noted. “Indeed, with all the pressures they face, embracing large-scale deployment of AI and transitioning to being AI-native organizations could be key to driving growth and renewal. Telcos that are starting to recognize this is non-negotiable are scaling AI investments as the business impact generated by the technology materializes.”

………………………………………………………………………………………………………………

Ishwar Parulkar, chief technologist for the telco industry at AWS, touted several areas that should be of generative AI interest to telecom operators. The first few were common ones tied to improving the customer experience. This includes building on machine learning (ML) to help improve that interaction and potentially reduce customer churn.

“We have worked with some leading customers and implemented this in production where they can take customer voice calls, translate that to text, do sentiment analysis on it … and then feed that into reducing customer churn,” Parulkar said. “That goes up another notch with generative AI, where you can have chat bots and more interactive types of interfaces for customers as well as for customer care agent systems in a call. So that just goes up another notch of generative AI.”
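The flow Parulkar describes (call audio, then text, then sentiment, then a churn signal) can be sketched roughly as follows. This is a toy illustration, not the AWS implementation: it assumes the speech-to-text step has already produced transcripts, uses a tiny hand-made word list in place of a real sentiment model, and the names `sentiment_score`, `churn_risk` and the threshold value are all hypothetical.

```python
# Hypothetical word lists standing in for a trained sentiment model.
NEGATIVE_WORDS = {"cancel", "frustrated", "slow", "dropped", "overcharged"}
POSITIVE_WORDS = {"thanks", "great", "resolved", "happy"}


def sentiment_score(transcript: str) -> int:
    """Count positive words minus negative words in one call transcript."""
    words = [w.strip(".,!?").lower() for w in transcript.split()]
    positives = sum(w in POSITIVE_WORDS for w in words)
    negatives = sum(w in NEGATIVE_WORDS for w in words)
    return positives - negatives


def churn_risk(transcripts, threshold=-2):
    """Flag a customer whose combined sentiment falls to the threshold or below."""
    total = sum(sentiment_score(t) for t in transcripts)
    return total <= threshold


# Transcripts as they might look after a speech-to-text step (made-up examples).
unhappy_customer = [
    "My calls keep getting dropped and the data is slow.",
    "I am frustrated and want to cancel.",
]
happy_customer = ["Thanks, the issue is resolved."]

unhappy_flag = churn_risk(unhappy_customer)
happy_flag = churn_risk(happy_customer)
```

In a production setting the flag would feed a retention workflow rather than a boolean, and the scoring would come from a trained model instead of keyword counts.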

The next step is using generative AI to help operators bolster their business operations and systems. This is for things like revenue assurance and finding revenue leakage, items that Parulkar noted were in a “more established space in terms of what machine learning can do.”

However, Parulkar said the bigger opportunity is around helping operators better design and manage network operations. This is an area that remains the most immature, but one that Parulkar is “most excited about.” This can begin from the planning and installation phase, with an example of helping technicians when they are installing physical equipment.

“In installation of network equipment today, you have technicians who go through manuals and have procedures to install routers and base stations and connect links and fibers,” Parulkar said. “That all can be now made interactive [using] chat bot, natural language kind of framework. You can have a lot of this documentation, training data that can train foundational models that can create that type of an interface, improves productivity, makes it easier to target specific problems very quickly in terms of what you want to deploy.”

This can also help with network configuration by using large datasets to help automatically generate configurations. This could include the ability to help configure routers, VPNs and MPLS circuits to support network performance.

The final area of support could be in the running of those networks once they are deployed. Parulkar cited functions like troubleshooting failures that can be supported by a generative AI model.

“There are recipes that operators go through to troubleshoot and triage failure,” Parulkar said. “A lot of times it’s trial-and-error method that can be significantly improved in a more interactive, natural language, prompt-based system that guides you through troubleshooting and operating the network.”

This model could be especially compelling for operators as they integrate more routers to support disaggregated 5G network models for mobile edge computing (MEC), private networks and the use of millimeter-wave (mmWave) spectrum bands.

Federal Communications Commission (FCC) Chairwoman Jessica Rosenworcel this week also hinted at the ability for AI to help manage spectrum resources.

“For decades we have licensed large slices of our airwaves and come up with unlicensed policies for joint use in others,” Rosenworcel said during a speech at this week’s FCC and National Science Foundation Joint Workshop. “But this scheme is not truly dynamic. And as demands on our airwaves grow – as we move from a world of mobile phones to billions of devices in the internet of things (IoT) – we can take newfound cognitive abilities and teach our wireless devices to manage transmissions on their own. Smarter radios using AI can work with each other without a central authority dictating the best use of spectrum in every environment. If that sounds far off, it’s not. Consider that a large wireless provider’s network can generate several million performance measurements every minute. And consider the insights that machine learning can provide to better understand network usage and support greater spectrum efficiency.”

While generative AI does have potential, Parulkar also left the door open for what he termed “traditional AI,” which he described as “supervised and unsupervised learning.”

“Those techniques still work for a lot of the parts in the network and we see a combination of these two,” Parulkar said. “For example, you might use anomaly detection for getting some insights into the things to look at and then followed by a generative AI system that will then give an output in a very interactive format and we see that in some of the use cases as well. I think this is a big area for telcos to explore and we’re having active conversations with multiple telcos and network vendors.”
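The combination Parulkar mentions, anomaly detection first and a generative system second, might look something like the sketch below. It assumes a simple z-score test as the "traditional" step and stops at building a text prompt; no language model is actually called. The metric values, the threshold, and the names `find_anomalies` and `build_prompt` are hypothetical.

```python
import statistics


def find_anomalies(samples, z_threshold=2.0):
    """Return (index, value) pairs whose z-score magnitude exceeds the threshold."""
    mean = statistics.mean(samples)
    stdev = statistics.pstdev(samples)
    if stdev == 0:
        return []  # all readings identical, nothing stands out
    return [
        (i, value)
        for i, value in enumerate(samples)
        if abs(value - mean) / stdev > z_threshold
    ]


def build_prompt(metric_name, anomalies):
    """Turn detected anomalies into a natural-language request for a generative model."""
    lines = [f"- sample {i}: {value}" for i, value in anomalies]
    return (
        f"The following {metric_name} readings look anomalous:\n"
        + "\n".join(lines)
        + "\nSuggest likely causes and next troubleshooting steps."
    )


# Made-up latency readings (milliseconds) with one obvious spike.
latency_ms = [20, 21, 19, 22, 20, 21, 95, 20, 19, 21]
anomalies = find_anomalies(latency_ms)
prompt = build_prompt("latency (ms)", anomalies)
```

The split keeps the statistical step cheap and auditable, while the generative step is reserved for presenting the findings in an interactive format.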

Parulkar’s comments come as AWS has been busy updating its generative AI platforms. One of the most recent was the launch of its $100 million Generative AI Innovation Center, which is targeted at helping guide businesses through the process of developing, building and deploying generative AI tools.

“Generative AI is one of those technological shifts that we are in the early stages of that will impact all organizations across the globe in some form of fashion,” Sri Elaprolu, senior leader of generative AI at AWS, told SDxCentral. “We have the goal of helping as many customers as we can, and as we need to, in accelerating their journey with generative AI.”

References:

https://www.bain.com/insights/telcos-stop-debating-generative-ai-and-just-get-going/

Deutsche Telekom exec: AI poses massive challenges for telecom industry

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Forbes: Cloud is a huge challenge for enterprise networks; AI adds complexity

Qualcomm CEO: AI will become pervasive, at the edge, and run on Snapdragon SoC devices

Bloomberg: China Lures Billionaires Into Race to Catch U.S. in AI