AI

Big Tech and VCs invest hundreds of billions in AI while salaries of AI experts reach the stratosphere

Introduction:

Two and a half years after OpenAI set off the generative artificial intelligence (AI) race with the release of ChatGPT, big tech companies are accelerating their AI spending, pumping hundreds of billions of dollars into a frantic effort to create systems that can mimic or even exceed the abilities of the human brain. The biggest areas of AI spending are data centers, salaries for experts, and venture capital (VC) investments. Meanwhile, the UAE is building one of the world’s largest AI data centers, and SoftBank CEO Masayoshi Son believes that AI will surpass human-level cognitive abilities (Artificial General Intelligence, or AGI) within a few years and that Artificial Super Intelligence (ASI) will surpass human intelligence by a factor of 10,000 within the next 10 years.

AI Data Center Build-out Boom:

The tech industry’s giants are building AI data centers that can cost more than $100 billion and will consume more electricity than a million American homes. Meta, Microsoft, Amazon and Google have told investors that they expect to spend a combined $320 billion on infrastructure this year, more than twice what they spent two years ago. Much of that will go toward building new data centers.

As OpenAI and its partners build a roughly $60 billion data center complex for AI in Texas and another in the Middle East, Meta is erecting a facility in Louisiana that will be twice as large. Amazon is going even bigger with a new campus in Indiana. Amazon’s partner, the AI start-up Anthropic, says it could eventually use all 30 of the data centers on this 1,200-acre campus to train a single AI system. Even if Anthropic’s progress stops, Amazon says it will use those 30 data centers to deliver AI services to customers.

Amazon is building a data center complex in New Carlisle, Ind., for its work with the AI company Anthropic. (Photo: AJ Mast for The New York Times)

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

Stargate UAE:

OpenAI is partnering with United Arab Emirates firm G42 and others to build a huge artificial-intelligence data center in Abu Dhabi, UAE. The project, called Stargate UAE, is part of a broader push by the UAE to become one of the world’s biggest funders of AI companies and infrastructure, and a hub for AI jobs. Stargate UAE is led by G42, an AI firm controlled by Sheikh Tahnoon bin Zayed al Nahyan, the UAE national-security adviser and brother of the president. As part of the deal, an enhanced version of ChatGPT would be available for free nationwide, OpenAI said.

The first 200-megawatt phase of the data center is due to be completed by the end of 2026; plans for the remainder of the project haven’t been finalized. G42 will fund the buildings’ construction, and the data center will be operated by OpenAI and tech company Oracle, G42 said. Other partners include a global tech investor, AI/GPU chip maker Nvidia and network-equipment company Cisco.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

SoftBank and ASI:

Not wanting to be left behind, SoftBank, led by CEO Masayoshi Son, has made massive investments in AI and has a bold vision for its future. Son has expressed a strong belief that Artificial Super Intelligence (ASI), surpassing human intelligence by a factor of 10,000, will emerge within the next 10 years. Among other moves, SoftBank has:

- Made significant investments in OpenAI, with planned investments reaching approximately $33.2 billion. Son considers OpenAI a key partner in realizing SoftBank’s ASI vision.

- Acquired Ampere Computing (chip designer) for $6.5 billion to strengthen its AI computing capabilities.

- Invested in the Stargate Project alongside OpenAI, Oracle, and MGX. Stargate aims to build large AI-focused data centers in the U.S., with a planned investment of up to $500 billion.

Son predicts that AI will surpass human-level cognitive abilities (Artificial General Intelligence or AGI) within a few years. He then anticipates a much more advanced form of AI, ASI, to be 10,000 times smarter than humans within a decade. He believes this progress is driven by advancements in models like OpenAI’s o1, which can “think” for longer before responding.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

Super High Salaries for AI Researchers:

Salaries for AI experts have gone through the roof. OpenAI, Google DeepMind, Anthropic, Meta and Nvidia are paying more than $300,000 in base salary, plus bonuses and stock options. Other companies, such as Netflix, Amazon and Tesla, are also investing heavily in AI and offer competitive compensation packages.

Meta has been offering compensation packages worth as much as $100 million per person. The owner of Facebook made more than 45 offers to researchers at OpenAI alone, according to a person familiar with these approaches. Meta’s CTO Andrew Bosworth implied that only a few people, for very senior leadership roles, may have been offered that kind of money, but clarified that “the actual terms of the offer” were not a “sign-on bonus. It’s all these different things.” Tech companies typically pay the biggest chunks of senior leaders’ compensation in restricted stock unit (RSU) grants that depend on tenure or performance metrics. A four-year total pay package worth about $100 million for a very senior leader is not inconceivable for Meta: most of Meta’s named officers, including Bosworth, have earned total compensation of between $20 million and nearly $24 million per year for years.
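A quick back-of-the-envelope check shows why a roughly $100 million four-year package is “not inconceivable”: spread over four years, it lands just above what Meta’s named officers already earn annually. The even-vesting assumption is ours; real RSU grants may vest unevenly or depend on performance.

```python
# Compare a multi-year pay package with annual executive compensation.
# Assumption (hypothetical): the package vests evenly over its term.

def annualized(package_usd: float, years: int) -> float:
    """Average annual value of a multi-year package, assuming even vesting."""
    return package_usd / years

package = 100_000_000            # ~$100M four-year package, from the text
per_year = annualized(package, 4)

# Reported annual total compensation range for Meta's named officers.
officer_low, officer_high = 20_000_000, 24_000_000

print(f"Annualized package: ${per_year / 1e6:.1f}M per year")
print(f"Officer range: ${officer_low / 1e6:.0f}M to ${officer_high / 1e6:.0f}M per year")
print(f"Premium over top of range: {per_year / officer_high - 1:.1%}")
```

The package works out to $25.0M a year, about 4% above the top of the officers’ reported range.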

Meta CEO Mark Zuckerberg on Monday announced the company’s new artificial intelligence organization, Meta Superintelligence Labs, to its employees, according to an internal post reviewed by The Information. The organization brings together Meta’s existing AI teams, such as its Fundamental AI Research lab, as well as “a new lab focused on developing the next generation of our models,” Zuckerberg said in the post. Scale AI CEO Alexandr Wang has joined Meta as its Chief AI Officer and will partner with former GitHub CEO Nat Friedman to lead the organization. Friedman will lead Meta’s work on AI products and applied research.

“I’m excited about the progress we have planned for Llama 4.1 and 4.2,” Zuckerberg said in the post. “In parallel, we’re going to start research on our next generation models to get to the frontier in the next year or so,” he added.

On Thursday, researcher Lucas Beyer confirmed he was leaving OpenAI to join Meta along with the two others who led OpenAI’s Zurich office. He tweeted: “1) yes, we will be joining Meta. 2) no, we did not get 100M sign-on, that’s fake news.” (Beyer politely declined to comment further on his new role to TechCrunch.) Beyer’s expertise is in computer vision AI, which aligns with what Meta is pursuing: entertainment AI rather than productivity AI, as Bosworth reportedly said in a meeting. Meta already has a foothold in that area with its Quest VR headsets and its Ray-Ban and Oakley AI glasses.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

VC investments in AI are off the charts:

Venture capitalists are sharply increasing their AI investments. U.S. investment in AI companies rose to $65 billion in the first quarter, up 33% from the previous quarter and up 550% from the quarter before ChatGPT came out in 2022, according to data from PitchBook, which tracks the industry.
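The PitchBook percentages can be turned back into the implied earlier quarters. Note that “up 550%” means 6.5 times the earlier value, not 5.5 times. Because the reported figures are rounded, the implied values below are approximate.

```python
# Work backward from a reported value and a percentage increase:
#   new = old * (1 + pct/100)  =>  old = new / (1 + pct/100)

def implied_prior(current: float, pct_increase: float) -> float:
    """Value before a given percentage increase."""
    return current / (1 + pct_increase / 100)

q1 = 65.0  # U.S. investment in AI companies in the first quarter, $ billions

prev_quarter = implied_prior(q1, 33)   # "up 33% from the previous quarter"
pre_chatgpt = implied_prior(q1, 550)   # "up 550% from the quarter before ChatGPT"

print(f"Implied previous quarter: ${prev_quarter:.1f}B")
print(f"Implied pre-ChatGPT quarter: ${pre_chatgpt:.1f}B")
print(f"Growth multiple since pre-ChatGPT: {q1 / pre_chatgpt:.1f}x")
```

This implies roughly $48.9 billion in the prior quarter and about $10 billion in the quarter before ChatGPT launched.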

This astounding VC spending, critics argue, comes with a huge risk. A.I. is arguably more expensive than anything the tech industry has tried to build, and there is no guarantee it will live up to its potential. But the bigger risk, many executives believe, is not spending enough to keep pace with rivals.

“The thinking from the big C.E.O.s is that they can’t afford to be wrong by doing too little, but they can afford to be wrong by doing too much,” said Jordan Jacobs, a partner with the venture capital firm Radical Ventures. “Everyone is deeply afraid of being left behind,” said Chris V. Nicholson, an investor with the venture capital firm Page One Ventures who focuses on A.I. technologies.

Indeed, a significant driver of investment has been a fear of missing out on the next big thing, leading to VCs pouring billions into AI startups at “nosebleed valuations” without clear business models or immediate paths to profitability.

Conclusions:

Big tech companies and VCs acknowledge that they may be overestimating A.I.’s potential. Developing and implementing AI systems, especially large language models (LLMs), is incredibly expensive due to hardware (GPUs), software, and expertise requirements. One of the chief concerns is that revenue for many AI companies isn’t matching the pace of investment. Even major players like OpenAI reportedly face significant cash burn problems. But even if the technology falls short, many executives and investors believe, the investments they’re making now will be worth it.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://www.nytimes.com/2025/06/27/technology/ai-spending-openai-amazon-meta.html

Meta is offering multimillion-dollar pay for AI researchers, but not $100M ‘signing bonuses’

https://www.theinformation.com/briefings/meta-announces-new-superintelligence-lab

OpenAI partners with G42 to build giant data center for Stargate UAE project

AI adoption to accelerate growth in the $215 billion Data Center market

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Networking chips and modules for AI data centers: Infiniband, Ultra Ethernet, Optical Connections

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

ZTE’s AI infrastructure and AI-powered terminals revealed at MWC Shanghai

ZTE Corporation unveiled a full range of AI initiatives under the theme “Catalyzing Intelligent Innovation” at MWC Shanghai 2025. Those innovations include AI + networks, AI applications, and AI-powered terminals. During several demonstrations, ZTE showcased its key advancements in AI phones and smart homes. Leveraging its underlying capabilities, the company is committed to providing full-stack solutions—from infrastructure to application ecosystems—for operators, enterprises, and consumers, co-creating an era of AI for all.

ZTE’s Chief Development Officer Cui Li outlined the vendor’s roadmap for building intelligent infrastructure and accelerating artificial intelligence (AI) adoption across industries during a keynote session at MWC Shanghai 2025. During her speech, Cui highlighted the growing influence of large AI models and the critical role of foundational infrastructure. “No matter how AI technology evolves in the future, the focus will remain on efficient infrastructure, optimized algorithms and practical applications,” she said. The Chinese vendor is deploying modular, prefabricated data center units and AI-based power management, which she said reduce energy use and cooling loads by more than 10%. These developments are aimed at delivering flexible, sustainable capacity to meet growing AI demands, the ZTE executive said.

ZTE is also advancing “AI-native” networks that shift from traditional architectures to heterogeneous computing platforms, with embedded AI capabilities. This, Cui said, marks a shift from AI as a support tool to autonomous agents shaping operations. Ms. Cui emphasized the role of high-quality, secure data and efficient algorithms in building more capable AI. “Data is like fertile ‘soil’. Its volume, purity and security decide how well AI as a plant can grow,” she said. “Every digital application — including AI — depends on efficient and green infrastructure,” she said.

ZTE is heavily investing in AI-native network architecture and high-efficiency computing:

- AI-native networks – ZTE is redesigning telecom infrastructure with embedded intelligence, modular data centers and AI-driven energy systems to meet escalating AI compute demands.

- Smarter models, better data – With advanced training methods and tools, ZTE is pushing the boundaries of model accuracy and real-world performance.

- Edge-to-core deployment – ZTE is integrating AI across consumer, home and industry use cases, delivering over 100 applied solutions across 18 verticals under its “AI for All” strategy.

ZTE has rolled out a full range of innovative solutions for network intelligence upgrades.

- AIR RAN solution: deeply integrating AI to fully improve energy efficiency, maintenance efficiency, and user experience, driving the transition towards value creation of 5G

- AIR Net solution: a high-level autonomous network solution that encompasses three engines to advance network operations towards “Agentic Operations”

- AI-optical campus solution: addressing network pain points in various scenarios for higher operational efficiency in cities

- HI-NET solution: a high-performance and highly intelligent transport network solution enabling “terminal-edge-network-computing” synergy with multiple groundbreaking innovations, including the industry’s first integrated sensing-communication-computing CPE, full-band OTNs, highest-density 800G intelligent switches, and the world’s leading AI-native routers

Through technological innovations in wireless and wired networks, ZTE is building an energy-efficient, wide-coverage, and intelligent network infrastructure that meets current business needs and lays the groundwork for future AI-driven applications, positioning operators as first movers in digital transformation.

In the home terminal market, ZTE AI Home establishes a family-centric vDC and employs MoE-based AI agents to deliver personalized services for each household member. Supported by an AI network, home-based computing power, AI screens, and AI companion robots, ZTE AI Home ensures a seamless and engaging experience—providing 24/7 all-around, warm-hearted care for every family member. The product highlights include:

- AI FTTR: Serving as a thoughtful life assistant, it is equipped with a household knowledge base to proactively understand and optimize daily routines for every family member.

- AI Wi-Fi 7: Featuring the industry’s first omnidirectional antenna and smart roaming solution, it ensures high-speed and stable connectivity.

- Smart display: It acts like an exclusive personal trainer, leveraging precise semantic parsing technology to tailor personalized services for users.

- AI flexible screen & cloud PC: Multi-screen interactions cater to diverse needs for home entertainment and mobile office, creating a new paradigm for smart homes.

- AI companion robot: Backed by smart emotion recognition and bionic interaction systems, the robot safeguards children’s healthy growth with emotionally intelligent connections.
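ZTE has not published how its MoE-based agents work. As a generic illustration of what mixture-of-experts (MoE) routing means, the sketch below has a gate score every expert, keeps only the top k, and blends their outputs with renormalized weights. The toy experts and the fixed gate scores are hypothetical stand-ins; in a real system the gate is a learned network, and each expert might specialize in a task or in one household member’s preferences.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate, experts, k=2):
    """Sparse top-k mixture-of-experts forward pass.

    gate    -- function mapping the input to one score (logit) per expert
    experts -- list of functions, each mapping the input to a number
    Returns the blended output and the weight given to each selected expert.
    """
    probs = softmax(gate(x))
    # Keep the k most probable experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    weights = {i: probs[i] / norm for i in top}
    output = sum(w * experts[i](x) for i, w in weights.items())
    return output, weights

# Hypothetical toy experts and a fixed gate, for illustration only.
experts = [lambda v: 2 * v, lambda v: v + 1, lambda v: -v]

def toy_gate(v):
    return [2.0, 1.0, -1.0]

out, weights = moe_forward(3.0, toy_gate, experts, k=2)
print(round(out, 3), {i: round(w, 3) for i, w in weights.items()})
```

Only the first two experts run here; the third expert’s low gate score keeps it idle, which is where MoE designs save compute.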

ZTE will anchor its product strategy on “Connectivity + Computing.” Collaborating with industry partners, the company is committed to driving industrial transformation, and achieving computing and AI for all, thereby contributing to a smarter, more connected world.


Deloitte and TM Forum: How could AI revitalize the ailing telecom industry?

IEEE Techblog readers are well aware of the dire state of the global telecommunications industry. In particular:

- According to Deloitte, the global telecommunications industry is expected to have revenues of about US$1.53 trillion in 2024, up about 3% over the prior year. Both in 2024 and through 2028, growth is expected to be higher in Asia Pacific and Europe, Middle East, and Africa, with growth in the Americas being around 1% annually.

- Telco sales were less than $1.8 trillion in 2022 vs. $1.9 trillion in 2012, according to Light Reading. Collective investments of about $1 trillion over a five-year period had brought a lousy return of less than 1%.

- Last year (2024), spending on radio access network infrastructure fell by $5 billion, more than 12% of the total, according to analyst firm Omdia, imperilling the kit vendors on which telcos rely.
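To put the Light Reading figures in annual terms, the sketch below computes the compound annual growth rate (CAGR) between 2012 and 2022 using the rounded endpoints. Since 2022 sales were “less than” $1.8 trillion, the real decline is slightly steeper than this.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate turning start into end."""
    return (end / start) ** (1 / years) - 1

# Telco sales in $ trillions, as cited from Light Reading.
# 2022 sales were "less than" $1.8T, so this is an upper bound on growth.
sales_2012, sales_2022 = 1.9, 1.8
rate = cagr(sales_2012, sales_2022, 2022 - 2012)
print(f"Implied compound annual change, 2012-2022: {rate:.2%}")
```

The result is a decline of roughly half a percent a year over a decade.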

Deloitte believes generative (gen) AI will have a huge impact on telecom network providers:

Telcos are using gen AI to reduce costs, become more efficient, and offer new services. Some are building new gen AI data centers to sell training and inference to others. What role does connectivity play in these data centers?

A gen AI gold rush is expected over the next five years. Spending estimates range from hundreds of billions to over a trillion dollars on the physical layer required for gen AI: chips, data centers, and electricity. Close to another hundred billion US dollars will likely be spent on the software and services layer. Telcos should focus on the opportunity to participate by connecting all of those different pieces of hardware and software. And shouldn’t telcos, whose business is all about connectivity, be able to profit in some way?

There are gen AI markets for connectivity. Inside the data centers there are miles of mainly copper (and some fiber) cables for transmitting data from board to board and rack to rack. Serving this market is worth billions in 2025, but much of this connectivity is provided by data center operators and chipmakers and has never been provided by telcos.

There are also massive, long-haul fiber networks ranging from tens to thousands of miles long. These connect (for example) a hyperscaler’s data centers across a region or continent, or even stretch along the seabed, connecting data centers across continents. Sometimes these new fiber networks are being built to support sovereign AI—that is, the need to keep all the AI data inside a given country or region.

Historically, those fiber networks were massive expenditures, built by only the largest telcos or (in the undersea case) built by consortia of telcos, to spread the cost across many players. In 2025, it looks like some of the major gen AI players are building at least some of this connection capacity, but largely on their own or with companies that are specialists in long-haul fiber.

Telcos may want to think about how they can remain a relevant player in this part of the connectivity space, rather than ceding it to the gen AI behemoths. For context, big tech players are estimated to spend over US$100 billion on network capex between 2024 and 2030, representing 5% to 10% of their total capex in that period, up from a historical 4% to 5%.
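The estimate also implies the scale of big tech’s overall capex: dividing network spend by its share of the total gives the total. Because network spend is “over” $100 billion, the figures below are lower bounds; the calculation only inverts the cited ratio and adds no new data.

```python
def implied_total_capex(network_capex: float, network_share: float) -> float:
    """Total capex implied by a network capex figure and its share of the total."""
    return network_capex / network_share

network_capex = 100e9  # "over US$100 billion" of network capex, 2024-2030
for share in (0.05, 0.10):  # network share of total capex over the period
    total = implied_total_capex(network_capex, share)
    print(f"{share:.0%} share -> about ${total / 1e12:.1f} trillion of total capex")
```

So the estimate assumes total big tech capex of roughly $1 trillion to $2 trillion over 2024 to 2030.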

The opportunities could be greater in connecting billions of consumers and enterprises. Telcos already serve these large markets, and as consumers and businesses start sending larger amounts of data over wireline and wireless networks, that growth might translate into higher revenues. A recent research report suggests that direct gen AI data traffic could reach the exabyte range by 2033.

The immediate challenge is that many gen AI use cases for both consumer and enterprise markets are not exactly bandwidth hogs. In 2025, they tend to be text-based (so file sizes are small), and users may expect answers in seconds rather than milliseconds, which can limit how telcos monetize the traffic. Users will likely pay a premium for ultra-low latency, but if latency isn’t an issue, they are unlikely to pay one.


A longer-term challenge is on-device edge computing. Even if users start doing a lot more with creating, consuming, and sharing gen AI video in real time (requiring much larger file transmission and lower latency), the majority of devices (smartphones, PCs, wearables, or Internet of Things (IoT) devices in factories and ports) are expected to soon have onboard gen AI processing chips. These gen AI accelerators, combined with emerging small language models, may mean that network connectivity is less of an issue. Instead of a consumer recording a video, sending the raw footage to the cloud for AI processing, and then having the cloud send it back, the video could be enhanced or altered locally, with less need for high-speed or low-latency connectivity.

Of course, small models might not work well. The chips on consumer and enterprise edge devices might not be powerful enough, or might be so power-inefficient that battery life becomes unacceptably short. In that case, telcos may be lifted by a wave of gen AI usage. But that’s unlikely to happen in 2025, or even 2026.

Another potential source of gen AI monetization is what’s being called AI Radio Access Network (AI RAN). At the top of every cell tower are a bunch of radios and antennas, along with one or more powerful processors for controlling them. In 2024, a consortium (the AI-RAN Alliance) was formed to look at the idea of adding the same kind of generative AI chips found in data centers or enterprise edge servers (a mix of GPUs and CPUs) to every tower. The idea is that these chips could run the RAN, help make it more open, flexible, and responsive, dynamically configure the network in real time, and perform gen AI inference or training as a service with any extra capacity left over, generating incremental revenues. At this time, a number of original equipment manufacturers (OEMs, including ones who currently account for over 95% of RAN sales), telcos, and chip companies are part of the alliance. Some expect AI RAN to be a logical successor to Open RAN, built on top of it, and it may even be what 6G turns out to be.
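The “run the RAN first, sell the leftovers” idea can be sketched as a simple priority allocation. This is a hypothetical illustration, not an AI-RAN Alliance specification: the capacity units, hourly loads and inference demand are made up, and a real scheduler would also have to respect RAN latency deadlines.

```python
from dataclasses import dataclass

@dataclass
class Allocation:
    ran: float        # accelerator capacity reserved for RAN processing
    inference: float  # capacity granted to third-party gen AI jobs
    idle: float       # capacity left unused

def allocate(capacity: float, ran_demand: float, inference_demand: float) -> Allocation:
    """RAN-first allocation of a cell site's accelerator capacity.

    All quantities share one hypothetical unit (e.g. a fraction of the
    site's GPUs). RAN traffic always wins; inference only gets leftovers.
    """
    ran = min(ran_demand, capacity)
    spare = capacity - ran
    inference = min(inference_demand, spare)
    return Allocation(ran=ran, inference=inference, idle=spare - inference)

# Hypothetical hourly RAN loads: spare capacity is largest off-peak.
for hour, ran_load in [(3, 0.2), (12, 0.7), (19, 0.95)]:
    a = allocate(capacity=1.0, ran_demand=ran_load, inference_demand=0.5)
    print(f"{hour:02d}:00  ran={a.ran:.2f}  inference={a.inference:.2f}  idle={a.idle:.2f}")
```

The sketch makes the business question concrete: sellable inference capacity shrinks exactly when network traffic peaks.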

…………………………………………………………………………………………………………………………………………………………………………….

The TM Forum has three broad “AI initiatives,” which are part of its overarching “Industry Missions.” These missions aim to change the future of global connectivity, with AI as a critical component.

The three broad “AI initiatives” (or “Industry Missions” where AI plays a central role) are:

- AI and Data Innovation: This mission focuses on the safe and widespread adoption of AI and data at scale within the telecommunications industry. It aims to help telcos accelerate, de-risk, and reduce the costs of applying AI technologies to cut operational expenses and drive revenue growth. This includes developing best practices, standards, data architectures, ontologies, and APIs.

- Autonomous Networks: This initiative is about unlocking the power of seamless end-to-end autonomous operations in telecommunications networks. AI is a fundamental technology for achieving higher levels of network automation, moving towards zero-touch, zero-wait, and zero-trouble operations.

- Composable IT and Ecosystems: While not solely an “AI initiative,” this mission focuses on simpler IT operations and partnering via AI-ready composable software. AI plays a significant role in enabling more agile and efficient IT systems that can adapt and integrate within dynamic ecosystems. It’s based on the TM Forum’s Open Digital Architecture (ODA). Eighteen big telcos are now running on ODA while the same number of vendors are described by the TM Forum as “ready” to adopt it.

These initiatives are supported by various programs, tools, and resources, including:

- AI Operations (AIOps): Focusing on deploying and managing AI at scale, re-engineering operational processes to support AI, and governing AI operations.

- Responsible AI: Addressing ethical considerations, risk management, and governance frameworks for AI.

- Generative AI Maturity Interactive Tool (GAMIT): To help organizations assess their readiness to exploit the power of GenAI.

- AI Readiness Check (AIRC): An online tool for members to identify gaps in their AI adoption journey across key business dimensions.

- AI for Everyone (AI4X): A pillar focused on democratizing AI across all business functions within an organization.

Under the leadership of CEO Nik Willetts, a rejuvenated, AI-wielding TM Forum now underpins what many telcos do in business and operational support systems, the essential IT plumbing. The TM Forum rates automation using the same six-level scale as the car industry, running from 0 (completely manual) to 5 (the end of human intervention). Many telcos are on track for Level 4 in specific areas this year, said Willetts. China Mobile has already realized an 80% reduction in major faults, saving 3,000 person-years of effort and 4,000 kilowatt hours of energy each year, thanks to automation.
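The scale can be modeled as an ordered enumeration, which also makes clear that 0 through 5 gives six levels. The level names below paraphrase TM Forum’s autonomous-network labels as we understand them and should be checked against the TM Forum specification; the `needs_human` helper is a hypothetical simplification of “5 heralds the end of human intervention.”

```python
from enum import IntEnum

class AutonomyLevel(IntEnum):
    """Autonomous-network levels (0-5), modeled on the car industry's driving levels."""
    L0_MANUAL = 0       # completely manual operation
    L1_ASSISTED = 1
    L2_PARTIAL = 2
    L3_CONDITIONAL = 3
    L4_HIGH = 4         # the level many telcos target in specific areas
    L5_FULL = 5         # no human intervention

def needs_human(level: AutonomyLevel) -> bool:
    """Hypothetical helper: below Level 5, a person stays somewhere in the loop."""
    return level < AutonomyLevel.L5_FULL

print(len(AutonomyLevel))                  # number of levels on the scale
print(needs_human(AutonomyLevel.L4_HIGH))  # Level 4 still keeps a human involved
```

Using `IntEnum` keeps the levels comparable with `<` and `>`, which matches how the scale is used when telcos talk about “reaching Level 4.”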

Outside of China, telcos and telco vendors are leaning heavily on technologies mainly developed by just a few U.S. companies to implement AI. A person remains in the loop for critical decision-making, but the justifications for taking any decision are increasingly provided by systems built on the core underlying technologies from those same few companies. As IEEE Techblog has noted, AI is still hallucinating – throwing up nonsense or falsehoods – just as domain-specific experts are being threatened by it.

Agentic AI substitutes interacting software programs for junior technicians, who are the future decision-makers. If Level 4 automation renders them superfluous, where will the future decision-makers come from?

Caroline Chappell, an independent consultant with years of expertise in the telecom industry, says there is now talk of what the AI pundits call “learning world models,” more sophisticated AI that grows to understand its environment much as a baby does. When mature, it could come up with completely different approaches to the design of telecom networks and technologies. At this stage, it may be impossible for almost anyone to understand what AI is doing, she said.

References:

Sources: AI is Getting Smarter, but Hallucinations Are Getting Worse

McKinsey: AI infrastructure opportunity for telcos? AI developments in the telecom sector

SK Group and AWS to build Korea’s largest AI data center in Ulsan

Amazon Web Services (AWS) is partnering with the SK Group to build South Korea’s largest AI data center. The two companies are expected to launch the project later this month and will hold a groundbreaking ceremony for the 100MW facility in August, according to state news service Yonhap.

Scheduled to begin operations in 2027, the AI Zone will empower organizations in Korea to develop innovative AI applications locally while leveraging world-class AWS services like Amazon SageMaker, Bedrock, and Q. SK Group expects to bolster Korea’s AI competitiveness and establish the region as a key hub for hyperscale infrastructure in Asia-Pacific through AI initiatives.

AWS provides on-demand cloud computing platforms and application programming interfaces (APIs) to individuals, businesses and governments on a pay-per-use basis. The data center will be built on a 36,000-square-meter site in the Mipo industrial complex in Ulsan, 305 km southeast of Seoul. It will house 60,000 graphics processing units (GPUs) and have a power capacity of 100 megawatts, making it the country’s first AI infrastructure of such scale, sources said.

Ryu Young-sang, chief executive officer (CEO) of SK Telecom Co., had announced the company’s plan to build a hyperscale AI data center equipped with 60,000 GPUs in collaboration with a global tech partner, during the Mobile World Congress (MWC) 2025 held in Spain in March.

SK Telecom plans to invest 3.4 trillion won (US$2.49 billion) in AI infrastructure by 2028, with a significant portion expected to be allocated to the data center project. SK Telecom, South Korea’s biggest mobile operator and 31% owned by SK Group, will manage the project. “They have been working on the project, but the exact timeline and other details have yet to be finalized,” an SK Group spokesperson said.

The AI data center will be developed in two phases, with the initial 40MW phase to be completed by November 2027 and the full 100MW capacity to be operational by February 2029, the Korea Herald reported Monday. Once completed, the facility will have a power capacity of 103 megawatts, making it the country’s largest AI infrastructure, sources said.
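The reported figures allow a rough sanity check of the build-out schedule and the facility’s power density. The sketch assumes all 60,000 GPUs are installed at full build-out and divides total facility power evenly among them; that lumps cooling, networking and other overhead into the per-GPU number, so it overstates what each GPU draws.

```python
def kw_per_gpu(facility_mw: float, gpu_count: int) -> float:
    """Facility power divided evenly across GPUs, in kilowatts.

    Includes cooling, networking and other overhead, so it is an
    upper bound on the power drawn by each GPU itself.
    """
    return facility_mw * 1000 / gpu_count

# Reported phases as (completion date, cumulative MW).
phases = [("Nov 2027", 40), ("Feb 2029", 100)]
full_mw = phases[-1][1]
for date, mw in phases:
    print(f"{date}: {mw} MW ({mw / full_mw:.0%} of full capacity)")

# Both 100 MW and 103 MW appear in reports; show the range.
for mw in (100, 103):
    print(f"{mw} MW / 60,000 GPUs = {kw_per_gpu(mw, 60_000):.2f} kW per GPU")
```

Either capacity figure works out to roughly 1.7 kW of facility power per GPU, with the first phase delivering 40% of full capacity.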

SK Group appears to have chosen Ulsan as the site, considering its proximity to SK Gas’ liquefied natural gas combined heat and power plant, ensuring a stable supply of large-scale electricity essential for data center operations. The facility is also capable of utilizing LNG cold energy for data center cooling.

SKT last month released its revised AI pyramid strategy, targeting AI infrastructure including data centers, GPUaaS and customized data centers. It is also developing personal agents A. and Aster for consumers and AIX services for enterprise customers.

Globally, it has found partners through the Global Telecom Alliance, which it co-founded, and is collaborating with US firms Anthropic and Lambda.

SKT’s AI business unit is still small, however, recording just KRW156 billion ($115 million) in revenue in Q1, two-thirds of it from data center infrastructure. Its parent SK Group, which also includes memory chip giant SK Hynix and energy firm SK Innovation, reported $88 billion in revenue last year.

AWS, the world’s largest cloud services provider, has been expanding its footprint in Korea. It currently runs a data center in Seoul and began constructing its second facility in Incheon’s Seo District in late 2023. The company has pledged to invest 7.85 trillion won in Korea’s cloud computing infrastructure by 2027.

“When SK Group’s exceptional technical capabilities combine with AWS’s comprehensive AI cloud services, we’ll empower customers of all sizes, and across all industries here in Korea to build and innovate with safe, secure AI technologies,” said Prasad Kalyanaraman, VP of Infrastructure Services at AWS. “This partnership represents our commitment to Korea’s AI future, and I couldn’t be more excited about what we’ll achieve together.”

Earlier this month AWS launched its Taiwan cloud region – its 15th in Asia-Pacific – with plans to invest $5 billion on local cloud and AI infrastructure.

References:

https://en.yna.co.kr/view/AEN20250616004500320?section=k-biz/corporate

https://www.koreaherald.com/article/10510141

https://www.lightreading.com/data-centers/aws-sk-group-to-build-korea-s-largest-ai-data-center

Big tech firms target data infrastructure software companies to increase AI competitiveness

- Meta announced Friday a $14.3 billion deal for a 49% stake in data-labeling company Scale AI. Its 28-year-old co-founder and CEO will join Meta as an AI advisor.

- Salesforce announced plans last month to buy data integration company Informatica for $8 billion. It will enable Salesforce to better analyze and assimilate scattered data from across its internal and external systems before feeding it into its in-house AI system, Einstein AI, executives said at the time.

- IT management provider ServiceNow said in May it was buying data catalogue platform Data.world, which will allow ServiceNow to better understand the business context behind data, executives said when it was announced.

- IBM announced in February that it was acquiring data management provider DataStax to help manage and process unstructured data before feeding it to its AI platform.

Nile launches a Generative AI engine (NXI) to proactively detect and resolve enterprise network issues

Nile is a private, venture-funded technology company specializing in AI-driven network and security infrastructure services for enterprises and government organizations. Nile has pioneered the use of AI and machine learning in enterprise networking. Its latest generative AI capability, Nile Experience Intelligence (NXI), proactively resolves network issues before they impact users or IT teams, automating fault detection, root cause analysis, and remediation at scale. This approach reduces manual intervention, eliminates alert fatigue, and ensures high performance and uptime by autonomously managing networks.

Significant innovations include:

- Automated site surveys and network design using AI and machine learning

- Digital twins for simulating and optimizing network operations

- Edge-to-cloud zero-trust security built into all service components

- Closed-loop automation for continuous optimization without human intervention

Today, the company announced the launch of Nile Experience Intelligence (NXI), a novel generative AI capability designed to proactively resolve network issues before they impact IT teams, users, IoT devices, or the performance standards defined by Nile’s Network-as-a-Service (NaaS) guarantee. As a core component of the Nile Access Service [1.], NXI uniquely enables Nile to take advantage of its comprehensive, built-in AI automation capabilities. NXI allows Nile to autonomously monitor every customer deployment at scale, identifying performance anomalies and network degradations that impact reliability and user experience. While others market their offerings as NaaS, only the Nile Access Service with NXI delivers a financially backed performance guarantee—an unmatched industry standard.

………………………………………………………………………………………………………………………………………………………………

Note 1. Nile Access Service is a campus Network-as-a-Service (NaaS) platform that delivers both wired and wireless LAN connectivity with integrated Zero Trust Networking (ZTN), automated lifecycle management, and a unique industry-first performance guarantee. The service is built on a vertically integrated stack of hardware, software, and cloud-based management, leveraging continuous monitoring, analytics, and AI-powered automation to simplify deployment, automate maintenance, and optimize network performance.

………………………………………………………………………………………………………………………………………………………………………………………………….

“Traditional networking and NaaS offerings based on service packs rely on IT organizations to write rules that are static and reactive, which requires continuous management. Nile and NXI flipped that approach by using generative AI to anticipate and resolve issues across our entire install base, before users or IT teams are even aware of them,” said Suresh Katukam, Chief Product Officer at Nile. “With NXI, instead of providing recommendations and asking customers to write rules that involve manual interaction—we’re enabling autonomous operations that provide a superior and uninterrupted user experience.”

Key capabilities include:

- Proactive Fault Detection and Root Cause Analysis: predictive modeling-based data analysis of billions of daily events, enabling proactive insights across Nile’s entire customer install base.

- Large Scale Automated Remediation: leveraging the power of generative AI and large language models (LLMs), NXI automatically validates and implements resolutions without manual intervention, virtually eliminating customer-generated trouble tickets.

- Eliminate Alert Fatigue: NXI eliminates alert overload by shifting focus from notifications to autonomous, actionable resolution, ensuring performance and uptime without IT intervention.

Unlike rules-based systems dependent on human-configured logic and manual maintenance, NXI is:

- Generative AI and self-learning powered, eliminating the need for static, manually created rules that are prone to human error and require ongoing maintenance.

- Designed for scale, NXI already processes terabytes of data daily and effortlessly scales to manage thousands of networks simultaneously.

- Built on Nile’s standardized architecture, enabling consistent AI-driven optimization across all customer networks at scale.

- Closed-loop automated: no dashboards or recommended actions for customers to interpret, and no waiting on manual intervention.
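Nile has not published how NXI works internally, so the following is only a generic illustration of the detect, remediate and verify cycle that closed-loop automation refers to. It is a minimal Python sketch under stated assumptions: a per-device latency metric, a rolling z-score detector standing in for NXI's predictive models, and a hypothetical `remediate` callback in place of whatever actions Nile actually takes. None of the names below are Nile APIs.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev
from typing import Callable


@dataclass
class ClosedLoop:
    """Generic detect -> remediate -> verify loop (hypothetical; not Nile's NXI)."""

    remediate: Callable[[str], None]  # hypothetical hook, e.g. "reboot access point"
    window: int = 20          # samples used as the per-device baseline
    z_threshold: float = 3.0  # std-devs above baseline that count as a fault
    min_sigma: float = 1.0    # floor so a perfectly flat baseline isn't hypersensitive
    history: dict[str, list[float]] = field(default_factory=dict)
    pending: set[str] = field(default_factory=set)      # remediated, awaiting verification
    escalated: list[str] = field(default_factory=list)  # remediation did not help

    def observe(self, device: str, latency_ms: float) -> str:
        hist = self.history.setdefault(device, [])
        if len(hist) < self.window:
            hist.append(latency_ms)  # still learning this device's normal behaviour
            return "learning"

        baseline = hist[-self.window:]
        sigma = max(pstdev(baseline), self.min_sigma)
        anomalous = latency_ms - mean(baseline) > self.z_threshold * sigma

        if anomalous:
            if device in self.pending:
                # Verification failed: the earlier fix did not clear the fault.
                self.pending.discard(device)
                self.escalated.append(device)
                return "escalated"
            self.remediate(device)  # act automatically, no ticket or dashboard
            self.pending.add(device)
            return "remediated"

        hist.append(latency_ms)  # only healthy samples extend the baseline
        if device in self.pending:
            self.pending.discard(device)  # verification passed
            return "resolved"
        return "ok"


if __name__ == "__main__":
    actions: list[str] = []
    loop = ClosedLoop(remediate=actions.append)
    for _ in range(20):
        loop.observe("ap-1", 10.0)
    print(loop.observe("ap-1", 50.0))  # spike triggers automatic remediation
    print(loop.observe("ap-1", 10.0))  # next healthy sample verifies the fix
    print(actions)
```

The key design point is that the loop never stops at an alert: every detected anomaly either triggers an action or, if a previous action failed verification, an escalation.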

Katukam added, “NXI is a game-changer for Nile. It enables us to stay ahead of user experience and continuously fine-tune the network to meet evolving needs. This is what true autonomous networking looks like—proactive, intelligent, and performance-guaranteed.”

From improved connectivity to consistent performance, Nile customers are already seeing the impact of NXI. For more information about NXI and Nile’s secure Network as a Service platform, visit www.nilesecure.com.

About Nile:

Nile is leading a fundamental shift in the networking industry, challenging decades-old conventions to deliver a radically new approach. By eliminating complexity and rethinking how networks are built, consumed, and operated, Nile is pioneering a new category designed for a modern, service-driven era. With a relentless focus on simplicity, security, reliability, and performance, Nile empowers organizations to move beyond the limitations of legacy infrastructure and embrace a future where networking is effortless, predictable, and fully aligned with their digital ambitions.

Nile is recognized as a disruptor in the enterprise networking market, offering a modern alternative to traditional vendors like Cisco and HPE. Its model enables organizations to reduce total cost of ownership by more than 60% and reclaim IT resources while providing superior connectivity. Major customers include Stanford University, Pitney Bowes, and Carta.

The company has received several industry accolades, including the CRN Tech Innovators Award (2024) and recognition in Gartner’s Peer Insights Voice of the Customer Report. Nile has raised over $300 million in funding, including a significant $175 million Series C round in 2023 to fuel expansion.

References:

https://nilesecure.com/company/about-us

Does AI change the business case for cloud networking?

Networking chips and modules for AI data centers: Infiniband, Ultra Ethernet, Optical Connections

Qualcomm to acquire Alphawave Semi for $2.4 billion; says its high-speed wired tech will accelerate AI data center expansion

AI infrastructure investments drive demand for Ciena’s products including 800G coherent optics

HPE cost reduction campaign with more layoffs; 250 AI PoC trials or deployments

Hewlett Packard Enterprise (HPE) is going through yet another restructuring to reduce costs and to capitalize on the AI use cases it has been developing. The workforce reduction program HPE announced in March 2025 aims to cut its headcount of around 61,000 by 2,500, with another 500 positions eliminated through attrition, over a period of 12 to 18 months, saving about $350 million in annual costs when all is said and done. The plan is to complete this restructuring by the end of this fiscal year, which closes at the end of October. Headcount at the end of Q2 FY2025 was 59,000, so the restructuring is proceeding apace; that is also the lowest employee count HPE’s enterprise business has had since absorbing Compaq in the wake of the Dot Com Bust in 2001.

The company, which sells IT servers, network communications equipment and cloud services, employed about 66,000 people in 2017, not long after it was created by the split of Hewlett-Packard (the PC- and printer-making part is now called HP Inc). By the end of April this year, after 2,000 job cuts in the last six months, the number of employees had dropped to 59,000, “the lowest we have seen as an independent company,” said HPE Chief Financial Officer Marie Myers on the company’s Wednesday earnings call (according to a Motley Fool transcript). By the end of October, under the latest plans, HPE expects to have shed another 1,050 employees.
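The headcount figures in the two paragraphs above can be reconciled with a few lines of arithmetic. This Python sketch uses only the numbers reported here; the per-role savings figure is a simple average we derive (it assumes the $350 million is spread evenly across all 3,000 eliminated positions), not a figure HPE has disclosed.

```python
# Reported figures (rounded, as quoted above)
START_HEADCOUNT = 61_000      # before the March 2025 program
PLANNED_CUTS = 2_500          # direct reductions
PLANNED_ATTRITION = 500       # further reduction through attrition
ANNUAL_SAVINGS_USD = 350e6    # targeted annual cost savings

APRIL_HEADCOUNT = 59_000      # end of Q2 FY2025
FURTHER_CUTS_BY_OCT = 1_050   # expected additional reductions by end of October


def plan_end_headcount() -> int:
    """Headcount implied by the original March plan."""
    return START_HEADCOUNT - PLANNED_CUTS - PLANNED_ATTRITION


def projected_october_headcount() -> int:
    """Headcount implied by the latest (April) update."""
    return APRIL_HEADCOUNT - FURTHER_CUTS_BY_OCT


def savings_per_role() -> float:
    """Average savings per eliminated position (assumes savings spread evenly)."""
    return ANNUAL_SAVINGS_USD / (PLANNED_CUTS + PLANNED_ATTRITION)


if __name__ == "__main__":
    print(plan_end_headcount())           # 58000
    print(projected_october_headcount())  # 57950
    print(round(savings_per_role()))      # 116667
```

The two paths land within about 50 people of each other (58,000 versus 57,950), which suggests the April update is consistent with the original March plan.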

Weak profitability in its server and cloud units is why HPE now attaches such importance to its Intelligent Edge networking business. That division encompasses the Aruba enterprise Wi-Fi business along with more recent acquisitions such as Athonet, an Italian developer of core network software for private 5G. It accounts for only 15% of sales but a huge 41% of earnings, making it HPE’s most profitable division by far, with a margin of 24%.
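The three percentages in the paragraph above also imply how thin the margins are in HPE’s other businesses. Assuming earnings and margin are measured on the same segment-profit basis (an assumption; HPE’s own segment definitions may differ), a short Python calculation backs out the company-wide and rest-of-company margins:

```python
NET_SALES_SHARE = 0.15     # networking share of HPE sales
NET_EARNINGS_SHARE = 0.41  # networking share of HPE earnings
NET_MARGIN = 0.24          # networking margin


def implied_margins() -> tuple[float, float]:
    """Return (company-wide margin, margin of everything except networking).

    With total sales normalized to 1:
      networking earnings = NET_SALES_SHARE * NET_MARGIN
      total earnings      = networking earnings / NET_EARNINGS_SHARE
    """
    net_earnings = NET_SALES_SHARE * NET_MARGIN
    total_earnings = net_earnings / NET_EARNINGS_SHARE
    rest_margin = (total_earnings - net_earnings) / (1 - NET_SALES_SHARE)
    return total_earnings, rest_margin


if __name__ == "__main__":
    overall, rest = implied_margins()
    print(f"company-wide ~{overall:.1%}, rest of HPE ~{rest:.1%}")
```

On these assumptions the company-wide margin is roughly 8.8% and the rest of HPE earns roughly 6.1%, about a quarter of networking’s margin.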

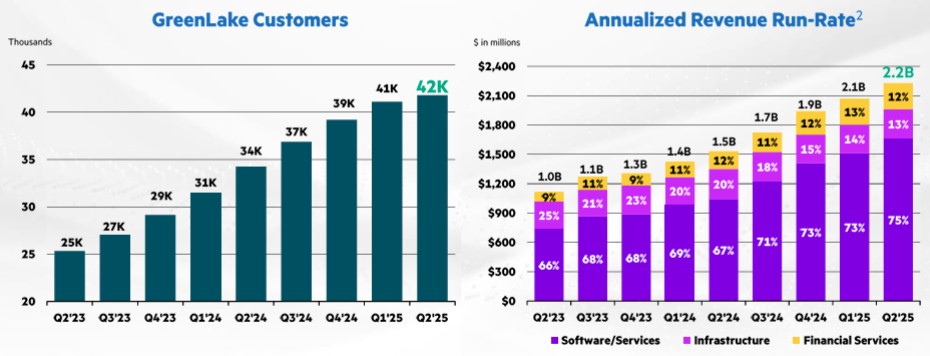

Customer growth is slowing at HPE’s GreenLake cloud services division. Only 1,000 customers were added in the quarter, bringing the total to 42,000 worldwide. The annualized run rate for the GreenLake business inched up to $2.2 billion, from $2.1 billion in Q1 FY2025 and $1.5 billion a year ago. It is in this area that HPE plans to accelerate its AI growth, using Nvidia’s AI GPU chips.

Source: HPE
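The GreenLake figures above show why growth can be described as both “inching up” and “slowing.” This Python snippet computes the growth rates from the reported numbers; treating 41,000 as the prior-quarter customer count assumes no churn, which the source does not state.

```python
def growth(new: float, old: float) -> float:
    """Fractional growth from old to new."""
    return new / old - 1


ARR_NOW, ARR_PRIOR_Q, ARR_YEAR_AGO = 2.2, 2.1, 1.5  # annualized run rate, $B
CUSTOMERS_NOW, CUSTOMERS_ADDED = 42_000, 1_000

qoq_arr = growth(ARR_NOW, ARR_PRIOR_Q)    # sequential
yoy_arr = growth(ARR_NOW, ARR_YEAR_AGO)   # year over year
qoq_customers = growth(CUSTOMERS_NOW, CUSTOMERS_NOW - CUSTOMERS_ADDED)  # assumes no churn

if __name__ == "__main__":
    print(f"run rate: {qoq_arr:.1%} QoQ, {yoy_arr:.1%} YoY")
    print(f"customers: {qoq_customers:.1%} QoQ")
```

A run rate up nearly 47% year over year but under 5% sequentially is consistent with growth that is real but decelerating.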

With respect to the Juniper Networks acquisition, the $14 billion deal could collapse. A court battle is due to begin on July 9th, and HPE CEO Antonio Neri talked on the analyst call about exploring “a number of other options if the Juniper deal doesn’t happen.”

Photo Credit: HPE

Artificial intelligence (AI) appears to be allowing HPE to shed staff it once needed. The company has worked with Deloitte, a management consultancy, to create AI “agents” based on Nvidia’s technology and its own cloud. Deployed in finance, those agents already seem to be taking over some jobs. “This strategic move will transform our executive reporting,” said Myers. “We’re turning data into actionable intelligence, accelerating our reporting cycles by approximately 50% and reducing processing costs by an estimated 25%. Our ambition is clear: a leaner, faster and more competitive organization. Nothing is off limits.”

HPE CEO Antonio Neri’s AI comments on yesterday’s earnings call:

Ultimately, it comes down to the mix of the business with AI. And that’s why we take a very disciplined approach across the AI ecosystem, if you will. And what I’m really pleased in AI is that this quarter, one-third of our orders came from enterprise, which tend to come with higher margin because there is more software and services attached to that enterprise market. Then you have to pay attention also to working capital. Working capital is very important because in some of these deals, you are deploying a significant amount of capital and there is a time between the capital deployment and the revenue profit recognition. So that’s why, it is a technology transition, there is a business transition, and then there’s a working capital transition. But I’m pleased with the progress we made in Q2.

The fact is that we have more than 250 use cases where we are doing PoCs (Proof of Concepts) or already deploying AI. In fact, more than 40 are already in production. And we see the benefits of that across finance, global operations, marketing, as well as services. So that’s why we believe there is an opportunity to accelerate that improvement, not just by reducing the workforce, but really becoming nimbler and better at everything we do.

About Hewlett Packard Enterprise (HPE):

Hewlett Packard Enterprise (HPE) is a large US-based business and technology services company. HPE was founded on 1 November 2015 as part of the split of the Hewlett-Packard Company. As of 2016, the company had over 240,000 employees and was headquartered in Palo Alto, CA.

HPE operates in 60 countries, centered in the metropolitan areas of Dallas-Fort Worth; Detroit; Des Moines and Clarion, Iowa; Salt Lake City; Indianapolis; Winchester, Kentucky; Tulsa, Oklahoma; Boise, Idaho; and Northern Virginia in the United States. Other major locations are as follows: Argentina, Colombia, Costa Rica, India, Brazil, Mexico, the United Kingdom, Australia, Canada, Egypt, Germany, New Zealand, Hungary, Spain, Slovakia, Israel, South Africa, Italy, Malaysia and the Philippines.

HPE has four major operating divisions: Enterprise Group, which works in servers, storage, networking, consulting and support; Services; Software; and Financial Services. In May 2016, HPE announced it would sell its Enterprise Services division to one of its competitors, Computer Sciences Corporation (CSC).


Dell’Oro: RAN revenue growth in 1Q2025; AI RAN is a conundrum

Dell’Oro Group just completed its 1Q-2025 Radio Access Network (RAN) report. Initial findings suggest that after two years of steep declines, market conditions improved in the quarter. Preliminary estimates show that worldwide RAN revenue, excluding services, stabilized year-over-year, resulting in the first growth quarter since 1Q-2023. Author Stefan Pongratz attributes the improved conditions to a favorable regional mix and easy comparisons (investments were very low in the same quarter last year), rather than to a change in the fundamentals that shape the RAN market.

Pongratz believes the long-term trajectory has not changed. “While it is exciting that RAN came in as expected and the full year outlook remains on track, the message we have communicated for some time now has not changed. The RAN market is still growth-challenged as regional 5G coverage imbalances, slower data traffic growth, and monetization challenges continue to weigh on the broader growth prospects,” he added.

Vendor rankings haven’t changed much in several years, as per this table:

Additional highlights from the 1Q 2025 RAN report:

– Strong growth in North America was enough to offset declines in CALA, China, and MEA.

– The picture is less favorable outside of North America. RAN, excluding North America, recorded a fifth consecutive quarter of declines.

– Revenue rankings did not change in 1Q 2025. The top 5 RAN suppliers (4-Quarter Trailing) based on worldwide revenues are Huawei, Ericsson, Nokia, ZTE, and Samsung.

– The top 5 RAN (4-Quarter Trailing) suppliers based on revenues outside of China are Ericsson, Nokia, Huawei, Samsung, and ZTE.

– The short-term outlook is mostly unchanged, with total RAN expected to remain stable in 2025 and RAN outside of China growing at a modest pace.

Dell’Oro Group’s RAN Quarterly Report offers a complete overview of the RAN industry, with tables covering manufacturers’ and market revenue for multiple RAN segments including 5G NR Sub-7 GHz, 5G NR mmWave, LTE, macro base stations and radios, small cells, Massive MIMO, Open RAN, and vRAN. The report also tracks the RAN market by region and includes a four-quarter outlook.

………………………………………………………………………………………………………………………………………………………………………………..

Separately, Pongratz says “there is great skepticism about AI’s ability to reverse the flat revenue trajectory that has defined network operators throughout the 4G and 5G cycles.”

The 3GPP AI/ML activities and roadmap are mostly aligned with the broader efficiency aspects of the AI RAN vision, primarily focused on automation, management data analytics (MDA), SON/MDT, and over-the-air (OTA) related work (CSI, beam management, mobility, and positioning).

Current AI/ML activities align well with the AI-RAN Alliance’s vision to elevate the RAN’s potential with more automation, improved efficiencies, and new monetization opportunities. The AI-RAN Alliance envisions three key development areas: 1) AI and RAN, improving asset utilization by using a common shared infrastructure for both RAN and AI workloads; 2) AI on RAN, enabling AI applications on the RAN; and 3) AI for RAN, optimizing and enhancing RAN performance. Put differently, from an operator’s standpoint, AI offers the potential to boost revenue or reduce capex and opex.

While operators generally don’t consider AI the end destination, they believe more openness, virtualization, and intelligence will play essential roles in the broader RAN automation journey.

Operators are not revising their topline growth or mobile data traffic projections upward as a result of AI growing in and around the RAN. Disappointing 4G/5G returns and the failure to reverse the flattish carrier revenue trajectory is helping to explain the increased focus on what can be controlled — AI RAN is currently all about improving the performance/efficiency and reducing opex.

Since the typical gains demonstrated so far are in the 10% to 30% range for specific features, the AI RAN business case will hinge crucially on the cost and power envelope—the risk appetite for growing capex/opex is limited.

The AI-RAN business case using new hardware is difficult to justify for single-purpose tenancy. However, if the operators can use the resources for both RAN and non-RAN workloads and/or the accelerated computing cost comes down (NVIDIA recently announced ARC-Compact, an AI-RAN solution designed for D-RAN), the TAM could expand. For now, the AI service provider vision, where carriers sell unused capacity at scale, remains somewhat far-fetched, and as a result, multi-purpose tenancy is expected to account for a small share of the broader AI RAN market over the near term.

In short, improving something already done by 10% to 30% is not overly exciting. However, suppose AI embedded in the radio signal processing can realize more significant gains or help unlock new revenue opportunities by improving site utilization and providing telcos with an opportunity to sell unused RAN capacity. In that case, there are reasons to be excited. But since the latter is a lower-likelihood play, the base case expectation is that AI RAN will produce tangible value-add, and the excitement level is moderate — or as the Swedes would say, it is lagom.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Editor’s Note:

ITU-R WP 5D is working on aspects related to AI in the Radio Access Network (RAN) as part of its IMT-2030 (6G) recommendations. IMT-2030 is expected to consider an appropriate AI-native new air interface that uses, to the extent practicable, proven and demonstrated actionable AI to enhance the performance of radio interface functions such as symbol detection/decoding and channel estimation. An appropriate AI-native radio network would enable automated and intelligent networking services such as intelligent data perception and supply of on-demand capability. Radio networks that support applicable AI services would be fundamental to the design of IMT technologies serving various AI applications, and the proposed directions include on-demand uplink/sidelink-centric, deep edge, and distributed machine learning.

In summary:

- ITU-R WP5D recognizes AI as one of the key technology trends for IMT-2030 (6G).

- This includes “native AI,” which encompasses both AI-enabled air interface design and radio network for AI services.

- AI is expected to play a crucial role in enhancing the capabilities and performance of 6G networks.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………………….

References:

Dell’Oro: Private RAN revenue declines slightly, but still doing relatively better than public RAN and WLAN markets

ITU-R WP 5D reports on: IMT-2030 (“6G”) Minimum Technology Performance Requirements; Evaluation Criteria & Methodology

https://www.itu.int/dms_pubrec/itu-r/rec/m/R-REC-M.2160-0-202311-I!!PDF-E.pdf

McKinsey: AI infrastructure opportunity for telcos? AI developments in the telecom sector

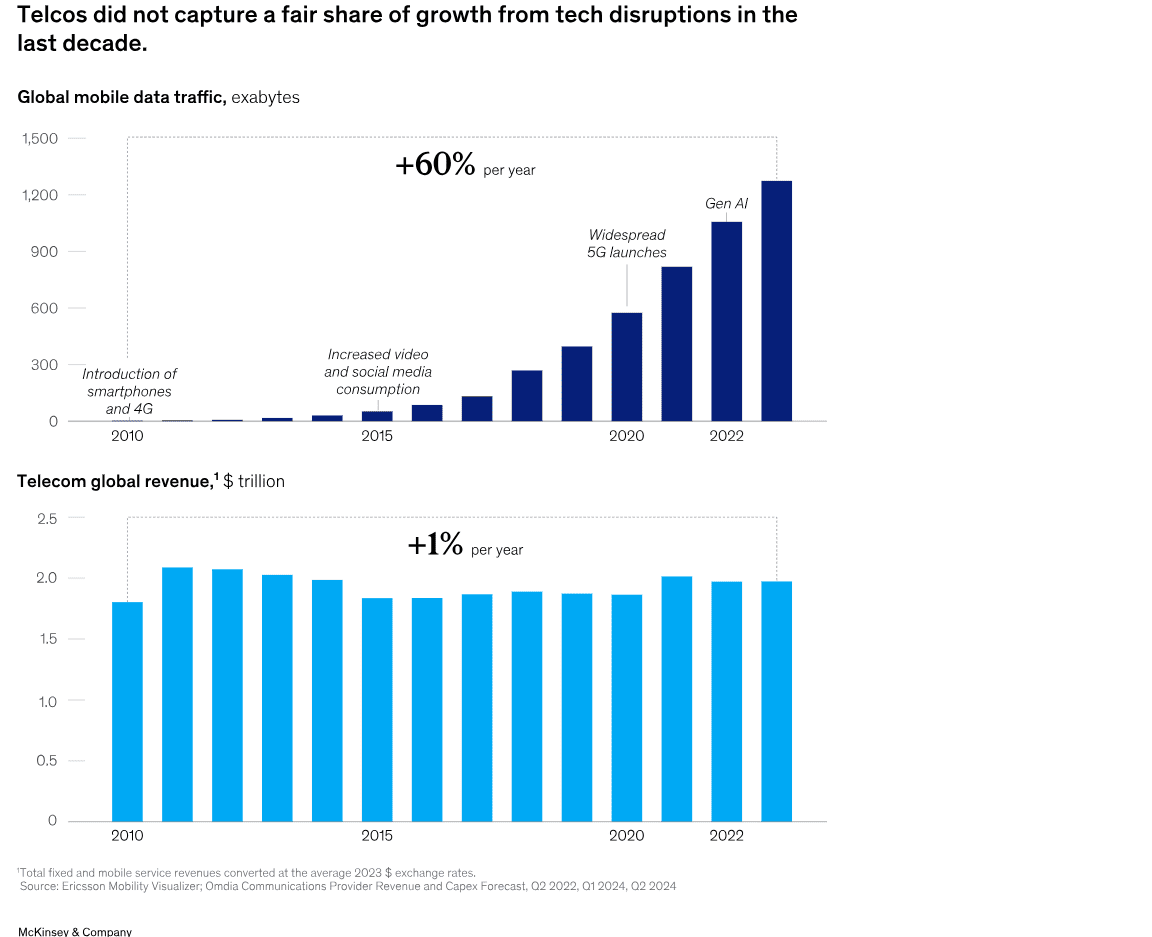

A new report from McKinsey & Company offers a wide range of options for telecom network operators looking to enter the market for AI services. One high-level conclusion is that strategy inertia and decision paralysis might be the most dangerous threats. That conclusion is largely based on telcos’ failure to monetize past emerging technologies like smartphones and mobile apps, cloud networking, and 5G-SA (the true 5G). For example, global mobile data traffic rose 60% per year from 2010 to 2023, while the global telecom industry’s revenues rose just 1% during that same time period.
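Compounding makes the gap in McKinsey’s traffic versus revenue comparison starker than the two percentages suggest. The Python sketch below assumes 13 years of compounding (2010 to 2023) and reads the 1% revenue figure as an annual rate; if it instead means 1% in total, the conclusion is essentially unchanged.

```python
YEARS = 2023 - 2010        # 13 compounding periods
TRAFFIC_CAGR = 0.60        # mobile data traffic growth per year
REVENUE_CAGR = 0.01        # assumption: revenue growth read as per year


def multiple(rate: float, years: int) -> float:
    """Growth multiple after compounding `rate` for `years` periods."""
    return (1 + rate) ** years


traffic_x = multiple(TRAFFIC_CAGR, YEARS)
revenue_x = multiple(REVENUE_CAGR, YEARS)
revenue_per_traffic = revenue_x / traffic_x  # revenue per unit of traffic, relative to 2010

if __name__ == "__main__":
    print(f"traffic x{traffic_x:.0f}, revenue x{revenue_x:.2f}")
    print(f"revenue per unit of traffic fell ~{1 - revenue_per_traffic:.1%}")
```

On this reading, traffic grew roughly 450-fold while revenue grew about 14%, so revenue earned per unit of traffic fell by more than 99%.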

“Operators could provide the backbone for today’s AI economy to reignite growth. But success will hinge on effectively navigating complex market dynamics, uncertain demand, and rising competition….Not every path will suit every telco; some may be too risky for certain operators right now. However, the most significant risk may come from inaction, as telcos face the possibility of missing out on their fair share of growth from this latest technological disruption.”

McKinsey predicts that global data center demand could rise as high as 298 gigawatts by 2030, from just 55 gigawatts in 2023. Fiber connections to AI-infused data centers could generate up to $50 billion globally in sales for facilities-based fiber carriers.
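For scale, McKinsey’s upper-bound data center forecast implies a compound annual growth rate of roughly 27%. The Python calculation below assumes the 55 GW and 298 GW figures are year-end values seven years apart (2023 and 2030):

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


DEMAND_2023_GW = 55
DEMAND_2030_GW = 298  # McKinsey's high-end scenario

rate = cagr(DEMAND_2023_GW, DEMAND_2030_GW, 2030 - 2023)

if __name__ == "__main__":
    print(f"implied CAGR: {rate:.1%}")
```

At that pace, data center demand would roughly double every three years.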

Pathways to growth: four strategic options to explore

- Connecting new data centers with fiber

- Enabling high-performance cloud access with intelligent network services

- Turning unused space and power into revenue

- Building a new GPU as a Service business.

“Our research suggests that the addressable GPUaaS [GPU-as-a-service] market addressed by telcos could range from $35 billion to $70 billion by 2030 globally.” Verizon’s AI Connect service (described below) is one example; in Asia, Indosat Ooredoo Hutchison (IOH), Singtel and SoftBank have launched their own GPUaaS offerings.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………….

Recent AI developments in the telecom sector include:

- The AI-RAN Alliance, which promises to allow wireless network operators to add AI to their radio access networks (RANs) and then sell AI computing capabilities to enterprises and other customers at the network edge. Nvidia is leading this industrial initiative. Telecom operators in the alliance include T-Mobile and SoftBank, as well as Boost Mobile, Globe, Indosat Ooredoo Hutchison, Korea Telecom, LG UPlus, SK Telecom and Turkcell.

- Verizon’s new AI Connect product, which includes Vultr’s GPU-as-a-service (GPUaaS) offering. GPU-as-a-service is a cloud computing model that allows businesses to rent access to powerful graphics processing units (GPUs) for AI and machine learning workloads without having to purchase and maintain that expensive hardware themselves. Verizon also has agreements with Google Cloud and Meta to provide network infrastructure for their AI workloads, demonstrating a focus on supporting the broader AI economy.

- Orange views AI as a critical growth driver. They are developing “AI factories” (data centers optimized for AI workloads) and providing an “AI platform layer” called Live Intelligence to help enterprises build generative AI systems. They also offer a generative AI assistant for contact centers in partnership with Microsoft.

- Lumen Technologies continues to build fiber connections intended to carry AI traffic.

- British Telecom (BT) has launched intelligent network services and is working with partners like Fortinet to integrate AI for enhanced security and network management.

- Telus (Canada) has built its own AI platform called “Fuel iX” to boost employee productivity and generate new revenue. They are also commercializing Fuel iX and building sovereign AI infrastructure.

- Telefónica: Their “Next Best Action AI Brain” uses an in-house Kernel platform to revolutionize customer interactions with precise, contextually relevant recommendations.

- Bharti Airtel (India): Launched India’s first anti-spam network, an AI-powered system that processes billions of calls and messages daily to identify and block spammers.

- e& (formerly Etisalat in UAE): Has launched the “Autonomous Store Experience (EASE),” which uses smart gates, AI-powered cameras, robotics, and smart shelves for a frictionless shopping experience.

- SK Telecom (Korea): Unveiled a strategy to implement an “AI Infrastructure Superhighway” and is actively involved in AI-RAN (AI in Radio Access Networks) development, including their AITRAS solution.

- Vodafone: Sees AI as a transformative force, with initiatives in network optimization, customer experience (e.g., their TOBi chatbot handling over 45 million interactions per month), and even supporting neurodiverse staff.

- Deutsche Telekom: Deploys AI across various facets of its operations.

……………………………………………………………………………………………………………………………………………………………………..

A recent report from DCD (DatacenterDynamics) indicates that new AI models that can reason may require massive, expensive data centers, which may be out of reach for even the largest telecom operators. Over optical data center interconnects, data centers are already communicating with each other for multi-cluster training runs. “What we see is that, in the largest data centers in the world, there’s actually a data center and another data center and another data center,” one executive quoted in the report says. “Then the interesting discussion becomes – do I need 100 meters? Do I need 500 meters? Do I need a kilometer interconnect between data centers?”
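One reason the interconnect distance in that quote matters is propagation delay: light in optical fiber travels at roughly two-thirds of its speed in a vacuum. The Python sketch below assumes a typical single-mode fiber group index of about 1.47 and counts only propagation, ignoring serialization, switching, and protocol overhead, so real link latencies will be higher.

```python
C_VACUUM_M_PER_S = 299_792_458  # speed of light in vacuum
FIBER_INDEX = 1.47              # assumption: typical single-mode group index


def one_way_delay_us(distance_m: float, index: float = FIBER_INDEX) -> float:
    """Propagation-only one-way delay over fiber, in microseconds."""
    return distance_m * index / C_VACUUM_M_PER_S * 1e6


if __name__ == "__main__":
    for d in (100, 500, 1_000):
        one_way = one_way_delay_us(d)
        print(f"{d:>5} m: {one_way:.2f} us one way, {2 * one_way:.2f} us round trip")
```

Each kilometer adds roughly 5 microseconds each way: small for a single message, but cumulative across the many synchronization steps of a distributed training run.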

……………………………………………………………………………………………………………………………………………………………………..

References:

https://www.datacenterdynamics.com/en/analysis/nvidias-networking-vision-for-training-and-inference/

https://opentools.ai/news/inaction-on-ai-a-critical-misstep-for-telecos-says-mckinsey

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Telecom and AI Status in the EU

Major technology companies form AI-Enabled Information and Communication Technology (ICT) Workforce Consortium

AI RAN Alliance selects Alex Choi as Chairman

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

NEC’s new AI technology for robotics & RAN optimization designed to improve performance

MTN Consulting: Generative AI hype grips telecom industry; telco CAPEX decreases while vendor revenue plummets

Amdocs and NVIDIA to Accelerate Adoption of Generative AI for $1.7 Trillion Telecom Industry

SK Telecom and Deutsche Telekom to Jointly Develop Telco-specific Large Language Models (LLMs)

Wedbush: Middle East (Saudi Arabia and UAE) to be next center of AI infrastructure boom

The next major area of penetration for the AI revolution appears to be the Middle East, Wedbush analysts say in a research note. Analyst Dan Ives said the rapid expansion of artificial intelligence infrastructure in the Middle East marks a “watershed moment” for U.S. tech companies, driven by major developments in Saudi Arabia and the United Arab Emirates. President Trump was recently in the region to negotiate deals for the U.S. tech sector to build data centers, supercomputers, software, and overall infrastructure for a massive AI buildout in Saudi Arabia and the U.A.E. in the coming years, the analysts say. Saudi Arabia is now due to get 18,000 Nvidia chips for a massive data center, while the U.A.E. has Trump’s support to build the largest data center outside of the U.S.; the analysts say these two factors should start an era of new growth for the U.S. tech sector and be a game-changer for the industry.

“We believe the market opportunity in Saudi Arabia and UAE alone could over time add another $1 trillion to the broader global AI market in the coming year,” Wedbush said. “No Nvidia chips for China… red carpet rollout for the Kingdom,” the firm wrote, contrasting Middle East expansion with chip export restrictions affecting Beijing. Ives called the momentum in the region “a bullish indicator that further shows the U.S. tech’s lead in this 4th Industrial Revolution.” He said that Nvidia CEO Jensen Huang was “the Godfather of AI,” and this author totally agrees. Without Nvidia’s [1.] AI GPU chips, there would be no AI compute servers in the massive data centers now being built.

Wedbush believes Saudi Arabia, the UAE, and Qatar are now on the “priority list” for U.S. tech, with regional demand for AI chips, software, robotics, and data centers expected to surge over the next decade.

……………………………………………………………………………………………………………………………………………………………………………………………

Note 1. Nvidia should see its trend of strong revenue growth continue, but consensus estimates may not fully account for the recent H20 export restriction, Raymond James’ Srini Pajjuri and Grant Li say in a research note. The analysts expect the sequential revenue growth of $4 billion to $5 billion per quarter seen over the past six quarters to continue on the strong ramp of Nvidia’s Blackwell chips, but they note that the restrictions on H20 chips present a roughly $4 billion headwind, leading them to expect limited sequential growth in 2Q. “That said, we fully expect management to sound bullish on 2H given the strong hyperscale capex trends and recent AI diffusion rule changes,” say the analysts. Nvidia is scheduled to report 1QFY26 results on May 28th.
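The analysts’ logic reduces to simple subtraction. Treating the roughly $4 billion H20 headwind as falling entirely within the quarter (a simplifying assumption, not the analysts’ stated model), this Python sketch shows why they expect limited sequential growth:

```python
UNDERLYING_GROWTH_B = (4.0, 5.0)  # $B per quarter, recent sequential growth range
H20_HEADWIND_B = 4.0              # $B, approximate revenue lost to H20 restrictions


def net_sequential_growth(
    growth_range: tuple[float, float], headwind: float
) -> tuple[float, float]:
    """Low/high net sequential growth after subtracting the headwind."""
    low, high = growth_range
    return low - headwind, high - headwind


if __name__ == "__main__":
    low, high = net_sequential_growth(UNDERLYING_GROWTH_B, H20_HEADWIND_B)
    print(f"net sequential growth: ${low:.1f}B to ${high:.1f}B")
```

Under that simplification, net sequential growth lands between roughly zero and $1 billion.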

…………………………………………………………………………………………………………………………………………………………………………………………….

Last week, Saudi Arabia unveiled a series of blockbuster AI partnerships with US chip makers, cloud infrastructure providers, and software developers, signaling its ambition to become a global AI hub.

Image Credit: Adam Flaherty / Shutterstock.com

Leveraging its $940 billion Public Investment Fund (PIF) and strategic location, Saudi Arabia is forming partnerships to create sovereign AI infrastructure including advanced data centers and Arabic large language models. Google, Oracle, and Salesforce are deepening AI and cloud commitments in Saudi Arabia that will support Vision 2030, a 15-year program to diversify the country’s economy. Within that, the $100 billion Project Transcendence aims to put the kingdom among the top 15 countries in AI by 2030.

The deals include a $20 billion commitment from Saudi firm DataVolt for AI data centers and energy infrastructure in the US and an $80 billion joint investment by Google, DataVolt, Oracle, Salesforce, AMD, and Uber in technologies across both nations, according to a White House fact sheet.

References:

https://finance.yahoo.com/news/wedbush-ives-sees-ai-boom-123710639.html

https://www.wsj.com/tech/tech-media-telecom-roundup-market-talk-87c22df6

US companies are helping Saudi Arabia to build an AI powerhouse