Month: September 2025

AI Data Center Boom Carries Huge Default and Demand Risks

“How does the digital economy exist?” asked John Medina, a senior vice president at Moody’s, who specializes in assessing infrastructure investments. “It exists on data centers.”

New investments in data centers to power Artificial Intelligence (AI) are projected to reach $3 trillion to $4 trillion by 2030, according to Nvidia. Other estimates suggest the investment needed to keep pace with AI demand could be as high as $7 trillion by 2030, according to McKinsey. This massive spending is already having a significant economic impact, with some analysis indicating that AI data center expenditure has surpassed the total impact from US consumer spending on GDP growth in 2025.

U.S. data center demand, driven largely by A.I., could triple by 2030, according to McKinsey. That would require data centers to make nearly $7 trillion in investment to keep up. OpenAI, SoftBank and Oracle recently announced a pact to invest $500 billion in A.I. infrastructure through 2029. Meta and Alphabet are also investing billions. Merely saying “please” and “thank you” to a chatbot eats up tens of millions of dollars in processing power, according to OpenAI’s chief executive, Sam Altman.

Recent commitments include:

- OpenAI, SoftBank, and Oracle pledging to invest $500 billion in AI infrastructure through 2029.

- Nvidia and Intel collaborating to develop AI infrastructure, with Nvidia investing $5 billion in Intel stock.

- Microsoft spending $4 billion on a second data center in Wisconsin.

- Amazon planning to invest $20 billion in Pennsylvania for AI infrastructure.

Compute and Storage Servers within an AI Data Center. Photo credit: iStock quantic69

The spending frenzy comes with a big default risk. According to Moody’s, structured finance has become a popular way to pay for new data center projects, with more than $9 billion of issuance in the commercial mortgage-backed security and asset-backed security markets during the first four months of 2025. Meta, for example, tapped the bond manager Pimco to issue $26 billion in bonds to finance its data center expansion plans.

As more debt enters these data center build-out transactions, analysts and lenders are putting more emphasis on lease terms for third-party developers. “Does the debt get paid off in that lease term, or does the tenant’s lease need to be renewed?” Medina of Moody’s said. “What we’re seeing often is there is lease renewal risk, because who knows what the markets or what the world will even be like from a technology perspective at that time.”

Even if A.I. proliferates, demand for processing power may not. Chinese technology company DeepSeek has demonstrated that A.I. models can produce reliable outputs with less computing power. As A.I. companies make their models more efficient, data center demand could drop, making it much harder to turn investments in A.I. infrastructure into profit. After Microsoft backed out of a $1 billion data center investment in March, UBS wrote that the company, which has lease obligations of roughly $175 billion, most likely overcommitted.

Some worry costs will always be too high for profits. In a blog post on his company’s website, Harris Kupperman, a self-described boomer investor and the founder of the hedge fund Praetorian Capital, laid out his bearish case on A.I. infrastructure. Because the building needs upkeep and the chips and other technology will continually evolve, he argued that data centers will depreciate faster than they can generate revenue.

“Even worse, since losing the A.I. race is potentially existential, all future cash flow, for years into the future, may also have to be funneled into data centers with fabulously negative returns on capital,” he added. “However, lighting hundreds of billions on fire may seem preferable than losing out to a competitor, despite not even knowing what the prize ultimately is.”

It’s not just Silicon Valley with skin in the game. State budgets are being upended by tax incentives given to developers of A.I. data centers. According to Good Jobs First, a nonprofit that promotes corporate and government accountability in economic development, at least 10 states so far have lost more than $100 million per year in tax revenue to data centers. But the true monetary impact may never be fully known: Over one-third of states that offer tax incentives for data centers do not disclose aggregate revenue loss.

Local governments are also heralding the expansion of energy infrastructure to support the surge of data centers. Phoenix, for example, is expected to grow its data center power capacity by over 500 percent in the coming years — enough power to support over 4.3 million households. Virginia, which has more than 50 new data centers in the works, has contracted the state’s largest utility company, Dominion, to build 40 gigawatts of additional capacity to meet demand — triple the size of the current grid.

The stakes extend beyond finance. The big bump in data center activity has been linked to distorted residential power readings across the country. And according to the International Energy Agency, a 100-megawatt data center, which uses water to cool servers, consumes roughly two million liters of water per day, equivalent to 6,500 households. This puts strain on water supply for nearby residential communities, a majority of which, according to Bloomberg News, are already facing high levels of water stress.

“I think we’re in that era right now with A.I. models where it’s just who can make the bigger and better one,” said Vijay Gadepally, a senior scientist at the Lincoln Laboratory Supercomputing Center at the Massachusetts Institute of Technology. “But we haven’t actually stopped to think about, Well, OK, is this actually worth it?”

Postscript: November 23, 2025:

In this new AI era, consumers and workers are not what drives the economy anymore. Instead, it’s spending on all things AI, mostly with borrowed money or circular financing deals.

BofA Research noted that Meta and Oracle issued $75 billion in bonds and loans in September and October 2025 alone to fund AI data center build-outs, an amount more than double the annual average over the past decade. The analysts warned that “The AI boom is hitting a money wall” as capital expenditures consume a large portion of free cash flow. Separately, a recent Bank of America Global Fund Manager Survey found that 53% of participating fund managers felt that AI stocks had reached bubble proportions. This marked a slight decrease from a record 54% in the prior month’s survey, but the concern has grown over time, with the “AI bubble” cited as the top “tail risk” by 45% of respondents in the November 2025 poll.

JPMorgan Chase estimates up to $7 trillion of AI spending will be funded with borrowed money. “The question is not ‘which market will finance the AI-boom?’ Rather, the question is ‘how will financings be structured to access every capital market?’” according to strategists at the bank led by Tarek Hamid.

As an example of AI debt financing, Meta did a $27 billion bond offering. It wasn’t on their balance sheet. They paid 100 basis points over what it would cost to put it on their balance sheet. Special purpose vehicles happen at the tail end of the cycle, not the early part of the cycle, notes Rajiv Jain of GQG Partners.

References:

What Wall Street Sees in the Data Center Boom – The New York Times

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Gartner: AI spending >$2 trillion in 2026 driven by hyperscalers data center investments

Analysis: Cisco, HPE/Juniper, and Nvidia network equipment for AI data centers

Cisco CEO sees great potential in AI data center connectivity, silicon, optics, and optical systems

Networking chips and modules for AI data centers: Infiniband, Ultra Ethernet, Optical Connections

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Lumen Technologies to connect Prometheus Hyperscale’s energy efficient AI data centers

Gartner: AI spending >$2 trillion in 2026 driven by hyperscalers data center investments

According to Gartner, global AI spending will reach close to US$1.5 trillion this year and will top $2 trillion in 2026 as ongoing demand fuels IT infrastructure investment. This significant growth is driven by hyperscalers’ ongoing investments in AI-optimized data centers and hardware, such as GPUs, along with increased enterprise adoption and the integration of AI into consumer devices like smartphones and PCs.

| Market (millions of U.S. dollars) | 2024 | 2025 | 2026 |
| --- | --- | --- | --- |
| AI Services | 259,477 | 282,556 | 324,669 |
| AI Application Software | 83,679 | 172,029 | 269,703 |
| AI Infrastructure Software | 56,904 | 126,177 | 229,825 |
| GenAI Models | 5,719 | 14,200 | 25,766 |
| AI-optimized Servers (GPU and Non-GPU AI Accelerators) | 140,107 | 267,534 | 329,528 |
| AI-optimized IaaS | 7,447 | 18,325 | 37,507 |
| AI Processing Semiconductors | 138,813 | 209,192 | 267,934 |
| AI PCs by ARM and x86 | 51,023 | 90,432 | 144,413 |
| GenAI Smartphones | 244,735 | 298,189 | 393,297 |
| Total AI Spending | 987,904 | 1,478,634 | 2,022,642 |

Source: Gartner (September 2025)
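The growth rates implied by the table can be checked with a few lines of arithmetic. The sketch below is illustrative only: it copies the Gartner figures as printed, assumes they are in millions of U.S. dollars (inferred from the roughly $1.5 trillion total cited in the text), and uses hypothetical helper names (`column_totals`, `yoy_growth`) that do not come from Gartner.

```python
# Figures copied from the Gartner table; units assumed to be millions of USD.
YEARS = (2024, 2025, 2026)

SPENDING = {
    "AI Services": (259_477, 282_556, 324_669),
    "AI Application Software": (83_679, 172_029, 269_703),
    "AI Infrastructure Software": (56_904, 126_177, 229_825),
    "GenAI Models": (5_719, 14_200, 25_766),
    "AI-optimized Servers": (140_107, 267_534, 329_528),
    "AI-optimized IaaS": (7_447, 18_325, 37_507),
    "AI Processing Semiconductors": (138_813, 209_192, 267_934),
    "AI PCs by ARM and x86": (51_023, 90_432, 144_413),
    "GenAI Smartphones": (244_735, 298_189, 393_297),
}

# The "Total AI Spending" row as printed in the table.
REPORTED_TOTAL = (987_904, 1_478_634, 2_022_642)


def column_totals(table):
    """Sum every market row, one total per year."""
    return tuple(sum(row[i] for row in table.values()) for i in range(len(YEARS)))


def yoy_growth(row):
    """Percent change between each consecutive pair of years."""
    return tuple(100.0 * (later - earlier) / earlier
                 for earlier, later in zip(row, row[1:]))


if __name__ == "__main__":
    # Sanity check: the market rows should add up to the reported totals.
    print("rows match reported totals:", column_totals(SPENDING) == REPORTED_TOTAL)

    print(f"{'Market':<32}{'2024->2025':>12}{'2025->2026':>12}")
    for market, row in {**SPENDING, "Total AI Spending": REPORTED_TOTAL}.items():
        g25, g26 = yoy_growth(row)
        print(f"{market:<32}{g25:>11.1f}%{g26:>11.1f}%")
```

Run as a script, this confirms that the nine market rows sum to the reported totals and shows total spending growing by roughly 50% in 2025 and roughly 37% in 2026, consistent with the "close to $1.5 trillion" and "top $2 trillion" figures in the text.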

…………………………………………………………………………………………………………………………………………………….

Hyperscaler Investments:

Cloud service providers are heavily investing in data centers and AI-optimized hardware to expand their services at scale. Amazon, Google and Microsoft are all ploughing massive sums into their cloud infrastructure, while reaping the benefits of AI-driven market growth, as Canalys’s latest data showed last week.

Businesses are increasingly investing in AI infrastructure and services, though there’s a shift towards using commercial off-the-shelf solutions with embedded GenAI features rather than solely developing custom solutions.

A growing number of consumer products, including smartphones and PCs, are incorporating AI capabilities by default, contributing to the overall spending growth. IDC forecasts GenAI smartphones* to reach 54% of the market by 2028, while Gartner projects nearly 100% of premium models to feature GenAI by 2029, driving significant increases in both shipments and end-user spending.

* A GenAI smartphone is a mobile device featuring a system-on-a-chip (SoC) with a powerful Neural Processing Unit (NPU) capable of running advanced Generative Artificial Intelligence (GenAI) models directly on the device. It enables features like content creation, personalized assistants, and real-time task processing without needing constant cloud connectivity. These phones are designed to execute complex AI tasks faster, more efficiently, and with enhanced privacy compared to standard smartphones that rely heavily on the internet for such functions.

AI hardware, particularly GPUs and other AI accelerators, accounts for a substantial portion of the growth, with hyperscaler spending on these components nearly doubling, according to a story at CIO Dive.

About Gartner AI Use Case Insights:

Gartner AI Use Case Insights is an interactive tool that helps technology and business leaders efficiently discover, evaluate, and prioritize AI use cases to potentially pursue. Clients can search over 500 use cases (applications of AI in specific industries) and over 380 case studies (real world examples) based on industry, business function, and Gartner’s assessment of potential business value.

……………………………………………………………………………………………………………………………………………

AI spending is surging; companies accelerate AI adoption, but job cuts loom large

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

AI wave stimulates big tech spending and strong profits, but for how long?

Deutsche Telekom selects Iridium for NB-IoT direct-to-device (D2D) connectivity

Low Earth orbit (LEO) satellite operator Iridium Communications Inc. announced a new partnership with Deutsche Telekom to deliver global connectivity to their customers through the Iridium NTN Direct℠ service. Deutsche Telekom will gain roaming access to Iridium’s forthcoming 3GPP 5G NTN specification-based service, providing NB-IoT direct-to-device (D2D) connectivity that will keep customers, and their assets, connected from pole to pole. The two companies will collaborate to integrate Iridium NTN Direct with Deutsche Telekom’s terrestrial global IoT network.

Deutsche Telekom is among the first mobile network operators to begin integrating Iridium NTN Direct with terrestrial infrastructure, positioning it at the forefront of standards-based IoT innovation in areas beyond the reach of traditional mobile networks and competing satellite networks. The launch of NTN Direct was made possible by Project Stardust. Launched in early 2024, it has enabled Iridium to upgrade its existing L-band LEO network to support narrowband non-terrestrial-networking (NB-NTN).

“Iridium NTN Direct is designed to complement terrestrial networks like Deutsche Telekom and provide seamless global coverage, extending the reach of their own infrastructure,” said Matt Desch, CEO, Iridium. “This partnership underscores the power of creating a straightforward, scalable solution that builds on existing technology to enable global service expansion.”

“We look forward to integrating Iridium as our next non-terrestrial roaming partner for IoT connectivity. By providing our customers with access to Iridium’s extensive LEO satellite network, they will benefit from broadened global NB-IoT coverage to reliably connect sensors, machines and vehicles,” said Jens Olejak, Head of Satellite IoT, Deutsche Telekom. “This convergence is now possible through affordable, 3GPP-standardized 5G devices that function across both terrestrial and non-terrestrial networks.”

Planned for commercial launch in 2026, the service will allow Deutsche Telekom’s IoT customers to roam onto the Iridium network to support use cases such as messaging, tracking, and status updates for IoT, automotive, and industrial devices, with applications spanning international cargo logistics, remote utility monitoring, smart agriculture, and emergency response.

The Iridium constellation is the only network delivering truly global coverage using L-band spectrum, providing reliable connectivity through both routine and extreme weather events, like hurricanes and blizzards. Its LEO orbit provides superior coverage, look angles and lower latency compared to geostationary systems. Upon successful integration and testing, Iridium and Deutsche Telekom plan to execute a roaming agreement to support full commercial service launch.

https://www.iridium.com/ntn-direct/

About Iridium Communications Inc:

Iridium® is the only mobile voice, data, and PNT satellite network that spans the entire globe. Iridium enables connections between people, organizations, and assets to and from anywhere, in real time. Together with its ecosystem of partner companies, Iridium delivers an innovative and rich portfolio of reliable solutions for markets that require truly global communications. In 2024, Iridium acquired Satelles and its positioning, navigation, and timing (PNT) service. Iridium Communications Inc. is headquartered in McLean, Va., U.S.A., and its common stock trades on the Nasdaq Global Select Market under the ticker symbol IRDM. For more information about Iridium products, services, and partner solutions, visit www.iridium.com.

…………………………………………………………………………………………………………………………………..

Activity at other LEO Operators:

- SpaceX/Starlink agreed to acquire AWS-4 and H-block spectrum from EchoStar for $17 billion last week. The frequencies will be used to strengthen the company’s direct-to-cell (D2C) business.

- Globalstar has ratcheted up its ambitions by initiating a plan to bolster its upcoming C-3 network through the addition of another complementary constellation called HIBLEO-XL-1. Details are scant, but a Space Intel report from May 2022 revealed that Globalstar had registered with regulators in Europe its intention to launch 3,080 satellites at altitudes of between 485 and 700 km.

- SES has struck a deal with space technology specialist K2 Space to develop a new generation of medium Earth orbit (MEO) satellites. An on-orbit mission is planned for Q1 2026. SES has also partnered with France-based laser specialist Cailabs to test optical ground stations that use lasers to transmit and receive data from space.

- “Our future MEO network will evolve through agile innovation cycles,” said SES CEO Adel Al-Saleh. “By collaborating with K2 Space and other trusted innovative partners, we’re combining our solutions development experience and operational depth with NewSpace agility to develop a flexible, software-defined network that adapts to customer requirements.”

References:

Huge significance of EchoStar’s AWS-4 spectrum sale to SpaceX

Qualcomm and Iridium launch Snapdragon Satellite for 2-Way Messaging on Android Premium Smartphones

Iridium Introduces its NexGen Satellite IoT Data Service

Satellite 2024 conference: Are Satellite and Cellular Worlds Converging or Colliding?

SatCom market services, ITU-R WP 4B, 3GPP Release 18 and ABI Research Market Forecasts

Economic Times: Qualcomm, MediaTek developing chipsets for Satcom services

ABI Research and CCS Insight: Strong growth for satellite to mobile device connectivity (messaging and broadband internet access)

Qualcomm announces 4 new SoC’s for IoT applications and use cases

FCC proposes regulatory framework for space-mobile network operator collaboration

Nokia & Deutsche Bahn deploy world’s first 1900 MHz 5G radio network meeting FRMCS requirements

Nokia and Deutsche Bahn (Germany’s national railway company) today announced they have deployed the first commercial 1900 MHz 5G railway network with a 5G SA core. The new network meets Future Railway Mobile Communication System (FRMCS) requirements by supporting automated, resilient rail operations. The rollout marks a transition away from legacy GSM-R [1.], adding self-healing, failover, real-time monitoring, and low latency to enable smarter stations, infrastructure, and safety-critical applications. Built to support full railway automation, FRMCS integrates advanced technologies like AI and underpins a more competitive, capable and future-ready industry.

………………………………………………………………………………………………………………………………………………………………………………………

Note 1. Global System for Mobile Communications-Railway (GSM-R) is a digital, cellular telecommunications system designed specifically for railways to provide reliable voice and data services for operations, such as communication between drivers and signalers, and for systems like the European Train Control System (ETCS). Based on the public GSM standard, it includes railway-specific features like advanced speech services and is known for its secure and dependable performance at high speeds, supporting trains traveling at up to 500 km/h without losing communications.

………………………………………………………………………………………………………………………………………………………………………………………

The new technology is being implemented at DB’s digital railway test field in the Ore Mountains (Erzgebirge, Germany), running on live trains. Key features include built-in failover, self-healing capabilities and real-time monitoring to ensure high availability and efficiency. The solution will also be used for the European FP2-MORANE-2 project, which evolves from earlier FRMCS initiatives to advance the digitalization of rail across Europe. The contract extends Deutsche Bahn’s ongoing test trials with Nokia’s 5G SA core and 3700 MHz (n78) radio network, while upgrading to a new solution that includes Nokia’s 1900 MHz (n101) 5G radio network equipment from its AirScale portfolio and optimized 5G SA core. Designed for a smooth migration from GSM-R to FRMCS, it delivers the high reliability and low latency needed for modern rails.

DB Test Track in Erzgebirge, Germany. Photo Credit: Copyright Deutsche Bahn

Quotes:

Rainer Fachinger, Head of Telecom Platforms at DB InfraGO, said: “Deutsche Bahn wants to benefit from modern 5G-based telecommunications to upgrade the railway communication infrastructure. Collaborating with technology experts like Nokia is key for DB to bring the latest innovations into our real-world operations. This deployment on test tracks builds on a successful pre-FRMCS 5G trial conducted with Nokia and aims to standardize our private mobile network as a foundation for further pilots and future rollout.”

Rolf Werner, Head of Europe at Nokia, said: “Nokia and DB have been frontrunners in advancing FRMCS. We are proud to deliver the first-ever commercial 5G solution that utilizes the 1900 MHz spectrum band on the rail track. This is a milestone that will unlock key benefits for DB, including automated train operations, smart maintenance, and intelligent infrastructure and stations. We believe this launch will serve as an important benchmark for FRMCS upgrades in rail networks around the world in the coming years.”

………………………………………………………………………………………………………………………………………………………….

Nokia is also working with ProRail in the Netherlands on the first cloud-native GSM-R core, building a bridge to FRMCS and lowering long-term costs across European rail networks.

………………………………………………………………………………………………………………………………………………………….

References:

Nokia, Deutsche Bahn claim world’s first 5G railway network in n101 band

Multimedia, technical information and related news

Web Page: FRMCS

Product Page: AirScale Radio Access

Product Page: Core Enterprise Solution for Railways

Blog: Laying the tracks for digital railways

Social Media Post: Nokia at EU FP2-MORANE2

Web Page: Deutsche Bahn

China Unicom & Huawei deploy 2.1 GHz 8T8R 5G network for high-speed railway in China

SNS Telecom & IT: Private 5G Market Nears Mainstream With $5 Billion Surge

Samsung & SK Telecom offer Korea’s first LTE-Railway network

Google’s Internet Access for Emerging Markets – Managed WiFi Network for India Railways

Ericsson integrates Agentic AI into its NetCloud platform for self healing and autonomous 5G private networks

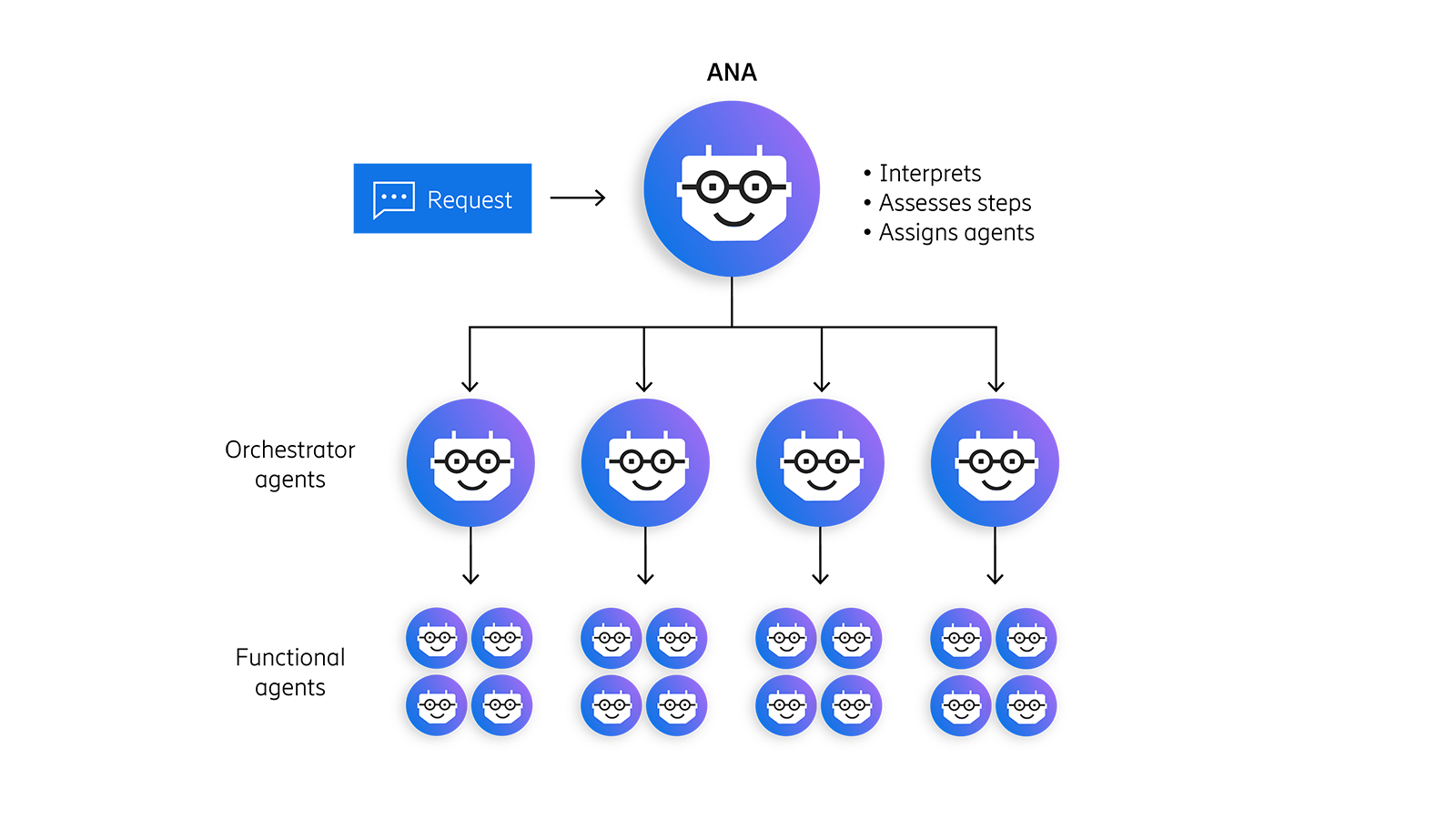

Ericsson is integrating agentic AI into its NetCloud platform to create self-healing, autonomous 5G private (enterprise) networks. The initiative upgrades the existing NetCloud Assistant (ANA), a generative AI tool, into a strategic partner capable of managing complex workflows and orchestrating multiple AI agents. It aims to simplify private 5G adoption by reducing deployment complexity and the need for specialized administration. The agentic architecture lets the system interpret high-level instructions and autonomously assign tasks to a team of specialized AI agents.

Key AI features include:

- Agentic organizational hierarchy: ANA will be supported by multiple orchestrator and functional AI agents capable of planning and executing (with administrator direction). Orchestrator agents will be deployed in phases, starting with a troubleshooting agent planned in Q4 2025, followed by configuration, deployment, and policy agents planned in 2026. These orchestrators will connect with task, process, knowledge, and decision agents within an integrated agentic framework.

- Automated troubleshooting: ANA’s troubleshooting orchestrator will include automated workflows that address the top issues identified by Ericsson support teams, partners, and customers, such as offline devices and poor signal quality. Planned to launch in Q4 2025, this feature is expected to reduce downtime and customer support cases by over 20 percent.

- Multi-modal content generation: ANA can now generate dynamic graphs to visually represent trends and complex query results involving multiple data points.

- Explainable AI: ANA displays real-time process feedback, revealing steps taken by AI agents in order to enhance transparency and trust.

- Expanded AIOps insights: NetCloud AIOps will be expanded to provide isolation and correlation of fault, performance, configuration, and accounting anomalies for Wireless WAN and NetCloud SASE. For Ericsson Private 5G, NetCloud is expected to provide service health analytics including KPI monitoring and user equipment connectivity diagnostics. Planned availability Q4 2025.
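The hierarchy described in the list above (an assistant that hands a request to an orchestrator, which in turn drives specialized functional agents and reports each step) can be sketched in a few lines. This is a purely illustrative toy, not Ericsson's implementation or API: every class, function, and message below (`Assistant`, `Orchestrator`, `FunctionalAgent`, `build_assistant`) is a hypothetical name chosen for the example, and the agents only return strings.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FunctionalAgent:
    """A specialized worker, e.g. a task, process, knowledge, or decision agent."""
    name: str
    handle: Callable[[str], str]


@dataclass
class Orchestrator:
    """Runs its functional agents in order and records what each one did."""
    name: str
    agents: List[FunctionalAgent] = field(default_factory=list)

    def run(self, issue: str) -> List[str]:
        # The returned log mirrors the "explainable AI" idea: every step is visible.
        return [f"{self.name}/{agent.name}: {agent.handle(issue)}" for agent in self.agents]


@dataclass
class Assistant:
    """Top-level assistant that routes a request to a single orchestrator."""
    orchestrators: Dict[str, Orchestrator] = field(default_factory=dict)

    def request(self, intent: str, issue: str, approved: bool) -> List[str]:
        # Nothing executes without administrator direction.
        if not approved:
            return [f"'{intent}' is waiting for administrator approval"]
        orchestrator = self.orchestrators.get(intent)
        if orchestrator is None:
            return [f"no orchestrator registered for '{intent}'"]
        return orchestrator.run(issue)


def build_assistant() -> Assistant:
    """Wire up one hypothetical troubleshooting orchestrator with three agents."""
    troubleshooting = Orchestrator(
        name="troubleshooting",
        agents=[
            FunctionalAgent("knowledge", lambda issue: f"looked up known causes of '{issue}'"),
            FunctionalAgent("decision", lambda issue: f"chose a remediation for '{issue}'"),
            FunctionalAgent("task", lambda issue: f"applied the remediation for '{issue}'"),
        ],
    )
    return Assistant(orchestrators={"troubleshooting": troubleshooting})


if __name__ == "__main__":
    for step in build_assistant().request("troubleshooting", "device offline", approved=True):
        print(step)
```

Registering further orchestrators (configuration, deployment, policy) would only mean adding entries to the `orchestrators` dictionary, which is one simple way to model the phased rollout described above.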

Manish Tiwari, Head of Enterprise 5G, Ericsson Enterprise Wireless Solutions, adds: “With the integration of Ericsson Private 5G into the NetCloud platform, we’re taking a major step forward in making enterprise connectivity smarter, simpler, and adaptive. By building on powerful AI foundations, seamless lifecycle management, and the ability to scale securely across sites, we are providing flexibility to further accelerate digital transformation across industries. This is about more than connectivity: it is about giving enterprises the business-critical foundation they need to run IT and OT systems with confidence and unlock the next wave of innovation for their businesses.”

Pankaj Malhotra, Head of WWAN & Security, Ericsson Enterprise Wireless Solutions, says: “By introducing agentic AI into NetCloud, we’re enabling enterprises to simplify deployment and operations while also improving reliability, performance, and user experience. More importantly, it lays the foundation for our vision of fully autonomous, self-optimizing 5G enterprise networks that can power the next generation of enterprise innovation.”

Agentic AI and the Future of Communications for Autonomous Vehicle (V2X)

Ericsson completes Aduna joint venture with 12 telcos to drive network API adoption

Ericsson reports ~flat 2Q-2025 results; sees potential for 5G SA and AI to drive growth

Ericsson revamps its OSS/BSS with AI using Amazon Bedrock as a foundation

Ericsson’s sales rose for the first time in 8 quarters; mobile networks need an AI boost

Elon Musk: Starlink could become a global mobile carrier; 2 year timeframe for new smartphones

Yesterday, during a segment of the All-In Podcast dedicated to the SpaceX-EchoStar spectrum sale agreement [1.], SpaceX/Starlink boss Elon Musk was asked if this sets the industry down a path where Starlink’s end goal is to emerge as a global carrier that, effectively, would limit the role of regional carriers. “That would be one of the options,” Musk responded. Musk downplayed any threat against AT&T, Verizon and T-Mobile. The podcast section dedicated to the EchoStar agreement starts around the 16:50 mark. You can start watching at that point via this YouTube link.

Note 1. SpaceX’s $17 billion agreement with EchoStar comprises up to $8.5 billion in cash and up to $8.5 billion in SpaceX stock; SpaceX will also fund roughly $2 billion of cash interest payments payable on EchoStar debt. Separately, AT&T is paying $23 billion, all in cash, for its acquisition of EchoStar’s spectrum.

Regarding the EchoStar spectrum deal, Musk said, “This is kind of a long term thing. It will allow SpaceX to deliver high bandwidth connectivity directly from the satellites to the phones.”

Musk said that deal would not seriously challenge the big three U.S. mobile carriers. He said:

“To be clear, we’re not going to put the other carriers out of business. They’re still going to be around because they own a lot of spectrum. But, yes, you should be able to have a Starlink, like you have an AT&T or T-Mobile or Verizon, or whatever. You can have an account with Starlink that works with your Starlink [satellite] antenna at home with … Wi-Fi, as well as on your phone. We’d be a comprehensive solution for high bandwidth at home and high bandwidth for direct-to-cell.”

“Could you buy Verizon?” Musk was asked. “Not out of the question. I suppose that may happen,” Musk said with a chuckle.

That idea at least “highlights the possibility that SpaceX could pursue additional spectrum,” LightShed Partners analysts Walter Piecyk and Joe Galone explained in this blog post. “We highly doubt SpaceX has any interest in the people or infrastructure of a telco, there are plenty of compelling spectrum assets in and outside of those carriers to consider.”

Getting smartphones equipped with chips to support those new frequency bands will take some time. Musk estimated that’s “probably a two-year timeframe.” LightShed Partners analysts agreed: “On devices, Elon’s two-year timeline for a Starlink phone isn’t surprising given spectrum banding, chip development, and satellite integration. He’s mused before that if phone manufacturers continued to hinder his technology that he ‘would make a phone as a forcing function to compete with them.’”

Some analysts view MVNO agreements as Starlink’s best route to becoming a full-scale mobile carrier offering both satellite and terrestrial wireless services.

“The most plausible business model is that Starlink partners with MNOs for them to resell the service or embed the service as part of their plans,” Lluc Palerm Serra, research director at Analysys Mason, told PCMag.

LightShed Partners agreed. Musk’s point that SpaceX isn’t out to displace the incumbent carriers “reinforced our view that securing an MVNO deal will be essential if SpaceX wants to deliver a Starlink phone directly to consumers,” LightShed’s Walter Piecyk and Joe Galone explained in this blog post.

“In parallel, we’re working on the satellites and working with the handset makers to add these frequencies to the phones,” Musk said. “And the phones will then handshake well to achieve high-bandwidth connectivity. The net effect is that you should be able to watch videos anywhere on your phone.”

AT&T CEO John Stankey addressed Starlink’s “mobile-first” possibility earlier this week at an investor conference. Starlink’s current access to spectrum, including what is coming by way of EchoStar, isn’t enough to create a “robust terrestrial replacement,” he said. But he acknowledged that, with the right type of commitments, perhaps it could happen someday.

“EchoStar still owns the highly lucrative 700 and AWS-3 spectrum, in which we note that all three wireless carriers have a robust ecosystem,” TD Cowen analyst Gregory Williams wrote in a note earlier this week. “Whether EchoStar sells more [spectrum] in short order remains to be seen,” TD Cowen’s Williams wrote Monday, explaining that, with the FCC dispute resolved, it may hold onto its portfolio longer. “EchoStar is not a forced seller, now has an excellent balance sheet and liquidity, and may desire to hold onto the spectrum as long as possible for higher sale valuations at a later date,” he added.

References:

https://www.lightreading.com/5g/turning-starlink-into-a-global-carrier-one-of-the-options-musk-says

Elon Talks Starlink Phone. Disruption Looms for Telcos and Apple

Huge significance of EchoStar’s AWS-4 spectrum sale to SpaceX

U.S. BEAD overhaul to benefit Starlink/SpaceX at the expense of fiber broadband providers

Telstra selects SpaceX’s Starlink to bring Satellite-to-Mobile text messaging to its customers in Australia

SpaceX launches first set of Starlink satellites with direct-to-cell capabilities

SpaceX has majority of all satellites in orbit; Starlink achieves cash-flow breakeven

Highlights of Nokia’s Smart Factory in Oulu, Finland for 5G and 6G innovation

Nokia has opened a Smart Factory in Oulu, Finland, for 5G/6G design, manufacturing, and testing, integrating AI technologies and Industry 4.0 applications. It brings ~3,000 staff under one roof and is positioned as Europe’s flagship site for radio access (RAN) innovation.

The Oulu campus will initially focus on 5G, including standardization, Systems-on-Chip, 5G radio hardware and software, and patents. Oulu Factory, part of the new campus, will target New Product Introduction for Nokia’s 5G radio and baseband products. The new campus strengthens Oulu’s ecosystem as a global testbed for resilient and secure networks for both civilian and defense applications.

At the Oulu “Home of Radio” campus, Nokia’s research and innovation underpins high-quality, tested, world-class products ready-made for customers across markets. Nokia’s experts will continue to foster innovation, from Massive MIMO radios like Osprey and Habrok to next-generation 6G solutions, creating secure, high-performance, future-proof connectivity.

Sustainability is integral to the facility. Renewable energy is used throughout the site, with surplus energy fed back into the district heating system to heat 20,000 households in Oulu. The on-site energy station is one of the world’s largest CO2-based district heating and cooling plants.

Active 6G proof-of-concept trials will be conducted at ~7 GHz, including in challenging propagation scenarios.

“Our teams in Oulu are shaping the future of 5G and 6G developing our most advanced radio networks. Oulu has a unique ecosystem that integrates Nokia’s R&D and smart manufacturing with an ecosystem of partners – including universities, start-ups and NATO’s DIANA test center. Oulu embodies our culture of innovation and the new campus will be essential to advancing connectivity necessary to power the AI supercycle,” said Justin Hotard, President and CEO of Nokia.

Nokia Oulu Facts:

- Around 3,000 employees and 40 nationalities working on the campus.

- The Oulu campus covers the entire product lifecycle, from R&D to manufacturing and testing.

- The building’s overall footprint is 55,000 square metres, including manufacturing, R&D and office space.

- Green campus with all energy purchased green and all surplus energy generated fed back into the district heating system and used to heat 20,000 local households.

- The campus boasts a 100% waste utilization rate and 99% avoidance of CO2 emissions.

- Construction started in the second half of 2022, with the first employees moving into the facility in the first half of this year.

- YIT constructed the site and Arkkitehtitoimisto ALA were the architects.

References:

https://www.sdxcentral.com/analysis/behind-the-scenes-at-nokias-new-home-of-radio/

Will the wave of AI generated user-to/from-network traffic increase spectacularly as Cisco and Nokia predict?

Nokia’s Bell Labs to use adapted 4G and 5G access technologies for Indian space missions

Indosat Ooredoo Hutchison and Nokia use AI to reduce energy demand and emissions

Verizon partners with Nokia to deploy large private 5G network in the UK

Nokia selects Intel’s Justin Hotard as new CEO to increase growth in IP networking and data center connections

Nokia sees new types of 6G connected devices facilitated by a “3 layer technology stack”

Nokia and Eolo deploy 5G SA mmWave “Cloud RAN” network

Huge significance of EchoStar’s AWS-4 spectrum sale to SpaceX

EchoStar has entered into a definitive agreement to sell its entire portfolio of prized AWS-4 [1.] and H-block spectrum licenses to SpaceX in a deal valued at approximately $19 billion. The spectrum purchase allows SpaceX to start building and deploying upgraded, laser-connected satellites that the company said will expand the cell network’s capacity by “more than 100 times.”

This deal marks EchoStar/Dish Network’s exit as a mobile network provider (goodbye multi-vendor 5G OpenRAN) which once again makes the U.S. wireless market a three-player (AT&T, Verizon, T-Mobile) affair. Despite that operational failure, the deal helps EchoStar address regulatory pressure and strengthen its financial position, especially after AT&T agreed to buy spectrum licenses from EchoStar for $23 billion.

The companies also agreed to a deal that will enable EchoStar’s Boost Mobile subscribers to access Starlink direct-to-cell (D2C) service to extend satellite service to areas without mobile network service.

……………………………………………………………………………………………………………………………………………………………

Note 1. The AWS-4 spectrum band (2000-2020 MHz and 2180-2200 MHz) is widely considered the “golden band” for D2C services. Unlike repurposed terrestrial spectrum, the AWS-4 band was originally allocated for Mobile Satellite Service (MSS).

…………………………………………………………………………………………………………………………………………………………..

The AWS-4 spectrum acquisition transforms SpaceX from a D2C partner into an owner that controls its dedicated MSS spectrum. The deal with EchoStar will allow SpaceX to operate Starlink direct-to-cell (D2C) services on frequencies it owns, rather than relying solely on those leased from mobile carriers like T-Mobile and other mobile operators it’s working with (see References below).

Roger Entner wrote that SpaceX is now a “kingmaker.” He emailed this comment:

“With 50 MHz of dedicated spectrum, the raw bandwidth that Starlink can deliver increases by 1.5 Gbit/s. This is a substantial increase in speed to customers. The math is 30 bit/s/Hz, which is LTE spectral efficiency, x 50 MHz = 1.5 Gbit/s. This agreement makes Starlink an even more serious play in the D2C market as it will have first hand experience with how to utilize terrestrial spectrum. It is one thing to have this experience through a partner, this [is] a completely different game when you own it.”
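Entner’s arithmetic can be reproduced in a few lines. The sketch below is illustrative only: it takes the 30 bit/s/Hz spectral efficiency and the 50 MHz of bandwidth quoted above at face value, and `raw_throughput_bps` is a hypothetical helper name, not part of any Starlink or carrier tool. The result is a raw upper bound; delivered speeds would typically be lower once protocol overhead, link conditions, and sharing among users are taken into account.

```python
def raw_throughput_bps(spectral_efficiency_bps_per_hz: float, bandwidth_hz: float) -> float:
    """Raw throughput = spectral efficiency (bit/s/Hz) x bandwidth (Hz)."""
    return spectral_efficiency_bps_per_hz * bandwidth_hz


# Figures quoted by Entner (taken at face value, not independently verified).
SPECTRAL_EFFICIENCY_BPS_PER_HZ = 30.0  # quoted LTE spectral efficiency
BANDWIDTH_HZ = 50e6                    # 50 MHz of dedicated spectrum

throughput_bps = raw_throughput_bps(SPECTRAL_EFFICIENCY_BPS_PER_HZ, BANDWIDTH_HZ)
print(f"Raw throughput: {throughput_bps / 1e9:.1f} Gbit/s")
```

Changing either input shows how sensitive the headline number is to the assumed spectral efficiency.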

The combination of T-Mobile’s terrestrial network and Starlink’s enhanced D2C capabilities allows T-Mobile to market a service with virtually seamless connectivity that eliminates outdoor dead zones using Starlink’s spectrum.

“For the past decade, we’ve acquired spectrum and facilitated worldwide 5G spectrum standards and devices, all with the foresight that direct-to-cell connectivity via satellite would change the way the world communicates,” said Hamid Akhavan, president & CEO, EchoStar. “This transaction with SpaceX continues our legacy of putting the customer first as it allows for the combination of AWS-4 and H-block spectrum from EchoStar with the rocket launch and satellite capabilities from SpaceX to realize the direct-to-cell vision in a more innovative, economical and faster way for consumers worldwide.”

“We’re so pleased to be doing this transaction with EchoStar as it will advance our mission to end mobile dead zones around the world,” said Gwynne Shotwell, president & COO, SpaceX. “SpaceX’s first generation Starlink satellites with Direct to Cell capabilities have already connected millions of people when they needed it most – during natural disasters so they could contact emergency responders and loved ones – or when they would have previously been off the grid. In this next chapter, with exclusive spectrum, SpaceX will develop next generation Starlink Direct to Cell satellites, which will have a step change in performance and enable us to enhance coverage for customers wherever they are in the world.”

EchoStar anticipates this transaction with SpaceX along with the previously announced spectrum sale will resolve the Federal Communications Commission’s (FCC) inquiries. Closing of the proposed transaction will occur after all required regulatory approvals are received and other closing conditions are satisfied.

The EchoStar-SpaceX transaction is structured with a balanced mix of cash and equity plus interest payments:

- Cash and Stock Components: SpaceX will provide up to $8.5 billion in cash and an equivalent amount in its own stock, with the valuation fixed at the time the agreement was signed. This 50/50 structure provides EchoStar with immediate liquidity to address its creditors while allowing SpaceX to preserve capital for its immense expenditures on Starship and Starlink development. A pure stock deal would have been untenable for EchoStar, which is saddled with over $26.4 billion in debt, while a pure cash deal would have strained SpaceX.

- Debt Servicing: In a critical provision underscoring EchoStar’s dire financial state, SpaceX has also agreed to fund approximately $2 billion of EchoStar’s cash interest payments through November 2027.

- Commercial Alliance: The deal establishes a long-term commercial partnership wherein EchoStar’s Boost Mobile subscribers will gain access to SpaceX’s next-generation Starlink D2C service. This provides a desperately needed lifeline for the struggling Boost brand. More strategically, this alliance serves as a masterful piece of regulatory maneuvering. It allows regulators to plausibly argue that they have preserved a “fourth wireless competitor,” providing the political cover necessary to approve a deal that permanently cements a three-player terrestrial market.
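As a rough check on how the components above relate to the headline figure, the sketch below adds them up. It assumes the “approximately $19 billion” valuation is simply the sum of the maximum cash, the matching stock amount, and the roughly $2 billion in interest payments; the article does not state this explicitly, and the variable names are illustrative.

```python
# Deal components in billions of US dollars, as described in the article.
# Assumption: the headline value is the plain sum of these three items.
cash_bn = 8.5      # "up to $8.5 billion in cash"
stock_bn = 8.5     # "an equivalent amount in its own stock"
interest_bn = 2.0  # "approximately $2 billion" of cash interest payments

total_bn = cash_bn + stock_bn + interest_bn
cash_share = cash_bn / (cash_bn + stock_bn)

print(f"Implied total: ${total_bn:.1f} billion")
print(f"Cash share of cash-plus-stock consideration: {cash_share:.0%}")
```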

The move comes amid rapidly increasing U.S. mobile data usage. In 2024, Americans used a record 132 trillion megabytes of mobile data, up 35% over the prior all-time record, industry group CTIA said Monday.

About EchoStar Corporation:

EchoStar Corporation (Nasdaq: SATS) is a premier provider of technology, networking services, television entertainment and connectivity, offering consumer, enterprise, operator and government solutions worldwide under its EchoStar®, Boost Mobile®, Sling TV, DISH TV, Hughes®, HughesNet®, HughesON™, and JUPITER™ brands. In Europe, EchoStar operates under its EchoStar Mobile Limited subsidiary and in Australia, the company operates as EchoStar Global Australia. For more information, visit www.echostar.com and follow EchoStar on X (Twitter) and LinkedIn.

©2025 EchoStar, Hughes, HughesNet, DISH and Boost Mobile are registered trademarks of one or more affiliate companies of EchoStar Corp.

About SpaceX:

SpaceX designs, manufactures, and launches the world’s most advanced rockets and spacecraft. The company was founded in 2002 to revolutionize space technology, with the ultimate goal of making life multiplanetary. As the world’s leading provider of launch services, SpaceX is leveraging its deep experience with both spacecraft and on-orbit operations to deploy the world’s most advanced internet and Direct to Cell networks. Engineered to end mobile dead zones around the world, Starlink’s satellites with Direct to Cell capabilities enable ubiquitous access to texting, calling, and browsing wherever you may be on land, lakes, or coastal waters.

………………………………………………………………………………………………………………………………………………………………………………………………

References:

Mulit-vendor Open RAN stalls as Echostar/Dish shuts down it’s 5G network leaving Mavenir in the lurch

AT&T to buy spectrum Licenses from EchoStar for $23 billion

SpaceX launches first set of Starlink satellites with direct-to-cell capabilities

Starlink’s Direct to Cell service for existing LTE phones “wherever you can see the sky”

Space X “direct-to-cell” service to start in the U.S. this fall, but with what wireless carrier? (T-Mobile)

KDDI unveils AU Starlink direct-to-cell satellite service

Starlink Direct to Cell service (via Entel) is coming to Chile and Peru be end of 2024

Telstra selects SpaceX’s Starlink to bring Satellite-to-Mobile text messaging to its customers in Australia

Telstra partners with Starlink for home phone service and LEO satellite broadband services

One NZ launches commercial Satellite TXT service using Starlink LEO satellites

Ookla: Global performance of Apple’s in-house designed C1 modem in iPhone 16e

Apple designed the C1 modem entirely in-house [1.] as part of its Apple Silicon efforts, a significant move to reduce its reliance on external vendors like Qualcomm. The C1 modem is featured in the iPhone 16e and is manufactured by TSMC, which produces the chip on 4nm and 7nm process nodes. The C1 modem supports all the low and mid-band 5G spectrum, but it doesn’t support 5G mmWave spectrum. It also supports Wi-Fi 6 with 2×2 MIMO and Bluetooth 5.3, but lacks Wi-Fi 7 support, unlike the rest of the iPhone 16 series of devices.

Note 1. In 2019, Apple purchased Intel’s smartphone modem business for $1 billion with the explicit goal of eventually designing its own modems. The C1 modem is the first major outcome of this strategic shift, replacing Qualcomm’s modems in certain Apple devices, starting with the iPhone 16e. Historically, Apple relied upon Qualcomm to provide most of its iPhone modems so its decision to use the C1 modem in the iPhone 16e is considered a significant move.

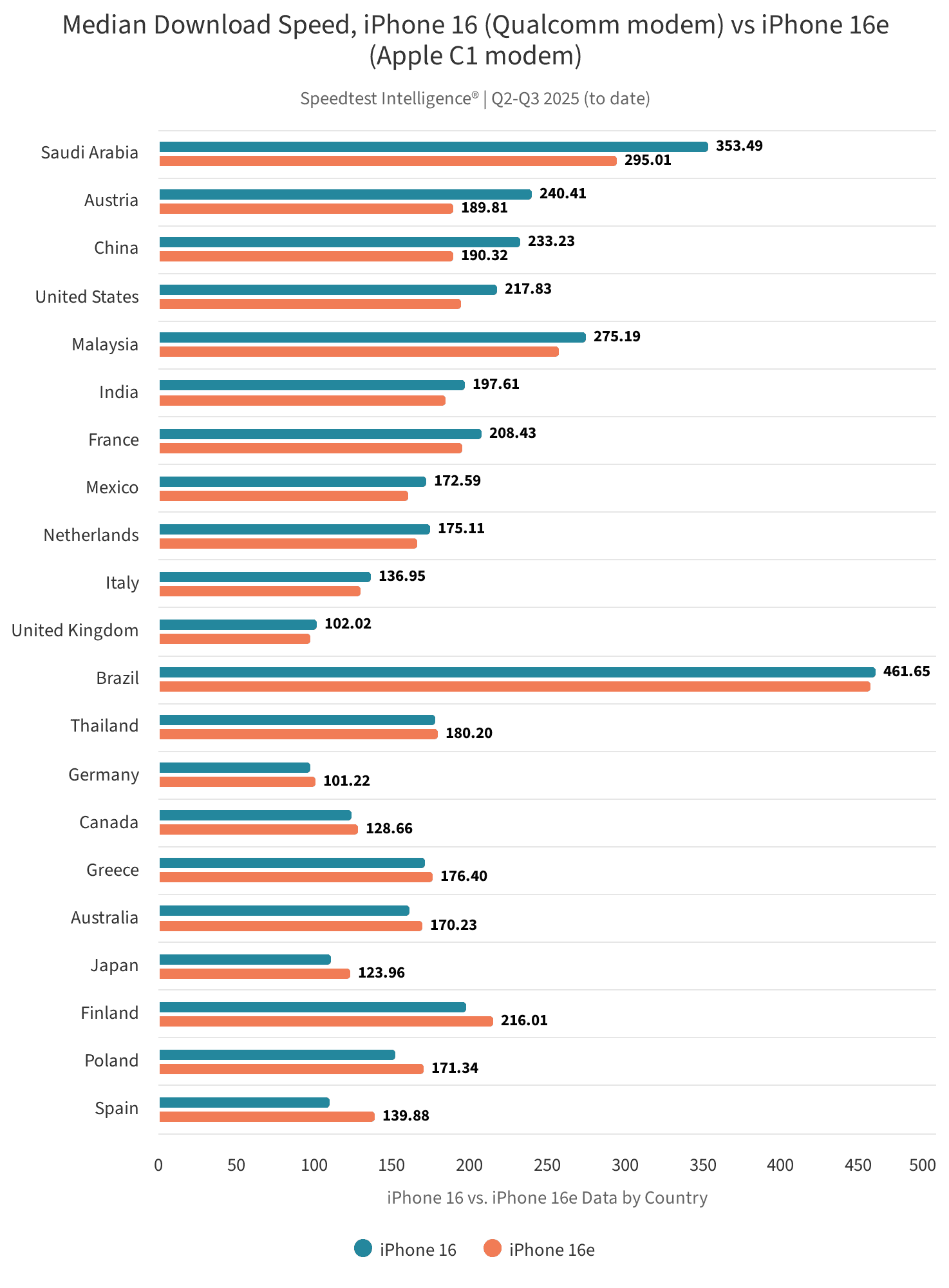

Ookla has just analyzed the performance of the iPhone 16e and compared it to the performance of the iPhone 16 on 5G, using Speedtest Intelligence data for Q2 and Q3 2025.

A few important takeaways include:

- The iPhone 16e with the Apple C1 modem performs similarly to the iPhone 16 with the Qualcomm modem in the vast majority of markets we examined.

- The iPhone 16 with Qualcomm modem performs better on more capable mobile networks that have a 5G standalone (SA) footprint supporting higher carrier aggregation combinations and uplink MIMO technology. The iPhone 16e with the C1 modem is not able to achieve the same frontier of performance in these markets due to its technical limitations. Key examples of networks facilitating stronger performance for the iPhone 16 include those in Saudi Arabia, China, India and the U.S.

- In the U.S., T-Mobile users experienced better performance on the iPhone 16, which supports four-carrier aggregation, than iPhone 16e users with the Apple C1 modem, which supports a maximum of three-carrier aggregation. Median download speed for the iPhone 16 on T-Mobile’s network was 317.64 Mbps, compared to 252.80 Mbps on the iPhone 16e.

- The iPhone 16e performs strongly on other key performance metrics. Across the markets analyzed, it tended to record better download speeds among the 10th percentile of users (those with the lowest overall download speeds), and across the 10th, median and 90th percentiles for upload speeds. At the lower 10th percentile, it’s likely that more users are connected solely to low-band (sub-GHz) spectrum, which offers better coverage but slower speeds.

Japan is the most popular market for the iPhone 16e, with 11.3% of samples from the 16 lineup, followed chiefly by European markets. Adoption of the iPhone 16e depends on a range of factors, including the level of subsidies within a market and to which devices they are directed, level of price sensitivity among consumers, as well as launch timing, and consumer preferences for different form factors and device features.

The combination of these factors likely explains the relatively higher 16e penetration observed in Japan. Beyond the historic appetite for lower-cost, compact iPhones like the SE (to which the 16e is a spiritual successor) and a subsidy structure that favors entry variants, the recent weakness of the yen has made the Pro and Pro Max models more expensive in local terms, prompting elastic buyers (like students and families) to shift down the line-up.

……………………………………………………………………………………………………………………………………………………………………………….

The iPhone 16e outperforms the iPhone 16 in median upload speed in 15 of the 21 markets we examined. Canada is perhaps the most dramatic example where iPhone 16e median upload speeds of 23.91 Mbps are more than double the iPhone 16’s median upload speed of 11.57 Mbps. However, once again we saw the iPhone 16 perform strongly in median upload speed in countries with advanced 5G networks such as Saudi Arabia and China. Although in the US market the iPhone 16e outperformed the iPhone 16 in upload speeds, when we drilled down further (see the US section of this report), we found that upload performance varied between the different operators.

…………………………………………………………………………………………………………………………………………………………………………….

In the U.S., the iPhone 16 performs better than the iPhone 16e in median download speed for T-Mobile and Verizon customers. This is a slight change from our March 2025 analysis, when the iPhone 16e performed better than the iPhone 16 for Verizon customers. The iPhone 16 supports mmWave spectrum, which is part of Verizon’s 5G Ultra Wideband service, and this is likely a contributing factor in its better performance on the Verizon network. Even so, Verizon users on the iPhone 16 clocked a median download speed of only 172.12 Mbps, significantly lower than the 317.64 Mbps median logged by iPhone 16 users on T-Mobile’s network.

Comparing the median upload speeds of the iPhone 16 and 16e across US providers tells a very different story from the download comparison. On Verizon’s and AT&T’s networks, the iPhone 16e outperforms the iPhone 16 in upload speeds. Verizon iPhone 16e users experienced median upload speeds of 11.51 Mbps, compared to 9.67 Mbps for Verizon iPhone 16 users. Likewise, AT&T iPhone 16e users experienced median upload speeds of 8.47 Mbps, compared to 7.09 Mbps for iPhone 16 users.

On T-Mobile’s network, neither device comes out ahead: the two are nearly equal in median upload performance, with 16e users seeing median upload speeds of 11.79 Mbps compared to 11.70 Mbps for iPhone 16 users. These results are very similar to what we uncovered in our March 2025 report, where we saw clear differences between iPhone 16e and iPhone 16 performance for AT&T and Verizon users but nearly equal performance for T-Mobile users.
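To put the U.S. operator figures on a common footing, the sketch below computes the percentage difference between the two devices for each pair of medians quoted above. The numbers are copied from the text; the dictionary layout and the helper `pct_change_16e_vs_16` are illustrative choices, not part of Ookla’s methodology.

```python
# Median speeds in Mbps quoted in the text: (iPhone 16, iPhone 16e).
US_MEDIANS_MBPS = {
    ("T-Mobile", "download"): (317.64, 252.80),
    ("Verizon", "upload"): (9.67, 11.51),
    ("AT&T", "upload"): (7.09, 8.47),
    ("T-Mobile", "upload"): (11.70, 11.79),
}


def pct_change_16e_vs_16(iphone16_mbps: float, iphone16e_mbps: float) -> float:
    """Percentage by which the iPhone 16e median differs from the iPhone 16 median."""
    return (iphone16e_mbps - iphone16_mbps) / iphone16_mbps * 100.0


results = {key: pct_change_16e_vs_16(*pair) for key, pair in US_MEDIANS_MBPS.items()}

for (operator, direction), pct in results.items():
    print(f"{operator:9s} {direction:8s} iPhone 16e vs iPhone 16: {pct:+.1f}%")
```

A positive value means the iPhone 16e recorded the higher median; a negative value means the iPhone 16 did.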

References:

https://www.ookla.com/articles/iphone-c1-modem-performance-q2-q3-2025

Apple in advanced talks to buy Intel’s 5G modem business for $1 billion

China’s mobile data consumption slumps; Apple’s market share shrinks-no longer among top 5 vendors

AST SpaceMobile completes 1st ever LEO satellite voice call using AT&T spectrum and unmodified Samsung and Apple smartphones

OpenAI and Broadcom in $10B deal to make custom AI chips

Overview:

Late last October, IEEE Techblog reported that “OpenAI the maker of ChatGPT, was working with Broadcom to develop a new artificial intelligence (AI) chip focused on running AI models after they’ve been trained.” On Friday, the WSJ and FT (on-line subscriptions required) separately confirmed that OpenAI is working with Broadcom to develop custom AI chips, a move that could help alleviate the shortage of powerful processors needed to quickly train and release new versions of ChatGPT. OpenAI plans to use the new AI chip internally, according to one person close to the project, rather than make it available to external customers.

………………………………………………………………………………………………………………………………………………………………………………………….

Broadcom:

During its earnings call on Thursday, Broadcom’s CEO Hock Tan said that it had signed up an undisclosed fourth major AI developer as a custom AI chip customer, and that this new customer had committed to $10bn in orders. While Broadcom did not disclose the name of the new customer, people familiar with the matter confirmed OpenAI was the new client. Broadcom and OpenAI declined to comment, according to the FT. Tan said the deal had lifted the company’s growth prospects by bringing “immediate and fairly substantial demand,” with Broadcom shipping chips for that customer “pretty strongly” starting next year. “The addition of a fourth customer with immediate and fairly substantial demand really changes our thinking of what 2026 would be starting to look like,” Tan added.

Image credit: © Dado Ruvic/Reuters

HSBC analysts have recently noted that they expect to see a much higher growth rate from Broadcom’s custom chip business compared with Nvidia’s chip business in 2026. Nvidia continues to dominate the AI silicon market, with “hyperscalers” still representing the largest share of its customer base. While Nvidia doesn’t disclose specific customer names, recent filings show that a significant portion of its revenue comes from a small number of unidentified direct customers, which are likely large cloud providers like Microsoft, Amazon, Alphabet (Google), and Meta Platforms.

In August, Broadcom launched its Jericho networking chip, which is designed to help speed up AI computing by connecting data centers as far as 60 miles apart. By August, Broadcom’s market value had surpassed that of oil giant Saudi Aramco, making the chip firm the world’s seventh-largest publicly listed company.

……………………………………………………………………………………………………………………………………….

OpenAI:

OpenAI CEO Sam Altman has been saying for months that a shortage of graphics processing units, or GPUs, has been slowing his company’s progress in releasing new versions of its flagship chatbot. In February, Altman wrote on X that GPT-4.5, its then-newest large language model, was the closest the company had come to designing an AI model that behaved like a “thoughtful person,” but there were very high costs that came with developing it. He added: “We will add tens of thousands of GPUs next week and roll it out to the plus tier then. (hundreds of thousands coming soon, and i’m pretty sure y’all will use every one we can rack up.)”

In recent years, OpenAI has relied heavily on so-called “off the shelf” GPUs produced by Nvidia, the biggest player in the chip-design space. But as demand from large AI firms looking to train increasingly sophisticated models has surged, chip makers and data-center operators have struggled to keep up. OpenAI was one of the earliest customers for Nvidia’s AI chips and has since proven to be a voracious consumer of its AI silicon.

“If we’re talking about hyperscalers and gigantic AI factories, it’s very hard to get access to a high number of GPUs,” said Nikolay Filichkin, co-founder of Compute Labs, a startup that buys GPUs and offers investors a share in the rental income they produce. “It requires months of lead time and planning with the manufacturers.”

To solve this problem, OpenAI has been working with Broadcom for over a year to develop a custom chip for use in model training. Broadcom specializes in what it calls XPUs, a type of semiconductor that is designed with a particular application—such as training ChatGPT—in mind.

Last month, Altman said the company was prioritizing compute “in light of the increased demand from [OpenAI’s latest model] GPT-5” and planned to double its compute fleet “over the next 5 months.” OpenAI also recently struck a data-center deal with Oracle that calls for OpenAI to pay more than $30 billion a year to the cloud giant, and signed a smaller contract with Google earlier this year to alleviate computing shortages. It is also embarking on its own data-center construction project, Stargate, though that has gotten off to a slow start.
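For a sense of scale, doubling a compute fleet in five months implies a steep monthly growth rate. The sketch below works that out under the simplifying assumption of steady month-over-month compounding, which is purely illustrative; nothing in the reporting says capacity would be added on that schedule.

```python
# Target stated by Altman: double the compute fleet "over the next 5 months".
GROWTH_FACTOR = 2.0
MONTHS = 5

# Assumption: constant compound monthly growth (an illustrative model only).
monthly_rate = GROWTH_FACTOR ** (1 / MONTHS) - 1

print(f"Implied compound monthly growth: {monthly_rate:.1%}")

# Relative fleet size at the end of each month under that assumption.
for month in range(1, MONTHS + 1):
    print(f"Month {month}: {(1 + monthly_rate) ** month:.2f}x")
```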

OpenAI’s move follows the strategy of tech giants such as Google, Amazon and Meta, which have designed their own specialized custom chips to run AI workloads. The industry has seen huge demand for the computing power to train and run AI models.

………………………………………………………………………………………………………………………………………………………………………………..

References:

https://www.ft.com/content/e8cc6d99-d06e-4e9b-a54f-29317fa68d6f

https://www.wsj.com/tech/ai/openai-broadcom-deal-ai-chips-5c7201d2