AI

GSMA, ETSI, IEEE, ITU & TM Forum: AI Telco Troubleshooting Challenge + TelecomGPT: a dedicated LLM for telecom applications

The GSMA — along with ETSI, IEEE GenAINet, the ITU, and TM Forum — today opened an innovation challenge calling on telco operators, AI researchers, and startups to build large-language models (LLMs) capable of root-cause analysis (RCA) for telecom network faults. The AI Telco Troubleshooting Challenge is supported by Huawei, InterDigital, NextGCloud, RelationalAI, xFlowResearch and technical advisors from AT&T.

The new competition invites teams to submit AI models in three categories: Generalization to New Faults will assess the best performing LLMs for RCA; Small Models at the Edge will evaluate lightweight edge-deployable models; and Explainability/Reasoning will focus on the AI systems that clearly explain their reasoning. Additional categories will include securing edge-cloud deployments and enabling AI services for application developers.

The goal is to deliver AI tools that help operators automatically identify, diagnose, and (eventually) remediate network problems — potentially reducing both downtime and operational costs. This marks a concrete step toward turning “telco-AI” from pilot projects into operational infrastructure.

As telecom networks scale (5G, 5G-Advanced, edge, IoT), faults and failures become costlier. Automating fault detection and troubleshooting with AI could significantly boost network resilience, reduce manual labor, and enable faster recovery from outages.

“Large Language Models have become instrumental in the pursuit of autonomous, resilient and adaptive networks,” said Prof. Merouane Debbah, General Chair of IEEE GenAINet ETI. “Through this challenge, we are tackling core research and engineering challenges, such as generalisation to unseen network faults, interpretability and edge-efficient AI, that are vital for making AI-native telecom infrastructures a reality. IEEE GenAINet ETI is proud to support this initiative, which serves as a testbed for future-ready innovations across the global telco ecosystem.”

“ITU’s global AI challenges connect innovators with computing resources, datasets, and expert mentors to nurture AI innovation ecosystems worldwide,” said Seizo Onoe, Director of the ITU Telecommunication Standardization Bureau. “Crowdsourcing new solutions and creating conditions for them to scale, our challenges boost business by helping innovations achieve meaningful impact.”

“The future of telecoms depends on the autonomation of network resiliency – shifting from static infrastructure to AI-driven, context-aware, self-optimising networks. TM Forum’s AI-Native Blueprint provides the architectural foundation to make this reality, and the AI Telco Troubleshooting Challenge aligns perfectly to support the industry in moving beyond isolated pilots to production-grade resilient autonomation,” said Guy Lupo, AI and Data Mission lead at TM Forum.

The initiative builds on recent breakthroughs in applying AI to network operations, leveraging curated datasets such as TeleLogs and benchmarking frameworks developed by GSMA and its partners under the GSMA Open-Telco LLM Benchmarks community, which includes a leaderboard that highlights how various LLMs perform on telco-specific use cases.

“Network faults cost operators millions annually and root cause analysis is a critical pain point for operators,” said Louis Powell, Director of AI Technologies at GSMA. “By harnessing AI models capable of reasoning and diagnosing unseen faults, the industry can dramatically improve reliability and reduce operational costs. Through this challenge, we aim to accelerate the development of LLMs that combine reasoning, efficiency and scalability.”

“We are encouraged by the upside of this challenge after our team at AT&T fine-tuned a 4-billion-parameter small language model that topped all other evaluated models on the GSMA Open-Telco LLM Benchmarks (TeleLogs RCA task), including frontier models such as GPT-5, Claude Sonnet 4.5 and Grok-4,” said Andy Markus, Chief Data Officer at AT&T. “This challenge has the right mix of an important business problem and a technical opportunity, and we welcome the industry’s collaboration to take it to the next level.”

The AI Telco Troubleshooting Challenge opens for submissions on 28th November and closes on 1st February 2026, with the winners to be announced at a dedicated prize-giving session at MWC26 Barcelona.

…………………………………………………………………………………………………………………………………………………………………………

Separately, the GSMA Foundry and Khalifa University announced a strategic collaboration to develop “TelecomGPT,” a dedicated LLM for telecom applications, plus an Open-Telco Knowledge Graph based on 3GPP specifications.

These assets are intended to help the industry overcome limitations of general-purpose LLMs, which often struggle with telecom-specific technical contexts.

The plan is to make TelecomGPT and related knowledge tools available to operators, vendors and researchers to accelerate AI-driven telco innovations.

Why it matters: A specialized “telco-native” LLM could improve automation, operations, R&D and standardization efforts — for example, helping operators configure networks, analyze logs, or build AI-powered services. It represents a shift toward embedding AI more deeply into core telecom infrastructure and operations.

…………………………………………………………………………………………………………………………………………………………………………………..

About GSMA

The GSMA is a global organization unifying the mobile ecosystem to discover, develop and deliver innovation foundational to positive business environments and societal change. Our vision is to unlock the full power of connectivity so that people, industry, and society thrive. Representing mobile operators and organizations across the mobile ecosystem and adjacent industries, the GSMA delivers for its members across three broad pillars: Connectivity for Good, Industry Services and Solutions, and Outreach. This activity includes advancing policy, tackling today’s biggest societal challenges, underpinning the technology and interoperability that make mobile work, and providing the world’s largest platform to convene the mobile ecosystem at the MWC and M360 series of events.

We invite you to find out more at gsma.com

About ETSI

ETSI is one of only three bodies officially recognized by the European Union as a European Standards Organization (ESO). It is an independent, not-for-profit body dedicated to ICT standardisation. With over 900 member organizations from more than 60 countries across five continents, ETSI offers an open and inclusive environment for members representing large and small private companies, research institutions, academia, governments, and public organizations. ETSI supports the timely development, ratification, and testing of globally applicable standards for ICT‑enabled systems, applications, and services across all sectors of industry and society. More on: etsi.org

About IEEE GenAINet

The aim of the IEEE Large Generative AI Models in Telecom Emerging Technology Initiative (GenAINet ETI) is to create a dynamic platform of research and innovation for academics, researchers, and industry leaders to advance the research on large generative AI in Telecom, through collaborative efforts across various disciplines, including mathematics, information theory, wireless communications, signal processing, networking, artificial intelligence, and more. More on: https://genainet.committees.comsoc.org

About ITU

The International Telecommunication Union (ITU) is the United Nations agency for digital technologies, driving innovation for people and the planet with 194 Member States and a membership of over 1,000 companies, universities, civil society, and international and regional organizations. Established in 1865, ITU coordinates the global use of the radio spectrum and satellite orbits, establishes international technology standards, drives universal connectivity and digital services, and is helping to make sure everyone benefits from sustainable digital transformation, including the most remote communities. From artificial intelligence (AI) to quantum, from satellites and submarine cables to advanced mobile and wireless broadband networks, ITU is committed to connecting the world and beyond. Learn more: www.itu.int

About TM Forum

TM Forum is an alliance of over 800 organizations spanning the global connectivity ecosystem, including the world’s top ten Communication Service Providers (CSPs), top three hyperscalers and Network Equipment Providers (NEPs), vendors, consultancies and system integrators, large and small. We provide a place for our Members to collaborate, innovate, and deliver lasting change. Together, we are building a sustainable future for the industry in connectivity and beyond. To find out more, visit: www.tmforum.org

References:

The AI Telco Troubleshooting Challenge Launches to Transform Network Reliability

AI Telco Troubleshooting Challenge global launch webinar

GSMA Vision 2040 study identifies spectrum needs during the peak 6G era of 2035–2040

Gartner: Gen AI nearing trough of disillusionment; GSMA survey of network operator use of AI

Expose: AI is more than a bubble; it’s a data center debt bomb

We’ve previously described the tremendous debt that AI companies have assumed, expressing serious doubts that it will ever be repaid. This article expands on that by pointing out the huge losses incurred by the AI startup darlings and that AI poster child OpenAI won’t have the cash to cover its costs (which are greater than most analysts assume). We also quote from the Wall Street Journal, Financial Times, and Barron’s, along with a dire forecast from the Center for Public Enterprise.

In Saturday’s print edition, The Wall Street Journal notes:

OpenAI and Anthropic are the two largest suppliers of generative AI with their chatbots ChatGPT and Claude, respectively, and founders Sam Altman and Dario Amodei have become tech celebrities.

What’s only starting to become clear is that those companies are also sinkholes for AI losses that are the flip side of chunks of the public-company profits.

OpenAI hopes to turn profitable only in 2030, while Anthropic is targeting 2028. Meanwhile, the amounts of money being lost are extraordinary.

It’s impossible to quantify how much cash flowed from OpenAI to big tech companies. But OpenAI’s loss in the quarter equates to 65% of the rise in underlying earnings of Microsoft, Nvidia, Alphabet, Amazon and Meta together. That ignores Anthropic, from which Amazon recorded a profit of $9.5B from its holding in the loss-making company in the quarter.

OpenAI committed to spend $250 billion more on Microsoft’s cloud and has signed a $300 billion deal with Oracle, $22 billion with CoreWeave and $38 billion with Amazon, which is a big investor in rival Anthropic.

OpenAI doesn’t have the income to cover its costs. It expects revenue of $13 billion this year to more than double to $30 billion next year, then to double again in 2027, according to figures provided to shareholders. Costs are expected to rise even faster, and losses are predicted to roughly triple to more than $40 billion by 2027. Things don’t come back into balance even in OpenAI’s own forecasts until total computing costs finally level off in 2029, allowing it to scrape into profit in 2030.
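To make the scale of those forecasts concrete, here is a rough back-of-the-envelope sketch using only the figures quoted above. The 2027 revenue and the cost total are implied values, not numbers reported by the Journal, and the sketch assumes that "losses" means costs minus revenue.

```python
# Figures quoted above, in billions of US dollars.
revenue_2025 = 13.0   # expected revenue "this year"
revenue_2026 = 30.0   # "more than double to $30 billion next year"
loss_2027 = 40.0      # "more than $40 billion by 2027", treated as a lower bound

# "Double again in 2027" gives an implied figure, not a quoted one.
revenue_2027 = revenue_2026 * 2

# Growth multiple from 2025 to 2026.
growth_2026 = revenue_2026 / revenue_2025

# "Roughly triple" implies a current annual loss near one third of the 2027 loss.
implied_current_loss = loss_2027 / 3

# Assumption: loss = costs - revenue, so costs are at least revenue + loss.
implied_costs_2027 = revenue_2027 + loss_2027

print(f"2026 revenue growth: {growth_2026:.2f}x")
print(f"Implied 2027 revenue: ${revenue_2027:.0f}B")
print(f"Implied current annual loss: about ${implied_current_loss:.1f}B")
print(f"Implied 2027 costs: at least ${implied_costs_2027:.0f}B")
```

On these assumptions, OpenAI would need to be spending on the order of $100 billion a year by 2027 to lose $40 billion on roughly $60 billion of revenue.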

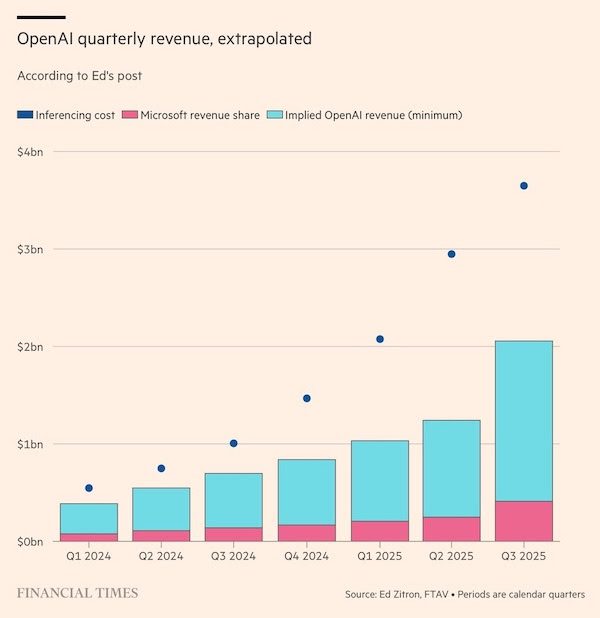

The losses at OpenAI that have helped boost the profits of Big Tech may, in fact, understate the true nature of the problem. According to the Financial Times:

OpenAI’s running costs may be a lot more than previously thought, and that its main backer Microsoft is doing very nicely out of their revenue share agreement.

OpenAI appears to have spent more than $12.4bn at Azure on inference compute alone in the last seven calendar quarters. Its implied revenue for the period was a minimum of $6.8bn. Even allowing for some fudging between annualised run rates and period-end totals, the apparent gap between revenues and running costs is a lot more than has been reported previously.

If the data is accurate, it would call into question the business model of OpenAI and nearly every other general-purpose LLM vendor.

Also, the financing needed to build out the data centers at the heart of the AI boom is increasingly becoming an exercise in creative accounting. The Wall Street Journal reports:

The Hyperion deal is a Frankenstein financing that combines elements of private-equity, project finance and investment-grade bonds. Meta needed such financial wizardry because it already issued a $30B bond in October that roughly doubled its debt load overnight.

Enter Morgan Stanley, with a plan to have someone else borrow the money for Hyperion. Blue Owl invested about $3 billion for an 80% private-equity stake in the data center, while Meta retained 20% for the $1.3 billion it had already spent. The joint venture, named Beignet Investor after the New Orleans pastry, got another $27 billion by issuing bonds that pay off in 2049, $18 billion of which Pimco purchased. That debt is on Beignet’s balance sheet, not Meta’s.
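The capital structure described above can be tallied in a few lines. This is only an illustrative sketch built from the rounded figures in the Journal's account ("about $3 billion", $1.3 billion, $27 billion, $18 billion); it ignores fees, guarantees and any other terms of the deal.

```python
# Rounded figures from the passage above, in billions of US dollars.
blue_owl_equity = 3.0   # "about $3 billion" for an 80% stake
meta_equity = 1.3       # amount Meta had already spent, for its 20% stake
bonds = 27.0            # bonds issued by the joint venture, maturing in 2049
pimco_bonds = 18.0      # portion of the bonds bought by Pimco

total_equity = blue_owl_equity + meta_equity
total_capital = total_equity + bonds

debt_share = bonds / total_capital     # fraction of funding that is debt
debt_to_equity = bonds / total_equity  # leverage multiple
pimco_share = pimco_bonds / bonds      # Pimco's share of the bond issue

print(f"Total capital: ${total_capital:.1f}B")
print(f"Debt share of funding: {debt_share:.1%}")
print(f"Debt-to-equity: {debt_to_equity:.1f}x")
print(f"Pimco share of bonds: {pimco_share:.1%}")
```

On these numbers, debt supplies well over four fifths of the joint venture's funding, and a single buyer holds two thirds of that debt.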

Dan Fuss, vice chairman of Loomis Sayles, told Barron’s, “We are good at taking credit risk,” cheerfully admitting to having the scars to show for it. That is, he added, if they know the credit. But that’s become less clear with the recent spate of mind-bendingly complex megadeals, with myriad entities funding multibillion-dollar data centers. Fuss thinks current data-center deals are too speculative. The risk is too great and future revenue too uncertain. And yields aren’t enough to compensate, he concluded.

Increased wariness about monster hyperscaler borrowings has sent the cost of insuring their debt against default soaring. The cost of credit default swaps (CDS) on Oracle’s debt has more than doubled since September, after it issued $18 billion in public bonds and took out a $38 billion private loan. CoreWeave’s CDS gapped higher this past week, mirroring the slide of the data-center company’s stock.

According to the Bank Credit Analyst (BCA), capex busts weigh on the economy, which further hits asset prices. Following the dot-com bust, a housing bubble grew, which burst in the 2008-09 financial crisis. “It is far from certain that a new bubble will emerge (after the AI bubble bursts) this time around, in which case the resulting recession could be more severe than the one in 2001,” BCA notes.

………………………………………………………………………………………………………………………………………………

The widening gap between the expenditures needed to build out AI data centers and the cash flows generated by the products they enable creates a colossal risk which could crash asset values of AI companies. The Center for Public Enterprise reports that it’s “Bubble or Nothing.”

Should economic conditions in the tech sector sour, the burgeoning artificial intelligence (AI) boom may evaporate—and, with it, the economic activity associated with the boom in data center development.

Circular financing, or “roundabouting,” among so-called hyperscaler tenants—the leading tech companies and AI service providers—create an interlocking liability structure across the sector. These tenants comprise an incredibly large share of the market and are financing each others’ expansion, creating concentration risks for lenders and shareholders.

Debt is playing an increasingly large role in the financing of data centers. While debt is a quotidian aspect of project finance, and while it seems like hyperscaler tech companies can self-finance their growth through equity and cash, the lack of transparency in some recent debt-financed transactions and the interlocked liability structure of the sector are cause for concern.

If there is a sudden stop in new lending to data centers, Ponzi finance units ‘with cash flow shortfalls will be forced to try to make position by selling out position’—in other words to force a fire sale—which is ‘likely to lead to a collapse of asset values.’

The fact that the data center boom is threatened by, at its core, a lack of consumer demand and the resulting unstable investment pathways, is itself an ironic miniature of the U.S. economy as a whole. Just as stable investment demand is the linchpin of sectoral planning, stable aggregate demand is the keystone in national economic planning. Without it, capital investment crumbles.

……………………………………………………………………………………………………………..

Postscript (November 23, 2025):

In addition to cloud/hyperscaler AI spending, AI start-ups (especially OpenAI) and newer IT infrastructure companies (like Oracle) play a prominent role. It’s often a “scratch my back and I’ll scratch yours” type of deal. Let’s look at the “circular financing” arrangement between Nvidia and OpenAI, where capital flows from Nvidia to OpenAI and then back to Nvidia. That ensures Nvidia a massive, long-term customer while providing OpenAI with the necessary capital and guaranteed access to critical, high-demand hardware. Here’s the scoop:

- Nvidia has agreed to invest up to $100 billion in OpenAI over time. This investment will be in cash, likely for non-voting equity shares, and will be made in stages as specific data center deployment milestones are met.

- OpenAI has committed to building and deploying at least 10 gigawatts of AI data center capacity using Nvidia’s silicon and equipment, which will involve purchasing millions of Nvidia’s expensive GPU chips.

Here’s the Circular Flow of this deal:

- Nvidia provides a cash investment to OpenAI.

- OpenAI uses that capital (and potentially raises additional debt using the commitment as collateral) to build new data centers.

- OpenAI then uses the funds to purchase Nvidia GPUs and other data center infrastructure.

- The revenue from these massive sales flows back to Nvidia, helping to justify its soaring stock price and funding further investments.

What’s wrong with such an arrangement, you ask? Anyone remember the dot-com/fiber optic boom and bust? Critics have drawn parallels to the “vendor financing” practices of the dot-com era, arguing these interconnected deals could create a “mirage of growth” and potentially an AI bubble, as the actual organic demand for the products is difficult to assess when companies are essentially funding their own sales.

However, supporters note that, unlike the dot-com bubble, these deals involve the creation of tangible physical assets (data centers and chips) and reflect genuine, booming demand for AI compute capacity, although it’s not at all certain how they’ll be paid for.

There’s a similarly cozy relationship behind the $1B Nvidia invested in Nokia, with the Finnish company now planning to ditch Marvell’s silicon and replace it with the more expensive, power-hungry Nvidia GPUs for its wireless network equipment. Nokia has only now become a strong supporter of Nvidia’s AI RAN (Radio Access Network), which has many telco skeptics.

………………………………………………………………………………………………………………………………………………….

References:

https://www.wsj.com/tech/ai/big-techs-soaring-profits-have-an-ugly-underside-openais-losses-fe7e3184

https://www.ft.com/content/fce77ba4-6231-4920-9e99-693a6c38e7d5

https://www.wsj.com/tech/ai/three-ai-megadeals-are-breaking-new-ground-on-wall-street-896e0023

Can the debt fueling the new wave of AI infrastructure buildouts ever be repaid?

AI Data Center Boom Carries Huge Default and Demand Risks

Big tech spending on AI data centers and infrastructure vs the fiber optic buildout during the dot-com boom (& bust)

AI spending boom accelerates: Big tech to invest an aggregate of $400 billion in 2025; much more in 2026!

Gartner: AI spending >$2 trillion in 2026 driven by hyperscalers data center investments

Amazon’s Jeff Bezos at Italian Tech Week: “AI is a kind of industrial bubble”

FT: Scale of AI private company valuations dwarfs dot-com boom

Indosat Ooredoo Hutchison, Nokia and Nvidia AI-RAN research center in Indonesia amongst telco skepticism

Indosat Ooredoo Hutchison (Indosat), Nokia, and Nvidia have officially launched the AI-RAN Research Centre in Surabaya, a strategic collaboration designed to advance AI-native wireless networks and edge AI applications across Indonesia. This collaboration aims to support Indonesia’s digital transformation goals and its “Golden Indonesia Vision 2045.” The facility will allow researchers and engineers to experiment with combining Nokia’s RAN technologies with Nvidia’s accelerated computing platforms and Indosat’s 5G network.

According to the partners, the research facility will serve as a collaborative environment for engineers, researchers, and future digital leaders to experiment, learn, and co-create AI-powered solutions. Its work will centre on integrating Nokia’s advanced RAN technologies with Nvidia’s accelerated computing platforms and Indosat’s commercial 5G network. The three companies view the project as a foundation for AI-driven growth, with applications spanning education, agriculture, and healthcare.

The AI-RAN infrastructure enables high-performance software-defined RAN and AI workloads on a single platform, leveraging Nvidia’s Aerial RAN Computer 1 (ARC-1). The facility will also act as a distributed computing extension of Indosat’s sovereign AI Factory, a national AI platform powered by Nvidia, creating an “AI Grid” that connects datacentres and distributed 5G nodes to deliver intelligence closer to users.

Nezar Patria, vice minister of communication and digital affairs of the Republic of Indonesia said: “The inauguration of the AI-RAN Research Centre marks a concrete step in strengthening Indonesia’s digital sovereignty. The collaboration between the government, industry, and global partners such as Indosat, Nokia, and Nvidia demonstrates that Indonesia is not merely a user but also a creator of AI technology. This initiative supports the acceleration of the Indonesia Emas 2045 vision by building an inclusive, secure, and globally competitive AI ecosystem.”

Vikram Sinha, president director and CEO of Indosat Ooredoo Hutchison said: “As Indonesia accelerates its digital transformation, the AI-RAN Research Centre reflects Indosat’s larger purpose of empowering Indonesia. When connectivity meets compute, it creates intelligence, delivered at the edge, in a sovereign manner. This is how AI unlocks real impact, from personalised tutors for children in rural areas to precision farming powered by drones. Together with Nokia and Nvidia, we’re building the foundation for AI-driven growth that strengthens Indonesia’s digital future.”

From a network perspective, the project demonstrates how AI-RAN architectures can optimize wireless network performance, energy efficiency, and scalability through machine learning–based radio signal processing.

Ronnie Vasishta, senior vice president of telecom at Nvidia added: “The AI Grid is the biggest opportunity for telecom providers to make AI as ubiquitous as connectivity and distribute intelligence at scale by tapping into their nationwide wireless networks.”

Pallavi Mahajan, chief technology and AI officer at Nokia said: “This initiative represents a major milestone in our journey toward the future of AI-native networks by bringing AI-powered intelligence into the hands of every Indonesian.”

………………………………………………………………………………………………………………………………………………………..

Wireless Telcos are Skeptical about AI-RAN:

According to Light Reading, AI RAN uptake is facing resistance from telcos. The problem is that Nvidia’s AI GPUs are very costly and not power-efficient enough to reside in wireless base stations, central offices or even small telco data centers.

Nvidia references 300 watts for the power consumption of ARC-Pro, which is much higher than the peak of 40 watts that Qualcomm claimed more than two years ago for its own RAN silicon when supporting throughput of 24 Gbit/s. How ARC-Pro would measure up on a like-for-like basis in a commercial network is obviously unclear.

Nvidia also claims a Gbit/s-per-watt performance “on par with” today’s traditional custom silicon. Yet the huge energy consumption of GPU-filled telco data centers does not bear that out.
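A quick sketch shows what "on par" would have to mean in throughput terms. It uses only the figures quoted above and assumes, purely for illustration, that Qualcomm's claimed 40-watt peak at 24 Gbit/s can be compared directly with the 300 watts Nvidia references for ARC-Pro. As noted, a true like-for-like comparison in a commercial network is unclear, so the break-even number is an illustrative threshold, not a measured result.

```python
def gbps_per_watt(throughput_gbps: float, power_watts: float) -> float:
    """Throughput efficiency in Gbit/s per watt."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return throughput_gbps / power_watts


# Figures quoted in the article.
qualcomm_throughput_gbps = 24.0  # throughput Qualcomm cited for its RAN silicon
qualcomm_power_watts = 40.0      # claimed peak power at that throughput
arc_pro_power_watts = 300.0      # power Nvidia references for ARC-Pro

qualcomm_efficiency = gbps_per_watt(qualcomm_throughput_gbps, qualcomm_power_watts)

# Throughput ARC-Pro would need at 300 W to match Qualcomm's Gbit/s per watt.
breakeven_throughput_gbps = (
    arc_pro_power_watts * qualcomm_throughput_gbps / qualcomm_power_watts
)
power_ratio = arc_pro_power_watts / qualcomm_power_watts

print(f"Qualcomm claim: {qualcomm_efficiency:.2f} Gbit/s per watt")
print(f"ARC-Pro draws {power_ratio:.1f}x the power")
print(f"Break-even throughput at 300 W: {breakeven_throughput_gbps:.0f} Gbit/s")
```

In other words, under these assumptions a 300-watt platform would have to sustain about 180 Gbit/s of RAN throughput to match the efficiency Qualcomm claimed.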

“Is there a case for a wide-area indiscriminate rollout? I am not sure,” said Verizon CTO Yago Tenorio, during the Brooklyn 6G Summit, another telecom event, last week. “It depends on the unit cost of the GPU, on the power efficiency of the GPU, and the main factor will always be just doing what’s best for the basestation. Don’t try to just overcomplicate the whole thing monetizing that platform, as there are easier ways to do it.”

“We have no way to justify a business case like that,” said Bernard Bureau, the vice president of wireless strategy for Canada’s Telus, at FYUZ. “Our COs [central offices] are not necessarily the best places to run a data center. It would mean huge investments in space and power upgrades for those locations, and we’ve got sunk investment that can be leveraged in our cell sites.”

Light Reading’s Iain Morris wrote, “Besides turning COs into data centers, operators would need to invest in fiber connections between those facilities and their masts.”

How much spectral efficiency can be gained by using Nvidia GPUs as RAN silicon?

“It’s debatable if it’s going to improve the spectral efficiency by 50% or even 100%. It depends on the case,” said Tenorio. Whatever the exact improvement, it would be “really good” and is something the industry needs, he told the audience.

In April, Nokia’s rival Ericsson said it had tested “AI-native” link adaptation, a RAN algorithm, in the network of Bell Canada without needing any GPU. “That’s an algorithm we have optimized for decades,” said Per Narvinger, the head of Ericsson’s mobile networks business group. “Despite that, through a large language model, but a really small one, we gained 10% of spectral efficiency.”

Before Nvidia invested in Nokia, the latter claimed to have sufficient AI and machine-learning capabilities in the custom silicon provided by Marvell Technology, its historical supplier of 5G base station chips.

Executives at Cohere Technologies praise Nvidia’s investment in Nokia, seeing it as an important AI spur for telecom. Yet their own software does not run on Nvidia GPUs. It promises to boost spectral efficiency on today’s 5G networks, massively reducing what telcos would have to spend on new radios. It has won plaudits from Vodafone’s Pignatelli as well as Bell Canada and Telstra, both of which have invested in Cohere. The challenge is getting the kit vendors to accommodate a technology that could hurt their own sales. Regardless, Bell Canada’s recent field trials of Cohere have used a standard Dell server without GPUs.

Finally, if GPUs are so critical in AI for RAN, why is neither Ericsson nor Samsung using Nvidia GPUs in its RAN equipment?

Morris sums up:

“Currently, the AI-RAN strategy adopted by Nokia looks like a massive gamble on the future. ‘The world is developing on Nvidia,’ Vasishta told Light Reading in the summer, before the company’s share price had gained another 35%. That vast and expanding ecosystem holds attractions for RAN developers bothered by the diminishing returns on investment in custom silicon.”

“Intel’s general-purpose chips and virtual RAN approach drew interest several years ago for all the same reasons. But Intel’s recent decline has made Nvidia shine even more brightly. Telcos might not have to worry. Nvidia is already paying a big 5G vendor (Nokia) to use its technology. For a company that is so outrageously wealthy, paying a big operator to deploy it would be the next logical step.”

…………………………………………………………………………………………………………………………………………………

References:

https://capacityglobal.com/news/indosat-nokia-and-nvidia-launch-ai-ran-research-centre-in-indonesia/

https://www.telecoms.com/ai/indosat-nokia-and-nvidia-open-ai-ran-research-centre-in-indonesia

https://www.lightreading.com/5g/nokia-and-nvidia-s-ai-ran-plan-hits-telco-resistance

https://resources.nvidia.com/en-us-aerial-ran-computer-pro

Nvidia pays $1 billion for a stake in Nokia to collaborate on AI networking solutions

Dell’Oro: AI RAN to account for 1/3 of RAN market by 2029; AI RAN Alliance membership increases but few telcos have joined

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

Dell’Oro: RAN revenue growth in 1Q2025; AI RAN is a conundrum

The case for and against AI-RAN technology using Nvidia or AMD GPUs

AI RAN Alliance selects Alex Choi as Chairman

AI spending boom accelerates: Big tech to invest an aggregate of $400 billion in 2025; much more in 2026!

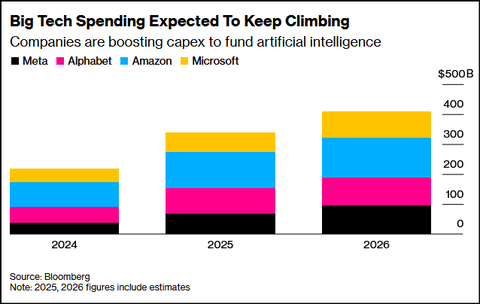

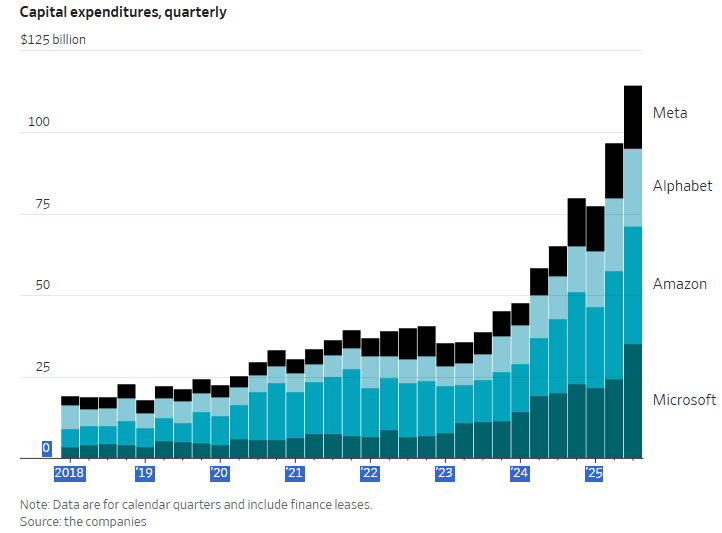

- Meta Platforms says it continues to experience capacity constraints as it simultaneously trains new AI models and supports existing product infrastructure. Meta CEO Mark Zuckerberg described an insatiable appetite for more computing resources that Meta must work to fulfill to ensure it’s a leader in a fast-moving AI race. “We want to make sure we’re not underinvesting,” he said on an earnings call with analysts Wednesday after posting third-quarter results. Meta signaled in the earnings report that capital expenditures would be “notably larger” next year than in 2025, during which Meta expects to spend as much as $72 billion. Zuckerberg indicated that the company’s existing advertising business and platforms are operating in a “compute-starved state.” This condition persists because Meta is allocating more resources toward AI research and development efforts rather than bolstering existing operations.

- Microsoft reported substantial customer demand for its data-center-driven services, prompting plans to double its data center footprint over the next two years. Concurrently, Amazon is working aggressively to deploy additional cloud capacity to meet demand. Amy Hood, Microsoft’s chief financial officer, said: “We’ve been short [on computing power] now for many quarters. I thought we were going to catch up. We are not. Demand is increasing.” She further elaborated, “When you see these kinds of demand signals and we know we’re behind, we do need to spend.”

- Alphabet (Google’s parent company) reported that capital expenditures will jump from $85 billion to between $91 billion and $93 billion. Google CFO Anat Ashkenazi said the investments are already yielding returns: “We already are generating billions of dollars from AI in the quarter. But then across the board, we have a rigorous framework and approach by which we evaluate these long-term investments.”

- Amazon has not provided a specific total dollar figure for its planned AI investment in 2026. However, the company has announced it expects its total capital expenditures (capex) in 2026 to be even higher than its 2025 projection of $125 billion, with the vast majority of this spending dedicated to AI and related infrastructure for Amazon Web Services (AWS).

- Apple: Announced it is also increasing its AI investments, though its overall spending remains smaller in comparison to the other tech giants.

As big as the spending projections were this week, they look pedestrian compared with OpenAI, which has announced roughly $1 trillion worth of AI infrastructure deals of late with partners including Nvidia, Oracle and Broadcom.

Despite the big capex tax write-offs (due to the 2025 GOP tax act), there is a large degree of uncertainty regarding the eventual outcomes of this substantial AI infrastructure spending. The companies themselves, along with numerous AI proponents, assert that these investments are essential for machine-learning systems to achieve artificial general intelligence (AGI), a state where they surpass human intelligence.

Yet skeptics question whether investing billions in large-language models (LLMs), the most prevalent AI system, will ultimately achieve that objective. They also highlight the limited number of paying users for existing technology and the prolonged training period required before a global workforce can effectively utilize it.

During investor calls following the earnings announcements, analysts directed incisive questions at company executives. On Microsoft’s call, one analyst voiced a central market concern, asking: “Are we in a bubble?” Similarly, on the call for Google’s parent company, Alphabet, another analyst questioned: “What early signs are you seeing that gives you confidence that the spending is really driving better returns longer term?”

Bank of America (BofA) credit strategists Yuri Seliger and Sohyun Marie Lee write in a client note that capital spending by five of the Magnificent Seven megacap tech companies (Amazon.com, Alphabet, and Microsoft, along with Meta and Oracle) has been growing even faster than their prodigious cash flows. “These companies collectively may be reaching a limit to how much AI capex they are willing to fund purely from cash flows,” they write. Consensus estimates suggest AI capex will climb to 94% of operating cash flows, minus dividends and share repurchases, in 2025 and 2026, up from 76% in 2024. That’s still less than 100% of cash flows, so they don’t need to borrow to fund spending, “but it’s getting close,” they add.
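The ratio BofA describes is simple arithmetic and can be sketched in a few lines. This is a minimal illustration that assumes the ratio is capex divided by operating cash flow net of dividends and share repurchases, as the wording of the note suggests. The function names and the dollar inputs are hypothetical placeholders, not figures from the BofA note.

```python
def capex_coverage_ratio(capex, operating_cash_flow, dividends, buybacks):
    """Return capex as a fraction of the cash flow left after shareholder payouts."""
    available = operating_cash_flow - dividends - buybacks
    if available <= 0:
        raise ValueError("no cash flow left after dividends and buybacks")
    return capex / available


def borrowing_needed(capex, operating_cash_flow, dividends, buybacks):
    """Return the amount that must be financed externally (0.0 if fully covered)."""
    available = operating_cash_flow - dividends - buybacks
    return max(0.0, capex - available)


# Hypothetical inputs in billions of dollars, chosen only to produce a 94% ratio.
inputs = dict(capex=94.0, operating_cash_flow=150.0, dividends=20.0, buybacks=30.0)
print(f"capex / available cash flow: {capex_coverage_ratio(**inputs):.0%}")
print(f"external financing needed:   {borrowing_needed(**inputs):.1f}")
```

Once the ratio passes 100%, `borrowing_needed` turns positive, which is the point at which the strategists say these companies would have to tap debt markets.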

………………………………………………………………………………………………………………………………………………………………….

Google, which projected a rise in its full-year capital expenditures from $85 billion to a range of $91 billion to $93 billion, indicated that these investments were already proving profitable. Google’s Ashkenazi stated: “We already are generating billions of dollars from AI in the quarter. But then across the board, we have a rigorous framework and approach by which we evaluate these long-term investments.”

Microsoft reported that it expects to face capacity shortages that will affect its ability to power both its current businesses and AI research needs until at least the first half of the next year. The company noted that its cloud computing division, Azure, is absorbing “most of the revenue impact.”

Amazon informed investors of its expedited efforts to bring new capacity online, citing its ability to immediately monetize these investments.

“You’re going to see us continue to be very aggressive in investing capacity because we see the demand,” said Amazon Chief Executive Andy Jassy. “As fast as we’re adding capacity right now, we’re monetizing it.”

Meta’s chief financial officer, Susan Li, stated that the company’s capital expenditures—which have already nearly doubled from last year to $72 billion this year—will grow “notably larger” in 2026, though specific figures were not provided. Meta brought this year’s biggest investment-grade corporate bond deal to market, totaling some $30 billion, the latest in a parade of recent data-center borrowing.

Apple confirmed during its earnings call that it is also increasing investments in AI. However, its total spending levels remain significantly lower compared to the outlays planned by the other major technology firms.

Skepticism and Risk:

While proponents argue the investments are necessary for AGI and offer a competitive advantage, skeptics question if huge spending (capex) on AI infrastructure and large-language models will achieve this goal and point to limited paying users for current AI technology. Meta CEO Zuckerberg addressed this by telling investors the company would “simply pivot” if its AGI spending strategy proves incorrect.

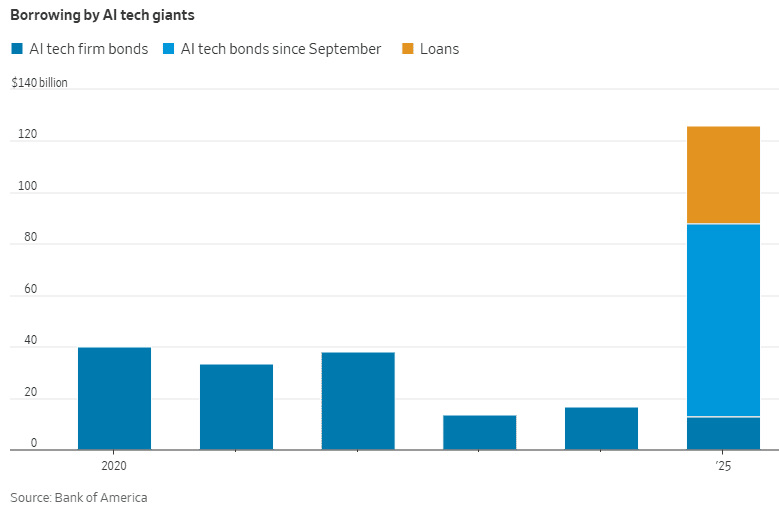

The mad scramble by mega tech companies and OpenAI to build AI data centers relies largely on debt markets, with a slew of public and private mega deals since September. Hyperscalers would have to spend 94% of operating cash flow to pay for their AI buildouts, so they are turning to debt financing to help defray some of that cost, according to Bank of America. Unlike earnings per share, cash flow can’t be manipulated by companies. If they spend more on AI than they generate internally, they have to finance the difference.

Hyperscaler debt offerings so far this year have raised almost as much money as all debt financings done between 2020 and 2024, the BofA research said. BofA calculates $75 billion of AI-related public debt offerings in the past two months alone.

In bubbles, everyone gets caught up in the idea that spending on the hot theme will eventually deliver vast profits. When the bubble is big enough, it shifts the focus of the market as a whole from disliking capital expenditure, and hating speculative capital spending in particular, to loving it. That certainly seems the case today with surging AI spending. For much more, please check out the References below.

Postscript: November 23, 2025:

In this new AI era, consumers and workers are not what drives the economy anymore. Instead, it’s spending on all things AI, mostly with borrowed money or circular financing deals.

BofA Research noted that Meta and Oracle issued $75 billion in bonds and loans in September and October 2025 alone to fund AI data center build outs, an amount more than double the annual average over the past decade. They warned that “The AI boom is hitting a money wall” as capital expenditures consume a large portion of free cash flow. Separately, a recent Bank of America Global Fund Manager Survey found that 53% of participating fund managers felt that AI stocks had reached bubble proportions. This marked a slight decrease from a record 54% in the prior month’s survey, but the concern has grown over time, with the “AI bubble” cited as the top “tail risk” by 45% of respondents in the November 2025 poll.

JPMorgan Chase estimates that up to $7 trillion of AI spending will be financed with borrowed money. “The question is not ‘which market will finance the AI-boom?’ Rather, the question is ‘how will financings be structured to access every capital market?’” according to strategists at the bank led by Tarek Hamid.

As an example of AI debt financing, Meta did a $27 billion bond offering. It wasn’t on their balance sheet. They paid 100 basis points over what it would cost to put it on their balance sheet. Special purpose vehicles happen at the tail end of the cycle, not the early part of the cycle, notes Rajiv Jain of GQG Partners.

References:

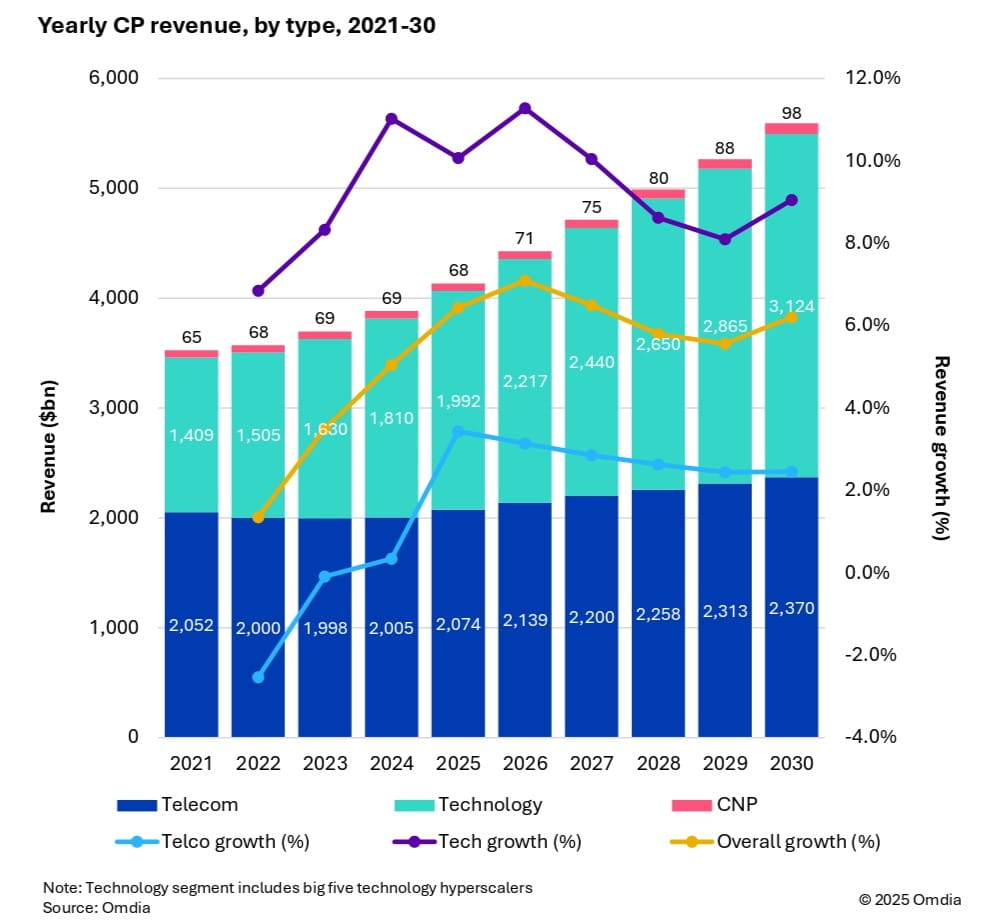

Market research firms Omdia and Dell’Oro: impact of 6G and AI investments on telcos

Market research firm Omdia (owned by Informa) this week forecast that 6G and AI investments are set to drive industry growth in the global communications market. As a result, global communications providers’ revenue is expected to reach $5.6 trillion by 2030, growing at a 6.2% CAGR from 2025. Investment momentum is also expected to shift toward mobile networks from 2028 onward, as tier 1 markets prepare for 6G deployments. Telecoms capex is forecast to reach $395 billion by 2030, with a 3.6% CAGR, while technology capex will surge to $545 billion, reflecting a 9.3% CAGR.
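For readers who want to sanity-check the growth figures, a compound annual growth rate (CAGR) can be inverted to recover the implied starting value. The sketch below is illustrative only: it assumes all three CAGRs span the five years from 2025 to 2030 (Omdia states that window explicitly only for revenue), and the function and variable names are hypothetical.

```python
def implied_base(future_value, cagr, years):
    """Back out the starting value implied by an end value and a CAGR."""
    return future_value / (1.0 + cagr) ** years


def project(base_value, cagr, years):
    """Compound a starting value forward at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years


# 2030 forecasts quoted above: (value in 2030, CAGR).
# The 2025-2030 window for the two capex lines is an assumption.
forecasts = {
    "CP revenue ($ trillion)": (5.6, 0.062),
    "Telecoms capex ($ billion)": (395.0, 0.036),
    "Technology capex ($ billion)": (545.0, 0.093),
}

YEARS = 5
for name, (value_2030, cagr) in forecasts.items():
    base = implied_base(value_2030, cagr, YEARS)
    print(f"{name}: implied 2025 base ~ {base:.2f}")
```

Under these assumptions the revenue forecast implies a 2025 base a little above $4.1 trillion; `project` runs the same calculation forward.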

Fixed telecom capex will gradually decline due to market saturation. Meanwhile, AI infrastructure, cloud services, and digital sovereignty policies are driving telecom operators to expand data centers and invest in specialized hardware.

Key market trends:

- Communications provider (CP) capex per person will increase from $74 in 2024 to $116 in 2030, with CP capex reaching 2.5% of global GDP investment.

- Capital intensity in telecom will decline until 2027, then rise due to mobile network upgrades.

- Regional leaders in revenue and capex include North America, Oceania & Eastern Asia, and Western Europe, with Central & Southern Asia showing the highest growth potential.

Dario Talmesio, research director at Omdia, said: “Telecom operators are entering a new phase of strategic investment. With 6G on the horizon and AI infrastructure demands accelerating, the connectivity business is shifting from volume-based pricing to value-driven connectivity.”

Omdia’s forecast is based on a comprehensive model incorporating historical data from 67 countries, local market dynamics, regulatory trends, and technology migration patterns.

…………………………………………………………………………………………………………………………………………………

Separately, Dell’Oro Group sees 6G capex ramping around 2030, although it warns that the RAN market remains flat, “raising key questions for the industry’s future.” Cumulative 6G RAN investments over the 2029-2034 period are projected to account for 55% to 60% of the total RAN capex over the same forecast period.

“Our long-term position and characterization of this market have not changed,” said Stefan Pongratz, Vice President of RAN and Telecom Capex research at Dell’Oro Group. “The RAN network plays a pivotal role in the broader telecom market. There are opportunities to expand the RAN beyond the traditional MBB (mobile broadband) use cases. At the same time, there are serious near-term risks tilted to the downside, particularly when considering the slowdown in data traffic,” continued Pongratz.

Additional highlights from Dell’Oro’s October 2025 6G Advanced Research Report:

- The baseline scenario is for the broader RAN market to stay flat over the next 10 years. This is built on the assumption that the mobile network will run into utilization challenges by the end of the decade, spurring a 6G capex ramp dominated by Massive MIMO systems in the Sub-7 GHz/cmWave spectrum, utilizing the existing macro grid as much as possible.

- The report also outlines more optimistic and pessimistic growth scenarios, depending largely on the mobile data traffic growth trajectory and the impact beyond MBB, including private wireless and FWA (fixed wireless access).

- Cumulative 6G RAN investments over the 2029-2034 period are projected to account for 55 to 60 percent of the total RAN capex over the same forecast period.

Dell’Oro Group’s 6G Advanced Research Report offers an overview of the RAN market by technology, with tables covering manufacturers’ revenue for total RAN over the next 10 years. 6G RAN is analyzed by spectrum (Sub-7 GHz, cmWave, mmWave), by Massive MIMO, and by region (North America, Europe, Middle East and Africa, China, Asia Pacific Excl. China, and CALA).

References:

https://www.lightreading.com/6g/6g-momentum-is-building

6G Capex Ramp to Start Around 2030, According to Dell’Oro Group

https://www.lightreading.com/6g/6g-course-correction-vendors-hear-mno-pleas

https://www.lightreading.com/6g/what-at-t-really-wants-from-6g

Nvidia pays $1 billion for a stake in Nokia to collaborate on AI networking solutions

This is not only astonishing but unheard of: Nvidia, the world’s largest and most popular fabless semiconductor company, is taking a $1 billion stake in Nokia, a telecom equipment vendor that is reinventing itself as a data center connectivity company.

Indeed, GPU king Nvidia will pay $1 billion for a 2.9% stake in Nokia as part of a deal focused on AI and data centers, the Finnish telecom equipment maker said on Tuesday. Nokia’s shares hit their highest level in nearly a decade on hopes that AI will lift its revenue and profits. The nonexclusive partnership and the investment will make Nvidia the second-largest shareholder in Nokia. Nokia said it will issue 166,389,351 new shares for Nvidia, which the U.S. company will subscribe to at $6.01 per share.
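The share count and subscription price quoted by Nokia can be cross-checked against the headline $1 billion figure. The sketch below uses only the numbers stated above. The implied total share count is a rough estimate that assumes the 2.9% stake is measured against the share base after the new issuance, which the announcement as quoted here does not spell out.

```python
from decimal import Decimal

# Figures quoted in Nokia's announcement.
NEW_SHARES = 166_389_351
PRICE_PER_SHARE = Decimal("6.01")  # U.S. dollars
STAKE = Decimal("0.029")           # 2.9%

# Total subscription price: shares issued times price per share.
investment = NEW_SHARES * PRICE_PER_SHARE

# Assumption: the 2.9% stake is relative to the post-issuance share count.
implied_total_shares = NEW_SHARES / STAKE

print(f"Subscription value: ${investment:,.2f}")
print(f"Implied total shares: {implied_total_shares:,.0f}")
```

The product comes out within a dollar of $1 billion, which suggests the share count was sized to hit that round figure at the $6.01 price.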

Nokia said the companies will collaborate on artificial intelligence networking solutions and explore opportunities to include its data center communications products in Nvidia’s future AI infrastructure plans. Nokia and its Swedish rival Ericsson both make networking equipment for connectivity inside (intra-) data centers and between (inter-) data centers and have been benefiting from increased AI use.

Summary:

- NVIDIA and Nokia to establish a strategic partnership to enable accelerated development and deployment of next generation AI native mobile networks and AI networking infrastructure.

- NVIDIA introduces NVIDIA Arc Aerial RAN Computer, a 6G-ready telecommunications computing platform.

- Nokia to expand its global access portfolio with new AI-RAN product based on NVIDIA platform.

- T-Mobile U.S. is working with Nokia and NVIDIA to integrate AI-RAN technologies into its 6G development process.

- Collaboration enables new AI services and improved consumer experiences to support explosive growth in mobile AI traffic.

- Dell Technologies provides PowerEdge servers to power new AI-RAN solution.

- Partnership marks turning point for the industry, paving the way to AI-native 6G by taking AI-RAN to innovation and commercialization at a global scale.

In some respects, this new partnership competes with Nvidia’s own data center connectivity solutions from its Mellanox Technologies division, which it acquired for $6.9 billion in 2019. Meanwhile, Nokia now claims to have worked on a redesign to ensure its RAN software is compatible with Nvidia’s compute unified device architecture (CUDA) platform, meaning it can run on Nvidia’s GPUs. Nvidia has also modified its hardware offer, creating capacity cards that will slot directly into Nokia’s existing AirScale baseband units at mobile sites.

Having dethroned Intel several years ago, Nvidia now has a near-monopoly in supplying GPU chips for data centers and has partnered with companies ranging from OpenAI to Microsoft. AMD is a distant second but is gaining ground in the data center GPU space, as is ARM Ltd with its RISC CPU cores. Capital expenditure on data center infrastructure is expected to exceed $1.7 trillion by 2030, according to consulting firm McKinsey, largely because of the expansion of AI.

Nvidia CEO Jensen Huang said the deal would help make the U.S. the center of the next revolution in 6G. “Thank you for helping the United States bring telecommunication technology back to America,” Huang said in a speech in Washington, addressing Nokia CEO Justin Hotard (ex-Intel). “The key thing here is it’s American technology delivering the base capability, which is the accelerated computing stack from Nvidia, now purpose-built for mobile,” Hotard told Reuters in an interview. “Jensen and I have been talking for a little bit and I love the pace at which Nvidia moves,” Hotard said. “It’s a pace that I aspire for us to move at Nokia.” He expects the new equipment to start contributing to revenue from 2027 as it goes into commercial deployment, first with 5G, followed by 6G after 2030.

Nvidia has been on a spending spree in recent weeks. The company in September pledged to invest $5 billion in beleaguered chip maker Intel. The investment pairs the world’s most valuable company, which has been a darling of the AI boom, with a chip maker that has almost completely fallen out of the AI conversation.

Later that month, Nvidia said it planned to invest up to $100 billion in OpenAI over an unspecified period that will likely span at least a few years. The partnership includes plans for an enormous data-center build-out and will allow OpenAI to build and deploy at least 10 gigawatts of Nvidia systems.

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………

Tech Details:

Nokia uses Marvell Physical Layer (1) baseband chips for many of its products. Among other things, this ensured Nokia had a single software stack for all its virtual and purpose-built RAN products. Pallavi Mahajan, who recently joined Nokia as chief technology and AI officer, told Light Reading that the company’s software could easily adapt to run on Nvidia’s GPUs: “We built a hardware abstraction layer so that whether you are on Marvell, whether you are on any of the x86 servers or whether you are on GPUs, the abstraction takes away from that complexity, and the software is still the same.”

Fully independent software has been something of a Holy Grail for the entire industry. It would have ramifications for the whole market and its economics. Yet Nokia has conceivably been able to minimize the effort required to put its Layer 1 and specific higher-layer functions on a GPU. “There are going to be pieces of the software that are going to leverage on the accelerated compute,” said Mahajan. “That’s where we will bring in the CUDA integration pieces. But it’s not the entire software,” she added. The appeal of Nvidia as an alternative was largely to be found in “the programmability pieces that you don’t have on any other general merchant silicon,” said Mahajan. “There’s also this entire ecosystem, the CUDA ecosystem, that comes in.” One of Nvidia’s most eye-catching recent moves is the decision to “open source” Aerial, its own CUDA-based RAN software framework, so that other developers can tinker, she says. “What it now enables is the entire ecosystem to go out and contribute.”

…………………………………………………………………………………………………………………………………………………………………………………………………………………………………

Quotes:

“Telecommunications is a critical national infrastructure — the digital nervous system of our economy and security,” said Jensen Huang, founder and CEO of NVIDIA. “Built on NVIDIA CUDA and AI, AI-RAN will revolutionize telecommunications — a generational platform shift that empowers the United States to regain global leadership in this vital infrastructure technology. Together with Nokia and America’s telecom ecosystem, we’re igniting this revolution, equipping operators to build intelligent, adaptive networks that will define the next generation of global connectivity.”

“The next leap in telecom isn’t just from 5G to 6G — it’s a fundamental redesign of the network to deliver AI-powered connectivity, capable of processing intelligence from the data center all the way to the edge. Our partnership with NVIDIA, and their investment in Nokia, will accelerate AI-RAN innovation to put an AI data center into everyone’s pocket,” said Justin Hotard, President and CEO of Nokia. “We’re proud to drive this industry transformation with NVIDIA, Dell Technologies, and T-Mobile U.S., our first AI-RAN deployments in T-Mobile’s network will ensure America leads in the advanced connectivity that AI needs.”

……………………………………………………………………………………………………………………………………………………………………………………

Editor’s Notes:

1. In more advanced 5G networks, Physical Layer functions have demanded the support of custom silicon, or “accelerators.” A technique known as “lookaside,” favored by Ericsson and Samsung, uses an accelerator for only a single problematic Layer 1 task – forward error correction – and keeps everything else on the CPU. Nokia prefers the “inline” approach, which puts the whole of Layer 1 on the accelerator.

2. The huge AI-RAN push that Nvidia started with the formation of the AI-RAN Alliance in early 2024 has not met with an enthusiastic telco response so far. Results from Nokia as well as Ericsson show wireless network operators are spending less on 5G rollouts than they were in the early 2020s. Telco numbers indicate the appetite for smartphone and other mobile data services has not produced any sales growth. As companies prioritize efficiency above all else, baseband units that include Marvell and Nvidia cards may seem too expensive.

……………………………………………………………………………………………………………………………………………………………………………………….

Other Opinions and Quotes:

Nvidia chips are likely to be more expensive, said Mads Rosendal, analyst at Danske Bank Credit Research, but the proposed partnership would be mutually beneficial, given Nvidia’s large share in the U.S. data center market.

“This is a strong endorsement of Nokia’s capabilities,” said PP Foresight analyst Paolo Pescatore. “Next-generation networks, such as 6G, will play a significant role in enabling new AI-powered experiences,” he added.

Iain Morris, International Editor, Light Reading: “Layer 1 control software runs on ARM RISC CPU cores in both Marvell and Nvidia technologies. The bigger differences tend to be in the hardware acceleration “kernels,” or central components, which have some unique demands. Yet Nokia has been working to put as much as it possibly can into a bucket of common software. Regardless, if Nvidia is effectively paying for all this with its $1 billion investment, the risks for Nokia may be small………….Nokia’s customers will in future have an AI-RAN choice that limits or even shrinks the floorspace for Marvell. The development also points to more subtle changes in Nokia’s thinking. The message earlier this year was that Nokia did not require a GPU to implement AI for RAN, whereby machine-generated algorithms help to improve network performance and efficiency. Marvell had that covered because it had incorporated AI and machine-learning technologies into the baseband chips used by Nokia.”

“If you start doing inline, you typically get much more locked into the hardware,” said Per Narvinger, the president of Ericsson’s mobile networks business group, on a recent analyst call. During its own trials with Nvidia, Ericsson said it was effectively able to redeploy virtual RAN software written for Intel’s x86 CPUs on the Grace CPU with minimal changes, leaving the GPU only as a possible option for the FEC accelerator. Putting the entire Layer 1 on a GPU would mean “you probably also get more tightly into that specific implementation,” said Narvinger. “Where does it really benefit from having that kind of parallel compute system?”

………………………………………………………………………………………………………………………………………………….

Separately, Nokia and Nvidia will partner with T-Mobile U.S. to develop and test AI RAN technologies for developing 6G, Nokia said in its press release. Trials are expected to begin in 2026, focused on field validation of performance and efficiency gains for customers.

References:

https://nvidianews.nvidia.com/news/nvidia-nokia-ai-telecommunications

https://www.reuters.com/world/europe/nvidia-make-1-billion-investment-finlands-nokia-2025-10-28/

https://www.lightreading.com/5g/nvidia-takes-1b-stake-in-nokia-which-promises-5g-and-6g-overhaul

https://www.wsj.com/business/telecom/nvidia-takes-1-billion-stake-in-nokia-69f75bb6

Analysis: Cisco, HPE/Juniper, and Nvidia network equipment for AI data centers

Nvidia’s networking solutions give it an edge over competitive AI chip makers

Telecom sessions at Nvidia’s 2025 AI developers GTC: March 17–21 in San Jose, CA

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

FT: Nvidia invested $1bn in AI start-ups in 2024

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Highlights of Nokia’s Smart Factory in Oulu, Finland for 5G and 6G innovation

Nokia & Deutsche Bahn deploy world’s first 1900 MHz 5G radio network meeting FRMCS requirements

Will the wave of AI generated user-to/from-network traffic increase spectacularly as Cisco and Nokia predict?

Indosat Ooredoo Hutchison and Nokia use AI to reduce energy demand and emissions

Verizon partners with Nokia to deploy large private 5G network in the UK

Reuters: US Department of Energy forms $1 billion AI supercomputer partnership with AMD

The U.S. Department of Energy has formed a $1 billion partnership with Advanced Micro Devices (AMD) to construct two supercomputers that will tackle large scientific problems ranging from nuclear power to cancer treatments to national security, U.S. Energy Secretary Chris Wright and AMD CEO Lisa Su told Reuters.

The U.S. is building the two machines to ensure the country has enough supercomputers to run increasingly complex experiments that require harnessing enormous amounts of data-crunching capability. The machines can accelerate the process of making scientific discoveries in areas the U.S. is focused on.

U.S. Energy Secretary Wright said the systems would “supercharge” advances in nuclear power, fusion energy, technologies for defense and national security, and the development of drugs. Scientists and companies are trying to replicate nuclear fusion, the reaction that fuels the sun, by jamming light atoms in a plasma gas under intense heat and pressure to release massive amounts of energy. “We’ve made great progress, but plasmas are unstable, and we need to recreate the center of the sun on Earth,” Wright told Reuters.

The second, more advanced computer, called Discovery, will be based around AMD’s MI430 series of AI chips, which are tuned for high-performance computing.

184K global tech layoffs in 2025 to date; ~27.3% related to AI replacing workers

As of October, over 184,000 global tech jobs were cut in 2025, according to a report from Silicon Valley Business Journal. Of these, 50,184 were directly related to the implementation of artificial intelligence (AI) and automation tools by businesses. Silicon Valley’s AI boom has been pummeling headcounts across major companies in the region and globally. U.S. companies accounted for about 123,000 of the layoffs.
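The percentages in the headline follow directly from the counts in the report. A quick check, treating the "over 184,000" total as exactly 184,000 (an approximation, since the report gives it as a floor):

```python
def share_pct(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


TOTAL_LAYOFFS = 184_000  # approximation: the report says "over 184,000"
AI_RELATED = 50_184
US_BASED = 123_000

print(f"AI-related share: {share_pct(AI_RELATED, TOTAL_LAYOFFS):.1f}%")
print(f"U.S. share:       {share_pct(US_BASED, TOTAL_LAYOFFS):.1f}%")
```

The first figure matches the roughly 27.3% cited in the headline, and the second is consistent with the report's description of U.S. companies as about two-thirds of all cuts.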

These are the 10 tech companies with the most significant mass layoffs since January 2025:

- Intel: 33,900 layoffs. The company has cited the need to reduce costs and restructure its organization after years of technical and financial setbacks.

- Microsoft: 19,215 layoffs. The tech giant has conducted multiple rounds of cuts throughout the year across various departments as it prioritizes AI investments.

- TCS: 12,000 layoffs. As a major IT firm, Tata Consultancy Services’ cuts largely affected mid-level and senior positions, which are becoming redundant due to AI and evolving client demands.

- Accenture: 11,000 layoffs. The consulting company reduced its headcount as it shifts toward greater automation and AI-driven services.

- Panasonic: 10,000 layoffs. The Japanese manufacturer announced these job cuts as part of a strategy to improve efficiency and focus on core business areas.

- IBM: 9,000 layoffs. The cuts are part of a restructuring effort to shift some roles to India and align the workforce with areas like AI and hybrid cloud. The layoffs were reportedly concentrated in certain teams, including the Cloud Classic division, and impacted locations such as Raleigh, New York, Dallas, and California.

- Amazon: 5,555 layoffs. Cuts have impacted various areas, including the Amazon Web Services (AWS) cloud unit and the consumer retail business.

- Salesforce: 5,000 layoffs. Many of these cuts impacted the customer service division, where AI agents now handle a significant portion of client interactions.

- STMicro: 5,000 cuts over the next three years, including 2,800 job cuts announced earlier this year. Around 2,000 employees will leave the Franco-Italian chipmaker through attrition, bringing the total count with voluntary departures to 5,000, chief executive Jean-Marc Chery said at a June 4 event in Paris hosted by BNP Paribas.

- Meta: 3,720 layoffs. The company has made multiple rounds of cuts targeting “low-performers” and positions within its AI and virtual reality divisions. More details below.

……………………………………………………………………………………………………………………………………………………………………..

In August, Cisco announced layoffs of 221 employees in the San Francisco Bay Area, affecting roles in Milpitas and San Francisco. The move came despite strong financial results and directly contradicted the CEO’s previous statement that the company would not cut jobs in favor of AI. The cuts, which included software engineering roles, are part of the company’s broader strategy to streamline operations and focus on AI.

About two-thirds of all job cuts — roughly 123,000 positions — came from U.S.-based companies, with the remainder spread across mainly Ireland, India and Japan. The report compiles data from WARN notices, TrueUp, TechCrunch and Layoffs.fyi through Oct. 21st.

- Shift to AI and automation: Many companies are restructuring their workforce to focus on AI-centric growth and are automating tasks previously done by human workers, particularly in customer service and quality assurance.

- Economic headwinds: Ongoing economic uncertainty, inflation, and higher interest rates are prompting tech companies to cut costs and streamline operations.

- Market corrections: Following a period of rapid over-hiring, many tech companies are now “right-sizing” their staff to become leaner and more efficient.

References:

Report: Broadcom Announces Further Job Cuts as Global Tech Layoffs Approach 185,000 in 2025

Tech layoffs continue unabated: pink slip season in hard-hit SF Bay Area

HPE cost reduction campaign with more layoffs; 250 AI PoC trials or deployments

High Tech Layoffs Explained: The End of the Free Money Party

Massive layoffs and cost cutting will decimate Intel’s already tiny 5G network business

Big Tech post strong earnings and revenue growth, but cuts jobs along with Telecom Vendors

Telecom layoffs continue unabated as AT&T leads the pack – a growth engine with only 1% YoY growth?

Cisco restructuring plan will result in ~4100 layoffs; focus on security and cloud based products

Cloud Computing Giants Growth Slows; Recession Looms, Layoffs Begin

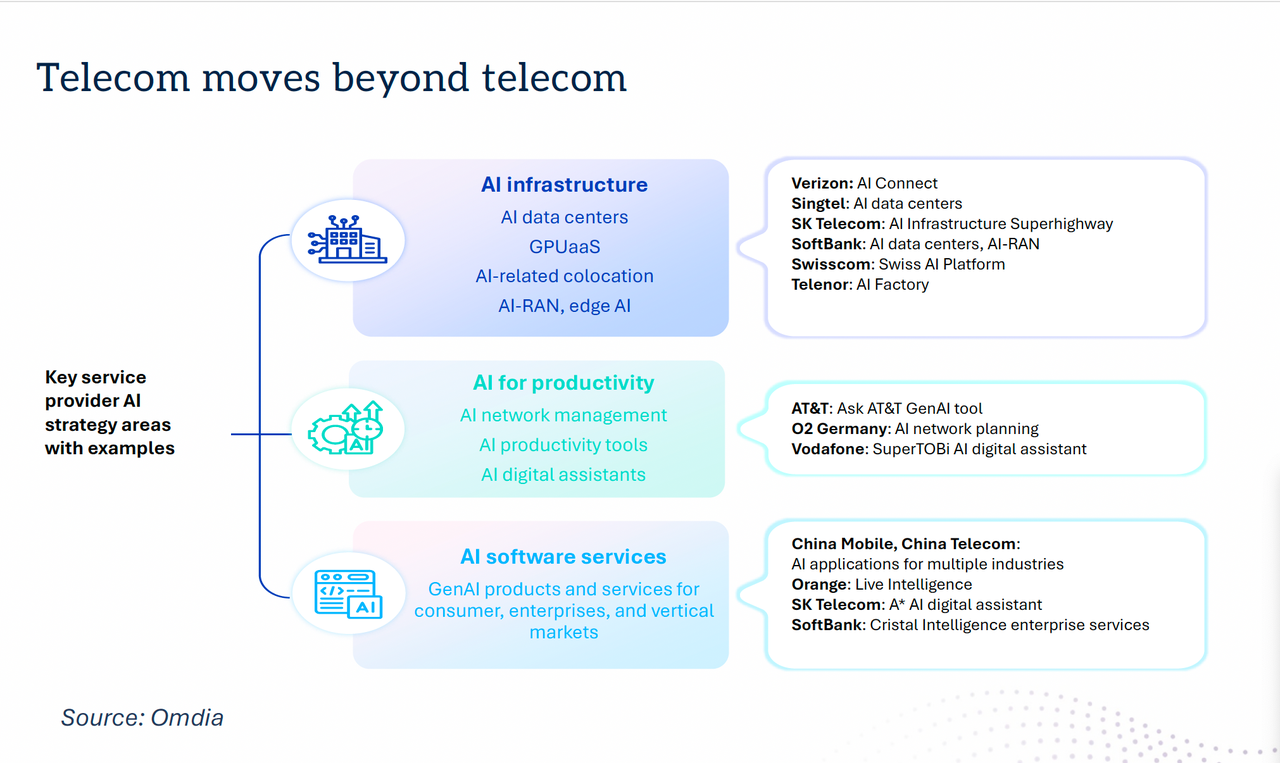

Omdia: How telcos will evolve in the AI era

Dario Talmesio, research director, service provider, strategy and regulation at market research firm Omdia (owned by Informa) sees positive signs for network operators.

“After many years of plumbing, now telecom operators are starting to see some of the benefits of their network and beyond network strategies. Furthermore, the investor community is now appreciating telecom investments, after many years of poor valuation,” he said during his analyst keynote presentation at Network X, a conference organized by Light Reading and Informa in Paris, France last week.

“What has changed in the telecoms industry over the past few years is the fact that we are no longer in a market that is in contraction,” he said. Although telcos are generally not seeing double-digit percentage increases in revenue or profit, “it’s a reliable business … a business that is able to provide cash to investors.”

Omdia forecasts that global telecoms revenue will have a CAGR of 2.8% in the 2025-2030 timeframe. In addition, the industry has delivered two consecutive years of record free cash flow, above 17% of sales.

However, Omdia found that telcos have reduced capex, which is trending towards 15% of revenues. Opex fell by 0.2% in 2024 and is broadly flatlining. There was a 2.2% decline in global labor opex following the challenging trend in 2023, when labor opex increased by 4% despite notable layoffs.

“Overall, the positive momentum is continuing, but of course there is more work to be done on the efficiency side,” Talmesio said. He added that it is also still too early to say what impact AI investments will have over the longer term. “All the work that has been done so far is still largely preparatory, with visible results expected to materialize in the near(ish) future,” he added. His Network X keynote presentation addressed the following questions:

- How will telcos evolve their operating structures and shift their business focuses in the next 5 years?

- AI, cloud and more to supercharge efficiencies and operating models?

- How will big tech co-opetition evolve and impact traditional telcos?

Customer care was seen as the area first impacted by AI, building on existing GenAI implementations. In contrast, network operations are expected to ultimately see the most significant impact of agentic AI.

Talmesio said many of the building blocks are in place for telecoms services and future revenue generation, with several markets reaching 60% to 70% fiber coverage, and some even approaching 100%.

Network operators are now moving beyond monetizing pure data access and are able to charge more for different gigabit speeds, home gaming, more intelligent home routers and additional WiFi access points, smart home services such as energy, security and multi-room video, and more.

While noting that connectivity remains the most important revenue driver, when contributions from various telecoms-adjacent services are added up “it becomes a significant number,” Talmesio said.

Mobile networks are another important building block. While acknowledging that 5G has been something of a disappointment in the first five years of the deployment cycle, “this is really changing” as more operators deploy 5G standalone (5G SA core) networks, Omdia observed.

Talmesio said: “At the end of June, there were only 66 telecom operators launching or commercially using 5G SA. But those 66 operators are those operators that carry the majority of the world’s 5G subscribers. And with 5G SA, we have improved latency and more devices among other factors. Monetization is still in its infancy, perhaps, but then you can see some really positive progress in 5G Advanced, where as of June, we had 13 commercial networks available with some good monetization examples, including uplink.”

“Telecom is moving beyond telecoms,” Talmesio said, pointing to a number of new AI strategies now in place. For example, telcos are increasingly providing AI infrastructure in their data centers, offering GPU-as-a-service, AI-related colocation, AI-RAN and edge AI functionality.

Dario Talmesio, Omdia

……………………………………………………………………………………………………………………………………………………

AI is also being used for network management, with AI productivity tools and AI digital assistants, as well as AI software services including GenAI products and services for consumer, enterprises and vertical markets.

“There is an additional boost for telecom operators to move beyond connectivity, which is the sovereignty agenda,” Talmesio noted. While sovereignty in the past was largely applied to data residency, “in reality, there are more and more aspects of sovereignty that are in many ways facilitating telecom operators in retaining or entering business areas that probably ten years ago were unthinkable for them.” These include cloud and data center infrastructure, sovereign AI, cyberdefense and quantum safety, satellite communication, data protection and critical communications.

“The telecom business is definitely improving,” Talmesio concluded, noting that the market is now also being viewed more favorably by investors. “In many ways, the glass is maybe still half full, but there’s more water being poured into the telecom industry.”

References:

https://networkxevent.com/speakers/dario-talmesio/

https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/pushing-telcos-ai-envelope-on-capital-decisions

Omdia on resurgence of Huawei: #1 RAN vendor in 3 out of 5 regions; RAN market has bottomed

Omdia: Huawei increases global RAN market share due to China hegemony

Dell’Oro & Omdia: Global RAN market declined in 2023 and again in 2024

Omdia: Cable network operators deploy PONs

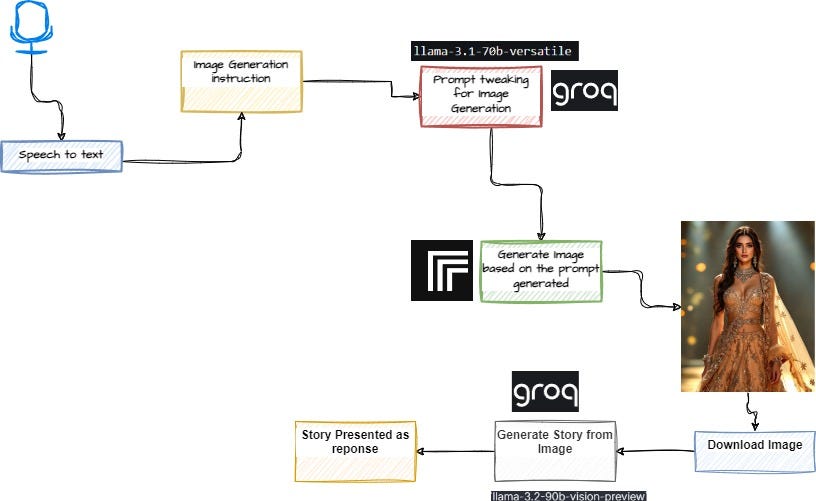

IBM and Groq Partner to Accelerate Enterprise AI Inference Capabilities

IBM and Groq [1.] today announced a strategic market and technology partnership designed to give clients immediate access to Groq’s inference technology, GroqCloud, on watsonx Orchestrate, providing high-speed AI inference capabilities at a cost that helps accelerate agentic AI deployment. As part of the partnership, Groq and IBM plan to integrate and enhance Red Hat open source vLLM technology with Groq’s LPU architecture. IBM Granite models are also planned to be supported on GroqCloud for IBM clients.

………………………………………………………………………………………………………………………………………………….

Note 1. Groq is a privately held company founded by Jonathan Ross in 2016. As a startup, its ownership is distributed among its founders, employees, and a variety of venture capital and institutional investors including BlackRock Private Equity Partners. Groq developed the LPU and GroqCloud to make compute faster and more affordable. The company says it is trusted by over two million developers and teams worldwide and is a core part of the American AI Stack.

Note that Grok, a conversational AI assistant developed by Elon Musk’s xAI, is a completely different entity.

………………………………………………………………………………………………………………………………………………….

Enterprises moving AI agents from pilot to production still face challenges with speed, cost, and reliability, especially in mission-critical sectors like healthcare, finance, government, retail, and manufacturing. This partnership combines Groq’s inference speed, cost efficiency, and access to the latest open-source models with IBM’s agentic AI orchestration to deliver the infrastructure needed to help enterprises scale.

Powered by its custom LPU, GroqCloud delivers over 5X faster and more cost-efficient inference than traditional GPU systems. The result is consistently low latency and dependable performance, even as workloads scale globally. This is especially powerful for agentic AI in regulated industries.

For example, IBM’s healthcare clients receive thousands of complex patient questions simultaneously. With Groq, IBM’s AI agents can analyze information in real-time and deliver accurate answers immediately to enhance customer experiences and allow organizations to make faster, smarter decisions.

This technology is also being applied in non-regulated industries. IBM clients across retail and consumer packaged goods are using Groq for HR agents to help enhance automation of HR processes and increase employee productivity.

“Many large enterprise organizations have a range of options with AI inferencing when they’re experimenting, but when they want to go into production, they must ensure complex workflows can be deployed successfully to ensure high-quality experiences,” said Rob Thomas, SVP, Software and Chief Commercial Officer at IBM. “Our partnership with Groq underscores IBM’s commitment to providing clients with the most advanced technologies to achieve AI deployment and drive business value.”

“With Groq’s speed and IBM’s enterprise expertise, we’re making agentic AI real for business. Together, we’re enabling organizations to unlock the full potential of AI-driven responses with the performance needed to scale,” said Jonathan Ross, CEO & Founder at Groq. “Beyond speed and resilience, this partnership is about transforming how enterprises work with AI, moving from experimentation to enterprise-wide adoption with confidence, and opening the door to new patterns where AI can act instantly and learn continuously.”

IBM will offer access to GroqCloud’s capabilities starting immediately, and the joint teams will focus on delivering capabilities to IBM clients, including:

- High speed and high-performance inference that unlocks the full potential of AI models and agentic AI, powering use cases such as customer care, employee support and productivity enhancement.

- Security and privacy-focused AI deployment designed to support the most stringent regulatory and security requirements, enabling effective execution of complex workflows.

- Seamless integration with IBM’s agentic product, watsonx Orchestrate, providing clients flexibility to adopt purpose-built agentic patterns tailored to diverse use cases.

The partners also plan to integrate and enhance Red Hat open source vLLM technology with Groq’s LPU architecture to offer different approaches to common AI challenges developers face during inference. The solution is expected to enable watsonx to leverage capabilities in a familiar way and let customers stay in their preferred tools while accelerating inference with GroqCloud. This integration will address key AI developer needs, including inference orchestration, load balancing, and hardware acceleration, ultimately streamlining the inference process.

Together, IBM and Groq provide enhanced access to the full potential of enterprise AI, one that is fast, intelligent, and built for real-world impact.

References:

FT: Scale of AI private company valuations dwarfs dot-com boom

AI adoption to accelerate growth in the $215 billion Data Center market

Big tech spending on AI data centers and infrastructure vs the fiber optic buildout during the dot-com boom (& bust)

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Can the debt fueling the new wave of AI infrastructure buildouts ever be repaid?