Edge Computing

T-Mobile and Google Cloud collaborate on 5G and edge compute

T-Mobile and Google Cloud announced today they are working together to combine the power of 5G and edge compute, giving enterprises more ways to embrace digital transformation. T-Mobile will connect the 5G Advanced Network Solutions (ANS) [1.] suite of public, private and hybrid 5G networks with Google Distributed Cloud Edge (GDC Edge) to help customers embrace next-generation 5G applications and use cases — like AR/VR experiences.

Note 1. 5G ANS is an end-to-end portfolio of deployable 5G solutions made up of 5G Connectivity, Edge Computing, and Industry Solutions, along with a partnership that simplifies creating, deploying and managing unique solutions to unique problems.

More companies are turning to edge computing as they focus on digital transformation. In fact, the global edge compute market size is expected to grow by 37.9% to $155.9 billion in 2030. And the combination of edge computing with the low latency, high speeds, and reliability of 5G will be key to promising use cases in industries like retail, manufacturing, logistics, and smart cities. GDC Edge customers across industries will be able to easily leverage T-Mobile’s 5G ANS to get the low latency, high speeds, and reliability they will need for any use case that requires data-intensive computing processes such as AR or computer vision.

For example, manufacturing companies could use computer vision technology to improve safety by monitoring equipment and automatically notifying support personnel if there are issues. And municipalities could leverage augmented reality to keep workers at a safe distance from dangerous situations by using machines to remotely perform hazardous tasks.

To demonstrate the promise of 5G ANS and GDC Edge in a retail setting, T-Mobile created a proof of concept at T-Mobile’s Tech Experience 5G Hub called the “magic mirror” with the support of Google Cloud. This interactive display leverages cloud-based processing and image rendering at the edge to make retail products “magically” come to life. Users simply hold a product in front of the mirror to make interactive videos or product details — such as ingredients or instructions — appear onscreen in near real-time.

“We’ve built the largest and fastest 5G network in the country. This partnership brings together the powerful combination of 5G and edge computing to unlock the expansion of technologies such as AR and VR from limited applications to large-scale adoption,” said Mishka Dehghan, Senior Vice President, Strategy, Product, and Solutions Engineering, T-Mobile Business Group. “From providing a shopping experience in a virtual reality environment to improving safety through connected sensors or computer vision technologies, T-Mobile’s 5G ANS combined with Google Cloud’s innovative edge compute technology can bring the connected world to businesses across the country.”

“Google Cloud is committed to helping telecommunication companies accelerate their growth, competitiveness, and digital journeys,” said Amol Phadke, General Manager, Global Telecom Industry, Google Cloud. “Google Distributed Cloud Edge and T-Mobile’s 5G ANS will help businesses deliver more value to their customers by unlocking new capabilities through 5G and edge technologies.”

T-Mobile is also working with Microsoft Azure, Amazon Web Services and Ericsson on advanced 5G solutions.

References:

https://www.t-mobile.com/news/business/t-mobile-and-google-cloud-join-5g-advanced-network-solutions

https://www.t-mobile.com/business/solutions/networking/5G-advanced-solutions

AWS enabling edge computing, supports mobile & IoT devices, 5G core network and new services

In an AWS re:Invent Leadership session titled “AWS Wherever You Need It,” [1.] Wayne Duso, vice president of engineering and product at AWS, expressed similar goals. “Today, customers want to use AWS services in a growing range of applications, operating wherever they want, whenever they require. And they’re striving to do so to deliver the best possible customer experience they can, regardless of where their customers or users happen to be located. One way AWS helps customers accomplish this is by bringing the AWS value to our regions, to metro areas, to on-premises, and to the furthest and evolving edge.”

Note 1. The one-hour “AWS Wherever You Need It” session can be watched on demand from the re:Invent leadership sessions page (see References below).

“We’re helping customers by providing the same experience from cloud to on-prem to the evolving edge, regardless of where your application may need to reside,” Duso explained. “AWS is enabling customers to use the same infrastructure, services and tools to accomplish that. And we do that by providing a continuum of consistent cloud-scale services that allow you to operate seamlessly across this range of environments.”

Duso explained how AWS is enabling edge computing by adding capabilities for mobile and IoT devices. “There are more than 14 billion smart devices in the world today. And it’s often in things we think about, like wristwatches, cameras, cellphones and speakers,” he said.

“But more often, it’s the stuff that you don’t see every day powering industries of all types and for all types of customers.” Duso cited the example of Hilcorp, a leading energy producer, which is using smart devices to monitor the health of its wells, optimize production and proactively predict failures so it can minimize capital expenditures.

With IoT devices becoming common among energy providers, edge computing is on the rise to handle the volume of data these devices generate. “Now, AWS IoT provides a deep and broad set of services and partner solutions to make it really simple to build secure, managed and scalable IoT applications,” Duso added.

Duso pointed to Couchbase as a use case for flexible AWS services: “Couchbase is a NoSQL database company that uses AWS hybrid edge services such as Local Zones, Wavelength, Outposts and the Snow Family to deploy its applications and highly scalable, reliable and performant environments to reduce latency by over 18 percent for its customers.” Each of these AWS managed services enables Couchbase to move data from the edge to the cloud or manage and process it where it’s generated.

“What we built on these AWS compute environments was a highly distributed, managed or self-managed database,” Duso explained. “For the cloud, an internet gateway for accessing that data securely over the web and synchronizing that data down to the edge. And that works across cloud, edge and offline-first compute environments.”

AWS’s Hofmeyr described the company’s 5G ambition this way: “Our goal is I want to make AWS the best place to run 5G networks. That is the overarching objective. How can I make AWS, whether we are running it in the region, in a Local Zone, on an Outposts, on a Snow device, how do we make it the best place to run a 5G network, and then provide that infrastructure.”

AWS’ 5G network efforts include a cloud architecture that can support an operator’s 5G SA core network and applications, similar to what AWS is doing with greenfield U.S. wireless network operator Dish Network. Sidd Chenumolu, VP of technology development and network services at Dish Network, recently explained that the wireless carrier’s 5G core network was using three of AWS’ four U.S. public regions, was deployed in “multiple availability zones and almost all the Local Zones, but most were deployed with Nokia applications across AWS around the country.”

AWS is also working with Verizon to support a part of that carrier’s public MEC system. This includes use of AWS Outposts and AWS Wavelength, the latter of which AWS recently expanded in the United Kingdom with Vodafone.

Hofmeyr continued, “I think you have a spectrum (of different wireless carrier networks), from the total greenfields like what we did with Dish to the large tier-ones. The one thing that’s common across the board is the desire to modernize and become more cloud-like. That is common. Everyone wants that. Each one has a very unique job. There’s not one way that they all are executing in the same way. They’re taking this one workload and then building, so all of them are focusing on different workloads in the network and put it in the cloud.”

In conclusion, Hofmeyr said, “I think all over the edge we find these use cases for which purpose-built systems were designed to handle that. And our goal is how do you make that available in the cloud.”

References:

https://reinvent.awsevents.com/leadership-sessions/

https://reinvent.awsevents.com/on-demand/?trk=www.google.com#leadership-sessions

https://www.sdxcentral.com/articles/analysis/aws-wants-to-be-the-best-place-for-5g-edge/2022/12/

https://biztechmagazine.com/article/2022/12/aws-reinvent-2022-harvesting-data-cloud-edge

Analysys Mason: few private networks include edge computing, despite the synergies between the two technologies

In a report on Private LTE/5G network deployments, Analysys Mason said that “Only 58 of the 363 private network announcements in our tracker explicitly mention that the private network is working with edge computing.” That’s no surprise given our recent IEEE Techblog post arguing that edge computing has not lived up to its promise and potential.

The number of announced private networks increased from 256 during the third quarter of last year, to 363 announced deployments during Q3 of this year. Most of that growth is coming from more advanced countries and China “where the IoT markets are mature and are driving demand for private networks.” Private networks using 4G LTE technology continue to dominate the overall market “because it is able to meet the connectivity requirements of most private network applications.” However, 5G is gaining ground with more than 70% of new networks announced this year stating the use of 5G technology.

Private networks and edge computing are complementary as each adds value to the other. When combined, the technologies can support applications that have requirements for low latency and high bandwidth or that need to be located on site for security purposes.

The adoption of edge computing with private networks has been limited thus far due to several factors, such as the relative immaturity of edge technology. The drivers of private network adoption are also different from those of edge computing adoption.

Private LTE/5G networks are often introduced to replace existing Wi-Fi or fibre access networks, and no other changes are made. Nevertheless, the share of private network announcements that mention edge is growing, and more than 20% of the private networks that have been publicly announced in 2022 so far include edge. We expect that more private networks will include edge computing in the next 18–24 months. Some vendors are promoting edge as part of a packaged private network solution (such as Nokia with its NDAC solution).

A few operators, such as Verizon, Vodafone and the Chinese MNOs, are promoting the combination, and many other service providers have trials that combine the technologies.

Verizon Business CEO Sowmyanarayan Sampath explained during a recent NSR & BCG Innovation Conference that private 5G network momentum was outpacing demand for the carrier’s mobile edge computing (MEC) services.

“On the MEC, what we are finding is demand is taking a little longer to go,” Sampath said. “And part of that is we are having to work back and integrate deeper into their operating system. So it’s going to be much stickier when it does happen [but] it’s going to take a little longer. We’ve got loads of proof of concepts and early commercial deployments, but we shouldn’t see revenue till the back half of next year and into 2024.”

References:

https://www.sdxcentral.com/edge/definitions/what-multi-access-edge-computing-mec/

Has Edge Computing Lived Up to Its Potential? Barriers to Deployment

Despite years of touting and hype, edge computing (aka Multi-access Edge Computing or MEC) has not yet provided the payoff promised by its many cheerleaders. Here are a few rosy forecasts and company endorsements:

In an October 27th report, MarketsandMarkets forecast that the edge computing market will grow from $44.7 billion in 2022 to $101.3 billion by 2027, a compound annual growth rate (CAGR) of 17.8% over those five years.
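As a quick sanity check on the forecast figures quoted above, the CAGR linking a start value to an end value over a number of years is (end / start)^(1 / years) - 1. The sketch below uses a hypothetical helper, `implied_cagr`, to confirm that the arithmetic is internally consistent; it says nothing about whether the forecast itself is realistic.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate linking start_value to end_value.

    Hypothetical helper for illustration only:
    CAGR = (end_value / start_value) ** (1 / years) - 1
    """
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Forecast quoted above: $44.7B in 2022 growing to $101.3B in 2027 (5 years)
rate = implied_cagr(44.7, 101.3, 2027 - 2022)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 17.8%
```

The same helper can be pointed at any of the other market forecasts in this roundup, as long as the start year, end year and dollar figures are stated explicitly.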

IDC defines edge computing as the technology-related actions that are performed outside of the centralized datacenter, where edge is the intermediary between the connected endpoints and the core IT environment.

“Edge computing continues to gain momentum as digital-first organizations seek to innovate outside of the datacenter,” said Dave McCarthy, research vice president, Cloud and Edge Infrastructure Services at IDC. “The diverse needs of edge deployments have created a tremendous market opportunity for technology suppliers as they bring new solutions to market, increasingly through partnerships and alliances.”

IDC has identified more than 150 use cases for edge computing across various industries and domains. The two edge use cases that will see the largest investments in 2022 – content delivery networks and virtual network functions – are both foundational to service providers’ edge services offerings. Combined, these two use cases will generate nearly $26 billion in spending this year (2022). In total, service providers will invest more than $38 billion in enabling edge offerings this year. The market research firm believes spending on edge compute could reach $274 billion globally by 2025 – though that figure would be inclusive of a wide range of products and services.

HPE CEO Antonio Neri recently told Yahoo Finance that edge computing is “the next big opportunity for us because we live in a much more distributed enterprise than ever before.”

DigitalBridge CEO Marc Ganzi said his company continues to see growth in demand for edge computing capabilities, with site leasing rates up 10% to 12% in the company’s most recent quarter. “So this notion of having highly interconnected data centers on the edge is where you want to be,” he said, according to a Seeking Alpha transcript.

Equinix CEO Charles Meyers said his company recently signed a “major design win” to provide edge computing services to an unnamed pediatric treatment and research operation across a number of major US cities. Equinix is one of the world’s largest data center operators, and has recently begun touting its edge computing operations.

……………………………………………………………………………………………………………………………………………………………..

In 2019, Verizon CEO Hans Vestberg said his company would generate “meaningful” revenues from edge computing within a year. That still hasn’t happened.

BofA Global Research wrote in an October 25th report to clients, “Verizon, the largest US wireless provider and the second largest wireline provider, has invested more resources in this [edge computing] topic than any other carrier over the last seven years, yet still cannot articulate how it can make material money in this space over an investable timeframe. Verizon is in year 2 of its beta test of ‘edge compute’ applications and has no material revenue to point to nor any conviction in where real demand may emerge.”

“Gartner believes that communications and manufacturing will be the main drivers of the edge market, given they are infrastructure-intensive segments. We highlight existing use cases, like content delivery in communications, or ‘device control’ in manufacturing, as driving edge compute proliferation. However, as noted above, the market is still undefined and these are only two possible outcomes of many.”

Raymond James wrote in an August research note, “Regarding the edge, carriers and infrastructure companies are still trying to define, size and time the opportunity. But as data demand (and specifically demand for low-latency applications) grows, it seems inevitable that compute power will continue to move toward the customer.”

……………………………………………………………………………………………………………………………………………

BofA Global Research – Challenges with Edge Compute:

The distributed nature of edge compute can pose several risks to enterprises. The number of nodes needed between stores, factories, automobiles, homes, etc. can vary wildly. Different geographies may have different environmental issues, regulatory requirements, and network access. Furthermore, the distributed scale in edge compute puts a greater burden on ensuring that edge compute nodes are secured and that the enterprise is protected. Real-time decision making on the edge device requires a platform to be able to anonymize data used in analytics, and secure data in transit and information stored on the edge device. As more devices are added to the network, each one becomes a potential vulnerability target and as data entry points expand across a corporate network, so do opportunities for intrusion.

On the other hand, the risk is somewhat double-sided as some security risk is mitigated by keeping the data distributed so that a data breach only impacts a fraction of the data or applications. Other barriers to deploying edge applications include high costs as a result of its distributed nature, as well as a lack of a standard edge compute stack and APIs.

Another challenge to edge compute is the issue of extensibility. Edge computing nodes have historically been very purpose-specific and use-case dependent to environments and workloads in order to meet specific requirements and keep costs down. However, workloads will continuously change and new ones will emerge, and existing edge compute nodes may not adequately cover additional use cases. Edge computing platforms need to be both special-purpose and extensible. While enterprises typically start their edge compute journey on a use-case basis, we expect that as the market matures, edge compute will increasingly be purchased on a vertical and horizontal basis to keep up with expanding use cases.

References:

The Amorphous “Edge” as in Edge Computing or Edge Networking?

Edge computing refuses to mature | Light Reading

Multi-access Edge Computing (MEC) Market, Applications and ETSI MEC Standard-Part I

ETSI MEC Standard Explained – Part II

Lumen Technologies expands Edge Computing Solutions into Europe

NTT, VMware & Intel collaborate to launch Edge-as-a-Service and Private 5G Managed Services

Japan’s NTT Ltd. today announced the launch of Edge-as-a-Service, a managed edge compute platform that lets enterprises quickly deploy, manage and monitor applications closer to the edge.

NTT and VMware, in collaboration with Intel (whose role was not specified), are partnering to innovate on edge-focused solutions and services. NTT uses VMware’s Edge Compute Stack to power its new Edge-as-a-Service offering. Additionally, VMware is adopting NTT’s Private 5G technologies as part of its edge solution. The companies will jointly market the offering through coordinated co-innovation, sales, and business development.

NTT’s Edge-as-a-Service offering is a globally available integrated solution that accelerates business process automation. It delivers near-zero latency for enterprise applications at the network edge, optimizing costs and boosting end-user experiences in a secure environment.

NTT’s Edge-as-a-Service offering, powered by VMware’s Edge Compute Stack, includes Private 5G connectivity and will be delivered by NTT across its global footprint running on Intel network and edge technology. This work is an extension of NTT’s current membership in VMware’s Cloud Partner Program. VMware and NTT will each market their corresponding new services to their respective customer bases.

“Combining Edge and Private 5G is a game changer for our customers and the entire industry, and we are making it available today,” said Shahid Ahmed, Group EVP, New Ventures and Innovation CEO, NTT.

“The combination of NTT and VMware’s Edge Compute Stack and Private 5G delivers a unique solution that will drive powerful outcomes for enterprises eager to optimize the performance and cost efficiencies of critical applications at the network edge. Minimum latency, maximum processing power, and global coverage are exactly what enterprises need to accelerate their unique digital transformation journeys.”

“The whole premise behind it is that many of our customers are looking for an end-to-end solution when they’re buying either edge or private 5G architectures as opposed to buying edge compute from XYZ and then a private 5G from somebody else and an IoT solution from someone else. So we thought we would do a full one-stop solution for our customers, particularly those that are in manufacturing and industrial sectors.” Ahmed also said that NTT will be able to break these services apart for customers that just want one of the services, but they will all be managed by NTT.

Ahmed added: “We have a very simple pricing structure, which is predictable and tier-based so the customer doesn’t have to put up upfront capex, it’s all opex based. Obviously, some verticals like to purchase or acquire technology as a capex, so we can do that as well.”

As factories increase their reliance on robotics, vehicles become autonomous, and manufacturers move to omnichannel models, there is a greater need for distributed compute processing power and data storage with near-instantaneous response times. VMware’s secure application development, resource management automation, and real-time processing capabilities, combined with NTT’s multi-cloud and edge platforms, create a fully integrated Edge+Private 5G managed service. VMware and NTT’s innovative offering resides closer to where the data is generated or collected, enabling enterprises to access and react to information instantaneously.

This solution, which leverages seamless multi-cloud and multi-tenant connectivity, combined with NTT’s capabilities in network segmentation, and expertise with movement from private to public 5G, provides critical benefits for multiple industries, including manufacturing, retail, logistics, and entertainment.

“Enterprises are increasingly distributed — from the digital architecture they rely on to the human workforce that powers their business daily. This has spurred a sea change across every industry, altering where data is produced, delivered, and consumed,” said Sanjay Uppal, senior vice president and general manager, service provider, and edge business unit, VMware. “Bringing VMware’s Edge Compute Stack to NTT’s Edge-as-a-Service will enable our mutual customers to build, run, manage, connect and better protect edge-native applications at the Near and Far Edge while leveraging consistent infrastructure and operations with the power of edge computing.”

NTT’s Edge-as-a-Service platform was developed to help secure, optimize and simplify organizations’ digital transformation journeys. Edge-as-a-Service is part of NTT’s Managed Service portfolio, which includes Network-as-a-Service and Multi-Cloud-as-a-Service, all designed for enterprises to focus on their core business.

References:

https://www.sdxcentral.com/articles/news/ntt-vmware-intel-team-for-private-5g-edge-tasks/2022/08/

Lumen Technologies expands Edge Computing Solutions into Europe

Lumen Technologies announced the expansion of its edge computing services into Europe, delivering the low-latency platform businesses need to extend their high-bandwidth, data-intensive applications out to the cloud edge. This expansion is part of Lumen’s continued investment in next-generation solutions that transform digital experiences and meet the demands of today’s global businesses.

“Edge computing is a game-changer. It will drive the next wave of business innovation and growth across virtually all industries,” said Annette Murphy, regional president, EMEA and APAC, Lumen Technologies. “Customers in Europe can now tap into the power of the Lumen platform, underpinned by Lumen’s extensive fiber footprint, to deploy data-heavy applications and workloads that demand ultra-low latency at the cloud edge. This delivers peak performance and reliability, as well as more capability to drive amazing digital experiences. Customers can focus efforts on developing applications and bringing them to market, rather than on time-consuming infrastructure deployment.”

Today, Lumen Edge Computing Solutions can meet approximately 70% of enterprise demand within 5 milliseconds of latency in the UK, France, Germany, Belgium, and the Netherlands. Additional locations are planned by end of year. Lumen Edge Computing Solutions bring together the power of the company’s expansive global fiber network, on-demand networking, integrated security, and managed services, with edge facilities and compute and storage services. This allows for quick and efficient deployment of applications and workloads at the edge, closer to the point of digital interaction. Customers can procure Lumen Edge Computing Solutions online, and within an hour gain access to high-powered computing infrastructure on the Lumen platform.
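To see why physical proximity matters for a 5 ms target, a common rule of thumb is that light travels through optical fiber at roughly 200 km per millisecond, about two-thirds of its speed in a vacuum. The sketch below assumes, for illustration only, that the 5 ms figure is a round-trip budget; the helper name `max_one_way_fiber_km` and the 200 km/ms constant are assumptions, not Lumen's methodology. Real paths also incur routing detours, queuing, radio-access and processing delays, so actual edge sites have to sit considerably closer than the best-case bound.

```python
# Assumed propagation speed of light in optical fiber: ~200 km per millisecond
# (roughly c divided by a refractive index of ~1.5). Illustrative constant only.
FIBER_KM_PER_MS = 200.0


def max_one_way_fiber_km(round_trip_budget_ms: float, overhead_ms: float = 0.0) -> float:
    """Upper bound on one-way fiber distance that fits a round-trip latency budget.

    Hypothetical helper: subtracts any non-propagation overhead (processing,
    queuing, radio access), halves the remainder for the one-way leg, and
    converts the remaining time to distance at FIBER_KM_PER_MS.
    """
    propagation_ms = round_trip_budget_ms - overhead_ms
    if propagation_ms <= 0:
        return 0.0  # the overhead alone consumes the whole budget
    return (propagation_ms / 2.0) * FIBER_KM_PER_MS


print(max_one_way_fiber_km(5.0))       # 500.0 (pure propagation, best case)
print(max_one_way_fiber_km(5.0, 2.0))  # 300.0 (if 2 ms goes to other delays)
```

Under these assumptions, a 5 ms round trip leaves at most a few hundred kilometers of fiber between user and edge site, which is one reason providers deploy edge facilities across many metro areas rather than in a handful of central regions.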

Lumen offers several edge infrastructure and services solutions to support enterprise innovation and applications of the 4th Industrial Revolution. These include:

- Lumen Edge Bare Metal offers dedicated, pay-as-you-go server hardware hosted in distributed locations and connected to the Lumen global fiber network. Edge Bare Metal delivers enhanced security and connectivity with dedicated, single-tenancy servers designed to isolate and protect data and deliver high performance.

- Lumen Network Storage enables customers to take advantage of secure, scalable, and fast storage where and when they need it. The service allows enterprises and public sector organizations to ingest and update data at the edge using whatever file storage protocol meets their needs.

- Lumen Edge Private Cloud provides pre-built infrastructure for high-performance private cloud computing connected to the Lumen global fiber network. Lumen Edge Private Cloud is fully managed by Lumen and helps businesses go to market quickly with the capacity needed for interaction-intensive applications.

- Lumen Edge Gateway is a scalable Multi-access Edge Compute (MEC) platform for the premises. The service offers a compute platform for the delivery of virtualized wide area networking (WAN), security, and IT applications from multiple vendors on the premises edge.

Key Facts:

- Lumen Edge Computing Solutions meet approximately 97% of U.S. enterprise demand and approximately 70% of enterprise demand in the UK, France, Germany, Belgium, and the Netherlands within 5 milliseconds of latency.

- For a current list of live and planned Lumen edge locations, visit: https://www.lumen.com/en-uk/resources/network-maps.html#edge-roadmap

- As part of the Edge Computing Solutions deployment in Europe, Lumen enabled an additional 100G of MPLS and IP network connectivity, as well as increased power and cooling at key edge data center locations.

- Lumen manages and operates one of the largest, most connected, most deeply peered networks in the world. It comprises approximately 500,000 global route miles (805,000 km) of fiber and more than 190,000 on-net buildings, seamlessly connected to 2,200 public and private third-party data centers and leading public cloud service providers.

- In EMEA, the Lumen network comprises approximately 42,000 route miles (67,000 km) of fiber and connects to more than 2,500 on-net buildings and 540 public and private third-party data centers.

Additional Resources:

- Lumen Edge Computing Solutions: https://www.lumen.com/en-us/solutions/edge-computing.html

- Lumen Edge Bare Metal: https://www.lumen.com/en-us/edge-computing/bare-metal.html

- Lumen Network Storage: https://www.lumen.com/en-us/hybrid-it-cloud/network-storage.html

- Lumen Edge Private Cloud: https://www.lumen.com/en-us/hybrid-it-cloud/private-cloud.html

- Lumen Edge Gateway: https://www.lumen.com/en-us/edge-computing/edge-gateway.html

About Lumen Technologies and the People of Lumen:

Lumen is guided by our belief that humanity is at its best when technology advances the way we live and work. With approximately 500,000 route fiber miles and serving customers in more than 60 countries, we deliver the fastest, most secure platform for applications and data to help businesses, government and communities deliver amazing experiences.

Bell Canada deploys the first AWS Wavelength Zone at the edge of its 5G network

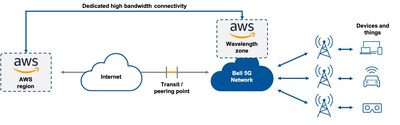

In yet another tie-up between telcos and cloud computing giants, Bell Canada is the first Canadian network operator to launch multi-access edge computing (MEC) services using Amazon Web Services’ (AWS) Wavelength platform.

Building on Bell’s agreement with AWS, announced last year, the two companies are together deploying AWS Wavelength Zones throughout the country at the edge of Bell’s 5G network, starting in Toronto.

The Bell Canada Public MEC service embeds AWS compute and software defined storage capabilities at the edge of Bell’s 5G network.

The Wavelength technology is then tied into AWS cloud regions that host the applications. This moves access closer to the end user or device to lower latency and increase performance for services such as real-time visual data processing, augmented/virtual reality (AR/VR), artificial intelligence and machine learning (AI/ML), and advanced robotics.

Source: Bell Canada

……………………………………………………………………………………………………………………………………………………………………………….

“Because that link between the application and the edge device is a completely controllable link – it doesn’t involve the internet, doesn’t involve these multiple hops of the traffic to reach the application – it allows us to have a very particular controlled link that can give you different quality of service,” explained George Elissaios, director and GM for EC2 Core Product Management at AWS, during a briefing call with analysts.

Network infrastructure is the backbone for Canadian businesses today as they innovate and advance in the digital age. Organizations across retail, transportation, manufacturing, media & entertainment and more can unlock new growth opportunities with 5G and MEC to be more agile, drive efficiency, and transform customer experiences.

Optimized for MEC applications, AWS Wavelength deployed on service providers’ 5G networks provides seamless access to cloud services running in AWS Regions. By doing so, AWS Wavelength minimizes the latency and network hops required to connect from a 5G device to an application hosted on AWS. AWS Wavelength is now available in Canada, the United States, the United Kingdom, Germany, South Korea, and Japan in partnership with global communications service providers.

Creating an immersive shopping experience with Bell Canada 5G:

Increasingly, retailers want to offer omni-channel shopping experiences so that consumers can access products, offers, and support services on the channels, platforms, and devices they prefer. For instance, there’s a growing appetite for online shopping to replicate the in-store experience, particularly for apparel retailers. These kinds of experiences require seamless connectivity so that customers can easily and immediately pick up on one channel where they left off on another. These experiences also must be optimized for high-quality viewing and interactivity.

Rudsak worked with Bell and AWS to deploy Summit Tech’s immersive shopping platform, Odience, to offer its customers an immersive and seamless virtual shopping experience with live sales associates and the ability to see merchandise up close. With 360-degree cameras at its pop-up locations and launch events, Rudsak customers can browse the racks and view a new product line via their smartphones or VR headsets from either the comfort of their own home or while on the go.

Bell Canada Public MEC with AWS Wavelength is now available in the Toronto area, with additional Wavelength Zones to be deployed in the future. To find out more, please visit: Bell.ca/publicmec

AWS currently has Wavelength customers (see References below) in the United States, the United Kingdom, Germany, South Korea, Japan, and now Canada. It also has deals with Verizon, Vodafone, SK Telecom, and Dish Network.

Bell Canada explained that the service is targeted at enterprise customers. It will initially offer services to enterprises in Toronto, with expansion planned into other major Canadian markets.

“We’re excited to partner with AWS to bring together Bell’s 5G network leadership with the world’s leading cloud and AWS’ robust portfolio of compute and storage services. With general availability of AWS Wavelength Zones on Canada’s fastest network, it becomes possible for businesses to tap into all-new capabilities, reaching new markets and serving customers in exciting new ways. With our help, customers are thinking bigger, innovating faster and pushing boundaries like never before. Our team of experts are with customers every step of the way on their digital transformation journey. With our ongoing investments in supporting emerging MEC use cases, coupled with our end-to-end security built into our 5G network, we are able to give Canadian businesses a platform to innovate, harness the power of 5G and drive competitiveness for their businesses.”

– Jeremy Wubs, Senior Vice President of Product, Marketing and Professional Services, Bell Business Markets

“AWS Wavelength brings the power of the world’s leading cloud to the edge of 5G networks so that customers like Rudsak, Tiny Mile and Drone Delivery Canada can build highly performant applications that transform consumers’ experiences. We are particularly excited about our deep collaboration with Bell as it accelerates innovation across Canada, by offering access to 5G edge technology to the whole AWS ecosystem of partners and customers. This enables any enterprise or developer with an AWS account to power new kinds of mobile applications that require ultra-low latencies, massive bandwidth, and high speeds.”

– George Elissaios, Director and General Manager, EC2 Core Product Management, AWS

“With Bell’s Public MEC and AWS Wavelength we are able to offer new, fully immersive shopping experiences to our customers. Shoppers can virtually explore our new arrivals and interact in real-time with our staff and industry experts during interactive events and pop-ups. Thanks to the hard work, support and expertise of Bell, AWS and Summit Tech, we were able to successfully deliver our first immersive/interactive shopping event with the quality, innovation and excellence that our brand is known for.”

– Evik Asatoorian, President and Founder, Rudsak

“Canadian organizations across all industries are transforming their workflows by harnessing the power of new technologies to launch new products and services. In fact, 85% of Canadian businesses are already using the Internet of Things (IoT). In order to maximize the benefits of cloud computing, intelligent endpoints and AI, while adding emerging technologies like 5G, we need to modernize our digital infrastructure to embrace multi-access edge computing (MEC). Modernized edge computing interconnects core, cloud and diverse edge sites, enabling CIOs and business leaders to optimize their architectures to resolve technical challenges around latency, bandwidth and compute power, financial concerns about cloud ingress/egress and compute costs as well as governance issues such as regulatory compliance without losing advanced features like machine learning, AI and analytics. MEC offers the possibility of deploying modernized, cloud-like resources everywhere to support the ability to extract value from data.”

– Nigel Wallis, Research VP, Canadian Industries and IoT, IDC Canada

- Bell is the first Canadian telecommunications company to offer AWS-powered public MEC to business customers

- First AWS Wavelength Zone to launch in the Toronto region, with additional locations in Canada to follow

- Apparel retailer Rudsak among the first to leverage Bell Public MEC with AWS Wavelength to deliver an immersive virtual shopping experience

Bell is Canada’s largest communications company, providing advanced broadband wireless, TV, Internet, media and business communication services throughout the country. Founded in Montréal in 1880, Bell is wholly owned by BCE Inc. To learn more, please visit Bell.ca or BCE.ca.

References:

AWS looks to dominate 5G edge with telco partners that include Verizon, Vodafone, KDDI, SK Telecom

Verizon, AWS and Bloomberg media work on 4K video streaming over 5G with MEC

AWS deployed in Digital Realty Data Centers at 100Gbps & for Bell Canada’s 5G Edge Computing

Amazon AWS and Verizon Business Expand 5G Collaboration with Private MEC Solution

Omdia: Enterprise edge services market to hit $214 billion by 2026

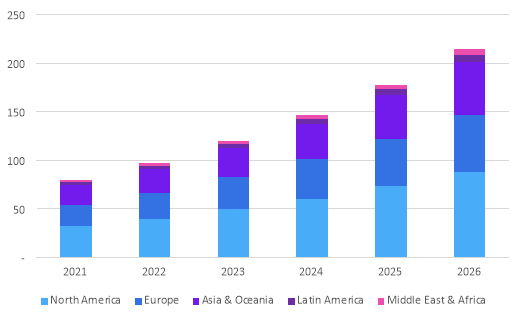

According to a new report by Informa’s Omdia, revenue from enterprise edge services (where EXACTLY is the edge?) will reach $214 billion by 2026. That’s more than double the size of the enterprise edge services market in 2022, which Omdia puts at $97.0 billion. With a compound annual growth rate (CAGR) of 20.4%, North America is predicted to dominate, accounting for 41% of global revenue between 2021 and 2026.
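As a quick check on how these figures fit together, the Python sketch below applies the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, to the 2022 and 2026 totals quoted above. The function names are illustrative. On those two numbers alone, the implied 2022-2026 growth rate is roughly 21.9% a year, and compounding $97.0 billion at 20.4% for four years gives about $203.8 billion rather than $214 billion. The 20.4% CAGR is therefore presumably measured over a different window (the forecast spans 2021-26) or applies to a narrower scope such as North America; the Omdia report itself would be needed to confirm which.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` per year for `years` years."""
    return start_value * (1.0 + rate) ** years


# Omdia figures quoted in the text (US$ billions)
market_2022 = 97.0
market_2026 = 214.0

implied = cagr(market_2022, market_2026, 2026 - 2022)
print(f"Implied 2022-2026 CAGR: {implied:.1%}")

# Compounding the 2022 figure at the quoted 20.4% for four years instead
print(f"$97.0B at 20.4% for 4 years: ${project(market_2022, 0.204, 4):.1f}B")
```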

The Omdia report presents its latest global enterprise edge services forecast, covering edge consulting, integration, network, security, storage/compute and managed edge services, broken down by use case, vertical and edge deployment model.

Enterprise edge services forecast by region, 2021-26 ($ billions)

Source: Omdia (owned by Informa)

…………………………………………………………………………………………………………

While hyperscalers build out edge access points and systems integrators (SIs) design consulting and professional services for edge use cases, enterprises are looking to service providers to define business cases, run pilot projects and scope out different approaches to edge computing use cases, according to Omdia.

The Informa-owned market research group outlines two main consumption models for edge services.

- In one model, enterprises will need consulting, systems integration and other support services to deploy physical edge infrastructure.

- The second model is a cloud-based, fully managed, as-a-service approach, in which services provided by hyperscalers and independent software vendors (ISVs) are extended to the edge using local access points or gateways.

Omdia sees several opportunities for network providers to assist enterprises with the challenges that arise from implementing their edge strategies. The firm notes that telcos can help enterprises navigate data location and management considerations; regulatory compliance; network considerations such as the need for and availability of 5G, WAN/LAN and private networks; selecting the right edge setup and location; balancing use of internal skills with managed edge services; defining clear business cases; and more.

Edge consulting services from SIs, telcos, ICT solutions vendors and consulting firms form the largest part of the enterprise edge services market at 39.3% in 2022, says Omdia. While cybersecurity and network management subscriptions from service providers are critical to edge service packages, these subscription-based telco services are declining over time, the research group adds.

However, fully managed, cloud-delivered edge services, including multi-access edge computing (MEC) and workload and database management, are increasing in popularity. Omdia predicts that edge storage and compute services will be the strongest area of growth, with the services emerging as cloud services extensions to the edge provided by major hyperscalers, service providers and data center operators.

“As data volumes continue to grow and enterprises aim to move more workloads to the edge, they require more compute and storage capacity in the form of IaaS and PaaS at edge access points,” Omdia explained.

Edge locations will also shift away from customers’ premises (53% in 2022, falling to 38% in 2026) toward points of presence (PoPs), such as cloud access points, and, to a lesser extent, data centers.

“By 2026, over a third of edge services revenues will be realized as part of PoP deployments, which provides key opportunities and challenges for ICT service providers,” says Omdia.

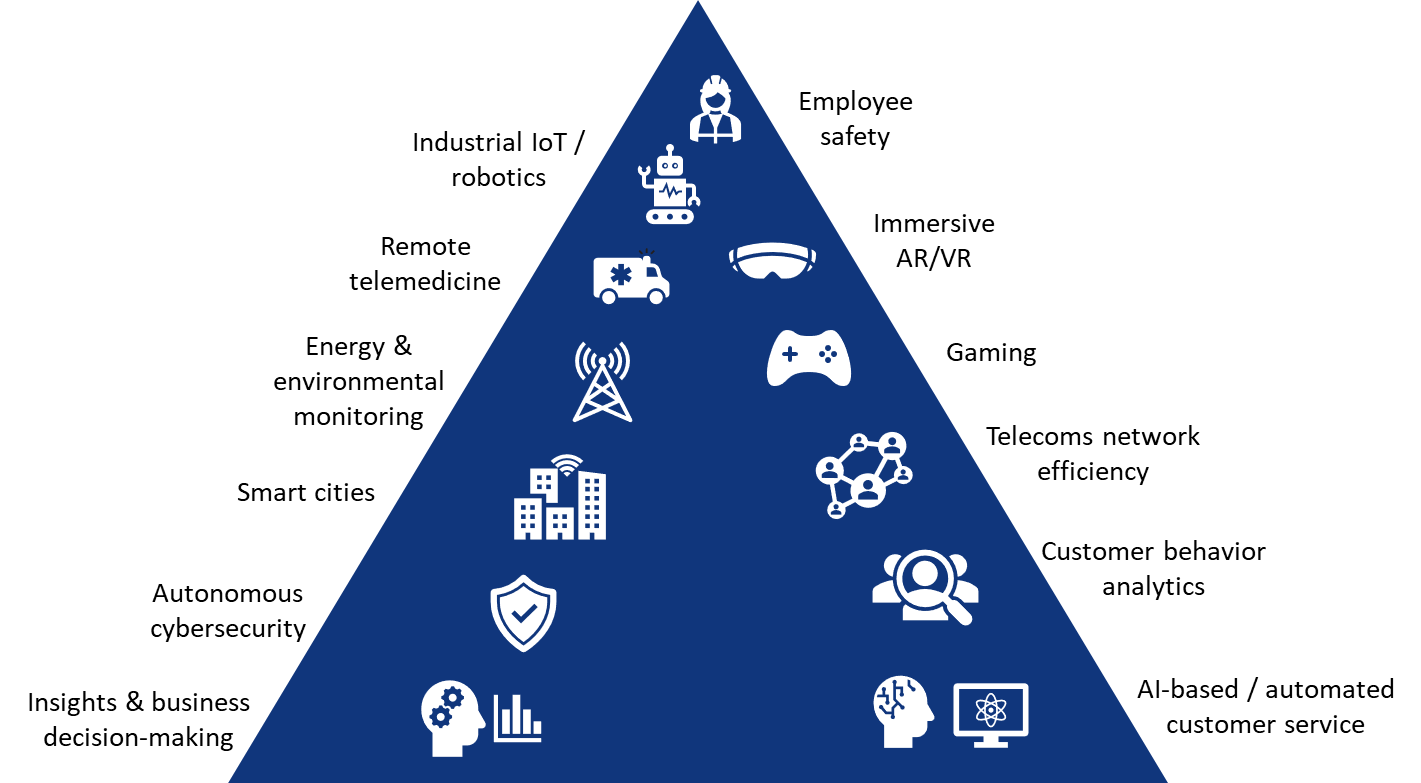

Emerging edge use cases

Edge use cases initially took flight in industrial and IoT applications for worker safety, automated production lines, mining and logistics, explains Omdia. Over the next five years, the vertical forecast to lead growth in edge services is the financial sector, which could use AI-based analytics and cognitive systems for business decisions, market insight, risk assessment and customer service platforms.

Source: Omdia

…………………………………………………………………………………………………………………

Additional edge service use cases, which network operators could deliver as managed services, include smart meters for energy use and environmental monitoring; transport and container tracking; customer behavior analytics in retail; network efficiency; and data protection compliance and cybersecurity.

What applications do enterprises expect to run at the edge?

Omdia recommends several approaches for service providers, SIs, hyperscalers and ICT solutions vendors to consider when working with enterprises on edge services. Suggestions include developing vertical and workload-specific edge services that can be largely replicated to different customers, creating innovation hubs for edge solutions to test edge setups with customers, developing consulting services and creating a partner ecosystem to reduce vendor lock-in for customers.

References:

https://omdia.tech.informa.com/OM024012/Enterprise-Services-at-the-Edge–Forecast-202226

The Amorphous “Edge” as in Edge Computing or Edge Networking?

Multi-access Edge Computing (MEC) Market, Applications and ETSI MEC Standard-Part I

IBM says 5G killer app is connecting industrial robots: edge computing with private 5G

ONF’s Private 5G Connected Edge Platform Aether™ Released to Open Source

The Amorphous “Edge” as in Edge Computing or Edge Networking?

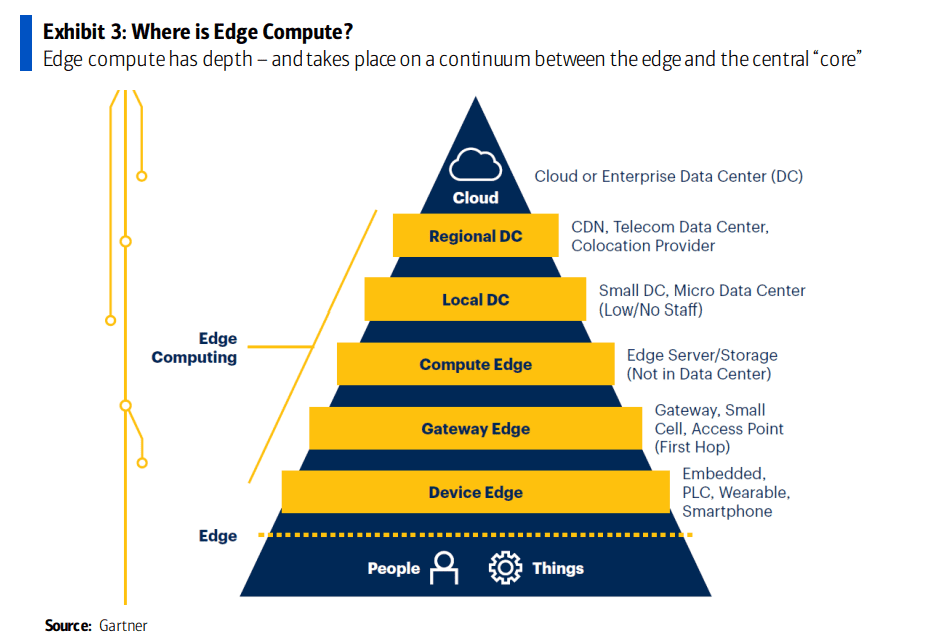

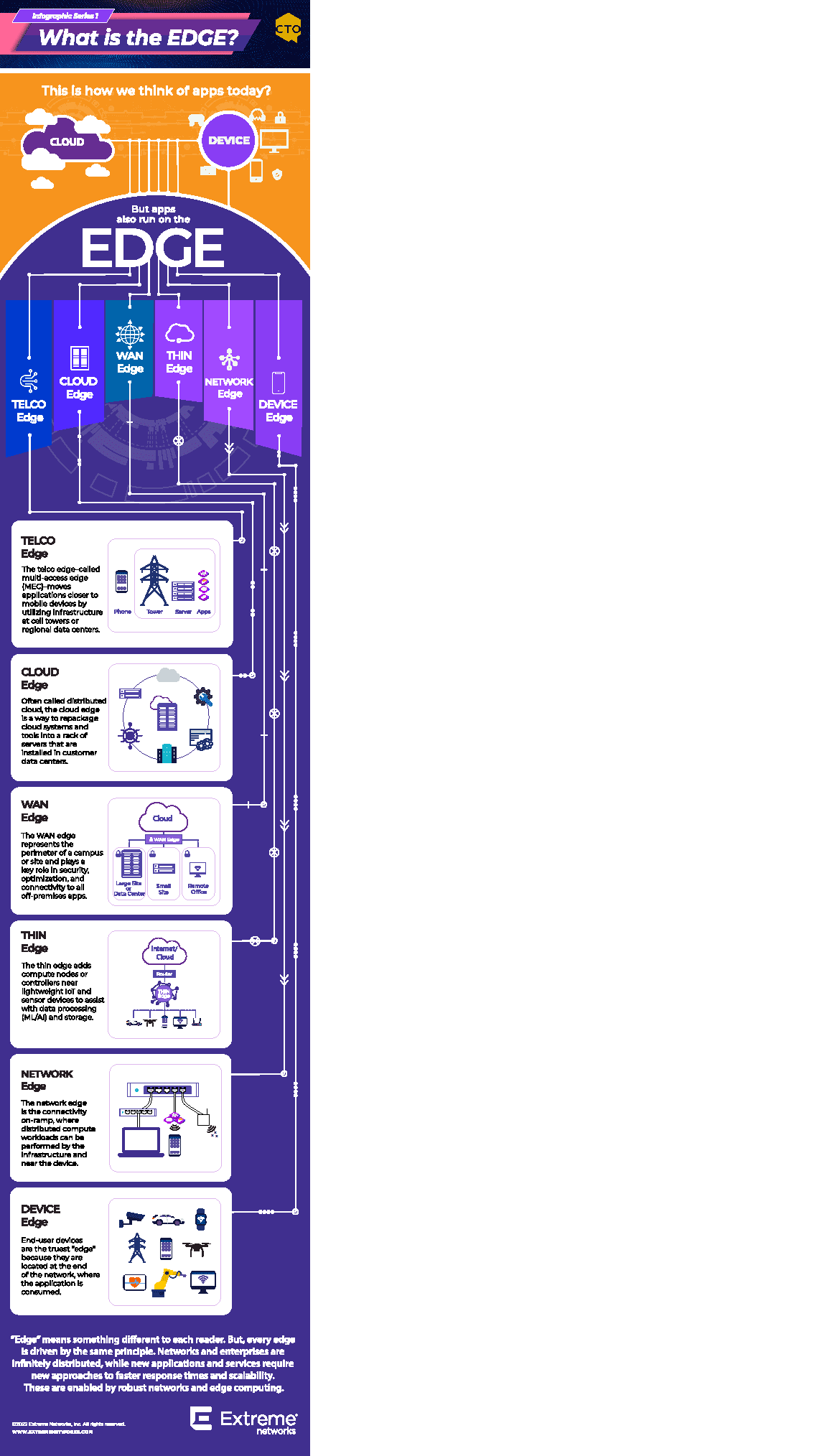

There are many definitions of where the “edge” is actually located. To datacenter experts, the edge is a small datacenter closer to users. For telecom people, the edge is a regional data center or carrier-owned point of presence that is not in the cloud. To enterprise users, the edge can be on premises. What does the “edge” mean to you?

Extreme Networks suggests the edge is “any form of application delivery that is not in the cloud.” To illustrate the concept, and the multiplicity of edges, the company created an infographic that highlights some of the most common meanings of “edge.”

Image Courtesy of Extreme Networks

References:

Multi-access Edge Computing (MEC) Market, Applications and ETSI MEC Standard-Part I

Exium Collaborates with IBM on Secure Edge Compute for AI and IoT Applications

Exium, a 5G security company [1.], today announced that it is collaborating with IBM to help clients adopt an edge computing strategy designed to enable them to run AI or IoT applications seamlessly across hybrid cloud environments, from private data centers to the edge. Exium offers clients an end-to-end AI deployment solution designed for high performance on the Edge that can extend to any cloud. This platform can help clients address vendor lock-in by providing flexibility to run their centralized Data/AI resources across any cloud or in private data centers.

Note 1. Exium was founded in 2019 by wireless telecommunications entrepreneur Farooq Khan (ex-Phazr, ex-JMA Wireless). The company believes that the current cybersecurity model is broken: existing cybersecurity approaches and technologies no longer provide the levels of security and access control that modern digital organizations need. These organizations demand immediate, uninterrupted, secure access for their users, teams, and IoT/OT devices, no matter where they are located.

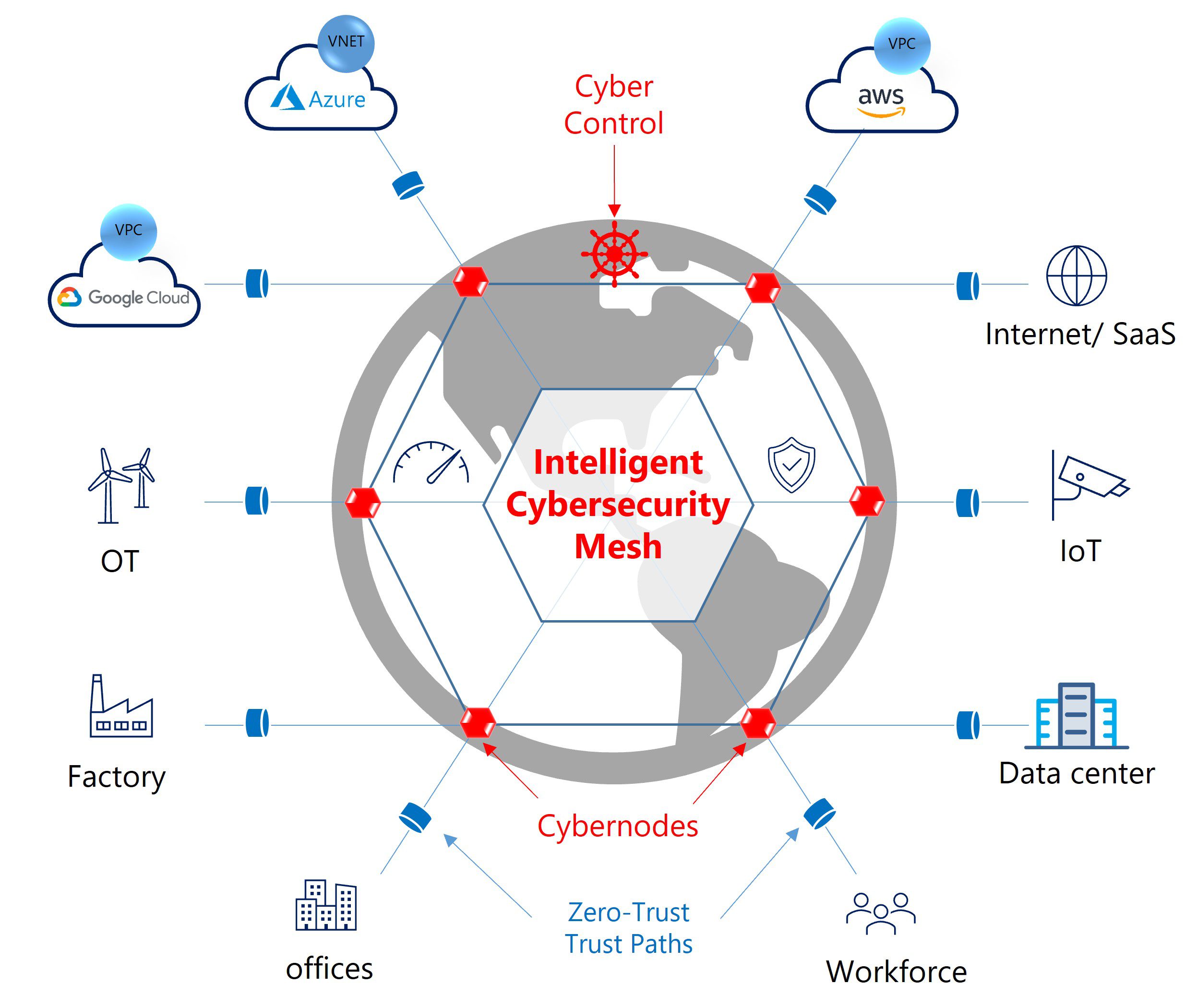

Exium’s Intelligent Cybersecurity Mesh™ (see diagram below) provides secure access for a distributed workforce, IoT devices, and mission-critical Operational Technology (OT) infrastructure, protecting businesses from malware, ransomware, phishing, denial of service, and botnet infections in one easy to use cloud service.

CyberMesh consolidates three technologies into a single, powerful cloud platform: 5G, Secure Access Service Edge (SASE), and Extended Detection and Response (XDR).

The Intelligent Cybersecurity Mesh is the first network security platform rooted in internationally accepted digital trust standards and is a reflection of Exium’s commitment to an open, interoperable, and secure global internet for all.

……………………………………………………………………………………………………………………………………………

Exium’s Secure Edge AI is designed to provide a secure, high-performance edge for IoT data collection and AI execution that works with Wi-Fi, Ethernet and 4G today and is intended to help enterprises upgrade to 5G in the future.

Exium’s CyberMesh is designed to deliver Zero-Trust Edge Security and Intent-Driven Edge Network Performance, and to connect edge and cloud locations, providing scalability and resilience out of the box. Zero-Trust Edge Security removes implicit trust assumptions from the connections between users, devices, and edge applications. Intent-Driven Edge Network enables edge applications to influence the 5G network’s traffic routing, steering and QoS control.

“With computing done in so many places—on public and private clouds and the edge–we believe the challenge that businesses face today is to securely connect all these different elements into a cohesive, end-to-end platform,” said Farooq Khan, Founder & CEO at Exium. “Through our collaboration, Exium plans to integrate with IBM Edge Application Manager to offer edge solutions at scale for our clients.”

“We look forward to collaborating with Exium to help clients deploy, operate and manage thousands of endpoints throughout their operations with IBM Edge Application Manager,” said Evaristus Mainsah, GM, IBM Hybrid Cloud and Edge Ecosystem. “Together, we can help enterprises accelerate their digital transformation by acting on insights closer to where their data is being created, at the edge.”

A recent IBM Institute for Business Value report, “Why organizations are betting on edge computing: Insights from the edge,” revealed that 91% of the 1,500 executives surveyed indicated that their organizations plan to implement edge computing strategies within five years. IBM Edge Application Manager, an autonomous management solution that runs on Red Hat OpenShift, enables the secured deployment, continuous operations and remote management of AI, analytics, and IoT enterprise workloads to deliver real-time analysis and insights at scale. The introduction of Intel® Secure Device Onboard (SDO), made available as open source through the Linux Foundation, provides zero-touch provisioning of edge nodes and enables multi-tenant support, allowing enterprises to manage up to 40,000 edge devices simultaneously per edge hub. IBM Edge Application Manager is the industry’s first solution powered by the Linux Foundation open-source project Open Horizon.

Exium is part of IBM’s partner ecosystem, collaborating with more than 30 equipment manufacturers, networking, IT & software providers to implement open standards-based cloud-native solutions that can autonomously manage edge applications at scale. IBM’s partner ecosystem fuels hybrid cloud environments by helping clients manage and modernize workloads from bare-metal to multicloud and everything in between with Red Hat OpenShift, the industry’s leading enterprise Kubernetes platform.

About Exium:

Exium is a U.S. full-stack cybersecurity and 5G clean networking pioneer helping organizations to connect and secure their teams, users, and mission-critical assets with ease, wherever they are.

To learn more about Exium, please visit https://exium.net/

About Farooq Khan, PhD:

Before founding Exium, Farooq Khan was founder and CEO of PHAZR, a 5G millimeter wave radio network solutions company that was sold to JMA Wireless. Before that, he was President and Head of Samsung Research America, Samsung’s U.S.-based R&D unit, where he led high-impact collaborative research programs in mobile technology. He also held engineering positions at Bell Labs, Ericsson and Paktel.

Farooq earned a PhD in Computer Science from Université de Versailles Saint-Quentin-en-Yvelines in France. He holds over 200 U.S. patents, has written over 50 research articles and a best-selling book.