Year: 2022

MoffettNathanson: Fiber Bubble May Pop; AT&T is by far the largest (fiber) overbuilder in U.S.

Fiber network build-outs are still going strong, even as the pace of those builds slowed a bit in 2022. Our colleague Craig Moffett warns that the fiber future isn’t looking quite as bright due to an emerging set of economic challenges that could reduce the overall rate of return on those build-outs. Rising costs, reflecting labor cost inflation, equipment cost inflation, and higher cost of capital, all point to diminished investment returns for fiber overbuilds. Craig wrote in a note to clients:

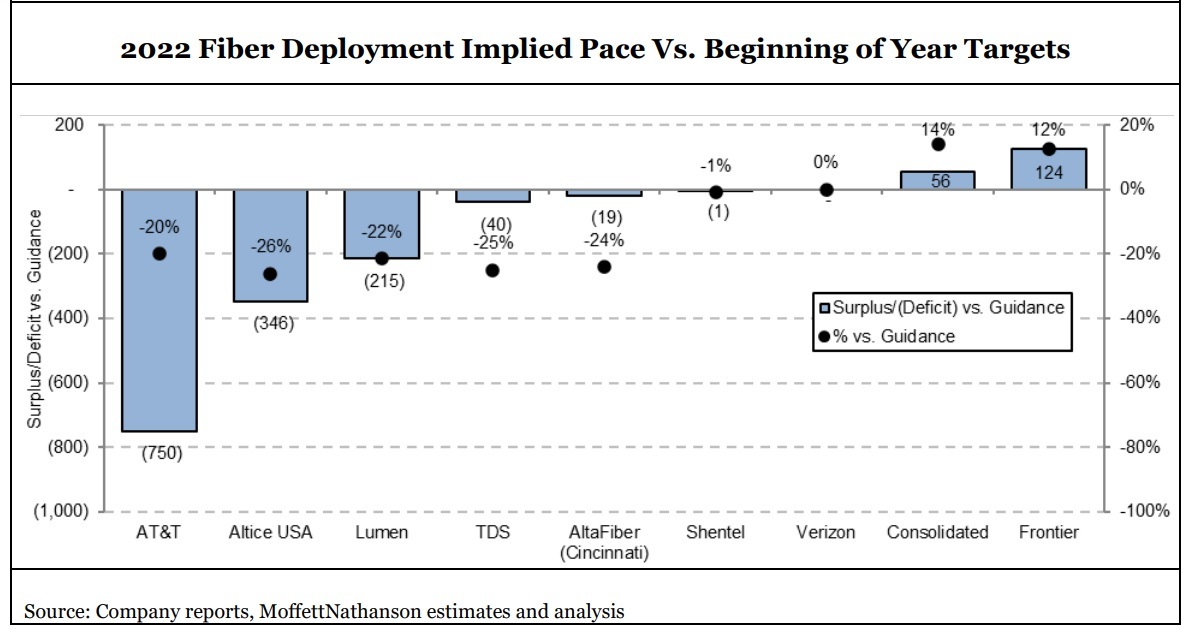

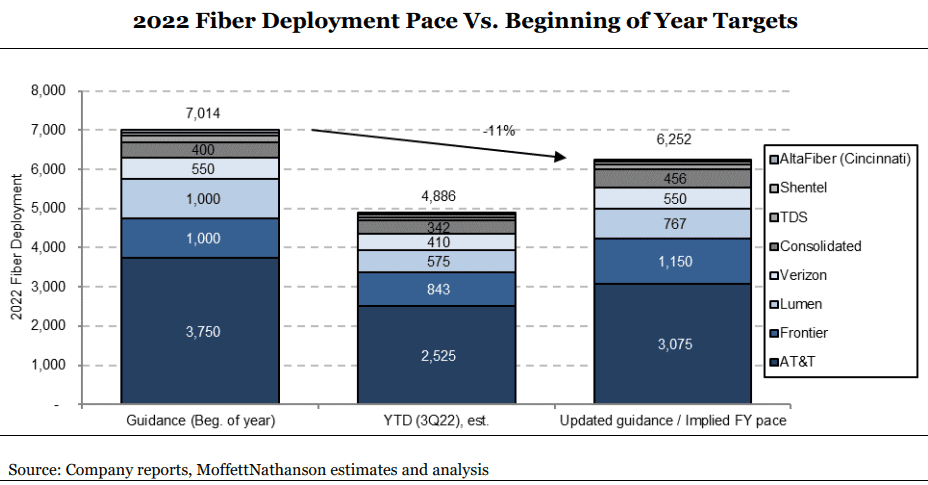

Our by-now familiar tally of planned competitive fiber builds for 2022 started the year at 6M or so homes passed. By early Spring it had climbed to 7M. It currently sits at ~8M. Next year’s number is flirting with 10M. All for an industry that has never built even half that many in a single year. As we approach the end of the year, however, it is clear that the actual number, at least for this year, will fall short. The number is still high, to be sure… but lower.

There does not appear to be a single explanation for the construction shortfall; some operators blame labor supply, some permitting delays. And some, of course, are actually doing just fine. For the industry as a whole, however, notably including AT&T, by far the nation’s largest (fiber) overbuilder, the number will almost certainly end the year meaningfully below plan. Costs appear to be rising, as well. Here again, there is no single explanation. Labor costs are frequently cited, but equipment costs are rising as well. For example, despite construction shortfalls, AT&T’s capital spending show no such shortfall, suggesting higher cost per home passed. Higher cost per home passed, coupled with a higher cost of capital, portend lower returns on invested capital.

If, as we expect, investment returns for fiber overbuilds increasingly prove to be inadequate, the capital markets will eventually withdraw funding. Indeed, this is how all bubbles ultimately pop. There are already signs of growing hesitancy. To be sure, we don’t expect a near-term curtailment; operators’ plans for the next year or two are largely locked in. Our skepticism is instead about longer-term projections that call for as much as 70% of the country to be overbuilt by fiber. We believe those kinds of forecasts are badly overstated.

As recently as its Q3 2022 earnings call, AT&T reiterated that it’s on track to expand its fiber footprint to more than 30 million locations by 2025. The company deployed fiber to about 2.3 million locations through the third quarter of this year, but appears hard-pressed to meet its guidance to build 3.5 million to 4 million fiber locations per year.

Given that the fourth quarter is typically a slow construction period, AT&T “looks to be well behind its deployment goals,” Moffett wrote. “If the company retains the pace of deployment in Q3, they will end the year 675K homes short of their goal, or an 18% shortfall compared to the midpoint of their target.”
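The shortfall arithmetic in Moffett’s note can be checked with a few lines of Python. This is only a sketch built from the figures quoted above; the function and variable names are illustrative, and the implied fourth-quarter build is our own back-calculation, not a number taken from the note.

```python
# Figures quoted in the text above (AT&T fiber locations, 2022).
TARGET_LOW = 3_500_000         # low end of annual build guidance
TARGET_HIGH = 4_000_000        # high end of annual build guidance
BUILT_THROUGH_Q3 = 2_300_000   # approximate locations deployed through Q3
PROJECTED_SHORTFALL = 675_000  # projected miss versus the goal


def shortfall_summary(low, high, built, shortfall):
    """Return (midpoint, shortfall %, implied year-end total, implied Q4 build)."""
    midpoint = (low + high) / 2
    shortfall_pct = shortfall / midpoint * 100
    implied_year_end = midpoint - shortfall
    # Assumption: everything not yet built through Q3 would be built in Q4.
    implied_q4 = implied_year_end - built
    return midpoint, shortfall_pct, implied_year_end, implied_q4


midpoint, pct, year_end, q4 = shortfall_summary(
    TARGET_LOW, TARGET_HIGH, BUILT_THROUGH_Q3, PROJECTED_SHORTFALL
)
print(f"Target midpoint:        {midpoint:,.0f}")
print(f"Shortfall vs. midpoint: {pct:.0f}%")
print(f"Implied year-end total: {year_end:,.0f}")
print(f"Implied Q4 build:       {q4:,.0f}")
```

Measured against the 3.75 million midpoint, a 675K miss works out to the 18% figure cited, and implies a year-end total of a little over 3 million locations.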

But AT&T isn’t alone. Lumen has also fallen behind its target, as have TDS, altafiber (formerly Cincinnati Bell) and Altice USA. Those on track or ahead of pace include Frontier Communications, Consolidated Communications and Verizon.

The analysts at Wells Fargo recently lowered their fiber buildout forecasts for 2022 and 2023. They cut their 2022 forecast for the US to about 8 million new fiber locations, down from 9 million. For 2023, they expect the industry to build about 10 million locations, cut from a previous expectation of 11 million.

Though overall buildout figures are still relatively high, some operators recently have blamed a blend of reasons for the recent slowdown in pace, including a challenging labor supply, permitting delays and rising costs for capital and equipment.

“Labor costs are frequently cited, but equipment costs are rising as well,” Moffett noted. “For example, despite construction shortfalls, AT&T’s capital spending show no such shortfall, suggesting higher cost per home passed.”

And, like the pace of buildouts, the cost situation is clearly not the same for all operators. While Consolidated is seeing the cost per home passed rising to a range of $600 to $650 (up from $550 to $600), Frontier expects its costs to remain at the expected range of $900 to $1,000.

But more generally, Moffett believes the returns on those investments “will only weaken further as buildouts are necessarily pushed out to less attractive, lower density, markets.”

One takeaway from that, Craig warns, is that fiber overbuilding is poised not only to generate lower returns than originally hoped, but that there also will be upward, not downward, pressure on broadband prices.

With respect to the pace of fiber build-outs, there’s heavy demand for labor for today’s overbuilding plans, and it will only get heavier as the $42.5 billion Broadband Equity, Access and Deployment (BEAD) program gets started.

Remedies are out there, with Moffett pointing to the Fiber Broadband Association’s rollout of its OpTICs Path fiber technician training program earlier this year as one example. ATX Networks, a network tech supplier, is contributing with the recent launch of a Field Personnel Replenishment Program.

But they might not completely bridge the gap. “These efforts may help expand capacity, but they are unlikely to fully meet demand, and they are almost certainly not going to forestall near-term labor cost inflation, in our view,” Moffett wrote.

With rising equipment costs and the cost of capital also factoring in, Moffett views a 20% rise in fiber deployment costs (for both passing and connecting homes) as a “reasonable range” in the coming two to three years.

Moffett wonders if network operators will be forced to raise prices to help restore returns to the levels anticipated when fiber buildout plans were first conceived. While it’s unclear if competitive dynamics will allow for that, “it does appear to us that expectations of falling ARPU [average revenue per user] are misplaced,” Craig wrote.

But the mix of higher costs of capital and deployment for fiber projects, paired with deployment in lower density markets or those with more buried infrastructure, stands to reduce the value of such fiber projects further.

“Capital markets will sniff out this dynamic long before the companies themselves do, and they will withdraw capital. This is, of course, how bubbles are popped,” Moffett warned.

The MoffettNathanson report also provided an update on broadband subscriber metrics. US cable turned in a modest gain of 38,000 broadband subs in Q3 2022, an improvement from cable’s first-ever negative result in Q2. Cable saw broadband subscriber growth of 1.2% in Q3, down from +4.4% in the year-ago quarter. U.S. telcos saw broadband subscriber growth fall to -0.5% in Q3, versus +.06% in the year-ago quarter.

Meanwhile, fixed wireless additions set a new record thanks to continued growth at both Verizon and T-Mobile. However, T-Mobile’s 5G Home business posted 578,000 FWA subscriber adds in Q3, up just 3.2% from the prior quarter.

EU to launch IRIS² – a new satellite constellation for secure connectivity

Following an agreement with the Council of the European Union and the European Parliament, the European Commission is to go ahead with its plan to build a third strategic satellite constellation to add to the existing Galileo and Copernicus networks. The network is called IRIS² (Infrastructure for Resilience, Interconnection & Security by Satellites) and will be part-funded with €2.4bn of European Union cash, though the total cost of building the network is expected to be about €6bn.

The framework defined today is as follows:

– IRIS² will be a sovereign constellation, which imposes strict eligibility criteria and security requirements.

– IRIS² will be a constellation focused on government services, including defence applications.

– IRIS² will provide connectivity to the whole of Europe, including areas that do not currently benefit from broadband Internet, as well as to the whole of Africa, using the constellation’s North-South orbits.

– IRIS² will be a “new space” constellation the European way, integrating the know-how of the major European space industries – but also the dynamism of our start-ups, who will build 30% of the infrastructure.

– IRIS² will be a constellation at the cutting edge of technology, to give Europe a lead, for example in quantum encryption. It will be a vector of innovation.

– IRIS² will be a multi-orbit constellation, capable of creating synergies with our existing Galileo and Copernicus constellations. The objective here is to reduce the risk of space congestion.

European Parliament and the Council have supported this initiative to create Europe’s third pillar in space. After satellite positioning and earth observation, Europe will now have a secure European connectivity infrastructure.

“Secure and efficient connectivity will play a key role in Europe’s digital transformation and make us more competitive”, said EU digital chief Margrethe Vestager.

“Through this programme, the EU will be at the forefront of secure satellite communications.”

“IRIS² establishes space as a vector of our European autonomy, a vector of connectivity and resilience. It heightens Europe’s role as a true space power”, Internal Market Commissioner and the real driver of the initiative, Thierry Breton, wrote in a LinkedIn post.

Allowing the private sector to facilitate the rollout of high-speed broadband to “dead zones” that currently lack connectivity is also listed as a critical goal of the project.

“IRIS² will provide connectivity to the whole of Europe”, said Breton, “including areas that do not currently benefit from broadband Internet, as well as to the whole of Africa, using the constellation’s North-South orbits.”

Inspired by Iris, goddess of Greek mythology, messenger of the gods to humans… Iris² will bring secure European connectivity to all!

References:

Brussels to launch new satellite constellation for secure connectivity – EURACTIV.com

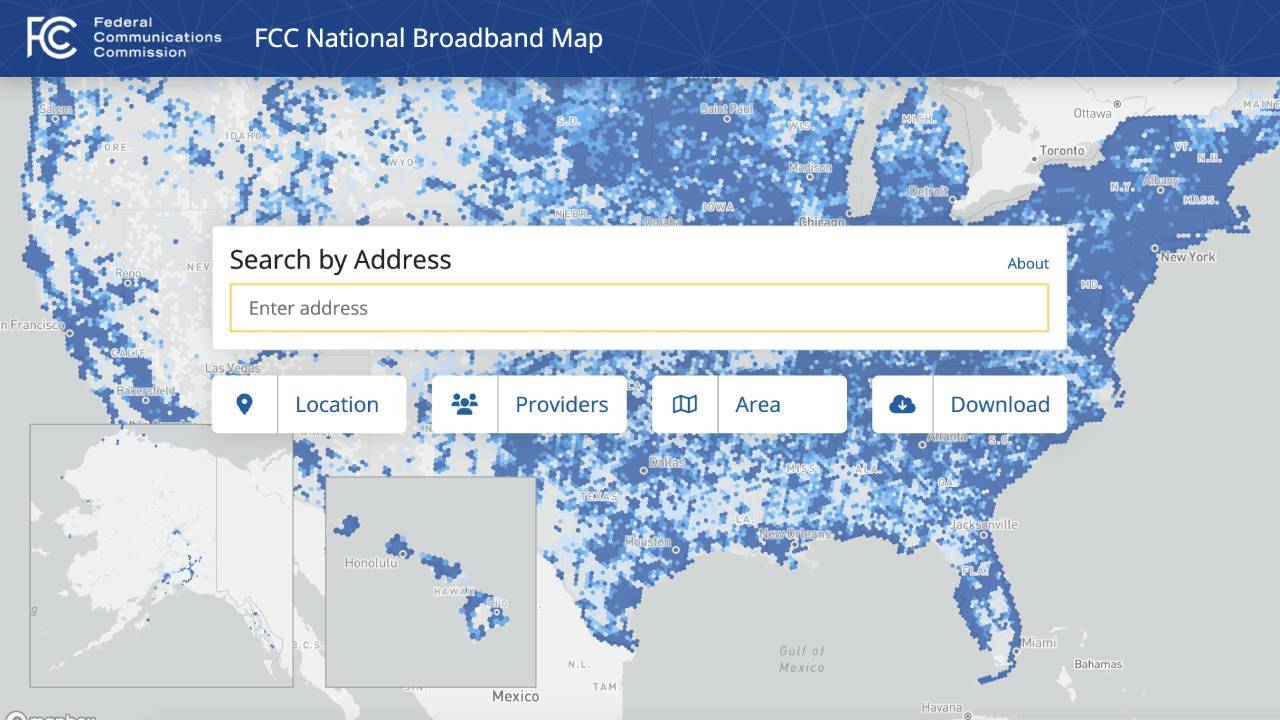

FCC Releases New National Broadband Maps & FCC Speed Test App

At long last, the FCC has released the first public version of its National Broadband Map. The new map is consequential as it will inform how many millions or billions of dollars each state and territory gets from the federal government for broadband infrastructure.

The broadband map’s release follows an effort that began in September to give Internet service providers (ISPs) and local governments an opportunity to review and challenge broadband data findings. That followed an initial FCC broadband data collection process that began in June. IEEE Techblog summarized that and more in this post.

“Today is an important milestone in our effort to help everyone, everywhere get specific information about what broadband options are available for their homes, and pinpointing places in the country where communities do not have the service they need,” said Chairwoman Rosenworcel. “Our pre-production draft maps are a first step in a long-term effort to continuously improve our data as consumers, providers and others share information with us. By painting a more accurate picture of where broadband is and is not, local, state, and federal partners can better work together to ensure no one is left on the wrong side of the digital divide.”

The public will be able to view the maps at broadbandmap.fcc.gov and search for their address to see information about the fixed and mobile services that internet providers report are available there. If the fixed internet services shown are not available at the user’s location, they may file a challenge with the FCC directly through the map interface to correct the information.

Map users will also be able to correct information about their location and add their location to the map if it is missing. The draft map will also allow users to view the mobile wireless coverage reported by cellular service providers.

The FCC also announced the launch of an updated version of the FCC Speed Test App that will enable users to quickly compare the performance and coverage of their mobile networks to that reported by their provider. The app allows users to submit their mobile speed test data in support of a challenge to a wireless service provider’s claimed coverage.

Today’s debut marks the start of the public’s ability to offer challenges as well. The FCC has asked for challenges to the map data to be submitted between now and January 13, 2023, so that corrections can be included in a finalized version of the map.

The final version of the map will be used to distribute funding from the Broadband Equity, Access and Deployment (BEAD) program in summer 2023. As determined by the Department of Commerce last year, each state will get an initial $100 million from the $42.5 billion BEAD program, with additional funding to be distributed based on the number of unserved and underserved locations, according to the new national broadband map.

Members of the public, along with local governments and providers, will now be able to submit two different types of challenges: location (for example, incorrect location address, incorrect location unit count, etc.) and availability (for example, if the map incorrectly lists a certain provider or broadband technology as available).

While the FCC will continue collecting crowdsourced speed data for fixed speeds, that data is not part of the challenge process. Rather, the map is relying on maximum available advertised speeds.

References:

https://www.fcc.gov/document/fcc-releases-new-national-broadband-maps

https://broadbandmap.fcc.gov/home

Additional information sources:

- New users can download the FCC Speed Test App in both the Apple App Store and Google Play Store.

- Existing app users should update the app to gain these new features.

- A video tutorial and more information on how to submit challenges is available at fcc.gov/BroadbandData/consumers.

- For more information about the BDC, please visit the Broadband Data Collection website at fcc.gov/BroadbandData.

Cisco restructuring plan will result in ~4100 layoffs; focus on security and cloud based products

Cisco’s Restructuring Plan:

Cisco plans to lay off over 4,100 employees or 5% of its workforce, the company announced yesterday. That move is part of a restructuring plan to realign its workforce over the coming months to strengthen its optical networking, security and platform offerings. Cisco noted in financial filings it expects to spend a total of $600 million, with half of that outlay coming in the current quarter. The company will also reduce its real estate portfolio to reflect an increase in hybrid work.

In a transcript of Cisco’s Q1 2023 Earnings Call on November 17th, Cisco Chief Financial Officer Scott Herren characterized the move as a “rebalancing.” On that call, Chairman and Chief Executive Officer Chuck Robbins said the company was “rightsizing certain businesses.”

Herren and CEO Chuck Robbins said the company is looking to put more resources behind its enterprise networking, platform, security and cloud-based products. In the long run, analysts expect Cisco margins to improve as more revenue comes from security and software products.

By inference, Cisco is de-emphasizing sales of routers to service providers, who are moving towards white boxes/bare metal switches and/or designing their own switch/routers.

A Cisco representative told Fierce Telecom:

“This decision was not taken lightly, and we will do all we can to offer support to those impacted, including generous severance packages, job placement services and other benefits wherever possible.” The representative added that the job placement assistance will include doing “everything we can do” to help affected employees step into other open positions at the company.

Cisco implemented a similar restructuring plan in mid-2020 which included a substantial number of layoffs.

Growth through Acquisitions:

Much of Cisco’s revenue growth over the years has come from acquisitions. The acquisitions included Ethernet switch companies like Crescendo Communications, Kalpana and Grand Junction from 1993-1995. Prior to those acquisitions, Cisco had not developed its own LAN switches and was primarily a company selling routers to enterprises, telcos and ISPs.

Here are a few of Cisco’s acquisitions over the last five years:

- In 2017, Cisco acquired software maker AppDynamics for $3.7 billion. It bought BroadSoft for $1.9 billion in late 2017.

- In 2018, Cisco acquired Duo Security for $2.35 billion, marking its biggest cybersecurity acquisition since its purchase of Sourcefire in 2013. Acquiring Duo Security bolstered Cisco in an emerging category called zero trust cybersecurity.

- In May 2020, Cisco acquired ThousandEyes, a networking intelligence company, for about $1 billion.

- In late 2020, Cisco agreed to buy U.K.-based IMImobile, which sells cloud communications software, in a deal valued at $730 million.

Aside from acquisitions, new accounting rules have been a plus for revenue recognition. The rules known as ASC 606 require upfront recognition of multiyear software licenses.

One bright spot for Cisco has been sales of the Catalyst 9000 Ethernet switches. The company claims they are the first purpose-built platform designed for complete access control using the Cisco DNA architecture and Software-Defined Access (SD-Access). This means that this series of switches simplifies the design, provisioning and maintenance of security across the entire access network to the network core.

There is also an opportunity for Cisco in data center upgrades. The so-called “internet cloud” is made up of warehouse-sized data centers. They’re packed with racks of computer servers, data storage systems and networking gear. Most cloud computing data centers now use 100 gigabit-per-second communications gear. A data center upgrade cycle to 400G technology has been delayed.

Routed Optical Networking:

Cisco in 2019 agreed to buy optical components maker Acacia Communications for $2.6 billion in cash. China’s government delayed approval of the deal. In January 2021, Cisco upped its offer for Acacia to $4.5 billion and the deal finally closed on March 1, 2021. Acacia designs, manufactures, and sells a complete portfolio of high-speed optical interconnect technologies addressing a range of applications across datacenter, metro, regional, long-haul, and undersea networks.

Acacia’s Bright 400ZR+ pluggable coherent optical modules can plug into Cisco routers, enabling service providers to deploy simpler and more scalable architectures consisting of Routed Optical Networking, combining innovations in silicon, optics and routing systems.

Routed Optical Networking works by merging IP and private line services onto a single layer where all the switching is done at Layer 3. Routers are connected with standardized 400G ZR/ZR+ coherent pluggable optics.

With a single service layer based upon IP, flexible management tools can leverage telemetry and model-driven programmability to streamline lifecycle operations. This simplified architecture integrates open data models and standard APIs, enabling a provider to focus on automation initiatives for a simpler topology. It may be a big winner for Cisco in the near future as service providers move to 400G transport.

References:

https://www.fiercetelecom.com/telecom/cisco-plans-cut-5-workforce-under-600m-restructuring-plan

https://www.cisco.com/site/us/en/products/networking/switches/catalyst-9000-switches/index.html

https://www.cisco.com/c/en/us/about/corporate-strategy-office/acquisitions/acacia.html

Heavy Reading: Coherent Optics for 400G transport and 100G metro edge

To understand the future of high speed coherent optics, Heavy Reading launched the Coherent Optics Market Leadership Program with industry partners Ciena, Effect Photonics, Infinera and Ribbon. The 2022 project was based on a global network operator survey, conducted in August, that attracted 87 qualified responses.

Heavy Reading reports that network operators are using or evaluating coherent optics for 400G transport services for both internal and external applications, as well as 100G data rates for the metro edge.

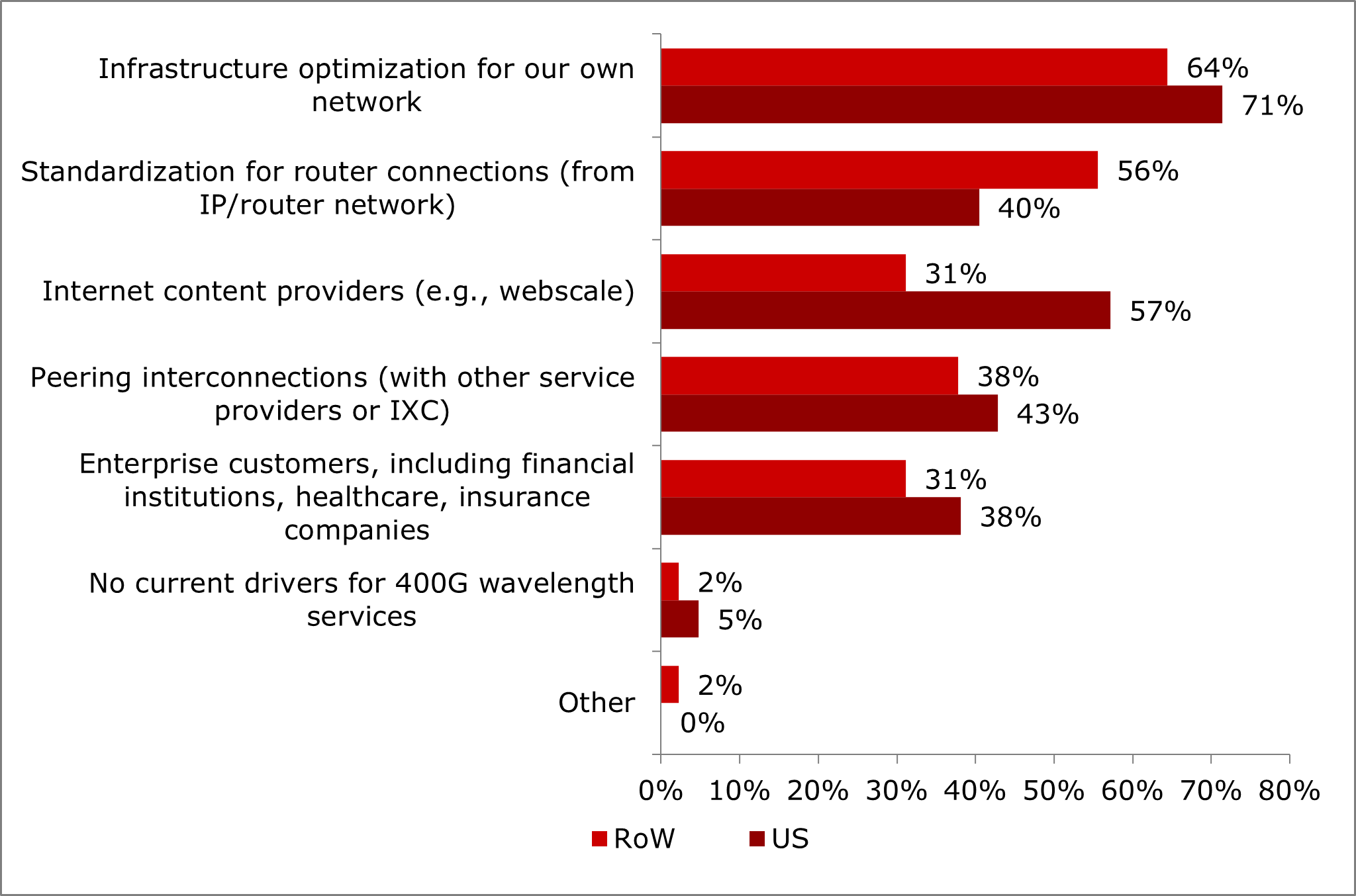

Nearly half of operator respondents in Heavy Reading’s survey will have deployed 400G transport services in their network between now and the end of 2023. But what are their top motivations for offering 400G transport services? Globally, the top driver for 400G services by far is infrastructure optimization for internal networks. This driver was selected by more than two-thirds of operators surveyed and well ahead of the second choice, standardization of router connections.

The data suggests that, for the global audience of operators, there are not that many customers currently that require full 400Gbit/s connectivity. Yet, there is value in grooming internal traffic up to 400G for greater efficiency and lower costs (e.g., fewer ports, lower cost per bit, etc.).

Beyond internal infrastructure, however, the drivers vary significantly by region, particularly when separating U.S. respondents from their Rest of World (RoW) counterparts. 57% of U.S. operators surveyed identified hyperscalers (or Internet content providers) as a 400G service driver, a strong showing and second only to internal infrastructure in the U.S. In contrast, just 31% of RoW operators identified Internet content providers as a top driver.

What are your top drivers for 400G wavelength (or optical) transport services?

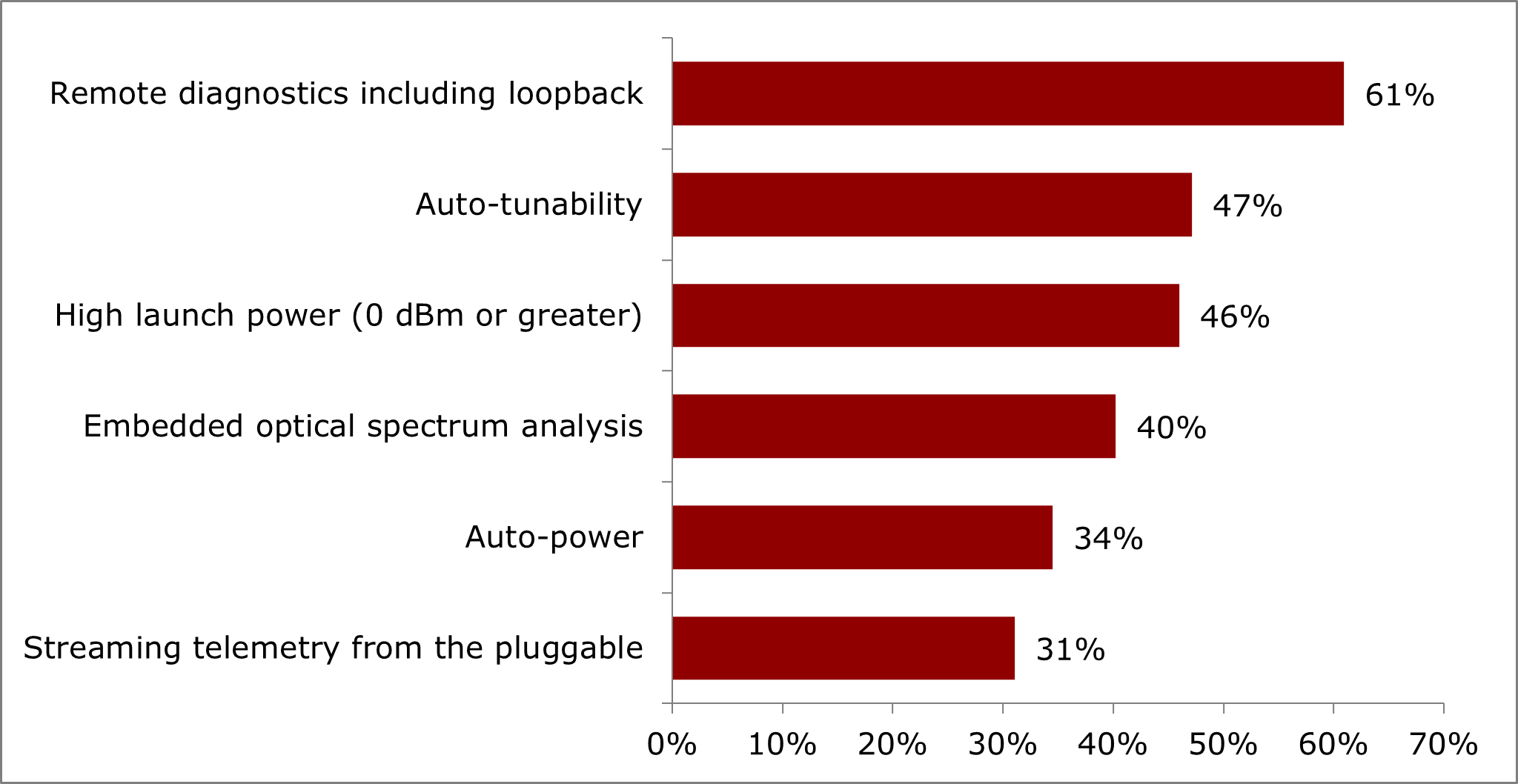

High launch power optics are widely seen as essential for 400G+ pluggables in telecom applications, which are typically brownfield networks with lots of ROADM nodes. However, when filtering the survey results to include optical specialists only (i.e., those who identify as working in optical transport), higher launch power is the number one requirement, well ahead of remote diagnostics. It is likely that, at this early stage, optical specialists within operators understand the criticality of this particular technical requirement better than their peers.

What advanced coherent DWDM pluggable features or capabilities do you find most beneficial to your network/operations?

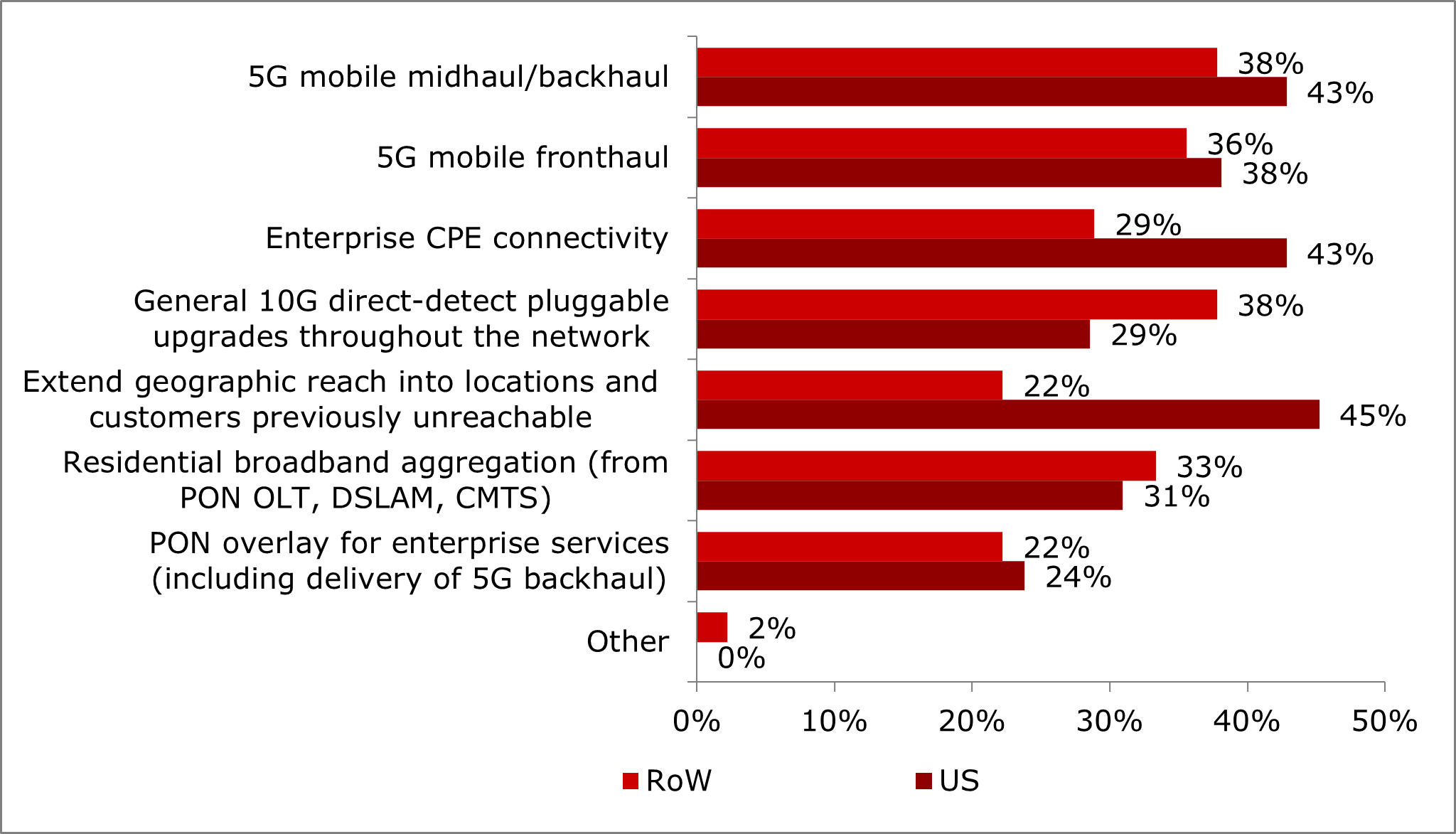

Coherent pluggable optics at 100G have use case priorities which vary significantly based on geographic region, particularly when separating U.S. respondents from their RoW counterparts. For the U.S., extending geographic reach is the top use case, followed by 5G backhaul/midhaul and enterprise connectivity. For RoW operators, however, extending geographic reach is not a priority use case.

When considering 100G coherent pluggables, what do you see as the most common use cases?

References:

https://www.lightreading.com/new-frontiers-for-coherent-optics/a/d-id/781813?

Coherent Optics: 100G, 400G and Beyond

Coherent Optics: 100G, 400G, & Beyond: A 2022 Heavy Reading Survey

Cable Labs: Interoperable 200-Gig coherent optics via Point-to-Point Coherent Optics (P2PCO) 2.0 specs

Microchip and Cisco-Acacia Collaborate to Progress 400G Pluggable Coherent Optics

Cignal AI: Metro WDM forecast cut; IP-over-DWDM and Coherent Pluggables to impact market

Smartoptics Takes Leading Role in Sustainable Optical Networking

OpenVault: Broadband data usage surges as 1-Gig adoption climbs to 15.4% of wireline subscribers

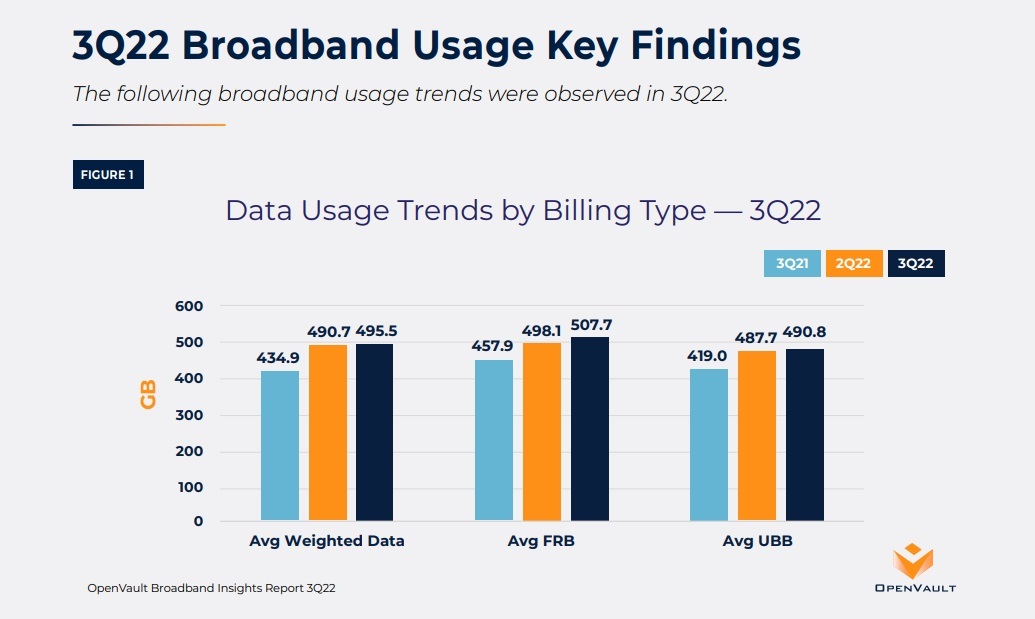

Dramatic increases in provisioned speeds are continuing to shift the broadband landscape, according to the Q3 2022 edition of the OpenVault Broadband Insights (OVBI) report. The report was issued today by OpenVault [1], a market-leading source of SaaS-based revenue and network improvement solutions and data-driven insights for the broadband industry.

Note 1. OpenVault is a company that specializes in collecting and analyzing household broadband usage data. It bases its quarterly reports on anonymized and aggregated data from “millions of individual broadband subscribers.”

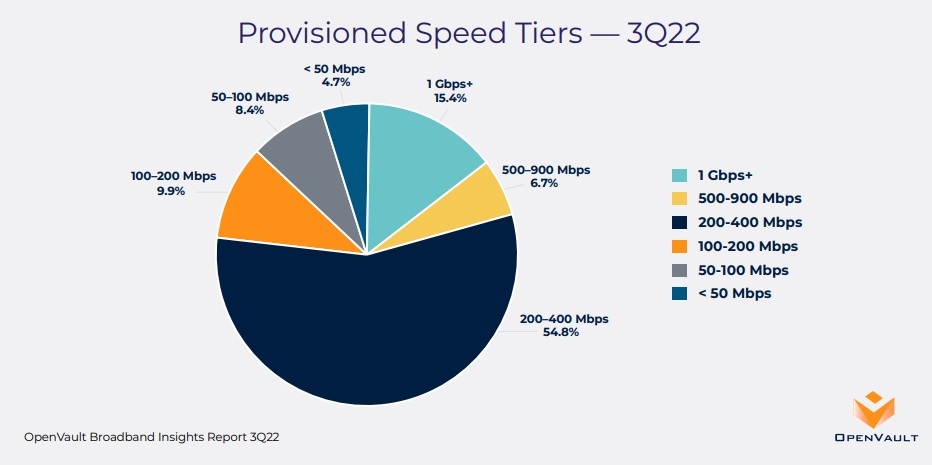

Using data aggregated from OpenVault’s broadband management tools, the 3Q22 OVBI shows a continued increase in gigabit tier adoption, as well as migration of subscribers to speeds of 200 Mbps or higher. Some 15.4% of subscribers were on gigabit tier plans in 3Q22, an increase of 35% over the 11.4% figure in 3Q21, and the percentage of subscribers on plans between 200-400 Mbps doubled to 54.8% from 27.4% in 3Q21. At the end of the third quarter, only 4.7% of all subscribers were provisioned for speeds of less than 50 Mbps, a reduction of more than 50% from the 3Q21 figure of 9.8%.

Gigabit tier subscribers are up more than 600% since the third quarter of 2019 and are now 15.4% of all wireline internet subs. “This trend is impacting bandwidth usage characteristics, with faster growth in power users and median bandwidth usage. Faster speeds are fueling greater consumption that may be reflected in the need for greater capacity in the future.”
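The year-over-year changes OpenVault cites are relative changes in each tier’s share of subscribers, not percentage-point differences. A minimal sketch of that calculation, using only the shares quoted above (the helper name `pct_change` and the dictionary labels are ours, for illustration):

```python
def pct_change(old_share, new_share):
    """Relative change, in percent, between two subscriber-share figures."""
    return (new_share - old_share) / old_share * 100


# (3Q21 share %, 3Q22 share %) as quoted in the text above.
tiers = {
    "Gigabit tier": (11.4, 15.4),
    "200-400 Mbps": (27.4, 54.8),
    "Under 50 Mbps": (9.8, 4.7),
}

for name, (share_3q21, share_3q22) in tiers.items():
    change = pct_change(share_3q21, share_3q22)
    points = share_3q22 - share_3q21
    print(f"{name}: {change:+.1f}% relative, {points:+.1f} percentage points")
```

The gigabit tier, for example, gained 4 percentage points of share, which is a relative increase of about 35% on the 11.4% base.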

Key findings in the 3Q22 report:

- Average monthly usage of 495.5 GB was up 13.9% from 3Q21’s average of 434.9 GB, and represented a slight increase over 2Q22’s 490.7 GB. Median broadband usage was up 14.3% year over year, representing broader growth across all subscribers.

- The speed tier with the fastest annual growth is in the range of 200 Mbit/s to 400 Mbit/s, which doubled to 54.8% of all subscribers. The percentage of customers on low-end tiers below 50 Mbit/s shrank to just 4.7% in Q3 2022, down more than 50% from the year-ago quarter.

- Year-over-year growth of power users of 1TB or more was 18%, to 13.7% of all subscribers, while the super power user category of consumers of 2 TB or more rose almost 50% during the same time frame.

- Participants in the FCC’s Affordable Connectivity Program (ACP) consumed 615.2 GB of data in 3Q22, 24% more than the 495.5 GB used by the general population.

OpenVault founder and CEO Mark Trudeau told Light Reading in October that the high levels of data usage among ACP participants are surprising, but he said they are likely due to households in the program using the funds to upgrade to faster speed packages. As a successor to the original Emergency Broadband Benefit (EBB) program, ACP provides qualifying low-income households with a $30 per month subsidy ($75 for tribal households) that can be applied toward Internet subscriptions.

The entire report is at https://xprss.io/zdgeG if you fill in a form and click SEND (to OpenVault).

OpenVault also provides continuously updated broadband consumption figures at https://openvault.com/broadbandtracker/.

References:

https://www.telecompetitor.com/clients/openvault/2022/Q3/index.php

Ookla Ranks Internet Performance in the World’s Largest Cities: China is #1

Cloud Computing Giants Growth Slows; Recession Looms, Layoffs Begin

Among the megatrends driving the technology industry, cloud computing has been a major force. But for the first time in its brief history, the cloud has grown stormy as third-quarter cloud giant earnings details made very clear:

- Amazon Web Services (AWS) fell short of the mark on both earnings and revenue. Reports say parent Amazon.com (AMZN) has frozen hiring at its cloud computing unit and will be laying off 10,000 employees.

- Microsoft’s (MSFT) Azure cloud business posted an unexpected slowdown in cloud computing growth. At Microsoft, “Intelligent Cloud” revenue rose 24% to $25.7 billion during the company’s fiscal first quarter, including Azure’s 35% growth to $14.4 billion. Excluding the impact of currency exchange rates, Azure revenue climbed 42%.

- Alphabet’s (GOOGL) Google Cloud business came in ahead of forecasts, but Oppenheimer analyst Tim Horan said in a note to clients that it has “no line of sight to meaningful profits.”

Note: We don’t consider Facebook/Meta Platforms a cloud service provider, even though they build the IT infrastructure for their cloud resident data centers. They are first and foremost a social network provider that’s now desperately trying to create a market for the Metaverse, which really does not exist and may never be!

In late October, Synergy Research reported that Amazon, Microsoft and Google combined had a 66% share of the worldwide cloud services market in the 3rd quarter, up from 61% a year ago. Alibaba and IBM placed fourth and fifth, respectively, according to Synergy. In aggregate, all cloud service providers excluding the big three have tripled their revenues since late 2017, yet their collective market share has plunged from 50% to 34% as their growth rates remain far below those of the market leaders.

In 2022, capital spending on internet data centers by the three big cloud computing companies will jump a healthy 25% to $74 billion, estimates Dell’Oro Group. In 2023, spending on warehouse-size data centers packed with computer servers and data storage gear is expected to slow. Dell’Oro puts growth at just 7%, which would take the market up to $79 billion.
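Dell’Oro’s growth rates can be cross-checked against its dollar figures with simple compounding. The sketch below uses only the numbers quoted above; the implied 2021 base is our own back-calculation rather than a figure from Dell’Oro, and the function names are illustrative.

```python
CAPEX_2022_BN = 74.0   # estimated 2022 capex, in $ billions
GROWTH_2022 = 0.25     # 2022 growth over 2021
GROWTH_2023 = 0.07     # projected 2023 growth over 2022


def implied_prior_year(current, growth):
    """Back out the prior-year value from the current value and its growth rate."""
    return current / (1 + growth)


def project_next_year(current, growth):
    """Project the next-year value by applying a growth rate."""
    return current * (1 + growth)


capex_2021 = implied_prior_year(CAPEX_2022_BN, GROWTH_2022)
capex_2023 = project_next_year(CAPEX_2022_BN, GROWTH_2023)

print(f"Implied 2021 capex:   ${capex_2021:.1f}B")
print(f"Projected 2023 capex: ${capex_2023:.1f}B")
```

Applying 7% growth to $74 billion lands at roughly $79 billion, consistent with the 2023 figure cited.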

Oppenheimer’s Horan wrote, “Cloud providers remain very bullish on long-term trends, but investors have been surprised at how economically sensitive the sector is. Sales cycles in cloud services have elongated and customers are looking to cut cloud spending by becoming more efficient. Despite the deceleration, cloud is now a $160 billion-plus industry. But investors will be concerned given this is our first real cloud recession, which makes forecasts difficult.”

“This macro slowdown clearly will impact all aspects of tech spending over the next 12 to 18 months. Cloud spending is not immune to the dark macro backdrop as seen during earnings season over the past few weeks,” Wedbush analyst Daniel Ives told Investor’s Business Daily via an email. “That said, we estimate 45% of workloads have moved to the cloud globally and (the share is) poised to hit 70% by 2025 in a massive $1 trillion shift. Enterprises will aggressively push to the cloud and we do not believe this near-term period takes that broader thesis off course. The near-term environment is more of a speed bump rather than a brick wall on the cloud transformation underway. Microsoft, Amazon, Google, IBM (IBM) and Oracle (ORCL) will be clear beneficiaries of this cloud shift over the coming years and will power through this Category 5 (hurricane) economic storm.”

Bank of America expects a boost from next-generation cloud services that cater to “edge computing.” Amazon, Microsoft and Google are “treating the edge as an extension of their public cloud,” said a BofA report. The giant cloud computing companies have all partnered with telecom firms AT&T (T), Verizon (VZ) and T-Mobile US (TMUS). Their aim is to embed their cloud services within 5G wireless networks. “Telcos are leveraging the hyperscale cloud to launch their own edge compute businesses,” BofA said.

At BMO Capital Markets, analyst Keith Bachman says investors need to reset their expectations as the coronavirus pandemic eases. The corporate switch to working from home spurred demand for cloud services. Online shopping boomed. And consumers turned to internet video and online gaming for entertainment.

“We think many organizations accelerated the journey to the cloud as Covid and hybrid work requirements exposed weaknesses in existing on-premise IT capabilities,” Bachman said in a note. “While spend remains healthy in the cloud category, growth has decelerated for the past few quarters. We believe economic forces are at work as well as a slower pace of cloud migrations post-Covid.”

Market research heavyweight Gartner updated its global cloud computing growth forecast Oct. 31. The new forecast was completed before third-quarter earnings were released by Amazon, Microsoft and Google. Gartner forecasted worldwide end-user spending on public cloud services will grow 20.7% in 2023 to $591.8 billion. That’s up from 18.8% growth in 2022.
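The Gartner figures above imply a 2022 spending base that the text does not state. A minimal sketch of that arithmetic follows, assuming the 20.7% growth rate applies to full-year 2022 spending; the variable names are illustrative and not taken from Gartner.

```python
# Back out the 2022 base implied by Gartner's 2023 forecast.
# Assumption: 2023 spending = 2022 spending * (1 + growth rate).

forecast_2023_billion = 591.8   # from the forecast quoted above
growth_2023 = 0.207             # 20.7% growth in 2023

implied_2022_billion = forecast_2023_billion / (1 + growth_2023)

print(f"Implied 2022 public cloud spending: ${implied_2022_billion:.1f} billion")
```

The result is only as precise as the rounded inputs, so it should be read as an approximation.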

In a press release, Gartner analyst Sid Nag cautioned: “Organizations can only spend what they have. Cloud spending could decrease if overall IT budgets shrink, given that cloud continues to be the largest chunk of IT spend and proportionate budget growth.”

AWS, Microsoft Azure and Google’s cloud computing units are all growing at an above-industry-average rate. Still, AWS and Azure are slowing, perhaps a bit due to size as well as the economy.

- At Wolfe Research, MSFT stock analyst Alex Zukin said in his note: “The damage in Microsoft’s case came from another Azure miss in the quarter, but the bigger surprise was the guide of 37%. That is the largest sequential growth deceleration on record.”

- Google’s cloud computing revenue rose 38% to $6.28 billion. That’s up 2% from the previous quarter and topped estimates from GOOGL stock analysts by 4%. However, the company reported an operating loss of $644 million for the cloud business versus a $699 million loss a year earlier. Hoping to take market share from bigger AWS and Microsoft’s Azure, Google has priced cloud services aggressively, analysts say. It also stepped up hiring and spending on data centers. And it acquired cybersecurity firm Mandiant for $5.4 billion.

- “Amazon noted it has seen an uptick in AWS customers focused on controlling costs and is working to help customers cost-optimize,” Amazon stock analyst Youssef Squali at Truist Securities said in a report to clients. “The company is also seeing slower growth from certain industries (financial services, mortgage and crypto sectors),” he added.

- Oppenheimer’s Horan estimates that AWS will produce $13.9 billion in free cash flow in 2022. But he sees Google’s cloud unit having $10.6 billion in negative free cash flow.

Nonetheless, Deutsche Bank analyst Brad Zelnick remains upbeat on the cloud computing business. He wrote in a research note:

“We see a temporary slowdown in bringing new workloads to the cloud, though importantly not a change in organizations’ long-term cloud ambitions. The near-term forces of optimization can obscure what we believe remain very supportive underlying trends. We remain confident that we are in the early innings of a generational shift to cloud.”

References:

The First Real Cloud Computing Recession Is Here — What It Means For Tech Stocks

Synergy: Q3 Cloud Spending Up Over $11 Billion YoY; Google Cloud gained market share in 3Q-2022

AST SpaceMobile Deploys Largest-Ever LEO Satellite Communications Array

AST SpaceMobile, the company building the first and only space-based cellular broadband network accessible directly by standard mobile phones, announced today that it had successfully completed deployment of the communications array for its test satellite, BlueWalker 3 (“BW3”), in orbit.

BW3 is the largest-ever commercial communications array deployed in Low Earth Orbit (LEO) and is designed to communicate directly with cellular devices via 5G frequencies (which have yet to be standardized by ITU-R in M.1036 revision 6).

The satellite spans 693 square feet in size, a design feature critical to support a space-based cellular broadband network. The satellite is expected to have a field of view of over 300,000 square miles on the surface of the Earth.

The unfolding of BW3 was made possible by years of R&D, testing and operational preparation. AST SpaceMobile has a portfolio of more than 2,400 patent and patent-pending claims supporting its space-based cellular broadband technology.

“Every person should have the right to access cellular broadband, regardless of where they live or work. Our goal is to close the connectivity gaps that negatively impact billions of lives around the world,” said Abel Avellan, Chairman and Chief Executive Officer of AST SpaceMobile. “The successful unfolding of BlueWalker 3 is a major step forward for our patented space-based cellular broadband technology and paves the way for the ongoing production of our BlueBird satellites.”

AST SpaceMobile has agreements and understandings with mobile network operators (“MNOs”) globally that have over 1.8 billion existing subscribers, including a mutual exclusivity with Vodafone in 24 countries. Interconnecting with AST SpaceMobile’s planned network will allow MNOs, including Vodafone Group, Rakuten Mobile, AT&T, Bell Canada, MTN Group, Orange, Telefonica, Etisalat, Indosat Ooredoo Hutchison, Smart Communications, Globe Telecom, Millicom, Smartfren, Telecom Argentina, Telstra, Africell, Liberty Latin America and others, the ability to offer extended cellular broadband coverage to their customers who live, work and travel in areas with poor or non-existent cell coverage, with the goal of eliminating dead zones with cellular broadband from space.

“We want to close coverage gaps in our markets, particularly in territories where terrain makes it extremely challenging to reach with a traditional ground-based network. Our partnership with AST SpaceMobile – connecting satellite directly to conventional mobile devices – will help in our efforts to close the digital divide,” said Luke Ibbetson, Head of Group R&D, Vodafone and an AST SpaceMobile director.

Tareq Amin, CEO of Rakuten Mobile and Rakuten Symphony and an AST SpaceMobile director, added, “Our mission is to democratize access to mobile connectivity: That is why we are so excited about the potential of AST SpaceMobile to support disaster-readiness and meet our goal of 100% geographical coverage to our customers in Japan. I look forward not only to testing BW3 on our world-leading cloud-native network in Japan, but also working with AST SpaceMobile on integrating our virtualized radio network technology to help bring connectivity to the world.”

Chris Sambar, President – Network, AT&T, added, “We’re excited to see AST SpaceMobile reach this significant milestone. AT&T’s core mission is connecting people to greater possibilities on the largest wireless network in America. Working with AST SpaceMobile, we believe there is a future opportunity to even further extend our network reach including to otherwise remote and off-grid locations.”

About AST SpaceMobile:

AST SpaceMobile is building the first and only global cellular broadband network in space to operate directly with standard, unmodified mobile devices based on our extensive IP and patent portfolio. Our engineers and space scientists are on a mission to eliminate the connectivity gaps faced by today’s five billion mobile subscribers and finally bring broadband to the billions who remain unconnected. For more information, follow AST SpaceMobile on YouTube, Twitter, LinkedIn and Facebook. Watch this video for an overview of the SpaceMobile mission.

References:

Musk’s SpaceX and T-Mobile plan to connect mobile phones to LEO satellites in 2023

New developments from satellite internet companies challenging SpaceX and Amazon Kuiper

Quantum Technologies Update: U.S. vs China now and in the future

The quantum computing market could be worth up to $5 billion by 2025, driven by competition between the U.S. and China, according to London-based data analytics firm GlobalData. Its Patent Analytics Database indicates that the U.S. is the global leader in quantum computing. The analytics company notes that China is currently about five years behind the U.S., and that the recently passed U.S. CHIPS and Science Act will enhance U.S. quantum capabilities while hindering China.

Sidebar: What is a Quantum Computer?

Unlike a classical computer, which performs calculations one bit or word at a time, a quantum computer can perform many calculations concurrently. Quantum computers use a basic memory unit called a qubit, which has the flexibility to represent either zero, one or both at the same time. This ability of an object to exist in more than one form at the same time is known as superposition. The concept of entanglement is when multiple particles in a quantum system are connected and affect each other. If two particles become entangled, they can theoretically transmit and receive information over very long distances. However, the transmission error rates have yet to be determined.

Because quantum computers’ basic information units can represent all possibilities at the same time, they are theoretically much faster and more powerful than the regular computers we are used to.

Physicists in China recently launched a quantum computer they said took 1 millisecond to perform a task that would take a conventional computer 30 trillion years.

The aforementioned U.S. CHIPS and Science Act, signed into law in August 2022, represents an escalation in the growing tech war between the U.S. and China. The act includes measures designed to cut off China’s access to US-made technology. In addition, new export restrictions were announced on October 10, some of which took immediate effect. These restrictions prevent the export of semiconductors manufactured using US equipment to China. Currently, the U.S. is negotiating with allied nations to implement similar restrictions. Included in the CHIPS Act is a detailed package of domestic funding to support US quantum computing initiatives, including discovery, infrastructure, and workforce.

Among the many commercial companies researching the technology, IBM, Alphabet (parent company of Google), and Northrop Grumman have filed the most patents, with a respective 1,885, 1,000, and 623 total publications.

Earlier this week, at the IBM Quantum Summit, IBM unveiled Osprey, a 433-qubit processor with the largest qubit count of any IBM quantum processor to date. The company also introduced its latest modular quantum computing system.

“The new 433 qubit ‘Osprey’ processor brings us a step closer to the point where quantum computers will be used to tackle previously unsolvable problems,” IBM SVP Darío Gil said in a statement.

The IBM Osprey more than tripled the qubit count of its predecessor — the 127-qubit Eagle processor, launched in 2021. “Like Eagle, Osprey includes multi-level wiring to provide flexibility for signal routing and device layout, while also adding in integrated filtering to reduce noise and improve stability,” Jay Gambetta, VP of IBM Quantum wrote in a blog post.

The company claims that Osprey is more powerful at running complex computations, and that the number of classical bits needed to represent a state on this latest processor far exceeds the total number of atoms in the known universe.
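As a rough check on that comparison, the sketch below assumes that a general n-qubit state is described by 2^n complex amplitudes and uses the commonly quoted order-of-magnitude estimate of 10^80 atoms in the observable universe. Both the estimate and the function name are illustrative assumptions, not IBM figures.

```python
# Rough sanity check of the "more than atoms in the universe" comparison.
# Assumption: a general n-qubit state needs 2**n complex amplitudes.
# Assumption: ~10**80 atoms in the observable universe (order of magnitude only).

ATOMS_IN_UNIVERSE_ESTIMATE = 10**80


def amplitude_count(qubits: int) -> int:
    """Number of complex amplitudes in a general state of `qubits` qubits."""
    return 2**qubits


osprey = amplitude_count(433)  # Osprey qubit count from the article
eagle = amplitude_count(127)   # Eagle qubit count from the article

print(f"Osprey/Eagle qubit ratio: {433 / 127:.2f}")
print(f"Osprey amplitude count has {len(str(osprey))} decimal digits")
print(f"Osprey exceeds atom estimate: {osprey > ATOMS_IN_UNIVERSE_ESTIMATE}")
print(f"Eagle exceeds atom estimate: {eagle > ATOMS_IN_UNIVERSE_ESTIMATE}")
```

Python integers have arbitrary precision, so the comparison is exact for the assumed values.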

Gambetta noted IBM has been following along its quantum technology development roadmap. The company put its first quantum computer on the cloud in 2016 and aims to launch its first 1000-plus qubit quantum processor (Condor) next year and a 4000-plus qubit processor around 2025.

The U.S. government has committed $3 billion in funding for federal quantum projects that are either planned or already underway. The largest is the $1.2 billion National Quantum Computing Initiative. In addition, the U.S. government almost certainly conducts quantum projects in secret through the Defense Advanced Research Projects Agency (DARPA) and the National Security Agency (NSA).

As expected, considerably less is known about China’s advancements and investments in quantum technology. The country proclaims itself to be the world leader in secure quantum satcoms. The CCP (which runs the People’s Republic of China or PRC) can devote huge resources to any technology perceived to give the PRC a strategic geopolitical advantage, such as global quantum supremacy.

“Quantum computing has become the latest battleground between the U.S. and China,” GlobalData associate analyst Benjamin Chin said in a statement. “Both countries want to claim quantum supremacy, not only as a matter of national pride but also because of the financial, industrial, scientific, and military advantages quantum computing can offer. China has already established itself as a world leader in secure quantum satellite communications. Moreover, thanks to its autocratic economic model, it can pool resources from institutions, corporations, and the government. This gives China a distinct advantage as it can work collectively to achieve a single aim – quantum supremacy.”

China has already developed quantum equipment with potential military applications:

- This year, scientists from Tsinghua University developed a quantum radar that could detect stealth aircraft by generating a small electromagnetic storm.

- In 2017, the Chinese Academy of Sciences also developed a quantum submarine detector that could spot submarines from far away.

- In December 2021, China created a quantum communication network in space to protect its electric power grid against attacks, according to scientists involved in the project. Part of the network links the power grid of Fujian, the southeastern province closest to Taiwan, to a national emergency command centre in Beijing.

Consider Alibaba’s innocuously named DAMO Academy (Discovery, Adventure, Momentum and Outlook), which has already invested $15bn in quantum technology and will continue to plough more money into the venture. The Chinese government has also invested at least $10bn in the National Laboratory for Quantum Information Science, whose sole purpose is to conduct R&D into quantum technologies with “direct military applications.”


Swiss company ID Quantique, a spin-off from the Group of Applied Physics at the University of Geneva, is launching technology to make satellite security quantum proof. The company was founded in 2001 and has more than a decade of experience in quantum key distribution systems, quantum safe network encryption, single photon counters and hardware random number generators. The latest additions to its portfolio are two extremely robust, ruggedized and radiation-hardened QRNG (Quantum Random Number Generator) chips designed and fabricated especially for space applications.

The generation of genuine randomness is a vital component of cybersecurity. Systems that use deterministic processes, such as Pseudo Random Number Generators (PRNGs), to generate randomness are insecure because deterministic algorithms are, by their nature, predictable and therefore crackable. The most reliable way to generate random numbers is based on quantum physics, which is fundamentally random. Indeed, the intrinsic randomness of the behaviour of subatomic particles at the quantum level is one of the very few absolutely random processes known to exist. Thus, by linking the outputs of a random number generator to the utterly random behaviour of a quantum particle, a truly unbiased and unpredictable system is guaranteed and can be assured via live verification of the numbers and monitoring of the hardware to ensure it is operating properly.
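The predictability of a deterministic PRNG can be illustrated with a short sketch. It uses Python's standard `random` module (a seeded Mersenne Twister) as a stand-in for any deterministic generator, and the `secrets` module, which draws from the operating system's entropy source, as a contrast. Neither is ID Quantique's hardware or API; the seed value and function name are hypothetical.

```python
import random
import secrets


def prng_stream(seed: int, count: int) -> list[int]:
    """Return `count` 32-bit values from a deterministic, seeded PRNG."""
    rng = random.Random(seed)
    return [rng.getrandbits(32) for _ in range(count)]


# Anyone who learns (or guesses) the seed can reproduce the full sequence.
victim_values = prng_stream(seed=2022, count=5)
attacker_values = prng_stream(seed=2022, count=5)
print("Attacker reproduces PRNG output:", victim_values == attacker_values)

# Key material should instead come from a non-deterministic source.
# `secrets` uses the OS entropy pool; a QRNG would be a hardware source
# feeding a similar interface (an assumption, not shown here).
key = secrets.token_bytes(32)
print("Generated key length in bytes:", len(key))
```

The point of the sketch is only the contrast in predictability; it does not measure the quality of either source.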

The two new space-hardened microprocessors, the snappily named IDQ20MC1-S1 and IDQ20MC1-S3, are certified to the equally instantly memorable ECSS-Q-ST-60-13, the standard that defines the requirements for selection, control, procurement and usage of electrical, electronic and electro-mechanical (EEE) commercial components for space projects. The IDQ20MC1-S3 is a Class 3 device, predominantly for use in low-earth orbit (LEO) missions. The IDQ20MC1-S1 is a Class 1 device, for use in MEO and GEO mission systems. IDQ is the first to enable satellite security designers to upgrade their encryption keys to quantum enhanced keys.

References:

https://ibm-com-qc-dev.quantum-computing.ibm.com/quantum/summit

Has Edge Computing Lived Up to Its Potential? Barriers to Deployment

Despite years of touting and hype, edge computing (aka Multi-access Edge Computing or MEC) has not yet provided the payoff promised by its many cheerleaders. Here are a few rosy forecasts and company endorsements:

In an October 27th report, Markets and Markets forecast that the edge computing market will grow from $44.7 billion in 2022 to $101.3 billion by 2027, a Compound Annual Growth Rate (CAGR) of 17.8% over those five years.

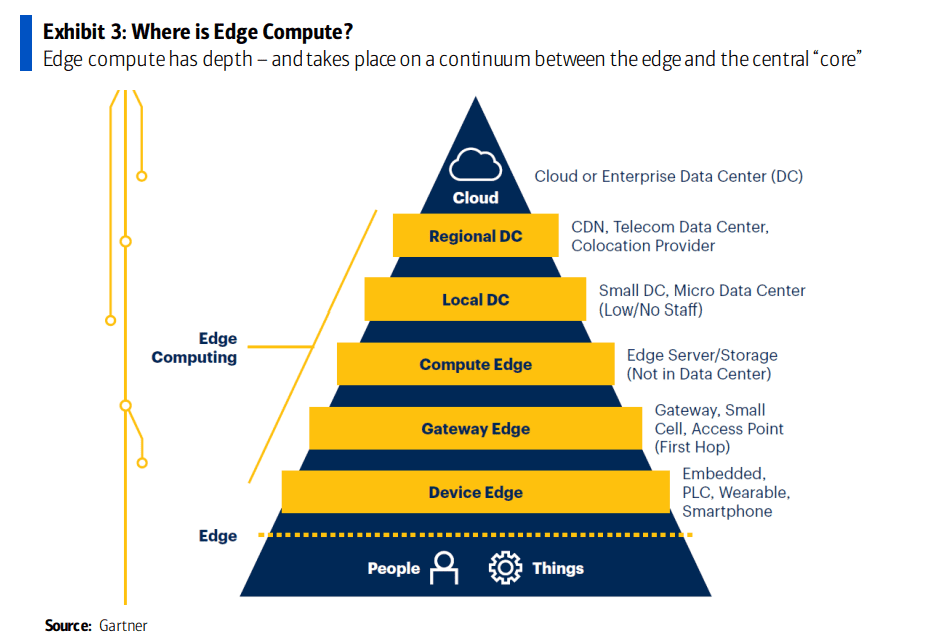
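The growth rate in the Markets and Markets forecast can be checked with the standard CAGR formula, (end / start)^(1 / years) - 1. The sketch below is a minimal illustration; the function name is ours, and the inputs are the rounded figures quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the Markets and Markets forecast, in billions of dollars.
rate = cagr(start_value=44.7, end_value=101.3, years=5)
print(f"Implied CAGR 2022-2027: {rate:.1%}")
```

The computed rate rounds to the 17.8% stated in the report.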

IDC defines edge computing as the technology-related actions that are performed outside of the centralized datacenter, where edge is the intermediary between the connected endpoints and the core IT environment.

“Edge computing continues to gain momentum as digital-first organizations seek to innovate outside of the datacenter,” said Dave McCarthy, research vice president, Cloud and Edge Infrastructure Services at IDC. “The diverse needs of edge deployments have created a tremendous market opportunity for technology suppliers as they bring new solutions to market, increasingly through partnerships and alliances.”

IDC has identified more than 150 use cases for edge computing across various industries and domains. The two edge use cases that will see the largest investments in 2022 – content delivery networks and virtual network functions – are both foundational to service providers’ edge services offerings. Combined, these two use cases will generate nearly $26 billion in spending this year (2022). In total, service providers will invest more than $38 billion in enabling edge offerings this year. The market research firm believes spending on edge compute could reach $274 billion globally by 2025 – though that figure would be inclusive of a wide range of products and services.

HPE CEO Antonio Neri recently told Yahoo Finance that edge computing is “the next big opportunity for us because we live in a much more distributed enterprise than ever before.”

DigitalBridge CEO Marc Ganzi said his company continues to see growth in demand for edge computing capabilities, with site leasing rates up 10% to 12% in the company’s most recent quarter. “So this notion of having highly interconnected data centers on the edge is where you want to be,” he said, according to a Seeking Alpha transcript.

Equinix CEO Charles Meyers said his company recently signed a “major design win” to provide edge computing services to an unnamed pediatric treatment and research operation across a number of major US cities. Equinix is one of the world’s largest data center operators, and has recently begun touting its edge computing operations.

……………………………………………………………………………………………………………………………………………………………..

In 2019, Verizon CEO Hans Vestberg said his company would generate “meaningful” revenues from edge computing within a year. That still has not happened.

BofA Global Research wrote in an October 25th report to clients, “Verizon, the largest US wireless provider and the second largest wireline provider, has invested more resources in this [edge computing] topic than any other carrier over the last seven years, yet still cannot articulate how it can make material money in this space over an investable timeframe. Verizon is in year 2 of its beta test of ‘edge compute’ applications and has no material revenue to point to nor any conviction in where real demand may emerge.”

“Gartner believes that communications and manufacturing will be the main drivers of the edge market, given they are infrastructure-intensive segments. We highlight existing use cases, like content delivery in communications, or ‘device control’ in manufacturing, as driving edge compute proliferation. However, as noted above, the market is still undefined and these are only two possible outcomes of many.”

Raymond James wrote in an August research note, “Regarding the edge, carriers and infrastructure companies are still trying to define, size and time the opportunity. But as data demand (and specifically demand for low-latency applications) grows, it seems inevitable that compute power will continue to move toward the customer.”

……………………………………………………………………………………………………………………………………………

BofA Global Research – Challenges with Edge Compute:

The distributed nature of edge compute can pose several risks to enterprises. The number of nodes needed between stores, factories, automobiles, homes, etc. can vary wildly. Different geographies may have different environmental issues, regulatory requirements, and network access. Furthermore, the distributed scale in edge compute puts a greater burden on ensuring that edge compute nodes are secured and that the enterprise is protected. Real-time decision making on the edge device requires a platform to be able to anonymize data used in analytics, and secure data in transit and information stored on the edge device. As more devices are added to the network, each one becomes a potential vulnerability target and as data entry points expand across a corporate network, so do opportunities for intrusion.
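One common building block for the data-protection requirement described above is to replace direct identifiers with keyed hashes before analytics data leaves the edge node. The sketch below is a minimal illustration, not a description of any vendor's platform: the key, field names and sample reading are hypothetical, and keyed hashing is pseudonymization, which is weaker than full anonymization.

```python
import hashlib
import hmac
import json

# Hypothetical key; in practice it would come from a secure key store.
SECRET_KEY = b"replace-with-a-key-from-a-secure-store"


def pseudonymize(device_id: str, key: bytes = SECRET_KEY) -> str:
    """Return a keyed SHA-256 digest that stands in for the real identifier."""
    return hmac.new(key, device_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_reading(reading: dict) -> dict:
    """Copy a sensor reading, replacing the device ID with its pseudonym."""
    cleaned = dict(reading)
    cleaned["device_id"] = pseudonymize(reading["device_id"])
    return cleaned


sample = {"device_id": "store-042-camera-7", "temperature_c": 21.5}
prepared = prepare_reading(sample)
print(json.dumps(prepared))
```

Because the same key always maps a device to the same pseudonym, readings can still be grouped per device in analytics without exposing the original identifier. Data in transit and at rest would still need encryption, which this sketch does not cover.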

On the other hand, the risk is somewhat double-sided as some security risk is mitigated by keeping the data distributed so that a data breach only impacts a fraction of the data or applications. Other barriers to deploying edge applications include high costs as a result of its distributed nature, as well as a lack of a standard edge compute stack and APIs.

Another challenge to edge compute is the issue of extensibility. Edge computing nodes have historically been very purpose-specific and use-case dependent to environments and workloads in order to meet specific requirements and keep costs down. However, workloads will continuously change and new ones will emerge, and existing edge compute nodes may not adequately cover additional use cases. Edge computing platforms need to be both special-purpose and extensible. While enterprises typically start their edge compute journey on a use-case basis, we expect that as the market matures, edge compute will increasingly be purchased on a vertical and horizontal basis to keep up with expanding use cases.

References:

The Amorphous “Edge” as in Edge Computing or Edge Networking?

Edge computing refuses to mature | Light Reading

Multi-access Edge Computing (MEC) Market, Applications and ETSI MEC Standard-Part I

ETSI MEC Standard Explained – Part II

Lumen Technologies expands Edge Computing Solutions into Europe