
Verizon to build new, long-haul, high-capacity fiber pathways to connect AWS data centers

Verizon Business has announced a new Verizon AI Connect deal with Amazon Web Services (AWS) to provide resilient high-capacity, low-latency network infrastructure essential for the next wave of AI innovation. As part of the deal, Verizon will build new, long-haul, high-capacity fiber pathways to connect AWS data center locations. This will enable AWS to continue to deliver and scale its secure, reliable, and high-performance cloud services for customers building and deploying advanced AI applications at scale.

These new fiber segments mark a significant commitment in Verizon’s network buildout, intended to help the AI ecosystem carry the exponential growth in data traffic driven by generative AI. The Verizon AI Connect solution will provide AWS with resilient network paths that will enhance the performance and reliability of AI workloads, underpinned by Verizon’s award-winning network. The Verizon-AWS collaboration also encompasses joint development of private mobile edge computing solutions that provide secure, dedicated connectivity for enterprise customers. These existing collaborations have delivered significant value across multiple industries, from manufacturing and healthcare to retail and entertainment, by combining Verizon’s powerful network infrastructure with AWS’s comprehensive cloud services.

“AI will be essential to the future of business and society, driving innovation that demands a network to match,” said Scott Lawrence, SVP and Chief Product Officer, Verizon Business. “This deal with Amazon demonstrates our continued commitment to meet the growing demands of AI workloads for the businesses and developers building our future.”

“The next wave of innovation will be driven by generative AI, which requires a combination of secure, scalable cloud infrastructure and flexible, high-performance networking,” said Prasad Kalyanaraman, vice president, AWS Infrastructure Services. “By working with Verizon, AWS will enable high-performance network connections that ensure customers across every industry can build and deliver compelling, secure, and reliable AI applications at scale. This collaboration builds on our long-standing commitment to provide customers with the most secure, powerful, and efficient cloud infrastructure available today.”

This initiative strengthens Verizon’s long-standing strategic relationship with AWS. The companies have already established several key engagements, including Verizon’s adoption of AWS as a preferred strategic public cloud provider for its digital transformation initiatives. It should be noted, however, that AWS also uses other major carriers and dark fiber providers, such as Lumen Technologies, Zayo and AT&T, to maintain a highly redundant and diverse global inter-data center network.

This deal highlights how telecommunications companies are becoming critical enablers of the AI-driven economy by investing in the foundational fiber optic infrastructure required for large-scale AI processing.

References:

https://www.verizon.com/about/news/verizon-business-and-aws-new-fiber-deal

Verizon transports 1.2 terabytes per second of data across a single wavelength

Verizon, AWS and Bloomberg media work on 4K video streaming over 5G with MEC

Amazon AWS and Verizon Business Expand 5G Collaboration with Private MEC Solution

Lumen deploys 400G on a routed optical network to meet AI & cloud bandwidth demands

Big Tech and VCs invest hundreds of billions in AI while salaries of AI experts reach the stratosphere

Introduction:

Two and a half years after OpenAI set off the generative artificial intelligence (AI) race with the release of ChatGPT, big tech companies are accelerating their AI spending, pumping hundreds of billions of dollars into a frantic effort to create systems that can mimic or even exceed the abilities of the human brain. The biggest areas of AI spending are data centers, salaries for experts, and VC investments. Meanwhile, the UAE is building one of the world’s largest AI data centers, while SoftBank CEO Masayoshi Son believes that AI will surpass human-level cognitive abilities (Artificial General Intelligence, or AGI) within a few years, and that Artificial Super Intelligence (ASI) will surpass human intelligence by a factor of 10,000 within the next 10 years.

AI Data Center Build-out Boom:

The tech industry’s giants are building AI data centers that can cost more than $100 billion and will consume more electricity than a million American homes. Meta, Microsoft, Amazon and Google have told investors that they expect to spend a combined $320 billion on infrastructure this year, more than twice what they spent two years ago. Much of that will go toward building new data centers.

As OpenAI and its partners build a roughly $60 billion data center complex for AI in Texas and another in the Middle East, Meta is erecting a facility in Louisiana that will be twice as large. Amazon is going even bigger with a new campus in Indiana. Amazon’s partner, the AI start-up Anthropic, says it could eventually use all 30 of the data centers on this 1,200-acre campus to train a single AI system. Even if Anthropic’s progress stops, Amazon says it will use those 30 data centers to deliver AI services to customers.

Amazon is building a data center complex in New Carlisle, Ind., for its work with the AI company Anthropic. (Photo credit: AJ Mast for The New York Times)

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

Stargate UAE:

OpenAI is partnering with United Arab Emirates firm G42 and others to build a huge artificial-intelligence data center in Abu Dhabi, UAE. The project, called Stargate UAE, is part of a broader push by the U.A.E. to become one of the world’s biggest funders of AI companies and infrastructure—and a hub for AI jobs. The Stargate project is led by G42, an AI firm controlled by Sheikh Tahnoon bin Zayed al Nahyan, the U.A.E. national-security adviser and brother of the president. As part of the deal, an enhanced version of ChatGPT would be available for free nationwide, OpenAI said.

The first 200-megawatt chunk of the data center is due to be completed by the end of 2026, while the remainder of the project hasn’t been finalized. The buildings’ construction will be funded by G42, and the data center will be operated by OpenAI and tech company Oracle, G42 said. Other partners include a global tech investor, AI/GPU chip maker Nvidia, and network-equipment company Cisco.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

SoftBank and ASI:

Not wanting to be left behind, SoftBank, led by CEO Masayoshi Son, has made massive investments in AI and has a bold vision for its future development. Son has expressed a strong belief that Artificial Super Intelligence (ASI), surpassing human intelligence by a factor of 10,000, will emerge within the next 10 years. To that end, SoftBank has:

- Made significant investments in OpenAI, with planned investments reaching approximately $33.2 billion. Son considers OpenAI a key partner in realizing SoftBank’s ASI vision.

- Acquired Ampere Computing (a chip designer) for $6.5 billion to strengthen its AI computing capabilities.

- Invested in the Stargate Project alongside OpenAI, Oracle, and MGX. Stargate aims to build large AI-focused data centers in the U.S., with a planned investment of up to $500 billion.

Son predicts that AI will surpass human-level cognitive abilities (AGI) within a few years, followed within a decade by ASI that is 10,000 times smarter than humans. He believes this progress is being driven by advances in models like OpenAI’s o1, which can “think” for longer before responding.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

Super High Salaries for AI Researchers:

Salaries for AI experts are going through the roof. OpenAI, Google DeepMind, Anthropic, Meta, and NVIDIA are paying AI researchers more than $300,000 in base salary, plus bonuses and stock options. Other companies, such as Netflix, Amazon, and Tesla, are also heavily invested in AI and offer competitive compensation packages.

Meta has been offering compensation packages worth as much as $100 million per person. The owner of Facebook made more than 45 offers to researchers at OpenAI alone, according to a person familiar with these approaches. Meta CTO Andrew Bosworth implied that only a few people, for very senior leadership roles, may have been offered that kind of money, but clarified that “the actual terms of the offer” were not a “sign-on bonus. It’s all these different things.” Tech companies typically pay senior leaders the biggest chunks of their compensation as restricted stock unit (RSU) grants, dependent on either tenure or performance metrics. A four-year total pay package worth about $100 million for a very senior leader is not inconceivable for Meta: most of Meta’s named officers, including Bosworth, have earned total compensation of between $20 million and nearly $24 million per year for years.
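The “not inconceivable” judgment rests on simple arithmetic. A minimal sketch, assuming the roughly $100 million package is spread evenly over its four years (actual RSU grants vest on tenure- or performance-based schedules, so real yearly values will differ); the helper name `annualized` is illustrative only:

```python
# Worked arithmetic for the Meta compensation comparison.
# Assumption: the package is spread evenly over four years; real RSU
# grants vest on schedules tied to tenure or performance.

def annualized(total_package_usd: float, years: int) -> float:
    """Average yearly value of a multi-year pay package."""
    return total_package_usd / years

package = 100_000_000   # reported upper-end offer, USD
years = 4               # four-year package, per the text
per_year = annualized(package, years)

# Named officers' yearly total compensation: $20M to nearly $24M.
officer_low, officer_high = 20_000_000, 24_000_000

print(f"Annualized package: ${per_year / 1e6:.1f}M per year")
print(f"Named-officer range: ${officer_low / 1e6:.0f}M to ${officer_high / 1e6:.0f}M per year")
print(f"Gap over the top of the range: ${(per_year - officer_high) / 1e6:.1f}M")
```

Spread evenly, the package works out to about $25 million a year, only slightly above what Meta’s named officers already earn annually.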

Meta CEO Mark Zuckerberg on Monday announced the company’s new artificial intelligence organization, Meta Superintelligence Labs, to its employees, according to an internal post reviewed by The Information. The organization brings together Meta’s existing AI teams, such as its Fundamental AI Research lab, as well as “a new lab focused on developing the next generation of our models,” Zuckerberg said in the post. Scale AI CEO Alexandr Wang has joined Meta as its Chief AI Officer and will partner with former GitHub CEO Nat Friedman to lead the organization. Friedman will lead Meta’s work on AI products and applied research.

“I’m excited about the progress we have planned for Llama 4.1 and 4.2,” Zuckerberg said in the post. “In parallel, we’re going to start research on our next generation models to get to the frontier in the next year or so,” he added.

On Thursday, researcher Lucas Beyer confirmed he was leaving OpenAI to join Meta along with the two others who led OpenAI’s Zurich office. He tweeted: “1) yes, we will be joining Meta. 2) no, we did not get 100M sign-on, that’s fake news.” (Beyer politely declined to comment further on his new role to TechCrunch.) Beyer’s expertise is in computer vision AI. That aligns with what Meta is pursuing: entertainment AI rather than productivity AI, as Bosworth reportedly said in an internal meeting. Meta has already staked out that area with its Quest VR headsets and its Ray-Ban and Oakley AI glasses.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

VC investments in AI are off the charts:

Venture capitalists are sharply increasing their AI investments. U.S. investment in AI companies rose to $65 billion in the first quarter, up 33% from the previous quarter and up 550% from the quarter before ChatGPT came out in 2022, according to data from PitchBook, which tracks the industry.
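Percentage increases are easy to misread, so it can help to back out the baseline figures they imply. A minimal sketch, assuming “up 550%” means the first-quarter total is 6.5 times the pre-ChatGPT quarter (a 550% increase on the base, not 5.5 times it); the helper name `implied_base` is illustrative only:

```python
# Back out the earlier quarterly totals implied by the PitchBook figures.
# Assumption: "up X%" means current = base * (1 + X / 100).

def implied_base(current: float, pct_increase: float) -> float:
    """Return the earlier value implied by `current` being `pct_increase` percent higher."""
    return current / (1 + pct_increase / 100)

q1_total = 65.0  # U.S. AI investment in Q1, USD billions

prev_quarter = implied_base(q1_total, 33)    # "up 33% from the previous quarter"
pre_chatgpt = implied_base(q1_total, 550)    # "up 550% from the quarter before ChatGPT"

print(f"Implied previous quarter: ~${prev_quarter:.1f}B")
print(f"Implied pre-ChatGPT quarter: ~${pre_chatgpt:.1f}B")
print(f"Growth multiple since then: {q1_total / pre_chatgpt:.1f}x")
```

Under that reading, the figures imply roughly $49 billion in the previous quarter and about $10 billion in the quarter before ChatGPT launched.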

This astounding VC spending, critics argue, comes with a huge risk. A.I. is arguably more expensive than anything the tech industry has tried to build, and there is no guarantee it will live up to its potential. But the bigger risk, many executives believe, is not spending enough to keep pace with rivals.

“The thinking from the big C.E.O.s is that they can’t afford to be wrong by doing too little, but they can afford to be wrong by doing too much,” said Jordan Jacobs, a partner with the venture capital firm Radical Ventures. “Everyone is deeply afraid of being left behind,” said Chris V. Nicholson, an investor with the venture capital firm Page One Ventures who focuses on A.I. technologies.

Indeed, a significant driver of investment has been a fear of missing out on the next big thing, leading to VCs pouring billions into AI startups at “nosebleed valuations” without clear business models or immediate paths to profitability.

Conclusions:

Big tech companies and VCs acknowledge that they may be overestimating A.I.’s potential. Developing and implementing AI systems, especially large language models (LLMs), is incredibly expensive due to hardware (GPUs), software, and expertise requirements. One of the chief concerns is that revenue for many AI companies isn’t matching the pace of investment. Even major players like OpenAI reportedly face significant cash burn problems. But even if the technology falls short, many executives and investors believe, the investments they’re making now will be worth it.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://www.nytimes.com/2025/06/27/technology/ai-spending-openai-amazon-meta.html

Meta is offering multimillion-dollar pay for AI researchers, but not $100M ‘signing bonuses’

https://www.theinformation.com/briefings/meta-announces-new-superintelligence-lab

OpenAI partners with G42 to build giant data center for Stargate UAE project

AI adoption to accelerate growth in the $215 billion Data Center market

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Networking chips and modules for AI data centers: Infiniband, Ultra Ethernet, Optical Connections

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Google Cloud targets telco network functions, while AWS and Azure are in holding patterns

Overview:

Network operators have used public clouds for analytics and IT, including their business and operational support systems, but the vast majority have been reluctant to rely on hyperscaler public clouds to host their network functions. However, there have been a few exceptions:

1. AWS counts Boost Mobile, Dish Network, Swisscom and Telefónica Germany as network operators running part of their 5G networks in its public cloud. In a cloud-native 5G standalone (SA) core network, the network functions are virtualized and run as software, rather than relying on dedicated hardware.

a] Dish Network is using Nokia’s cloud-native 5G standalone core software, deployed on the AWS public cloud. This includes software for subscriber data management, device management, packet core, voice and data core, and integration services. Dish uses several AWS services, including Regions, Local Zones and Outposts, to host its 5G core network and related components.

b] Swisscom is migrating its core applications, including OSS/BSS and portions of its 5G core, to AWS according to Business Wire. This is part of a broader digital transformation strategy to modernize its infrastructure and services.

c] Telefónica Germany (O2 Telefónica) has moved its 5G core network to Amazon Web Services (AWS). The move, made in collaboration with Nokia, makes it the first telecom company to switch an existing 5G core to a public cloud provider. The operator launched its 5G cloud core, built entirely in the cloud, in July 2024, initially serving around one million subscribers.

2. Microsoft’s Azure cloud is running the 5G core networks of AT&T and the Middle East’s Etisalat. AT&T is using Microsoft’s Azure Operator Nexus platform to run its 5G core network, including both standalone (SA) and non-standalone (NSA) deployments, according to AT&T and Microsoft. This move is part of a strategic partnership between the two companies in which AT&T is shifting its 5G mobile network to the Microsoft cloud. However, AT&T’s 5G core network is not yet commercially available nationwide.

3. Ericsson has partnered with Google Cloud to offer 5G core as a service (5GCaaS) leveraging Google Cloud’s infrastructure. This allows operators to deploy and manage their 5G core network functions on Google’s cloud, rather than relying solely on traditional on-premises infrastructure. This Ericsson on-demand service, recently launched with Google, seems aimed mainly at smaller telcos keen to avoid big upfront costs, or at specific scenarios. To address much bigger needs, Google has an AWS Outposts competitor it markets under the brand Google Distributed Cloud (GDC).

A serious concern with this Ericsson-Google offering is cloud provider lock-in, i.e., that a telco would not be able to move the 5GCaaS provided by Ericsson to an alternative cloud platform. Going “native,” in this case, means building on top of Google-specific technologies, which rules out any prospect of a “lift and shift” to AWS, Microsoft or someone else, said Eric Parsons, Ericsson’s vice president of emerging segments in core networks, on a recent call with Light Reading.

……………………………………………………………………………………………………………………………………………………………………….

Google Cloud for Network Functions:

Angelo Libertucci, Google’s global head of telecom, told Light Reading that the “timing is right” for a Google campaign targeting telco networks after years of sluggish industry progress. “The pressures that telcos are dealing with – the higher capex, lower ARPU [average revenue per user], competitiveness – it’s been a tough two years and there have been a number of layoffs, at least in North America,” he said at last week’s Digital Transformation World event in Copenhagen.

“We run the largest private network on the planet,” said Libertucci. “We have over 2 million miles of fiber.” Services for more than a billion users are supported “with a fraction of the people that even the smallest regional telcos have, and that’s because everything we do is automated,” he claimed.

“There haven’t been that many network functions that run in the cloud – you can probably name them on less than four fingers,” he said. “So we don’t think we’ve really missed the boat yet on that one.” Indeed, most network functions are still deployed on telco premises (aka central offices).

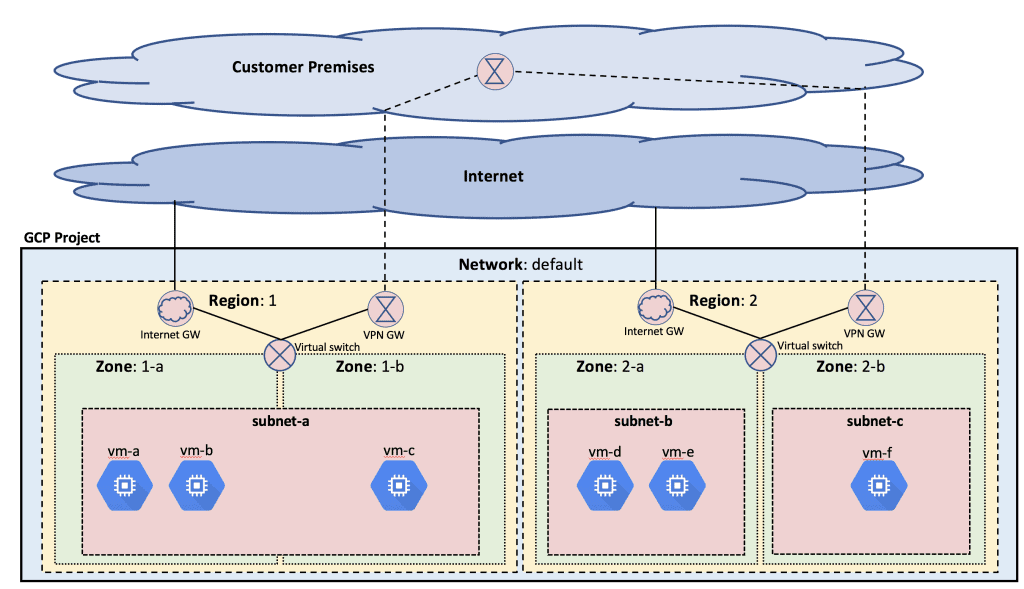

Image Credit: Google Cloud Platform

Deutsche Telekom partnered with Google earlier this year to build an agentic AI called RAN Guardian. Built using Gemini 2.0 in Vertex AI from Google Cloud, the agent can analyze network behavior, detect performance issues, and implement corrective actions without manual intervention, with the aim of improving network reliability, reducing operational costs, and enhancing customer experiences. Deutsche Telekom keeps the network data at its own facilities but relies on interconnection to Google Cloud for these functions.
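The assess, detect and act cycle described for RAN Guardian is a closed control loop. The sketch below is a deliberately simplified, rule-based stand-in for such a loop and is not how RAN Guardian works: the real agent uses Gemini 2.0 to reason about network behavior, whereas here every KPI name (`drop_rate_pct`, `prb_util_pct`), threshold, and action string is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

@dataclass
class CellKpi:
    cell_id: str          # hypothetical cell identifier
    drop_rate_pct: float  # hypothetical KPI: share of sessions dropped
    prb_util_pct: float   # hypothetical KPI: physical resource block utilization

# Hypothetical thresholds, for illustration only.
DROP_RATE_LIMIT = 2.0
PRB_UTIL_LIMIT = 85.0

def detect(kpi: CellKpi) -> list[str]:
    """Detect step: return the issues found for one cell."""
    issues = []
    if kpi.drop_rate_pct > DROP_RATE_LIMIT:
        issues.append("high_drop_rate")
    if kpi.prb_util_pct > PRB_UTIL_LIMIT:
        issues.append("congestion")
    return issues

def plan_action(issue: str) -> str:
    """Act step: map an issue to a placeholder corrective action (not a real RAN command)."""
    return {
        "high_drop_rate": "adjust_handover_parameters",
        "congestion": "rebalance_load_to_neighbors",
    }[issue]

def control_loop(samples: list[CellKpi]) -> list[tuple[str, str]]:
    """One pass of assess -> detect -> act over a batch of KPI samples."""
    actions = []
    for kpi in samples:
        for issue in detect(kpi):
            actions.append((kpi.cell_id, plan_action(issue)))
    return actions

samples = [
    CellKpi("cell-A", drop_rate_pct=0.8, prb_util_pct=60.0),
    CellKpi("cell-B", drop_rate_pct=3.1, prb_util_pct=91.0),
]
print(control_loop(samples))
```

In a real deployment, the act step would go through the operator’s own network management interfaces, and an agentic system would replace the fixed thresholds with model-driven analysis; both are outside the scope of this sketch.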

For operators, the question becomes: “Do I then decide to keep it (network functions and data) on-prem and maintain that pre-processing pipeline that I have? Or is there a cost benefit to just run it in cloud, because then you have all the native integration? You don’t have any interconnect, you have all the data for any use case that you ever wanted or could think of. It’s much easier and much more seamless,” Libertucci said. Such autonomous networking, in his view, is now the killer use case for the public cloud.

Yet many telco executives believe that public cloud facilities are incapable of handling certain network functions. European telcos, including BT, Deutsche Telekom, Orange and Vodafone, have invested in their own private cloud platforms for telco workloads. Also, regulators in some countries may block operators from using public clouds. BT said this year that local legislation now prevents it from using the public cloud for network functions. European authorities increasingly talk of the need for a “sovereign cloud” under the full control of local players.

Google does claim to have a set of “sovereign cloud” products that ensure data is stored in the country where the telco operates. “We have fully air-gapped sovereign cloud offerings with Google Cloud binaries that we’ve done in partnership with telcos for years now,” said Libertucci. The open question is whether these offerings will always meet that definition. “If sovereign means you can’t use an American-owned organization, then that’s another part of the definition that somehow we will have to find a way to address,” he added. “If you are cloud-native, it’s supposed to be easier to move to any cloud, but with telco it’s not that simple because it’s a very performance-oriented workload,” said Libertucci.

What’s likely, then, is that operators will assign whole regions to specific combinations of public cloud providers and telco vendors, he thinks, as they have done on the network side. “You see telcos awarding a region to Huawei and another to Ericsson with complete separation between them. They might choose to go down that route with network vendors as well and so you may have an Ericsson and Google part of the network.”

“We’re a platform company, we’re a data company and we’re an AI company,” said Libertucci. “I think we’re happy now with being a platform others develop on.”

………………………………………………………………………………………………………………………………………………………………………………….

Cloud RAN Disappoints:

Outside a trial with Ericsson almost two years ago, there is not much sign of Google activity in cloud RAN, the use of general-purpose chips and cloud platforms to support RAN workloads. “So far, no one’s really pushed us down into that area,” said Libertucci. AWS, by contrast, has this year begun to show off an Outposts server built around one of its own Graviton central processing units for cloud RAN. Currently, however, it does not appear to be supporting a cloud RAN deployment for any telco.

………………………………………………………………………………………………………………………………………………………………………………

References:

https://www.lightreading.com/cloud/google-preps-public-cloud-charge-at-telecom-as-microsoft-wobbles

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Ericsson revamps its OSS/BSS with AI using Amazon Bedrock as a foundation

At this week’s TM Forum-organized Digital Transformation World (DTW) event in Copenhagen, Ericsson has given its business and operations support systems (BSS/OSS) portfolio a complete AI makeover. This BSS/OSS revamp aims to improve operational efficiency, boost business growth, and elevate customer experiences. It includes a Gen-AI Lab, where telcos can try out their latest BSS/OSS-related ideas; a Telco Agentic AI Studio, where developers are invited to come and build generative AI products for telcos; and a range of Ericsson’s own Telco IT AI apps. Underpinning all this is the Telco IT AI Engine, which handles various tasks related to BSS/OSS orchestration.

Ericsson is investing to enable CSPs to make a real impact with AI, intent and automation. AI is now embedded throughout the portfolio, and the other updates span five critical, interlinked areas of a CSP’s operational transformation, each based on a clear rationale and vision for the value it generates. Ericsson cites the following benefits for telcos:

- Data – Make your data more useful. Introducing Telco DataOps Platform. An evolution from the existing Ericsson Mediation, the platform enables unified data collection, processing, management, and governance, removing silos and complexity to make data more useful across the whole business, and fuel effective AI to run their business and operations more smoothly.

- Cloud and IT – Stay ahead of the business. Introducing Ericsson Intelligent IT Suite. A holistic end-to-end approach supporting OSS/BSS evolution designed for Telco scale to accelerate delivery, streamline operations, and empower teams with the tools to unlock value from day one and beyond. It enables CSPs to embrace innovative transformative approaches that deliver real-time business agility and impact to stay ahead of business demands in rapidly evolving OSS/BSS landscapes.

- Monetization – Make sure you get paid. Introducing Ericsson Charging and Billing Evolved. A cloud-native monetization platform that enables real-time charging and billing for multi-sided business models. It is powered by cutting-edge AI capabilities that make it easy to accelerate partner-led growth, launch and monetize enterprise services efficiently, and capture revenue across all business lines at scale.

- Service Orchestration – Deliver as fast as you can sell. Upgraded Ericsson Service Orchestration and Assurance with Agentic AI: Uses AI and intent to automatically set up and manage services based on a CSP’s business goals, providing a robust engine for transforming to autonomous networks. It empowers CSPs to cut out manual steps and provides the infrastructure to launch and scale differentiated connectivity services.

- Core Commerce – Be easy to buy from. AI-enabled core commerce. Streamline selling with intelligent offer creation. Key capabilities include efficient offering design through a Gen-AI capable product configuration assistant and guided selling using an intelligent telco-specific CPQ for seamless ‘Quote to Cash’ processes, supported by a CRM-agnostic approach. CSPs can launch tailored enterprise solutions faster and co-create offers with partners, all while delivering seamless omni-channel experiences.

Grameenphone, a Bangladeshi telco with more than 80 million subscribers, is an Ericsson BSS/OSS customer. “They can’t do massive investments in areas that aren’t going to give a return,” said Jason Keane, the head of Ericsson’s business and operational support systems portfolio, who noted the low average revenue per user (ARPU) in the Bangladeshi telecom market. The technologies developed by Ericsson are helping Grameenphone’s subscribers with top-ups, bill payments and operations issues.

“What they’re saying is we want to enable our customers to have a fast, seamless experience, where AI can help in some of the interaction flows between external systems,” he said. “AI itself isn’t free. You’ve got to pay your consumption, and it can add up if you don’t use it correctly.”

To date, very few companies have seen financial benefits from AI in the form of either higher sales or lower costs. The ROI just isn’t there. If organizations end up spending more on AI systems than they would on manual effort to achieve the same results, money is wasted. Another issue is the poor quality of telco data, which often can’t be used effectively to train AI agents.
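Keane’s warning that consumption costs “can add up,” and the point that AI can end up costing more than manual effort, both come down to a break-even calculation. A minimal sketch, assuming simple per-token pricing; every number below (interaction volume, tokens per interaction, price per million tokens, cost of the manual work avoided) is hypothetical, and the function names are illustrative only:

```python
# Hypothetical break-even comparison between AI consumption cost and the
# manual effort it replaces. All inputs are illustrative assumptions.

def ai_cost(interactions: int, tokens_per_interaction: int,
            usd_per_million_tokens: float) -> float:
    """Monthly AI spend under simple per-token pricing."""
    return interactions * tokens_per_interaction * usd_per_million_tokens / 1_000_000

def manual_cost(interactions: int, usd_per_interaction: float) -> float:
    """Monthly cost of handling the same interactions manually."""
    return interactions * usd_per_interaction

def break_even_tokens(usd_per_interaction: float, usd_per_million_tokens: float) -> float:
    """Tokens per interaction at which AI and manual handling cost the same."""
    return usd_per_interaction / usd_per_million_tokens * 1_000_000

volume = 1_000_000   # interactions per month (hypothetical)
manual_unit = 0.02   # USD of manual effort avoided per interaction (hypothetical)
price = 5.0          # USD per million tokens (hypothetical)

baseline = manual_cost(volume, manual_unit)
for tokens in (3_000, 6_000):  # a lean design vs. a verbose one
    spend = ai_cost(volume, tokens, price)
    verdict = "saves" if spend < baseline else "wastes"
    print(f"{tokens} tokens/interaction: AI ${spend:,.0f} vs manual ${baseline:,.0f} -> {verdict} money")

print(f"Break-even: ~{break_even_tokens(manual_unit, price):,.0f} tokens per interaction")
```

With these made-up inputs, doubling the tokens consumed per interaction turns a saving into a loss, which is the sense in which AI “can add up if you don’t use it correctly.”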

Ericsson’s booth at the DTW Ignite 2025 event in Copenhagen

………………………………………………………………………………………………………………………………………………………..

Ericsson appears to have been heavily reliant on Amazon Web Services (AWS) for the technologies it is advertising at DTW this week. Amazon Bedrock, a managed service for building generative AI applications with foundation models, is the foundation of the Gen-AI Lab and the Telco Agentic AI Studio. “We had to pick one, right?” said Keane. “I picked Amazon. It’s a good provider, and this is the model I do my development against.”

Regarding AI’s threat to the jobs of OSS/BSS workers, Light Reading’s Iain Morris wrote:

“Wider adoption by telcos of Ericsson’s latest technologies, and similar offerings from rivals, might be a big negative for many telco operations employees. At most immediate risk are the junior technicians or programmers dealing with basic code that can be easily handled by AI. But the senior programmers had to start somewhere, and even they don’t look safe. AI enthusiasts dream of what the TM Forum calls the fully autonomous network, when people are out of the loop and the operation is run almost entirely by machines.”

Ericsson has recognized that its OSS and BSS tools need to address the requirements of network operators that already have adopted, or soon will adopt, cloud-native processes and cloud-based horizontal IT platforms. Such operators also want to make extensive use of AI to automate back-office processes and to introduce autonomous network operations that reduce manual intervention and the time needed to address problems, while adding greater agility (as long as the right foundations are in place).

Mats Karlsson, Head of Solution Area Business and Operations Support Systems, Ericsson says: “What we are unveiling today illustrates a transformative step into industrializing Business and Operations Support Systems for the autonomous age. Using AI and automation, as well as our decades of knowledge and experience in our people, technology, processes – we get results. These changes will ensure we empower CSPs to unlock value precisely when and where it can be captured. We operate in a complex industry, one which is evidently in need of a focus on no nonsense OSS/BSS. These changes, and our commitment to continuous evolution for innovation, will help simplify it where possible, ensuring that CSPs can get on with their key goals of building better, more efficient services for their customers while securing existing revenue and striving for new revenue opportunities.”

Ahmad Latif Ali, Associate Vice President, EMEA Telecommunications Insights at IDC says: “Our recent research, featured in the IDC InfoBrief “Mapping the OSS/BSS Transformation Journey: Accelerate Innovation and Commercial Success,” highlights recurring challenges organizations faced in transformation initiatives, particularly the complex and often simultaneous evolution of systems, processes, and organizational structures. Ericsson’s continuous evolution of OSS/BSS addresses these key, interlinked transformation challenges head-on, paving the way for automation powered by advanced AI capabilities. This approach creates effective pathways to modernize OSS/BSS and supports meaningful progress across the transformation journey.”

References:

McKinsey: AI infrastructure opportunity for telcos? AI developments in the telecom sector

Telecom sessions at Nvidia’s 2025 AI developers GTC: March 17–21 in San Jose, CA

Quartet launches “Open Telecom AI Platform” with multiple AI layers and domains

Goldman Sachs: Big 3 China telecom operators are the biggest beneficiaries of China’s AI boom via DeepSeek models; China Mobile’s ‘AI+NETWORK’ strategy

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Allied Market Research: Global AI in telecom market forecast to reach $38.8 billion by 2031 with CAGR of 41.4% (from 2022 to 2031)

The case for and against AI in telecommunications; record quarter for AI venture funding and M&A deals

SK Group and AWS to build Korea’s largest AI data center in Ulsan

Amazon Web Services (AWS) is partnering with the SK Group to build South Korea’s largest AI data center. The two companies are expected to launch the project later this month and will hold a groundbreaking ceremony for the 100MW facility in August, according to state news service Yonhap.

Scheduled to begin operations in 2027, the AI Zone will empower organizations in Korea to develop innovative AI applications locally while leveraging world-class AWS services like Amazon SageMaker, Bedrock, and Q. SK Group expects to bolster Korea’s AI competitiveness and establish the region as a key hub for hyperscale infrastructure in Asia-Pacific through AI initiatives.

AWS provides on-demand cloud computing platforms and application programming interfaces (APIs) to individuals, businesses and governments on a pay-per-use basis. The data center will be built on a 36,000-square-meter site in the Mipo industrial complex in Ulsan, 305 kilometers southeast of Seoul. It will house 60,000 graphics processing units (GPUs) and have a power capacity of 100 megawatts, making it the country’s first AI infrastructure of such scale, the sources said.

Ryu Young-sang, chief executive officer (CEO) of SK Telecom Co., had announced the company’s plan to build a hyperscale AI data center equipped with 60,000 GPUs in collaboration with a global tech partner, during the Mobile World Congress (MWC) 2025 held in Spain in March.

SK Telecom plans to invest 3.4 trillion won (US$2.49 billion) in AI infrastructure by 2028, with a significant portion expected to be allocated to the data center project. SK Telecom, South Korea’s biggest mobile operator and 31% owned by the SK Group, will manage the project. “They have been working on the project, but the exact timeline and other details have yet to be finalized,” an SK Group spokesperson said.

The AI data center will be developed in two phases, with the initial 40MW phase to be completed by November 2027 and the full 100MW capacity to be operational by February 2029, the Korea Herald reported Monday. Once completed, the facility, powered by 60,000 graphics processing units, will have a power capacity of 103 megawatts, making it the country’s largest AI infrastructure, sources said.

SK Group appears to have chosen Ulsan as the site, considering its proximity to SK Gas’ liquefied natural gas combined heat and power plant, ensuring a stable supply of large-scale electricity essential for data center operations. The facility is also capable of utilizing LNG cold energy for data center cooling.

SKT last month released its revised AI pyramid strategy, targeting AI infrastructure including data centers, GPUaaS and customized data centers. It is also developing personal agents A. and Aster for consumers and AIX services for enterprise customers.

Globally, it has found partners through the Global Telecom Alliance, which it co-founded, and is collaborating with US firms Anthropic and Lambda.

SKT’s AI business unit is still small, however, recording just KRW156 billion ($115 million) in revenue in Q1, two-thirds of it from data center infrastructure. Its parent SK Group, which also includes memory chip giant SK Hynix and energy firm SK Innovation, reported $88 billion in revenue last year.

AWS, the world’s largest cloud services provider, has been expanding its footprint in Korea. It currently runs a data center in Seoul and began constructing its second facility in Incheon’s Seo District in late 2023. The company has pledged to invest 7.85 trillion won in Korea’s cloud computing infrastructure by 2027.

“When SK Group’s exceptional technical capabilities combine with AWS’s comprehensive AI cloud services, we’ll empower customers of all sizes, and across all industries here in Korea to build and innovate with safe, secure AI technologies,” said Prasad Kalyanaraman, VP of Infrastructure Services at AWS. “This partnership represents our commitment to Korea’s AI future, and I couldn’t be more excited about what we’ll achieve together.”

Earlier this month AWS launched its Taiwan cloud region, its 15th in Asia-Pacific, with plans to invest $5 billion in local cloud and AI infrastructure.

References:

https://en.yna.co.kr/view/AEN20250616004500320?section=k-biz/corporate

https://www.koreaherald.com/article/10510141

https://www.lightreading.com/data-centers/aws-sk-group-to-build-korea-s-largest-ai-data-center

Does AI change the business case for cloud networking?

For several years now, the big cloud service providers – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud – have tried to get wireless network operators to run their 5G SA core network, edge computing and various distributed applications on their cloud platforms. For example, Amazon’s AWS public cloud, Microsoft’s Azure for Operators, and Google’s Anthos for Telecom were intended to get network operators to run their core network functions in a hyperscaler cloud.

AWS had early success with Dish Network’s 5G SA core network, which runs all its functions in Amazon’s cloud with fully automated network deployment and operations.

Conversely, AT&T has yet to commercially deploy its 5G SA Core network on the Microsoft Azure public cloud. Also, users on AT&T’s network have experienced difficulties accessing Microsoft 365 and Azure services. Those incidents were often traced to changes within the network’s managed environment. As a result, Microsoft has drastically reduced its early telecom ambitions.

Several pundits now say that AI will significantly strengthen the business case for cloud networking by enabling more efficient resource management, advanced predictive analytics, improved security, and automation, ultimately leading to cost savings, better performance, and faster innovation for businesses utilizing cloud infrastructure.

“AI is already a significant traffic driver, and AI traffic growth is accelerating,” wrote analyst Brian Washburn in a market research report for Omdia (owned by Informa). “As AI traffic adds to and substitutes conventional applications, conventional traffic year-over-year growth slows. Omdia forecasts that in 2026–30, global conventional (non-AI) traffic will be about 18% CAGR [compound annual growth rate].”

Omdia forecasts 2031 as “the crossover point where global AI network traffic exceeds conventional traffic.”

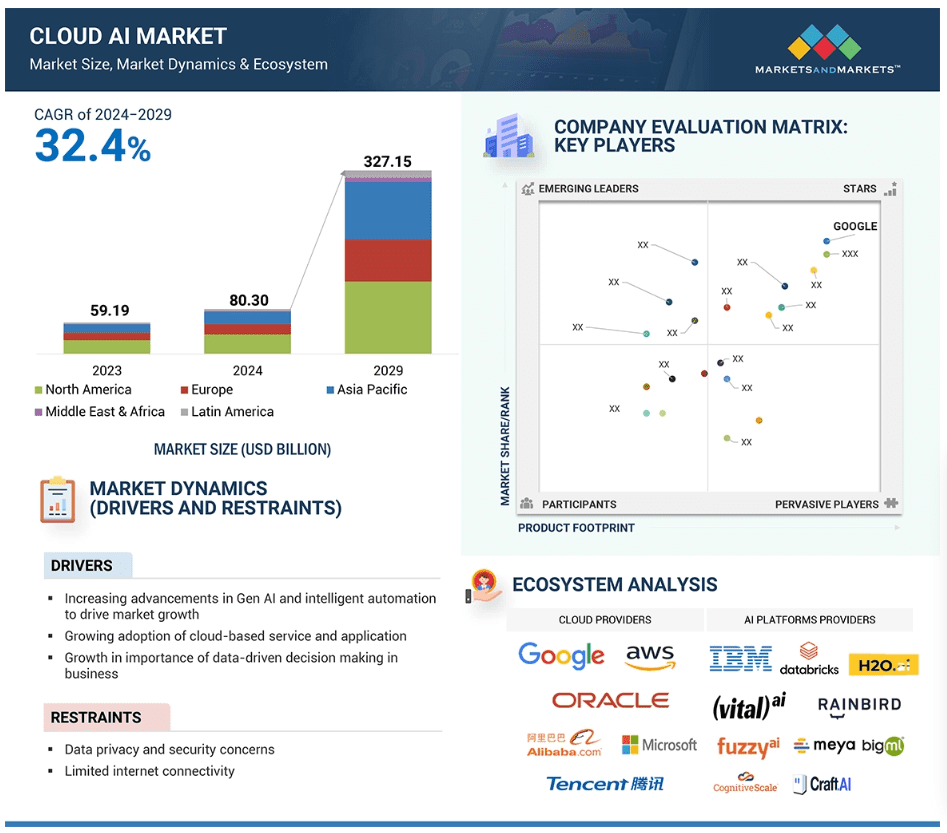

Markets & Markets forecasts the global cloud AI market (which includes cloud AI networking) will grow at a CAGR of 32.4% from 2024 to 2029.
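To put these compound annual growth rates in perspective, the short sketch below converts a CAGR into a total growth multiple. It assumes the Markets & Markets forecast compounds over five annual steps (2024 to 2029) and treats Omdia’s 2026–30 window as four steps (2026 to 2030); both step counts are an interpretation of the quoted ranges, not figures taken from the reports.

```python
def growth_factor(cagr: float, years: int) -> float:
    """Total growth multiple after compounding `cagr` for `years` annual steps."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Inverse of growth_factor: the constant annual rate that turns start into end."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Markets & Markets: 32.4% CAGR, assumed to compound over 2024 -> 2029 (5 steps).
    print(f"Cloud AI market multiple: {growth_factor(0.324, 5):.2f}x")  # prints 4.07x
    # Omdia: 18% CAGR for conventional traffic, assumed 2026 -> 2030 (4 steps).
    print(f"Conventional traffic multiple: {growth_factor(0.18, 4):.2f}x")  # prints 1.94x
```

On these assumptions, a 32.4% CAGR roughly quadruples a market in five years, while 18% a year roughly doubles conventional traffic in four.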

AI is said to enhance cloud networking in these ways:

- Optimized resource allocation: AI algorithms can analyze real-time data to dynamically adjust cloud resources like compute power and storage based on demand, minimizing unnecessary costs.

- Predictive maintenance: By analyzing network patterns, AI can identify potential issues before they occur, allowing for proactive maintenance and preventing downtime.

- Enhanced security: AI can detect and respond to cyber threats in real-time through anomaly detection and behavioral analysis, improving overall network security.

- Intelligent routing: AI can optimize network traffic flow by dynamically routing data packets to the most efficient paths, improving network performance.

- Automated network management: AI can automate routine network management tasks, freeing up IT staff to focus on more strategic initiatives.
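The anomaly detection mentioned under enhanced security can be as simple as comparing each new measurement with recent history. The sketch below is a plain statistical baseline (a rolling z-score), not a machine-learning model. The window size, threshold and traffic numbers are all hypothetical; AI-based systems would likely learn much richer behavioral baselines.

```python
from statistics import mean, pstdev


def detect_anomalies(samples: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices of samples whose z-score vs the trailing window exceeds threshold."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # Flat history: any deviation at all is treated as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        z = (samples[i] - mu) / sigma
        if abs(z) > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical per-minute traffic on one link, in Gbit/s, with a single spike.
    traffic = [10.0, 10.4, 9.8, 10.1, 10.2, 9.9, 10.3, 42.0, 10.0, 10.1]
    print(detect_anomalies(traffic))  # prints [7]
```

Once the spike enters the trailing window, it inflates the baseline. That is why the samples right after it are not flagged. One common refinement is to exclude flagged points from the baseline.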

The pitch is that AI will enable businesses to leverage the full potential of cloud networking by providing a more intelligent, adaptable, and cost-effective solution. Well, that remains to be seen. Google’s new global industry lead for telecom, Angelo Libertucci, told Light Reading:

“Now enter AI,” he said. “With AI … I really have a power to do some amazing things, like enrich customer experiences, automate my network, feed the network data into my customer experience virtual agents. There’s a lot I can do with AI. It changes the business case that we’ve been running.”

“Before AI, the business case was maybe based on certain criteria. With AI, it changes the criteria. And it helps accelerate that move [to the cloud and to the edge],” he explained. “So, I think that work is ongoing, and with AI it’ll actually be accelerated. But we still have work to do with both the carriers and, especially, the network equipment manufacturers.”

Google Cloud last week announced several new AI-focused agreements with companies such as Amdocs, Bell Canada, Deutsche Telekom, Telus and Vodafone Italy.

As IEEE Techblog reported here last week, Deutsche Telekom is using Google Cloud’s Gemini 2.0 in Vertex AI to develop a network AI agent called RAN Guardian. That AI agent can “analyze network behavior, detect performance issues, and implement corrective actions to improve network reliability and customer experience,” according to the companies.

And, of course, there’s all the buzz over AI RAN and we plan to cover expected MWC 2025 announcements in that space next week.

https://www.lightreading.com/cloud/google-cloud-doubles-down-on-mwc

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

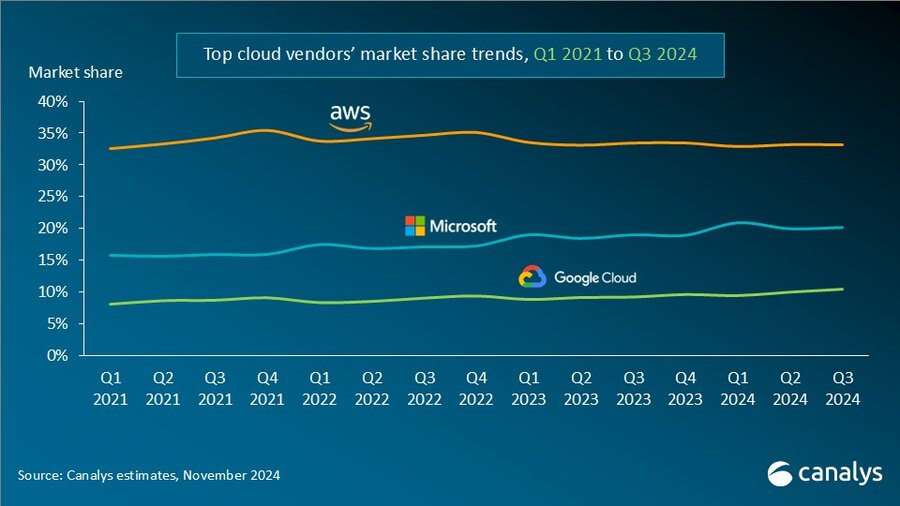

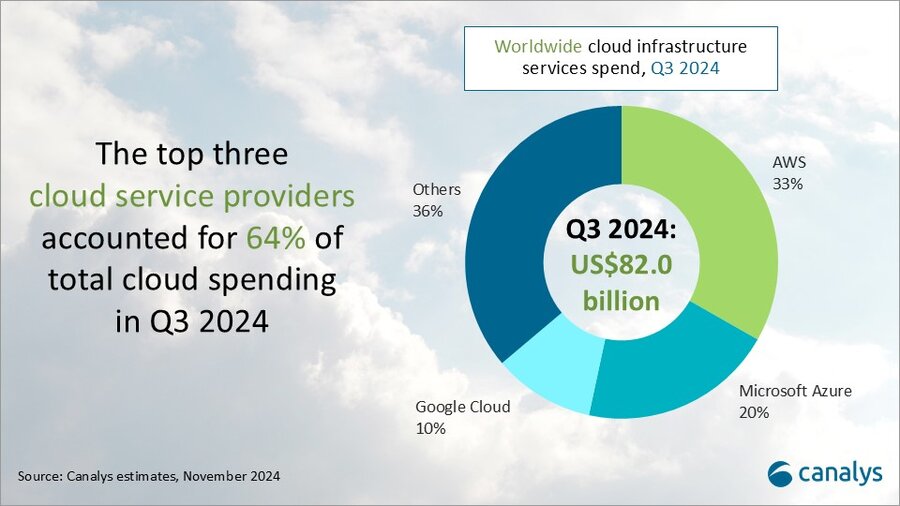

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

According to market research firm Canalys, global spending on cloud infrastructure services [1.] increased by 21% year on year, reaching US$82.0 billion in the 3rd quarter of 2024. Customer investment in the hyperscalers’ AI offerings fueled growth, prompting leading cloud vendors to escalate their investments in AI.

Note 1. Canalys defines cloud infrastructure services as services providing infrastructure (IaaS and bare metal) and platforms that are hosted by third-party providers and made available to users via the Internet.

The rankings of the top three cloud service providers – Amazon AWS, Microsoft Azure and Google Cloud – remained stable from the previous quarter, with these providers together accounting for 64% of total expenditure. Total combined spending with these three providers grew by 26% year on year, and all three reported sequential growth. Market leader AWS maintained a year-on-year growth rate of 19%, consistent with the previous quarter. That was outpaced by both Microsoft, with 33% growth, and Google Cloud, with 36% growth. In actual dollar terms, however, AWS outgrew both Microsoft and Google Cloud, increasing sales by almost US$4.4 billion on the previous year.
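AWS outgrew its faster-growing rivals in dollar terms because of simple arithmetic: a lower growth rate on a much larger base can still add more revenue. The sketch below back-calculates each provider’s year-on-year gain from the Canalys market size (US$82.0 billion) and the rounded Q3 2024 market shares Canalys reports (AWS 33%, Microsoft Azure 20%, Google Cloud 10%). Because the shares are rounded, the results are approximations.

```python
from dataclasses import dataclass

MARKET_Q3_2024 = 82.0  # Canalys: global cloud infrastructure services, US$ billion


@dataclass
class Provider:
    name: str
    share: float       # rounded Q3 2024 market share, as a fraction
    yoy_growth: float  # year-on-year revenue growth, as a fraction


def absolute_increase(market: float, provider: Provider) -> float:
    """Year-on-year revenue gain in US$ billion implied by share and growth rate."""
    current = market * provider.share
    year_ago = current / (1.0 + provider.yoy_growth)
    return current - year_ago


PROVIDERS = [
    Provider("AWS", 0.33, 0.19),
    Provider("Microsoft Azure", 0.20, 0.33),
    Provider("Google Cloud", 0.10, 0.36),
]

if __name__ == "__main__":
    for p in PROVIDERS:
        print(f"{p.name}: +US${absolute_increase(MARKET_Q3_2024, p):.1f} billion")
```

This gives roughly US$4.3 billion for AWS, US$4.1 billion for Microsoft and US$2.2 billion for Google Cloud. The AWS estimate is consistent with Canalys’ figure of almost US$4.4 billion once share rounding is allowed for.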

In Q3 2024, the cloud services market saw strong, steady growth. All three cloud hyperscalers reported positive returns on their AI investments, which have begun to contribute to their overall cloud business performance. These returns reflect a growing reliance on AI as a key driver for innovation and competitive advantage in the cloud.

With the increasing adoption of AI technologies, demand for high-performance computing and storage continues to rise, putting pressure on cloud providers to expand their infrastructure. In response, leading cloud providers are prioritizing large-scale investments in next-generation AI infrastructure. To mitigate the risks associated with under-investment – such as being unprepared for future demand or missing key opportunities – they have adopted over-investment strategies, ensuring their ability to scale offerings in line with the growing needs of their AI customers. Enterprises are convinced that AI will deliver an unprecedented boost in efficiency and productivity, so they are pouring money into hyperscalers’ AI solutions. Accordingly, cloud service providers’ capital expenditures (CAPEX) will sustain their rapid growth trajectories and are expected to continue on this path into 2025.

“Continued substantial expenditure will present new challenges, requiring cloud vendors to carefully balance their investments in AI with the cost discipline needed to fund these initiatives,” said Rachel Brindley, Senior Director at Canalys. “While companies should invest sufficiently in AI to capitalize on technological growth, they must also exercise caution to avoid overspending or inefficient resource allocation. Ensuring the sustainability of these investments over time will be vital to maintaining long-term financial health and competitive advantage.”

“On the other hand, the three leading cloud providers are also expediting the update and iteration of their AI foundational models, continuously expanding their associated product portfolios,” said Yi Zhang, Analyst at Canalys. “As these AI foundational models mature, cloud providers are focused on leveraging their enhanced capabilities to empower a broader range of core products and services. By integrating these advanced models into their existing offerings, they aim to enhance functionality, improve performance and increase user engagement across their platforms, thereby unlocking new revenue streams.”

Amazon Web Services (AWS) maintained its lead in the global cloud market in Q3 2024, capturing a 33% market share and achieving 19% year-on-year revenue growth. It continued to enhance and broaden its AI offerings by launching new models through Amazon Bedrock and SageMaker, including Anthropic’s upgraded Claude 3.5 Sonnet and Meta’s Llama 3.2. It reported a triple-digit year-on-year increase in AI-related revenue, outpacing its overall growth by more than three times. Over the past 18 months, AWS has introduced nearly twice as many machine learning and generative AI features as the combined offerings of the other leading cloud providers. In terms of capital expenditure, AWS announced plans to further increase investment, with projected spending of approximately US$75 billion in 2024. This investment will primarily be allocated to expanding technology infrastructure to meet the rising demand for AI services, underscoring AWS’ commitment to staying at the forefront of technological innovation and service capability.

Microsoft Azure remains the second-largest cloud provider, with a 20% market share and impressive annual growth of 33%. This growth was partly driven by AI services, which contributed approximately 12 percentage points of that increase. Over the past six months, use of Azure OpenAI has more than doubled, driven by increased adoption by both digital-native companies and established enterprises transitioning their applications from testing phases to full-scale production environments. To further enhance its offerings, Microsoft is expanding Azure AI by introducing industry-specific models, including advanced multimodal medical imaging models, aimed at providing tailored solutions for a broader customer base. Additionally, the company announced new cloud and AI infrastructure investments in Brazil, Italy, Mexico and Sweden to expand capacity in alignment with long-term demand forecasts.

Google Cloud, the third-largest provider, maintained a 10% market share, achieving robust year-on-year growth of 36%. It showed the strongest AI-driven revenue growth among the leading providers, with a clear acceleration compared with the previous quarter. As of September 2024, its revenue backlog increased to US$86.8 billion, up from US$78.8 billion in Q2, signaling continued momentum in the near term. Its enterprise AI platform, Vertex, has garnered substantial user adoption, with Gemini API calls increasing nearly 14-fold over the past six months. Google Cloud is actively seeking and developing new ways to apply AI tools across different scenarios and use cases. It introduced the GenAI Partner Companion, an AI-driven advisory tool designed to offer service partners personalized access to training resources, enhancing learning and supporting successful project execution. In Q3 2024, Google announced over US$7 billion in planned data center investments, with nearly US$6 billion allocated to projects within the United States.

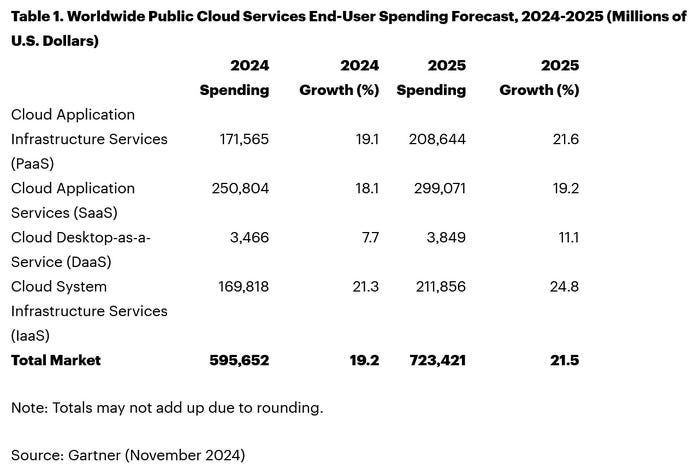

Separate statistics from Gartner corroborate hyperscale CAPEX optimism. Gartner predicts that worldwide end-user spending on public cloud services is on course to reach $723.4 billion in 2025, up from a projected $595.7 billion in 2024. All segments of the cloud market – platform-as-a-service (PaaS), software-as-a-service (SaaS), desktop-as-a-service (DaaS), and infrastructure-as-a-service (IaaS) – are expected to achieve double-digit growth.

While SaaS will be the biggest single segment, accounting for $299.1 billion, IaaS will grow the fastest, jumping 24.8 percent to $211.9 billion.
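As a sanity check on the Gartner figures, the sketch below derives three values: the overall 2024 to 2025 growth rate, SaaS’s share of 2025 spending, and the 2024 IaaS base implied by the 24.8 percent growth rate. It uses only the rounded numbers quoted here, so small differences from Gartner’s own percentages are to be expected.

```python
TOTAL_2024 = 595.7   # Gartner projection, US$ billion
TOTAL_2025 = 723.4   # Gartner forecast, US$ billion
SAAS_2025 = 299.1    # US$ billion
IAAS_2025 = 211.9    # US$ billion
IAAS_GROWTH = 0.248  # 24.8 percent


def pct_change(old: float, new: float) -> float:
    """Fractional change from old to new."""
    return new / old - 1.0


def prior_value(current: float, growth: float) -> float:
    """Value one period earlier, given the current value and its growth rate."""
    return current / (1.0 + growth)


if __name__ == "__main__":
    print(f"Total growth 2024->2025: {pct_change(TOTAL_2024, TOTAL_2025):.1%}")  # prints 21.4%
    print(f"SaaS share of 2025 total: {SAAS_2025 / TOTAL_2025:.1%}")  # prints 41.3%
    print(f"Implied 2024 IaaS spend: US${prior_value(IAAS_2025, IAAS_GROWTH):.1f} billion")  # prints 169.8
```

The rounded totals give about 21.4 percent growth, close to the 21.5 percent Gartner cites. SaaS comes to roughly 41 percent of 2025 spending, and IaaS would have grown from about US$169.8 billion.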

Like Canalys, Gartner also singles out AI for special attention. “The use of AI technologies in IT and business operations is unabatedly accelerating the role of cloud computing in supporting business operations and outcomes,” said Sid Nag, vice president analyst at Gartner. “Cloud use cases continue to expand with increasing focus on distributed, hybrid, cloud-native, and multicloud environments supported by a cross-cloud framework, making the public cloud services market achieve a 21.5 percent growth in 2025.”

……………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://canalys.com/newsroom/global-cloud-services-q3-2024

https://www.telecoms.com/public-cloud/ai-hype-fuels-21-percent-jump-in-q3-cloud-spending

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

MTN Consulting: Top Telco Network Infrastructure (equipment) vendors + revenue growth changes favor cloud service providers

IDC: Public Cloud software at 2/3 of all enterprise applications revenue in 2026; SaaS is essential!

IDC: Cloud Infrastructure Spending +13.5% YoY in 4Q-2021 to $21.1 billion; Forecast CAGR of 12.6% from 2021-2026

IDC: Worldwide Public Cloud Services Revenues Grew 29% to $408.6 Billion in 2021 with Microsoft #1?

Synergy Research: Microsoft and Amazon (AWS) Dominate IT Vendor Revenue & Growth; Popularity of Multi-cloud in 2021

Google Cloud revenues up 54% YoY; Cloud native security is a top priority

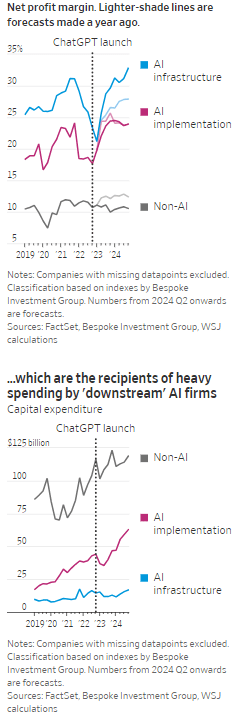

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

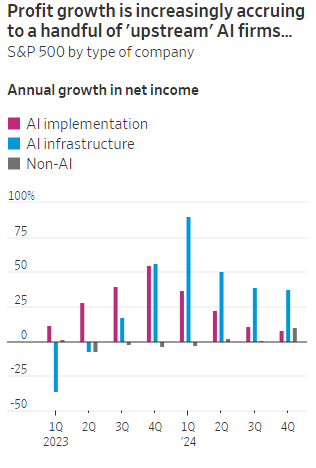

According to the Wall Street Journal, the AI industry has become an “Echo Chamber,” where huge capital spending by the AI infrastructure and application providers has fueled revenue and profit growth for everyone else. Market research firm Bespoke Investment Group has recently created baskets for “downstream” and “upstream” AI companies.

- The Downstream group covers “AI implementation”: firms that sell AI development tools, such as the large language models (LLMs) popularized by OpenAI’s ChatGPT since the end of 2022, or run products that can incorporate them. This includes Google/Alphabet, Microsoft, Amazon, Meta Platforms (FB), along with IBM, Adobe and Salesforce.

- Higher up the supply chain, the Upstream group consists of “AI infrastructure” providers, which sell AI chips, applications, data centers and training software. The undisputed leader is Nvidia, which has seen its sales triple in a year, but the group also includes other semiconductor companies, database developer Oracle and data center owners Equinix and Digital Realty.

The Upstream group of companies has posted profit margins that are far above what analysts expected a year ago. In the second quarter, and pending Nvidia’s results on Aug. 28, Upstream AI members of the S&P 500 are set to have delivered a 50% annual increase in earnings. For the remainder of 2024, they will be increasingly responsible for the profit growth that Wall Street expects from the stock market, even accounting for Intel’s huge problems and restructuring.

It should be noted that the lines between the two groups can be blurry, particularly when it comes to giants such as Amazon, Microsoft and Alphabet, which provide both AI implementation (e.g. LLMs) and infrastructure. Their cloud-computing businesses turned these companies into the early winners of the AI craze last year and reported breakneck growth during this latest earnings season. Crucially, it is their role as ultimate developers of AI applications that has led them to make huge capital expenditures, which are responsible for the profit surge in the rest of the ecosystem. So there is a definite trickle-down effect: the big tech players’ AI-directed CAPEX is boosting revenue and profits for the companies down the supply chain.

As the path for monetizing this technology gets longer and harder, the benefits seem to be increasingly accruing to companies higher up in the supply chain. Meta Platforms Chief Executive Mark Zuckerberg recently said the company’s coming Llama 4 language model will require 10 times as much computing power to train as its predecessor. Were it not for AI, revenues for semiconductor firms would probably have fallen during the second quarter, rather than rise 18%, according to S&P Global.

………………………………………………………………………………………………………………………………………………………..


A paper written by researchers from the likes of Cambridge and Oxford uncovered that the large language models (LLMs) behind some of today’s most exciting AI apps may have been trained on “synthetic data” or data generated by other AI. This revelation raises ethical and quality concerns. If an AI model is trained primarily or even partially on synthetic data, it might produce outputs lacking human-generated content’s richness and reliability. It could be a case of the blind leading the blind, with AI models reinforcing the limitations or biases inherent in the synthetic data they were trained on.

In this paper, the team coined the phrase “model collapse,” claiming that models trained this way will answer user prompts with low-quality outputs. The idea of “model collapse” suggests a sort of unraveling of the machine’s learning capabilities, where it fails to produce outputs with the informative or nuanced characteristics we expect. This poses a serious question for the future of AI development. If AI is increasingly trained on synthetic data, we risk creating echo chambers of misinformation or low-quality responses, leading to less helpful and potentially even misleading systems.
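A common toy illustration of model collapse, offered as a simplified sketch rather than a reproduction of the paper’s experiments, works as follows: fit a simple distribution to data, sample a new dataset from the fitted model, refit on those synthetic samples only, and repeat. The sketch below does this with a one-dimensional Gaussian. The sample size, number of generations and random seed are arbitrary assumptions. Each refit sees only a small, finite synthetic sample, so the fitted spread tends to shrink from one generation to the next. As a result, rare values from the tails of the original data gradually stop being produced.

```python
import random
from statistics import fmean, pstdev


def simulate_model_collapse(generations: int = 100, n: int = 10, seed: int = 0) -> list[float]:
    """Repeatedly fit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation at each generation.
    """
    rng = random.Random(seed)
    # Generation 0 trains on "real" data drawn from a standard normal N(0, 1).
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]
    fitted_stds = []
    for _ in range(generations):
        # "Training": maximum-likelihood fit of the mean and standard deviation.
        mu, sigma = fmean(data), pstdev(data)
        fitted_stds.append(sigma)
        # The next generation sees only synthetic samples from the fitted model.
        data = [rng.gauss(mu, sigma) for _ in range(n)]
    return fitted_stds


if __name__ == "__main__":
    stds = simulate_model_collapse()
    print(f"first fit: {stds[0]:.3f}, last fit: {stds[-1]:.2e}")
```

With only a handful of samples per generation, the last fitted standard deviation typically ends up orders of magnitude smaller than the first. The model has, in effect, forgotten the variety in the original data.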

……………………………………………………………………………………………………………………………………………

In a recent working paper, Massachusetts Institute of Technology (MIT) economist Daron Acemoglu argued that AI’s knack for easy tasks has led to exaggerated predictions of its power to enhance productivity in hard jobs. Also, some of the new tasks created by AI may have negative social value (such as design of algorithms for online manipulation). Indeed, data from the Census Bureau show that only a small percentage of U.S. companies outside of the information and knowledge sectors are looking to make use of AI.

References:

https://deepgram.com/learn/the-ai-echo-chamber-model-collapse-synthetic-data-risks

https://economics.mit.edu/sites/default/files/2024-04/The%20Simple%20Macroeconomics%20of%20AI.pdf

AI wave stimulates big tech spending and strong profits, but for how long?

AI winner Nvidia faces competition with new super chip delayed

SK Telecom and Singtel partner to develop next-generation telco technologies using AI

Telecom and AI Status in the EU

Vodafone: GenAI overhyped, will spend $151M to enhance its chatbot with AI

Data infrastructure software: picks and shovels for AI; Hyperscaler CAPEX

AI wave stimulates big tech spending and strong profits, but for how long?

Big tech companies have made it clear over the last week that they have no intention of slowing down their stunning levels of spending on artificial intelligence (AI), even though investors are getting worried that a big payoff is further down the line than most believe.

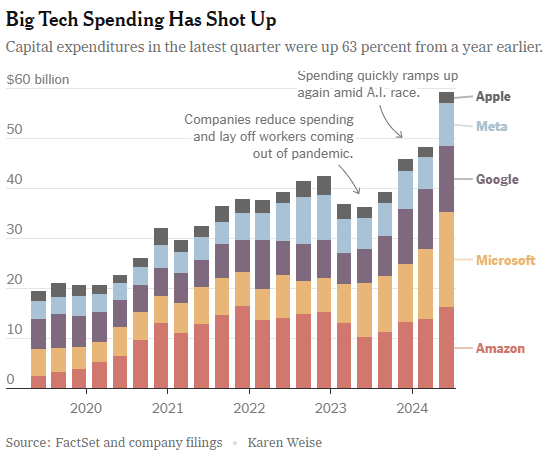

In the last quarter, Apple, Amazon, Meta, Microsoft and Google’s parent company Alphabet spent a combined $59 billion on capital expenses, 63% more than a year earlier and 161 percent more than four years ago. A large part of that was funneled into building data centers and packing them with new computer systems to build artificial intelligence. Only Apple has not dramatically increased spending, because it does not build the most advanced AI systems and is not a cloud service provider like the others.
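Working backward from those percentages shows the scale of the ramp. The sketch below assumes the 63% and 161% increases are measured against the same quarter one year and four years earlier. Its results are estimates derived from rounded figures, not reported numbers.

```python
def implied_base(current: float, pct_increase: float) -> float:
    """Earlier value implied by `current` being `pct_increase` percent higher."""
    return current / (1.0 + pct_increase / 100.0)


COMBINED_CAPEX = 59.0  # US$ billion: last quarter, five companies combined

if __name__ == "__main__":
    print(f"Year-earlier quarter: ~US${implied_base(COMBINED_CAPEX, 63):.1f} billion")  # prints 36.2
    print(f"Four years earlier:   ~US${implied_base(COMBINED_CAPEX, 161):.1f} billion")  # prints 22.6
```

That implies roughly US$36.2 billion a year earlier and about US$22.6 billion four years earlier.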

At the beginning of this year, Meta said it would spend more than $30 billion in 2024 on new tech infrastructure. In April, it raised that to $35 billion. On Wednesday, it increased the figure to at least $37 billion. CEO Mark Zuckerberg said Meta would spend even more next year. He said he would rather build too fast than build “too late” and allow his competitors to get a big lead in the A.I. race. Meta gives away the advanced A.I. systems it develops, but Mr. Zuckerberg still said it was worth it. “Part of what’s important about A.I. is that it can be used to improve all of our products in almost every way,” he said.

………………………………………………………………………………………………………………………………………………………..

This new wave of Generative A.I. is incredibly expensive. The systems work with vast amounts of data and require sophisticated computer chips and new data centers to develop the technology and serve it to customers. The companies are seeing some sales from their A.I. work, but it is barely moving the needle financially.

In recent months, several high-profile tech industry watchers, including Goldman Sachs’s head of equity research and a partner at the venture firm Sequoia Capital, have questioned when or if A.I. will ever produce enough benefit to bring in the sales needed to cover its staggering costs. It is not clear that AI will come close to having the same impact as the internet or mobile phones, Goldman’s Jim Covello wrote in a June report.

“What $1 trillion problem will AI solve?” he wrote. “Replacing low wage jobs with tremendously costly technology is basically the polar opposite of the prior technology transitions I’ve witnessed in my 30 years of closely following the tech industry.”

“The reality right now is that while we’re investing a significant amount in the A.I. space and in infrastructure, we would like to have more capacity than we already have today,” said Andy Jassy, Amazon’s chief executive. “I mean, we have a lot of demand right now.”

That means buying land, building data centers and all the computers, chips and gear that go into them. Amazon executives put a positive spin on all that spending. “We use that to drive revenue and free cash flow for the next decade and beyond,” said Brian Olsavsky, the company’s finance chief.

There are plenty of signs the boom will persist. In mid-July, Taiwan Semiconductor Manufacturing Company, which makes most of the in-demand chips designed by Nvidia (the ONLY tech company that is now making money from AI – much more below) that are used in AI systems, said those chips would be in scarce supply until the end of 2025.

Mr. Zuckerberg said AI’s potential is super exciting. “It’s why there are all the jokes about how all the tech C.E.O.s get on these earnings calls and just talk about A.I. the whole time.”

……………………………………………………………………………………………………………………

Big tech profits and revenue continue to grow, but will massive spending produce a good ROI?

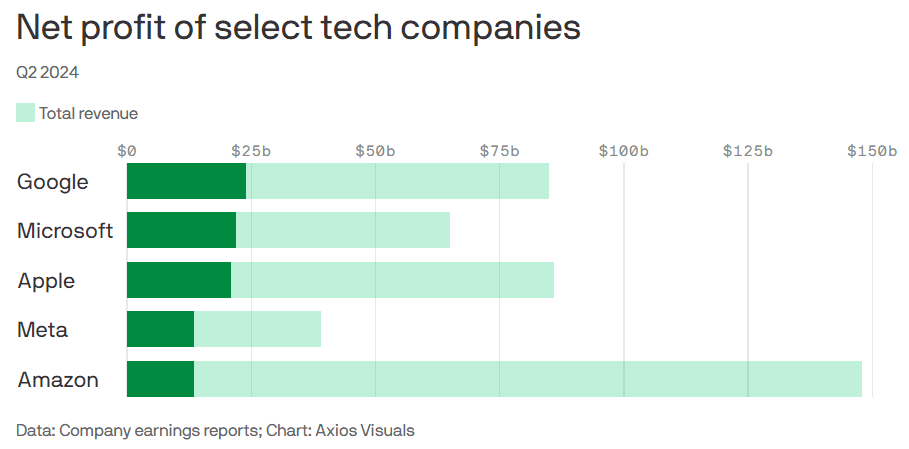

Last week’s Q2-2024 results:

- Google parent Alphabet reported $24 billion net profit on $85 billion revenue.

- Microsoft reported $22 billion net profit on $65 billion revenue.

- Meta reported $13.5 billion net profit on $39 billion revenue.

- Apple reported $21 billion net profit on $86 billion revenue.

- Amazon reported $13.5 billion net profit on $148 billion revenue.
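Dividing each company’s net profit by its revenue puts the five results on a common footing. The sketch below uses the rounded figures listed above, so the margins are approximate.

```python
# Q2-2024 results as listed above: (net profit, revenue), in US$ billion.
RESULTS: dict[str, tuple[float, float]] = {
    "Alphabet": (24.0, 85.0),
    "Microsoft": (22.0, 65.0),
    "Meta": (13.5, 39.0),
    "Apple": (21.0, 86.0),
    "Amazon": (13.5, 148.0),
}


def net_margins(results: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Return (company, net margin) pairs sorted from highest to lowest margin."""
    margins = [(name, profit / revenue) for name, (profit, revenue) in results.items()]
    return sorted(margins, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, margin in net_margins(RESULTS):
        print(f"{name:<10} {margin:.1%}")
```

On these numbers, Meta (about 34.6%) and Microsoft (about 33.8%) convert the largest share of revenue into profit. Alphabet (about 28.2%) and Apple (about 24.4%) follow, while Amazon trails at about 9.1%.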

This chart sums it all up:

………………………………………………………………………………………………………………………………………………………..

References:

https://www.nytimes.com/2024/08/02/technology/tech-companies-ai-spending.html

https://www.axios.com/2024/08/02/google-microsoft-meta-ai-earnings

https://www.nvidia.com/en-us/data-center/grace-hopper-superchip/

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

Deutsche Telekom with AWS and VMware demonstrate a global enterprise network for seamless connectivity across geographically distributed data centers

Deutsche Telekom (DT) has partnered with AWS and VMware to demonstrate what the German network operator describes as a “globally distributed enterprise network.” It combines Deutsche Telekom connectivity services, in federation with third-party connectivity, compute and storage resources, at campus locations in Prague, Czech Republic and Seattle, USA and at an OGA (Open Grid Alliance) grid node in Bonn, Germany.

The goal is to allow customers to book connectivity services directly from DT using a unified interface for the management of the network across its various locations.

The PoC demonstrates how the approach supports optimized resource allocation for advanced AI-based applications such as video analytics, autonomous vehicles and robotics. The demonstration use case is video analytics with distributed AI inference.

PoC setup:

The global enterprise network integrates Deutsche Telekom private 5G wireless solutions, AWS services and infrastructure, VMware’s multi-cloud telco platform, OGA grid nodes and Mavenir’s RAN/Core functions. Two 5G Standalone (SA) private wireless networks deployed at locations in Prague, Czech Republic and Seattle, USA are connected to a Mavenir 5G Core hosted on AWS Frankfurt Region leveraging the framework of the Integrated Private Wireless on AWS program. The convergence of the global network with local high-speed 5G connectivity is enabled by the AWS backbone and infrastructure.

The 5G SA private wireless network at the Seattle location, with its User Plane Function (UPF) and RAN hosted on-site, operates on the VMware Telco Cloud Platform to enable low-latency services. The VMware Service Management and Orchestration (SMO) is also deployed at the same location and serves as the global orchestrator. The SMO framework helps to simplify, optimize and automate the RAN, Core and its applications in a multi-cloud environment.

To demonstrate the benefit of this approach, the deployed PoC used a video analytics application. Cameras were installed at both the Prague and Seattle locations and connected through a private wireless global enterprise network. Using this approach, operators were able to run AI components concurrently for immediate analysis and inferencing. This helps demonstrate the ability for customers to seamlessly connect devices across locations using the global enterprise network. Leveraging OGA architectural principles for Distributed Edge AI Networking, an OGA grid node was established on Dell infrastructure in Bonn, facilitating seamless connectivity across the European locations.
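The PoC topology described above can be summarized as a simple lookup table. The sketch below is a hypothetical model, not a Deutsche Telekom, AWS or VMware configuration format. It records only where the text places each component. The text does not say where Prague’s UPF runs, so that component is left out.

```python
# Hypothetical summary of the PoC topology: site -> components the text places there.
TOPOLOGY: dict[str, list[str]] = {
    "Prague": ["5G SA private RAN", "video analytics cameras"],
    "Seattle": [
        "5G SA private RAN",
        "UPF",
        "VMware Telco Cloud Platform",
        "VMware SMO (global orchestrator)",
        "video analytics cameras",
    ],
    "AWS Frankfurt Region": ["Mavenir 5G Core"],
    "Bonn": ["OGA grid node (Dell infrastructure)"],
}


def sites_hosting(component: str) -> list[str]:
    """Return the sites whose component list mentions `component` (case-sensitive)."""
    return sorted(
        site for site, parts in TOPOLOGY.items() if any(component in part for part in parts)
    )


if __name__ == "__main__":
    for component in ("RAN", "UPF", "5G Core", "SMO", "OGA grid node"):
        print(f"{component}: {', '.join(sites_hosting(component))}")
```

Laid out this way, the split is easy to see: the radio and user plane stay on-site in Seattle, while the 5G Core for both private networks runs centrally in the AWS Frankfurt Region.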

Statements:

“As AI gets engrained deeper in the ecosystem of our lives, it necessitates equitable access to compute and connectivity for everyone, everywhere across the globe. Multi-national enterprises are seeking trusted and sovereign compute & connectivity constructs that underpin an equitable and seamless access. Deutsche Telekom is excited to partner with the OGA ecosystem for co-creation on these essential constructs and the enablement of the Distributed Edge AI Networking applications of the future,” – Kaniz Mahdi, Group Chief Architect and SVP Technology Architecture and Innovation at Deutsche Telekom.

“VMware is proud to support this Proof of Concept – contributing know-how and a modern and scalable platform that aims to offer the agility required in distributed environments. VMware Telco Cloud Platform is suited to deliver the compute resources on-demand wherever critical customer workloads are needed. As a founding member of the Open Grid Alliance, VMware embraces both the principles of this initiative and the opportunity to collaborate more deeply with fellow alliance members AWS and Deutsche Telekom to help meet the evolving needs of global enterprise customers.” – Stephen Spellicy, vice president, Service Provider Marketing, Enablement and Business Development, VMware

References:

https://www.telekom.com/en/media/media-information/archive/global-enterprise-network-1050910

Deutsche Telekom Global Carrier Launches New Point-of-Presence (PoP) in Miami, Florida

AWS Integrated Private Wireless with Deutsche Telekom, KDDI, Orange, T-Mobile US, and Telefónica partners

Deutsche Telekom Achieves End-to-end Data Call on Converged Access using WWC standards

Deutsche Telekom exec: AI poses massive challenges for telecom industry