Microsoft Azure

Big Tech and VCs invest hundreds of billions in AI while salaries of AI experts reach the stratosphere

Introduction:

Two and a half years after OpenAI set off the generative artificial intelligence (AI) race with the release of ChatGPT, big tech companies are accelerating their AI spending, pumping hundreds of billions of dollars into a frantic effort to create systems that can mimic or even exceed the abilities of the human brain. The biggest areas of AI spending are data centers, salaries for experts, and VC investments. Meanwhile, the UAE is building one of the world’s largest AI data centers, while SoftBank CEO Masayoshi Son believes that AI will surpass human-level cognitive abilities (Artificial General Intelligence or AGI) within a few years, and that Artificial Super Intelligence (ASI) will surpass human intelligence by a factor of 10,000 within the next 10 years.

AI Data Center Build-out Boom:

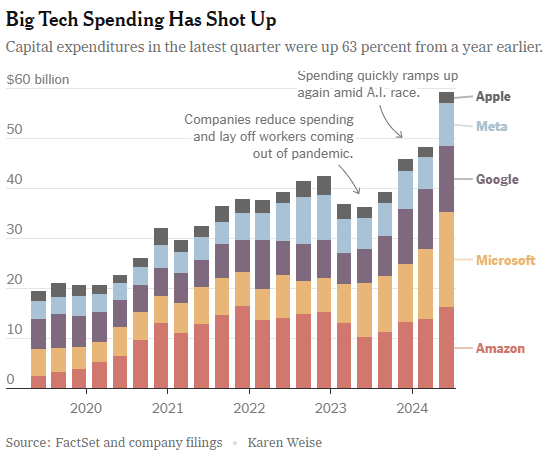

The tech industry’s giants are building AI data centers that can cost more than $100 billion and will consume more electricity than a million American homes. Meta, Microsoft, Amazon and Google have told investors that they expect to spend a combined $320 billion on infrastructure costs this year, more than twice what they spent two years ago. Much of that will go toward building new data centers.

As OpenAI and its partners build a roughly $60 billion data center complex for A.I. in Texas and another in the Middle East, Meta is erecting a facility in Louisiana that will be twice as large. Amazon is going even bigger with a new campus in Indiana. Amazon’s partner, the A.I. start-up Anthropic, says it could eventually use all 30 of the data centers on this 1,200-acre campus to train a single A.I. system. Even if Anthropic’s progress stops, Amazon says that it will use those 30 data centers to deliver A.I. services to customers.

Amazon is building a data center complex in New Carlisle, Ind., for its work with the A.I. company Anthropic. Photo credit: AJ Mast for The New York Times

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

Stargate UAE:

OpenAI is partnering with United Arab Emirates firm G42 and others to build a huge artificial-intelligence data center in Abu Dhabi, UAE. The project, called Stargate UAE, is part of a broader push by the U.A.E. to become one of the world’s biggest funders of AI companies and infrastructure—and a hub for AI jobs. The Stargate project is led by G42, an AI firm controlled by Sheikh Tahnoon bin Zayed al Nahyan, the U.A.E. national-security adviser and brother of the president. As part of the deal, an enhanced version of ChatGPT would be available for free nationwide, OpenAI said.

The first 200-megawatt chunk of the data center is due to be completed by the end of 2026, while the remainder of the project hasn’t been finalized. The buildings’ construction will be funded by G42, and the data center will be operated by OpenAI and tech company Oracle, G42 said. Other partners include global tech investor SoftBank Group, AI/GPU chip maker Nvidia and network-equipment company Cisco.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

Softbank and ASI:

Not wanting to be left behind, SoftBank, led by CEO Masayoshi Son, has made massive investments in AI and has a bold vision for the future of AI development. Son has expressed a strong belief that Artificial Super Intelligence (ASI), surpassing human intelligence by a factor of 10,000, will emerge within the next 10 years. For example, SoftBank has:

- Made significant investments in OpenAI, with planned investments reaching approximately $33.2 billion. Son considers OpenAI a key partner in realizing SoftBank’s ASI vision.

- Acquired Ampere Computing (chip designer) for $6.5 billion to strengthen its AI computing capabilities.

- Invested in the Stargate Project alongside OpenAI, Oracle, and MGX. Stargate aims to build large AI-focused data centers in the U.S., with a planned investment of up to $500 billion.

Son predicts that AI will surpass human-level cognitive abilities (Artificial General Intelligence or AGI) within a few years. He then anticipates a much more advanced form of AI, ASI, to be 10,000 times smarter than humans within a decade. He believes this progress is driven by advancements in models like OpenAI’s o1, which can “think” for longer before responding.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

Super High Salaries for AI Researchers:

Salaries for A.I. experts are reaching the stratosphere. OpenAI, Google DeepMind, Anthropic, Meta, and NVIDIA are paying over $300,000 in base salary, plus bonuses and stock options. Other companies like Netflix, Amazon, and Tesla are also heavily invested in AI and offer competitive compensation packages.

Meta has been offering compensation packages worth as much as $100 million per person. The owner of Facebook made more than 45 offers to researchers at OpenAI alone, according to a person familiar with these approaches. Meta’s CTO Andrew Bosworth implied that only a few people for very senior leadership roles may have been offered that kind of money, but clarified “the actual terms of the offer” wasn’t a “sign-on bonus. It’s all these different things.” Tech companies typically offer the biggest chunks of their pay to senior leaders in restricted stock unit (RSU) grants, dependent on either tenure or performance metrics. A four-year total pay package worth about $100 million for a very senior leader is not inconceivable for Meta. Most of Meta’s named officers, including Bosworth, have earned total compensation of between $20 million and nearly $24 million per year for years.

Meta CEO Mark Zuckerberg on Monday announced the company’s new artificial intelligence organization, Meta Superintelligence Labs, to its employees, according to an internal post reviewed by The Information. The organization includes Meta’s existing AI teams, including its Fundamental AI Research lab, as well as “a new lab focused on developing the next generation of our models,” Zuckerberg said in the post. Scale AI CEO Alexandr Wang has joined Meta as its Chief AI Officer and will partner with former GitHub CEO Nat Friedman to lead the organization. Friedman will lead Meta’s work on AI products and applied research.

“I’m excited about the progress we have planned for Llama 4.1 and 4.2,” Zuckerberg said in the post. “In parallel, we’re going to start research on our next generation models to get to the frontier in the next year or so,” he added.

On Thursday, researcher Lucas Beyer confirmed he was leaving OpenAI to join Meta along with the two others who led OpenAI’s Zurich office. He tweeted: “1) yes, we will be joining Meta. 2) no, we did not get 100M sign-on, that’s fake news.” (Beyer politely declined to comment further on his new role to TechCrunch.) Beyer’s expertise is in computer vision AI. That aligns with what Meta is pursuing: entertainment AI, rather than productivity AI, Bosworth reportedly said in that meeting. Meta already has a stake in the ground in that area with its Quest VR headsets and its Ray-Ban and Oakley AI glasses.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

VC investments in AI are off the charts:

Venture capitalists are strongly increasing their AI spending. U.S. investment in A.I. companies rose to $65 billion in the first quarter, up 33% from the previous quarter and up 550% from the quarter before ChatGPT came out in 2022, according to data from PitchBook, which tracks the industry.
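To put those percentages in dollar terms, the short Python sketch below backs out the quarterly totals implied by the PitchBook figures quoted above. It assumes each percentage is a simple increase over the earlier quarter; the function and variable names are illustrative only and do not come from PitchBook.

```python
def implied_base(current: float, pct_increase: float) -> float:
    """Earlier value implied by a later value and a percentage increase."""
    return current / (1 + pct_increase / 100)


latest_quarter = 65.0  # US$ billions, first-quarter figure quoted above

# Back out the two comparison quarters from the stated increases.
previous_quarter = implied_base(latest_quarter, 33)   # the quarter before
pre_chatgpt = implied_base(latest_quarter, 550)       # quarter before ChatGPT

print(f"Implied previous quarter: ${previous_quarter:.1f}B")
print(f"Implied pre-ChatGPT quarter: ${pre_chatgpt:.1f}B")
```

Under that reading, quarterly U.S. investment in A.I. companies went from roughly $10 billion before ChatGPT to about $49 billion in the prior quarter and $65 billion in the first quarter.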

This astounding VC spending, critics argue, comes with a huge risk. A.I. is arguably more expensive than anything the tech industry has tried to build, and there is no guarantee it will live up to its potential. But the bigger risk, many executives believe, is not spending enough to keep pace with rivals.

“The thinking from the big C.E.O.s is that they can’t afford to be wrong by doing too little, but they can afford to be wrong by doing too much,” said Jordan Jacobs, a partner with the venture capital firm Radical Ventures. “Everyone is deeply afraid of being left behind,” said Chris V. Nicholson, an investor with the venture capital firm Page One Ventures who focuses on A.I. technologies.

Indeed, a significant driver of investment has been a fear of missing out on the next big thing, leading to VCs pouring billions into AI startups at “nosebleed valuations” without clear business models or immediate paths to profitability.

Conclusions:

Big tech companies and VCs acknowledge that they may be overestimating A.I.’s potential. Developing and implementing AI systems, especially large language models (LLMs), is incredibly expensive due to hardware (GPUs), software, and expertise requirements. One of the chief concerns is that revenue for many AI companies isn’t matching the pace of investment. Even major players like OpenAI reportedly face significant cash burn problems. But even if the technology falls short, many executives and investors believe, the investments they’re making now will be worth it.

……………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://www.nytimes.com/2025/06/27/technology/ai-spending-openai-amazon-meta.html

Meta is offering multimillion-dollar pay for AI researchers, but not $100M ‘signing bonuses’

https://www.theinformation.com/briefings/meta-announces-new-superintelligence-lab

OpenAI partners with G42 to build giant data center for Stargate UAE project

AI adoption to accelerate growth in the $215 billion Data Center market

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

Networking chips and modules for AI data centers: Infiniband, Ultra Ethernet, Optical Connections

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

Does AI change the business case for cloud networking?

For several years now, the big cloud service providers – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud – have tried to get wireless network operators to run their 5G SA core network, edge computing and various distributed applications on their cloud platforms. For example, Amazon’s AWS public cloud, Microsoft’s Azure for Operators, and Google’s Anthos for Telecom were intended to get network operators to run their core network functions in a hyperscaler cloud.

AWS had early success with Dish Network’s 5G SA core network which has all its functions running in Amazon’s cloud with fully automated network deployment and operations.

Conversely, AT&T has yet to commercially deploy its 5G SA Core network on the Microsoft Azure public cloud. Also, users on AT&T’s network have experienced difficulties accessing Microsoft 365 and Azure services. Those incidents were often traced to changes within the network’s managed environment. As a result, Microsoft has drastically reduced its early telecom ambitions.

Several pundits now say that AI will significantly strengthen the business case for cloud networking by enabling more efficient resource management, advanced predictive analytics, improved security, and automation, ultimately leading to cost savings, better performance, and faster innovation for businesses utilizing cloud infrastructure.

“AI is already a significant traffic driver, and AI traffic growth is accelerating,” wrote analyst Brian Washburn in a market research report for Omdia (owned by Informa). “As AI traffic adds to and substitutes conventional applications, conventional traffic year-over-year growth slows. Omdia forecasts that in 2026–30, global conventional (non-AI) traffic will be about 18% CAGR [compound annual growth rate].”

Omdia forecasts 2031 as “the crossover point where global AI network traffic exceeds conventional traffic.”

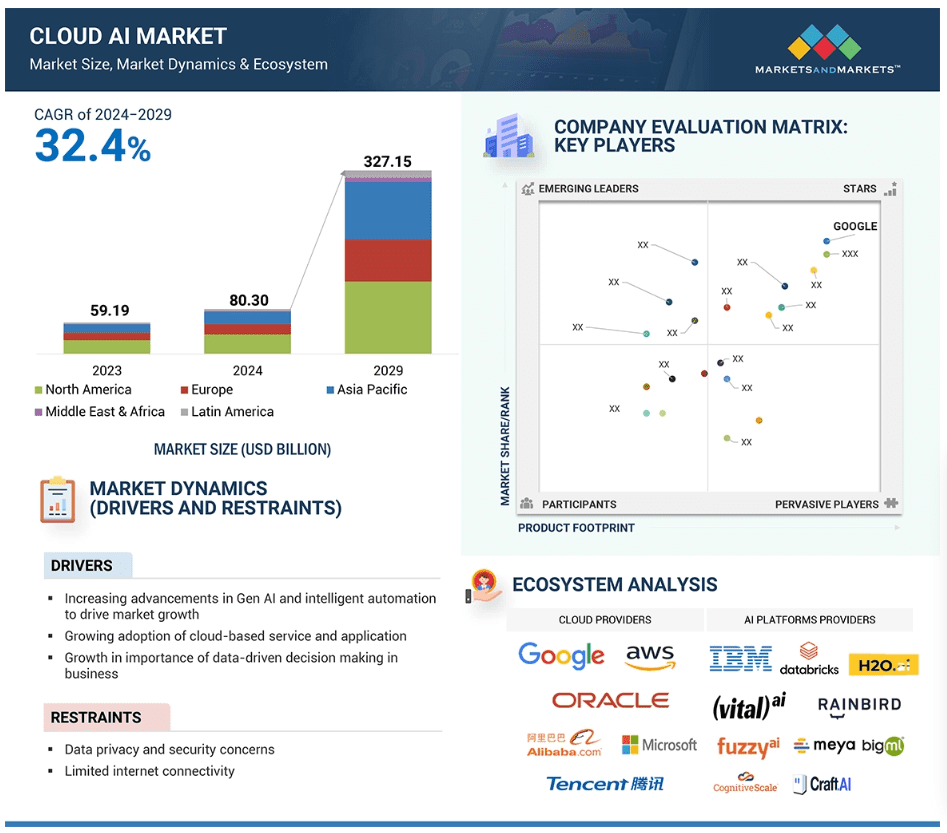

Markets & Markets forecasts the global cloud AI market (which includes cloud AI networking) will grow at a CAGR of 32.4% from 2024 to 2029.
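For readers less familiar with CAGR, the Python sketch below shows what a 32.4% compound annual growth rate implies if it holds for the whole forecast window. It treats 2024 to 2029 as five full years of compounding, which is an assumption about how the forecast period is counted; the helper names are illustrative.

```python
import math


def cagr_multiple(rate_pct: float, years: int) -> float:
    """Total growth multiple after compounding rate_pct for the given years."""
    return (1 + rate_pct / 100) ** years


def doubling_time_years(rate_pct: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + rate_pct / 100)


multiple = cagr_multiple(32.4, 5)      # 2024 -> 2029, assumed five periods
doubling = doubling_time_years(32.4)

print(f"Market size multiple over five years: {multiple:.2f}x")
print(f"Doubling time: {doubling:.2f} years")
```

At that rate the market would roughly quadruple over the period (about 4.1 times its 2024 size), doubling about every two and a half years.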

AI is said to enhance cloud networking in these ways:

- Optimized resource allocation: AI algorithms can analyze real-time data to dynamically adjust cloud resources like compute power and storage based on demand, minimizing unnecessary costs.

- Predictive maintenance: By analyzing network patterns, AI can identify potential issues before they occur, allowing for proactive maintenance and preventing downtime.

- Enhanced security: AI can detect and respond to cyber threats in real-time through anomaly detection and behavioral analysis, improving overall network security.

- Intelligent routing: AI can optimize network traffic flow by dynamically routing data packets to the most efficient paths, improving network performance.

- Automated network management: AI can automate routine network management tasks, freeing up IT staff to focus on more strategic initiatives.

The pitch is that AI will enable businesses to leverage the full potential of cloud networking by providing a more intelligent, adaptable, and cost-effective solution. Well, that remains to be seen. Google’s new global industry lead for telecom, Angelo Libertucci, told Light Reading:

“Now enter AI,” he continued. “With AI … I really have a power to do some amazing things, like enrich customer experiences, automate my network, feed the network data into my customer experience virtual agents. There’s a lot I can do with AI. It changes the business case that we’ve been running.”

“Before AI, the business case was maybe based on certain criteria. With AI, it changes the criteria. And it helps accelerate that move [to the cloud and to the edge],” he explained. “So, I think that work is ongoing, and with AI it’ll actually be accelerated. But we still have work to do with both the carriers and, especially, the network equipment manufacturers.”

Google Cloud last week announced several new AI-focused agreements with companies such as Amdocs, Bell Canada, Deutsche Telekom, Telus and Vodafone Italy.

As IEEE Techblog reported here last week, Deutsche Telekom is using Google Cloud’s Gemini 2.0 in Vertex AI to develop a network AI agent called RAN Guardian. That AI agent can “analyze network behavior, detect performance issues, and implement corrective actions to improve network reliability and customer experience,” according to the companies.

And, of course, there’s all the buzz over AI RAN and we plan to cover expected MWC 2025 announcements in that space next week.

https://www.lightreading.com/cloud/google-cloud-doubles-down-on-mwc

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Will billions of dollars big tech is spending on Gen AI data centers produce a decent ROI?

One of the big tech themes in 2024 was the buildout of data center infrastructure to support generative (Gen) artificial intelligence (AI) compute servers. Gen AI requires massive computational power, which only huge, powerful data centers can provide. Big tech companies like Amazon (AWS), Microsoft (Azure), Google (Google Cloud), Meta (Facebook) and others are building or upgrading their data centers to provide the infrastructure necessary for training and deploying AI models. These investments include high-performance GPUs, specialized hardware, and cutting-edge network infrastructure.

- Barron’s reports that big tech companies are spending billions on that initiative. In the first nine months of 2024, Amazon, Microsoft, and Alphabet spent a combined $133 billion building AI capacity, up 57% from the previous year, according to Barron’s. Much of the spending accrued to Nvidia, whose data center revenue reached $80 billion over the past three quarters, up 174%. The infrastructure buildout will surely continue in 2025, but tough questions from investors about return on investment (ROI) and productivity gains will take center stage from here.

- Amazon, Google, Meta and Microsoft expanded such investments by 81% year over year during the third quarter of 2024, according to an analysis by the Dell’Oro Group, and are on track to have spent $180 billion on data centers and related costs by the end of the year. The three largest public cloud providers, Amazon Web Services (AWS), Azure and Google Cloud, each had a spike in their investment in AI during the third quarter of this year. Baron Fung, a senior director at Dell’Oro Group, told Newsweek: “We think spending on AI infrastructure will remain elevated compared to other areas over the long-term. These cloud providers are spending many billions to build larger and more numerous AI clusters. The larger the AI cluster, the more complex and sophisticated AI models that can be trained. Applications such as Copilot, chatbots, search, will be more targeted to each user and application, ultimately delivering more value to users and how much end-users will pay for such a service,” Fung added.

- Efficient and scalable data centers can lower operational costs over time. Big tech companies could offer AI cloud services at scale, which might result in recurring revenue streams. For example, AI infrastructure-as-a-service (IaaS) could be a substantial revenue driver in the future, but no one really knows when that might be.

Microsoft has a long history of pushing new software and services products to its large customer base. In fact, that greatly contributed to the success of its Azure cloud computing and storage services. The centerpiece of Microsoft’s AI strategy is getting many of those customers to pay for Microsoft 365 Copilot, an AI assistant for its popular apps like Word, Excel, and PowerPoint. Copilot costs $360 a year per user, and that’s on top of all the other software, which costs anywhere from $72 to $657 a year. Microsoft’s AI doesn’t come cheap. Alistair Speirs, senior director of Microsoft Azure Global Infrastructure, told Newsweek: “Microsoft’s datacenter construction has been accelerating for the past few years, and that growth is guided by the growing demand signals that we are seeing from customers for our cloud and AI offerings. As we grow our infrastructure to meet the increasing demand for our cloud and AI services, we do so with a holistic approach, grounded in the principle of being a good neighbor in the communities in which we operate.”

Venture capitalist David Cahn of Sequoia Capital estimates that for AI to be profitable, every dollar invested in infrastructure needs four dollars in revenue. Those profits aren’t likely to come in 2025, but the companies involved (and their investors) will no doubt want to see signs of progress. One issue they will have to deal with is the popularity of free AI, which doesn’t generate any revenue by itself.

An August 2024 survey of over 4,600 adult Americans from researchers at the Federal Reserve Bank of St. Louis, Vanderbilt University, and Harvard University showed that 32% of respondents had used AI in the previous week, a faster adoption rate than either the PC or the internet. When asked what services they used, free options like OpenAI’s ChatGPT, Google’s Gemini, Meta Platforms’ Meta AI, and Microsoft’s Windows Copilot were cited most often. Unlike Microsoft 365 Copilot, the versions of Copilot built into Windows and Bing are free.

The unsurprising popularity of free AI services creates a dilemma for tech firms. It’s expensive to run AI in the cloud at scale, and as of now there’s no revenue behind it. The history of the internet suggests that these free services will be monetized through advertising, an arena where Google, Meta, and Microsoft have a great deal of experience. Investors should expect at least one of these services to begin serving ads in 2025, with the others following suit. The better AI gets—and the more utility it provides—the more likely consumers will go along with those ads.

Productivity Check:

We’re at the point in AI’s rollout where novelty needs to be replaced by usefulness—and investors will soon be looking for signs that AI is delivering productivity gains to business. Here we can turn to macroeconomic data for answers. According to the U.S. Bureau of Labor Statistics, since the release of ChatGPT in November 2022, labor productivity has risen at an annualized rate of 2.3% versus the historical median of 2.0%. It’s too soon to credit AI for those gains, but if above-median productivity growth continues into 2025, the conversation gets more interesting.

There’s also the continued question of AI and jobs, a fraught conversation that isn’t going to get any easier. There may already be AI-related job loss happening in the information sector, home to media, software, and IT. Since the release of ChatGPT, employment is down 3.9% in the sector, even as U.S. payrolls overall have grown by 3.3%. The other jobs most at risk are in professional and business services and in the financial sector. To be sure, the history of technological change is always complicated. AI might take away jobs, but it’s sure to add some, too.

“Some jobs will likely be automated. But at the same time, we could see new opportunities in areas requiring creativity, judgment, or decision-making,” economists Alexander Bick of the Federal Reserve Bank of St. Louis and Adam Blandin of Vanderbilt University tell Barron’s. “Historically, every big tech shift has created new types of work we couldn’t have imagined before.”

Closing Quote:

“Generative AI (GenAI) is being felt across all technology segments and subsegments, but not to everyone’s benefit,” said John-David Lovelock, Distinguished VP Analyst at Gartner. “Some software spending increases are attributable to GenAI, but to a software company, GenAI most closely resembles a tax. Revenue gains from the sale of GenAI add-ons or tokens flow back to their AI model provider partner.”

References:

AI Stocks Face a New Test. Here Are the 3 Big Questions Hanging Over Tech in 2025

Big Tech Increases Spending on Infrastructure Amid AI Boom – Newsweek

Superclusters of Nvidia GPU/AI chips combined with end-to-end network platforms to create next generation data centers

Ciena CEO sees huge increase in AI generated network traffic growth while others expect a slowdown

Proposed solutions to high energy consumption of Generative AI LLMs: optimized hardware, new algorithms, green data centers

SK Telecom unveils plans for AI Infrastructure at SK AI Summit 2024

Huawei’s “FOUR NEW strategy” for carriers to be successful in AI era

Initiatives and Analysis: Nokia focuses on data centers as its top growth market

India Mobile Congress 2024 dominated by AI with over 750 use cases

Reuters & Bloomberg: OpenAI to design “inference AI” chip with Broadcom and TSMC

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

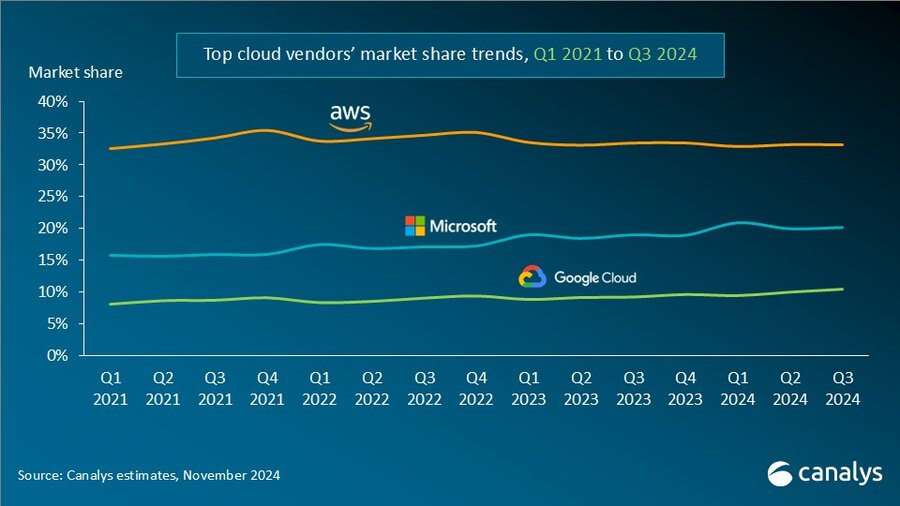

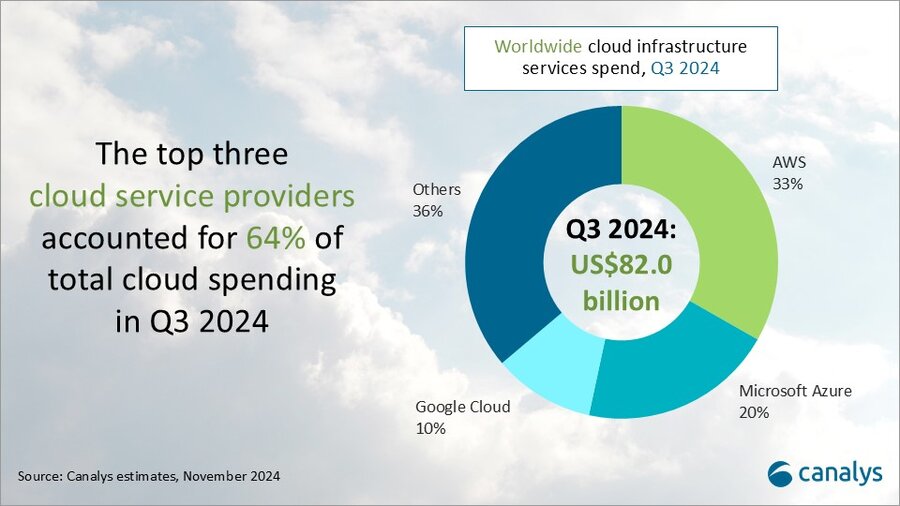

According to market research firm Canalys, global spending on cloud infrastructure services [1.] increased by 21% year on year, reaching US$82.0 billion in the 3rd quarter of 2024. Customer investment in the hyperscalers’ AI offerings fueled growth, prompting leading cloud vendors to escalate their investments in AI.

Note 1. Canalys defines cloud infrastructure services as services providing infrastructure (IaaS and bare metal) and platforms that are hosted by third-party providers and made available to users via the Internet.

The rankings of the top three cloud service providers – Amazon AWS, Microsoft Azure and Google Cloud – remained stable from the previous quarter, with these providers together accounting for 64% of total expenditure. Total combined spending with these three providers grew by 26% year on year, and all three reported sequential growth. Market leader AWS maintained a year-on-year growth rate of 19%, consistent with the previous quarter. That was outpaced by both Microsoft, with 33% growth, and Google Cloud, with 36% growth. In actual dollar terms, however, AWS outgrew both Microsoft and Google Cloud, increasing sales by almost US$4.4 billion on the previous year.

In Q3 2024, the cloud services market saw strong, steady growth. All three cloud hyperscalers reported positive returns on their AI investments, which have begun to contribute to their overall cloud business performance. These returns reflect a growing reliance on AI as a key driver for innovation and competitive advantage in the cloud.

With the increasing adoption of AI technologies, demand for high-performance computing and storage continues to rise, putting pressure on cloud providers to expand their infrastructure. In response, leading cloud providers are prioritizing large-scale investments in next-generation AI infrastructure. To mitigate the risks associated with under-investment – such as being unprepared for future demand or missing key opportunities – they have adopted over-investment strategies, ensuring their ability to scale offerings in line with the growing needs of their AI customers. Enterprises are convinced that AI will deliver an unprecedented boost in efficiency and productivity, so they are pouring money into hyperscalers’ AI solutions. Accordingly, cloud service provider capital spending (CAPEX) is expected to sustain its rapid growth trajectory into 2025.

“Continued substantial expenditure will present new challenges, requiring cloud vendors to carefully balance their investments in AI with the cost discipline needed to fund these initiatives,” said Rachel Brindley, Senior Director at Canalys. “While companies should invest sufficiently in AI to capitalize on technological growth, they must also exercise caution to avoid overspending or inefficient resource allocation. Ensuring the sustainability of these investments over time will be vital to maintaining long-term financial health and competitive advantage.”

“On the other hand, the three leading cloud providers are also expediting the update and iteration of their AI foundational models, continuously expanding their associated product portfolios,” said Yi Zhang, Analyst at Canalys. “As these AI foundational models mature, cloud providers are focused on leveraging their enhanced capabilities to empower a broader range of core products and services. By integrating these advanced models into their existing offerings, they aim to enhance functionality, improve performance and increase user engagement across their platforms, thereby unlocking new revenue streams.”

Amazon Web Services (AWS) maintained its lead in the global cloud market in Q3 2024, capturing a 33% market share and achieving 19% year-on-year revenue growth. It continued to enhance and broaden its AI offerings by launching new models through Amazon Bedrock and SageMaker, including Anthropic’s upgraded Claude 3.5 Sonnet and Meta’s Llama 3.2. It reported a triple-digit year-on-year increase in AI-related revenue, outpacing its overall growth by more than three times. Over the past 18 months, AWS has introduced nearly twice as many machine learning and generative AI features as the combined offerings of the other leading cloud providers. In terms of capital expenditure, AWS announced plans to further increase investment, with projected spending of approximately US$75 billion in 2024. This investment will primarily be allocated to expanding technology infrastructure to meet the rising demand for AI services, underscoring AWS’ commitment to staying at the forefront of technological innovation and service capability.

Microsoft Azure remains the second-largest cloud provider, with a 20% market share and impressive annual growth of 33%. This growth was partly driven by AI services, which contributed approximately 12% to the overall increase. Over the past six months, use of Azure OpenAI has more than doubled, driven by increased adoption by both digital-native companies and established enterprises transitioning their applications from testing phases to full-scale production environments. To further enhance its offerings, Microsoft is expanding Azure AI by introducing industry-specific models, including advanced multimodal medical imaging models, aimed at providing tailored solutions for a broader customer base. Additionally, the company announced new cloud and AI infrastructure investments in Brazil, Italy, Mexico and Sweden to expand capacity in alignment with long-term demand forecasts.

Google Cloud, the third-largest provider, maintained a 10% market share, achieving robust year-on-year growth of 36%. It showed the strongest AI-driven revenue growth among the leading providers, with a clear acceleration compared with the previous quarter. As of September 2024, its revenue backlog increased to US$86.8 billion, up from US$78.8 billion in Q2, signaling continued momentum in the near term. Its enterprise AI platform, Vertex, has garnered substantial user adoption, with Gemini API calls increasing nearly 14-fold over the past six months. Google Cloud is actively seeking and developing new ways to apply AI tools across different scenarios and use cases. It introduced the GenAI Partner Companion, an AI-driven advisory tool designed to offer service partners personalized access to training resources, enhancing learning and supporting successful project execution. In Q3 2024, Google announced over US$7 billion in planned data center investments, with nearly US$6 billion allocated to projects within the United States.
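As a rough cross-check of the Canalys figures, the Python sketch below converts the quoted market shares into implied quarterly revenue, using the US$82.0 billion worldwide total for Q3 2024. The shares are the rounded percentages given in the text, so the results are approximations rather than reported revenue, and the variable names are illustrative.

```python
total_spend = 82.0  # US$ billions, worldwide cloud infrastructure services, Q3 2024

# Rounded market shares (percent) as quoted from Canalys.
market_share_pct = {"AWS": 33, "Microsoft Azure": 20, "Google Cloud": 10}

# Implied quarterly revenue per provider, in US$ billions.
implied_revenue = {
    name: total_spend * pct / 100 for name, pct in market_share_pct.items()
}
combined_share = sum(market_share_pct.values())

for name, revenue in implied_revenue.items():
    print(f"{name}: about ${revenue:.1f}B")
print(f"Combined share of the top three: {combined_share}%")
```

The rounded shares sum to 63%, one point below the 64% combined figure Canalys cites, a gap that is consistent with rounding of the individual shares.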

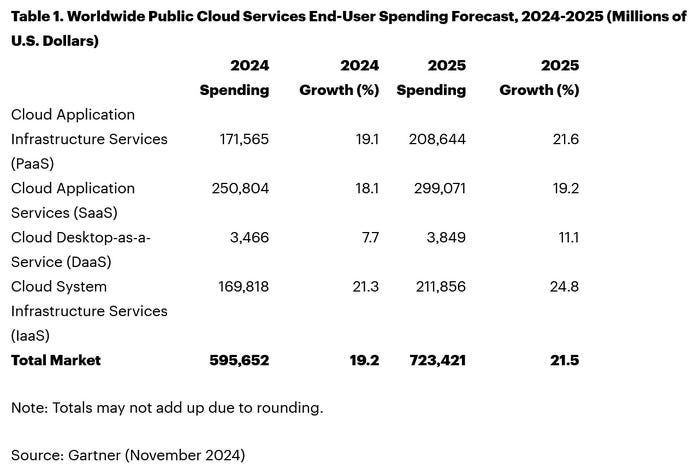

Separate statistics from Gartner corroborate hyperscale CAPEX optimism. Gartner predicts that worldwide end-user spending on public cloud services is on course to reach $723.4 billion in 2025, up from a projected $595.7 billion in 2024. All segments of the cloud market – platform-as-a-service (PaaS), software-as-a-service (SaaS), desktop-as-a-service (DaaS), and infrastructure-as-a-service (IaaS) – are expected to achieve double-digit growth.

While SaaS will be the biggest single segment, accounting for $299.1 billion, IaaS will grow the fastest, jumping 24.8 percent to $211.9 billion.

Like Canalys, Gartner also singles out AI for special attention. “The use of AI technologies in IT and business operations is unabatedly accelerating the role of cloud computing in supporting business operations and outcomes,” said Sid Nag, vice president analyst at Gartner. “Cloud use cases continue to expand with increasing focus on distributed, hybrid, cloud-native, and multicloud environments supported by a cross-cloud framework, making the public cloud services market achieve a 21.5 percent growth in 2025.”
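The headline growth rate can be checked directly from the two Gartner totals quoted above. The Python sketch below does that arithmetic and also works out the SaaS share of 2025 spending; it uses only the rounded figures in the text, and the variable names are illustrative.

```python
spend_2024 = 595.7  # US$ billions, projected public cloud end-user spending
spend_2025 = 723.4  # US$ billions, forecast for the following year
saas_2025 = 299.1   # US$ billions, forecast SaaS segment

# Year-on-year growth implied by the two totals.
growth_pct = (spend_2025 / spend_2024 - 1) * 100

# SaaS as a share of total forecast spending.
saas_share_pct = saas_2025 / spend_2025 * 100

print(f"Implied growth: {growth_pct:.1f}%")
print(f"SaaS share of 2025 spending: {saas_share_pct:.1f}%")
```

The rounded totals imply growth of about 21.4%, in line with the 21.5 percent Gartner cites, and put SaaS at roughly 41% of total public cloud spending.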

……………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://canalys.com/newsroom/global-cloud-services-q3-2024

https://www.telecoms.com/public-cloud/ai-hype-fuels-21-percent-jump-in-q3-cloud-spending

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

MTN Consulting: Top Telco Network Infrastructure (equipment) vendors + revenue growth changes favor cloud service providers

IDC: Public Cloud software at 2/3 of all enterprise applications revenue in 2026; SaaS is essential!

IDC: Cloud Infrastructure Spending +13.5% YoY in 4Q-2021 to $21.1 billion; Forecast CAGR of 12.6% from 2021-2026

IDC: Worldwide Public Cloud Services Revenues Grew 29% to $408.6 Billion in 2021 with Microsoft #1?

Synergy Research: Microsoft and Amazon (AWS) Dominate IT Vendor Revenue & Growth; Popularity of Multi-cloud in 2021

Google Cloud revenues up 54% YoY; Cloud native security is a top priority

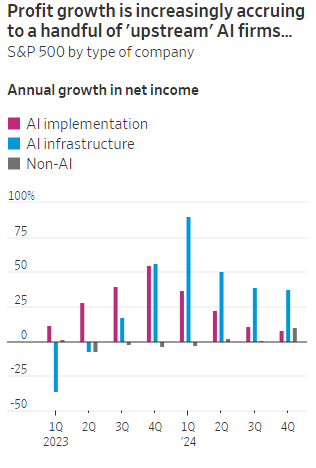

AI Echo Chamber: “Upstream AI” companies’ huge spending fuels profit growth for “Downstream AI” firms

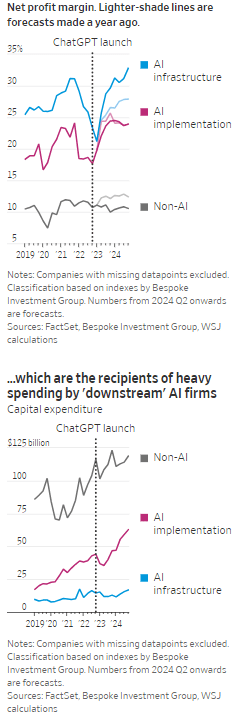

According to the Wall Street Journal, the AI industry has become an “Echo Chamber,” where huge capital spending by the AI infrastructure and application providers has fueled revenue and profit growth for everyone else. Market research firm Bespoke Investment Group recently created baskets for “downstream” and “upstream” AI companies.

- The Downstream group involves “AI implementation,” which consists of firms that sell AI development tools, such as the large language models (LLMs) popularized by OpenAI’s ChatGPT since the end of 2022, or run products that can incorporate them. This includes Google/Alphabet, Microsoft, Amazon and Meta Platforms (FB), along with IBM, Adobe and Salesforce.

- Higher up the supply chain (Upstream group), are the “AI infrastructure” providers, which sell AI chips, applications, data centers and training software. The undisputed leader is Nvidia, which has seen its sales triple in a year, but it also includes other semiconductor companies, database developer Oracle and owners of data centers Equinix and Digital Realty.

The Upstream group of companies has posted profit margins that are far above what analysts expected a year ago. In the second quarter, and pending Nvidia’s results on Aug. 28, Upstream AI members of the S&P 500 are set to have delivered a 50% annual increase in earnings. For the remainder of 2024, they will be increasingly responsible for the profit growth that Wall Street expects from the stock market, even accounting for Intel’s huge problems and restructuring.

It should be noted that the lines between the two groups can be blurry, particularly when it comes to giants such as Amazon, Microsoft and Alphabet, which provide both AI implementation (e.g. LLMs) and infrastructure. Their cloud-computing businesses turned these companies into the early winners of the AI craze last year and reported breakneck growth during this latest earnings season. A crucial point is that it is their role as ultimate developers of AI applications that has led them to make super huge capital expenditures, which are responsible for the profit surge in the rest of the ecosystem. So there is a definite trickle-down effect, where the big tech players’ AI-directed CAPEX is boosting revenue and profits for the infrastructure suppliers higher up the supply chain.

As the path for monetizing this technology gets longer and harder, the benefits seem to be increasingly accruing to companies higher up in the supply chain. Meta Platforms Chief Executive Mark Zuckerberg recently said the company’s coming Llama 4 language model will require 10 times as much computing power to train as its predecessor. Were it not for AI, revenues for semiconductor firms would probably have fallen during the second quarter, rather than rising 18%, according to S&P Global.

………………………………………………………………………………………………………………………………………………………..


A paper written by researchers from the likes of Cambridge and Oxford found that the large language models (LLMs) behind some of today’s most exciting AI apps may have been trained on “synthetic data,” or data generated by other AI. This revelation raises ethical and quality concerns. If an AI model is trained primarily or even partially on synthetic data, it might produce outputs lacking the richness and reliability of human-generated content. It could be a case of the blind leading the blind, with AI models reinforcing the limitations or biases inherent in the synthetic data they were trained on.

In this paper, the team coined the phrase “model collapse,” claiming that models trained this way will answer user prompts with low-quality outputs. The idea of “model collapse” suggests a sort of unraveling of the machine’s learning capabilities, where it fails to produce outputs with the informative or nuanced characteristics we expect. This poses a serious question for the future of AI development. If AI is increasingly trained on synthetic data, we risk creating echo chambers of misinformation or low-quality responses, leading to less helpful and potentially even misleading systems.
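
The intuition behind “model collapse” can be illustrated with a toy simulation. The sketch below is not the paper’s experiment: it assumes the simplest possible “model” (a Gaussian fitted to data) and repeatedly trains each new generation only on samples produced by the previous one. The function name, sample size, and generation count are all illustrative choices.

```python
import random
import statistics


def simulate_recursive_training(generations=200, samples_per_generation=10, seed=0):
    """Refit a Gaussian on samples drawn from the previous generation's fit.

    Returns the fitted standard deviations, one per generation, starting
    with the "real data" distribution (mean 0, standard deviation 1).
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0
    history = [std]
    for _ in range(generations):
        # "Synthetic data": samples generated by the current model.
        data = [rng.gauss(mean, std) for _ in range(samples_per_generation)]
        # "Training": the next model is fitted only to that synthetic data.
        mean = statistics.fmean(data)
        std = statistics.pstdev(data)
        history.append(std)
    return history


history = simulate_recursive_training()
print(f"std at generation 0:   {history[0]:.3f}")
print(f"std at generation 200: {history[-1]:.3e}")
```

With small samples, each refit tends to lose a little of the spread in the tails, so the fitted standard deviation drifts toward zero over many generations. Real LLM training is far more complex, so this only conveys the intuition of diversity being lost when models learn from their own outputs.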

……………………………………………………………………………………………………………………………………………

In a recent working paper, Massachusetts Institute of Technology (MIT) economist Daron Acemoglu argued that AI’s knack for easy tasks has led to exaggerated predictions of its power to enhance productivity in hard jobs. Also, some of the new tasks created by AI may have negative social value (such as design of algorithms for online manipulation). Indeed, data from the Census Bureau show that only a small percentage of U.S. companies outside of the information and knowledge sectors are looking to make use of AI.

References:

https://deepgram.com/learn/the-ai-echo-chamber-model-collapse-synthetic-data-risks

https://economics.mit.edu/sites/default/files/2024-04/The%20Simple%20Macroeconomics%20of%20AI.pdf

AI wave stimulates big tech spending and strong profits, but for how long?

AI winner Nvidia faces competition with new super chip delayed

SK Telecom and Singtel partner to develop next-generation telco technologies using AI

Telecom and AI Status in the EU

Vodafone: GenAI overhyped, will spend $151M to enhance its chatbot with AI

Data infrastructure software: picks and shovels for AI; Hyperscaler CAPEX

AI wave stimulates big tech spending and strong profits, but for how long?

Big tech companies have made it clear over the last week that they have no intention of slowing down their stunning levels of spending on artificial intelligence (AI), even though investors are getting worried that a big payoff is further down the line than most believe.

In the last quarter, Apple, Amazon, Meta, Microsoft and Google’s parent company Alphabet spent a combined $59 billion on capital expenses, 63 percent more than a year earlier and 161 percent more than four years ago. A large part of that was funneled into building data centers and packing them with new computer systems to build artificial intelligence. Only Apple has not dramatically increased spending, because it does not build the most advanced AI systems and is not a cloud service provider like the others.
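
To put those percentages in dollar terms, the earlier spending levels can be backed out from the $59 billion figure. This is a back-of-the-envelope sketch using only the numbers quoted above; the variable names are illustrative, and the results are implied estimates rather than reported figures.

```python
# Figures quoted in the text.
capex_latest_bn = 59.0  # combined quarterly capital expenses, billions of dollars
growth_vs_1y = 0.63     # 63 percent more than a year earlier
growth_vs_4y = 1.61     # 161 percent more than four years ago

# "X percent more than" means latest = earlier * (1 + X/100), so invert that.
implied_capex_1y_ago = capex_latest_bn / (1 + growth_vs_1y)
implied_capex_4y_ago = capex_latest_bn / (1 + growth_vs_4y)

print(f"Implied capex a year earlier:  ${implied_capex_1y_ago:.1f} billion")
print(f"Implied capex four years ago: ${implied_capex_4y_ago:.1f} billion")
```

On these numbers, combined quarterly spending was roughly $36 billion a year earlier and under $23 billion four years ago.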

At the beginning of this year, Meta said it would spend more than $30 billion in 2024 on new tech infrastructure. In April, it raised that to $35 billion. On Wednesday, the figure rose to at least $37 billion. CEO Mark Zuckerberg said Meta would spend even more next year. He said he would rather build too fast “rather than too late,” which would allow his competitors to get a big lead in the A.I. race. Meta gives away the advanced A.I. systems it develops, but Mr. Zuckerberg still said it was worth it. “Part of what’s important about A.I. is that it can be used to improve all of our products in almost every way,” he said.

………………………………………………………………………………………………………………………………………………………..

This new wave of Generative A.I. is incredibly expensive. The systems work with vast amounts of data and require sophisticated computer chips and new data centers to develop the technology and serve it to customers. The companies are seeing some sales from their A.I. work, but it is barely moving the needle financially.

In recent months, several high-profile tech industry watchers, including Goldman Sachs’s head of equity research and a partner at the venture firm Sequoia Capital, have questioned when or if A.I. will ever produce enough benefit to bring in the sales needed to cover its staggering costs. It is not clear that AI will come close to having the same impact as the internet or mobile phones, Goldman’s Jim Covello wrote in a June report.

“What $1 trillion problem will AI solve?” he wrote. “Replacing low wage jobs with tremendously costly technology is basically the polar opposite of the prior technology transitions I’ve witnessed in my 30 years of closely following the tech industry.”

“The reality right now is that while we’re investing a significant amount in the AI space and in infrastructure, we would like to have more capacity than we already have today,” said Andy Jassy, Amazon’s chief executive. “I mean, we have a lot of demand right now.”

That means buying land, building data centers and all the computers, chips and gear that go into them. Amazon executives put a positive spin on all that spending. “We use that to drive revenue and free cash flow for the next decade and beyond,” said Brian Olsavsky, the company’s finance chief.

There are plenty of signs the boom will persist. In mid-July, Taiwan Semiconductor Manufacturing Company, which makes most of the in-demand chips designed by Nvidia (the ONLY tech company that is now making money from AI – much more below) that are used in AI systems, said those chips would be in scarce supply until the end of 2025.

Mr. Zuckerberg said AI’s potential is super exciting. “It’s why there are all the jokes about how all the tech C.E.O.s get on these earnings calls and just talk about A.I. the whole time.”

……………………………………………………………………………………………………………………

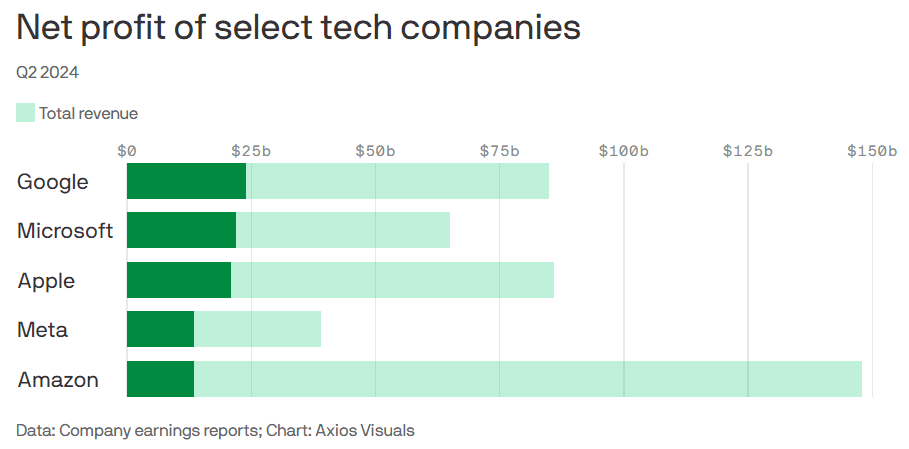

Big tech profits and revenue continue to grow, but will massive spending produce a good ROI?

Last week’s Q2-2024 results:

- Google parent Alphabet reported $24 billion net profit on $85 billion revenue.

- Microsoft reported $22 billion net profit on $65 billion revenue.

- Meta reported $13.5 billion net profit on $39 billion revenue.

- Apple reported $21 billion net profit on $86 billion revenue.

- Amazon reported $13.5 billion net profit on $148 billion revenue.
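
One way to compare these results is by net profit margin (net profit divided by revenue). The sketch below computes it from the rounded figures in the list above; the dictionary layout is just an illustrative choice, and margins based on rounded numbers are approximate.

```python
# (net profit, revenue) in billions of dollars, as listed above.
q2_2024_results = {
    "Alphabet": (24.0, 85.0),
    "Microsoft": (22.0, 65.0),
    "Meta": (13.5, 39.0),
    "Apple": (21.0, 86.0),
    "Amazon": (13.5, 148.0),
}

# Net margin as a percentage of revenue.
net_margins = {
    company: profit / revenue * 100
    for company, (profit, revenue) in q2_2024_results.items()
}

# Print companies from highest to lowest margin.
for company, margin in sorted(net_margins.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{company:<10} {margin:5.1f}%")
```

On these rounded figures, Meta and Microsoft convert roughly a third of revenue into net profit, while Amazon’s margin is below 10 percent.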

This chart sums it all up:

………………………………………………………………………………………………………………………………………………………..

References:

https://www.nytimes.com/2024/08/02/technology/tech-companies-ai-spending.html

https://www.axios.com/2024/08/02/google-microsoft-meta-ai-earnings

https://www.nvidia.com/en-us/data-center/grace-hopper-superchip/

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

Microsoft chooses Lumen’s fiber based Private Connectivity Fabric℠ to expand Microsoft Cloud network capacity in the AI era

Lumen Technologies and Microsoft Corp. announced a new strategic partnership today. Microsoft has chosen Lumen to expand its network capacity and capability to meet the growing demand on its datacenters due to AI (i.e., the huge processing required for Large Language Models, including data collection, preprocessing, training, and evaluation). Datacenters have become critical infrastructure that powers the compute capabilities for the millions of people and organizations who rely on and trust the Microsoft Cloud.

Microsoft claims it is playing a leading role in ushering in the era of AI, offering tools and platforms like Azure OpenAI Service, Microsoft Copilot and others to help people be more creative, more productive and to help solve some of humanity’s biggest challenges. As Microsoft continues to evolve and scale its ecosystem, it is turning to Lumen as a strategic supplier for its network infrastructure needs and is investing with Lumen to support its next generation of applications for Microsoft platform customers worldwide.

Lumen’s Private Connectivity Fabric℠ is a custom network that includes dedicated access to existing fiber in the Lumen network, the installation of new fiber on existing and new routes, and the use of Lumen’s new digital services. This AI-ready infrastructure will strengthen the connectivity capabilities between Microsoft’s datacenters by providing the network capacity, performance, stability and speed that customers need as data demands increase.

Art by Midjourney for Fierce Network

…………………………………………………………………………………………………………………………………………………………………………………..

“AI is reshaping our daily lives and fundamentally changing how businesses operate,” said Erin Chapple, corporate vice president of Azure Core Product and Design, Microsoft. “We are focused both on the impact and opportunity for customers relative to AI today, and a generation ahead when it comes to our network infrastructure. Lumen has the network infrastructure and the digital capabilities needed to help support Azure’s mission in creating a reliable and scalable platform that supports the breadth of customer workloads—from general purpose and mission-critical, to cloud-native, high-performance computing, and AI, plus what’s on the horizon. Our work with Lumen is emblematic of our investments in our own cloud infrastructure, which delivers for today and for the long term to empower every person and every organization on the planet to achieve more.”

“We are preparing for a future where AI is the driving force of innovation and growth, and where a powerful network infrastructure is essential for companies to thrive,” said Kate Johnson, president and CEO, Lumen Technologies (a former Microsoft executive). “Microsoft has an ambitious vision for AI and this level of innovation requires a network that can make it reality. Lumen’s expansive network meets this challenge, with unique routes, unmatched coverage, and a digital platform built to give companies the flexibility, access and security they need to create an AI-enabled world.”

Lumen has launched an enterprise-wide transformation to simplify and optimize its operations. By embracing Microsoft’s cloud and AI technology, Lumen can reduce its overall technology costs, remove legacy systems and silos, improve its offerings, and create new solutions for its global customer base. Lumen will migrate and modernize its workloads to Microsoft Azure, use Microsoft Entra solutions to safeguard access and prevent identity attacks and partner with Microsoft to create and deliver new telecom industry-specific solutions. This element alone is expected to improve Lumen’s cash flow by more than $20 million over the next 12 months while also improving the company’s customer experience.

“Azure’s advanced global infrastructure helps customers and partners quickly adapt to changing economic conditions, accelerate technology innovation, and transform their business with AI,” said Chapple. “We are committed to partnering with Lumen to help deliver on their transformation goals, reimagine cloud connectivity and AI synergies, drive business growth, and help customers achieve more.”

This collaboration expands upon the longstanding relationship between Lumen Technologies and Microsoft. The companies have worked together for several years, with Lumen leveraging Copilot to automate routine tasks, reduce employee workloads, and enhance Microsoft Teams.

……………………………………………………………………………………………………………………………………………………………………………………………………..

Lumen’s CMO Ryan Asdourian hinted the deal could be the first in a series of such partnerships, as network infrastructure becomes the next scarce resource in the era of AI. “When the world has talked about what’s needed for AI, you usually hear about power, space and cooling…[these] have been the scarce resources,” Asdourian told Fierce Telecom. Asdourian said Lumen will offer Microsoft access to a combination of new and existing routes in the U.S., and will overpull fiber where necessary. However, he declined to specify the speeds which will be made available or exactly how many of Microsoft’s data centers it will be connecting.

Microsoft will retain full control over network speeds, routes and redundancy options through Lumen’s freshly launched Private Connectivity Fabric digital interface. “That is not something traditional telecom has allowed,” Asdourian said.

Asdourian added that Lumen isn’t just looking to enable AI, but also incorporate it into its own operations. Indeed, part of its partnership deal with Microsoft involves Lumen’s adoption of Azure cloud and other Microsoft services to streamline its internal and network systems. Asdourian said AI could be used to make routing and switching on its network more intelligent and efficient.

…………………………………………………………………………………………………………………………………………………………………………………..

About Lumen Technologies:

Lumen connects the world. We are igniting business growth by connecting people, data, and applications – quickly, securely, and effortlessly. Everything we do at Lumen takes advantage of our network strength. From metro connectivity to long-haul data transport to our edge cloud, security, and managed service capabilities, we meet our customers’ needs today and as they build for tomorrow. For news and insights visit news.lumen.com, LinkedIn: /lumentechnologies, Twitter: @lumentechco, Facebook: /lumentechnologies, Instagram: @lumentechnologies and YouTube: /lumentechnologies.

About Microsoft:

Microsoft (Nasdaq “MSFT” @microsoft) creates platforms and tools powered by AI to deliver innovative solutions that meet the evolving needs of our customers. The technology company is committed to making AI available broadly and doing so responsibly, with a mission to empower every person and every organization on the planet to achieve more.

…………………………………………………………………………………………………………………………………………………………………………………..

References:

https://news.lumen.com/2024-07-24-Microsoft-and-Lumen-Technologies-partner-to-power-the-future-of-AI-and-enable-digital-transformation-to-benefit-hundreds-of-millions-of-customers

https://fierce-network.com/cloud/microsoft-taps-lumens-fiber-network-help-it-meet-ai-demand

AI Frenzy Backgrounder; Review of AI Products and Services from Nvidia, Microsoft, Amazon, Google and Meta; Conclusions

Lumen, Google and Microsoft create ExaSwitch™ – a new on-demand, optical networking ecosystem

ACSI report: AT&T, Lumen and Google Fiber top ranked in fiber network customer satisfaction

Lumen to provide mission-critical communications services to the U.S. Department of Defense

Dell’Oro: Optical Transport market to hit $17B by 2027; Lumen Technologies 400G wavelength market

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

Everyone agrees that Generative AI has great promise and potential. Martin Casado of Andreessen Horowitz recently wrote in the Wall Street Journal that the technology has “finally become transformative:”

“Generative AI can bring real economic benefits to large industries with established and expensive workloads. Large language models could save costs by performing tasks such as summarizing discovery documents without replacing attorneys, to take one example. And there are plenty of similar jobs spread across fields like medicine, computer programming, design and entertainment….. This all means opportunity for the new class of generative AI startups to evolve along with users, while incumbents focus on applying the technology to their existing cash-cow business lines.”

A new investment wave caused by generative AI is starting to loom among cloud service providers, raising questions about whether Big Tech’s spending cutbacks and layoffs will prove to be short lived. Pressed to say when they would see a revenue lift from AI, the big U.S. cloud companies (Microsoft, Alphabet/Google, Meta/FB and Amazon) all referred to existing services that rely heavily on investments made in the past. These range from AWS’s machine learning services for cloud customers to AI-enhanced tools that Google and Meta offer to their advertising customers.

Microsoft offered only a cautious prediction of when AI would result in higher revenue. Amy Hood, chief financial officer, told investors during an earnings call last week that the revenue impact would be “gradual,” as the features are launched and start to catch on with customers. The caution failed to match high expectations ahead of the company’s earnings, wiping 7% off its stock price (ticker symbol: MSFT) over the following week.

When it comes to the newer generative AI wave, predictions were few and far between. Amazon CEO Andy Jassy said on Thursday that the technology was in its “very early stages” and that the industry was only “a few steps into a marathon.” While many customers of Amazon’s cloud arm, AWS, see the technology as transformative, Jassy noted that “most companies are still figuring out how they want to approach it, they are figuring out how to train models.” He insisted that every part of Amazon’s business was working on generative AI initiatives and the technology was “going to be at the heart of what we do.”

There are a number of large language models that power generative AI, and many of the AI companies that make them have forged partnerships with big cloud service providers. As business technology leaders make their picks among them, they are weighing the risks and benefits of using one cloud provider’s AI ecosystem. They say it is an important decision that could have long-term consequences, including how much they spend and whether they are willing to sink deeper into one cloud provider’s set of software, tools, and services.

To date, model makers like OpenAI, Anthropic, and Cohere have led the charge in developing proprietary large language models that companies are using to boost efficiency in areas like accounting and writing code, or adding to their own products with tools like custom chatbots. Partnerships between model makers and major cloud companies include OpenAI and Microsoft Azure, Anthropic and Cohere with Google Cloud, and the machine-learning startup Hugging Face with Amazon Web Services. Databricks, a data storage and management company, agreed to buy the generative AI startup MosaicML in June.

If a company chooses a single AI ecosystem, it could risk “vendor lock-in” within that provider’s platform and set of services, said Ram Chakravarti, chief technology officer of Houston-based BMC Software. This paradigm is a recurring one, where a business’s IT system, software and data all sit within one digital platform, and it could become more pronounced as companies look for help in using generative AI. Companies say the problem with vendor lock-in, especially among cloud providers, is that they have difficulty moving their data to other platforms, lose negotiating power with other vendors, and must rely on one provider to keep its services online and secure.

Cloud providers, partly in response to complaints of lock-in, now offer tools to help customers move data between their own and competitors’ platforms. Businesses have increasingly signed up with more than one cloud provider to reduce their reliance on any single vendor. That is the strategy companies could end up taking with generative AI, where by using a “multiple generative AI approach,” they can avoid getting too entrenched in a particular platform. To be sure, many chief information officers have said they willingly accept such risks for the convenience, and potentially lower cost, of working with a single technology vendor or cloud provider.
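
A “multiple generative AI approach” can be sketched in code as a thin, provider-neutral layer that the application talks to, with vendors plugged in behind it. The example below is a minimal illustration under stated assumptions: `MultiProviderClient`, `provider_a`, and `provider_b` are hypothetical names, and the providers are stand-in functions rather than real vendor SDK calls.

```python
from typing import Callable, Dict, List

# A "provider" here is just a function from prompt to completion text.
Provider = Callable[[str], str]


class MultiProviderClient:
    """Routes prompts to interchangeable providers, with ordered fallback."""

    def __init__(self, providers: Dict[str, Provider], preference: List[str]):
        unknown = [name for name in preference if name not in providers]
        if unknown:
            raise ValueError(f"Unknown providers in preference list: {unknown}")
        self._providers = providers
        self._preference = preference

    def complete(self, prompt: str) -> str:
        errors = []
        for name in self._preference:
            try:
                return self._providers[name](prompt)
            except Exception as exc:  # a real client would catch narrower errors
                errors.append(f"{name}: {exc}")
        raise RuntimeError("All providers failed: " + "; ".join(errors))


# Hypothetical stand-ins; real code would wrap each vendor's SDK here.
def provider_a(prompt: str) -> str:
    raise ConnectionError("service unavailable")


def provider_b(prompt: str) -> str:
    return f"[provider_b] summary of: {prompt}"


client = MultiProviderClient(
    providers={"a": provider_a, "b": provider_b},
    preference=["a", "b"],
)
result = client.complete("quarterly cloud spending report")
print(result)
```

Because application code depends only on the `complete` method, swapping or reordering vendors is a configuration change rather than a rewrite. The trade-off is that vendor-specific features are harder to use through a common interface, which is part of why some CIOs accept lock-in for convenience.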

A significant challenge in incorporating generative AI is that the technology is changing so quickly, analysts have said, forcing CIOs to not only keep up with the pace of innovation, but also sift through potential data privacy and cybersecurity risks.

A company using its cloud provider’s premade tools and services, plus guardrails for protecting company data and reducing inaccurate outputs, can more quickly implement generative AI off-the-shelf, said Adnan Masood, chief AI architect at digital technology and IT services firm UST. “It has privacy, it has security, it has all the compliance elements in there. At that point, people don’t really have to worry so much about the logistics of things, but rather are focused on utilizing the model.”

For other companies, it is a conservative approach to use generative AI with a large cloud platform they already trust to hold sensitive company data, said Jon Turow, a partner at Madrona Venture Group. “It’s a very natural start to a conversation to say, ‘Hey, would you also like to apply AI inside my four walls?’”

End Quotes:

“Right now, the evidence is a little bit scarce about what the effect on revenue will be across the tech industry,” said James Tierney of Alliance Bernstein.

Brent Thill, an analyst at Jefferies, summed up the mood among investors: “The hype is here, the revenue is not. Behind the scenes, the whole industry is scrambling to figure out the business model [for generative AI]: how are we going to price it? How are we going to sell it?”

………………………………………………………………………………………………………………

References:

https://www.ft.com/content/56706c31-e760-44e1-a507-2c8175a170e8

https://www.wsj.com/articles/companies-weigh-growing-power-of-cloud-providers-amid-ai-boom-478c454a

https://www.techtarget.com/searchenterpriseai/definition/generative-AI?Offer=abt_pubpro_AI-Insider

Global Telco AI Alliance to progress generative AI for telcos

Curmudgeon/Sperandeo: Impact of Generative AI on Jobs and Workers

Bain & Co, McKinsey & Co, AWS suggest how telcos can use and adapt Generative AI

Generative AI Unicorns Rule the Startup Roost; OpenAI in the Spotlight

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Generative AI could put telecom jobs in jeopardy; compelling AI in telecom use cases

Qualcomm CEO: AI will become pervasive, at the edge, and run on Snapdragon SoC devices

Microsoft announces Azure Operator Nexus; Enea to deliver subscriber data management and traffic management in 4G & 5G

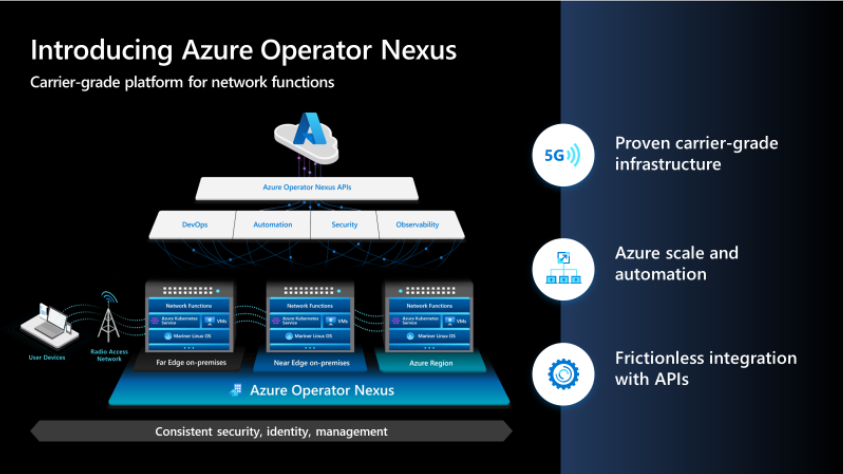

Microsoft launched its new next-generation hybrid cloud platform, Azure Operator Nexus, for network operators today. Azure Operator Nexus is an expansion of the Azure Operator Distributed Services private preview. It is a hybrid, carrier-grade cloud platform designed for the specific needs of the operator in running network functions such as packet core, virtualized radio access networks (vRAN), subscriber data management, and billing policy. Azure Operator Nexus is a first-party Microsoft product that builds on the functionality of its predecessor, adding essential features of key Microsoft technologies such as Mariner Linux, Hybrid AKS, and Arc while continuing to leverage Microsoft Services for security, lifecycle management, observability, DevOps and automation.

Azure Operator Nexus has already been released to our flagship customer, AT&T, and the results have been incredibly positive. Now, we’re selectively working with operators for potential deployments around the world. In this blog post, we provide an overview of the service from design and development to deployment and also discuss benefits the customers can expect, including research and analysis into the total cost of ownership (TCO).

Overview:

Microsoft Azure Operator Nexus leverages cloud technology to modernize and monetize operator network investments to deliver benefits such as:

- Lower overall TCO

- Greater operations efficiency and resiliency through AI and automation

- Improved security for highly-distributed, software-based networks

Azure Operator Nexus is a purpose-built service for hosting carrier-grade network functions. The service is specifically designed to bring carrier-grade performance and resiliency to traditional cloud infrastructures. Azure Operator Nexus delivers operator mobile core and vRAN network functions securely in on-premises (far-edge, near-edge, core datacenters) and on-Azure regions. This delivers a rich Azure experience, including visibility into logging, monitoring, and alerting for infrastructure components and workloads. Operators will have a consistent environment across both on-premises and Azure regions, allowing network function workloads to move seamlessly from one location to another based on application needs and economics.

Whether deployed on-premises or in Azure infrastructure, network functions may access an identical set of platform capabilities. On-premises, the service uses a curated hardware BOM of commercial off-the-shelf (COTS)-based servers, network switches, dedicated storage arrays, and terminal servers. Both deployment models are Linux-based, in alignment with network function needs, telecommunications industry trends, and relevant open-source communities. Additionally, the service supports both virtualized network functions (VNFs) and containerized network functions (CNFs).

The Azure Operator Nexus is based on the experience of a large telecommunications operator that has spent the past seven years virtualizing more than 75 percent of its network and overcoming the scale challenges of network-function virtualization. From this deep networking and virtualization experience, Operator Nexus was designed to:

- Provide the network function runtime that allows the fast-packet processing required to meet the carrier-grade-network demands of network functions supporting tens of millions of subscribers. Examples of requirements the platform delivers include optimized container support, flexible, fine-grained VM sizing, NUMA alignment to avoid UPI performance penalties, Huge Pages, CPU pinning, CPU isolation, Multiple Network Attachments, SR-IOV & OVS/DPDK host coexistence, SR-IOV trusted mode capabilities and complex scheduling support across failure domains.

- Ensure the quality, resiliency, and security required by network-function workloads through robust test automation.

- Deliver lifecycle automation to manage cloud instances and workloads from their creation through minor updates and configuration changes, and even major uplifts such as VMs and Kubernetes upgrades. This is accomplished via a unified and declarative framework driving low operational cost, high-quality performance, and minimal impact on mission-critical running network workloads.
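
The “unified and declarative framework” in the last item can be illustrated with a generic reconciliation sketch: the operator declares the desired state, and the platform works out the actions needed to reach it. This is not Azure Operator Nexus code or its API; the workload names, versions, and function names below are hypothetical.

```python
from typing import Dict, List, Tuple

# State maps a workload name to its version; both dictionaries are hypothetical.
State = Dict[str, str]
Action = Tuple[str, str, str]  # (operation, workload, version)


def plan_actions(desired: State, observed: State) -> List[Action]:
    """Compare desired and observed state and return the actions to converge."""
    actions: List[Action] = []
    for name, version in sorted(desired.items()):
        if name not in observed:
            actions.append(("create", name, version))
        elif observed[name] != version:
            actions.append(("upgrade", name, version))
    for name in sorted(observed):
        if name not in desired:
            actions.append(("delete", name, observed[name]))
    return actions


def apply_actions(observed: State, actions: List[Action]) -> State:
    """Return the new observed state after applying the planned actions."""
    new_state = dict(observed)
    for operation, name, version in actions:
        if operation == "delete":
            new_state.pop(name, None)
        else:
            new_state[name] = version
    return new_state


desired = {"packet-core": "2.1", "vran-du": "1.4", "policy": "3.0"}
observed = {"packet-core": "2.0", "policy": "3.0", "legacy-probe": "0.9"}

actions = plan_actions(desired, observed)
converged = apply_actions(observed, actions)
print(actions)
print(plan_actions(desired, converged))  # an empty plan means nothing is left to do
```

The appeal of the declarative style is that the same plan-and-apply loop handles creation, minor updates, and major upgrades, and running it again on a converged system produces no further changes.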

In addition to the performance-enhancing features, Azure Operator Nexus also includes a fully integrated solution of software-defined networking (SDN), low latency storage, and an integrated packet broker. The connectivity between the Operator premises and Azure leverages Express Route Local capabilities to address the transfer of large volumes of operational data in a cost-effective manner.

One of the key benefits of a hybrid cloud infrastructure is its ability to provide harmonized observability for both infrastructure and applications. This means one can easily monitor and troubleshoot any issues that may arise, ensuring systems are running smoothly and efficiently. The platform collects logs, metrics, and traces from network function virtualization infrastructure (NFVI) and network functions (NFs). It also offers a rich analytical, AI/ML-based toolset to develop descriptive and prescriptive analytics. Our goal with this observability architecture is to securely bring all operator data into a single data lake where it can be processed to provide a global-network view and harvested for operational and business insights.
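
The idea of bringing infrastructure and network-function telemetry into a single store for analysis can be sketched as follows. This is a simplified illustration, not the platform’s data model: the `TelemetryRecord` fields, source labels, site names, and metric values are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import fmean
from typing import Dict, List


@dataclass(frozen=True)
class TelemetryRecord:
    source: str   # "nfvi" or "nf" (hypothetical labels)
    site: str     # e.g. a far-edge or core location
    kind: str     # "log", "metric", or "trace"
    name: str
    value: float


def build_data_lake(*streams: List[TelemetryRecord]) -> List[TelemetryRecord]:
    """Merge per-source streams into one flat collection."""
    return [record for stream in streams for record in stream]


def mean_metric_by_site(lake: List[TelemetryRecord], metric: str) -> Dict[str, float]:
    """Descriptive analytics: average a named metric per site across sources."""
    grouped: Dict[str, List[float]] = defaultdict(list)
    for record in lake:
        if record.kind == "metric" and record.name == metric:
            grouped[record.site].append(record.value)
    return {site: fmean(values) for site, values in grouped.items()}


nfvi_stream = [
    TelemetryRecord("nfvi", "edge-1", "metric", "cpu_util", 0.62),
    TelemetryRecord("nfvi", "core-1", "metric", "cpu_util", 0.35),
]
nf_stream = [
    TelemetryRecord("nf", "edge-1", "metric", "cpu_util", 0.58),
    TelemetryRecord("nf", "edge-1", "log", "restart_count", 1.0),
]

lake = build_data_lake(nfvi_stream, nf_stream)
summary = mean_metric_by_site(lake, "cpu_util")
print(summary)
```

Once records from both layers share one schema, a network-wide view is a simple query over the combined collection instead of a join across separate monitoring systems.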

………………………………………………………………………………………………………………………………………………………………………

Stockholm, Sweden-based Enea is among the first to join the Microsoft Azure Operator Nexus Ready Program. It will deliver subscriber data management and traffic management in 4G and 5G for the new platform.

The introduction of Enea’s Telecom product portfolio will further enhance mobile operators’ ability to unlock the potential of 5G and provide more choice in pre-validated solutions to ensure a faster time to deployment for solutions. Enea’s telecom products include the Stratum Network Data layer, 5G Service Engine, Subscription Manager and Policy Manager, providing a range of subscriber data management, authorization and traffic management capabilities for both 4G & 5G mobile environments.

The Azure Operator Nexus program provides an API layer to automate and manage network functions. The Enea network functions will be integrated and validated at both the API interoperability level and the automated deployment level to give telecom operators the option to build, host and operate these containerized functions as part of a network in a cloud or hybrid cloud environment. As pre-validated services, the Enea network functions will be available in the Azure Marketplace.

“The integration with Microsoft Azure Operator Nexus demonstrates Enea’s commitment to multi-vendor telecom architecture, software-based solution and open interoperability,” said Osvaldo Aldao, Vice President of Product Management at Enea. Aldao added, “The addition of our Stratum network data layer as an open 5G UDR & UDSF will provide the data management foundation to drive a fully cloud native architecture with Azure Operator Nexus.”

“Enea joining the Microsoft Azure Operator Nexus Ready Program enables both network function expertise and deployment experience from their extensive portfolio,” said Ross Ortega, Vice President – Azure for Operators. “Enea’s pre-validated functions in the Azure Marketplace will be an essential building block for operator networks.”

References:

Microsoft Azure for Operators:

https://azure.microsoft.com/en-us/solutions/industries/telecommunications/#overview

Enea software portfolio:

Network Data Layer: https://www.enea.com/solutions/4g-5g-network-data-layer/

5G Applications: https://www.enea.com/solutions/data-management-applications/

Traffic Management: https://www.enea.com/solutions/traffic-management/4g-5g-user-plane-dual-mode-services/

About Enea:

Enea is a world-leading specialist in software for telecom and cybersecurity. The company’s cloud-native solutions connect, optimize, and secure services for mobile subscribers, enterprises, and the Internet of Things. More than 100 communication service providers and 4.5 billion people rely on Enea technologies every day.

Enea has strengthened its product portfolio and global market position by integrating a number of acquisitions, including Qosmos, Openwave Mobility, Aptilo Networks, and AdaptiveMobile Security.

Contact: Stephanie Huf, Chief Marketing Officer

Tech Mahindra and Microsoft partner to bring cloud-native 5G SA core network to global telcos

India’s Tech Mahindra and Microsoft have announced a collaboration to enable cloud-powered 5G SA core networks for telecom operators worldwide. As part of the collaboration, Tech Mahindra will provide its expertise, comprehensive solutions, and managed services offerings, including “Network Cloudification as a Service” and AIOps, to global telecom operators for their 5G SA core networks. AIOps will help operators combine big data and machine learning to automate network operations processes, including anomaly detection and the prediction of fault and performance issues.
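To make the anomaly detection step concrete, here is a minimal Python sketch that flags outliers in a network KPI series using a rolling z-score. This is one common, simple technique chosen for illustration; the announcement does not say which methods Tech Mahindra’s AIOps offering uses. The function name, window size, threshold, and sample latency values are all assumptions.

```python
from statistics import mean, stdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Return indices whose value deviates from the preceding `window`
    samples by more than `threshold` sample standard deviations.

    Hypothetical helper for illustration; not a vendor API.
    """
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat window gives no scale to measure against,
            # so this sketch skips the sample rather than divide by zero.
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Assumed example: round-trip latency in milliseconds with one spike.
latency_ms = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 50.0, 10.0, 9.8]
print(detect_anomalies(latency_ms))
```

Note that the spike inflates the standard deviation of the windows that follow it, so nearby samples are less likely to be flagged. Real deployments usually use more robust statistics or learned models for that reason.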

CP Gurnani, Managing Director and Chief Executive Officer, Tech Mahindra said, “Today, it is critical to leverage next-gen technologies to build relevant and resilient services and solutions for customers across the globe. At Tech Mahindra, we are well-positioned to help telecom operators realize the full potential of their networks and provide innovative and agile services to their customers while also helping them meet their ESG commitments. Our collaboration with Microsoft will further strengthen our service portfolio by combining our deep expertise across the telecom industry with Microsoft Cloud. Further to this collaboration, Tech Mahindra and Microsoft will work together to help telecom operators simplify and transform their operations in order to build green and secure networks by leveraging the power of cloud technologies.”

Tech Mahindra believes the 5G core network will enable use cases such as Augmented Reality (AR), Virtual Reality (VR), the Internet of Things (IoT), and edge computing. Of course, 5G URLLC performance requirements, especially ultra-low latency in the RAN and core network, must be met first, and they are not met at this time. The company will leverage the Microsoft Azure cloud for its sustainability solution, iSustain, to measure and monitor KPIs across all three aspects of ESG (environmental, social and governance). iSustain will help operators address the challenge of measuring and reducing carbon emissions from their networks while meeting the demands of countless energy-intensive digital technologies, from AR/VR to IoT.

Anant Maheshwari, President, Microsoft India said, “Harnessing the power of Microsoft Azure, telecom operators can provide more flexibility and scalability, save infrastructure cost, use AI to automate operations, and differentiate their customer offerings. The collaboration between Tech Mahindra and Microsoft will help our customers build green and secured networks with seamless experiences across the Microsoft cloud and the operator’s network. Azure provides operators with cloud solutions that enable them to create new revenue generating services and move existing services to the cloud. Through our collaboration with Tech Mahindra, Microsoft will further help telcos overcome challenges, drive innovation and build green and secured networks that provide seamless experiences by leveraging the power of Microsoft Cloud for Operators.”

The partnership is in line with Tech Mahindra’s NXT.NOW™ framework, which aims to enhance the ‘Human Centric Experience’. Tech Mahindra focuses on investing in emerging technologies and solutions that enable digital transformation and meet the evolving needs of the customer.

About Tech Mahindra:

Tech Mahindra offers innovative and customer-centric digital experiences, enabling enterprises, associates and the society to Rise. We are a USD 6 billion organization with 163,000+ professionals across 90 countries helping 1,279 global customers, including Fortune 500 companies. We are focused on leveraging next-generation technologies including 5G, Blockchain, Metaverse, Quantum Computing, Cybersecurity, Artificial Intelligence, and more, to enable end-to-end digital transformation for global customers. Tech Mahindra is the only Indian company in the world to receive the HRH The Prince of Wales’ Terra Carta Seal for its commitment to creating a sustainable future. We are the fastest growing brand in ‘brand strength’ and amongst the top 7 IT brands globally. With the NXT.NOW™ framework, Tech Mahindra aims to enhance ‘Human Centric Experience’ for our ecosystem and drive collaborative disruption with synergies arising from a robust portfolio of companies. Tech Mahindra aims at delivering tomorrow’s experiences today and believes that the ‘Future is Now.’

References: