Google Cloud

Palo Alto Networks and Google Cloud expand partnership with advanced AI infrastructure and cloud security

- End-to-End AI Security from Code to Cloud: Customers can protect live AI workloads and data on Google Cloud, including instances on Vertex AI and Agent Engine, using Palo Alto Networks Prisma AIRS. Securing key developer tools like the Agent Development Kit (ADK) with Prisma AIRS provides a secure foundation for developing next-generation AI applications on Google Cloud. This includes capabilities such as AI Posture Management, AI Runtime Security™, AI Agent Security for autonomous systems, AI Red Teaming, and AI Model Security.

- AI-Driven, Next-Generation Software Firewall (SWFW): Palo Alto Networks VM-Series firewalls, designed for securing cloud and virtualized environments via deep packet inspection and Threat Prevention, will feature deep integrations with Google Cloud to help customers maintain robust security policies and accelerate cloud adoption.

- AI-Driven Secure Access Service Edge (SASE) Platform: Palo Alto Networks Prisma SASE platform secures access and networking for remote users and branch offices. Deeper integration with Google Cloud’s native AI services will improve the user experience by leveraging Google’s network for Prisma Access execution and utilizing Google Cloud Interconnect for consistent security policies across multi-cloud WAN infrastructure.

- Simplified and Unified Security Experience: The deep engineering alignment ensures that joint solutions are pre-vetted and optimized for seamless interoperability, reducing integration complexity and operational overhead for security teams. This enables faster deployment of protective measures, simplified compliance, and a unified security posture across the entire hybrid multicloud ecosystem.

- BJ Jenkins, President, Palo Alto Networks: “The critical question for modern governance boards is how to leverage AI without introducing undue risk. This partnership provides the definitive answer. We are eliminating the operational friction between security and development, delivering a unified platform where cutting-edge security is an inherent component of innovation. By embedding our AI-powered security deeply into the Google Cloud infrastructure, we are transforming the platform into a proactive defense system.”

- Matt Renner, President and Chief Revenue Officer, Google Cloud: “Enterprises increasingly rely on the combined capabilities of Google Cloud and Palo Alto Networks for seamless application and data security. This partnership expansion guarantees our joint clientele access to the necessary solutions for securing their most critical AI infrastructure and developing secure-by-design AI agents from inception.”

About Palo Alto Networks:

As the global AI and cybersecurity leader, Palo Alto Networks (NASDAQ: PANW) is dedicated to protecting our digital way of life via continuous innovation. Trusted by more than 70,000 organizations worldwide, we provide comprehensive AI-powered security solutions across network, cloud, security operations and AI, enhanced by the expertise and threat intelligence of Unit 42®. Our focus on platformization allows enterprises to streamline security at scale, ensuring protection fuels innovation. Explore more at www.paloaltonetworks.com.

Palo Alto Networks, Prisma, Prisma AIRS, and the Palo Alto Networks logo are trademarks of Palo Alto Networks, Inc. in the United States and in jurisdictions throughout the world. All other trademarks, trade names, or service marks used or mentioned herein belong to their respective owners.

About Google Cloud:

Google Cloud is the new way to the cloud, providing AI, infrastructure, developer, data, security, and collaboration tools built for today and tomorrow. Google Cloud offers a powerful, fully integrated and optimized AI stack with its own planet-scale infrastructure, custom-built chips, generative AI models and development platform, as well as AI-powered applications, to help organizations transform. Customers in more than 200 countries and territories turn to Google Cloud as their trusted technology partner.

- Learn more about Palo Alto Networks and the Google Cloud partnership here.

- Please see References below for Google Cloud initiatives

……………………………………………………………………………………………………………………………………………………………….

References:

https://www.paloaltonetworks.com/engage/global-multi-platform-security/palo-alto-devops-in

NTT Data and Google Cloud partner to offer industry-specific cloud and AI solutions

Google Cloud targets telco network functions, while AWS and Azure are in holding patterns

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Google Cloud announces TalayLink subsea cable and new connectivity hubs in Thailand and Australia

Ericsson and Google Cloud expand partnership with Cloud RAN solution

Google Cloud infrastructure enhancements: AI accelerator, cross-cloud network and distributed cloud

T-Mobile and Google Cloud collaborate on 5G and edge compute

Cloud RAN with Google Distributed Cloud Edge; Strategy: host network functions of other vendors on Google Cloud

Casa Systems and Google Cloud strengthen partnership to progress cloud-native 5G SA core, MEC, and mobile private networks

Google Cloud expands footprint with 34 global regions

Google Cloud announces TalayLink subsea cable and new connectivity hubs in Thailand and Australia

In yet another example of hyperscaler all-inclusive infrastructure design and development, Google Cloud has announced plans for a new subsea cable system, TalayLink, which will connect Australia to Thailand. It’s part of the company’s wider Australia Connect initiative to expand infrastructure across the Indo-Pacific. While Google has not provided a firm completion date, the company confirmed that TalayLink, together with planned connectivity hubs in the Maldives and Christmas Island, will form a critical, resilient network backbone connecting Australia, Southeast Asia, Africa, and the Middle East.

- Mandurah, Western Australia: This facility provides essential network diversity, acting as a resilient alternative to existing landing points near Perth.

- South Thailand: Strategically located at a major subsea cable crossroads, this hub will be developed in partnership with AIS, with International Gateway Company (IGC), a subsidiary of ALT Telecom, managing the cable landing specifics.

In addition to the TalayLink subsea cable system, Google announced plans for new connectivity hubs in Western Australia (Mandurah) and South Thailand. These strategic investments are designed to future-proof regional connectivity and accelerate the delivery of advanced digital and AI services through cable switching, content caching, and colocation capabilities. The Mandurah connectivity hub will establish a diverse landing point from Perth, where the majority of existing subsea cables currently land in Western Australia. In South Thailand, an established crossroads for subsea cables, we are partnering with colocation provider AIS to accelerate our deployment and benefit from existing local infrastructure investments.

“The TalayLink cable will serve as a pivotal piece of digital infrastructure, enhancing Thailand’s connectivity and resilience. Together with Google’s upcoming Google Cloud region and data center in Thailand, these forward‑looking investments will significantly expand regional network and computing capacity, while firmly positioning Thailand as a critical digital gateway for next‑generation cloud and AI innovation in Southeast Asia. The Thailand Board of Investment (BOI) is fully committed to supporting Google’s investment in Thailand, fostering the growth of the nation’s digital economy, and advancing digital skills to ensure inclusive and sustainable development.” – Narit Therdsteerasukdi, Secretary General, Thailand Board of Investment (BOI).

“AIS is excited to be extending our relationship with Google as a strategic partner by supporting the connectivity hub in Southern Thailand. The combination of Google’s new, diverse submarine cable path and AIS’s high-reliability colocation capabilities will ensure the digital infrastructure in the region is capable of supporting the country’s AI strategy.” – Pratthana Leelapanang, Chief Executive Officer, AIS

“International Gateway Company (IGC), a subsidiary of ALT Telecom PLC, is delighted to be a key Google partner in landing a new submarine cable in Thailand. IGC brings to the project its extensive experience operating a nationwide network and international cable gateway. This cable is an important new piece of digital infrastructure that will accelerate Thailand’s ambitious digital economy development strategy.” – Preeyaporn Tangpaosak, President, ALT Telecom

When they’re complete, TalayLink and the connectivity hubs will support network resilience across Australia, Africa and Southeast Asia. When combined with our previously announced connectivity hubs in the Maldives and Christmas Island, these investments will provide onward connectivity across the Indian Ocean and beyond to the Middle East.

This new cable and our regional connectivity hubs will directly support Western Australia’s roadmap to secure a safe, inclusive digital future, as well as the Royal Thai Government’s objective of economic transformation through AI and digital inclusion. We are excited to contribute to the economic and social growth across Australia, Thailand and Southeast Asia through resilient, reliable internet infrastructure.

Google’s Bosun subsea cable to link Darwin, Australia to Christmas Island in the Indian Ocean

Google’s Equiano subsea cable lands in Namibia en route to Cape Town, South Africa

NTT Data and Google Cloud partner to offer industry-specific cloud and AI solutions

Google Cloud targets telco network functions, while AWS and Azure are in holding patterns

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Ericsson and Google Cloud expand partnership with Cloud RAN solution

TechCrunch: Meta to build $10 billion Subsea Cable to manage its global data traffic

Orange Deploys Infinera’s GX Series to Power AMITIE Subsea Cable

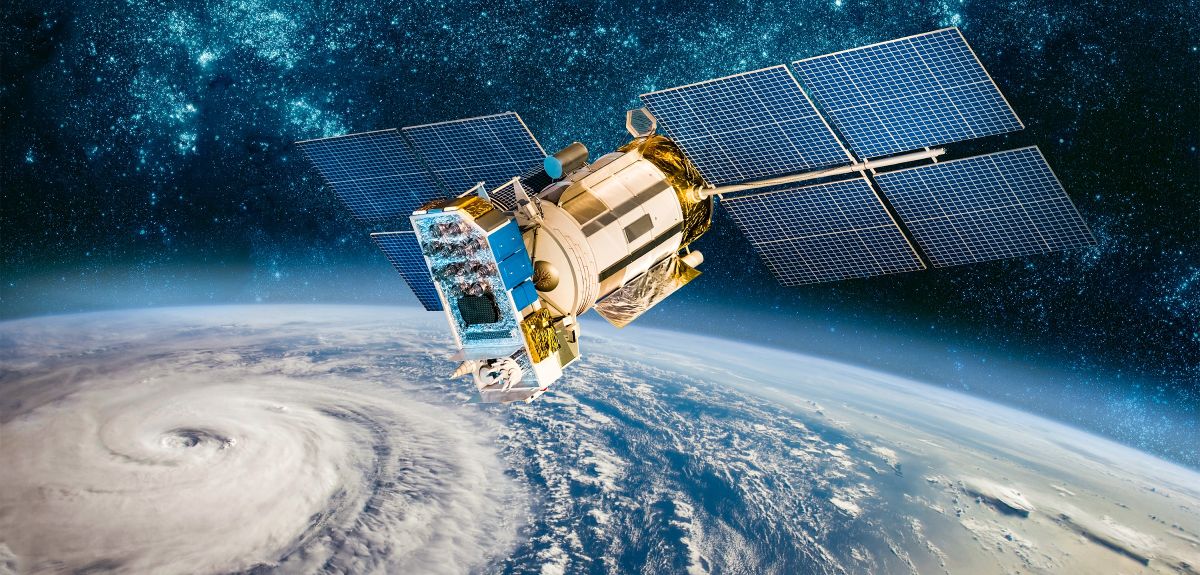

Google’s Project Suncatcher: a moonshot project to power ML/AI compute from space

Overview:

Google’s Project Suncatcher will equip solar-powered satellite constellations with TPUs and free-space optical links to one day scale machine learning (ML) and AI compute in space. The sun is the ultimate energy source, and in the right orbit solar panels can be far more productive than on Earth and generate power nearly continuously, significantly reducing the need for heavy batteries. With energy use a key constraint for terrestrial data centers, especially given the seemingly relentless need to scale up for ML/AI, space might be the right place for AI compute.

Goals and Objectives of Project Suncatcher:

References:

https://research.google/blog/exploring-a-space-based-scalable-ai-infrastructure-system-design/

https://blog.google/technology/research/google-project-suncatcher/

https://services.google.com/fh/files/misc/suncatcher_paper.pdf

Muon Space in deal with Hubble Network to deploy world’s first satellite-powered Bluetooth network

Nokia’s Bell Labs to use adapted 4G and 5G access technologies for Indian space missions

5G connectivity from space: Exolaunch contract with Sateliot for launch and deployment of LEO satellites

Hubble Network Makes Earth-to-Space Bluetooth Satellite Connection; Life360 Global Location Tracking Network

AT&T deal with AST SpaceMobile to provide wireless service from space

NTT Data and Google Cloud partner to offer industry-specific cloud and AI solutions

NTT Data and Google Cloud plan to combine their expertise in AI and the cloud to offer customized solutions to accelerate enterprise transformation across sectors including banking, insurance, manufacturing, retail, healthcare, life sciences and the public sector. The partnership will include agentic AI solutions, security, sovereign cloud and developer tools. This collaboration combines NTT DATA’s deep industry expertise in AI, cloud-native modernization and data engineering with Google Cloud’s advanced analytics, AI and cloud technologies to deliver tailored, scalable enterprise solutions.

With a focus on co-innovation, the partnership will drive industry-specific cloud and AI solutions, leveraging NTT DATA’s proven frameworks and best practices along with Google Cloud’s capabilities to deliver customized solutions backed by deep implementation expertise. Significant joint go-to-market investments will support seamless adoption across key markets.

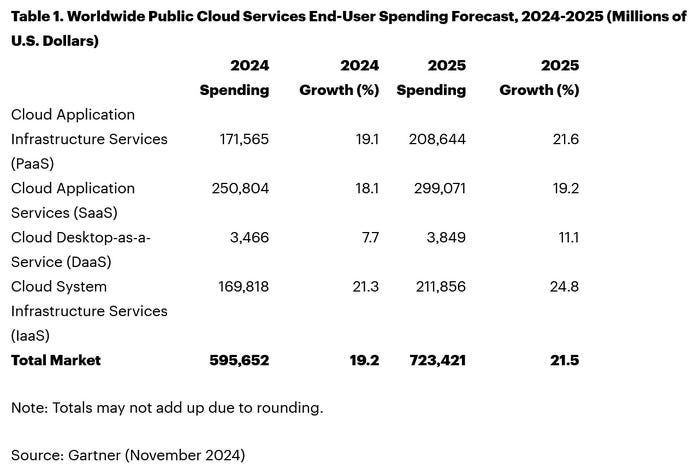

According to Gartner®, worldwide end-user spending on public cloud services is forecast to reach $723 billion in 2025, up from $595.7 billion in 2024. The growing use of AI in IT and business operations is accelerating reliance on modern cloud infrastructure, highlighting the critical importance of this strategic global partnership.
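As a quick check on the Gartner figures above, the implied year-over-year growth in public cloud spending can be computed directly. The short Python sketch below uses only the two dollar amounts quoted in the text; the helper name `yoy_growth_pct` is our own and is not part of any Gartner methodology.

```python
def yoy_growth_pct(previous: float, current: float) -> float:
    """Percentage change from `previous` to `current`."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current / previous - 1.0) * 100.0


# Gartner end-user public cloud spending forecast, in billions of US dollars.
spend_2024 = 595.7
spend_2025 = 723.0

# Absolute and relative change between the two years.
print(f"Increase: ${spend_2025 - spend_2024:.1f} billion")
print(f"Implied growth, 2024 to 2025: {yoy_growth_pct(spend_2024, spend_2025):.1f}%")
```

Running it shows an increase of about $127.3 billion, or roughly 21.4% growth in a single year.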

“This collaboration with Google Cloud represents a significant milestone in our mission to drive innovation and digital transformation across industries,” said Marv Mouchawar, Head of Global Innovation, NTT DATA. “By combining NTT DATA’s deep expertise in AI, cloud-native modernization and enterprise solutions with Google Cloud’s advanced technologies, we are helping businesses accelerate their AI-powered cloud adoption globally and unlock new opportunities for growth.”

“Our partnership with NTT DATA will help enterprises use agentic AI to enhance business processes and solve complex industry challenges,” said Kevin Ichhpurani, President, Global Partner Ecosystem at Google Cloud. “By combining Google Cloud’s AI with NTT DATA’s implementation expertise, we will enable customers to deploy intelligent agents that modernize operations and deliver significant value for their organizations.”

Photo Credit: Phil Harvey/Alamy Stock Photo

In financial services, this collaboration will support regulatory compliance and reporting through NTT DATA solutions like Regla, which leverages Google Cloud’s scalable AI infrastructure. In hospitality, NTT DATA’s Virtual Travel Concierge enhances customer experience and drives sales with 24×7 multilingual support, real-time itinerary planning and intelligent travel recommendations. It uses Google’s Gemini models to drive personalization across more than 3 million conversations per month.

Key focus areas include:

- Industry-specific agentic AI solutions: NTT DATA will build new industry solutions that transform analytics, decision-making and client experiences using Google Agentspace, Google’s Gemini models, secure data clean rooms and modernized data platforms.

- AI-driven cloud modernization: Accelerating enterprise modernization with Google Distributed Cloud for secure, scalable modernization built and managed on NTT DATA’s global infrastructure, from data centers to edge to cloud.

- Next-generation application and security modernization: Strengthening enterprise agility and resilience through mainframe modernization, DevOps, observability, API management, cybersecurity frameworks and SAP on Google Cloud.

- Sovereign cloud innovation: Delivering secure, compliant solutions through Google Distributed Cloud in both air-gapped and connected deployments. Air-gapped environments operate offline for maximum data isolation. Connected deployments enable secure integration with cloud services. These scenarios meet data sovereignty and regulatory demands in sectors such as finance, government and healthcare without compromising innovation.

- Google Distributed Cloud sandbox environment: A digital playground where developers can build, test and deploy industry-specific and sovereign cloud solutions. This sandbox will help teams upskill through hands-on training and accelerate time to market with Google Distributed Cloud technologies through preconfigured, ready-to-deploy templates.

NTT DATA will support these innovations through a full-stack suite of services including advisory, building, implementation and ongoing hosting and managed services.

By combining NTT DATA’s proven blueprints and delivery expertise with Google Cloud’s technology, the partnership will accelerate the development of repeatable, scalable solutions for enterprise transformation. At the heart of this innovation strategy is Takumi, NTT DATA’s GenAI framework that guides clients from ideation to enterprise-wide deployment. Takumi integrates seamlessly with Google Cloud’s AI stack, enabling rapid prototyping and operationalization of GenAI use cases.

This initiative expands NTT DATA’s Smart AI Agent Ecosystem, which unites strategic technology partnerships, specialized assets and an AI-ready talent engine to help clients deploy and manage responsible, business-driven AI at scale.

Accelerating global delivery with a dedicated Google Cloud Business Group:

To achieve excellence, NTT DATA has established a dedicated global Google Cloud Business Group comprising thousands of engineers, architects and advisory consultants. This global team at NTT DATA will work in close collaboration with Google Cloud teams to help clients adopt and scale AI-powered cloud technologies.

NTT DATA is also investing in advanced training and certification programs ensuring teams across sales, pre-sales and delivery are equipped to sell, secure, migrate and implement AI-powered cloud solutions. The company aims to certify 5,000 engineers in Google Cloud technology, further reinforcing its role as a leader in cloud transformation on a global scale.

Additionally, both companies are co-investing in global sales and go-to-market campaigns to accelerate client adoption across priority industries. By aligning technical, sales and marketing expertise, the companies aim to scale transformative solutions efficiently across global markets.

This global partnership builds on NTT DATA and Google Cloud’s 2024 co-innovation agreement in APAC. It also builds on NTT DATA’s acquisition of Niveus Solutions, a leading Google Cloud specialist recognized with three 2025 Google Cloud Awards: “Google Cloud Country Partner of the Year – India”, “Google Cloud Databases Partner of the Year – APAC” and “Google Cloud Country Partner of the Year – Chile”, which further validates NTT DATA’s commitment to cloud excellence and innovation.

“We’re excited to see the strengthened partnership between NTT DATA and Google Cloud, which continues to deliver measurable impact. Their combined expertise has been instrumental in migrating more than 380 workloads to Google Cloud to align with our cloud-first strategy,” said José Luis González Santana, Head of IT Infrastructure, Carrefour. “By running SAP HANA on Google Cloud, we have consolidated 100 legacy applications to create a powerful, modernized e-commerce platform across 200 hypermarkets. This transformation has given us the agility we need during peak times like Black Friday and enabled us to launch new services faster than ever. Together, NTT DATA and Google Cloud are helping us deliver more connected, seamless experiences for our customers.”

About NTT DATA:

NTT DATA is a $30+ billion trusted global innovator of business and technology services. We serve 75% of the Fortune Global 100 and are committed to helping clients innovate, optimize and transform for long-term success. As a Global Top Employer, we have experts in more than 50 countries and a robust partner ecosystem of established and start-up companies. Our services include business and technology consulting, data and artificial intelligence, industry solutions, as well as the development, implementation and management of applications, infrastructure and connectivity. We are also one of the leading providers of digital and AI infrastructure in the world. NTT DATA is part of NTT Group, which invests over $3.6 billion each year in R&D to help organizations and society move confidently and sustainably into the digital future.

Resources:

https://www.nttdata.com/global/en/news/press-release/2025/august/081300

Google Cloud targets telco network functions, while AWS and Azure are in holding patterns

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Ericsson and Google Cloud expand partnership with Cloud RAN solution

NTT & Yomiuri: ‘Social Order Could Collapse’ in AI Era

Google Cloud targets telco network functions, while AWS and Azure are in holding patterns

Overview:

Network operators have used public clouds for analytics and IT, including their business and operational support systems, but the vast majority have been reluctant to rely on hyper-scaler public clouds to host their network functions. However, there have been a few exceptions:

1. AWS counts Boost Mobile, Dish Network, Swisscom and Telefónica Germany as network operators running part of their 5G network in its public cloud. In a cloud-native 5G standalone (SA) core network, the network functions are virtualized and run as software, rather than relying on dedicated hardware.

a] Dish Network is using Nokia’s cloud-native, 5G standalone core software, which is deployed on the AWS public cloud. This includes software for subscriber data management, device management, packet core, voice and data core, and integration services. Dish uses several AWS services, including Regions, Local Zones and Outposts, to host its 5G core network and related components.

b] Swisscom is migrating its core applications, including OSS/BSS and portions of its 5G core, to AWS according to Business Wire. This is part of a broader digital transformation strategy to modernize its infrastructure and services.

c] Telefónica Germany (O2 Telefónica) has moved its 5G core network to Amazon Web Services (AWS). This move, in collaboration with Nokia, makes them the first telecom company to switch an existing 5G core to a public cloud provider, specifically AWS. They launched their 5G cloud core, built entirely in the cloud, in July 2024, initially serving around one million subscribers.

2. Microsoft’s Azure cloud runs the 5G core networks of AT&T and the Middle East’s Etisalat. AT&T is using Microsoft’s Azure Operator Nexus platform to run its 5G core network, including both standalone (SA) and non-standalone (NSA) deployments, according to AT&T and Microsoft. This is part of a strategic partnership under which AT&T is shifting its 5G mobile network to the Microsoft cloud. However, AT&T’s 5G core network is not yet commercially available nationwide.

3. Ericsson has partnered with Google Cloud to offer 5G core as a service (5GCaaS) on Google Cloud’s infrastructure. This allows operators to deploy and manage their 5G core network functions on Google’s cloud rather than relying solely on traditional on-premises infrastructure. This on-demand service, recently launched by Ericsson with Google, seems aimed mainly at smaller telcos keen to avoid big upfront costs, or at specific scenarios. To address much bigger needs, Google has a competitor to AWS Outposts that it markets under the Google Distributed Cloud (GDC) brand.

A serious concern with this Ericsson-Google offering is cloud provider lock-in, i.e. that a telco would not be able to move its 5GCaaS provided by Ericsson to an alternative cloud platform. Going “native,” in this case, meant building on top of Google-specific technologies, which rules out any prospect of a “lift and shift” to AWS, Microsoft or someone else, said Eric Parsons, Ericsson’s vice president of emerging segments in core networks, on a recent call with Light Reading.

……………………………………………………………………………………………………………………………………………………………………….

Google Cloud for Network Functions:

Angelo Libertucci, Google’s global head of telecom, told Light Reading that the “timing is right” for a Google campaign targeting telco networks after years of sluggish industry progress. “The pressures that telcos are dealing with – the higher capex, lower ARPU [average revenue per user], competitiveness – it’s been a tough two years and there have been a number of layoffs, at least in North America,” he said at last week’s Digital Transformation World event in Copenhagen.

“We run the largest private network on the planet,” said Libertucci. “We have over 2 million miles of fiber.” Services for more than a billion users are supported “with a fraction of the people that even the smallest regional telcos have, and that’s because everything we do is automated,” he claimed.

“There haven’t been that many network functions that run in the cloud – you can probably name them on less than four fingers,” he said. “So we don’t think we’ve really missed the boat yet on that one.” Indeed, most network functions are still deployed on telco premises (aka central offices).

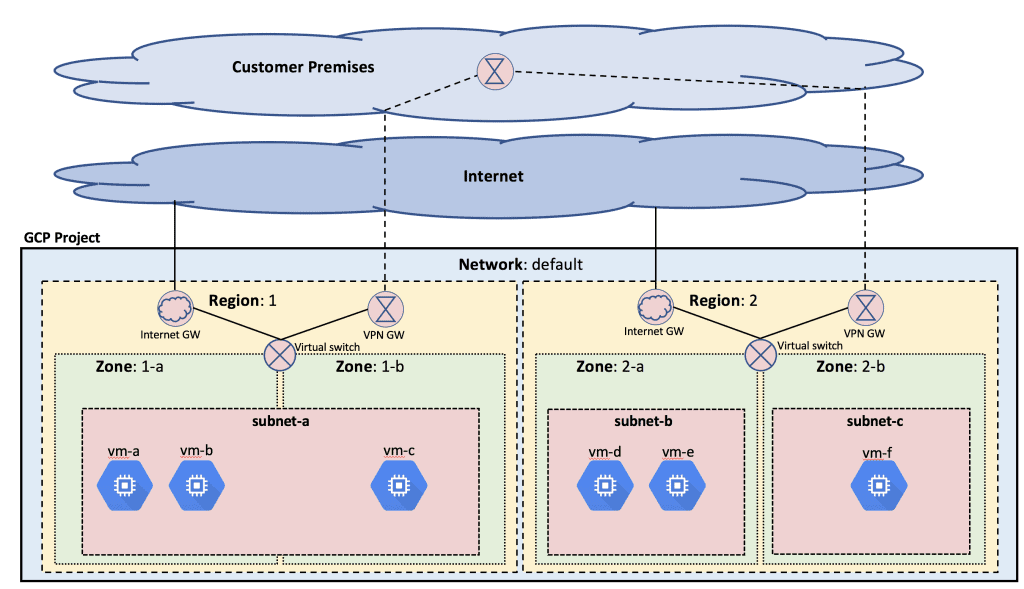

Image Credit: Google Cloud Platform

Earlier this year, Deutsche Telekom partnered with Google to build an agentic AI called RAN Guardian. Built using Gemini 2.0 in Vertex AI from Google Cloud, the agent can analyze network behavior, detect performance issues, and implement corrective actions without manual intervention to improve network reliability, reduce operational costs, and enhance customer experiences. Deutsche Telekom keeps the network data at its own facilities but relies on interconnection to Google Cloud for these functions.

“Do I then decide to keep it (network functions and data) on-prem and maintain that pre-processing pipeline that I have? Or is there a cost benefit to just run it in cloud, because then you have all the native integration? You don’t have any interconnect, you have all the data for any use case that you ever wanted or could think of. It’s much easier and much more seamless,” said Libertucci. Such autonomous networking, in his view, is now the killer use case for the public cloud.

Yet many telco executives believe that public cloud facilities are incapable of handling certain network functions. European telcos, including BT, Deutsche Telekom, Orange and Vodafone, have invested in their own private cloud platforms for telco workloads. Regulators in some countries may also block operators from using public clouds: BT said this year that local legislation now prevents it from using the public cloud for network functions. European authorities increasingly talk of the need for a “sovereign cloud” under the full control of local players.

Google does claim to have a set of “sovereign cloud” products that ensure data is stored in the country where the telco operates. “We have fully air-gapped sovereign cloud offerings with Google Cloud binaries that we’ve done in partnership with telcos for years now,” said Libertucci. The open question is whether these offerings will always meet the definition of sovereignty. “If sovereign means you can’t use an American-owned organization, then that’s another part of the definition that somehow we will have to find a way to address,” he added. Portability is another challenge. “If you are cloud-native, it’s supposed to be easier to move to any cloud, but with telco it’s not that simple because it’s a very performance-oriented workload,” said Libertucci.

What’s likely, then, is that operators will assign whole regions to specific combinations of public cloud providers and telco vendors, he thinks, as they have done on the network side. “You see telcos awarding a region to Huawei and another to Ericsson with complete separation between them. They might choose to go down that route with network vendors as well and so you may have an Ericsson and Google part of the network.”

“We’re a platform company, we’re a data company and we’re an AI company,” said Libertucci. “I think we’re happy now with being a platform others develop on.”

………………………………………………………………………………………………………………………………………………………………………………….

Cloud RAN Disappoints:

Outside a trial with Ericsson almost two years ago, there is not much sign of Google activity in cloud RAN, the use of general-purpose chips and cloud platforms to support RAN workloads. “So far, no one’s really pushed us down into that area,” said Libertucci. AWS, by contrast, has this year begun to show off an Outposts server built around one of its own Graviton central processing units for cloud RAN. Currently, however, it does not appear to be supporting a cloud RAN deployment for any telco.

………………………………………………………………………………………………………………………………………………………………………………

References:

https://www.lightreading.com/cloud/google-preps-public-cloud-charge-at-telecom-as-microsoft-wobbles

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Does AI change the business case for cloud networking?

For several years now, the big cloud service providers – Amazon Web Services (AWS), Microsoft Azure, and Google Cloud – have tried to get wireless network operators to run their 5G SA core network, edge computing and various distributed applications on their cloud platforms. For example, Amazon’s AWS public cloud, Microsoft’s Azure for Operators, and Google’s Anthos for Telecom were all intended to get network operators to move their core network functions into a hyperscaler cloud.

AWS had early success with Dish Network’s 5G SA core network which has all its functions running in Amazon’s cloud with fully automated network deployment and operations.

Conversely, AT&T has yet to commercially deploy its 5G SA Core network on the Microsoft Azure public cloud. Also, users on AT&T’s network have experienced difficulties accessing Microsoft 365 and Azure services. Those incidents were often traced to changes within the network’s managed environment. As a result, Microsoft has drastically reduced its early telecom ambitions.

Several pundits now say that AI will significantly strengthen the business case for cloud networking by enabling more efficient resource management, advanced predictive analytics, improved security, and automation, ultimately leading to cost savings, better performance, and faster innovation for businesses utilizing cloud infrastructure.

“AI is already a significant traffic driver, and AI traffic growth is accelerating,” wrote analyst Brian Washburn in a market research report for Omdia (owned by Informa). “As AI traffic adds to and substitutes conventional applications, conventional traffic year-over-year growth slows. Omdia forecasts that in 2026–30, global conventional (non-AI) traffic will be about 18% CAGR [compound annual growth rate].”

Omdia forecasts 2031 as “the crossover point where global AI network traffic exceeds conventional traffic.”

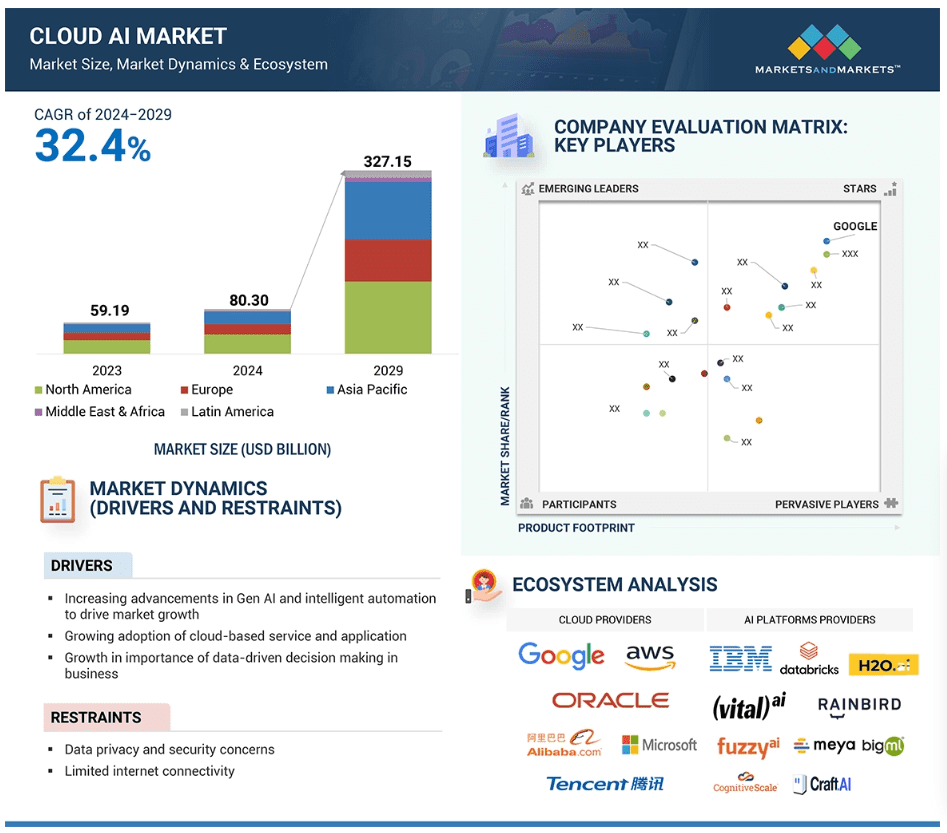

Markets & Markets forecasts the global cloud AI market (which includes cloud AI networking) will grow at a CAGR of 32.4% from 2024 to 2029.
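The CAGR figures quoted above compound, so the cumulative effect is larger than the headline rate suggests. Below is a minimal Python sketch of the standard formula, multiplier = (1 + r)^n, and its inverse. The function names are illustrative, and treating Omdia’s 2026–30 window as four annual steps is our assumption; the Markets & Markets figure spans five annual steps (2024 to 2029).

```python
def cagr_multiplier(rate: float, years: int) -> float:
    """Cumulative growth factor after compounding `rate` annually for `years` steps."""
    return (1.0 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Inverse of cagr_multiplier: the constant annual rate turning `start` into `end`."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


# Markets & Markets: 32.4% CAGR for cloud AI, 2024 to 2029 (five annual steps).
cloud_ai = cagr_multiplier(0.324, 5)
# Omdia: about 18% CAGR for conventional traffic; four steps for 2026-30 is an assumption.
conventional = cagr_multiplier(0.18, 4)

print(f"Cloud AI market: {cloud_ai:.2f}x; conventional traffic: {conventional:.2f}x")
# Round-trip check: recover the annual rate from the cumulative multiplier.
print(f"Recovered cloud AI CAGR: {implied_cagr(1.0, cloud_ai, 5):.3f}")
```

Under these assumptions, the cloud AI market would grow roughly 4.07x over 2024–29, while conventional traffic would grow roughly 1.94x over 2026–30.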

AI is said to enhance cloud networking in these ways:

- Optimized resource allocation: AI algorithms can analyze real-time data to dynamically adjust cloud resources like compute power and storage based on demand, minimizing unnecessary costs.

- Predictive maintenance: By analyzing network patterns, AI can identify potential issues before they occur, allowing for proactive maintenance and preventing downtime.

- Enhanced security: AI can detect and respond to cyber threats in real-time through anomaly detection and behavioral analysis, improving overall network security.

- Intelligent routing: AI can optimize network traffic flow by dynamically routing data packets to the most efficient paths, improving network performance.

- Automated network management: AI can automate routine network management tasks, freeing up IT staff to focus on more strategic initiatives.
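The anomaly detection behind the predictive maintenance and enhanced security items can be illustrated with a deliberately simple stand-in: a rolling z-score check on a network KPI such as latency. This is a plain statistical baseline, not a machine learning model, and the function name, window size, threshold and sample latency values are all hypothetical; real systems would typically use richer models.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List


def rolling_zscore_anomalies(
    samples: Iterable[float], window: int = 5, threshold: float = 3.0
) -> List[int]:
    """Return indices of samples that deviate from the mean of the trailing
    `window` samples by more than `threshold` standard deviations."""
    history: Deque[float] = deque(maxlen=window)
    anomalies: List[int] = []
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # A flat window (sigma == 0) gives no meaningful z-score, so skip it.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        # Every sample, including flagged ones, joins the trailing window.
        history.append(value)
    return anomalies


# Hypothetical per-minute latency samples (ms) for one cell site.
latency_ms = [20, 21, 19, 22, 20, 21, 20, 95, 21, 20]
print(rolling_zscore_anomalies(latency_ms))  # [7]
```

Only the 95 ms spike at index 7 is flagged. Because the spike enters the trailing window after it has been scored, it inflates the baseline for the next few samples; a production detector might exclude flagged points from the history.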

The pitch is that AI will enable businesses to leverage the full potential of cloud networking by providing a more intelligent, adaptable, and cost-effective solution. Well, that remains to be seen. Google’s new global industry lead for telecom, Angelo Libertucci, told Light Reading:

“Now enter AI,” he continued. “With AI … I really have a power to do some amazing things, like enrich customer experiences, automate my network, feed the network data into my customer experience virtual agents. There’s a lot I can do with AI. It changes the business case that we’ve been running.”

“Before AI, the business case was maybe based on certain criteria. With AI, it changes the criteria. And it helps accelerate that move [to the cloud and to the edge],” he explained. “So, I think that work is ongoing, and with AI it’ll actually be accelerated. But we still have work to do with both the carriers and, especially, the network equipment manufacturers.”

Google Cloud last week announced several new AI-focused agreements with companies such as Amdocs, Bell Canada, Deutsche Telekom, Telus and Vodafone Italy.

As IEEE Techblog reported here last week, Deutsche Telekom is using Google Cloud’s Gemini 2.0 in Vertex AI to develop a network AI agent called RAN Guardian. That AI agent can “analyze network behavior, detect performance issues, and implement corrective actions to improve network reliability and customer experience,” according to the companies.

And, of course, there’s all the buzz over AI-RAN, and we plan to cover the MWC 2025 announcements expected in that space next week.

https://www.lightreading.com/cloud/google-cloud-doubles-down-on-mwc

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

Generative AI in telecom; ChatGPT as a manager? ChatGPT vs Google Search

Deutsche Telekom and Google Cloud partner on “RAN Guardian” AI agent

Deutsche Telekom and Google Cloud today announced a new partnership to improve Radio Access Network (RAN) operations through the development of a network AI agent. Built using Gemini 2.0 in Vertex AI from Google Cloud, the agent can analyze network behavior, detect performance issues, and implement corrective actions to improve network reliability, reduce operational costs, and enhance customer experiences.

Deutsche Telekom says that as telecom networks become increasingly complex, traditional rule-based automation falls short in addressing real-time challenges. Its solution is agentic AI, which leverages large language models (LLMs) and advanced reasoning frameworks to create intelligent agents that can think, reason, act, and learn independently.

The RAN Guardian agent, which has been tested and verified at Deutsche Telekom, collaborates in a human-like manner, detecting network anomalies and executing self-healing actions to optimize RAN performance. It will be exhibited at next week’s Mobile World Congress (MWC) in Barcelona, Spain.

–>This cooperative initiative appears to be a first step towards building autonomous and self-healing networks.

In addition to Gemini 2.0 in Vertex AI, the RAN Guardian also uses Cloud Run, BigQuery, and Firestore to help deliver:

- Autonomous RAN performance monitoring: The RAN Guardian will continuously analyze key network parameters in real time to predict and detect anomalies.

- AI-driven issue classification and routing: The agent will identify and prioritize network degradations based on multiple data sources, including network monitoring data, inventory data, performance data, and coverage data.

- Proactive network optimization: The agent will also recommend or autonomously implement corrective actions, including resource reallocation and configuration adjustments.
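To give a concrete sense of what monitoring a network parameter for anomalies and prioritizing degradations involves, here is a minimal statistical baseline: a rolling z-score detector that flags KPI samples far from their recent history and ranks them by severity. This is not how RAN Guardian works (Deutsche Telekom describes an agent built on Gemini 2.0 in Vertex AI); it is closer to the kind of rule-based automation the operator says falls short. The KPI series, window size, threshold and function name are all hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple


def detect_anomalies(values: Sequence[float], window: int = 5,
                     threshold: float = 3.0) -> List[Tuple[int, float]]:
    """Flag samples more than `threshold` standard deviations away from the
    mean of the preceding `window` samples. Returns (index, z-score) pairs,
    most severe first, as a crude stand-in for prioritizing degradations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = fmean(history), pstdev(history)
        if sigma == 0:
            continue  # flat history: z-score undefined, skip this sample
        z = (values[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append((i, z))
    return sorted(flagged, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    # Hypothetical dropped-call rate (%) for one cell, one sample per interval.
    drop_rate = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 4.5, 1.0]
    for index, z in detect_anomalies(drop_rate):
        print(f"sample {index}: z = {z:.1f}")  # sample 6: z = 46.5
```

A fixed threshold like this cannot explain why a KPI moved or decide what to do about it, which is the gap an LLM-based agent is meant to fill.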

“By combining Deutsche Telekom’s deep telecom expertise with Google Cloud’s cutting-edge AI capabilities, we’re building the next generation of intelligent networks,” said Angelo Libertucci, Global Industry Lead, Telecommunications, Google Cloud. “This means fewer disruptions, faster speeds, and an overall enhanced mobile experience for Deutsche Telekom’s customers.”

“Traditional network management approaches are no longer sufficient to meet the demands of 5G and beyond. We are pioneering AI agents for networks, working with key partners like Google Cloud to unlock a new level of intelligence and automation in RAN operations as a step towards autonomous, self-healing networks,” said Abdu Mudesir, Group CTO, Deutsche Telekom.

Mr. Mudesir and Google Cloud’s Muninder Sambi will discuss the role of AI agents in the future of network operations at MWC next week.

References:

https://www.telecoms.com/ai/deutsche-telekom-and-google-cloud-team-up-on-ai-agent-for-ran-operations

Nvidia AI-RAN survey results; AI inferencing as a reinvention of edge computing?

The case for and against AI-RAN technology using Nvidia or AMD GPUs

AI RAN Alliance selects Alex Choi as Chairman

AI sparks huge increase in U.S. energy consumption and is straining the power grid; transmission/distribution as a major problem

Google’s Bosun subsea cable to link Darwin, Australia to Christmas Island in the Indian Ocean

“Vocus is thrilled to have the opportunity to deepen our strategic network partnership with Google, and to play a part in establishing critical digital infrastructure for our region. Australia Connect will bolster our nation’s strategic position as a vital gateway between Asia and the United States by connecting key nodes located in Australia’s East, West, and North to global digital markets,” said Jarrod Nink, Interim Chief Executive Officer, Vocus.

“The combination of the new Australia Connect subsea cables with Vocus’ existing terrestrial route between Darwin and Brisbane, will create a low latency, secure, and stable network architecture. It will also establish Australia’s largest and most diverse domestic inter-capital network, with unparalleled reach and protection across terrestrial and subsea paths.

“By partnering with Google, we are ensuring that Vocus customers have access to high-capacity, trusted and protected digital infrastructure linking Australia to the Asia Pacific and to the USA. The new subsea paths, combined with Vocus’ existing land-based infrastructure, will provide unprecedented levels of diversity, capacity and reliability for Google, our customers and partners,” Nink said.

“Australia Connect advances Google’s mission to make the world’s information universally accessible and useful. We’re excited to collaborate with Vocus to build out the reach, reliability, and resiliency of internet access in Australia and across the Indo-Pacific region,” said Brian Quigley, VP, Global Network Infrastructure, Google Cloud.

Perth, Darwin, and Brisbane are key beneficiaries of this investment and are now emerging as important nodes on the global internet, using the competitive and diverse subsea and terrestrial infrastructure established by the Vocus network. Vocus will be in a position to supply an initial 20 to 30 Tbps of capacity per fiber pair on the announced systems, depending on the length of the segment.

References:

Google’s Equiano subsea cable lands in Namibia en route to Cape Town, South Africa

Google’s Topaz subsea cable to link Canada and Japan

“SMART” undersea cable to connect New Caledonia and Vanuatu in the southwest Pacific Ocean

Telstra International partners with: Trans Pacific Networks to build Echo cable; Google and APTelecom for central Pacific Connect cables

HGC Global Communications, DE-CIX & Intelsat perspectives on damaged Red Sea internet cables

Orange Deploys Infinera’s GX Series to Power AMITIE Subsea Cable

NEC completes Patara-2 subsea cable system in Indonesia

SEACOM telecom services now on Equiano subsea cable surrounding Africa

Bharti Airtel and Meta extend 2Africa Pearls subsea cable system to India

China seeks to control Asian subsea cable systems; SJC2 delayed, Apricot and Echo avoid South China Sea

Intentional or Accident: Russian fiber optic cable cut (1 of 3) by Chinese container ship under Baltic Sea

Altice Portugal MEO signs landing party agreement for Medusa subsea cable in Lisbon

2Africa subsea cable system adds 4 new branches

Echo and Bifrost: Facebook’s new subsea cables between Asia-Pacific and North America

Equinix and Vodafone to Build Digital Subsea Cable Hub in Genoa, Italy

Canalys & Gartner: AI investments drive growth in cloud infrastructure spending

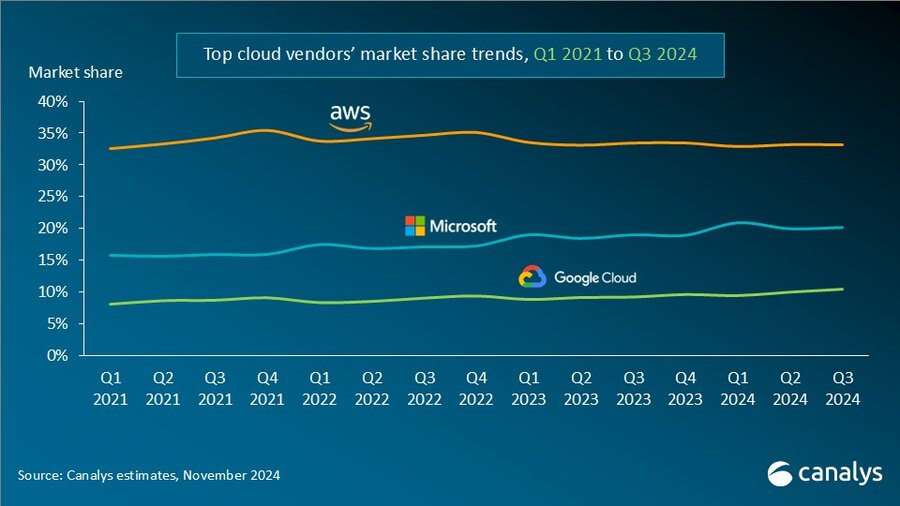

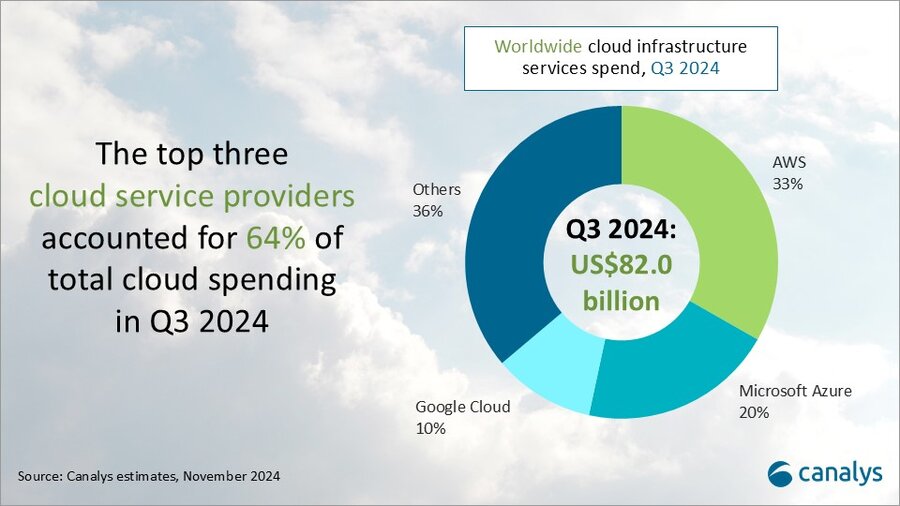

According to market research firm Canalys, global spending on cloud infrastructure services [1.] increased by 21% year on year, reaching US$82.0 billion in the 3rd quarter of 2024. Customer investment in the hyperscalers’ AI offerings fueled growth, prompting leading cloud vendors to escalate their investments in AI.

Note 1. Canalys defines cloud infrastructure services as services providing infrastructure (IaaS and bare metal) and platforms that are hosted by third-party providers and made available to users via the Internet.

The rankings of the top three cloud service providers – Amazon AWS, Microsoft Azure and Google Cloud – remained stable from the previous quarter, with these providers together accounting for 64% of total expenditure. Total combined spending with these three providers grew by 26% year on year, and all three reported sequential growth. Market leader AWS maintained a year-on-year growth rate of 19%, consistent with the previous quarter. That was outpaced by both Microsoft, with 33% growth, and Google Cloud, with 36% growth. In actual dollar terms, however, AWS outgrew both Microsoft and Google Cloud, increasing sales by almost US$4.4 billion on the previous year.
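The claim that AWS added the most revenue in dollar terms despite the slowest percentage growth follows from the size of its base. A rough check, assuming each provider's Q3 2024 revenue equals its Canalys market share (33%, 20% and 10%, from Canalys's per-provider breakdown) times the US$82.0 billion total, with year-ago revenue backed out from the stated growth rates:

```python
from typing import Dict, Tuple

# Canalys Q3 2024 figures as quoted. Shares are rounded, so results are approximate.
TOTAL_Q3_2024 = 82.0  # US$ billions
PROVIDERS: Dict[str, Tuple[float, float]] = {
    # name: (market share, year-on-year growth)
    "AWS": (0.33, 0.19),
    "Microsoft Azure": (0.20, 0.33),
    "Google Cloud": (0.10, 0.36),
}


def yoy_dollar_increase(share: float, growth: float,
                        total: float = TOTAL_Q3_2024) -> float:
    """Revenue now minus revenue a year ago, from share of `total` and YoY growth."""
    revenue_now = share * total
    revenue_year_ago = revenue_now / (1.0 + growth)
    return revenue_now - revenue_year_ago


def combined_growth(providers: Dict[str, Tuple[float, float]] = PROVIDERS,
                    total: float = TOTAL_Q3_2024) -> float:
    """Year-on-year growth of the providers' combined revenue."""
    now = sum(share * total for share, _ in providers.values())
    year_ago = sum(share * total / (1.0 + g) for share, g in providers.values())
    return now / year_ago - 1.0


if __name__ == "__main__":
    for name, (share, growth) in PROVIDERS.items():
        print(f"{name}: +US${yoy_dollar_increase(share, growth):.2f}B")
    # prints AWS +4.32, Microsoft Azure +4.07, Google Cloud +2.17 (US$ billions)
    print(f"Combined growth: {combined_growth():.1%}")  # 25.7%
```

With these rounded shares, AWS's 19% on the largest base still yields the biggest absolute gain, and the three providers combined grow about 25.7%, consistent with the reported 26%. The small gaps from Canalys's "almost US$4.4 billion" and its 64% combined share (the rounded shares sum to 63%) are what rounding would be expected to produce.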

In Q3 2024, the cloud services market saw strong, steady growth. All three cloud hyperscalers reported positive returns on their AI investments, which have begun to contribute to their overall cloud business performance. These returns reflect a growing reliance on AI as a key driver for innovation and competitive advantage in the cloud.

With the increasing adoption of AI technologies, demand for high-performance computing and storage continues to rise, putting pressure on cloud providers to expand their infrastructure. In response, leading cloud providers are prioritizing large-scale investments in next-generation AI infrastructure. To mitigate the risks associated with under-investment, such as being unprepared for future demand or missing key opportunities, they have adopted over-investment strategies, ensuring their ability to scale offerings in line with the growing needs of their AI customers. Enterprises are convinced that AI will deliver an unprecedented boost in efficiency and productivity, so they are pouring money into hyperscalers’ AI solutions. Accordingly, cloud service providers’ capital spending (CAPEX) will sustain its rapid growth trajectory and is expected to continue on this path into 2025.

“Continued substantial expenditure will present new challenges, requiring cloud vendors to carefully balance their investments in AI with the cost discipline needed to fund these initiatives,” said Rachel Brindley, Senior Director at Canalys. “While companies should invest sufficiently in AI to capitalize on technological growth, they must also exercise caution to avoid overspending or inefficient resource allocation. Ensuring the sustainability of these investments over time will be vital to maintaining long-term financial health and competitive advantage.”

“On the other hand, the three leading cloud providers are also expediting the update and iteration of their AI foundational models, continuously expanding their associated product portfolios,” said Yi Zhang, Analyst at Canalys. “As these AI foundational models mature, cloud providers are focused on leveraging their enhanced capabilities to empower a broader range of core products and services. By integrating these advanced models into their existing offerings, they aim to enhance functionality, improve performance and increase user engagement across their platforms, thereby unlocking new revenue streams.”

Amazon Web Services (AWS) maintained its lead in the global cloud market in Q3 2024, capturing a 33% market share and achieving 19% year-on-year revenue growth. It continued to enhance and broaden its AI offerings by launching new models through Amazon Bedrock and SageMaker, including Anthropic’s upgraded Claude 3.5 Sonnet and Meta’s Llama 3.2. It reported a triple-digit year-on-year increase in AI-related revenue, outpacing its overall growth by more than three times. Over the past 18 months, AWS has introduced nearly twice as many machine learning and generative AI features as the combined offerings of the other leading cloud providers. In terms of capital expenditure, AWS announced plans to further increase investment, with projected spending of approximately US$75 billion in 2024. This investment will primarily be allocated to expanding technology infrastructure to meet the rising demand for AI services, underscoring AWS’ commitment to staying at the forefront of technological innovation and service capability.

Microsoft Azure remains the second-largest cloud provider, with a 20% market share and impressive annual growth of 33%. This growth was partly driven by AI services, which contributed approximately 12 percentage points of that 33% increase. Over the past six months, use of Azure OpenAI has more than doubled, driven by increased adoption by both digital-native companies and established enterprises transitioning their applications from testing phases to full-scale production environments. To further enhance its offerings, Microsoft is expanding Azure AI by introducing industry-specific models, including advanced multimodal medical imaging models, aimed at providing tailored solutions for a broader customer base. Additionally, the company announced new cloud and AI infrastructure investments in Brazil, Italy, Mexico and Sweden to expand capacity in alignment with long-term demand forecasts.

Google Cloud, the third-largest provider, maintained a 10% market share, achieving robust year-on-year growth of 36%. It showed the strongest AI-driven revenue growth among the leading providers, with a clear acceleration compared with the previous quarter. As of September 2024, its revenue backlog increased to US$86.8 billion, up from US$78.8 billion in Q2, signaling continued momentum in the near term. Its enterprise AI platform, Vertex AI, has garnered substantial user adoption, with Gemini API calls increasing nearly 14-fold over the past six months. Google Cloud is actively seeking and developing new ways to apply AI tools across different scenarios and use cases. It introduced the GenAI Partner Companion, an AI-driven advisory tool designed to offer service partners personalized access to training resources, enhancing learning and supporting successful project execution. In Q3 2024, Google announced over US$7 billion in planned data center investments, with nearly US$6 billion allocated to projects within the United States.

Separate statistics from Gartner corroborate hyperscale CAPEX optimism. Gartner predicts that worldwide end-user spending on public cloud services is on course to reach $723.4 billion in 2025, up from a projected $595.7 billion in 2024. All segments of the cloud market – platform-as-a-service (PaaS), software-as-a-service (SaaS), desktop-as-a-service (DaaS), and infrastructure-as-a-service (IaaS) – are expected to achieve double-digit growth.

While SaaS will be the biggest single segment, accounting for $299.1 billion, IaaS will grow the fastest, jumping 24.8 percent to $211.9 billion.

Like Canalys, Gartner also singles out AI for special attention. “The use of AI technologies in IT and business operations is unabatedly accelerating the role of cloud computing in supporting business operations and outcomes,” said Sid Nag, vice president analyst at Gartner. “Cloud use cases continue to expand with increasing focus on distributed, hybrid, cloud-native, and multicloud environments supported by a cross-cloud framework, making the public cloud services market achieve a 21.5 percent growth in 2025.”
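The Gartner figures can be cross-checked with simple arithmetic. The sketch assumes all amounts are in US$ billions (the only unit consistent with the $723.4 billion total). It shows that the quoted totals imply roughly 21.4% growth, in line with the 21.5% Gartner cites (the small gap presumably reflects rounding in the published figures), backs out the implied 2024 IaaS base from the 24.8% growth rate, and computes SaaS's share of the 2025 total. Constant and function names are illustrative.

```python
# Gartner forecast figures as quoted, in US$ billions.
PUBLIC_CLOUD_2024 = 595.7
PUBLIC_CLOUD_2025 = 723.4
SAAS_2025 = 299.1
IAAS_2025 = 211.9
IAAS_GROWTH_2025 = 0.248


def pct_change(old: float, new: float) -> float:
    """Fractional change from `old` to `new` (0.1 means +10%)."""
    return new / old - 1.0


def implied_prior(value: float, growth: float) -> float:
    """Back out last year's value from this year's value and its growth rate."""
    return value / (1.0 + growth)


if __name__ == "__main__":
    print(f"Total growth 2025: {pct_change(PUBLIC_CLOUD_2024, PUBLIC_CLOUD_2025):.1%}")  # 21.4%
    print(f"Implied 2024 IaaS: US${implied_prior(IAAS_2025, IAAS_GROWTH_2025):.1f}B")  # US$169.8B
    print(f"SaaS share of 2025 total: {SAAS_2025 / PUBLIC_CLOUD_2025:.1%}")  # 41.3%
```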

……………………………………………………………………………………………………………………………………………………………………………………………………..

References:

https://canalys.com/newsroom/global-cloud-services-q3-2024

https://www.telecoms.com/public-cloud/ai-hype-fuels-21-percent-jump-in-q3-cloud-spending

Cloud Service Providers struggle with Generative AI; Users face vendor lock-in; “The hype is here, the revenue is not”

MTN Consulting: Top Telco Network Infrastructure (equipment) vendors + revenue growth changes favor cloud service providers

IDC: Public Cloud software at 2/3 of all enterprise applications revenue in 2026; SaaS is essential!

IDC: Cloud Infrastructure Spending +13.5% YoY in 4Q-2021 to $21.1 billion; Forecast CAGR of 12.6% from 2021-2026

IDC: Worldwide Public Cloud Services Revenues Grew 29% to $408.6 Billion in 2021 with Microsoft #1?

Synergy Research: Microsoft and Amazon (AWS) Dominate IT Vendor Revenue & Growth; Popularity of Multi-cloud in 2021

Google Cloud revenues up 54% YoY; Cloud native security is a top priority

AI Echo Chamber: “Upstream AI” companies huge spending fuels profit growth for “Downstream AI” firms

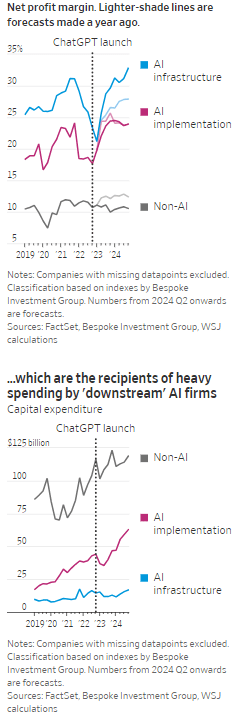

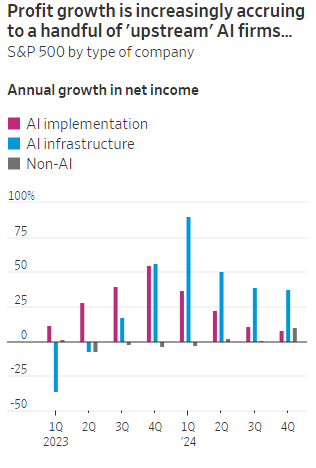

According to the Wall Street Journal, the AI industry has become an “Echo Chamber,” where huge capital spending by the AI infrastructure and application providers has fueled revenue and profit growth for everyone else. Market research firm Bespoke Investment Group has recently created baskets for “downstream” and “upstream” AI companies.

- The Downstream group covers “AI implementation” and consists of firms that sell AI development tools, such as the large language models (LLMs) popularized by OpenAI’s ChatGPT since the end of 2022, or run products that can incorporate them. This includes Google/Alphabet, Microsoft, Amazon and Meta Platforms (FB), along with IBM, Adobe and Salesforce.

- Higher up the supply chain (Upstream group), are the “AI infrastructure” providers, which sell AI chips, applications, data centers and training software. The undisputed leader is Nvidia, which has seen its sales triple in a year, but it also includes other semiconductor companies, database developer Oracle and owners of data centers Equinix and Digital Realty.

The Upstream group of companies has posted profit margins that are far above what analysts expected a year ago. In the second quarter, and pending Nvidia’s results on Aug. 28, Upstream AI members of the S&P 500 are set to have delivered a 50% annual increase in earnings. For the remainder of 2024, they will be increasingly responsible for the profit growth that Wall Street expects from the stock market, even accounting for Intel’s huge problems and restructuring.

It should be noted that the lines between the two groups can be blurry, particularly when it comes to giants such as Amazon, Microsoft and Alphabet, which provide both AI implementation (e.g., LLMs) and infrastructure. Their cloud-computing businesses turned these companies into the early winners of the AI craze last year and reported breakneck growth during this latest earnings season. A crucial point is that it is their role as ultimate developers of AI applications that has led them to make enormous capital expenditures, which are responsible for the profit surge in the rest of the ecosystem. So there is a definite spillover effect, where the big tech players’ AI-directed CAPEX is boosting revenue and profits for the companies further up the supply chain.

As the path for monetizing this technology gets longer and harder, the benefits seem to be increasingly accruing to companies higher up in the supply chain. Meta Platforms Chief Executive Mark Zuckerberg recently said the company’s coming Llama 4 language model will require 10 times as much computing power to train as its predecessor. Were it not for AI, revenues for semiconductor firms would probably have fallen during the second quarter, rather than rising 18%, according to S&P Global.

………………………………………………………………………………………………………………………………………………………..


A paper written by researchers at institutions including the universities of Cambridge and Oxford uncovered that the large language models (LLMs) behind some of today’s most exciting AI apps may have been trained on “synthetic data” or data generated by other AI. This revelation raises ethical and quality concerns. If an AI model is trained primarily or even partially on synthetic data, it might produce outputs lacking human-generated content’s richness and reliability. It could be a case of the blind leading the blind, with AI models reinforcing the limitations or biases inherent in the synthetic data they were trained on.

In this paper, the team coined the phrase “model collapse,” claiming that models trained this way will answer user prompts with low-quality outputs. The idea of “model collapse” suggests a sort of unraveling of the machine’s learning capabilities, where it fails to produce outputs with the informative or nuanced characteristics we expect. This poses a serious question for the future of AI development. If AI is increasingly trained on synthetic data, we risk creating echo chambers of misinformation or low-quality responses, leading to less helpful and potentially even misleading systems.
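The mechanism behind “model collapse” can be illustrated with a toy experiment often used to explain it: fit a simple model (here a one-dimensional Gaussian) to data, sample synthetic data from the fit, refit using only that synthetic data, and repeat. Because each fit is made from a finite sample, estimation errors compound and the fitted spread tends to shrink, so rare “tail” values stop being generated. This is a sketch of the idea only, not the paper's experimental setup; the sample size, number of generations, seed and function name are arbitrary choices.

```python
import random
from statistics import fmean, pstdev
from typing import List


def simulate_collapse(generations: int = 200, n_samples: int = 10,
                      seed: int = 0) -> List[float]:
    """Each generation fits a Gaussian (mean, std) to samples drawn from the
    previous generation's fitted Gaussian, never seeing the original data again.
    Returns the fitted standard deviation per generation, starting at 1.0."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the "real" data distribution
    history = [sigma]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        mu, sigma = fmean(samples), pstdev(samples)  # maximum-likelihood fit
        history.append(sigma)
    return history


if __name__ == "__main__":
    stds = simulate_collapse()
    for gen in (0, 10, 50, 100, 200):
        print(f"generation {gen:3d}: fitted std = {stds[gen]:.3g}")
```

With only ten samples per generation, the fitted spread typically decays toward zero within a couple of hundred generations; larger samples slow the decay but do not remove the downward drift.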

……………………………………………………………………………………………………………………………………………

In a recent working paper, Massachusetts Institute of Technology (MIT) economist Daron Acemoglu argued that AI’s knack for easy tasks has led to exaggerated predictions of its power to enhance productivity in hard jobs. Also, some of the new tasks created by AI may have negative social value (such as design of algorithms for online manipulation). Indeed, data from the Census Bureau show that only a small percentage of U.S. companies outside of the information and knowledge sectors are looking to make use of AI.

References:

https://deepgram.com/learn/the-ai-echo-chamber-model-collapse-synthetic-data-risks

https://economics.mit.edu/sites/default/files/2024-04/The%20Simple%20Macroeconomics%20of%20AI.pdf